It’s Just Adding One Word at a Time

That ChatGPT can automatically generate something that reads even superficially like human-written text is remarkable, and unexpected. But how does it do it? And why does it work? My purpose here is to give a rough outline of what’s going on inside ChatGPT—and then to explore why it is that it can do so well in producing what we might consider to be meaningful text. I should say at the outset that I’m going to focus on the big picture of what’s going on—and while I’ll mention some engineering details, I won’t get deeply into them. (And the essence of what I’ll say applies just as well to other current “large language models” [LLMs] as to ChatGPT.)

The first thing to explain is that what ChatGPT is always fundamentally trying to do is to produce a “reasonable continuation” of whatever text it’s got so far, where by “reasonable” we mean “what one might expect someone to write after seeing what people have written on billions of webpages, etc.”

So let’s say we’ve got the text “The best thing about AI is its ability to”. Imagine scanning billions of pages of human-written text (say on the web and in digitized books) and finding all instances of this text, then seeing which word comes next and what fraction of the time it does. ChatGPT effectively does something like this, except that (as I’ll explain) it doesn’t look at literal text; it looks for things that in a certain sense “match in meaning”. But the end result is that it produces a ranked list of words that might follow, together with “probabilities”:
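
To make the counting idea concrete, here is a minimal Python sketch of the literal-text version. It is not the Wolfram Language code the article refers to. It finds every occurrence of a context phrase in a corpus and ranks the words that follow by relative frequency. The tiny corpus is made up for illustration, and as noted above, ChatGPT does not literally match text this way.

```python
from collections import Counter

def next_word_distribution(corpus, context, top=5):
    """Rank the words that follow `context` in `corpus` by relative frequency."""
    words = corpus.lower().split()
    ctx = context.lower().split()
    n = len(ctx)
    followers = Counter(
        words[i + n]
        for i in range(len(words) - n)
        if words[i:i + n] == ctx
    )
    total = sum(followers.values())
    return [(word, count / total) for word, count in followers.most_common(top)]

# A made-up four-sentence corpus standing in for billions of webpages.
corpus = (
    "the best thing about ai is its ability to learn . "
    "the best thing about ai is its ability to predict . "
    "the best thing about ai is its ability to learn . "
    "the best thing about ai is its ability to make mistakes ."
)
print(next_word_distribution(corpus, "The best thing about AI is its ability to"))
# [('learn', 0.5), ('predict', 0.25), ('make', 0.25)]
```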

And the remarkable thing is that when ChatGPT does something like write an essay what it’s essentially doing is just asking over and over again “given the text so far, what should the next word be?”—and each time adding a word. (More precisely, as I’ll explain, it’s adding a “token”, which could be just a part of a word, which is why it can sometimes “make up new words”.)
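
The loop described above can be sketched in a few lines of Python. `TOY_MODEL` and `next_word_probs` are hypothetical stand-ins for the network. A real LLM conditions on the whole text so far and works with tokens rather than whole words. This version always takes the top-ranked word.

```python
# Hypothetical toy "model": next-word probabilities keyed only on the last word.
# A real LLM conditions on all the text so far and works with tokens.
TOY_MODEL = {
    "the": {"cat": 0.6, "dog": 0.4},
    "cat": {"sat": 0.7, "ran": 0.3},
    "sat": {"down": 0.9, "up": 0.1},
    "down": {".": 1.0},
}

def next_word_probs(text):
    """Stand-in for the network: return {word: probability} for the next word."""
    return TOY_MODEL.get(text.split()[-1], {".": 1.0})

def generate(prompt, steps):
    text = prompt
    for _ in range(steps):
        probs = next_word_probs(text)        # given the text so far...
        word = max(probs, key=probs.get)     # ...take the top-ranked next word...
        text = text + " " + word             # ...and append it
    return text

print(generate("the", 4))   # the cat sat down .
```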

But, OK, at each step it gets a list of words with probabilities. But which one should it actually pick to add to the essay (or whatever) that it’s writing? One might think it should be the “highest-ranked” word (i.e. the one to which the highest “probability” was assigned). But this is where a bit of voodoo begins to creep in. Because for some reason—that maybe one day we’ll have a scientific-style understanding of—if we always pick the highest-ranked word, we’ll typically get a very “flat” essay, that never seems to “show any creativity” (and even sometimes repeats word for word). But if sometimes (at random) we pick lower-ranked words, we get a “more interesting” essay.

The fact that there’s randomness here means that if we use the same prompt multiple times, we’re likely to get different essays each time. And, in keeping with the idea of voodoo, there’s a particular so-called “temperature” parameter that determines how often lower-ranked words will be used, and for essay generation, it turns out that a “temperature” of 0.8 seems best. (It’s worth emphasizing that there’s no “theory” being used here; it’s just a matter of what’s been found to work in practice. And, for example, the concept of “temperature” is there because exponential distributions familiar from statistical physics happen to be used, but there’s no “physical” connection, at least so far as we know.)
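
Here is one common way a temperature parameter is applied, sketched in Python. It assumes the model hands us a dictionary of word probabilities. Each probability is raised to the power $1/T$ and the results are renormalized. Since $p^{1/T} = \exp(\log p / T)$, this is the Boltzmann-style exponential form mentioned above. The article does not spell out ChatGPT's exact procedure, so treat this as an illustration rather than its implementation.

```python
import random

def reweight(probs, temperature):
    """Rescale {word: p} to p**(1/T), then renormalize so the values sum to 1.

    p**(1/T) == exp(log(p) / T): a Boltzmann-style exponential form,
    which is where the name "temperature" comes from.
    """
    weights = {w: p ** (1 / temperature) for w, p in probs.items()}
    total = sum(weights.values())
    return {w: v / total for w, v in weights.items()}

def pick_word(probs, temperature, rng):
    """Zero temperature means always taking the top-ranked word."""
    if temperature == 0:
        return max(probs, key=probs.get)
    adjusted = reweight(probs, temperature)
    words = list(adjusted)
    return rng.choices(words, weights=[adjusted[w] for w in words], k=1)[0]

probs = {"learn": 0.5, "predict": 0.25, "make": 0.25}
rng = random.Random(0)
print([pick_word(probs, 0.8, rng) for _ in range(10)])  # varies with the seed
print(pick_word(probs, 0, rng))                         # always "learn"
```

With $T < 1$ the top word gains probability; with $T > 1$ the distribution flattens and lower-ranked words get picked more often.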

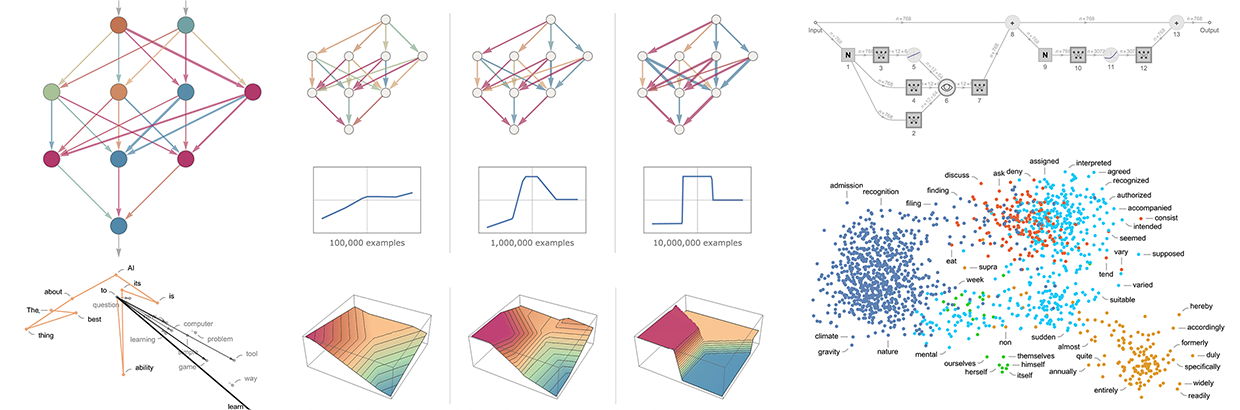

Before we go on I should explain that for purposes of exposition I’m mostly not going to use the full system that’s in ChatGPT; instead I’ll usually work with a simpler GPT-2 system, which has the nice feature that it’s small enough to be able to run on a standard desktop computer. And so for essentially everything I show I’ll be able to include explicit Wolfram Language code that you can immediately run on your computer.

For example, here’s how to get the table of probabilities above. First, we have to retrieve the underlying “language model” neural net:

Later on, we’ll look inside this neural net, and talk about how it works. But for now we can just apply this “net model” as a black box to our text so far, and ask for the top 5 words by probability that the model says should follow:

This takes that result and makes it into an explicit formatted “dataset”:

Here’s what happens if one repeatedly “applies the model”—at each step adding the word that has the top probability (specified in this code as the “decision” from the model):

What happens if one goes on longer? In this (“zero temperature”) case what comes out soon gets rather confused and repetitive:

But what if instead of always picking the “top” word one sometimes randomly picks “non-top” words (with the “randomness” corresponding to “temperature” 0.8)? Again one can build up text:

And every time one does this, different random choices will be made, and the text will be different—as in these 5 examples:

It’s worth pointing out that even at the first step there are a lot of possible “next words” to choose from (at temperature 0.8), though their probabilities fall off quite quickly (and, yes, the straight line on this log-log plot corresponds to an $n^{-1}$ “power-law” decay that’s very characteristic of the general statistics of language):
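
A straight line on a log-log plot means probability falls off as a power of rank. This Python sketch fits the least-squares slope of log(probability) against log(rank). Applied to idealized $n^{-1}$ data, which is synthetic and not measured from GPT-2, the slope comes out as -1.

```python
import math

def loglog_slope(probs):
    """Least-squares slope of log(probability) vs log(rank); probs sorted high to low."""
    xs = [math.log(rank) for rank in range(1, len(probs) + 1)]
    ys = [math.log(p) for p in probs]
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    return (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
            / sum((x - mx) ** 2 for x in xs))

# Idealized n^-1 ("Zipf-like") probabilities over 1000 ranks, normalized to sum to 1.
ranks = range(1, 1001)
norm = sum(1 / r for r in ranks)
zipf = [(1 / r) / norm for r in ranks]
print(round(loglog_slope(zipf), 6))   # -1.0
```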

So what happens if one goes on longer? Here’s a random example. It’s better than the top-word (zero temperature) case, but still at best a bit weird:

This was done with the simplest GPT-2 model (from 2019). With the newer and bigger GPT-3 models the results are better. Here’s the top-word (zero temperature) text produced with the same “prompt”, but with the biggest GPT-3 model:

And here’s a random example at “temperature 0.8”:

Where Do the Probabilities Come From?

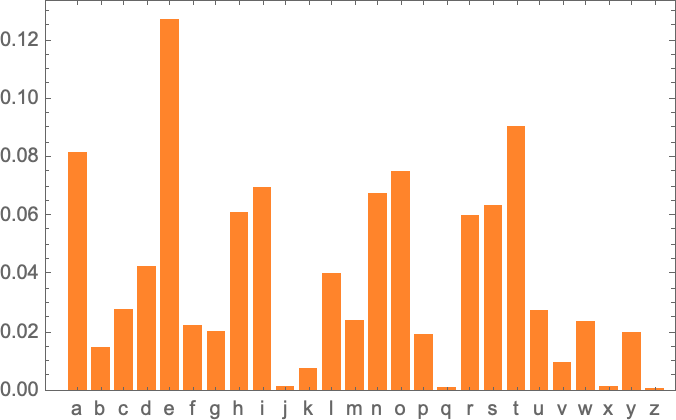

OK, so ChatGPT always picks its next word based on probabilities. But where do those probabilities come from? Let’s start with a simpler problem. Let’s consider generating English text one letter (rather than word) at a time. How can we work out what the probability for each letter should be?

A very minimal thing we could do is just take a sample of English text, and calculate how often different letters occur in it. So, for example, this counts letters in the Wikipedia article on “cats”:

And this does the same thing for “dogs”:

The results are similar, but not the same (“o” is no doubt more common in the “dogs” article because, after all, it occurs in the word “dog” itself). Still, if we take a large enough sample of English text we can expect to eventually get at least fairly consistent results:
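
Counting letters like this takes only a few lines. The following is a minimal Python sketch. It uses a made-up stand-in sentence where the article uses Wikipedia articles, so its frequencies are only illustrative.

```python
from collections import Counter
import string

def letter_frequencies(text):
    """Fraction of all a-z letters in `text` accounted for by each letter."""
    letters = [c for c in text.lower() if c in string.ascii_lowercase]
    counts = Counter(letters)
    return {c: counts[c] / len(letters) for c in sorted(counts)}

sample = "Cats are small carnivorous mammals. Dogs are domesticated descendants of wolves."
freqs = letter_frequencies(sample)
print(sorted(freqs.items(), key=lambda kv: kv[1], reverse=True)[:5])
```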

Here’s a sample of what we get if we just generate a sequence of letters with these probabilities:

We can break this into “words” by adding in spaces as if they were letters with a certain probability:
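
This Python sketch of the generator treats the space as just another symbol, as described above. The frequencies are estimated from a short made-up sample sentence rather than a large English corpus. Expect the output to be even less word-like than the article's.

```python
import random
from collections import Counter

ALPHABET = "abcdefghijklmnopqrstuvwxyz "   # 26 letters plus the space character

def char_frequencies(text):
    """Relative frequency of each letter, and of the space, in `text`."""
    chars = [c for c in text.lower() if c in ALPHABET]
    counts = Counter(chars)
    return {c: counts[c] / len(chars) for c in counts}

def random_text(freqs, length, rng):
    """Pick each character independently, space included, with the given probabilities."""
    symbols = list(freqs)
    return "".join(rng.choices(symbols, weights=[freqs[s] for s in symbols], k=length))

sample = "the cat sat on the mat and the dog lay by the door"
print(random_text(char_frequencies(sample), 60, random.Random(42)))
```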

We can do a slightly better job of making “words” by forcing the distribution of “word lengths” to agree with what it is in English:

We didn’t happen to get any “actual words” here, but the results are looking slightly better. To go further, though, we need to do more than just pick each letter separately at random. And, for example, we know that if we have a “q”, the next letter basically has to be “u”.

Here’s a plot of the probabilities for letters on their own:

And here’s a plot that shows the probabilities of pairs of letters (“2-grams”) in typical English text. The possible first letters are shown across the page, the second letters down the page:

And we see here, for example, that the “q” column is blank (zero probability) except on the “u” row. OK, so now instead of generating our “words” a single letter at a time, let’s generate them looking at two letters at a time, using these “2-gram” probabilities. Here’s a sample of the result—which happens to include a few “actual words”:
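
A Python sketch of the 2-gram idea works in two steps. First it tabulates which letter follows which. Then it generates each new letter using only the counts for the letter just before it. Spaces and punctuation are dropped for simplicity, so pairs can span word boundaries. In the small sample used in the checks, “q” is only ever followed by “u”, mirroring the blank “q” column in the plot.

```python
import random
from collections import Counter, defaultdict

LETTERS = "abcdefghijklmnopqrstuvwxyz"

def letter_bigrams(text):
    """table[a][b] = how often letter b directly follows letter a (non-letters dropped)."""
    letters = [c for c in text.lower() if c in LETTERS]
    table = defaultdict(Counter)
    for a, b in zip(letters, letters[1:]):
        table[a][b] += 1
    return table

def generate_word(table, first, length, rng):
    """Grow a 'word' letter by letter, each choice weighted by the 2-gram counts."""
    word = first
    while len(word) < length:
        followers = table.get(word[-1])
        if not followers:          # no data for this letter: stop early
            break
        word += rng.choices(list(followers), weights=list(followers.values()), k=1)[0]
    return word

table = letter_bigrams("the quick brown fox jumps over the lazy dog while the quiet queen quotes")
rng = random.Random(7)
print([generate_word(table, rng.choice(LETTERS), 5, rng) for _ in range(6)])
```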

With sufficiently much English text we can get pretty good estimates not just for probabilities of single letters or pairs of letters (2-grams), but also for longer runs of letters. And if we generate “random words” with progressively longer n-gram probabilities, we see that they get progressively “more realistic”:

But let’s now assume—more or less as ChatGPT does—that we’re dealing with whole words, not letters. There are about 40,000 reasonably commonly used words in English. And by looking at a large corpus of English text (say a few million books, with altogether a few hundred billion words), we can get an estimate of how common each word is. And using this we can start generating “sentences”, in which each word is independently picked at random, with the same probability that it appears in the corpus. Here’s a sample of what we get:

Not surprisingly, this is nonsense. So how can we do better? Just like with letters, we can start taking into account not just probabilities for single words but probabilities for pairs or longer n-grams of words. Doing this for pairs, here are 5 examples of what we get, in all cases starting from the word “cat”:
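
The same pair-counting approach works at the word level. This Python sketch builds a word 2-gram table from a small made-up corpus, standing in for millions of books. It then generates a sequence starting from “cat”, where each next word depends only on the word before it.

```python
import random
from collections import Counter, defaultdict

def word_bigrams(corpus):
    """table[a][b] = how often word b directly follows word a."""
    words = corpus.lower().split()
    table = defaultdict(Counter)
    for a, b in zip(words, words[1:]):
        table[a][b] += 1
    return table

def generate_sentence(table, start, n_words, rng):
    """Each new word is drawn using only the counts for the word before it."""
    out = [start]
    while len(out) < n_words and table.get(out[-1]):
        followers = table[out[-1]]
        out.append(rng.choices(list(followers), weights=list(followers.values()), k=1)[0])
    return " ".join(out)

corpus = "the cat sat on the mat . the cat chased the dog . the dog sat on the cat ."
table = word_bigrams(corpus)
sentence = generate_sentence(table, "cat", 8, random.Random(0))
print(sentence)
```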

It’s getting slightly more “sensible looking”. And we might imagine that if we were able to use sufficiently long n-grams we’d basically “get a ChatGPT”—in the sense that we’d get something that would generate essay-length sequences of words with the “correct overall essay probabilities”. But here’s the problem: there just isn’t even close to enough English text that’s ever been written to be able to deduce those probabilities.

In a crawl of the web there might be a few hundred billion words; in books that have been digitized there might be another hundred billion words. But with 40,000 common words, even the number of possible 2-grams is already 1.6 billion, and the number of possible 3-grams is about 64 trillion. So there’s no way we can estimate the probabilities even for all of these from text that’s out there. And by the time we get to “essay fragments” of 20 words, the number of possibilities is larger than the number of particles in the universe, so in a sense they could never all be written down.
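
The combinatorics are easy to check directly with a short Python sketch. The figure of roughly $10^{80}$ particles in the observable universe is the commonly quoted rough estimate, not a number taken from the article.

```python
vocab = 40_000                           # "about 40,000 reasonably commonly used words"
two_grams = vocab ** 2
three_grams = vocab ** 3
twenty_word_sequences = vocab ** 20

print(f"{two_grams:.2e}")                # 1.60e+09
print(f"{three_grams:.2e}")              # 6.40e+13
print(f"{twenty_word_sequences:.2e}")    # 1.10e+92
print(twenty_word_sequences > 10 ** 80)  # True: more than the ~1e80 particle estimate
```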

So what can we do? The big idea is to make a model that lets us estimate the probabilities with which sequences should occur—even though we’ve never explicitly seen those sequences in the corpus of text we’ve looked at. And at the core of ChatGPT is precisely a so-called “large language model” (LLM) that’s been built to do a good job of estimating those probabilities.

What Is a Model?

Say you want to know (as Galileo did back in the late 1500s) how long it’s going to take a cannon ball dropped from each floor of the Tower of Pisa to hit the ground. Well, you could just measure it in each case and make a table of the results. Or you could do what is the essence of theoretical science: make a model that gives some kind of procedure for computing the answer rather than just measuring and remembering each case.

Let’s imagine we have (somewhat idealized) data for how long the cannon ball takes to fall from various floors:

How do we figure out how long it’s going to take to fall from a floor we don’t explicitly have data about? In this particular case, we can use known laws of physics to work it out. But say all we’ve got is the data, and we don’t know what underlying laws govern it. Then we might make a mathematical guess, like that perhaps we should use a straight line as a model:

We could pick different straight lines. But this is the one that’s on average closest to the data we’re given. And from this straight line we can estimate the time to fall for any floor.
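
“On average closest” here means least squares: choose the intercept $a$ and slope $b$ that minimize the sum of squared differences between the line and the data. The Python sketch below uses synthetic fall times generated from $t = \sqrt{2h/g}$ with an assumed 4 m per floor. Both are assumptions standing in for the article's idealized data.

```python
import math

G = 9.81             # m/s^2
FLOOR_HEIGHT = 4.0   # metres per floor: an assumption for this sketch

floors = list(range(1, 9))
times = [math.sqrt(2 * FLOOR_HEIGHT * f / G) for f in floors]   # synthetic "measurements"

def fit_line(xs, ys):
    """Least-squares intercept a and slope b for y ~ a + b x."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    b = (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
         / sum((x - mx) ** 2 for x in xs))
    return my - b * mx, b

a, b = fit_line(floors, times)
print(f"t ~ {a:.3f} + {b:.3f} * floor")
print("estimate for floor 4.5:", a + b * 4.5)   # a "floor" we have no data for
```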

How did we know to try using a straight line here? At some level we didn’t. It’s just something that’s mathematically simple, and we’re used to the fact that lots of data we measure turns out to be well fit by mathematically simple things. We could try something mathematically more complicated, say $a + bx + cx^2$, and then in this case we do better:

Things can go quite wrong, though. Like here’s the best we can do with $a + b/x + c\sin(x)$:
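
All three model structures above can be fit the same way. You pick a set of basis functions, then solve a linear least-squares problem for the “knobs” multiplying them. This Python sketch solves the normal equations directly and reports the RMS error for each structure. It uses the same kind of synthetic data as before, again assuming $g = 9.81$ m/s² and 4 m per floor, so the numbers need not match the article's plots. Adding the $x^2$ term can only lower the error, since the straight line is a special case of the quadratic.

```python
import math

G, FLOOR_HEIGHT = 9.81, 4.0          # assumed values for the synthetic data
xs = [float(f) for f in range(1, 9)]
ts = [math.sqrt(2 * FLOOR_HEIGHT * x / G) for x in xs]

def solve(A, b):
    """Solve the square system A c = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for k in range(col, n + 1):
                M[r][k] -= f * M[col][k]
    c = [0.0] * n
    for i in reversed(range(n)):
        c[i] = (M[i][n] - sum(M[i][k] * c[k] for k in range(i + 1, n))) / M[i][i]
    return c

def fit(basis):
    """Choose knobs c_k minimizing sum (t - sum_k c_k f_k(x))^2; return knobs and RMS error."""
    rows = [[f(x) for f in basis] for x in xs]
    m = len(basis)
    AtA = [[sum(r[i] * r[j] for r in rows) for j in range(m)] for i in range(m)]
    Atb = [sum(r[i] * t for r, t in zip(rows, ts)) for i in range(m)]
    c = solve(AtA, Atb)
    preds = [sum(ci * ri for ci, ri in zip(c, r)) for r in rows]
    rms = math.sqrt(sum((p - t) ** 2 for p, t in zip(preds, ts)) / len(ts))
    return c, rms

models = {
    "a + b x": [lambda x: 1.0, lambda x: x],
    "a + b x + c x^2": [lambda x: 1.0, lambda x: x, lambda x: x * x],
    "a + b/x + c sin(x)": [lambda x: 1.0, lambda x: 1 / x, math.sin],
}
for name, basis in models.items():
    c, rms = fit(basis)
    print(f"{name:20s} {len(c)} knobs, rms error {rms:.4f}")
```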

It is worth understanding that there’s never a “model-less model”. Any model you use has some particular underlying structure—then a certain set of “knobs you can turn” (i.e. parameters you can set) to fit your data. And in the case of ChatGPT, lots of such “knobs” are used—actually, 175 billion of them.

But the remarkable thing is that the underlying structure of ChatGPT—with “just” that many parameters—is sufficient to make a model that computes next-word probabilities “well enough” to give us reasonable essay-length pieces of text.

Models for Human-Like Tasks

The example we gave above involves making a model for numerical data that essentially comes from simple physics—where we’ve known for several centuries that “simple mathematics applies”. But for ChatGPT we have to make a model of human-language text of the kind produced by a human brain. And for something like that we don’t (at least yet) have anything like “simple mathematics”. So what might a model of it be like?

Before we talk about language, let’s talk about another human-like task: recognizing images. And as a simple example of this, let’s consider images of digits (and, yes, this is a classic machine learning example):

One thing we could do is get a bunch of sample images for each digit:

Then to find out if an image we’re given as input corresponds to a particular digit we could just do an explicit pixel-by-pixel comparison with the samples we have. But as humans we certainly seem to do something better—because we can still recognize digits, even when they’re for example handwritten, and have all sorts of modifications and distortions:
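
The naive pixel-by-pixel approach amounts to nearest-template matching, sketched here in Python. The 3×3 “images” and their templates are made up for illustration. A slightly smudged “1” still matches the “1” template. The same stroke shifted one column to the right, however, ends up closer to the “7”. That is exactly the kind of brittleness that human recognition doesn't show.

```python
def pixel_distance(a, b):
    """Sum of squared gray-level differences, compared pixel by pixel."""
    return sum((p - q) ** 2
               for row_a, row_b in zip(a, b)
               for p, q in zip(row_a, row_b))

def classify_by_templates(image, templates):
    """Return the label of the template with the smallest pixel distance."""
    return min(templates, key=lambda label: pixel_distance(image, templates[label]))

TEMPLATES = {
    "1": [[0, 1, 0],
          [0, 1, 0],
          [0, 1, 0]],
    "7": [[1, 1, 1],
          [0, 0, 1],
          [0, 0, 1]],
}
slightly_off_one = [[0, 1, 0], [0, 1, 0], [0, 0.6, 0.2]]
shifted_one = [[0, 0, 1], [0, 0, 1], [0, 0, 1]]   # a "1" moved one column right

print(classify_by_templates(slightly_off_one, TEMPLATES))   # 1
print(classify_by_templates(shifted_one, TEMPLATES))        # 7
```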

When we made a model for our numerical data above, we were able to take a numerical value $x$ that we were given, and just compute $a + bx$ for particular $a$ and $b$. So if we treat the gray-level value of each pixel here as some variable $x_i$, is there some function of all those variables that, when evaluated, tells us what digit the image is of? It turns out that it’s possible to construct such a function. Not surprisingly, though, it’s not particularly simple. And a typical example might involve perhaps half a million mathematical operations.

But the end result is that if we feed the collection of pixel values for an image into this function, out will come the number specifying which digit we have an image of. Later, we’ll talk about how such a function can be constructed, and the idea of neural nets. But for now let’s treat the function as a black box, where we feed in images of, say, handwritten digits (as arrays of pixel values) and we get out the numbers these correspond to:

But what’s really going on here? Let’s say we progressively blur a digit. For a little while our function still “recognizes” it, here as a “2”. But soon it “loses it”, and starts giving the “wrong” result:

But why do we say it’s the “wrong” result? In this case, we know we got all the images by blurring a “2”. But if our goal is to produce a model of what humans can do in recognizing images, the real question to ask is what a human would have done if presented with one of those blurred images, without knowing where it came from.

And we have a “good model” if the results we get from our function typically agree with what a human would say. And the nontrivial scientific fact is that for an image-recognition task like this we now basically know how to construct functions that do this.

Can we “mathematically prove” that they work? Well, no. Because to do that we’d have to have a mathematical theory of what we humans are doing. Take the “2” image and change a few pixels. We might imagine that with only a few pixels “out of place” we should still consider the image a “2”. But how far should that go? It’s a question of human visual perception. And, yes, the answer would no doubt be different for bees or octopuses—and potentially utterly different for putative aliens.

Neural Nets

OK, so how do our typical models for tasks like image recognition actually work? The most popular—and successful—current approach uses neural nets. Invented—in a form remarkably close to their use today—in the 1940s, neural nets can be thought of as simple idealizations of how brains seem to work.

In human brains there are about 100 billion neurons (nerve cells), each capable of producing an electrical pulse up to perhaps a thousand times a second. The neurons are connected in a complicated net, with each neuron having tree-like branches allowing it to pass electrical signals to perhaps thousands of other neurons. And in a rough approximation, whether any given neuron produces an electrical pulse at a given moment depends on what pulses it’s received from other neurons—with different connections contributing with different “weights”.

When we “see an image” what’s happening is that when photons of light from the image fall on (“photoreceptor”) cells at the back of our eyes they produce electrical signals in nerve cells. These nerve cells are connected to other nerve cells, and eventually the signals go through a whole sequence of layers of neurons. And it’s in this process that we “recognize” the image, eventually “forming the thought” that we’re “seeing a 2” (and maybe in the end doing something like saying the word “two” out loud).

The “black-box” function from the previous section is a “mathematicized” version of such a neural net. It happens to have 11 layers (though only 4 “core layers”):

There’s nothing particularly “theoretically derived” about this neural net; it’s just something that—back in 1998—was constructed as a piece of engineering, and found to work. (Of course, that’s not much different from how we might describe our brains as having been produced through the process of biological evolution.)

OK, but how does a neural net like this “recognize things”? The key is the notion of attractors. Imagine we’ve got handwritten images of 1’s and 2’s:

We somehow want all the 1’s to “be attracted to one place”, and all the 2’s to “be attracted to another place”. Or, put a different way, if an image is somehow “closer to being a 1” than to being a 2, we want it to end up in the “1 place” and vice versa.

As a straightforward analogy, let’s say we have certain positions in the plane, indicated by dots (in a real-life setting they might be positions of coffee shops). Then we might imagine that starting from any point on the plane we’d always want to end up at the closest dot (i.e. we’d always go to the closest coffee shop). We can represent this by dividing the plane into regions (“attractor basins”) separated by idealized “watersheds”:

We can think of this as implementing a kind of “recognition task” in which we’re not doing something like identifying what digit a given image “looks most like”—but rather we’re just, quite directly, seeing what dot a given point is closest to. (The “Voronoi diagram” setup we’re showing here separates points in 2D Euclidean space; the digit recognition task can be thought of as doing something very similar—but in a 784-dimensional space formed from the gray levels of all the pixels in each image.)

So how do we make a neural net “do a recognition task”? Let’s consider this very simple case:

Our goal is to take an “input” corresponding to a position {x,y}—and then to “recognize” it as whichever of the three points it’s closest to. Or, in other words, we want the neural net to compute a function of {x,y} like:
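
Written out directly, the target function is simple; the challenge is getting a neural net to reproduce it. The Python sketch below uses three dot positions chosen arbitrarily, since the article's coordinates aren't given here.

```python
import math

# Three "dots" in the plane; the coordinates are arbitrary choices for illustration.
DOTS = {"A": (-1.0, 0.5), "B": (1.0, 0.5), "C": (0.0, -1.0)}

def nearest_dot(x, y, dots=DOTS):
    """Return the label of the dot closest (in Euclidean distance) to the point {x, y}."""
    return min(dots, key=lambda label: math.dist((x, y), dots[label]))

print(nearest_dot(0.9, 0.4))    # B
print(nearest_dot(0.0, -0.8))   # C
```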

So how do we do this with a neural net? Ultimately a neural net is a connected collection of idealized “neurons”—usually arranged in layers—with a simple example being:

Each “neuron” is effectively set up to evaluate a simple numerical function. And to “use” the network, we simply feed numbers (like our coordinates x and y) in at the top, then have neurons on each layer “evaluate their functions” and feed the results forward through the network—eventually producing the final result at the bottom:

In the traditional (biologically inspired) setup each neuron effectively has a certain set of “incoming connections” from the neurons on the previous layer, with each connection being assigned a certain “weight” (which can be a positive or negative number). The value of a given neuron is determined by multiplying the values of “previous neurons” by their corresponding weights, then adding these up and adding a constant, and finally applying a “thresholding” (or “activation”) function. In mathematical terms, if a neuron has inputs $x = \{x_0, x_1, \ldots\}$, then we compute f[w . x + b], where the weights w and constant b are generally chosen differently for each neuron in the network; the function f is usually the same.

Computing w . x + b is just a matter of matrix multiplication and addition. The “activation function” f introduces nonlinearity (and ultimately is what leads to nontrivial behavior). Various activation functions commonly get used; here we’ll just use Ramp (or ReLU):
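
A single neuron computing f[w . x + b] with the Ramp (ReLU) activation can be sketched in Python as follows. The weights and inputs are arbitrary illustrative values.

```python
def ramp(z):
    """Ramp / ReLU activation: max(0, z)."""
    return max(0.0, z)

def neuron(inputs, weights, bias, f=ramp):
    """One neuron: f[w . x + b]."""
    return f(sum(w * x for w, x in zip(weights, inputs)) + bias)

print(neuron([0.5, -1.0], [2.0, 1.0], 0.25))    # 2*0.5 + 1*(-1) + 0.25 = 0.25
print(neuron([0.5, -1.0], [-2.0, 1.0], 0.25))   # -1 - 1 + 0.25 = -1.75, clipped to 0.0
```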

For each task we want the neural net to perform (or, equivalently, for each overall function we want it to evaluate) we’ll have different choices of weights. (And—as we’ll discuss later—these weights are normally determined by “training” the neural net using machine learning from examples of the outputs we want.)

Ultimately, every neural net just corresponds to some overall mathematical function—though it may be messy to write out. For the example above, it would be:
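
Stacking such neurons into layers and feeding values forward gives the overall function. In this Python sketch the two layers of weights are hand-picked (not trained) so that the net computes `Ramp[Ramp[x] + Ramp[-x]]`, which equals $|x|$. It is a much smaller net than the one in the figure, chosen so the written-out formula stays readable.

```python
def ramp(z):
    return max(0.0, z)

def layer(inputs, weights, biases):
    """One layer: each row of `weights` (plus its bias) defines one neuron."""
    return [ramp(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

def network(inputs, layers):
    """Feed values forward layer by layer; the whole net is nested f[w . x + b]."""
    values = inputs
    for weights, biases in layers:
        values = layer(values, weights, biases)
    return values

# Hand-picked weights: layer 1 computes Ramp[x] and Ramp[-x]; layer 2 adds them.
ABS_NET = [
    ([[1.0], [-1.0]], [0.0, 0.0]),
    ([[1.0, 1.0]], [0.0]),
]
print(network([-3.0], ABS_NET))   # [3.0]
```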

The neural net of ChatGPT also just corresponds to a mathematical function like this—but effectively with billions of terms.

But let’s go back to individual neurons. Here are some examples of the functions a neuron with two inputs (representing coordinates x and y) can compute with various choices of weights and constants (and Ramp as activation function):

But what about the larger network from above? Well, here’s what it computes:

It’s not quite “right”, but it’s close to the “nearest point” function we showed above.

Let’s see what happens with some other neural nets. In each case, as we’ll explain later, we’re using machine learning to find the best choice of weights. Then we’re showing here what the neural net with those weights computes:

Bigger networks generally do better at approximating the function we’re aiming for. And in the “middle of each attractor basin” we typically get exactly the answer we want. But at the boundaries—where the neural net “has a hard time making up its mind”—things can be messier.

With this simple mathematical-style “recognition task” it’s clear what the “right answer” is. But in the problem of recognizing handwritten digits, it’s not so clear. What if someone wrote a “2” so badly it looked like a “7”, etc.? Still, we can ask how a neural net distinguishes digits—and this gives an indication:

Can we say “mathematically” how the network makes its distinctions? Not really. It’s just “doing what the neural net does”. But it turns out that that normally seems to agree fairly well with the distinctions we humans make.

Let’s take a more elaborate example. Let’s say we have images of cats and dogs. And we have a neural net that’s been trained to distinguish them. Here’s what it might do on some examples:

Now it’s even less clear what the “right answer” is. What about a dog dressed in a cat suit? Etc. Whatever input it’s given the neural net will generate an answer, and in a way reasonably consistent with how humans might. As I’ve said above, that’s not a fact we can “derive from first principles”. It’s just something that’s empirically been found to be true, at least in certain domains. But it’s a key reason why neural nets are useful: that they somehow capture a “human-like” way of doing things.

Show yourself a picture of a cat, and ask “Why is that a cat?”. Maybe you’d start saying “Well, I see its pointy ears, etc.” But it’s not very easy to explain how you recognized the image as a cat. It’s just that somehow your brain figured that out. But for a brain there’s no way (at least yet) to “go inside” and see how it figured it out. What about for an (artificial) neural net? Well, it’s straightforward to see what each “neuron” does when you show a picture of a cat. But even to get a basic visualization is usually very difficult.

In the final net that we used for the “nearest point” problem above there are 17 neurons. In the net for recognizing handwritten digits there are 2190. And in the net we’re using to recognize cats and dogs there are 60,650. Normally it would be pretty difficult to visualize what amounts to 60,650-dimensional space. But because this is a network set up to deal with images, many of its layers of neurons are organized into arrays, like the arrays of pixels it’s looking at.

And if we take a typical cat image

如果我们看一张典型的猫照片

then we can represent the states of neurons at the first layer by a collection of derived images—many of which we can readily interpret as being things like “the cat without its background”, or “the outline of the cat”:

然后,我们可以通过一系列派生图像来表示第一层神经元的状态——其中有许多图像我们可以轻松地识别为“没有背景的猫”或“猫的轮廓”:

By the 10th layer it’s harder to interpret what’s going on:

当到达第十层时,很难理解发生了什么:

But in general we might say that the neural net is “picking out certain features” (maybe pointy ears are among them), and using these to determine what the image is of. But are those features ones for which we have names—like “pointy ears”? Mostly not.

但总体来说,我们可以说神经网络在“识别某些特征”(比如可能包括尖耳朵),并利用这些特征来判断图像是什么。但这些特征是否有我们熟悉的名字,例如“尖耳朵”?大多没有。

Are our brains using similar features? Mostly we don’t know. But it’s notable that the first few layers of a neural net like the one we’re showing here seem to pick out aspects of images (like edges of objects) that seem to be similar to ones we know are picked out by the first level of visual processing in brains.

我们的大脑是否使用相似的特征?大多数情况下我们并不清楚。不过值得注意的是,像我们展示的这种神经网络的前几层,似乎会挑选出图像的一些方面(例如物体的边缘),这些方面似乎与我们知道的大脑视觉处理的第一级所挑选的相似。
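The observation that the first layers pick out things like edges can be made concrete with a single convolution, the basic operation of image-processing layers. This is a minimal sketch: the 5×6 image and the Sobel-style kernel are illustrative assumptions, whereas a trained net learns its own kernels from examples.

```python
# A tiny 5x6 grayscale "image": dark left half (0), bright right half (1).
IMAGE = [
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
]

# Hand-picked 3x3 kernel that responds to left-to-right brightness changes
# (a Sobel-style horizontal gradient). Purely illustrative, not learned.
KERNEL = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]


def convolve2d(image, kernel):
    """'Valid' (no padding) 2D cross-correlation, as used in conv layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        out.append(row)
    return out


edges = convolve2d(IMAGE, KERNEL)
for row in edges:
    print(row)
```

The response is nonzero only in the columns where the 3×3 window straddles the dark/bright boundary, which is what an "edge feature" amounts to here.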

But let’s say we want a “theory of cat recognition” in neural nets. We can say: “Look, this particular net does it”—and immediately that gives us some sense of “how hard a problem” it is (and, for example, how many neurons or layers might be needed). But at least as of now we don’t have a way to “give a narrative description” of what the network is doing. And maybe that’s because it truly is computationally irreducible, and there’s no general way to find what it does except by explicitly tracing each step. Or maybe it’s just that we haven’t “figured out the science”, and identified the “natural laws” that allow us to summarize what’s going on.

但假设我们想要一个关于神经网络如何识别猫的“理论”。我们可以说:“看,这个特定的网络做到了”,这能立刻让我们大概了解“这个问题有多难”(比如,可能需要多少神经元或层)。然而,至少目前我们还无法“叙述性地”描述这个网络在做什么。也许这是因为它确实是计算不可约的,除了逐步明确追踪每一步之外,没有通用的方法可以弄清它在做什么。或者,可能只是因为我们还没有“搞清楚其中的科学”,还没有找出那些能让我们总结正在发生之事的“自然法则”。

We’ll encounter the same kinds of issues when we talk about generating language with ChatGPT. And again it’s not clear whether there are ways to “summarize what it’s doing”. But the richness and detail of language (and our experience with it) may allow us to get further than with images.

在讨论用 ChatGPT 生成语言时,我们会遇到类似的问题。同样,也不确定是否有办法“总结它的工作原理”。不过,语言的丰富性和细节(以及我们对语言的体验)或许能让我们比在处理图像时走得更远。

Machine Learning, and the Training of Neural Nets

机器学习与神经网络训练

We’ve been talking so far about neural nets that “already know” how to do particular tasks. But what makes neural nets so useful (presumably also in brains) is that not only can they in principle do all sorts of tasks, but they can be incrementally “trained from examples” to do those tasks.

到目前为止,我们一直在讨论那些“已经知道”如何执行特定任务的神经网络。然而,神经网络之所以如此有用(在大脑中也可能如此),不仅因为它们原则上可以执行各种任务,还因为它们可以通过示例逐步“训练”来完成这些任务。

When we make a neural net to distinguish cats from dogs we don’t effectively have to write a program that (say) explicitly finds whiskers; instead we just show lots of examples of what’s a cat and what’s a dog, and then have the network “machine learn” from these how to distinguish them.

当我们创建一个神经网络来区分猫和狗时,我们不需要具体编写一个程序去识别猫须;相反,我们只需要展示大量猫和狗的例子,然后让网络通过 “机器学习” 来学会如何区分它们。

And the point is that the trained network “generalizes” from the particular examples it’s shown. Just as we’ve seen above, it isn’t simply that the network recognizes the particular pixel pattern of an example cat image it was shown; rather it’s that the neural net somehow manages to distinguish images on the basis of what we consider to be some kind of “general catness”.

重点在于训练好的网络能够从它被展示的特定例子中“举一反三”。正如我们上面所看到的,这不仅仅是网络识别它所展示的特定猫图像的像素模式,而是神经网络能够在某种“猫的总体特征”基础上区分图像。

So how does neural net training actually work? Essentially what we’re always trying to do is to find weights that make the neural net successfully reproduce the examples we’ve given. And then we’re relying on the neural net to “interpolate” (or “generalize”) “between” these examples in a “reasonable” way.

那么神经网络的训练是怎么运作的呢?基本上,我们一直在寻找能够让神经网络成功再现我们提供的示例的权重。然后,我们依赖神经网络以一种“合理”的方式在这些示例间进行“插值”或“泛化”。

Let’s look at a problem even simpler than the nearest-point one above. Let’s just try to get a neural net to learn the function:

我们来看一个比上面的最近点问题更简单的问题。我们来尝试让一个神经网络学习这个函数:

For this task, we’ll need a network that has just one input and one output, like:

完成这个任务,我们需要一个只有单一输入和输出的网络,例如:

But what weights, etc. should we be using? With every possible set of weights the neural net will compute some function. And, for example, here’s what it does with a few randomly chosen sets of weights:

但我们应该使用哪些权重呢?对于每一组可能的权重,神经网络都会计算出某些函数。例如,以下是它用几组随机选择的权重得到的结果:

And, yes, we can plainly see that in none of these cases does it get even close to reproducing the function we want. So how do we find weights that will reproduce the function?

而且,是的,我们可以清楚地看到,这些情况下没有一个能接近重现我们想要的函数。那么我们该如何找到能够重现该函数的权重呢?

The basic idea is to supply lots of “input → output” examples to “learn from”—and then to try to find weights that will reproduce these examples. Here’s the result of doing that with progressively more examples:

基本的想法是通过提供大量“输入→输出”示例来进行学习,然后尝试找到可以重现这些示例的权重。以下是使用越来越多的示例得出的结果:

At each stage in this “training” the weights in the network are progressively adjusted—and we see that eventually we get a network that successfully reproduces the function we want. So how do we adjust the weights? The basic idea is at each stage to see “how far away we are” from getting the function we want—and then to update the weights in such a way as to get closer.

在每一阶段的“训练”中,网络中的权重都会逐步调整——最终我们会得到一个成功重现目标函数的网络。那么,我们如何调整权重呢?基本思路是每个阶段评估一下“我们距离目标函数有多远”,然后以缩短这个距离的方式来更新权重。

To find out “how far away we are” we compute what’s usually called a “loss function” (or sometimes “cost function”). Here we’re using a simple (L2) loss function that’s just the sum of the squares of the differences between the values we get, and the true values. And what we see is that as our training process progresses, the loss function progressively decreases (following a certain “learning curve” that’s different for different tasks)—until we reach a point where the network (at least to a good approximation) successfully reproduces the function we want:

为了找出“我们离目标有多远”,我们会计算通常称为“损失函数”(有时称为“代价函数”)的东西。在这里,我们使用一个简单的 L2 损失函数,它只是将我们得到的值与真实值之间差的平方和起来。我们可以看到的是,随着训练过程的进展,损失函数逐渐减少(根据不同任务会有不同的“学习曲线”)—直到我们的网络(至少在某种程度上)能够成功地再现我们想要实现的函数:
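A minimal sketch of the L2 loss described here, assuming the network's outputs and the true values are given as equal-length lists of numbers (the values below are made up for illustration):

```python
def l2_loss(predicted, true):
    """Sum of squared differences between predicted and true values."""
    if len(predicted) != len(true):
        raise ValueError("predicted and true must have the same length")
    return sum((p - t) ** 2 for p, t in zip(predicted, true))


# Hypothetical network outputs versus target values at three inputs.
predicted = [0.5, 1.0, 2.5]
true = [0.0, 1.0, 2.0]
print(l2_loss(predicted, true))  # 0.25 + 0.0 + 0.25 = 0.5
```

Squaring makes every error count as positive and penalizes large misses much more than small ones; a loss of zero means the outputs match the targets exactly.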

Alright, so the last essential piece to explain is how the weights are adjusted to reduce the loss function. As we’ve said, the loss function gives us a “distance” between the values we’ve got, and the true values. But the “values we’ve got” are determined at each stage by the current version of the neural net—and by the weights in it. But now imagine that the weights are variables—say wᵢ. We want to find out how to adjust the values of these variables to minimize the loss that depends on them.

好的,所以最后一个要解释的关键部分是如何调整权重来减少损失函数。正如我们所说,损失函数给出了我们得到的值与真实值之间的“距离”。但实际上,“我们得到的值”在每个阶段都由当前版本的神经网络及其权重决定。现在,想象权重是变量,比如 wᵢ。我们希望找出如何调整这些变量的值,以最小化依赖于它们的损失。

For example, imagine (in an incredible simplification of typical neural nets used in practice) that we have just two weights w₁ and w₂. Then we might have a loss that as a function of w₁ and w₂ looks like this:

例如,想象一下(这非常简化了实际使用的典型神经网络)我们只有两个权重 w₁ 和 w₂。那么,我们可能会有一个损失函数,其作为 w₁ 和 w₂ 的函数如下:

Numerical analysis provides a variety of techniques for finding the minimum in cases like this. But a typical approach is just to progressively follow the path of steepest descent from whatever previous w₁, w₂ we had:

数值分析提供了多种技术来寻找这类情况下的最小值。然而,一种常见的方法是从我们之前的任意 w₁、w₂ 出发,沿着最陡下降的路径逐步前进:
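The steepest-descent idea can be sketched for a model with exactly two weights. As an assumption for illustration, the model is the straight line y = w₁·x + w₂ (not a real neural net), the data come from y = 2x + 1, and the gradient is written out by hand; the learning rate and step count are arbitrary choices.

```python
# Hypothetical training data sampled from y = 2x + 1.
XS = [-1.0, -0.5, 0.0, 0.5, 1.0]
YS = [2 * x + 1 for x in XS]


def loss(w1, w2):
    """L2 loss of the two-weight model y = w1*x + w2."""
    return sum((w1 * x + w2 - y) ** 2 for x, y in zip(XS, YS))


def gradient(w1, w2):
    """Partial derivatives of the loss with respect to w1 and w2."""
    g1 = sum(2 * (w1 * x + w2 - y) * x for x, y in zip(XS, YS))
    g2 = sum(2 * (w1 * x + w2 - y) for x, y in zip(XS, YS))
    return g1, g2


def steepest_descent(w1, w2, rate=0.05, steps=200):
    """Repeatedly step opposite the gradient, i.e. downhill on the loss surface."""
    history = [loss(w1, w2)]
    for _ in range(steps):
        g1, g2 = gradient(w1, w2)
        w1, w2 = w1 - rate * g1, w2 - rate * g2
        history.append(loss(w1, w2))
    return w1, w2, history


w1, w2, history = steepest_descent(0.0, 0.0)
print(round(w1, 3), round(w2, 3))
```

Each step moves (w₁, w₂) a little way opposite the gradient, so the loss shrinks; with these settings the weights settle near 2 and 1.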

Like water flowing down a mountain, all that’s guaranteed is that this procedure will end up at some local minimum of the surface (“a mountain lake”); it might well not reach the ultimate global minimum.

就像水流下山一样,唯一能保证的是这个过程最终会到达表面的某个局部最低点(“一个山湖”);它很可能到达不了最终的全局最低点。
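To see the "mountain lake" problem in the smallest possible setting, here is steepest descent on a made-up one-weight loss, f(w) = w⁴ − 3w² + w, which has two valleys. The starting points, learning rate and step count are illustrative assumptions.

```python
def f(w):
    """A one-weight 'loss landscape' with two valleys (illustrative)."""
    return w**4 - 3 * w**2 + w


def df(w):
    """Derivative of f."""
    return 4 * w**3 - 6 * w + 1


def descend(w, rate=0.01, steps=1000):
    """Plain steepest descent: always step downhill from where we are."""
    for _ in range(steps):
        w -= rate * df(w)
    return w


for start in (2.0, -2.0):
    end = descend(start)
    print(f"start {start:+.1f} -> w = {end:+.3f}, loss = {f(end):+.3f}")
```

Started at w = 2, the descent stops in the shallower valley near w ≈ 1.13; started at w = −2, it reaches the deeper, global minimum near w ≈ −1.30. Nothing in the procedure itself tells it that a better valley exists.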

It’s not obvious that it would be feasible to find the path of the steepest descent on the “weight landscape”. But calculus comes to the rescue. As we mentioned above, one can always think of a neural net as computing a mathematical function—that depends on its inputs, and its weights. But now consider differentiating with respect to these weights. It turns out that the chain rule of calculus in effect lets us “unravel” the operations done by successive layers in the neural net. And the result is that we can—at least in some local approximation—“invert” the operation of the neural net, and progressively find weights that minimize the loss associated with the output.

在“权重景观”上找到最陡下降路径是否可行,并不是显而易见的。但微积分可以解决这个问题。如前所述,我们总可以把神经网络看作在计算一个依赖于其输入和权重的数学函数。现在考虑对这些权重求导。事实证明,微积分的链式法则实际上可以让我们“拆解”神经网络中各层依次进行的操作。结果是,我们可以至少在某种局部近似下“反转”神经网络的操作,并逐步找到能使输出相关损失最小化的权重。
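The chain-rule step can be checked numerically on a toy two-layer net. This is a sketch under assumptions: a single input, one tanh hidden unit and one linear output unit, with arbitrary weight and target values. The hand-derived derivatives (backpropagation in miniature) are compared against finite differences.

```python
import math


def forward(a, b, x):
    """Two 'layers': hidden h = tanh(a*x), output y = b*h."""
    h = math.tanh(a * x)
    y = b * h
    return h, y


def loss_and_grads(a, b, x, target):
    """Loss (y - target)^2 and its gradient via the chain rule (backpropagation)."""
    h, y = forward(a, b, x)
    loss = (y - target) ** 2
    dloss_dy = 2 * (y - target)           # start at the output...
    dloss_db = dloss_dy * h               # ...since y = b*h
    dloss_dh = dloss_dy * b               # ...then pass back into the hidden layer
    dloss_da = dloss_dh * (1 - h**2) * x  # d tanh(u)/du = 1 - tanh(u)^2, with u = a*x
    return loss, dloss_da, dloss_db


def numeric_grad(a, b, x, target, eps=1e-6):
    """Central finite differences, used only to check the chain-rule result."""
    def L(a_, b_):
        return (forward(a_, b_, x)[1] - target) ** 2
    da = (L(a + eps, b) - L(a - eps, b)) / (2 * eps)
    db = (L(a, b + eps) - L(a, b - eps)) / (2 * eps)
    return da, db


loss, ga, gb = loss_and_grads(0.5, -1.2, 0.8, 0.3)
na, nb = numeric_grad(0.5, -1.2, 0.8, 0.3)
print(ga, na)
print(gb, nb)
```

The two pairs of numbers should agree closely. Backpropagation applies exactly this bookkeeping, layer by layer, to every weight in a large network.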

The picture above shows the kind of minimization we might need to do in the unrealistically simple case of just 2 weights. But it turns out that even with many more weights (ChatGPT uses 175 billion) it’s still possible to do the minimization, at least to some level of approximation. And in fact the big breakthrough in “deep learning” that occurred around 2011 was associated with the discovery that in some sense it can be easier to do (at least approximate) minimization when there are lots of weights involved than when there are fairly few.

上图显示了在只有 2 个权重的简单情况下,我们可能需要进行的优化。事实上,即使权重更多(ChatGPT 使用了 1750 亿个),我们仍然可以进行某种程度的近似优化。实际上,2011 年前后在“深度学习”领域的重大突破之一是发现,当涉及大量权重时,进行近似优化在某种意义上比涉及较少权重时更容易。

In other words—somewhat counterintuitively—it can be easier to solve more complicated problems with neural nets than simpler ones. And the rough reason for this seems to be that when one has a lot of “weight variables” one has a high-dimensional space with “lots of different directions” that can lead one to the minimum—whereas with fewer variables it’s easier to end up getting stuck in a local minimum (“mountain lake”) from which there’s no “direction to get out”.

换句话说,有点违反直觉的是,用神经网络解决较复杂的问题可能比解决较简单的问题更容易。原因大致是,当有很多“权重变量”时,就有一个高维空间,其中有“很多不同的方向”可以通往最低点;而变量较少时,更容易陷入某个局部最低点(“山中湖”),从中找不到“出去的方向”。

It’s worth pointing out that in typical cases there are many different collections of weights that will all give neural nets that have pretty much the same performance. And usually in practical neural net training there are lots of random choices made—that lead to “different-but-equivalent solutions”, like these:

值得注意的是,在一般情况下,有很多不同的权重组合可以产生表现几乎相同的神经网络。而且在实际的神经网络训练过程中,通常会做出许多随机选择,这会导致“不同但等效的解决方案”,如下所示:

But each such “different solution” will have at least slightly different behavior. And if we ask, say, for an “extrapolation” outside the region where we gave training examples, we can get dramatically different results:

但每个这样的“不同解决方案”至少会有些微不同的行为。如果我们要求在给定训练示例区域之外进行“外推”,我们可能会得到截然不同的结果:
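Here is a hand-built illustration (not the result of actual training) of two different networks that fit the same training data perfectly yet extrapolate differently. Both use ReLU units, an assumption made for simplicity; the training inputs lie in [−1, 1] and the target is |x|.

```python
def relu(z):
    return max(0.0, z)


def net_a(x):
    """Computes |x| everywhere: relu(x) + relu(-x)."""
    return relu(x) + relu(-x)


def net_b(x):
    """Same as net_a for x <= 1, but an extra unit flattens it for x > 1."""
    return relu(x) + relu(-x) - relu(x - 1)


train_xs = [i / 10 for i in range(-10, 11)]   # training region [-1, 1]
train_ys = [abs(x) for x in train_xs]

for net in (net_a, net_b):
    train_loss = sum((net(x) - y) ** 2 for x, y in zip(train_xs, train_ys))
    print(net.__name__, "train loss:", train_loss, "f(3) =", net(3.0))
```

Both networks have zero loss on the training data, but at x = 3 one predicts 3.0 and the other 1.0; nothing in the training data favors either.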

But which of these is “right”? There’s really no way to say. They’re all “consistent with the observed data”. But they all correspond to different “innate” ways to “think about” what to do “outside the box”. And some may seem “more reasonable” to us humans than others.

但是这些中哪个是“正确的”?其实无法确定。它们都“与观察数据一致”。但它们对应不同的“先天”思维方式来“跳出框架”考虑问题。对我们人类来说,有些可能显得“更合理”。

The Practice and Lore of Neural Net Training

神经网络训练的实践与经验

Particularly over the past decade, there’ve been many advances in the art of training neural nets. And, yes, it is basically an art. Sometimes—especially in retrospect—one can see at least a glimmer of a “scientific explanation” for something that’s being done. But mostly things have been discovered by trial and error, adding ideas and tricks that have progressively built a significant lore about how to work with neural nets.

特别是在过去十年里,神经网络训练技术取得了许多进展。是的,这确实是一种艺术。有时候,尤其是事后回顾,人们可能会发现某些做法在某种程度上有“科学”的解释。但大多数情况下,很多东西是通过不断试验和失败发现的,并加入了各种想法和技巧,逐渐形成了处理神经网络的丰富经验。

There are several key parts. First, there’s the matter of what architecture of neural net one should use for a particular task. Then there’s the critical issue of how one’s going to get the data on which to train the neural net. And increasingly one isn’t dealing with training a net from scratch: instead a new net can either directly incorporate another already-trained net, or at least can use that net to generate more training examples for itself.

有几个关键部分。首先,需要决定使用哪种神经网络架构来完成特定任务。其次是如何获取用于训练神经网络的数据这一重要问题。而且,越来越多的情况是,不再从头开始训练网络,而是一个新网络可以直接整合一个已经训练好的网络,或者至少可以使用那个网络为自己生成更多的训练样本。

One might have thought that for every particular kind of task one would need a different architecture of neural net. But what’s been found is that the same architecture often seems to work even for apparently quite different tasks. At some level this reminds one of the idea of universal computation (and my Principle of Computational Equivalence), but, as I’ll discuss later, I think it’s more a reflection of the fact that the tasks we’re typically trying to get neural nets to do are “human-like” ones—and neural nets can capture quite general “human-like processes”.

可能有人会认为,每一种特定类型的任务都需要不同的神经网络架构。然而,事实证明,相同的架构往往也能胜任明显不同的任务。某种程度上,这让人想起了通用计算的概念(以及我的计算等价性原理),但正如我稍后将讨论的那样,我认为这更反映了我们通常要求神经网络执行的是“类人”的任务,而神经网络能够捕捉到相当普遍的“类人过程”。

In earlier days of neural nets, there tended to be the idea that one should “make the neural net do as little as possible”. For example, in converting speech to text it was thought that one should first analyze the audio of the speech, break it into phonemes, etc. But what was found is that—at least for “human-like tasks”—it’s usually better just to try to train the neural net on the “end-to-end problem”, letting it “discover” the necessary intermediate features, encodings, etc. for itself.

在早期的神经网络研究中,人们倾向于认为应该“尽量减少神经网络的工作量”。例如,在将语音转换为文本时,认为应该先分析语音音频,把它分解成音素等。但后来发现,至少对于“类人任务”,通常最好还是在“端到端”问题上训练神经网络,让它自己“发现”所需的中间特征、编码等。

There was also the idea that one should introduce complicated individual components into the neural net, to let it in effect “explicitly implement particular algorithmic ideas”. But once again, this has mostly turned out not to be worthwhile; instead, it’s better just to deal with very simple components and let them “organize themselves” (albeit usually in ways we can’t understand) to achieve (presumably) the equivalent of those algorithmic ideas.

还有一种想法是,应该向神经网络中引入复杂的单独组件,让它实际上能“明确实现特定的算法思想”。然而,事实再次证明这大多不值得;相反,最好只使用非常简单的组件,让它们“自行组织”(尽管通常是以我们无法理解的方式),以实现(大概)与那些算法思想等价的效果。

That’s not to say that there are no “structuring ideas” that are relevant for neural nets. Thus, for example, having 2D arrays of neurons with local connections seems at least very useful in the early stages of processing images. And having patterns of connectivity that concentrate on “looking back in sequences” seems useful—as we’ll see later—in dealing with things like human language, for example in ChatGPT.

这并不是说神经网络没有相关的“结构化理念”。例如,在图像处理的早期阶段,具有局部连接的二维神经元阵列非常有用。而且,如我们稍后将看到的那样,专注于“回顾序列”的连接模式,对于处理诸如人类语言之类的内容,例如在 ChatGPT 中,似乎非常有用。

But an important feature of neural nets is that—like computers in general—they’re ultimately just dealing with data. And current neural nets—with current approaches to neural net training—specifically deal with arrays of numbers. But in the course of processing, those arrays can be completely rearranged and reshaped. And as an example, the network we used for identifying digits above starts with a 2D “image-like” array, quickly “thickening” to many channels, but then “concentrating down” into a 1D array that will ultimately contain elements representing the different possible output digits:

但神经网络的一个重要特性是,它们和计算机一样,最终只是处理数据。而现在的神经网络——用当前的训练方法——处理的是数字数组。但在处理过程中,这些数组可以完全被重新排列和重塑。比如,我们用来识别数字的网络,开始时是一个二维的“图像状”数组,很快变成包含多个通道的厚阵列,然后“集中”成一个一维数组,最终包含表示不同可能输出数字的元素:
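The reshaping described here can be traced in a toy pipeline. Everything below is a hypothetical stand-in, not the digit network from the article: an 8×8 input, four random 3×3 kernels, 2×2 max pooling and a final layer of 10 outputs, all with untrained random weights. Only the array shapes matter.

```python
import random

random.seed(0)


def conv_channels(image, kernels):
    """Apply each 3x3 kernel to a 2D image: one output 2D array per kernel."""
    h, w = len(image), len(image[0])
    out = []
    for k in kernels:
        out.append([
            [sum(image[i + a][j + b] * k[a][b] for a in range(3) for b in range(3))
             for j in range(w - 2)]
            for i in range(h - 2)
        ])
    return out


def max_pool(channel):
    """2x2 max pooling, halving each spatial dimension."""
    return [
        [max(channel[i][j], channel[i][j + 1], channel[i + 1][j], channel[i + 1][j + 1])
         for j in range(0, len(channel[0]) - 1, 2)]
        for i in range(0, len(channel) - 1, 2)
    ]


def flatten(channels):
    """Concatenate all channels into one 1D list."""
    return [v for ch in channels for row in ch for v in row]


def dense(vector, n_out):
    """Fully connected layer with random (untrained) weights drawn on each call."""
    return [sum(random.uniform(-1, 1) * v for v in vector) for _ in range(n_out)]


image = [[random.random() for _ in range(8)] for _ in range(8)]            # 8 x 8
kernels = [[[random.uniform(-1, 1) for _ in range(3)] for _ in range(3)]
           for _ in range(4)]

channels = conv_channels(image, kernels)   # 4 x 6 x 6  ("thickening")
pooled = [max_pool(ch) for ch in channels]  # 4 x 3 x 3
vector = flatten(pooled)                   # 36
scores = dense(vector, 10)                 # 10, one per possible digit

print(len(channels), len(channels[0]), len(channels[0][0]))
print(len(pooled), len(pooled[0]), len(pooled[0][0]))
print(len(vector), len(scores))
```

The data goes from a 2D grid, to a stack of several 2D grids, to a single 1D list whose final length matches the number of possible answers.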

But, OK, how can one tell how big a neural net one will need for a particular task? It’s something of an art. At some level the key thing is to know “how hard the task is”. But for human-like tasks that’s typically very hard to estimate. Yes, there may be a systematic way to do the task very “mechanically” by computer. But it’s hard to know if there are what one might think of as tricks or shortcuts that allow one to do the task at least at a “human-like level” vastly more easily. It might take enumerating a giant game tree to “mechanically” play a certain game; but there might be a much easier (“heuristic”) way to achieve “human-level play”.

但是,好的,一个人怎么知道需要多大一个神经网络来完成特定的任务呢?这有点像一门艺术。在某种程度上,关键是要知道“任务有多难”。但是对于类似人类的任务,这通常很难估计。是的,可能有一种用计算机非常“机械地”完成任务的系统方法。但是很难知道是否有一些可以认为是技巧或捷径的方法,让一个人以“类似人类的水平”更轻松地完成任务。可能需要枚举一个巨大的游戏树来“机械地”玩某个特定游戏,但可能存在一种更简单的(“启发式”)方法来实现“人类水平的游戏”。

When one’s dealing with tiny neural nets and simple tasks one can sometimes explicitly see that one “can’t get there from here”. For example, here’s the best one seems to be able to do on the task from the previous section with a few small neural nets:

当处理小型神经网络和简单任务时,有时你会明确地发现“从这里无法到达那里”。例如,以下是在上一节任务中,使用几个小型神经网络可达到的最优结果:

And what we see is that if the net is too small, it just can’t reproduce the function we want. But above some size, it has no problem—at least if one trains it for long enough, with enough examples. And, by the way, these pictures illustrate a piece of neural net lore: that one can often get away with a smaller network if there’s a “squeeze” in the middle that forces everything to go through a smaller intermediate number of neurons. (It’s also worth mentioning that “no-intermediate-layer”—or so-called “perceptron”—networks can only learn essentially linear functions—but as soon as there’s even one intermediate layer it’s always in principle possible to approximate any function arbitrarily well, at least if one has enough neurons, though to make it feasibly trainable one typically has some kind of regularization or normalization.)

我们发现,如果网络太小,它就无法重现我们想要的函数。但当网络超过一定规模时就没有问题了,至少在训练时间足够长、样本足够多的情况下是这样。顺便说一句,这些图片还说明了一条神经网络经验:如果中间有一个“挤压”环节,迫使所有信息都通过数量较少的中间神经元,通常就可以使用较小的网络。(还值得一提的是,“无中间层”网络(即所谓的“感知器”)基本上只能学习线性函数;但只要有哪怕一个中间层,原则上就总能以任意精度逼近任何函数,至少在神经元足够多时如此,不过为了让它在实际中可训练,通常还需要某种形式的正则化或归一化。)
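The parenthetical claim about networks without an intermediate layer can be illustrated with XOR, a standard textbook example that is not from this article. A model with no intermediate layer and no activation is just a·x1 + b·x2 + c, and the best such least-squares fit to XOR is the constant 0.5; one intermediate layer of two ReLU neurons, with weights chosen by hand here, reproduces XOR exactly.

```python
# The four XOR examples: inputs (x1, x2) and target outputs.
POINTS = [(0, 0), (0, 1), (1, 0), (1, 1)]
TARGETS = [0, 1, 1, 0]


def relu(z):
    return max(0, z)


def hidden_layer_net(x1, x2):
    """One intermediate layer of two ReLU neurons, weights set by hand."""
    h1 = relu(x1 + x2)
    h2 = relu(x1 + x2 - 1)
    return h1 - 2 * h2


# The best least-squares linear model a*x1 + b*x2 + c for XOR is the constant 0.5:
# its residuals are orthogonal to every input feature (and to the constant),
# which is exactly the optimality condition for least squares.
residuals = [t - 0.5 for t in TARGETS]
features = [[x1 for x1, _ in POINTS], [x2 for _, x2 in POINTS], [1] * 4]
orthogonal = all(sum(r * f for r, f in zip(residuals, feat)) == 0 for feat in features)

linear_loss = sum(r ** 2 for r in residuals)
net_loss = sum((hidden_layer_net(*p) - t) ** 2 for p, t in zip(POINTS, TARGETS))
print("linear optimum is constant 0.5:", orthogonal, "loss:", linear_loss)
print("hidden-layer net loss:", net_loss)
```

Without the intermediate layer, the best possible answer is 0.5 for every input; with it, the two hidden neurons split the input space so the output layer can combine them into XOR.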

OK, so let’s say one’s settled on a certain neural net architecture. Now there’s the issue of getting data to train the network with. And many of the practical challenges around neural nets—and machine learning in general—center on acquiring or preparing the necessary training data. In many cases (“supervised learning”) one wants to get explicit examples of inputs and the outputs one is expecting from them. Thus, for example, one might want images tagged by what’s in them, or some other attribute. And maybe one will have to explicitly go through—usually with great effort—and do the tagging. But very often it turns out to be possible to piggyback on something that’s already been done, or use it as some kind of proxy. And so, for example, one might use alt tags that have been provided for images on the web. Or, in a different domain, one might use closed captions that have been created for videos. Or—for language translation training—one might use parallel versions of webpages or other documents that exist in different languages.

好的,假设我们已经确定了一种神经网络架构。接下来是获取训练数据的问题。许多神经网络的实际挑战——以及一般的机器学习挑战——都集中在获取或准备必要的训练数据上。在很多情况下(“监督学习”),我们需要明确的输入和期望输出的例子。例如,我们可能需要标记出图片中的内容或其他属性。也许我们需要亲自费力地进行标记。但通常会发现,有可能依赖已经完成的工作,或将其作为某种代理来使用。 比如,可以使用为网页上的图片提供的替代标签。或者,在其他领域,可以使用为视频创建的字幕。又或者——在语言翻译训练中,可以使用存在于不同语言的网页或其他文档的对应版本。

How much data do you need to show a neural net to train it for a particular task? Again, it’s hard to estimate from first principles. Certainly the requirements can be dramatically reduced by using “transfer learning” to “transfer in” things like lists of important features that have already been learned in another network. But generally neural nets need to “see a lot of examples” to train well. And at least for some tasks it’s an important piece of neural net lore that the examples can be incredibly repetitive. And indeed it’s a standard strategy to just show a neural net all the examples one has, over and over again. In each of these “training rounds” (or “epochs”) the neural net will be in at least a slightly different state, and somehow “reminding it” of a particular example is useful in getting it to “remember that example”. (And, yes, perhaps this is analogous to the usefulness of repetition in human memorization.)

需要向神经网络展示多少数据才能训练它完成特定任务?同样,这很难从基本原理出发估计。当然,利用“迁移学习”把另一个网络中已经学到的东西(例如重要特征列表)“迁移进来”,可以显著降低需求。但总体来说,神经网络需要“看到大量的例子”才能训练好。而且至少对于某些任务,有一条重要的神经网络经验:这些例子可以极其重复。事实上,一个标准策略就是把手头所有的例子一遍又一遍地展示给神经网络。在每一轮“训练”(即“epoch”,轮次)中,神经网络的状态都至少会有些许不同,而以某种方式“提醒”它某个特定例子,有助于让它“记住那个例子”。(是的,这或许类似于重复在人类记忆中的作用。)
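A minimal sketch of training in repeated rounds ("epochs"), assuming a one-weight model y = w·x and nine made-up examples from y = 3x: every epoch shows the same examples again, in shuffled order, with one gradient step per example. The learning rate and epoch count are arbitrary.

```python
import random

random.seed(1)

# A small, fixed set of hypothetical examples from y = 3x.
EXAMPLES = [(x / 4, 3 * x / 4) for x in range(-4, 5)]


def train(epochs, rate=0.1):
    """Show the same examples again in every epoch, in shuffled order."""
    w = 0.0
    losses = []
    for _ in range(epochs):
        order = EXAMPLES[:]
        random.shuffle(order)
        for x, y in order:
            w -= rate * 2 * (w * x - y) * x   # one gradient step per example
        losses.append(sum((w * x - y) ** 2 for x, y in EXAMPLES))
    return w, losses


w, losses = train(epochs=10)
for epoch, value in enumerate(losses, start=1):
    print(epoch, round(value, 6))
```

The printed loss shrinks epoch after epoch even though no new data is ever added: each pass over the same examples moves the weight a bit closer to 3.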

But often just repeating the same example over and over again isn’t enough. It’s also necessary to show the neural net variations of the example. And it’s a feature of neural net lore that those “data augmentation” variations don’t have to be sophisticated to be useful. Just slightly modifying images with basic image processing can make them essentially “as good as new” for neural net training. And, similarly, when one’s run out of actual video, etc. for training self-driving cars, one can go on and just get data from running simulations in a model videogame-like environment without all the detail of actual real-world scenes.

但是,仅仅重复同样的例子往往是不够的。还需要向神经网络展示不同的变体。而在神经网络领域,有一个特点是,这些“数据增强”变体并不需要非常复杂就可以发挥作用。只需通过基本的图像处理略微修改图像,就可以使它们对于神经网络训练来说几乎“焕然一新”。类似地,当用于训练自动驾驶汽车的实际视频资源耗尽时,可以在类似于电子游戏的模拟环境中获取数据,而不必包含实际现实场景中的所有细节。
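Simple augmentations of the kind described can be sketched in a few lines. The tiny 3×4 "image" and the particular transformations (mirror and one-pixel shift) are illustrative assumptions; the key property is that each variant keeps the original's label, since a mirrored cat is still a cat.

```python
def flip_horizontal(image):
    """Mirror each row left-to-right."""
    return [row[::-1] for row in image]


def shift_right(image, fill=0):
    """Shift the image one pixel right, filling the vacated column."""
    return [[fill] + row[:-1] for row in image]


def augment(image):
    """Return the original plus a few cheap variants of it."""
    return [image, flip_horizontal(image), shift_right(image),
            shift_right(flip_horizontal(image))]


# A hypothetical 3x4 grayscale "image".
img = [
    [0, 1, 2, 3],
    [4, 5, 6, 7],
    [8, 9, 10, 11],
]
variants = augment(img)
print(len(variants))
for v in variants[1:]:
    print(v)
```

One labeled example becomes four, at the cost of a few list operations rather than any new data collection.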

How about something like ChatGPT? Well, it has the nice feature that it can do “unsupervised learning”, making it much easier to get it examples to train from. Recall that the basic task for ChatGPT is to figure out how to continue a piece of text that it’s been given. So to get it “training examples” all one has to do is get a piece of text, and mask out the end of it, and then use this as the “input to train from”—with the “output” being the complete, unmasked piece of text. We’ll discuss this more later, but the main point is that—unlike, say, for learning what’s in images—there’s no “explicit tagging” needed; ChatGPT can in effect just learn directly from whatever examples of text it’s given.

ChatGPT 这样的东西怎么样?它有一个很好的功能,可以进行“无监督学习”,这使得获取训练示例变得容易得多。记住,ChatGPT 的基本任务是根据给定的文本片段推断接下来的内容。因此,要获取“训练示例”,只需要获取一段文本,屏蔽住末尾部分,并将其用作“输入”,然后用完整的、未屏蔽的文本作为“输出”就可以了。我们稍后会详细讨论,但主要的区别是,和图像识别不同,不需要“显式标记”;ChatGPT 可以直接从任何提供的文本示例中学习。
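The masking trick can be sketched directly: every prefix of a text becomes an input, and the word that actually followed becomes the expected output. This is a simplification, assuming whitespace-separated words; ChatGPT itself works with tokens rather than whole words, and the function name is hypothetical.

```python
def next_word_examples(text):
    """Turn raw text into (input prefix, word to predict) training pairs.

    Each prefix is the text with its end masked off; the target is the word
    that actually came next. No human labeling is needed.
    """
    words = text.split()
    return [(" ".join(words[:i]), words[i]) for i in range(1, len(words))]


for prefix, target in next_word_examples("The best thing about AI is its ability to"):
    print(f"{prefix!r} -> {target!r}")
```

A single nine-word sentence yields eight training examples, and any text at all can be processed the same way.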

OK, so what about the actual learning process in a neural net? In the end it’s all about determining what weights will best capture the training examples that have been given. And there are all sorts of detailed choices and “hyperparameter settings” (so called because the weights can be thought of as “parameters”) that can be used to tweak how this is done. There are different choices of loss function (sum of squares, sum of absolute values, etc.). There are different ways to do loss minimization (how far in weight space to move at each step, etc.). And then there are questions like how big a “batch” of examples to show to get each successive estimate of the loss one’s trying to minimize. And, yes, one can apply machine learning (as we do, for example, in Wolfram Language) to automate machine learning—and to automatically set things like hyperparameters.

好的,那么神经网络中实际的学习过程是怎样的呢?归根结底,这全都是为了确定哪些权重能最好地捕捉所给出的训练示例。有各种各样的具体选择和“超参数设置”(之所以这样称呼,是因为权重本身可以被看作“参数”)可以用来调整这一过程。损失函数有不同的选择(平方和、绝对值和等)。损失最小化也有不同的方法(例如每一步在权重空间中移动多远等)。还有一些问题,比如每次估计要最小化的损失时,应该展示多大“批量”的示例。而且,确实可以应用机器学习(例如我们在 Wolfram 语言中所做的那样)来自动化机器学习,并自动设置超参数之类的内容。
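The hyperparameters named here (loss function, step size, batch size) can be seen as plain arguments to a training loop. A sketch under assumptions: a two-weight linear model, made-up data from y = 2x − 1, and arbitrary default values; the "absolute" option uses a subgradient, since |r| has no derivative at 0.

```python
import random

# Hypothetical data from y = 2x - 1, with x in [-1, 1].
DATA = [(x, 2 * x - 1) for x in (i / 10 for i in range(-10, 11))]


def loss_grad(residual, kind):
    """Derivative of the per-example loss with respect to the residual."""
    if kind == "squares":            # loss = residual**2
        return 2 * residual
    if kind == "absolute":           # loss = abs(residual); subgradient 0 at 0
        return (residual > 0) - (residual < 0)
    raise ValueError(kind)


def batches(data, batch_size):
    """Split the data into consecutive minibatches."""
    return [data[i:i + batch_size] for i in range(0, len(data), batch_size)]


def train(kind="squares", rate=0.1, batch_size=4, epochs=200, seed=0):
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    data = DATA[:]
    for _ in range(epochs):
        rng.shuffle(data)
        for batch in batches(data, batch_size):
            gw = gb = 0.0
            for x, y in batch:
                g = loss_grad(w * x + b - y, kind)
                gw += g * x
                gb += g
            # Average the gradient over the batch, then take one step of size `rate`.
            w -= rate * gw / len(batch)
            b -= rate * gb / len(batch)
    return w, b


for kind in ("squares", "absolute"):
    print(kind, [round(v, 2) for v in train(kind=kind)])
```

With squared loss the weights settle at about 2 and −1; the absolute-value loss with a fixed step tends to hover near those values rather than settle exactly, one example of how such choices change the behavior of training.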

But in the end the whole process of training can be characterized by seeing how the loss progressively decreases (as in this Wolfram Language progress monitor for a small training):

但最终,整个训练过程可以通过观察损失值的逐渐减少来进行描述(如图所示,这是一个用于小型训练的 Wolfram 语言进度监控器):

And what one typically sees is that the loss decreases for a while, but eventually flattens out at some constant value. If that value is sufficiently small, then the training can be considered successful; otherwise it’s probably a sign one should try changing the network architecture.

通常情况是,损失首先会下降一段时间,然后最终趋于一个固定值。如果这个固定值足够小,那么可以认为训练是成功的;否则,这可能表明需要尝试调整网络架构。
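The rule of thumb in this paragraph can be written as a small check on a recorded learning curve. The window, tolerance and "good enough" threshold are hypothetical values, and the two curves are synthetic.

```python
def has_plateaued(losses, window=5, tolerance=1e-2):
    """True if the loss improved by less than `tolerance` (relative)
    over the last `window` recorded values."""
    if len(losses) <= window:
        return False
    old, new = losses[-window - 1], losses[-1]
    return (old - new) <= tolerance * abs(old)


def verdict(losses, good_enough=0.01):
    """Interpret a flattened learning curve as in the text."""
    if not has_plateaued(losses):
        return "still improving: keep training"
    if losses[-1] <= good_enough:
        return "flattened at a small value: training succeeded"
    return "flattened too high: consider changing the architecture"


# Two hypothetical learning curves.
curve_ok = [1.0 / (1 + t) ** 2 + 0.001 for t in range(200)]
curve_stuck = [0.5 + 1.0 / (1 + t) ** 2 for t in range(200)]
print(verdict(curve_ok))
print(verdict(curve_stuck))
```

Both curves have flattened; what distinguishes them is only the level at which they flattened.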

Can one tell how long it should take for the “learning curve” to flatten out? Like for so many other things, there seem to be approximate power-law scaling relationships that depend on the size of the neural net and the amount of data one’s using. But the general conclusion is that training a neural net is hard—and takes a lot of computational effort. And as a practical matter, the vast majority of that effort is spent doing operations on arrays of numbers, which is what GPUs are good at—which is why neural net training is typically limited by the availability of GPUs.

能否估计“学习曲线”需要多长时间才会趋于平稳?和其他很多事情一样,似乎存在一些近似的幂律标度关系,它们取决于神经网络的规模和所使用的数据量。但总体结论是,训练神经网络很难,需要大量的计算资源。在实际操作中,这些计算工作的绝大部分是对数字数组进行运算,这正是 GPU 所擅长的,这也是神经网络训练通常受限于 GPU 可用性的原因。

In the future, will there be fundamentally better ways to train neural nets—or generally do what neural nets do? Almost certainly, I think. The fundamental idea of neural nets is to create a flexible “computing fabric” out of a large number of simple (essentially identical) components—and to have this “fabric” be one that can be incrementally modified to learn from examples. In current neural nets, one’s essentially using the ideas of calculus—applied to real numbers—to do that incremental modification. But it’s increasingly clear that having high-precision numbers doesn’t matter; 8 bits or less might be enough even with current methods.

将来是否会有更好的方法来训练神经网络,或者通常完成神经网络的任务?我几乎可以肯定。神经网络的基本思想是通过大量简单(几乎相同)的组件创建一个灵活的“计算结构”,并使其能够通过逐步修改从例子中学习。在当前的神经网络中,我们基本上是使用微积分的思想——应用于实数——来进行这种逐步修改。但是越来越明显的是,高精度的数字并不重要;即使是当前的方法,8 位甚至更少的精度可能也足够。
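To make the point about low precision concrete, here is a sketch of one common scheme, symmetric 8-bit quantization (an illustrative choice, not a description of any particular system): each weight is stored as an integer from −127 to 127 plus one shared scale factor. The weights and inputs are random stand-ins.

```python
import random

random.seed(2)


def quantize_8bit(weights):
    """Store each weight as an integer in [-127, 127] plus one shared scale."""
    scale = max(abs(w) for w in weights) / 127
    codes = [round(w / scale) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate real-valued weights from the 8-bit codes."""
    return [c * scale for c in codes]


weights = [random.gauss(0, 0.1) for _ in range(10_000)]   # hypothetical weights
inputs = [random.uniform(-1, 1) for _ in range(10_000)]

codes, scale = quantize_8bit(weights)
approx = dequantize(codes, scale)

# Output of a single neuron (its weighted sum), computed both ways.
exact_out = sum(w * x for w, x in zip(weights, inputs))
approx_out = sum(w * x for w, x in zip(approx, inputs))
max_error = max(abs(w - a) for w, a in zip(weights, approx))
print(f"max per-weight error: {max_error:.5f} (half a step is {scale / 2:.5f})")
print(f"neuron output: exact {exact_out:.4f}, 8-bit {approx_out:.4f}")
```

Each weight moves by at most half a quantization step, so the weighted sum changes by at most half a step times the sum of the input magnitudes, and typically by much less, because the rounding errors partly cancel.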

With computational systems like cellular automata that basically operate in parallel on many individual bits it’s never been clear how to do this kind of incremental modification, but there’s no reason to think it isn’t possible. And in fact, much like with the “deep-learning breakthrough of 2012” it may be that such incremental modification will effectively be easier in more complicated cases than in simple ones.

对于诸如细胞自动机之类在多个单独比特上并行操作的计算系统,一直没有明确如何进行这种增量修改,但没有理由认为它不可能。而且,实际上,就像 2012 年的“深度学习突破”一样,这种增量修改在更复杂的情况下可能会更容易。

Neural nets—perhaps a bit like brains—are set up to have an essentially fixed network of neurons, with what’s modified being the strength (“weight”) of connections between them. (Perhaps in at least young brains significant numbers of wholly new connections can also grow.) But while this might be a convenient setup for biology, it’s not at all clear that it’s even close to the best way to achieve the functionality we need. And something that involves the equivalent of progressive network rewriting (perhaps reminiscent of our Physics Project) might well ultimately be better.

神经网络——可能有点类似大脑——被设置为具有一个基本固定的神经元网络,所修改的是它们之间连接的强度(“权重”)。(也许在至少年轻的大脑中,大量全新的连接也能形成。)但尽管这可能对生物学来说很方便,但这是否是实现我们所需功能的最佳方式还完全不清楚。涉及渐进网络重写的机制(可能让人联想到我们的物理项目)最终可能会更好。

But even within the framework of existing neural nets there’s currently a crucial limitation: neural net training as it’s now done is fundamentally sequential, with the effects of each batch of examples being propagated back to update the weights. And indeed with current computer hardware—even taking into account GPUs—most of a neural net is “idle” most of the time during training, with just one part at a time being updated. And in a sense this is because our current computers tend to have memory that is separate from their CPUs (or GPUs). But in brains it’s presumably different—with every “memory element” (i.e. neuron) also being a potentially active computational element. And if we could set up our future computer hardware this way it might become possible to do training much more efficiently.

但即使在现有的神经网络框架内,也存在一个关键限制:目前的神经网络训练本质上是顺序进行的,每批样本的效果都会向后传播以更新权重。实际上,即使考虑到 GPU,使用目前的计算机硬件,大部分时间神经网络的大部分在训练过程中都是“闲置”的,只有一部分在更新。从某种意义上说,这是因为目前的计算机内存通常与其 CPU(或 GPU)是分开的。但在大脑中,每个“记忆元件”(即神经元)也可能是一个活跃的计算元件。 如果我们能这样配置未来的计算机硬件,可能能大大提高训练效率。

“Surely a Network That’s Big Enough Can Do Anything!”

“网络足够大就肯定什么都能做到!”

The capabilities of something like ChatGPT seem so impressive that one might imagine that if one could just “keep going” and train larger and larger neural networks, then they’d eventually be able to “do everything”. And if one’s concerned with things that are readily accessible to immediate human thinking, it’s quite possible that this is the case. But the lesson of the past several hundred years of science is that there are things that can be figured out by formal processes, but aren’t readily accessible to immediate human thinking.

像 ChatGPT 这样的系统,其能力看起来如此令人印象深刻,以至于有人可能会认为,只要“一直做下去”,训练越来越大的神经网络,它们最终就能“做任何事”。如果关注的是人类即时思维容易触及的事情,这很可能确实如此。但是,过去几百年的科学教训告诉我们,有些事情可以通过形式化的过程弄清楚,却不容易被人类的即时思维所触及。

Nontrivial mathematics is one big example. But the general case is really computation. And ultimately the issue is the phenomenon of computational irreducibility. There are some computations which one might think would take many steps to do, but which can in fact be “reduced” to something quite immediate. But the discovery of computational irreducibility implies that this doesn’t always work. And instead there are processes—probably like the one below—where to work out what happens inevitably requires essentially tracing each computational step:

非平凡的数学就是一个很好的例子。但总体来说,其实是计算问题。最终的问题是计算不可约现象。有些计算看似需要很多步骤,但实际上可以“简化”为非常直接的形式。但计算不可约性的发现表明这并不总是可行的。相反,有些过程——可能像下面这个——要确定其结果,必然需要逐步追踪每个计算步骤:
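The figure referred to here is not reproduced in this text. As a stand-in, the sketch below runs the rule 30 cellular automaton, an example Wolfram often uses when discussing computational irreducibility (our choice of example, not necessarily the pictured one). No shortcut is known for predicting its rows: to get row 15, one computes rows 1 through 14 first.

```python
def rule30_step(cells):
    """One step of the rule 30 cellular automaton (cells outside the row are 0)."""
    padded = [0] + cells + [0]
    return [
        padded[i - 1] ^ (padded[i] | padded[i + 1])   # rule 30: left XOR (center OR right)
        for i in range(1, len(padded) - 1)
    ]


def run(width=31, steps=15):
    """Start from a single 1 in the middle and record every row."""
    cells = [0] * width
    cells[width // 2] = 1
    rows = [cells]
    for _ in range(steps):
        cells = rule30_step(cells)
        rows.append(cells)
    return rows


for row in run():
    print("".join("#" if c else "." for c in row))
```

The width is chosen so the pattern, which grows by one cell on each side per step, just reaches the edges after 15 steps; the zero boundary therefore never affects the result.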

The kinds of things that we normally do with our brains are presumably specifically chosen to avoid computational irreducibility. It takes special effort to do math in one’s brain. And it’s in practice largely impossible to “think through” the steps in the operation of any nontrivial program just in one’s brain.

我们平时用大脑所做的那类事情,大概是经过特别选择、以避开计算不可约性的。在大脑中做数学需要特别的努力。而在实际中,仅靠大脑“想透”任何非平凡程序运行中的各个步骤,基本上是不可能的。

But of course for that we have computers. And with computers we can readily do long, computationally irreducible things. And the key point is that there’s in general no shortcut for these.

当然,为此我们有计算机。借助计算机,我们可以轻松完成冗长的、计算不可约的任务。关键在于,这些任务通常没有捷径。

Yes, we could memorize lots of specific examples of what happens in some particular computational system. And maybe we could even see some (“computationally reducible”) patterns that would allow us to do a little generalization. But the point is that computational irreducibility means that we can never guarantee that the unexpected won’t happen—and it’s only by explicitly doing the computation that you can tell what actually happens in any particular case.

是的,我们可以记住很多关于某些特定计算系统中发生情况的具体例子。也许我们甚至可以看到一些(“计算上可约”)的模式,这些模式让我们可以进行一些泛化。但重点是计算不可约性意味着我们永远无法保证意外不会发生——只有通过明确地进行计算,你才能知道在任何特定情况下实际会发生什么。

And in the end there’s just a fundamental tension between learnability and computational irreducibility. Learning involves in effect compressing data by leveraging regularities. But computational irreducibility implies that ultimately there’s a limit to what regularities there may be.

最后,可学习性和计算不可约性之间始终存在着一种根本的矛盾。学习实际上是通过利用规律来压缩数据。但计算不可约性意味着,归根结底,规律是有限的。
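The link between learning and compression can be illustrated, loosely, with an off-the-shelf compressor standing in for a learner (an analogy of ours, not a claim about how neural nets work): zlib shrinks data with obvious regularities dramatically, but finds nothing to exploit in pseudo-random bytes.

```python
import random
import zlib

random.seed(3)

regular = b"cat dog " * 500                                            # highly regular
irregular = bytes(random.getrandbits(8) for _ in range(len(regular)))  # no visible pattern

for name, data in (("regular", regular), ("irregular", irregular)):
    packed = zlib.compress(data, 9)
    print(f"{name}: {len(data)} bytes -> {len(packed)} bytes")
```

The "irregular" bytes are themselves produced by a short deterministic program (Python's `random` module), yet zlib finds no pattern in them: whatever regularity exists is not in a form this compressor can pick up.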

As a practical matter, one can imagine building little computational devices—like cellular automata or Turing machines—into trainable systems like neural nets. And indeed such devices can serve as good “tools” for the neural net—like Wolfram|Alpha can be a good tool for ChatGPT. But computational irreducibility implies that one can’t expect to “get inside” those devices and have them learn.

实际而言,可以设想将小型计算设备(如元胞自动机或图灵机)嵌入到可训练系统(如神经网络)中。而这样的设备确实可以为神经网络提供有效的“工具”——就像 Wolfram|Alpha 是 ChatGPT 的好工具一样。但是,计算不可约性意味着无法“进入”这些设备并使其学习。

Or put another way, there’s an ultimate tradeoff between capability and trainability: the more you want a system to make “true use” of its computational capabilities, the more it’s going to show computational irreducibility, and the less it’s going to be trainable. And the more it’s fundamentally trainable, the less it’s going to be able to do sophisticated computation.

换句话说,能力和可训练性之间存在一个最终的权衡:系统越能发挥其计算能力,它就越表现出计算不可约性,训练难度就越大。反之,系统越容易训练,它执行复杂计算的能力就越弱。

(For ChatGPT as it currently is, the situation is actually much more extreme, because the neural net used to generate each token of output is a pure “feed-forward” network, without loops, and therefore has no ability to do any kind of computation with nontrivial “control flow”.)

(对于当前版本的 ChatGPT 来说,情况实际上更为极端,因为用来生成每个输出词元(token)的神经网络是一个纯“前馈”网络,没有循环,因此无法进行任何带有非平凡“控制流”的计算。)

Of course, one might wonder whether it’s actually important to be able to do irreducible computations. And indeed for much of human history it wasn’t particularly important. But our modern technological world has been built on engineering that makes use of at least mathematical computations—and increasingly also more general computations. And if we look at the natural world, it’s full of irreducible computation—that we’re slowly understanding how to emulate and use for our technological purposes.

当然,有人可能会想,能进行不可简化计算是否真的重要。实际上,在人类历史的大部分时间里,这并不是特别重要。然而,我们现代的技术世界是建立在至少利用数学计算的工程基础上的,并且越来越多地依赖于更广泛的计算。如果我们看自然界,它充满了不可简化的计算——我们正在逐步理解如何模拟和利用这些计算来实现我们的技术目标。

Yes, a neural net can certainly notice the kinds of regularities in the natural world that we might also readily notice with “unaided human thinking”. But if we want to work out things that are in the purview of mathematical or computational science, the neural net isn’t going to be able to do it—unless it effectively “uses as a tool” an “ordinary” computational system.

是的,神经网络确实可以发现我们通过“未经辅助的人类思维”能注意到的自然规律。但是,如果我们想要解决数学或计算科学领域的问题,神经网络是办不到的,除非它能有效地利用一个“普通”的计算系统作为工具。

But there’s something potentially confusing about all of this. In the past there were plenty of tasks—including writing essays—that we’ve assumed were somehow “fundamentally too hard” for computers. And now that we see them done by the likes of ChatGPT we tend to suddenly think that computers must have become vastly more powerful—in particular surpassing things they were already basically able to do (like progressively computing the behavior of computational systems like cellular automata).

但这一切可能会有些令人困惑。过去,我们认为很多任务(包括写文章)对计算机来说“从根本上就太难了”。如今,当我们看到像 ChatGPT 这样的程序完成了这些任务,我们往往会突然认为计算机一定变得强大得多,甚至超越了它们原本基本就能做到的事情(比如逐步计算元胞自动机这类计算系统的行为)。

But this isn’t the right conclusion to draw. Computationally irreducible processes are still computationally irreducible, and are still fundamentally hard for computers—even if computers can readily compute their individual steps. And instead what we should conclude is that tasks—like writing essays—that we humans could do, but we didn’t think computers could do, are actually in some sense computationally easier than we thought.

但这并不是正确的结论。计算上不可化简的过程仍然是不可化简的,计算机处理起来仍然很困难——尽管计算机可以轻松执行每一步操作。我们应该得出的结论是,像写作文这样我们人类能做到但认为计算机无能为力的任务,实际上在某种程度上比我们想象中更容易计算。

In other words, the reason a neural net can be successful in writing an essay is because writing an essay turns out to be a “computationally shallower” problem than we thought. And in a sense this takes us closer to “having a theory” of how we humans manage to do things like writing essays, or in general deal with language.

换句话说,神经网络能够成功地写作文章的原因是,写作文章实际上是一个比我们想象中“计算上更简单”的问题。从某种意义上说,这使我们更接近于理解“我们人类是如何完成写作文章或处理语言等事情的理论”。

If you had a big enough neural net then, yes, you might be able to do whatever humans can readily do. But you wouldn’t capture what the natural world in general can do—or what the tools that we’ve fashioned from the natural world can do. And it’s the use of those tools—both practical and conceptual—that has allowed us in recent centuries to transcend the boundaries of what’s accessible to “pure unaided human thought”, and capture for human purposes more of what’s out there in the physical and computational universe.

如果你有一个足够大的神经网络,那么,是的,你或许能够做到人类可以轻松做到的任何事情。但你无法捕捉到自然界总体上能做的事情,也无法捕捉到我们从自然界中打造出的工具所能做的事情。正是这些工具(无论是实践上的还是概念上的)的使用,使我们在最近几个世纪中得以超越“纯粹未经辅助的人类思维”所能触及的界限,并为人类的目的捕捉到物理宇宙和计算宇宙中更多的东西。

The Concept of Embeddings

嵌入的概念

Neural nets—at least as they’re currently set up—are fundamentally based on numbers. So if we’re going to use them to work on something like text we’ll need a way to represent our text with numbers. And certainly we could start (essentially as ChatGPT does) by just assigning a number to every word in the dictionary. But there’s an important idea—that’s for example central to ChatGPT—that goes beyond that. And it’s the idea of “embeddings”. One can think of an embedding as a way to try to represent the “essence” of something by an array of numbers—with the property that “nearby things” are represented by nearby numbers.

当前的神经网络基本上是基于数字构建的。所以如果我们要用它们来处理文本,就需要找到一种用数字表示文本的方法。当然,我们可以像 ChatGPT 那样,通过为字典中的每个单词分配一个数字来开始。但是有一个重要的概念,对 ChatGPT 来说尤为重要,那就是“嵌入”的概念。可以把嵌入看作是一种用数字数组来表示事物“本质”的方法,其中“相近的事物”由相近的数字来表示。
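Here is a minimal sketch of the "nearby things, nearby numbers" property. It assumes hand-picked three-number vectors, whereas real embeddings are learned and much longer. The Euclidean distance between two arrays stands in for how different the corresponding words are.

```python
import math

# Hypothetical hand-picked 3-number "embeddings" (real ones are learned, and much longer).
EMBEDDINGS = {
    "cat":   [0.9, 0.8, 0.1],
    "dog":   [0.8, 0.9, 0.2],
    "car":   [0.1, 0.2, 0.9],
    "truck": [0.2, 0.1, 0.8],
}


def distance(a, b):
    """Euclidean distance between the embeddings of words a and b."""
    return math.dist(EMBEDDINGS[a], EMBEDDINGS[b])


def nearest(word):
    """The other word whose embedding is closest to this one."""
    return min((w for w in EMBEDDINGS if w != word), key=lambda w: distance(word, w))


if __name__ == "__main__":
    print(nearest("cat"), nearest("car"))  # dog truck
```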

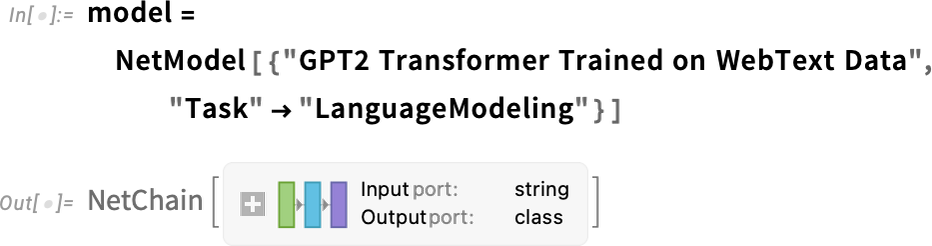

And so, for example, we can think of a word embedding as trying to lay out words in a kind of “meaning space” in which words that are somehow “nearby in meaning” appear nearby in the embedding. The actual embeddings that are used—say in ChatGPT—tend to involve large lists of numbers. But if we project down to 2D, we can show examples of how words are laid out by the embedding:

因此,例如,我们可以将词嵌入看作是尝试在一种“意义空间”中布置词汇,其中意义相近的词汇在嵌入中靠得很近。实际使用的嵌入,比如在 ChatGPT 中,通常涉及大量数字列表。但如果我们投影到二维平面上,我们可以展示词汇在嵌入中的排列方式:
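Projecting long embedding vectors down to 2D is commonly done with principal component analysis (PCA). The text does not say which projection produced these plots, so treat PCA here as one reasonable choice. Here is a minimal NumPy sketch:

```python
import numpy as np


def pca_project(vectors, k=2):
    """Project each row of `vectors` onto the top-k principal components (PCA via SVD)."""
    x = np.asarray(vectors, dtype=float)
    centered = x - x.mean(axis=0)            # PCA works on mean-centered data
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:k].T               # coordinates along the k main directions


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    embeddings = rng.standard_normal((20, 300))  # 20 hypothetical 300-number embeddings
    print(pca_project(embeddings).shape)         # (20, 2)
```

The same function with `k=3` gives a 3D view. Any such projection discards information, so points that look close in 2D are not necessarily close in the full space.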

And, yes, what we see does remarkably well in capturing typical everyday impressions. But how can we construct such an embedding? Roughly the idea is to look at large amounts of text (here 5 billion words from the web) and then see “how similar” the “environments” are in which different words appear. So, for example, “alligator” and “crocodile” will often appear almost interchangeably in otherwise similar sentences, and that means they’ll be placed nearby in the embedding. But “turnip” and “eagle” won’t tend to appear in otherwise similar sentences, so they’ll be placed far apart in the embedding.

是的,我们看到的确实非常擅长捕捉典型的日常印象。但是我们如何构建这样的嵌入呢?大致的想法是查看大量的文本(这里是从网络上获取的 50 亿个单词),然后看不同单词出现的“环境”有多“相似”。比如,“鳄鱼”和“短吻鳄”通常会在相似的句子中互换出现,这意味着它们在嵌入中会被放在一起。但“萝卜”和“鹰”在相似的句子中很少出现,所以它们在嵌入中会被放得很远。
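You can sketch the "similar environments" idea without any neural net by counting context words. This is a simple count-based co-occurrence method on a made-up six-sentence corpus. It is not the procedure behind the embeddings shown here. Words that occur in the same contexts get context-count vectors with cosine similarity near 1.

```python
import math
from collections import Counter

# A made-up toy corpus; real embeddings are built from billions of words.
CORPUS = [
    "the alligator swam in the river",
    "the crocodile swam in the river",
    "a hungry alligator ate the fish",
    "a hungry crocodile ate the fish",
    "the eagle flew over the mountain",
    "she cooked the turnip in the soup",
]


def context_vectors(sentences, window=2):
    """Map each word to counts of the words within `window` positions of it."""
    vectors = {}
    for sentence in sentences:
        words = sentence.split()
        for i, word in enumerate(words):
            counts = vectors.setdefault(word, Counter())
            for j in range(max(0, i - window), min(len(words), i + window + 1)):
                if j != i:
                    counts[words[j]] += 1
    return vectors


def cosine(a, b):
    """Cosine similarity of two sparse count vectors (1.0 = identical environments)."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


if __name__ == "__main__":
    vecs = context_vectors(CORPUS)
    print(round(cosine(vecs["alligator"], vecs["crocodile"]), 3))  # 1.0
    print(round(cosine(vecs["turnip"], vecs["eagle"]), 3))
```

In practice, frequent words like "the" dominate raw counts. Count-based methods usually reweight them, and neural methods learn dense vectors instead.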

But how does one actually implement something like this using neural nets? Let’s start by talking about embeddings not for words, but for images. We want to find some way to characterize images by lists of numbers in such a way that “images we consider similar” are assigned similar lists of numbers.

但是,如何实际使用神经网络实现这样的东西呢?让我们先来谈谈图像的嵌入,而不是单词的嵌入。我们希望找到一种方法,通过数字列表来表征图像,使得“我们认为相似的图像”具有相似的数字列表。

How do we tell if we should “consider images similar”? Well, if our images are, say, of handwritten digits we might “consider two images similar” if they are of the same digit. Earlier we discussed a neural net that was trained to recognize handwritten digits. And we can think of this neural net as being set up so that in its final output it puts images into 10 different bins, one for each digit.

我们如何判断两张图像是否相似呢?如果我们的图像是手写数字,例如,我们可以认为两张图像相似如果它们展示的是相同的数字。之前我们讨论了一个用于识别手写数字的神经网络。我们可以把这个神经网络想象成,它会在最终输出时把图像分类到 10 个不同的类别中,每个数字对应一个类别。

But what if we “intercept” what’s going on inside the neural net before the final “it’s a ‘4’” decision is made? We might expect that inside the neural net there are numbers that characterize images as being “mostly 4-like but a bit 2-like” or some such. And the idea is to pick up such numbers to use as elements in an embedding.

但是,如果我们在神经网络做出最终的“这是一个‘4’”的决定之前“拦截”正在发生的事情呢?可能在神经网络内部有一些数字,表示图像是“主要像 4,但有点像 2”之类的。这个想法是捕捉这些数字用作嵌入元素。

So here’s the concept. Rather than directly trying to characterize “what image is near what other image”, we instead consider a well-defined task (in this case digit recognition) for which we can get explicit training data—then use the fact that in doing this task the neural net implicitly has to make what amount to “nearness decisions”. So instead of us ever explicitly having to talk about “nearness of images” we’re just talking about the concrete question of what digit an image represents, and then we’re “leaving it to the neural net” to implicitly determine what that implies about “nearness of images”.

这个概念是这样的。我们不直接尝试去描述“哪个图像接近哪个图像”,而是采用一个明确的任务(在本例中是数字识别),我们可以获得明确的训练数据—然后利用神经网络在执行此任务时必须隐含做出的“接近性”判断。因此,我们不需要明确讨论“图像的接近性”,而是讨论图像代表的具体数字问题,然后让神经网络隐含地决定“图像接近性”的意义。

So how in more detail does this work for the digit recognition network? We can think of the network as consisting of 11 successive layers, which we might summarize iconically like this (with activation functions shown as separate layers):

那么,数字识别网络具体是如何工作的呢?我们可以将其视为由 11 个连续层组成,可以这样图示化总结(激活函数作为单独的层显示):

At the beginning we’re feeding into the first layer actual images, represented by 2D arrays of pixel values. And at the end—from the last layer—we’re getting out an array of 10 values, which we can think of as saying “how certain” the network is that the image corresponds to each of the digits 0 through 9.

开始时我们将实际图像(用像素值的二维数组表示)输入到第一层。最后一层的输出是一个包含 10 个值的数组,我们可以理解为网络对图像是数字 0 到 9 中哪一个的确定性。

Feed in an image of a handwritten 4, and the values of the neurons in that last layer are:

输入一张手写数字 4 的图像后,最后一层的神经元值是:

In other words, the neural net is by this point “incredibly certain” that this image is a 4—and to actually get the output “4” we just have to pick out the position of the neuron with the largest value.

换句话说,此时神经网络已经“非常确定”这张图像是 4——要得到输出“4”,我们只需找出数值最大的那个神经元的位置。

But what if we look one step earlier? The very last operation in the network is a so-called softmax which tries to “force certainty”. But before that’s been applied the values of the neurons are:

但是如果我们往前看一步呢?网络中的最后一个操作是一个所谓的 softmax,它试图“强制确定性”。但是在它应用之前,神经元的值是:
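Here is a minimal sketch of softmax followed by picking the largest value. The ten pre-softmax numbers are made up for illustration and are not the values from the article's network. Softmax exponentiates and normalizes, which exaggerates the gap between the largest value and the rest. It preserves their order, though, so the position of the largest value is the same before and after.

```python
import math


def softmax(logits):
    """Exponentiate and normalize so the values are positive and sum to 1."""
    m = max(logits)                          # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical pre-softmax values for digits 0-9 (not the article's actual numbers).
LOGITS = [-2.0, -1.5, 3.0, -0.5, 12.0, 0.5, -1.0, 1.0, 0.0, 2.0]
probs = softmax(LOGITS)
predicted_digit = max(range(10), key=lambda d: probs[d])  # position of the largest value

if __name__ == "__main__":
    print(predicted_digit)  # 4
```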

The neuron representing “4” still has the highest numerical value. But there’s also information in the values of the other neurons. And we can expect that this list of numbers can in a sense be used to characterize the “essence” of the image—and thus to provide something we can use as an embedding. And so, for example, each of the 4’s here has a slightly different “signature” (or “feature embedding”)—all very different from the 8’s:

代表“4”的神经元仍然具有最高的数值,但是其他神经元的数值也包含了信息。我们可以预期,这些数字列表在某种程度上可以描述图像的“本质”,从而提供我们可以用作嵌入的信息。所以,例如,每个 4 都有稍微不同的“特征签名”(或“特征嵌入”)——这与 8 的特征截然不同。

Here we’re essentially using 10 numbers to characterize our images. But it’s often better to use many more than that. And for example in our digit recognition network we can get an array of 500 numbers by tapping into the preceding layer. And this is probably a reasonable array to use as an “image embedding”.

在这里,我们基本上使用 10 个数字来描述我们的图像。但通常使用更多会更好。例如,在我们的数字识别网络中,通过利用前一层的输出,我们可以得到一个由 500 个数字组成的数组。这可能是一个合理的“图像嵌入”数组。
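To tap into the layer before the output, you can write a forward pass that returns its intermediate activations. The network here is hypothetical: random, untrained weights with a 784 to 500 to 10 shape, not the article's 11-layer network. The 784 inputs assume 28x28-pixel images, and the 500 and 10 match the numbers in the text.

```python
import numpy as np

rng = np.random.default_rng(0)
# Hypothetical, untrained weights: 28x28 pixels (784 numbers) -> 500 hidden -> 10 digits.
W1 = rng.standard_normal((784, 500)) * 0.05
b1 = np.zeros(500)
W2 = rng.standard_normal((500, 10)) * 0.05
b2 = np.zeros(10)


def forward(image):
    """Run the net, returning both the penultimate layer and the final scores."""
    x = np.asarray(image, dtype=float).reshape(-1)  # flatten 28x28 -> 784
    hidden = np.maximum(0.0, x @ W1 + b1)            # 500 numbers: the layer we "tap"
    logits = hidden @ W2 + b2                        # 10 numbers, one per digit
    return hidden, logits


def embed(image):
    """The "image embedding": intercept the activations before the final decision."""
    hidden, _ = forward(image)
    return hidden


def predict(image):
    """The final decision: the position of the largest of the 10 scores."""
    _, logits = forward(image)
    return int(np.argmax(logits))
```

With trained weights, images of the same digit would be expected to produce nearby `embed` vectors. With these random weights the shapes are right, but the numbers carry no meaning.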

If we want to make an explicit visualization of “image space” for handwritten digits we need to “reduce the dimension”, effectively by projecting the 500-dimensional vector we’ve got into, say, 3D space:

如果我们想清晰地可视化手写数字的“图像空间”,需要“降维”,实际上就是把我们得到的 500 维向量投影到 3 维空间:

We’ve just talked about creating a characterization (and thus embedding) for images based effectively on identifying the similarity of images by determining whether (according to our training set) they correspond to the same handwritten digit. And we can do the same thing much more generally for images if we have a training set that identifies, say, which of 5000 common types of object (cat, dog, chair, …) each image is of. And in this way we can make an image embedding that’s “anchored” by our identification of common objects, but then “generalizes around that” according to the behavior of the neural net. And the point is that insofar as that behavior aligns with how we humans perceive and interpret images, this will end up being an embedding that “seems right to us”, and is useful in practice in doing “human-judgement-like” tasks.

我们刚刚讨论了如何通过判断图像是否对应于相同的手写数字(根据我们的训练集)来创建图像的表征(即嵌入)。如果我们有一个训练集,可以识别出每张图像是 5000 种常见物体(如猫、狗、椅子等)中的哪一种,那么我们可以更广泛地处理图像。这样,我们可以创建一个图像嵌入,其“基础”是我们对常见物体的识别,并且根据神经网络的表现进行“泛化”。 关键是,当这种行为与我们人类感知和解读图像的方式一致时,它将形成一种“对我们来说似乎正确”的表示,并且在执行类似“人类判断”的任务时非常有用。

OK, so how do we follow the same kind of approach to find embeddings for words? The key is to start from a task about words for which we can readily do training. And the standard such task is “word prediction”. Imagine we’re given “the ___ cat”. Based on a large corpus of text (say, the text content of the web), what are the probabilities for different words that might “fill in the blank”? Or, alternatively, given “___ black ___” what are the probabilities for different “flanking words”?

好,那么我们如何采用类似的方法来找到单词的嵌入呢?关键在于从一个关于单词、并且容易进行训练的任务开始。这类标准任务是“单词预测”。想象一下,给出“the ___ cat”。基于大量的文本语料库(比如网络上的文本内容),可能“填补空白”的不同单词的概率是多少?或者,给出“___ black ___”,不同“两侧的词”的概率是多少?
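Before any neural net is involved, direct counting can answer both questions above. Here is a minimal sketch over a made-up four-sentence corpus. A real model is trained on vastly more text and has to generalize beyond exact matches.

```python
from collections import Counter

# A made-up toy corpus; a real model would learn from a large share of the web.
CORPUS = [
    "the black cat sat on the mat",
    "the black cat ran away",
    "i saw the white cat yesterday",
    "the big dog chased the black cat",
]


def _normalize(counts):
    total = sum(counts.values())
    return {key: n / total for key, n in counts.most_common()} if total else {}


def blank_probabilities(sentences, left, right):
    """Estimate P(word | 'left ___ right') by counting exact matches."""
    counts = Counter()
    for sentence in sentences:
        words = sentence.lower().split()
        for i in range(1, len(words) - 1):
            if words[i - 1] == left and words[i + 1] == right:
                counts[words[i]] += 1
    return _normalize(counts)


def flank_probabilities(sentences, middle):
    """Estimate P((left, right) | '___ middle ___') by counting exact matches."""
    counts = Counter()
    for sentence in sentences:
        words = sentence.lower().split()
        for i in range(1, len(words) - 1):
            if words[i] == middle:
                counts[(words[i - 1], words[i + 1])] += 1
    return _normalize(counts)


if __name__ == "__main__":
    print(blank_probabilities(CORPUS, "the", "cat"))   # {'black': 0.75, 'white': 0.25}
    print(flank_probabilities(CORPUS, "black"))        # {('the', 'cat'): 1.0}
```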

How do we set this problem up for a neural net? Ultimately we have to formulate everything in terms of numbers. And one way to do this is just to assign a unique number to each of the 50,000 or so common words in English. So, for example, “the” might be 914, and “ cat” (with a space before it) might be 3542. (And these are the actual numbers used by GPT-2.) So for the “the ___ cat” problem, our input might be {914, 3542}. What should the output be like? Well, it should be a list of 50,000 or so numbers that effectively give the probabilities for each of the possible “fill-in” words. And once again, to find an embedding, we want to “intercept” the “insides” of the neural net just before it “reaches its conclusion”—and then pick up the list of numbers that occur there, and that we can think of as “characterizing each word”.

我们如何为神经网络设置这个问题?最终,我们必须把一切都表示成数字。一种方法是为大约 5 万个常见英语单词各分配一个唯一的编号。例如,“the”可以是 914,而“ cat”(前面带有空格)可以是 3542(这些正是 GPT-2 实际使用的编号)。因此,对于“the ___ cat”这个问题,我们的输入可能是 {914, 3542}。那么,输出应该是什么样的呢?它应该是一个包含大约 5 万个数字的列表,实际上给出了每个可能填入单词的概率。同样,为了找到嵌入,我们要在神经网络“得出结论”之前“截取”它的“内部”,然后获取出现在那里的数字列表,这些数字可以视为“每个单词的表征”。
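The setup described above takes token ids in and produces a probability for each vocabulary entry, with the embedding read off as a row of numbers per token. Here is a NumPy sketch of it. Only the ids 914 and 3542 come from the text. The 16-number embedding size, the random untrained matrices, and the averaging of the input vectors are all assumptions standing in for the real network's internals.

```python
import numpy as np

VOCAB_SIZE = 50_000  # "50,000 or so" tokens, as in the text (illustrative)
EMBED_DIM = 16       # hypothetical; real models use hundreds to thousands of numbers
TOKEN_IDS = {"the": 914, " cat": 3542}  # the two ids quoted in the text

rng = np.random.default_rng(0)
# Hypothetical, untrained parameters standing in for a real network.
embedding_matrix = rng.standard_normal((VOCAB_SIZE, EMBED_DIM)) * 0.02
output_matrix = rng.standard_normal((EMBED_DIM, VOCAB_SIZE)) * 0.02


def embed(ids):
    """Each token's embedding is simply its row of the embedding matrix."""
    return embedding_matrix[ids]


def fill_in_probabilities(ids):
    """Map input token ids to one probability per vocabulary entry."""
    context = embed(ids).mean(axis=0)    # crude stand-in for the network's internals
    logits = context @ output_matrix     # one score per possible fill-in token
    logits -= logits.max()               # numerical stability before exponentiating
    probs = np.exp(logits)
    return probs / probs.sum()


if __name__ == "__main__":
    ids = [TOKEN_IDS["the"], TOKEN_IDS[" cat"]]   # the input {914, 3542}
    probs = fill_in_probabilities(ids)
    print(embed(ids).shape, probs.shape)           # (2, 16) (50000,)
```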

OK, so what do those characterizations look like? Over the past 10 years there has been a sequence of different systems developed (word2vec, GloVe, BERT, GPT, …), each based on a different neural net approach. But ultimately all of them take words and characterize them by lists of hundreds to thousands of numbers.

好的,那么这些表征具体长什么样呢?在过去的 10 年里,人们开发了一系列不同的系统(word2vec、GloVe、BERT、GPT 等),每个系统都基于不同的神经网络方法。但最终它们都是用包含数百到数千个数字的列表来表征单词。

In their raw form, these “embedding vectors” are quite uninformative. For example, here’s what GPT-2 produces as the raw embedding vectors for three specific words:

这些“嵌入向量”在原始形式下信息量很少。例如,以下是 GPT-2 为三个特定单词生成的原始嵌入向量:

If we do things like measure distances between these vectors, then we can find things like “nearnesses” of words. Later we’ll discuss in more detail what we might consider the “cognitive” significance of such embeddings. But for now the main point is that we have a way to usefully turn words into “neural-net-friendly” collections of numbers.