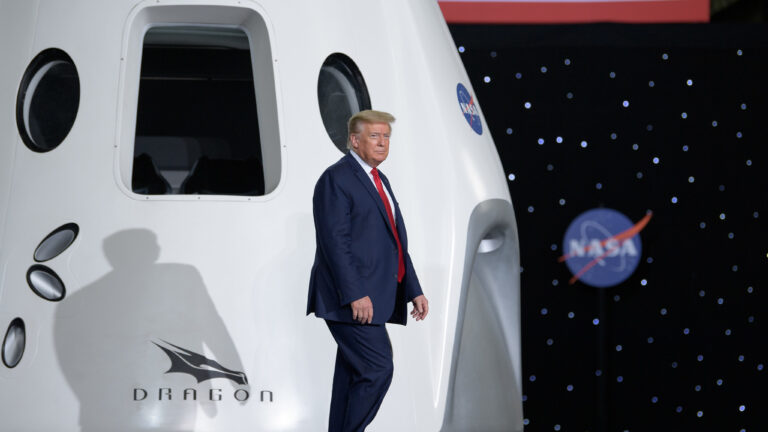

Anthropic has announced a partnership with Palantir and Amazon Web Services to bring its Claude AI models to unspecified US intelligence and defense agencies. Claude, a family of AI language models similar to those that power ChatGPT, will work within Palantir's platform using AWS hosting to process and analyze data. But some critics have called out the deal as contradictory to Anthropic's widely publicized "AI safety" aims.

On X, former Google co-head of AI ethics Timnit Gebru wrote of Anthropic's new deal with Palantir, "Look at how they care so much about 'existential risks to humanity.'"

The partnership makes Claude available within Palantir's Impact Level 6 environment (IL6), a defense-accredited system that handles data critical to national security up to the "secret" classification level. This move follows a broader trend of AI companies seeking defense contracts, with Meta offering its Llama models to defense partners and OpenAI pursuing closer ties with the Defense Department.

In a press release, the companies outlined three main tasks for Claude in defense and intelligence settings: performing operations on large volumes of complex data at high speeds, identifying patterns and trends within that data, and streamlining document review and preparation.

While the partnership announcement suggests broad potential for AI-powered intelligence analysis, it states that human officials will retain their decision-making authority in these operations. As a reference point for the technology's capabilities, Palantir reported that one (unnamed) American insurance company used 78 AI agents powered by Palantir's platform and Claude to reduce an underwriting process from two weeks to three hours.

The new collaboration builds on Anthropic's earlier integration of Claude into AWS GovCloud, a service built for government cloud computing. Anthropic, which recently began operations in Europe, has been seeking funding at a valuation up to $40 billion. The company has raised $7.6 billion, with Amazon as its primary investor.
