Abstract

Proteins are essential to life, and understanding their structure can facilitate a mechanistic understanding of their function. Through an enormous experimental effort1,2,3,4, the structures of around 100,000 unique proteins have been determined5, but this represents a small fraction of the billions of known protein sequences6,7. Structural coverage is bottlenecked by the months to years of painstaking effort required to determine a single protein structure. Accurate computational approaches are needed to address this gap and to enable large-scale structural bioinformatics. Predicting the three-dimensional structure that a protein will adopt based solely on its amino acid sequence—the structure prediction component of the ‘protein folding problem’8—has been an important open research problem for more than 50 years9. Despite recent progress10,11,12,13,14, existing methods fall far short of atomic accuracy, especially when no homologous structure is available. Here we provide the first computational method that can regularly predict protein structures with atomic accuracy even in cases in which no similar structure is known. We validated an entirely redesigned version of our neural network-based model, AlphaFold, in the challenging 14th Critical Assessment of protein Structure Prediction (CASP14)15, demonstrating accuracy competitive with experimental structures in a majority of cases and greatly outperforming other methods. Underpinning the latest version of AlphaFold is a novel machine learning approach that incorporates physical and biological knowledge about protein structure, leveraging multi-sequence alignments, into the design of the deep learning algorithm.

Main

The development of computational methods to predict three-dimensional (3D) protein structures from the protein sequence has proceeded along two complementary paths that focus on either the physical interactions or the evolutionary history. The physical interaction programme heavily integrates our understanding of molecular driving forces into either thermodynamic or kinetic simulation of protein physics16 or statistical approximations thereof17. Although theoretically very appealing, this approach has proved highly challenging for even moderate-sized proteins due to the computational intractability of molecular simulation, the context dependence of protein stability and the difficulty of producing sufficiently accurate models of protein physics. The evolutionary programme has provided an alternative in recent years, in which the constraints on protein structure are derived from bioinformatics analysis of the evolutionary history of proteins, homology to solved structures18,19 and pairwise evolutionary correlations20,21,22,23,24. This bioinformatics approach has benefited greatly from the steady growth of experimental protein structures deposited in the Protein Data Bank (PDB)5, the explosion of genomic sequencing and the rapid development of deep learning techniques to interpret these correlations. Despite these advances, contemporary physical and evolutionary-history-based approaches produce predictions that are far short of experimental accuracy in the majority of cases in which a close homologue has not been solved experimentally and this has limited their utility for many biological applications.

In this study, we develop the first, to our knowledge, computational approach capable of predicting protein structures to near experimental accuracy in a majority of cases. The neural network AlphaFold that we developed was entered into the CASP14 assessment (May–July 2020; entered under the team name ‘AlphaFold2’; a completely different model from our CASP13 AlphaFold system10). The CASP assessment is carried out biennially using recently solved structures that have not been deposited in the PDB or publicly disclosed, so it is a blind test for the participating methods; it has long served as the gold-standard assessment for the accuracy of structure prediction25,26.

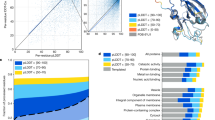

In CASP14, AlphaFold structures were vastly more accurate than competing methods. AlphaFold structures had a median backbone accuracy of 0.96 Å r.m.s.d.95 (Cα root-mean-square deviation at 95% residue coverage) (95% confidence interval = 0.85–1.16 Å) whereas the next best performing method had a median backbone accuracy of 2.8 Å r.m.s.d.95 (95% confidence interval = 2.7–4.0 Å) (measured on CASP domains; see Fig. 1a for backbone accuracy and Supplementary Fig. 14 for all-atom accuracy). As a comparison point for this accuracy, the width of a carbon atom is approximately 1.4 Å. In addition to very accurate domain structures (Fig. 1b), AlphaFold is able to produce highly accurate side chains (Fig. 1c) when the backbone is highly accurate and considerably improves over template-based methods even when strong templates are available. The all-atom accuracy of AlphaFold was 1.5 Å r.m.s.d.95 (95% confidence interval = 1.2–1.6 Å) compared with the 3.5 Å r.m.s.d.95 (95% confidence interval = 3.1–4.2 Å) of the best alternative method. Our methods are scalable to very long proteins with accurate domains and domain-packing (see Fig. 1d for the prediction of a 2,180-residue protein with no structural homologues). Finally, the model is able to provide precise, per-residue estimates of its reliability that should enable the confident use of these predictions.
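
To make the r.m.s.d.95 metric concrete, here is a minimal Python sketch. The function and variable names are our own and hypothetical. It assumes the predicted and experimental Cα coordinates are already superposed in one frame, and it averages only over the 95% of residues with the smallest deviations. The metric as used in the paper also involves a superposition step (see Methods), which this sketch deliberately omits.

```python
import math


def rmsd_at_coverage(pred_ca, true_ca, coverage=0.95):
    """Cα r.m.s.d. over the best-fitting `coverage` fraction of residues.

    Simplified sketch: `pred_ca` and `true_ca` are lists of (x, y, z) tuples in
    ångströms that are assumed to be superposed already; no superposition is
    performed here.
    """
    if not pred_ca or len(pred_ca) != len(true_ca):
        raise ValueError("expected two non-empty coordinate lists of equal length")
    # Squared Cα deviation for every residue, smallest first.
    sq_dev = sorted(math.dist(p, t) ** 2 for p, t in zip(pred_ca, true_ca))
    # Keep the best `coverage` fraction of residues (at least one).
    n_keep = max(1, math.ceil(coverage * len(sq_dev)))
    return math.sqrt(sum(sq_dev[:n_keep]) / n_keep)


# Usage: 19 residues off by 0.5 Å plus one 10 Å outlier.
true_ca = [(3.8 * i, 0.0, 0.0) for i in range(20)]
pred_ca = [(x, y + 0.5, z) for x, y, z in true_ca[:19]] + [(3.8 * 19, 10.0, 0.0)]
print(rmsd_at_coverage(pred_ca, true_ca))       # the outlier falls outside the 95%
print(rmsd_at_coverage(pred_ca, true_ca, 1.0))  # full coverage includes the outlier
```

The coverage cut-off is what keeps a single badly placed terminus from dominating the backbone score.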

Fig. 1: AlphaFold produces highly accurate structures.

a, The performance of AlphaFold on the CASP14 dataset (n = 87 protein domains) relative to the top-15 entries (out of 146 entries); group numbers correspond to the numbers assigned to entrants by CASP. Data are the median and the 95% confidence interval of the median, estimated from 10,000 bootstrap samples. b, Our prediction of CASP14 target T1049 (PDB 6Y4F, blue) compared with the true (experimental) structure (green). Four residues in the C terminus of the crystal structure are B-factor outliers and are not depicted. c, CASP14 target T1056 (PDB 6YJ1). An example of a well-predicted zinc-binding site (AlphaFold has accurate side chains even though it does not explicitly predict the zinc ion). d, CASP target T1044 (PDB 6VR4), a 2,180-residue single chain, was predicted with correct domain packing (the prediction was made after CASP using AlphaFold without intervention). e, Model architecture. Arrows show the information flow among the various components described in this paper. Array shapes are shown in parentheses with s, number of sequences (Nseq in the main text); r, number of residues (Nres in the main text); c, number of channels.
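
The confidence intervals in panel a come from bootstrap resampling of the median. Below is a minimal percentile-bootstrap sketch. The percentile method, the fixed seed and the function name are our assumptions for illustration, and the scores in the usage lines are made up rather than taken from the paper.

```python
import random
import statistics


def bootstrap_median_ci(values, n_boot=10_000, alpha=0.05, seed=0):
    """Median of `values` and a percentile-bootstrap (1 - alpha) interval for it."""
    rng = random.Random(seed)
    n = len(values)
    # Resample with replacement and record the median of every resample.
    boot = sorted(statistics.median(rng.choices(values, k=n)) for _ in range(n_boot))
    lo = boot[round((alpha / 2) * (n_boot - 1))]
    hi = boot[round((1 - alpha / 2) * (n_boot - 1))]
    return statistics.median(values), (lo, hi)


# Usage with made-up per-domain scores (not data from the paper).
scores = [0.6 + 0.05 * (i % 17) for i in range(87)]
median, (lo, hi) = bootstrap_median_ci(scores, n_boot=2_000)
print(f"median = {median:.2f}, 95% CI = {lo:.2f} to {hi:.2f}")
```

The same resampling idea, applied to means instead of medians, underlies the bootstrap percentile intervals reported for the ablations in Fig. 4a.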

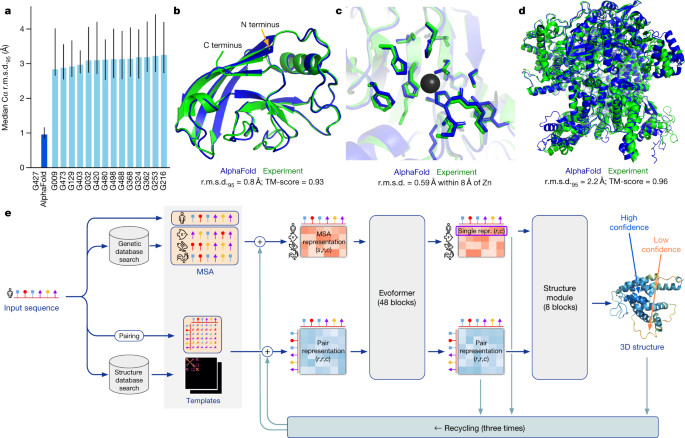

We demonstrate in Fig. 2a that the high accuracy that AlphaFold demonstrated in CASP14 extends to a large sample of recently released PDB structures; in this dataset, all structures were deposited in the PDB after our training data cut-off and are analysed as full chains (see Methods, Supplementary Fig. 15 and Supplementary Table 6 for more details). Furthermore, we observe high side-chain accuracy when the backbone prediction is accurate (Fig. 2b) and we show that our confidence measure, the predicted local-distance difference test (pLDDT), reliably predicts the Cα local-distance difference test (lDDT-Cα) accuracy of the corresponding prediction (Fig. 2c). We also find that the global superposition metric template modelling score (TM-score)27 can be accurately estimated (Fig. 2d). Overall, these analyses validate that the high accuracy and reliability of AlphaFold on CASP14 proteins also transfers to an uncurated collection of recent PDB submissions, as would be expected (see Supplementary Methods 1.15 and Supplementary Fig. 11 for confirmation that this high accuracy extends to new folds).
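
The two accuracy measures named here differ in whether they need a superposition. The sketch below gives simplified versions of both.

- **lDDT-Cα (global).** Compares Cα-Cα distances within a 15 Å inclusion radius at thresholds of 0.5, 1, 2 and 4 Å. These are the usual lDDT settings, assumed here.
- **TM-score.** Evaluated for one fixed superposition, using the standard d0 length normalization.

Real TM-score software searches over superpositions, so this version only scores the alignment it is given. Function names are hypothetical.

```python
import math


def lddt_ca(pred, true, cutoff=15.0, thresholds=(0.5, 1.0, 2.0, 4.0)):
    """Simplified global lDDT-Cα on a 0-100 scale (no superposition needed).

    Every ordered residue pair (i, j), i != j, whose reference Cα distance is
    below `cutoff` is checked for whether the predicted distance is preserved
    within each threshold; the preserved fractions are averaged over thresholds.
    """
    n_pairs = 0
    preserved = [0] * len(thresholds)
    for i in range(len(true)):
        for j in range(len(true)):
            if i == j:
                continue
            d_true = math.dist(true[i], true[j])
            if d_true >= cutoff:
                continue  # only pairs that are close in the reference are scored
            n_pairs += 1
            err = abs(math.dist(pred[i], pred[j]) - d_true)
            for k, t in enumerate(thresholds):
                if err < t:
                    preserved[k] += 1
    if n_pairs == 0:
        raise ValueError("no residue pairs within the inclusion radius")
    return 100.0 * sum(p / n_pairs for p in preserved) / len(thresholds)


def tm_score(pred, true):
    """TM-score for one fixed superposition (no search over superpositions)."""
    length = len(true)
    d0 = 1.24 * (length - 15) ** (1 / 3) - 1.8 if length > 15 else 0.5
    d0 = max(d0, 0.5)  # guard used for short chains
    return sum(1.0 / (1.0 + (math.dist(p, t) / d0) ** 2) for p, t in zip(pred, true)) / length


# Usage: a rigidly shifted copy keeps a perfect lDDT-Cα but scores badly
# under TM-score unless it is superposed first.
chain = [(3.8 * i, 0.0, 0.0) for i in range(30)]
shifted = [(x + 5.0, y, z) for x, y, z in chain]
print(lddt_ca(shifted, chain), tm_score(shifted, chain))
```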

Fig. 2: Accuracy of AlphaFold on recent PDB structures.

The analysed structures are newer than any structure in the training set. Further filtering is applied to reduce redundancy (see Methods). a, Histogram of backbone r.m.s.d. for full chains (Cα r.m.s.d. at 95% coverage). Error bars are 95% confidence intervals (Poisson). This dataset excludes proteins with a template (identified by hmmsearch) from the training set with more than 40% sequence identity covering more than 1% of the chain (n = 3,144 protein chains). The overall median is 1.46 Å (95% confidence interval = 1.40–1.56 Å). Note that this measure will be highly sensitive to domain packing and domain accuracy; a high r.m.s.d. is expected for some chains with uncertain packing or packing errors. b, Correlation between backbone accuracy and side-chain accuracy. Filtered to structures with any observed side chains and resolution better than 2.5 Å (n = 5,317 protein chains); side chains were further filtered to B-factor <30 Å². A rotamer is classified as correct if the predicted torsion angle is within 40°. Each point aggregates a range of lDDT-Cα, with a bin size of 2 units above 70 lDDT-Cα and 5 units otherwise. Points correspond to the mean accuracy; error bars are 95% confidence intervals (Student t-test) of the mean on a per-residue basis. c, Confidence score compared to the true accuracy on chains. Least-squares linear fit lDDT-Cα = 0.997 × pLDDT − 1.17 (Pearson’s r = 0.76). n = 10,795 protein chains. The shaded region of the linear fit represents a 95% confidence interval estimated from 10,000 bootstrap samples. In the companion paper39, additional quantification of the reliability of pLDDT as a confidence measure is provided. d, Correlation between pTM and full chain TM-score. Least-squares linear fit TM-score = 0.98 × pTM + 0.07 (Pearson’s r = 0.85). n = 10,795 protein chains. The shaded region of the linear fit represents a 95% confidence interval estimated from 10,000 bootstrap samples.
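
The fitted lines and correlation coefficients in panels c and d, and the rotamer criterion in panel b, reduce to a few lines of arithmetic. The following sketch uses plain Python lists and hypothetical function names. Two choices are our assumptions rather than details from the caption: a difference of exactly 40° counts as correct, and side-chain symmetry is ignored.

```python
import math


def least_squares_fit(x, y):
    """Ordinary least-squares line y ≈ slope * x + intercept, plus Pearson's r."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    r = sxy / math.sqrt(sxx * syy)
    return slope, intercept, r


def rotamer_correct(pred_chi_deg, true_chi_deg, tol_deg=40.0):
    """True if two torsion angles agree within `tol_deg`, allowing for 360° wrap-around."""
    diff = abs(pred_chi_deg - true_chi_deg) % 360.0
    return min(diff, 360.0 - diff) <= tol_deg


# Usage: 175° and -170° are only 15° apart once the periodicity is respected.
print(rotamer_correct(175.0, -170.0))
```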

The AlphaFold network

AlphaFold greatly improves the accuracy of structure prediction by incorporating novel neural network architectures and training procedures based on the evolutionary, physical and geometric constraints of protein structures. In particular, we demonstrate a new architecture to jointly embed multiple sequence alignments (MSAs) and pairwise features, a new output representation and associated loss that enable accurate end-to-end structure prediction, a new equivariant attention architecture, the use of intermediate losses to achieve iterative refinement of predictions, a masked MSA loss to train jointly with the structure, learning from unlabelled protein sequences using self-distillation, and self-estimates of accuracy.

The AlphaFold network directly predicts the 3D coordinates of all heavy atoms for a given protein using the primary amino acid sequence and aligned sequences of homologues as inputs (Fig. 1e; see Methods for details of inputs including databases, MSA construction and use of templates). A description of the most important ideas and components is provided below. The full network architecture and training procedure are provided in the Supplementary Methods.

The network comprises two main stages. First, the trunk of the network processes the inputs through repeated layers of a novel neural network block that we term Evoformer to produce an Nseq × Nres array (Nseq, number of sequences; Nres, number of residues) that represents a processed MSA and an Nres × Nres array that represents residue pairs. The MSA representation is initialized with the raw MSA (although see Supplementary Methods 1.2.7 for details of handling very deep MSAs). The Evoformer blocks contain a number of attention-based and non-attention-based components. We show evidence in ‘Interpreting the neural network’ that a concrete structural hypothesis arises early within the Evoformer blocks and is continuously refined. The key innovations in the Evoformer block are new mechanisms to exchange information within the MSA and pair representations that enable direct reasoning about the spatial and evolutionary relationships.

The trunk of the network is followed by the structure module that introduces an explicit 3D structure in the form of a rotation and translation for each residue of the protein (global rigid body frames). These representations are initialized in a trivial state with all rotations set to the identity and all positions set to the origin, but rapidly develop and refine a highly accurate protein structure with precise atomic details. Key innovations in this section of the network include breaking the chain structure to allow simultaneous local refinement of all parts of the structure, a novel equivariant transformer to allow the network to implicitly reason about the unrepresented side-chain atoms and a loss term that places substantial weight on the orientational correctness of the residues. Both within the structure module and throughout the whole network, we reinforce the notion of iterative refinement by repeatedly applying the final loss to outputs and then feeding the outputs recursively into the same modules. The iterative refinement using the whole network (which we term ‘recycling’ and is related to approaches in computer vision28,29) contributes markedly to accuracy with minor extra training time (see Supplementary Methods 1.8 for details).
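
Recycling can be pictured as a loop that feeds the network its own previous output. The sketch below shows only that control flow. The network is an arbitrary callable, the names are hypothetical, and the toy "network" is a stand-in that moves its estimate halfway towards a target. The default of four iterations mirrors the "4 recycling iterations" in the Fig. 4b caption. How recycled outputs are embedded, and how gradients are handled across iterations, are left out (see Supplementary Methods 1.8).

```python
from typing import Callable, List, Optional, TypeVar

Inputs = TypeVar("Inputs")
Outputs = TypeVar("Outputs")


def run_with_recycling(
    network: Callable[[Inputs, Optional[Outputs]], Outputs],
    inputs: Inputs,
    num_iterations: int = 4,
) -> List[Outputs]:
    """Run the same network `num_iterations` times, feeding each output back in.

    Returns every intermediate output; the last one is the final prediction.
    """
    outputs: List[Outputs] = []
    recycled: Optional[Outputs] = None  # first pass: nothing to recycle yet
    for _ in range(num_iterations):
        recycled = network(inputs, recycled)
        outputs.append(recycled)
    return outputs


# Toy stand-in for the network: moves its previous estimate halfway to a target.
def toy_network(target: float, previous: Optional[float]) -> float:
    estimate = 0.0 if previous is None else previous
    return estimate + 0.5 * (target - estimate)


print(run_with_recycling(toy_network, 8.0))  # [4.0, 6.0, 7.0, 7.5]
```

Because the same weights are reused on every pass, the extra depth comes at little additional parameter cost.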

Evoformer

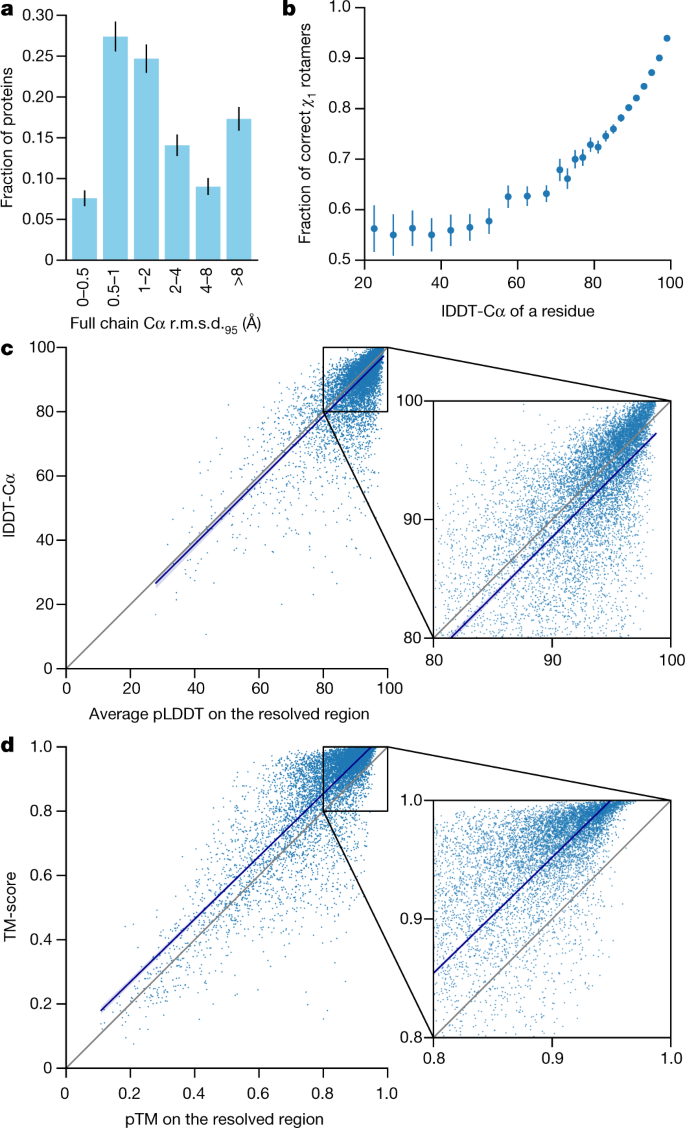

The key principle of the building block of the network, named Evoformer (Figs. 1e, 3a), is to view the prediction of protein structures as a graph inference problem in 3D space in which the edges of the graph are defined by residues in proximity. The elements of the pair representation encode information about the relation between the residues (Fig. 3b). The columns of the MSA representation encode the individual residues of the input sequence, while the rows represent the sequences in which those residues appear. Within this framework, we define a number of update operations that are applied in series within each block.

Fig. 3: Architectural details.

a, Evoformer block. Arrows show the information flow. The shape of the arrays is shown in parentheses. b, The pair representation interpreted as directed edges in a graph. c, Triangle multiplicative update and triangle self-attention. The circles represent residues. Entries in the pair representation are illustrated as directed edges and in each diagram, the edge being updated is ij. d, Structure module including Invariant point attention (IPA) module. The single representation is a copy of the first row of the MSA representation. e, Residue gas: a representation of each residue as one free-floating rigid body for the backbone (blue triangles) and χ angles for the side chains (green circles). The corresponding atomic structure is shown below. f, Frame-aligned point error (FAPE). Green, predicted structure; grey, true structure; (Rₖ, tₖ), frames; xᵢ, atom positions.

The MSA representation updates the pair representation through an element-wise outer product that is summed over the MSA sequence dimension. In contrast to previous work30, this operation is applied within every block rather than once in the network, which enables the continuous communication from the evolving MSA representation to the pair representation.
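
The outer-product update can be written as pair[i][j] = mean over sequences s of outer(a[s][i], b[s][j]), flattened. In this minimal pure-Python sketch, `a` and `b` stand in for two learned linear projections of the MSA representation. Those projections, and the final projection to the pair channel size, are omitted, and the function name is hypothetical.

```python
def outer_product_mean(a, b):
    """Outer product of MSA features, averaged over the sequence dimension.

    a, b: nested lists of shape [n_seq][n_res][c] (stand-ins for projected MSA
    features). Returns pair[i][j], a flattened c*c vector for every residue pair.
    """
    n_seq, n_res, c = len(a), len(a[0]), len(a[0][0])
    pair = [[[0.0] * (c * c) for _ in range(n_res)] for _ in range(n_res)]
    for s in range(n_seq):
        for i in range(n_res):
            for j in range(n_res):
                out = pair[i][j]
                for p in range(c):
                    for q in range(c):
                        # Accumulate over sequences, dividing by n_seq to take the mean.
                        out[p * c + q] += a[s][i][p] * b[s][j][q] / n_seq
    return pair


# Usage: with one channel, residues whose features co-vary across the MSA rows
# get a large pair entry; uncorrelated residues average out to zero.
covarying = [[[1.0], [1.0]], [[-1.0], [-1.0]]]
independent = [[[1.0], [1.0]], [[-1.0], [1.0]]]
print(outer_product_mean(covarying, covarying)[0][1], outer_product_mean(independent, independent)[0][1])
```

Averaging over sequences is what lets co-variation between columns of the alignment show up directly in the pair representation.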

Within the pair representation, there are two different update patterns. Both are inspired by the necessity of consistency of the pair representation—for a pairwise description of amino acids to be representable as a single 3D structure, many constraints must be satisfied including the triangle inequality on distances. On the basis of this intuition, we arrange the update operations on the pair representation in terms of triangles of edges involving three different nodes (Fig. 3c). In particular, we add an extra logit bias to axial attention31 to include the ‘missing edge’ of the triangle and we define a non-attention update operation ‘triangle multiplicative update’ that uses two edges to update the missing third edge (see Supplementary Methods 1.6.5 for details). The triangle multiplicative update was developed originally as a more symmetric and cheaper replacement for the attention, and networks that use only the attention or multiplicative update are both able to produce high-accuracy structures. However, the combination of the two updates is more accurate.
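
The core of the triangle multiplicative update is a sum over a third node k, combining two edges that share an endpoint with edge ij. The sketch below shows only that contraction, channel by channel, in an "outgoing" form (edges i→k and j→k) and an "incoming" form (edges k→i and k→j). The layer normalization, gating and learned projections used in AlphaFold are omitted, the triangle attention variant is not shown, and the function names are hypothetical.

```python
def triangle_multiply_outgoing(a, b):
    """x[i][j][ch] = sum_k a[i][k][ch] * b[j][k][ch] (edges leaving i and j).

    a, b: [n_res][n_res][c] nested lists, stand-ins for gated projections of
    the pair representation.
    """
    n, c = len(a), len(a[0][0])
    return [
        [[sum(a[i][k][ch] * b[j][k][ch] for k in range(n)) for ch in range(c)] for j in range(n)]
        for i in range(n)
    ]


def triangle_multiply_incoming(a, b):
    """x[i][j][ch] = sum_k a[k][i][ch] * b[k][j][ch] (edges arriving at i and j)."""
    n, c = len(a), len(a[0][0])
    return [
        [[sum(a[k][i][ch] * b[k][j][ch] for k in range(n)) for ch in range(c)] for j in range(n)]
        for i in range(n)
    ]


# Usage: with one channel these reduce to the matrix products A·Aᵀ and Aᵀ·A.
A = [[[1.0], [2.0]], [[3.0], [4.0]]]
print(triangle_multiply_outgoing(A, A))
print(triangle_multiply_incoming(A, A))
```

Each output edge ij sees every path i→k, j→k (or k→i, k→j), which is how information about the third side of each triangle reaches the edge being updated.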

We also use a variant of axial attention within the MSA representation. During the per-sequence attention in the MSA, we project additional logits from the pair stack to bias the MSA attention. This closes the loop by providing information flow from the pair representation back into the MSA representation, ensuring that the overall Evoformer block is able to fully mix information between the pair and MSA representations and prepare for structure generation within the structure module.
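
The pair-to-MSA feedback amounts to adding one bias term per residue pair to the attention logits of every MSA row. Below is a minimal single-head sketch. The queries, keys and values stand in for learned projections, and gating and multiple heads are omitted. The names are hypothetical.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def row_attention_with_pair_bias(q, k, v, pair_bias):
    """Single-head, row-wise MSA attention with an additive bias from the pair stack.

    q, k, v: nested lists [n_seq][n_res][c]. pair_bias: [n_res][n_res], one
    head's projection of the pair representation, shared by every MSA row.
    """
    c = len(q[0][0])
    out = []
    for q_row, k_row, v_row in zip(q, k, v):  # attend within one sequence at a time
        row_out = []
        for i, q_i in enumerate(q_row):
            logits = [
                sum(x * y for x, y in zip(q_i, k_j)) / math.sqrt(c) + pair_bias[i][j]
                for j, k_j in enumerate(k_row)
            ]
            weights = softmax(logits)
            row_out.append(
                [sum(w * v_j[ch] for w, v_j in zip(weights, v_row)) for ch in range(len(v_row[0]))]
            )
        out.append(row_out)
    return out
```

Because the same `pair_bias[i][j]` is added in every row, a strong pair signal between residues i and j makes residue i attend to residue j across the whole alignment. This is the route by which pair information reaches the MSA representation.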

End-to-end structure prediction

The structure module (Fig. 3d) operates on a concrete 3D backbone structure using the pair representation and the original sequence row (single representation) of the MSA representation from the trunk. The 3D backbone structure is represented as Nres independent rotations and translations, each with respect to the global frame (residue gas) (Fig. 3e). These rotations and translations—representing the geometry of the N-Cα-C atoms—prioritize the orientation of the protein backbone so that the location of the side chain of each residue is highly constrained within that frame. Conversely, the peptide bond geometry is completely unconstrained and the network is observed to frequently violate the chain constraint during the application of the structure module as breaking this constraint enables the local refinement of all parts of the chain without solving complex loop closure problems. Satisfaction of the peptide bond geometry is encouraged during fine-tuning by a violation loss term. Exact enforcement of peptide bond geometry is only achieved in the post-prediction relaxation of the structure by gradient descent in the Amber32 force field. Empirically, this final relaxation does not improve the accuracy of the model as measured by the global distance test (GDT)33 or lDDT-Cα34 but does remove distracting stereochemical violations without the loss of accuracy.
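
A residue gas can be represented as a list of rigid frames (R, t), one per residue, all starting at the identity. The following sketch is our own minimal illustration with hypothetical names. It shows the identity initialization and how an update is composed on the right of a residue's frame, so that it is expressed in that residue's local coordinates. Right composition is also why such updates are equivariant: applying a global transform G to every frame gives G ∘ (T ∘ ΔT) = (G ∘ T) ∘ ΔT.

```python
from dataclasses import dataclass
from typing import Tuple

Vec = Tuple[float, float, float]
Mat = Tuple[Vec, Vec, Vec]

IDENTITY: Mat = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))


def matvec(r: Mat, x: Vec) -> Vec:
    """3x3 matrix times 3-vector."""
    return (
        r[0][0] * x[0] + r[0][1] * x[1] + r[0][2] * x[2],
        r[1][0] * x[0] + r[1][1] * x[1] + r[1][2] * x[2],
        r[2][0] * x[0] + r[2][1] * x[1] + r[2][2] * x[2],
    )


def matmul(a: Mat, b: Mat) -> Mat:
    """3x3 matrix product a @ b."""
    return tuple(
        tuple(sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)) for i in range(3)
    )


@dataclass(frozen=True)
class Frame:
    """Rigid backbone frame (R, t) mapping local coordinates to global ones."""

    rot: Mat = IDENTITY
    trans: Vec = (0.0, 0.0, 0.0)

    def apply(self, x_local: Vec) -> Vec:
        """Global position of a point given in this frame's local coordinates: R x + t."""
        rx = matvec(self.rot, x_local)
        return (rx[0] + self.trans[0], rx[1] + self.trans[1], rx[2] + self.trans[2])

    def compose(self, other: "Frame") -> "Frame":
        """self ∘ other: apply `other` first (in local coordinates), then `self`."""
        return Frame(matmul(self.rot, other.rot), self.apply(other.trans))


# Residue gas initialization: every frame is the identity, i.e. all residues at the origin.
gas = [Frame() for _ in range(3)]

# Updates are composed on the right, so they act in the residue's own frame.
ROT_Z_90: Mat = ((0.0, -1.0, 0.0), (1.0, 0.0, 0.0), (0.0, 0.0, 1.0))
gas[0] = gas[0].compose(Frame(rot=ROT_Z_90))           # rotate residue 0 about z
gas[0] = gas[0].compose(Frame(trans=(1.0, 0.0, 0.0)))  # step along its local x axis
print(gas[0].trans)  # (0.0, 1.0, 0.0)
```

Nothing here ties neighbouring frames together, which mirrors the point above that the chain constraint is not enforced inside the structure module.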

The residue gas representation is updated iteratively in two stages (Fig. 3d). First, a geometry-aware attention operation that we term ‘invariant point attention’ (IPA) is used to update an Nres set of neural activations (single representation) without changing the 3D positions, then an equivariant update operation is performed on the residue gas using the updated activations. The IPA augments each of the usual attention queries, keys and values with 3D points that are produced in the local frame of each residue such that the final value is invariant to global rotations and translations (see Methods ‘IPA’ for details). The 3D queries and keys also impose a strong spatial/locality bias on the attention, which is well-suited to the iterative refinement of the protein structure. After each attention operation and element-wise transition block, the module computes an update to the rotation and translation of each backbone frame. The application of these updates within the local frame of each residue makes the overall attention and update block an equivariant operation on the residue gas.
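
The invariance claim can be checked numerically. In IPA, the point-based part of the attention logits depends on squared distances between query and key points after each is mapped from its own residue's local frame into global coordinates. The sketch below computes only that distance term, using one point per residue and no weights, heads or scalar terms. It then confirms that the term is unchanged when every frame is moved by the same global rotation and translation. All names are hypothetical.

```python
import math
import random


def rot_z(theta):
    c, s = math.cos(theta), math.sin(theta)
    return ((c, -s, 0.0), (s, c, 0.0), (0.0, 0.0, 1.0))


def rot_x(theta):
    c, s = math.cos(theta), math.sin(theta)
    return ((1.0, 0.0, 0.0), (0.0, c, -s), (0.0, s, c))


def matmul(a, b):
    return tuple(tuple(sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)) for i in range(3))


def apply(frame, x):
    """Map local point x to global coordinates: R x + t."""
    rot, trans = frame
    return tuple(sum(rot[i][k] * x[k] for k in range(3)) + trans[i] for i in range(3))


def compose(g, f):
    """g ∘ f: apply frame f first, then g."""
    return (matmul(g[0], f[0]), apply(g, f[1]))


def point_term(frames, q_local, k_local, i, j):
    """Squared distance between residue i's query point and residue j's key point,
    each mapped to global coordinates by its own residue's frame."""
    return math.dist(apply(frames[i], q_local[i]), apply(frames[j], k_local[j])) ** 2


def random_frame(rng):
    rot = matmul(rot_z(rng.uniform(0, 2 * math.pi)), rot_x(rng.uniform(0, 2 * math.pi)))
    return (rot, tuple(rng.uniform(-10, 10) for _ in range(3)))


rng = random.Random(0)
n = 5
frames = [random_frame(rng) for _ in range(n)]
q_local = [tuple(rng.gauss(0, 1) for _ in range(3)) for _ in range(n)]
k_local = [tuple(rng.gauss(0, 1) for _ in range(3)) for _ in range(n)]

g = random_frame(rng)  # one global rotation and translation applied to every residue
moved = [compose(g, f) for f in frames]
before = [[point_term(frames, q_local, k_local, i, j) for j in range(n)] for i in range(n)]
after = [[point_term(moved, q_local, k_local, i, j) for j in range(n)] for i in range(n)]
```

Since the logits do not change under a global transform, neither do the attention weights or the values read out in local frames.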

Predictions of side-chain χ angles as well as the final, per-residue accuracy of the structure (pLDDT) are computed with small per-residue networks on the final activations at the end of the network. The estimate of the TM-score (pTM) is obtained from a pairwise error prediction that is computed as a linear projection from the final pair representation. The final loss, which we term the frame-aligned point error (FAPE; Fig. 3f), compares the predicted atom positions to the true positions under many different alignments. For each alignment, defined by aligning the predicted frame (Rₖ, tₖ) to the corresponding true frame, we compute the distance of all predicted atom positions xᵢ from the true atom positions. The resulting Nframes × Natoms distances are penalized with a clamped L1 loss. This creates a strong bias for atoms to be correct relative to the local frame of each residue, and hence correct with respect to its side-chain interactions, as well as providing the main source of chirality for AlphaFold (Supplementary Methods 1.9.3 and Supplementary Fig. 9).
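
FAPE follows directly from the description above. For every frame k and atom i, the predicted atom is expressed in predicted frame k and the true atom in true frame k. The distance between the two local positions is clamped, and the clamped distances are averaged. In the sketch below, the clamp distance (10 Å), the normalization length Z (10 Å) and the small ε (in Å²) added under the square root are assumed values, and the function names are hypothetical.

```python
import math


def apply(frame, x):
    """Map local point x to global coordinates: R x + t."""
    rot, trans = frame
    return tuple(sum(rot[i][k] * x[k] for k in range(3)) + trans[i] for i in range(3))


def invert_apply(frame, x):
    """Express global point x in the local coordinates of frame (R, t): Rᵀ (x - t)."""
    rot, trans = frame
    d = [x[k] - trans[k] for k in range(3)]
    return tuple(sum(rot[k][i] * d[k] for k in range(3)) for i in range(3))


def fape(pred_frames, pred_atoms, true_frames, true_atoms, d_clamp=10.0, z=10.0, eps=1e-4):
    """Frame-aligned point error: mean clamped distance over all (frame, atom) pairs, divided by z.

    Each (frame k, atom i) term compares atom i's position relative to frame k in
    the prediction with the same quantity in the true structure.
    """
    total = 0.0
    for f_pred, f_true in zip(pred_frames, true_frames):
        for x_pred, x_true in zip(pred_atoms, true_atoms):
            local_pred = invert_apply(f_pred, x_pred)
            local_true = invert_apply(f_true, x_true)
            d = math.sqrt(math.dist(local_pred, local_true) ** 2 + eps)
            total += min(d, d_clamp)  # clamped, L1-style penalty on each distance
    return total / (z * len(pred_frames) * len(pred_atoms))
```

Because every term is measured inside a frame, a global rotation or translation of the whole prediction leaves FAPE unchanged. A mirror image, in contrast, cannot be brought into agreement with the true structure by any such transform, which is consistent with FAPE acting as a source of chirality.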

Training with labelled and unlabelled data

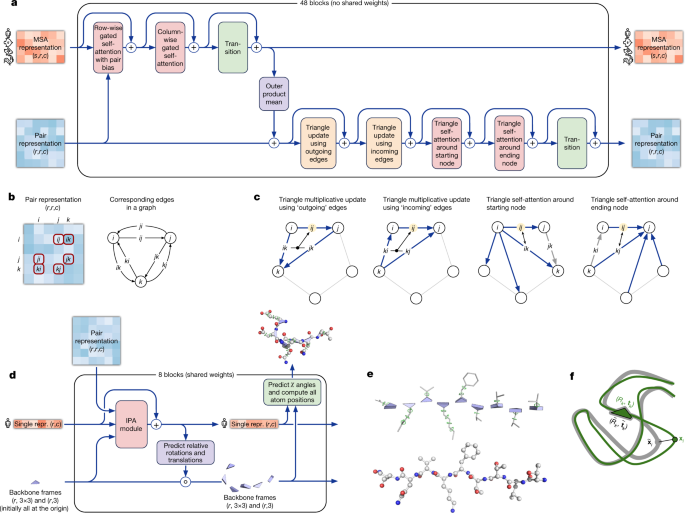

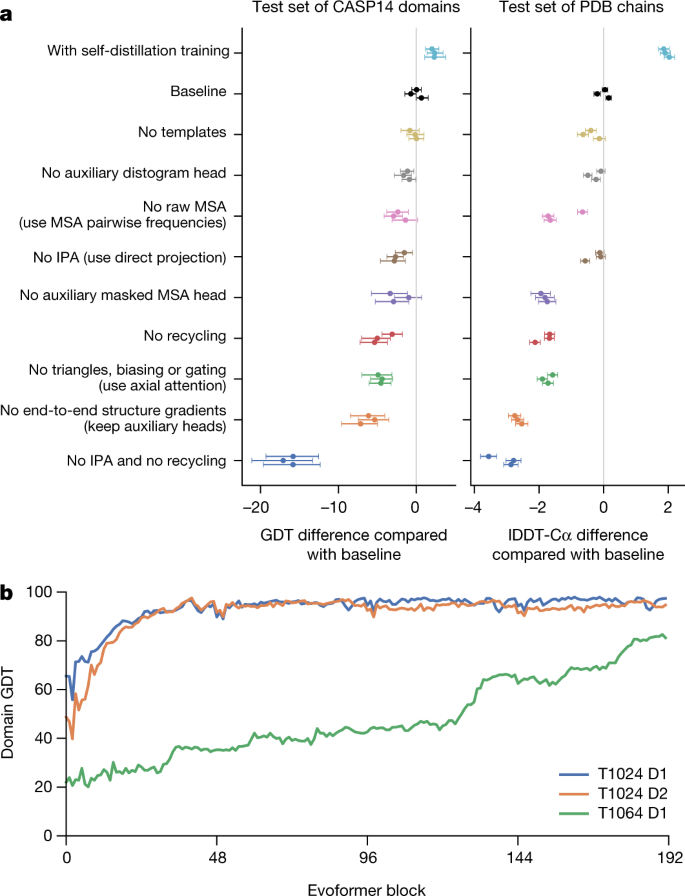

The AlphaFold architecture is able to train to high accuracy using only supervised learning on PDB data, but we are able to enhance accuracy (Fig. 4a) using an approach similar to noisy student self-distillation35. In this procedure, we use a trained network to predict the structure of around 350,000 diverse sequences from Uniclust30 (ref. 36) and make a new dataset of predicted structures filtered to a high-confidence subset. We then train the same architecture again from scratch using a mixture of PDB data and this new dataset of predicted structures as the training data, in which the various training data augmentations such as cropping and MSA subsampling make it challenging for the network to recapitulate the previously predicted structures. This self-distillation procedure makes effective use of the unlabelled sequence data and considerably improves the accuracy of the resulting network.
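
The dataset side of self-distillation has two steps: keep only confident predictions, then draw training examples from a mixture of experimental and predicted structures. The sketch below shows just these two steps. The per-structure confidence field, the cut-off, the mixing probability and all names are hypothetical. The criteria AlphaFold actually used are described in the Supplementary Methods, and the augmentations that make the student's task "noisy" are not shown.

```python
import random
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Example:
    name: str
    source: str        # "pdb" (experimental) or "distill" (predicted)
    confidence: float  # hypothetical per-structure confidence score, 0-100


def high_confidence_subset(predictions: List[Example], min_confidence: float) -> List[Example]:
    """Keep only predicted structures at or above a (hypothetical) confidence cut-off."""
    return [p for p in predictions if p.confidence >= min_confidence]


def sample_training_batch(pdb, distill, batch_size, p_distill, rng):
    """Draw each example from the distillation set with probability p_distill, else from the PDB set."""
    return [
        rng.choice(distill) if rng.random() < p_distill else rng.choice(pdb)
        for _ in range(batch_size)
    ]


# Usage with made-up examples.
rng = random.Random(0)
pdb = [Example(f"pdb_{i}", "pdb", 100.0) for i in range(5)]
predicted = [Example(f"uc30_{i}", "distill", 50.0 + 10.0 * i) for i in range(5)]
distill = high_confidence_subset(predicted, min_confidence=70.0)
batch = sample_training_batch(pdb, distill, batch_size=8, p_distill=0.5, rng=rng)
```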

Fig. 4: Interpreting the neural network.

a, Ablation results on two target sets: the CASP14 set of domains (n = 87 protein domains) and the PDB test set of chains with template coverage of ≤30% at 30% identity (n = 2,261 protein chains). Domains are scored with GDT and chains are scored with lDDT-Cα. The ablations are reported as a difference compared with the average of the three baseline seeds. Means (points) and 95% bootstrap percentile intervals (error bars) are computed using bootstrap estimates of 10,000 samples. b, Domain GDT trajectory over 4 recycling iterations and 48 Evoformer blocks on CASP14 targets LmrP (T1024) and Orf8 (T1064) where D1 and D2 refer to the individual domains as defined by the CASP assessment. Both T1024 domains obtain the correct structure early in the network, whereas the structure of T1064 changes multiple times and requires nearly the full depth of the network to reach the final structure. Note, 48 Evoformer blocks comprise one recycling iteration.

Additionally, we randomly mask out or mutate individual residues within the MSA and have a Bidirectional Encoder Representations from Transformers (BERT)-style37 objective to predict the masked elements of the MSA sequences. This objective encourages the network to learn to interpret phylogenetic and covariation relationships without hardcoding a particular correlation statistic into the features. The BERT objective is trained jointly with the normal PDB structure loss on the same training examples and is not pre-trained, in contrast to recent independent work38.
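
A BERT-style objective needs a corrupted input together with the list of positions to reconstruct. The sketch below produces both for an MSA given as equal-length strings. It uses the standard BERT split (80% mask token, 10% random residue, 10% unchanged) purely as an assumption. AlphaFold's exact corruption scheme is described in its Supplementary Methods and may differ. The mask symbol and function name are hypothetical.

```python
import random

AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"
MASK_TOKEN = "#"  # hypothetical placeholder symbol for a masked position


def mask_msa(msa, rng, select_prob=0.15, p_mask=0.8, p_random=0.1):
    """BERT-style corruption of an MSA (list of equal-length strings).

    Each position is selected with probability `select_prob`. A selected position
    becomes MASK_TOKEN with probability p_mask, a random amino acid with
    probability p_random, and otherwise keeps its residue. Returns the corrupted
    MSA and the (row, column, original residue) targets to be predicted.
    """
    corrupted, targets = [], []
    for r, seq in enumerate(msa):
        row = list(seq)
        for c, residue in enumerate(seq):
            if rng.random() >= select_prob:
                continue  # not selected: no prediction target here
            targets.append((r, c, residue))
            u = rng.random()
            if u < p_mask:
                row[c] = MASK_TOKEN
            elif u < p_mask + p_random:
                row[c] = rng.choice(AMINO_ACIDS)
            # otherwise the residue is left unchanged but is still a target
        corrupted.append("".join(row))
    return corrupted, targets


corrupted, targets = mask_msa(["ACDEFGHIKL", "ACDEFGHIKV"], random.Random(0))
```

A cross-entropy loss over the target positions would then be added to the structure loss on the same training example, matching the joint (not pre-trained) setup described above.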

Interpreting the neural network

To understand how AlphaFold predicts protein structure, we trained a separate structure module for each of the 48 Evoformer blocks in the network while keeping all parameters of the main network frozen (Supplementary Methods 1.14). Including our recycling stages, this provides a trajectory of 192 intermediate structures—one per full Evoformer block—in which each intermediate represents the belief of the network of the most likely structure at that block. The resulting trajectories are surprisingly smooth after the first few blocks, showing that AlphaFold makes constant incremental improvements to the structure until it can no longer improve (see Fig. 4b for a trajectory of accuracy). These trajectories also illustrate the role of network depth. For very challenging proteins such as ORF8 of SARS-CoV-2 (T1064), the network searches and rearranges secondary structure elements for many layers before settling on a good structure. For other proteins such as LmrP (T1024), the network finds the final structure within the first few layers. Structure trajectories of CASP14 targets T1024, T1044, T1064 and T1091 that demonstrate a clear iterative building process for a range of protein sizes and difficulties are shown in Supplementary Videos 1–4. In Supplementary Methods 1.16 and Supplementary Figs. 12, 13, we interpret the attention maps produced by AlphaFold layers.

Figure 4a contains detailed ablations of the components of AlphaFold that demonstrate that a variety of different mechanisms contribute to AlphaFold accuracy. Detailed descriptions of each ablation model, their training details, extended discussion of ablation results and the effect of MSA depth on each ablation are provided in Supplementary Methods 1.13 and Supplementary Fig. 10.

MSA depth and cross-chain contacts

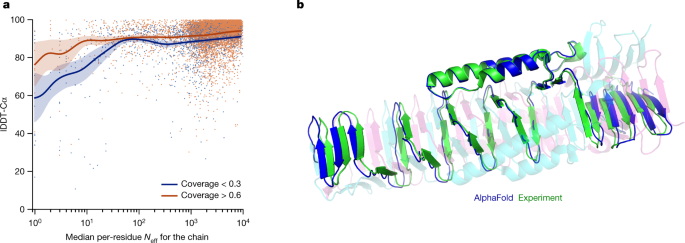

Although AlphaFold has a high accuracy across the vast majority of deposited PDB structures, we note that there are still factors that affect accuracy or limit the applicability of the model. The model uses MSAs and the accuracy decreases substantially when the median alignment depth is less than around 30 sequences (see Fig. 5a for details). We observe a threshold effect where improvements in MSA depth over around 100 sequences lead to small gains. We hypothesize that the MSA information is needed to coarsely find the correct structure within the early stages of the network, but refinement of that prediction into a high-accuracy model does not depend crucially on the MSA information. The other substantial limitation that we have observed is that AlphaFold is much weaker for proteins that have few intra-chain or homotypic contacts compared to the number of heterotypic contacts (further details are provided in a companion paper39). This typically occurs for bridging domains within larger complexes in which the shape of the protein is created almost entirely by interactions with other chains in the complex. Conversely, AlphaFold is often able to give high-accuracy predictions for homomers, even when the chains are substantially intertwined (Fig. 5b). We expect that the ideas of AlphaFold are readily applicable to predicting full hetero-complexes in a future system and that this will remove the difficulty with protein chains that have a large number of hetero-contacts.

a, Backbone accuracy (lDDT-Cα) for the redundancy-reduced set of the PDB after our training data cut-off, restricting to proteins in which at most 25% of the long-range contacts are between different heteromer chains. We further consider two groups of proteins based on template coverage at 30% sequence identity: covering more than 60% of the chain (n = 6,743 protein chains) and covering less than 30% of the chain (n = 1,596 protein chains). MSA depth is computed by counting the number of non-gap residues for each position in the MSA (using the Neff weighting scheme; see Methods for details) and taking the median across residues. The curves are obtained through Gaussian kernel average smoothing (window size is 0.2 units in log10(Neff)); the shaded area is the 95% confidence interval estimated using bootstrap of 10,000 samples. b, An intertwined homotrimer (PDB 6SK0) is correctly predicted without input stoichiometry and only a weak template (blue is predicted and green is experimental).
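The smoothing and confidence band described in this caption can be sketched as a Gaussian kernel average (Nadaraya-Watson) smoother with a percentile bootstrap over chains. Treating the 0.2 "window size" as the Gaussian standard deviation is an assumption. The function names are hypothetical.

```python
import numpy as np


def kernel_smooth(x, y, grid, bandwidth=0.2):
    """Gaussian kernel average (Nadaraya-Watson) of y, evaluated at each grid point.

    x: log10(Neff) per chain, y: lDDT-Ca per chain, grid: evaluation points.
    `bandwidth` is used as the Gaussian standard deviation, an assumed reading
    of the caption's "window size is 0.2 units".
    """
    d = (grid[:, None] - x[None, :]) / bandwidth
    w = np.exp(-0.5 * d ** 2)  # (n_grid, n_chains) kernel weights
    return (w @ y) / w.sum(axis=1)


def bootstrap_band(x, y, grid, n_boot=10_000, bandwidth=0.2, seed=0):
    """2.5th and 97.5th percentiles of the smoothed curve over bootstrap resamples."""
    rng = np.random.default_rng(seed)
    n = len(x)
    curves = np.empty((n_boot, len(grid)))
    for b in range(n_boot):
        idx = rng.integers(0, n, size=n)  # resample chains with replacement
        curves[b] = kernel_smooth(x[idx], y[idx], grid, bandwidth)
    lower, upper = np.percentile(curves, [2.5, 97.5], axis=0)
    return lower, upper
```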

Related work

The prediction of protein structures has had a long and varied development, which is extensively covered in a number of reviews14,40,41,42,43. Despite the long history of applying neural networks to structure prediction14,42,43, only recently have they begun to substantially improve structure prediction10,11,44,45. These approaches effectively leverage the rapid improvement in computer vision systems46 by treating the problem of protein structure prediction as converting an ‘image’ of evolutionary couplings22,23,24 to an ‘image’ of the protein distance matrix and then integrating the distance predictions into a heuristic system that produces the final 3D coordinate prediction. A few recent studies have developed methods to predict the 3D coordinates directly47,48,49,50, but the accuracy of these approaches does not match that of traditional, hand-crafted structure prediction pipelines51. In parallel, the success of attention-based networks for language processing52 and, more recently, computer vision31,53 has inspired the exploration of attention-based methods for interpreting protein sequences54,55,56.

Discussion

The methodology that we have taken in designing AlphaFold is a combination of the bioinformatics and physical approaches: we use a physical and geometric inductive bias to build components that learn from PDB data with minimal imposition of handcrafted features (for example, AlphaFold builds hydrogen bonds effectively without a hydrogen bond score function). This results in a network that learns far more efficiently from the limited data in the PDB but is able to cope with the complexity and variety of structural data.

In particular, AlphaFold is able to handle missing physical context and produce accurate models in challenging cases such as intertwined homomers or proteins that only fold in the presence of an unknown haem group. The ability to handle underspecified structural conditions is essential to learning from PDB structures, as the PDB represents the full range of conditions in which structures have been solved. In general, AlphaFold is trained to produce the protein structure most likely to appear as part of a PDB structure. For example, in cases in which a particular stoichiometry, ligand or ion is predictable from the sequence alone, AlphaFold is likely to produce a structure that respects those constraints implicitly.

AlphaFold has already demonstrated its utility to the experimental community, both for molecular replacement57 and for interpreting cryogenic electron microscopy maps58. Moreover, because AlphaFold outputs protein coordinates directly, it produces predictions in graphics processing unit (GPU) minutes to GPU hours depending on the length of the protein sequence (for example, around one GPU minute per model for 384 residues; see Methods for details). This opens up the exciting possibility of predicting structures at the proteome scale and beyond; in a companion paper39, we demonstrate the application of AlphaFold to the entire human proteome.

The explosion in available genomic sequencing techniques and data has revolutionized bioinformatics but the intrinsic challenge of experimental structure determination has prevented a similar expansion in our structural knowledge. By developing an accurate protein structure prediction algorithm, coupled with existing large and well-curated structure and sequence databases assembled by the experimental community, we hope to accelerate the advancement of structural bioinformatics that can keep pace with the genomics revolution. We hope that AlphaFold—and computational approaches that apply its techniques for other biophysical problems—will become essential tools of modern biology.

Methods

Full algorithm details

Extensive explanations of the components and their motivations are available in Supplementary Methods 1.1–1.10. In addition, pseudocode is available in Supplementary Information Algorithms 1–32, network diagrams in Supplementary Figs. 1–8, input features in Supplementary Table 1 and additional details in Supplementary Tables 2, 3. Training and inference details are provided in Supplementary Methods 1.11–1.12 and Supplementary Tables 4, 5.

IPA

The IPA module combines the pair representation, the single representation and the geometric representation to update the single representation (Supplementary Fig. 8). Each of these representations contributes affinities to the shared attention weights and then uses these weights to map its values to the output. The IPA operates in 3D space. Each residue produces query points, key points and value points in its local frame. These points are projected into the global frame using the backbone frame of the residue, and it is in the global frame that they interact with each other. The resulting points are then projected back into the local frame. The affinity computation in the 3D space uses squared distances, and the coordinate transformations ensure the invariance of this module with respect to the global frame (see Supplementary Methods 1.8.2 ‘Invariant point attention (IPA)’ for the algorithm, proof of invariance and a description of the full multi-head version). A related construction that uses classic geometric invariants to construct pairwise features in place of the learned 3D points has been applied to protein design59.
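The invariance argument can be checked numerically. The sketch below keeps only the geometric part of a single IPA head:

- per-residue frames (R_i, t_i), with query, key and value points expressed in local frames;
- a logit term −Σ_p‖T_i q_ip − T_j k_jp‖²;
- an attention-weighted sum of global value points, mapped back into each residue's local frame.

Learned weights, scale factors, multiple heads and the other logit terms are omitted. All function names are hypothetical.

```python
import numpy as np


def random_rotation(rng):
    """Random proper rotation matrix from the QR decomposition of a Gaussian matrix."""
    q, r = np.linalg.qr(rng.normal(size=(3, 3)))
    q = q * np.sign(np.diag(r))  # make the decomposition unique
    if np.linalg.det(q) < 0:
        q[:, 0] = -q[:, 0]  # flip one axis so det = +1
    return q


def to_global(rot, trans, pts_local):
    """Map local points (n_res, n_pts, 3) into the global frame: R_i x + t_i."""
    return np.einsum("rij,rpj->rpi", rot, pts_local) + trans[:, None, :]


def point_logits(rot, trans, q_local, k_local):
    """Geometric logit term -sum_p ||T_i q_ip - T_j k_jp||^2, shape (n_res, n_res)."""
    qg = to_global(rot, trans, q_local)
    kg = to_global(rot, trans, k_local)
    diff = qg[:, None, :, :] - kg[None, :, :, :]  # (i, j, p, 3)
    return -np.sum(diff ** 2, axis=(-1, -2))


def softmax(x):
    e = np.exp(x - x.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)


def point_outputs(rot, trans, attn, v_local):
    """Attention-weighted global value points mapped back to each residue's frame:
    R_i^T (sum_j a_ij T_j v_jp - t_i), shape (n_res, n_pts, 3)."""
    vg = to_global(rot, trans, v_local)
    out_global = np.einsum("ij,jpc->ipc", attn, vg)
    return np.einsum("rji,rpj->rpi", rot, out_global - trans[:, None, :])
```

Suppose every frame is moved by the same rigid motion (R_g, t_g), that is R_i → R_g R_i and t_i → R_g t_i + t_g. The global point coordinates change, but pairwise squared distances do not. The attention rows sum to one, so the aggregated point also moves by (R_g, t_g) and cancels when mapped back into the local frame. Both the logits and the outputs are therefore unchanged.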

In addition to the IPA, standard dot product attention is computed on the abstract single representation and a special attention on the pair representation. The pair representation augments both the logits and the values of the attention process, which is the primary way in which the pair representation controls the structure generation.
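A minimal single-head sketch of how a pair representation can both bias the attention logits and contribute to the values. A learned projection turns each pair vector z_ij into a scalar bias on logit (i, j). The attention weights then also aggregate z_ij into an extra output channel. Shapes, weight names and the single-head setup are illustrative assumptions, not AlphaFold's parameterization.

```python
import numpy as np


def softmax(x, axis=-1):
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)


def pair_biased_attention(s, z, w_q, w_k, w_v, w_b):
    """One attention head over residues in which the pair representation both
    biases the logits and is aggregated as an additional value.

    s: single representation (n_res, c_s); z: pair representation (n_res, n_res, c_z)
    w_q, w_k, w_v: (c_s, c) projections; w_b: (c_z,) projection of z_ij to a scalar bias.
    """
    q, k, v = s @ w_q, s @ w_k, s @ w_v
    logits = q @ k.T / np.sqrt(q.shape[-1]) + z @ w_b  # (n_res, n_res)
    a = softmax(logits)
    out_single = a @ v                        # sum_j a_ij v_j
    out_pair = np.einsum("ij,ijc->ic", a, z)  # sum_j a_ij z_ij
    return np.concatenate([out_single, out_pair], axis=-1), a
```

A large pair bias can override the sequence-level dot product, which illustrates how the pair representation can steer where each residue attends.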

Inputs and data sources

Inputs to the network are the primary sequence, sequences from evolutionarily related proteins in the form of an MSA created by standard tools including jackhmmer60 and HHBlits61, and 3D atom coordinates of a small number of homologous structures (templates) where available. For both the MSA and templates, the search processes are tuned for high recall; spurious matches will probably appear in the raw MSA but this matches the training condition of the network.

One of the sequence databases used, Big Fantastic Database (BFD), was custom-made and released publicly (see ‘Data availability’) and was used by several CASP teams. BFD is one of the largest publicly available collections of protein families. It consists of 65,983,866 families represented as MSAs and hidden Markov models (HMMs) covering 2,204,359,010 protein sequences from reference databases, metagenomes and metatranscriptomes.

BFD was built in three steps. First, 2,423,213,294 protein sequences were collected from UniProt (Swiss-Prot&TrEMBL, 2017-11)62, a soil reference protein catalogue and the marine eukaryotic reference catalogue7, and clustered to 30% sequence identity, while enforcing a 90% alignment coverage of the shorter sequences using MMseqs2/Linclust63. This resulted in 345,159,030 clusters. For computational efficiency, we removed all clusters with fewer than three members, resulting in 61,083,719 clusters. Second, we added 166,510,624 representative protein sequences from Metaclust NR (2017-05; discarding all sequences shorter than 150 residues)63 by aligning them against the cluster representatives using MMseqs264. Sequences that fulfilled the sequence identity and coverage criteria were assigned to the best scoring cluster. The remaining 25,347,429 sequences that could not be assigned were clustered separately and added as new clusters, resulting in the final clustering. Third, for each of the clusters, we computed an MSA using FAMSA65 and computed the HMMs following the Uniclust HH-suite database protocol36.

The following versions of public datasets were used in this study. Our models were trained on a copy of the PDB5 downloaded on 28 August 2019. For finding template structures at prediction time, we used a copy of the PDB downloaded on 14 May 2020, and the PDB70 clustering database66 downloaded on 13 May 2020. For MSA lookup at both training and prediction time, we used UniRef90 (v.2020_01)67, BFD, Uniclust30 (v.2018_08)36 and MGnify (v.2018_12)6. For sequence distillation, we used Uniclust30 (v.2018_08)36 to construct a distillation structure dataset. Full details are provided in Supplementary Methods 1.2.

For MSA search on BFD + Uniclust30, and template search against PDB70, we used HHBlits61 and HHSearch66 from hh-suite v.3.0-beta.3 (version 14/07/2017). For MSA search on UniRef90 and clustered MGnify, we used jackhmmer from the HMMER3 suite68. For constrained relaxation of structures, we used OpenMM (v.7.3.1)69 with the Amber99sb force field32. For neural network construction, running and other analyses, we used TensorFlow70, Sonnet71, NumPy72, Python73 and Colab74.

To quantify the effect of the different sequence data sources, we re-ran the CASP14 proteins using the same models but varying how the MSA was constructed. Removing BFD reduced the mean accuracy by 0.4 GDT, removing MGnify reduced the mean accuracy by 0.7 GDT, and removing both reduced the mean accuracy by 6.1 GDT. In each case, we found that most targets had very small changes in accuracy but a few outliers had very large (20+ GDT) differences. This is consistent with the results in Fig. 5a, in which the depth of the MSA is relatively unimportant until it falls to a threshold of around 30 sequences, below which the effect of MSA size becomes quite large. We observe mostly overlapping effects between inclusion of BFD and MGnify, but having at least one of these metagenomics databases is very important for target classes that are poorly represented in UniRef, and having both was necessary to achieve full CASP accuracy.

Training regimen

To train, we use structures from the PDB with a maximum release date of 30 April 2018. Chains are sampled in inverse proportion to cluster size of a 40% sequence identity clustering. We then randomly crop them to 256 residues and assemble into batches of size 128. We train the model on Tensor Processing Unit (TPU) v3 with a batch size of 1 per TPU core, hence the model uses 128 TPU v3 cores. The model is trained until convergence (around 10 million samples) and further fine-tuned using longer crops of 384 residues, larger MSA stack and reduced learning rate (see Supplementary Methods 1.11 for the exact configuration). The initial training stage takes approximately 1 week, and the fine-tuning stage takes approximately 4 additional days.
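The two sampling steps above can be sketched as follows. Chains are drawn with probability inversely proportional to the size of their 40%-identity cluster, then cropped to a fixed length. The cluster labels are assumed to be precomputed. The contiguous crop is a simplification, and the function names are hypothetical.

```python
import numpy as np


def sample_chains(cluster_ids, n_samples, rng):
    """Sample chain indices with probability proportional to 1 / (size of the chain's
    cluster), so that each sequence-identity cluster is drawn equally often on average."""
    _, inverse, counts = np.unique(cluster_ids, return_inverse=True, return_counts=True)
    weights = 1.0 / counts[inverse]
    return rng.choice(len(cluster_ids), size=n_samples, p=weights / weights.sum())


def random_crop(length, crop_size, rng):
    """Start/end (half-open) of a random contiguous crop; short chains are kept whole."""
    if length <= crop_size:
        return 0, length
    start = int(rng.integers(0, length - crop_size + 1))
    return start, start + crop_size
```

For example, with one cluster of three chains and another of a single chain, the singleton is drawn about half the time rather than a quarter of the time. This reduces the bias towards heavily studied protein families.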

The network is supervised by the FAPE loss and a number of auxiliary losses. First, the final pair representation is linearly projected to a binned distance distribution (distogram) prediction, scored with a cross-entropy loss. Second, we use random masking on the input MSAs and require the network to reconstruct the masked regions from the output MSA representation using a BERT-like loss37. Third, the output single representations of the structure module are used to predict binned per-residue lDDT-Cα values. Finally, we use an auxiliary side-chain loss during training, and an auxiliary structure violation loss during fine-tuning. Detailed descriptions and weighting are provided in the Supplementary Information.
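The distogram loss can be sketched as a cross-entropy between per-pair bin logits and the bin containing each true inter-residue distance. The bin layout is assumed for illustration: 64 bins with edges evenly spaced between 2 and 22 Å. The choice of one representative atom per residue is also an assumption. The exact settings are in the Supplementary Information.

```python
import numpy as np


def distogram_loss(logits, coords, bin_edges):
    """Cross-entropy between predicted distance-bin logits and true binned distances.

    logits: (n_res, n_res, len(bin_edges) + 1) from a linear projection of the pair
    representation; coords: (n_res, 3) positions of one representative atom per residue.
    Distances below the first edge fall in bin 0, above the last edge in the final bin.
    """
    dist = np.linalg.norm(coords[:, None, :] - coords[None, :, :], axis=-1)
    target = np.digitize(dist, bin_edges)  # integer bin index per residue pair
    shifted = logits - logits.max(axis=-1, keepdims=True)
    log_p = shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))
    nll = -np.take_along_axis(log_p, target[..., None], axis=-1)[..., 0]
    return float(nll.mean())
```

With uniform logits the loss equals log(n_bins). It approaches zero when each pair's logits concentrate on the correct bin.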

An initial model trained with the above objectives was used to make structure predictions for a Uniclust dataset of 355,993 sequences with the full MSAs. These predictions were then used to train a final model with identical hyperparameters, except for sampling examples 75% of the time from the Uniclust prediction set, with sub-sampled MSAs, and 25% of the time from the clustered PDB set.
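A minimal sketch of the 75/25 data mixture for the final model, plus a simple MSA subsampling step. The subsampling rule here keeps the query row plus a random subset of the other rows. It is a hypothetical stand-in, since the exact scheme is not given in this section. Function names are illustrative.

```python
import random


def sample_training_example(distill_set, pdb_set, rng, p_distill=0.75):
    """Draw one example: from the self-distillation set with probability p_distill,
    otherwise from the clustered PDB set."""
    source = distill_set if rng.random() < p_distill else pdb_set
    return rng.choice(source)


def subsample_msa(msa_rows, max_rows, rng):
    """Keep the query (first row) plus a random subset of the remaining rows.

    Hypothetical rule for illustration; assumes max_rows >= 1.
    """
    if len(msa_rows) <= max_rows:
        return list(msa_rows)
    others = rng.sample(range(1, len(msa_rows)), max_rows - 1)
    return [msa_rows[0]] + [msa_rows[i] for i in sorted(others)]
```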

We train five different models using different random seeds, some with templates and some without, to encourage diversity in the predictions (see Supplementary Table 5 and Supplementary Methods 1.12.1 for details). We also fine-tuned these models after CASP14 to add a pTM prediction objective (Supplementary Methods 1.9.7) and use the obtained models for Fig. 2d.

Inference regimen

We run inference with the five trained models and use the predicted confidence score to select the best model per target.
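A minimal sketch of per-target model selection. It assumes each model returns a structure together with per-residue confidence scores and ranks models by their mean confidence (for example pLDDT). The ranking score and the data layout are assumptions made for this sketch.

```python
from statistics import mean


def select_best_model(predictions):
    """Pick the prediction with the highest mean per-residue confidence.

    predictions: dict mapping model name -> (structure, per-residue confidence scores).
    """
    best = max(predictions, key=lambda name: mean(predictions[name][1]))
    return best, predictions[best][0]
```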

Using our CASP14 configuration for AlphaFold, the trunk of the network is run multiple times with different random choices for the MSA cluster centres (see Supplementary Methods 1.11.2 for details of the ensembling procedure). The full time to make a structure prediction varies considerably depending on the length of the protein. Representative timings for the neural network using a single model on V100 GPU are 4.8 min with 256 residues, 9.2 min with 384 residues and 18 h at 2,500 residues. These timings are measured using our open-source code, and the open-source code is notably faster than the version we ran in CASP14 as we now use the XLA compiler75.

Since CASP14, we have found that the accuracy of the network without ensembling is very close or equal to the accuracy with ensembling and we turn off ensembling for most inference. Without ensembling, the network is 8× faster and the representative timings for a single model are 0.6 min with 256 residues, 1.1 min with 384 residues and 2.1 h with 2,500 residues.

Inference on large proteins can easily exceed the memory of a single GPU. For a V100 with 16 GB of memory, we can predict the structure of proteins up to around 1,300 residues without ensembling, and the 256- and 384-residue inference times above fit within the memory of a single GPU. The memory usage is approximately quadratic in the number of residues, so predicting a 2,500-residue protein requires unified memory, which lets us greatly exceed the memory of a single V100. In our cloud setup, a single V100 is used for computation on a 2,500-residue protein, but we requested four GPUs to have sufficient memory.
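As a rough check of the quadratic scaling, the memory need can be extrapolated from the ~1,300-residue / 16 GB data point. This assumes purely quadratic growth with no constant overhead, which is a simplification. The function names are hypothetical.

```python
import math


def estimated_memory_gb(n_res, ref_res=1300, ref_gb=16.0):
    """Memory extrapolated quadratically from the ~1,300-residue / 16 GB data point."""
    return ref_gb * (n_res / ref_res) ** 2


def gpus_needed(n_res, gb_per_gpu=16.0):
    """Number of 16 GB GPUs whose combined memory covers the estimate."""
    return math.ceil(estimated_memory_gb(n_res) / gb_per_gpu)


print(f"{estimated_memory_gb(2500):.1f} GB -> {gpus_needed(2500)} GPUs")  # 59.2 GB -> 4 GPUs
```

Under this estimate, (2,500/1,300)² ≈ 3.7 times the memory of one V100 is needed. That is consistent with requesting four GPUs for memory while computing on one.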

Searching genetic sequence databases to prepare inputs and final relaxation of the structures take additional central processing unit (CPU) time but do not require a GPU or TPU.

Metrics

The predicted structure is compared to the true structure from the PDB using the lDDT metric34, as this metric reports the domain accuracy without requiring a domain segmentation of chain structures. The distances are computed either between all heavy atoms (lDDT) or only between Cα atoms to measure the backbone accuracy (lDDT-Cα). As lDDT-Cα only focuses on the Cα atoms, it does not include the penalty for structural violations and clashes. Domain accuracies in CASP are reported as GDT33, and the TM-score27 is used as a full-chain global superposition metric.
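A simplified, superposition-free lDDT-Cα can be sketched as follows. The 15 Å inclusion radius and the 0.5, 1, 2 and 4 Å thresholds are the usual lDDT defaults and are assumed here. The stereochemical checks and all-atom handling of the full metric are omitted, so this is not the exact evaluation code. An empty pair set would yield NaN.

```python
import numpy as np


def lddt_ca(pred, true, cutoff=15.0, thresholds=(0.5, 1.0, 2.0, 4.0)):
    """Simplified global lDDT-Ca between predicted and reference Ca coordinates (n, 3).

    Scores every pair of distinct residues whose reference distance is below `cutoff`;
    a pair is preserved at threshold t if its predicted distance differs from the
    reference distance by less than t. The score is the fraction of preserved pairs,
    averaged over the thresholds. No superposition is needed.
    """
    d_true = np.linalg.norm(true[:, None, :] - true[None, :, :], axis=-1)
    d_pred = np.linalg.norm(pred[:, None, :] - pred[None, :, :], axis=-1)
    pairs = (d_true < cutoff) & ~np.eye(len(true), dtype=bool)
    err = np.abs(d_pred - d_true)[pairs]
    return float(np.mean([(err < t).mean() for t in thresholds]))
```

Because only intra-structure distances are compared, a rigidly moved copy of the reference scores 1.0. Locally correct domains therefore score well even when their relative placement is wrong.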

We also report accuracies using the r.m.s.d.95 (Cα r.m.s.d. at 95% coverage). We perform five iterations of (1) a least-squares alignment of the predicted structure and the PDB structure on the currently chosen Cα atoms (using all Cα atoms in the first iteration); (2) selecting the 95% of Cα atoms with the lowest alignment error. The r.m.s.d. of the atoms chosen in the final iteration is the r.m.s.d.95. This metric is more robust to apparent errors that can originate from crystal structure artefacts, although in some cases the removed 5% of residues will contain genuine modelling errors.
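The iterative procedure above can be sketched with a Kabsch superposition. Some details are interpretations rather than the authors' code:

- the 95% count is rounded to the nearest integer;
- each fit is applied to all atoms before errors are ranked;
- the reported value is the r.m.s.d. of the atoms selected in the last iteration, under that iteration's alignment.

```python
import numpy as np


def kabsch(mobile, target):
    """Rotation and centroids of the least-squares superposition of mobile onto target."""
    mc, tc = mobile.mean(axis=0), target.mean(axis=0)
    h = (mobile - mc).T @ (target - tc)
    u, _, vt = np.linalg.svd(h)
    d = np.sign(np.linalg.det(vt.T @ u.T))  # avoid improper rotations (reflections)
    rot = vt.T @ np.diag([1.0, 1.0, d]) @ u.T
    return rot, mc, tc


def rmsd95(pred, true, n_iter=5, frac=0.95):
    """Ca r.m.s.d. at 95% coverage via iterative alignment and trimming.

    Each iteration aligns on the currently chosen atoms (all atoms at first), applies
    that fit to every atom, and keeps the `frac` of atoms with the lowest error.
    Returns the r.m.s.d. of the atoms chosen in the final iteration.
    """
    n_keep = int(round(frac * len(pred)))
    chosen = np.arange(len(pred))
    for _ in range(n_iter):
        rot, mc, tc = kabsch(pred[chosen], true[chosen])
        moved = (pred - mc) @ rot.T + tc  # apply the fit to every atom
        err = np.linalg.norm(moved - true, axis=1)
        chosen = np.argsort(err)[:n_keep]  # 95% of atoms with the lowest error
    return float(np.sqrt(np.mean(err[chosen] ** 2)))
```

If 5% of atoms are grossly displaced, the first alignment is slightly distorted by them. They are then trimmed, and later iterations align on the remaining atoms alone.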

Test set of recent PDB sequences

For evaluation on recent PDB sequences (Figs. 2a–d, 4a, 5a), we used a copy of the PDB downloaded 15 February 2021. Structures were filtered to those with a release date after 30 April 2018 (the date limit for inclusion in the training set for AlphaFold). Chains were further filtered to remove sequences that consisted of a single amino acid as well as sequences with an ambiguous chemical component at any residue position. Exact duplicates were removed, with the chain with the most resolved Cα atoms used as the representative sequence. Subsequently, structures with fewer than 16 resolved residues, with unknown residues or solved by NMR methods were removed. As the PDB contains many near-duplicate sequences, the chain with the highest resolution was selected from each cluster in the PDB 40% sequence clustering of the data. Furthermore, we removed all sequences for which fewer than 80 amino acids had the alpha carbon resolved and removed chains with more than 1,400 residues. The final dataset contained 10,795 protein sequences.

The procedure for filtering the recent PDB dataset based on prior template identity was as follows. Hmmsearch was run with default parameters against a copy of the PDB SEQRES fasta downloaded 15 February 2021. Template hits were accepted if the associated structure had a release date earlier than 30 April 2018. Each residue position in a query sequence was assigned the maximum identity of any template hit covering that position. Filtering then proceeded as described in the individual figure legends, based on a combination of maximum identity and sequence coverage.
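The per-residue assignment step can be sketched as below. It assumes the Hmmsearch hits have already been parsed into `(start, end, identity, release_date)` tuples with 0-based, half-open query ranges and one identity value per hit. Both the parsing and this tuple layout are assumptions, and the names are hypothetical.

```python
from datetime import date

TRAINING_CUTOFF = date(2018, 4, 30)


def per_residue_max_identity(query_len, hits, cutoff=TRAINING_CUTOFF):
    """Maximum template identity covering each query position.

    hits: iterable of (start, end, identity, release_date) with 0-based, half-open
    query ranges; hits whose structure was released on or after `cutoff` are ignored.
    Uncovered positions get 0.0.
    """
    best = [0.0] * query_len
    for start, end, identity, released in hits:
        if released >= cutoff:
            continue
        for pos in range(max(start, 0), min(end, query_len)):
            best[pos] = max(best[pos], identity)
    return best


def coverage_at_identity(best, min_identity):
    """Fraction of query positions covered by a hit with at least min_identity."""
    return sum(v >= min_identity for v in best) / len(best)
```

A figure filter such as "template coverage at 30% identity above 60% of the chain" would then read `coverage_at_identity(best, 0.3) > 0.6`. Whether the identity bound is inclusive is another assumption.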

The MSA depth analysis was based on computing the normalized number of effective sequences (Neff) for each position of a query sequence. Per-residue Neff values were obtained by counting the number of non-gap residues in the MSA for this position and weighting the sequences using the Neff scheme76 with a threshold of 80% sequence identity measured on the region that is non-gap in either sequence.
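A minimal sketch of per-residue Neff under the weighting described above. Each sequence is weighted by 1 / (number of sequences, itself included, within 80% identity). Identity is measured over columns that are non-gap in either sequence. Neff at a column is then the sum of weights of sequences with a residue there. The sketch makes two assumptions: the MSA is already expressed in query columns with `-` as gap, and any extra normalization mentioned in the text is not reproduced. Names are hypothetical.

```python
import numpy as np

GAP = "-"


def sequence_weights(msa, threshold=0.8):
    """Weight each aligned sequence by 1 / (number of sequences, itself included,
    with identity >= threshold). Identity between two sequences is the fraction of
    identical non-gap residues over the columns that are non-gap in either sequence."""
    arr = np.array([list(s) for s in msa])  # (n_seq, n_res) characters
    non_gap = arr != GAP
    counts = np.empty(len(msa))
    for i in range(len(msa)):
        region = (non_gap[i] | non_gap).sum(axis=1)
        same = ((arr[i] == arr) & non_gap[i] & non_gap).sum(axis=1)
        identity = same / np.maximum(region, 1)
        counts[i] = max((identity >= threshold).sum(), 1)
    return 1.0 / counts


def per_residue_neff(msa, threshold=0.8):
    """Sum of sequence weights over sequences with a non-gap residue at each column."""
    arr = np.array([list(s) for s in msa])
    return ((arr != GAP) * sequence_weights(msa, threshold)[:, None]).sum(axis=0)


def msa_depth(msa, threshold=0.8):
    """Median per-residue Neff, the depth measure plotted against accuracy."""
    return float(np.median(per_residue_neff(msa, threshold)))
```

For example, two identical sequences share one unit of weight, while a sequence at 75% identity to them counts as a separate effective sequence.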

Reporting summary

Further information on research design is available in the Nature Research Reporting Summary linked to this paper.

Data availability

All input data are freely available from public sources.

Structures from the PDB were used for training and as templates (https://www.wwpdb.org/ftp/pdb-ftp-sites; for the associated sequence data and 40% sequence clustering see also https://ftp.wwpdb.org/pub/pdb/derived_data/ and https://cdn.rcsb.org/resources/sequence/clusters/bc-40.out). Training used a version of the PDB downloaded 28 August 2019, while the CASP14 template search used a version downloaded 14 May 2020. The template search also used the PDB70 database, downloaded 13 May 2020 (https://wwwuser.gwdg.de/~compbiol/data/hhsuite/databases/hhsuite_dbs/).

We show experimental structures from the PDB with accession numbers 6Y4F (ref. 77), 6YJ1 (ref. 78), 6VR4 (ref. 79), 6SK0 (ref. 80), 6FES (ref. 81), 6W6W (ref. 82), 6T1Z (ref. 83) and 7JTL (ref. 84).

For MSA lookup at both the training and prediction time, we used UniRef90 v.2020_01 (https://ftp.ebi.ac.uk/pub/databases/uniprot/previous_releases/release-2020_01/uniref/), BFD (https://bfd.mmseqs.com), Uniclust30 v.2018_08 (https://wwwuser.gwdg.de/~compbiol/uniclust/2018_08/) and MGnify clusters v.2018_12 (https://ftp.ebi.ac.uk/pub/databases/metagenomics/peptide_database/2018_12/). Uniclust30 v.2018_08 was also used as input for constructing a distillation structure dataset.

Code availability

Source code for the AlphaFold model, trained weights and inference script are available under an open-source license at https://github.com/deepmind/alphafold.

Neural networks were developed with TensorFlow v.1 (https://github.com/tensorflow/tensorflow), Sonnet v.1 (https://github.com/deepmind/sonnet), JAX v.0.1.69 (https://github.com/google/jax/) and Haiku v.0.0.4 (https://github.com/deepmind/dm-haiku). The XLA compiler is bundled with JAX and does not have a separate version number.

For MSA search on BFD+Uniclust30, and for template search against PDB70, we used HHBlits and HHSearch from hh-suite v.3.0-beta.3 release 14/07/2017 (https://github.com/soedinglab/hh-suite). For MSA search on UniRef90 and clustered MGnify, we used jackhmmer from HMMER v.3.3 (http://eddylab.org/software/hmmer/). For constrained relaxation of structures, we used OpenMM v.7.3.1 (https://github.com/openmm/openmm) with the Amber99sb force field.

Construction of BFD used MMseqs2 v.925AF (https://github.com/soedinglab/MMseqs2) and FAMSA v.1.2.5 (https://github.com/refresh-bio/FAMSA).

Data analysis used Python v.3.6 (https://www.python.org/), NumPy v.1.16.4 (https://github.com/numpy/numpy), SciPy v.1.2.1 (https://www.scipy.org/), seaborn v.0.11.1 (https://github.com/mwaskom/seaborn), Matplotlib v.3.3.4 (https://github.com/matplotlib/matplotlib), bokeh v.1.4.0 (https://github.com/bokeh/bokeh), pandas v.1.1.5 (https://github.com/pandas-dev/pandas), plotnine v.0.8.0 (https://github.com/has2k1/plotnine), statsmodels v.0.12.2 (https://github.com/statsmodels/statsmodels) and Colab (https://research.google.com/colaboratory). TM-align v.20190822 (https://zhanglab.dcmb.med.umich.edu/TM-align/) was used for computing TM-scores. Structure visualizations were created in Pymol v.2.3.0 (https://github.com/schrodinger/pymol-open-source).

References

Thompson, M. C., Yeates, T. O. & Rodriguez, J. A. Advances in methods for atomic resolution macromolecular structure determination. F1000Res. 9, 667 (2020).

Bai, X.-C., McMullan, G. & Scheres, S. H. W. How cryo-EM is revolutionizing structural biology. Trends Biochem. Sci. 40, 49–57 (2015).

Jaskolski, M., Dauter, Z. & Wlodawer, A. A brief history of macromolecular crystallography, illustrated by a family tree and its Nobel fruits. FEBS J. 281, 3985–4009 (2014).

Wüthrich, K. The way to NMR structures of proteins. Nat. Struct. Biol. 8, 923–925 (2001).

wwPDB Consortium. Protein Data Bank: the single global archive for 3D macromolecular structure data. Nucleic Acids Res. 47, D520–D528 (2018).

Mitchell, A. L. et al. MGnify: the microbiome analysis resource in 2020. Nucleic Acids Res. 48, D570–D578 (2020).

Steinegger, M., Mirdita, M. & Söding, J. Protein-level assembly increases protein sequence recovery from metagenomic samples manyfold. Nat. Methods 16, 603–606 (2019).

Dill, K. A., Ozkan, S. B., Shell, M. S. & Weikl, T. R. The protein folding problem. Annu. Rev. Biophys. 37, 289–316 (2008).

Anfinsen, C. B. Principles that govern the folding of protein chains. Science 181, 223–230 (1973).

Senior, A. W. et al. Improved protein structure prediction using potentials from deep learning. Nature 577, 706–710 (2020).

""" Senior, A. W. 等人. 利用深度学习势能改进蛋白质结构预测。自然 577,第 706-710 页(2020 年)。Wang, S., Sun, S., Li, Z., Zhang, R. & Xu, J. Accurate de novo prediction of protein contact map by ultra-deep learning model. PLOS Comput. Biol. 13, e1005324 (2017).

Zheng, W. et al. Deep-learning contact-map guided protein structure prediction in CASP13. Proteins 87, 1149–1164 (2019).

Abriata, L. A., Tamò, G. E. & Dal Peraro, M. A further leap of improvement in tertiary structure prediction in CASP13 prompts new routes for future assessments. Proteins 87, 1100–1112 (2019).

Pearce, R. & Zhang, Y. Deep learning techniques have significantly impacted protein structure prediction and protein design. Curr. Opin. Struct. Biol. 68, 194–207 (2021).

Moult, J., Fidelis, K., Kryshtafovych, A., Schwede, T. & Topf, M. Critical assessment of techniques for protein structure prediction, fourteenth round. CASP 14 Abstract Book https://www.predictioncenter.org/casp14/doc/CASP14_Abstracts.pdf (2020).

Brini, E., Simmerling, C. & Dill, K. Protein storytelling through physics. Science 370, eaaz3041 (2020).

Sippl, M. J. Calculation of conformational ensembles from potentials of mean force. An approach to the knowledge-based prediction of local structures in globular proteins. J. Mol. Biol. 213, 859–883 (1990).

Šali, A. & Blundell, T. L. Comparative protein modelling by satisfaction of spatial restraints. J. Mol. Biol. 234, 779–815 (1993).

Roy, A., Kucukural, A. & Zhang, Y. I-TASSER: a unified platform for automated protein structure and function prediction. Nat. Protocols 5, 725–738 (2010).

Altschuh, D., Lesk, A. M., Bloomer, A. C. & Klug, A. Correlation of co-ordinated amino acid substitutions with function in viruses related to tobacco mosaic virus. J. Mol. Biol. 193, 693–707 (1987).

Shindyalov, I. N., Kolchanov, N. A. & Sander, C. Can three-dimensional contacts in protein structures be predicted by analysis of correlated mutations? Protein Eng. 7, 349–358 (1994).

Weigt, M., White, R. A., Szurmant, H., Hoch, J. A. & Hwa, T. Identification of direct residue contacts in protein–protein interaction by message passing. Proc. Natl Acad. Sci. USA 106, 67–72 (2009).

Marks, D. S. et al. Protein 3D structure computed from evolutionary sequence variation. PLoS ONE 6, e28766 (2011).

Jones, D. T., Buchan, D. W. A., Cozzetto, D. & Pontil, M. PSICOV: precise structural contact prediction using sparse inverse covariance estimation on large multiple sequence alignments. Bioinformatics 28, 184–190 (2012).

Moult, J., Pedersen, J. T., Judson, R. & Fidelis, K. A large-scale experiment to assess protein structure prediction methods. Proteins 23, ii–iv (1995).

Kryshtafovych, A., Schwede, T., Topf, M., Fidelis, K. & Moult, J. Critical assessment of methods of protein structure prediction (CASP)-round XIII. Proteins 87, 1011–1020 (2019).

Zhang, Y. & Skolnick, J. Scoring function for automated assessment of protein structure template quality. Proteins 57, 702–710 (2004).

Tu, Z. & Bai, X. Auto-context and its application to high-level vision tasks and 3D brain image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 32, 1744–1757 (2010).

Carreira, J., Agrawal, P., Fragkiadaki, K. & Malik, J. Human pose estimation with iterative error feedback. In Proc. IEEE Conference on Computer Vision and Pattern Recognition 4733–4742 (2016).

Mirabello, C. & Wallner, B. rawMSA: end-to-end deep learning using raw multiple sequence alignments. PLoS ONE 14, e0220182 (2019).

Huang, Z. et al. CCNet: criss-cross attention for semantic segmentation. In Proc. IEEE/CVF International Conference on Computer Vision 603–612 (2019).

Hornak, V. et al. Comparison of multiple Amber force fields and development of improved protein backbone parameters. Proteins 65, 712–725 (2006).

Zemla, A. LGA: a method for finding 3D similarities in protein structures. Nucleic Acids Res. 31, 3370–3374 (2003).

Mariani, V., Biasini, M., Barbato, A. & Schwede, T. lDDT: a local superposition-free score for comparing protein structures and models using distance difference tests. Bioinformatics 29, 2722–2728 (2013).

Xie, Q., Luong, M.-T., Hovy, E. & Le, Q. V. Self-training with noisy student improves ImageNet classification. In Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition 10687–10698 (2020).

Mirdita, M. et al. Uniclust databases of clustered and deeply annotated protein sequences and alignments. Nucleic Acids Res. 45, D170–D176 (2017).

Devlin, J., Chang, M.-W., Lee, K. & Toutanova, K. BERT: pre-training of deep bidirectional transformers for language understanding. In Proc. 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies 1, 4171–4186 (2019).

Rao, R. et al. MSA transformer. In Proc. 38th International Conference on Machine Learning PMLR 139, 8844–8856 (2021).

Tunyasuvunakool, K. et al. Highly accurate protein structure prediction for the human proteome. Nature https://doi.org/10.1038/s41586-021-03828-1 (2021).

Kuhlman, B. & Bradley, P. Advances in protein structure prediction and design. Nat. Rev. Mol. Cell Biol. 20, 681–697 (2019).

Marks, D. S., Hopf, T. A. & Sander, C. Protein structure prediction from sequence variation. Nat. Biotechnol. 30, 1072–1080 (2012).

Qian, N. & Sejnowski, T. J. Predicting the secondary structure of globular proteins using neural network models. J. Mol. Biol. 202, 865–884 (1988).

Fariselli, P., Olmea, O., Valencia, A. & Casadio, R. Prediction of contact maps with neural networks and correlated mutations. Protein Eng. 14, 835–843 (2001).

Yang, J. et al. Improved protein structure prediction using predicted interresidue orientations. Proc. Natl Acad. Sci. USA 117, 1496–1503 (2020).

Li, Y. et al. Deducing high-accuracy protein contact-maps from a triplet of coevolutionary matrices through deep residual convolutional networks. PLOS Comput. Biol. 17, e1008865 (2021).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. In Proc. IEEE Conference on Computer Vision and Pattern Recognition 770–778 (2016).

AlQuraishi, M. End-to-end differentiable learning of protein structure. Cell Syst. 8, 292–301 (2019).

Senior, A. W. et al. Protein structure prediction using multiple deep neural networks in the 13th Critical Assessment of Protein Structure Prediction (CASP13). Proteins 87, 1141–1148 (2019).

Ingraham, J., Riesselman, A. J., Sander, C. & Marks, D. S. Learning protein structure with a differentiable simulator. In Proc. International Conference on Learning Representations (2019).

Li, J. Universal transforming geometric network. Preprint at https://arxiv.org/abs/1908.00723 (2019).

Xu, J., McPartlon, M. & Li, J. Improved protein structure prediction by deep learning irrespective of co-evolution information. Nat. Mach. Intell. 3, 601–609 (2021).

Vaswani, A. et al. Attention is all you need. In Advances in Neural Information Processing Systems 5998–6008 (2017).

Wang, H. et al. Axial-DeepLab: stand-alone axial-attention for panoptic segmentation. In European Conference on Computer Vision 108–126 (Springer, 2020).

Alley, E. C., Khimulya, G., Biswas, S., AlQuraishi, M. & Church, G. M. Unified rational protein engineering with sequence-based deep representation learning. Nat. Methods 16, 1315–1322 (2019).

Heinzinger, M. et al. Modeling aspects of the language of life through transfer-learning protein sequences. BMC Bioinformatics 20, 723 (2019).

Rives, A. et al. Biological structure and function emerge from scaling unsupervised learning to 250 million protein sequences. Proc. Natl Acad. Sci. USA 118, e2016239118 (2021).

Pereira, J. et al. High-accuracy protein structure prediction in CASP14. Proteins https://doi.org/10.1002/prot.26171 (2021).

Gupta, M. et al. CryoEM and AI reveal a structure of SARS-CoV-2 Nsp2, a multifunctional protein involved in key host processes. Preprint at https://doi.org/10.1101/2021.05.10.443524 (2021).

Ingraham, J., Garg, V. K., Barzilay, R. & Jaakkola, T. Generative models for graph-based protein design. In Proc. 33rd Conference on Neural Information Processing Systems (2019).

Johnson, L. S., Eddy, S. R. & Portugaly, E. Hidden Markov model speed heuristic and iterative HMM search procedure. BMC Bioinformatics 11, 431 (2010).

Remmert, M., Biegert, A., Hauser, A. & Söding, J. HHblits: lightning-fast iterative protein sequence searching by HMM-HMM alignment. Nat. Methods 9, 173–175 (2012).