Hunting for Memory Leaks in asyncio Applications

寻找异步应用程序中的内存泄漏

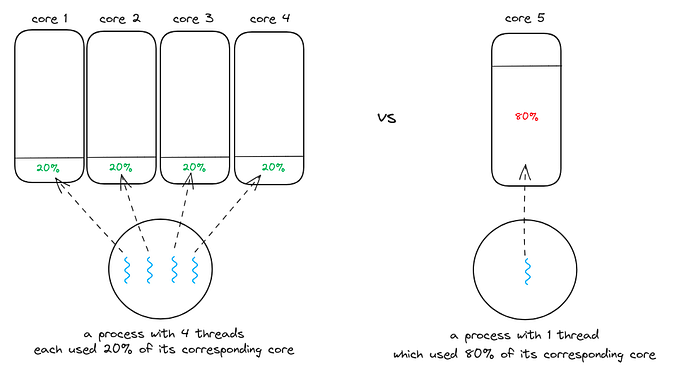

Asyncio allows you to write high performance network applications in Python. It’s been a breath of fresh air in reducing complexity and resource abuse common in sync applications. However bugs happen — and they happen to asyncio apps too.

Asyncio 允许您使用 Python 编写高性能网络应用程序。这对于降低同步应用程序中常见的复杂性和资源滥用来说是一股清新的空气。然而错误还是会发生——而且它们也会发生在异步应用程序中。

Sailing into the last two weeks of 2017 that I fully intended to spend experimenting with various eggnog recipes. I was alerted by our DevOps team that our asyncio app was consuming 10GB of memory. That is approximately 100 times more than it should!

进入 2017 年的最后两周,我完全打算花时间尝试各种蛋酒食谱。我们的 DevOps 团队提醒我,我们的 asyncio 应用程序正在消耗 10GB 内存。这大约是应有的 100 倍!

I reviewed all recent changes and nothing obvious jumped up at me. Also to make matters worse I had no idea what is triggering the memory allocation as restart would fix this problem — and it could take several hours for the app to grow again — but grow it did. And it ate all the memory it could.

我回顾了最近的所有变化,没有任何明显的变化引起我的注意。更糟糕的是,我不知道是什么触发了内存分配,因为重新启动可以解决这个问题——应用程序可能需要几个小时才能再次增长——但它确实增长了。它吃掉了它能吃掉的所有内存。

Code reviews and minor bug fixes accomplished nothing to change this.

代码审查和小错误修复并没有改变这一点。

I knew I was facing the dreaded question.

我知道我正面临着这个可怕的问题。

What is the application actually doing?

应用程序实际上在做什么?

I realized I needed an endpoint where I could examine such stats at runtime (since that is where the problem happens). So I wrote simple view called /stats where I started displaying various useful info.

我意识到我需要一个端点,可以在运行时检查此类统计信息(因为这就是问题发生的地方)。因此,我编写了名为 /stats 的简单视图,在其中开始显示各种有用的信息。

How many coroutines does the application use?

应用程序使用了多少个协程?

Here we are helped by asyncio.Task.all_tasks method which returns a list of all currently running coroutines. Better yet we can inspect these coroutines to gain more insight. We can figure out if a coroutine is cancelled if it has an exception and most important in our case what actually created it by examining the stack.

在这里,我们得到了asyncio.Task.all_tasks方法的帮助,该方法返回所有当前正在运行的协程的列表。更好的是,我们可以检查这些协程以获得更多见解。如果协程出现异常,我们可以确定它是否被取消,在我们的例子中最重要的是通过检查堆栈来确定实际创建它的内容。

def show_coro(c):

data = OrderedDict([

('txt', str(c)),

('type', str(type(c))),

('done', c.done()),

('cancelled', False),

('stack', None),

('exception', None),

])

if not c.done():

data['stack'] = [format_frame(x) for x in c.get_stack()]

else:

if c.cancelled():

data['cancelled'] = True

else:

data['exception'] = str(c.exception()) return datadef format_frame(f):

keys = ['f_code', 'f_lineno']

return OrderedDict([(k, str(getattr(f, k))) for k in keys])

Which will give us an output that can look something like this.

这会给我们一个看起来像这样的输出。

{

"txt": "<Task pending coro=<writer_process() running at /var/www/app/server/influx.py:52> wait_for=<Future pending cb=[Task._wakeup()]>>",

"type": "<class 'asyncio.tasks.Task'>",

"done": false,

"cancelled": false,

"stack": [

{

"f_code": "<code object writer_process at 0x7f04df9a6b70, file \"/var/www/app/server/influx.py\", line 36>",

"f_lineno": "52"

}

],

"exception": null

},Where is the memory going? What is being allocated?

记忆去哪儿了?正在分配什么?

Here we are helped by the tracemalloc module. Which will show us what is being allocated and where.

在这里,我们得到了tracemalloc模块的帮助。这将告诉我们正在分配什么以及在哪里。

if tracemalloc.is_tracing():

snapshot = tracemalloc.take_snapshot()

top_stats = snapshot.statistics('lineno')

view_data['top_10_trace'] = [str(x) for x in top_stats[:10]]Which may look like this.

可能看起来像这样。

"top_10_trace": [

"/usr/lib/python3.5/site-packages/raven/breadcrumbs.py:47: size=40.6 KiB, count=100, average=416 B",

"/usr/lib/python3.5/logging/__init__.py:1270: size=35.3 KiB, count=468, average=77 B",

"/usr/lib/python3.5/json/decoder.py:355: size=28.5 KiB, count=449, average=65 B",

...The answers to these two questions is all I needed to solve my troubles.

这两个问题的答案就是解决我的烦恼所需要的。

First of all, tracemalloc tipped me off that I have an extremely high number of allocations tied up in search results objects. This was unexpected since the search results should be freed up after being returned to the user.

首先,tracemalloc 告诉我,我有大量的分配与搜索结果对象相关。这是出乎意料的,因为搜索结果在返回给用户后应该被释放。

Close examination of what happens with the search results object made me realize that we also log a number of results to our statistics database and we do so in separate coroutine (as to not to block the response to user with what is just our internal statistics collection).

仔细检查搜索结果对象发生的情况使我意识到,我们还将许多结果记录到我们的统计数据库中,并且我们在单独的协程中执行此操作(以免阻止仅使用我们的内部统计收集来阻止对用户的响应) )。

That led me to the conclusion that perhaps something is wrong with those logging coroutines. Under normal circumstances, one node would run between 15 to 40 coroutines — when the huge memory increase happened the number of coroutines was 3000 or more.

这让我得出结论,这些日志记录协程可能出了问题。正常情况下,一个节点会运行 15 到 40 个协程——当内存大幅增加时,协程数量会达到 3000 个甚至更多。

Mystery solved! The whole problem was caused by our statistics database suffering temporary failures and being unresponsive, for short periods of time. When that happened our application had a large number of coroutines running, all waiting to write to the stats database.

谜团解开了!整个问题是由于我们的统计数据库在短时间内出现临时故障且无响应而引起的。当这种情况发生时,我们的应用程序运行了大量协程,所有协程都在等待写入统计数据库。

The optimization and fix was simple.

优化和修复很简单。

When logging the number of search results we don’t need to pass in the entire result set, just the number of results.

记录搜索结果的数量时,我们不需要传递整个结果集,只需传递结果的数量。

Second and more importantly ensure we do not have long lived logging coroutines running. We can specify a timeout by using asycnio.wait_for(coroutine, 5). By using this feature, occasional slowdowns of external services don’t create performance problems in our applications since we’ll timeout after 5 seconds.

其次,更重要的是确保我们没有长期运行的日志记录协程。我们可以使用asycnio.wait_for(coroutine, 5)指定超时。通过使用此功能,外部服务的偶尔变慢不会在我们的应用程序中造成性能问题,因为我们将在 5 秒后超时。

The application has now been running for days and only consuming 100MB of memory.

该应用程序现已运行数天,仅消耗 100MB 内存。

May your code be leak free in 2018 but if it does leak — you know what to do.

祝你的代码在 2018 年不会泄漏,但如果确实泄漏,你知道该怎么做。

Keep following us on Medium and Twitter for more snippets of the Tech world according to G!

请继续在Medium和Twitter上关注我们,了解 G 报道的科技世界的更多片段!

Want to help G Adventures change people’s lives and travel the world? Check out all our jobs and apply today.

想帮助 G Adventures 改变人们的生活并环游世界吗?查看我们所有的职位并立即申请。