COVER 封面面

PREFACE 前言言

THE MOVE TOWARD MORE ALGORITHMIC APPROACH 向更加算法化的方法迈进进

COMPETITION 竞争竞争

ACCEPTING RISK 接受风险风险

THE LONG BULL MARKET 长牛市市

WHAT'S NEW IN THE SIXTH EDITION 第六版中的新内容内容

COMPANION WEBSITE 伴随网站网站

WITH APPRECIATION 感恩恩

CHAPTER 1: Introduction 第一章:引言言

THE EXPANDING ROLE OF TECHNICAL ANALYSIS 技术分析日益重要的作用作用

CONVERGENCE OF TRADING STYLES IN STOCKS AND FUTURES 股票和期货交易风格的趋同同

PROFESSIONAL AND AMATEUR 专业和业余余

RANDOM WALK 随机漫步步

DECIDING ON A TRADING STYLE 决定交易风格格

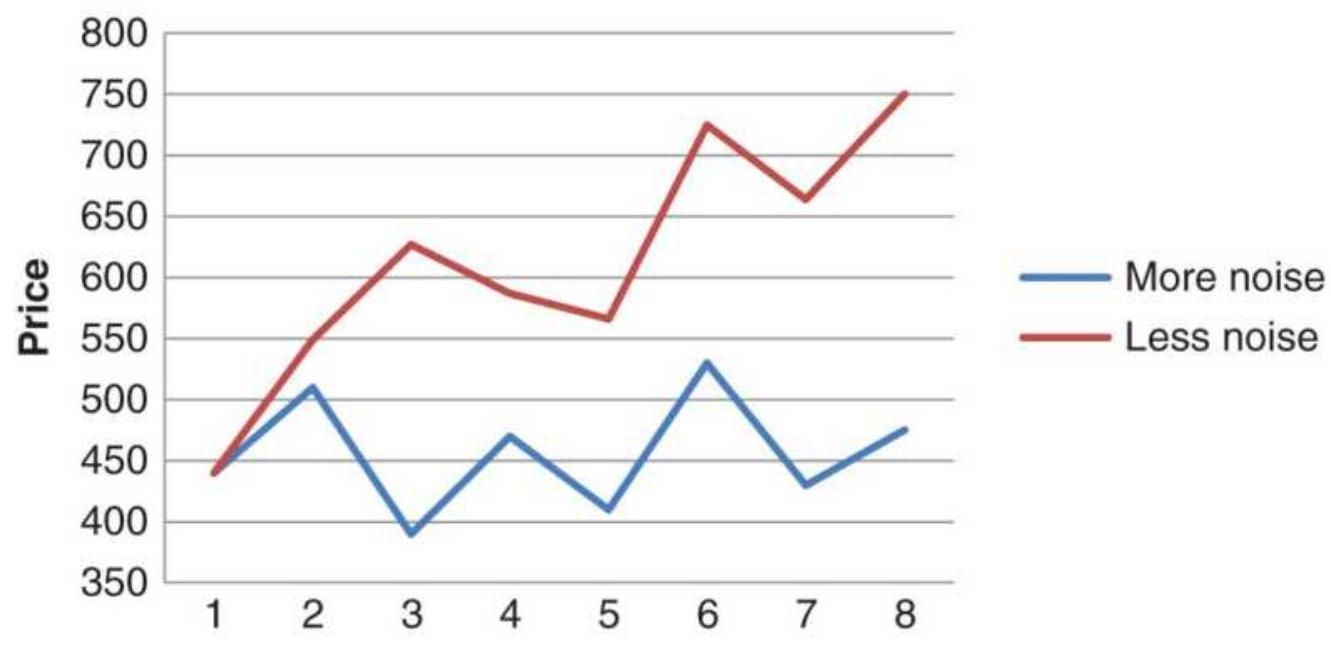

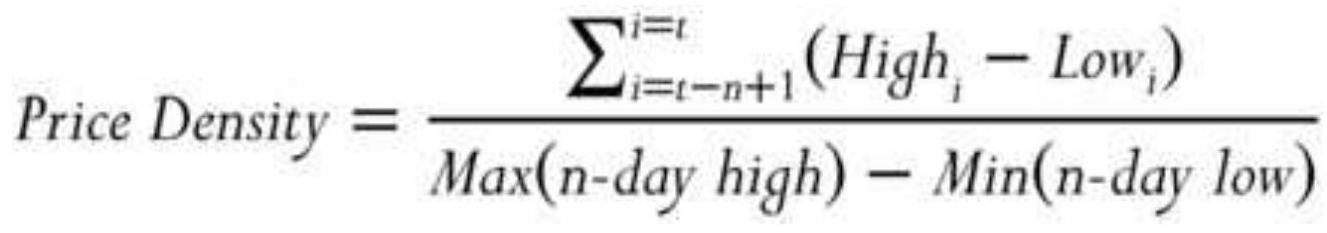

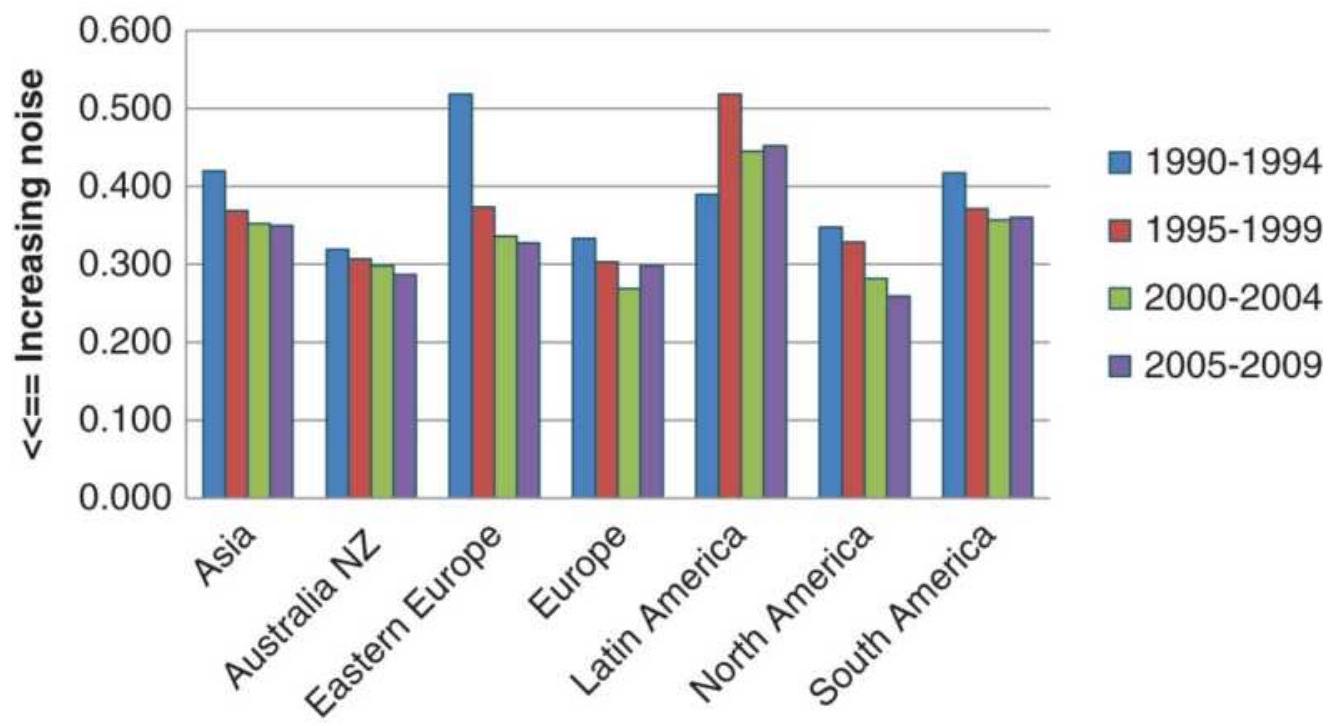

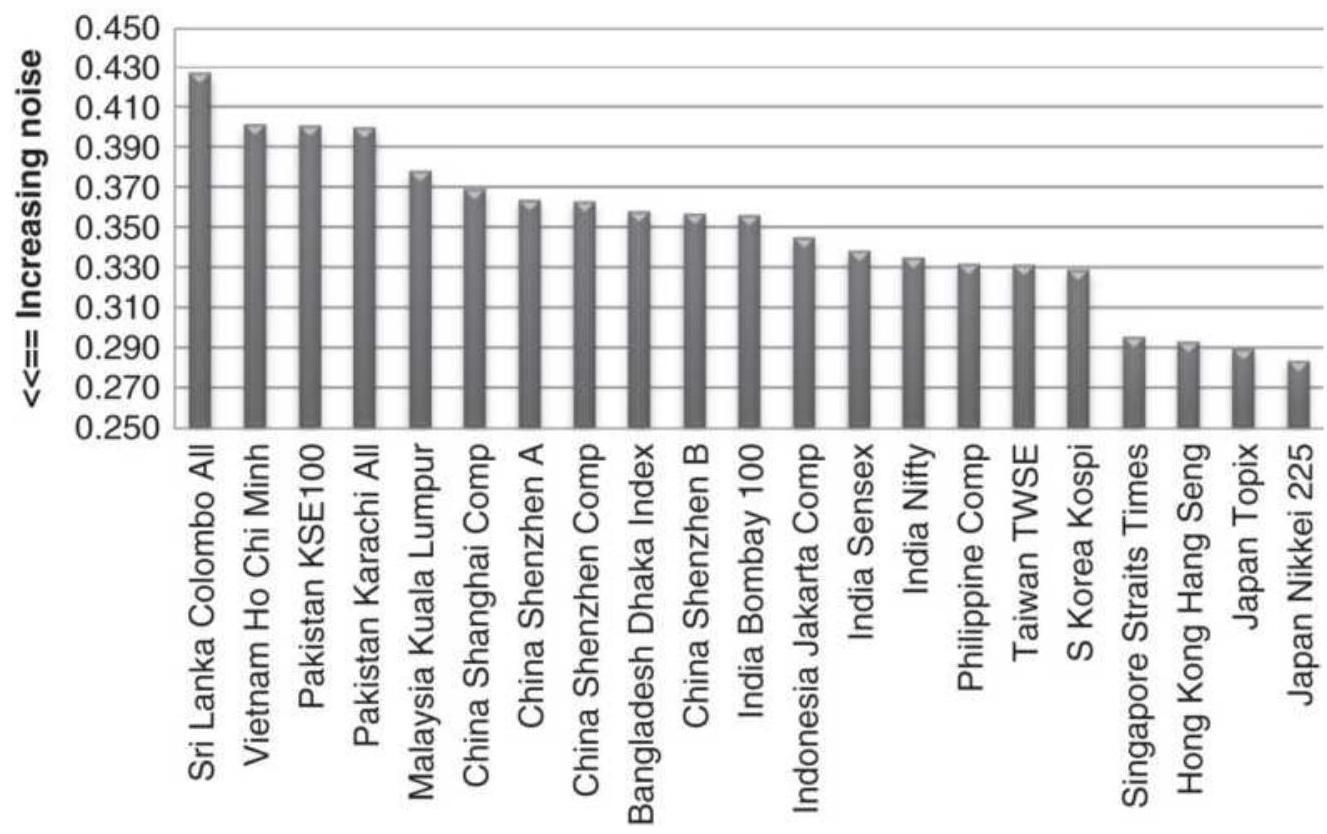

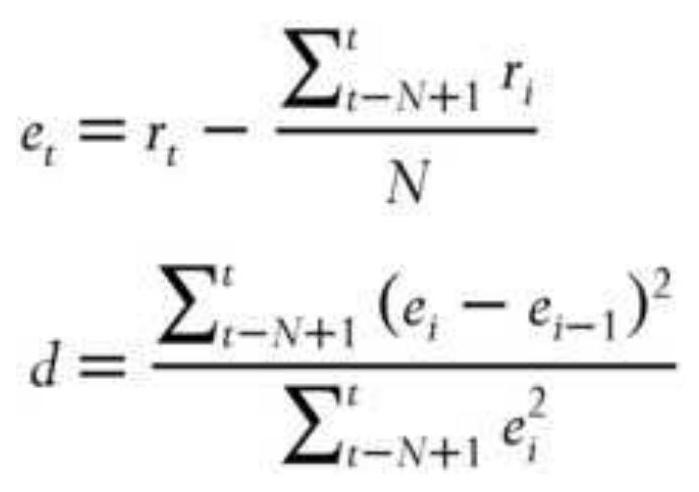

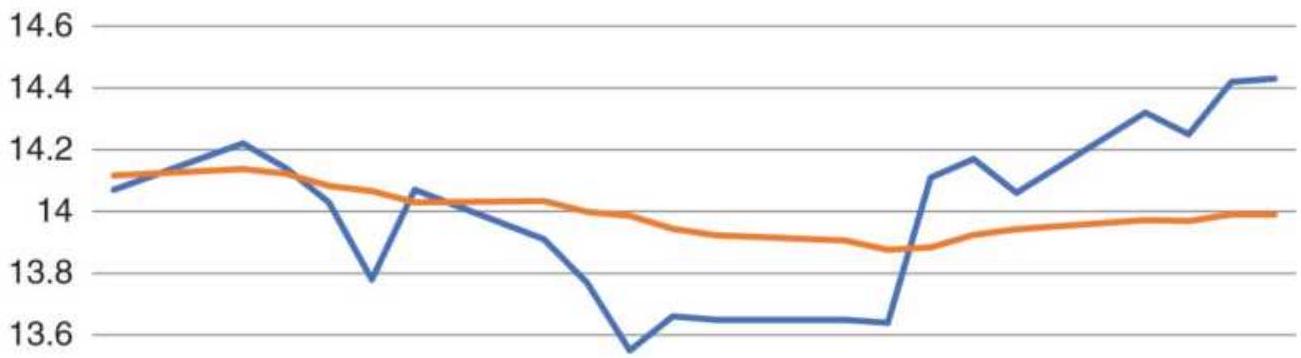

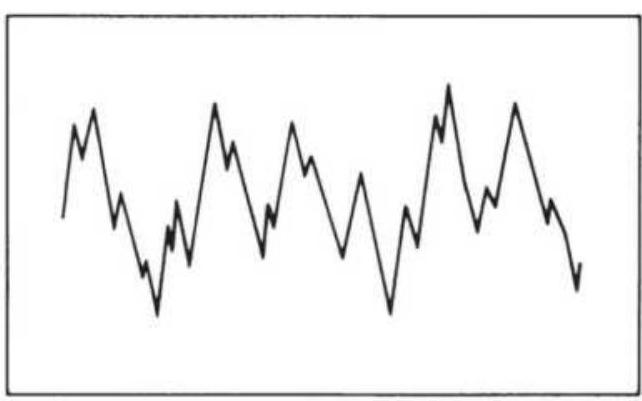

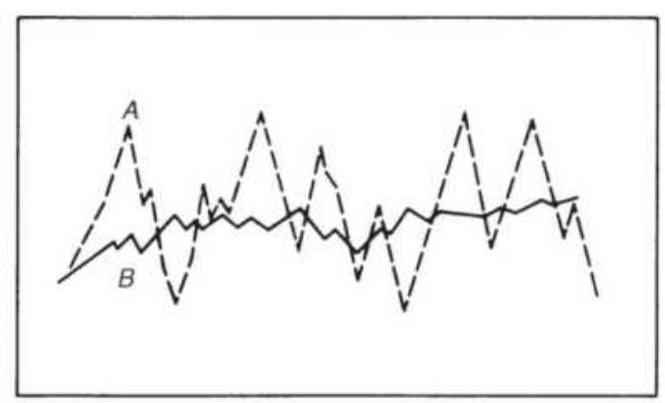

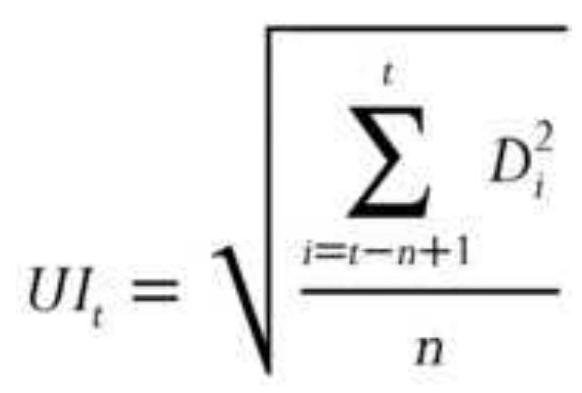

MEASURING NOISE 测量噪声声

MATURING MARKETS AND 成熟市场与与

GLOBALIZATION 全球化化

BACKGROUND MATERIAL 背景材料材料

SYSTEM DEVELOPMENT GUIDELINES``` 系统开发指南指南

OBJECTIVES OF THIS BOOK 本书目标目标

PROFILE OF A TRADING SYSTEM 交易系统概要概要

A WORD ABOUT THE NOTATION USED IN 关于所使用的符号的说明说明

THIS BOOK 这本书书

A FINAL COMMENT 最后的评论评论

CHAPTER 2: Basic Concepts and Calculations 第 2 章:基本概念和计算计算

A BRIEF WORD ABOUT DATA 关于数据的简短说明说明

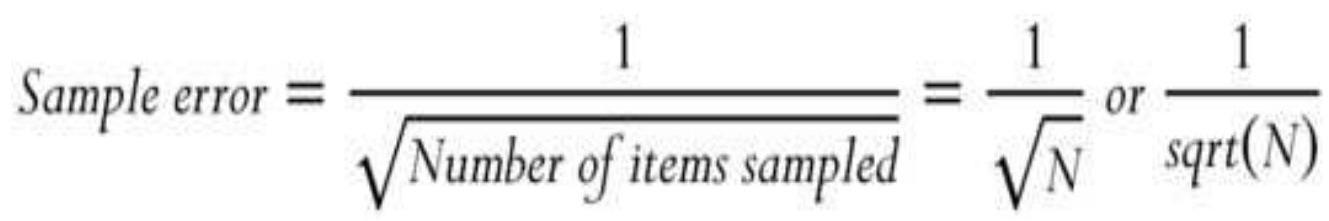

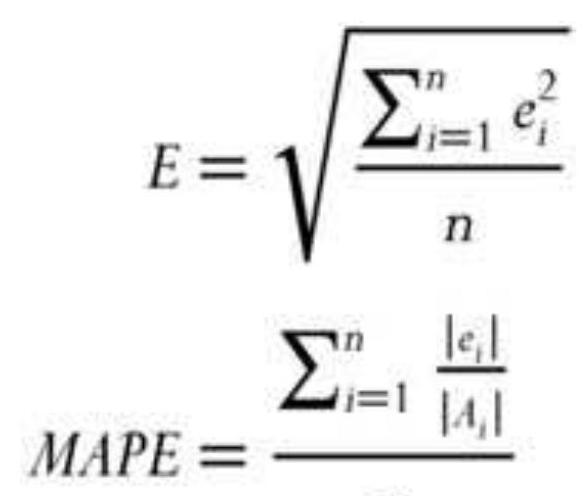

SIMPLE MEASURES OF ERROR 简单误差测量法法

ON AVERAGE 平均来说来说

PRICE DISTRIBUTION 价格分布布

MOMENTS OF THE DISTRIBUTION: MEAN, 分布的矩:均值,,

VARIANCE, SKEWNESS, AND KURTOSIS 方差、偏斜度和峰度度

CHOOSING BETWEEN FREQUENCY 选择频率之间之间

DISTRIBUTION AND STANDARD 分配与标准标准

DEVIATION 偏差差

MEASURING SIMILARITY 测量相似性性

STANDARDIZING RISK AND RETURN 标准化风险和回报报

THE INDEX 索引引

AN OVERVIEW OF PROBABILITY 概率概述述

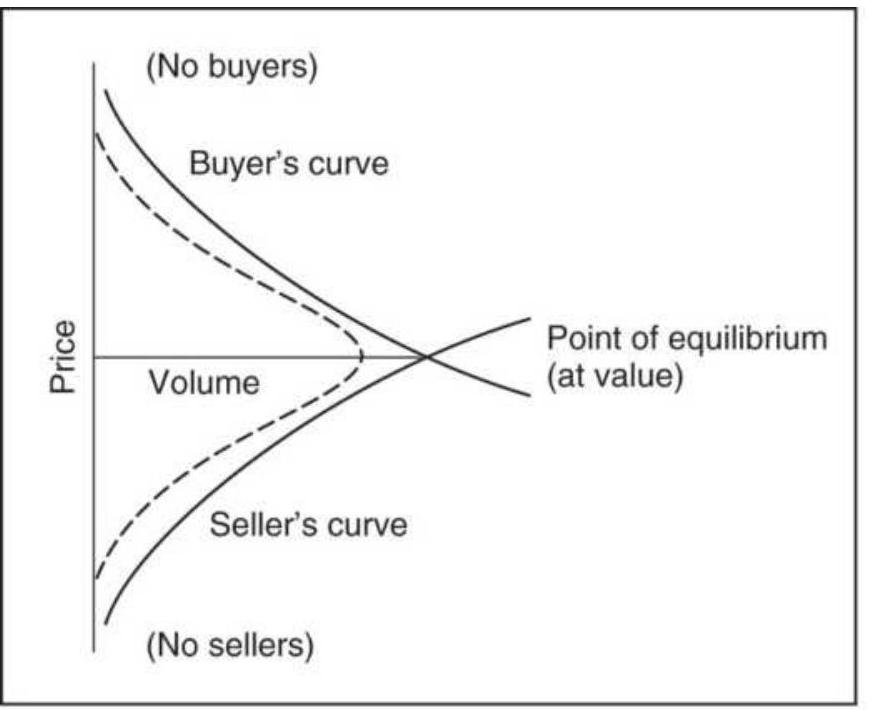

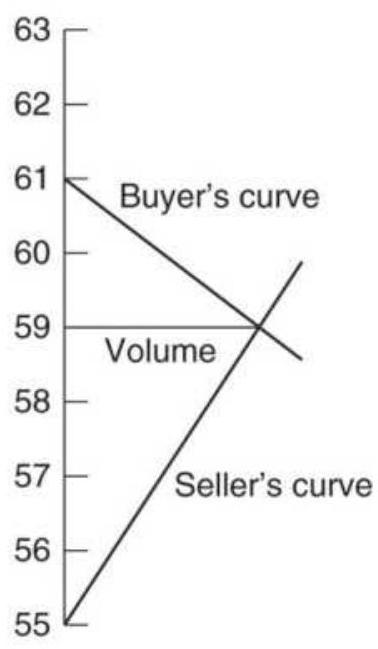

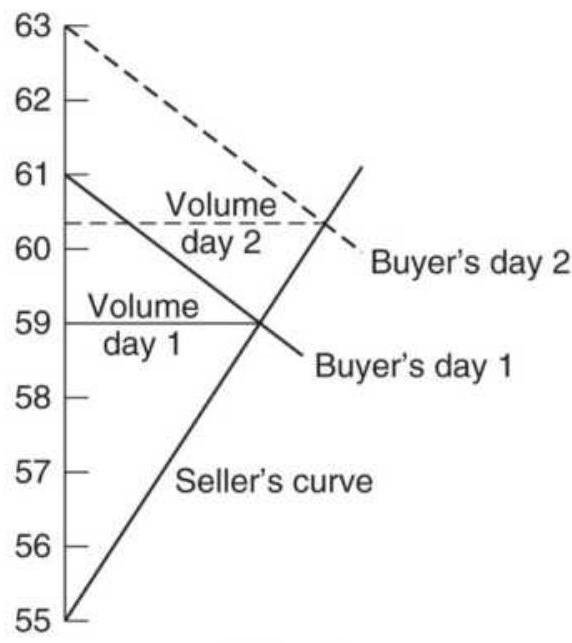

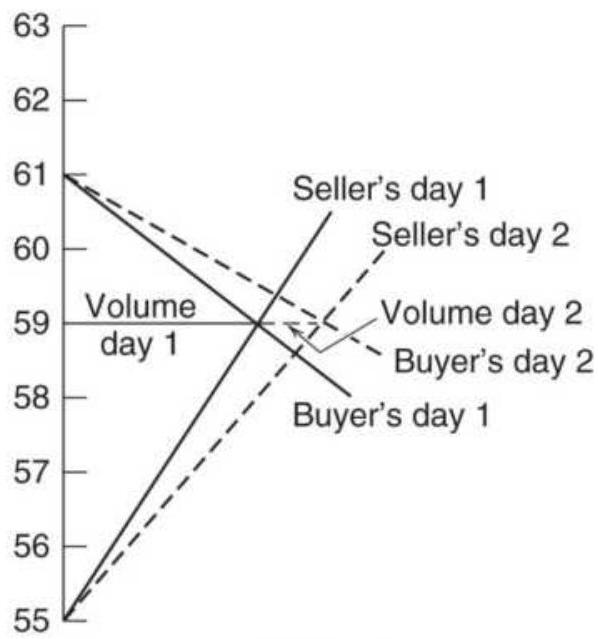

SUPPLY AND DEMAND 供求求

NOTES 笔记记

CHAPTER 3: Charting 第 3 章:制图图

FINDING CONSISTENT PATTERNS 寻找一致的模式模式

WHAT CAUSES THE MAJOR PRICE MOVES 是什么导致了主要价格波动动

AND TRENDS? 和趋势??

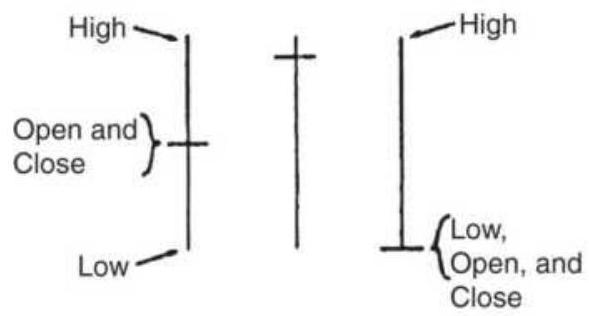

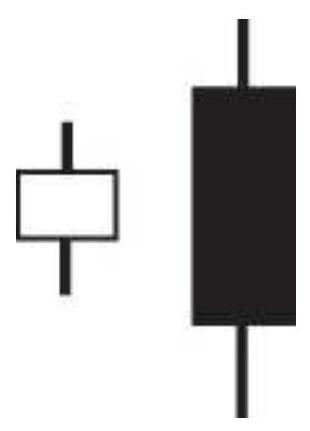

THE BAR CHART AND ITS 柱状图及其其

INTERPRETATION BY CHARLES DOW

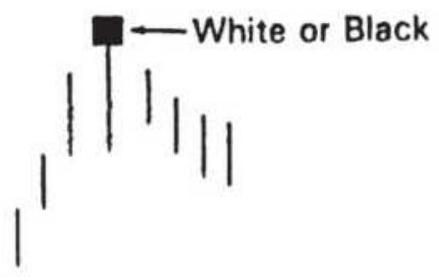

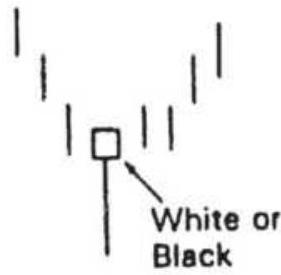

CHART FORMATIONS

TRENDLINES

ONE-DAY PATTERNS

CONTINUATION PATTERNS

BASIC CONCEPTS IN CHART TRADING

ACCUMULATION AND DISTRIBUTION:

BOTTOMS AND TOPS

EPISODIC PATTERNS

PRICE OBJECTIVES FOR BAR CHARTING

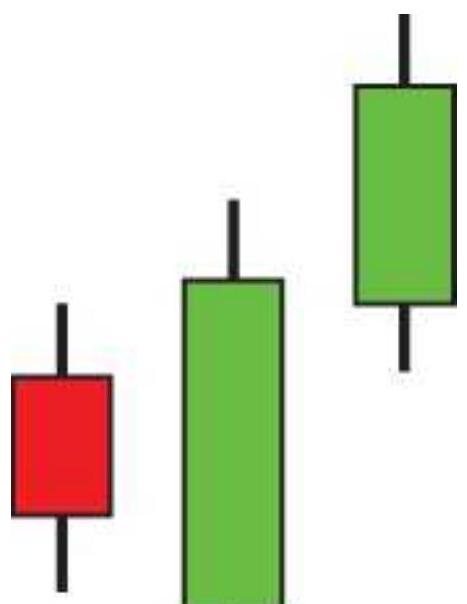

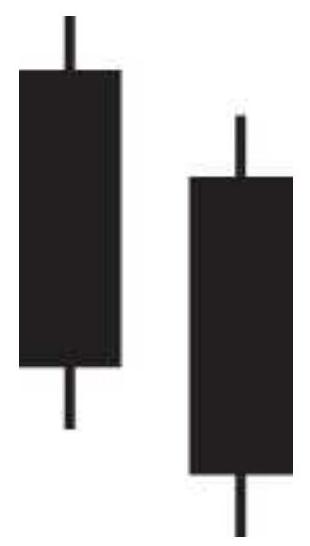

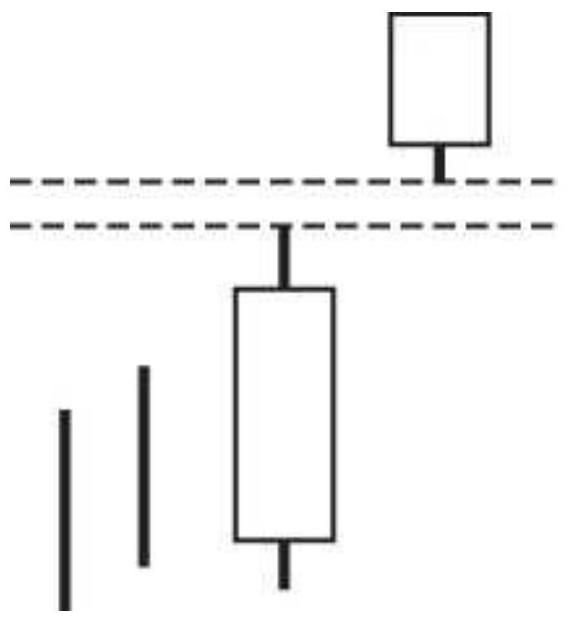

IMPLIED STRATEGIES IN CANDLESTICK

CHARTS

PRACTICAL USE OF THE BAR CHART

EVOLUTION IN PRICE PATTERNS

NOTES

CHAPTER 4: Charting Systems

DUNNIGAN AND THE THRUST METHOD

NOFRI'S CONGESTION-PHASE SYSTEM

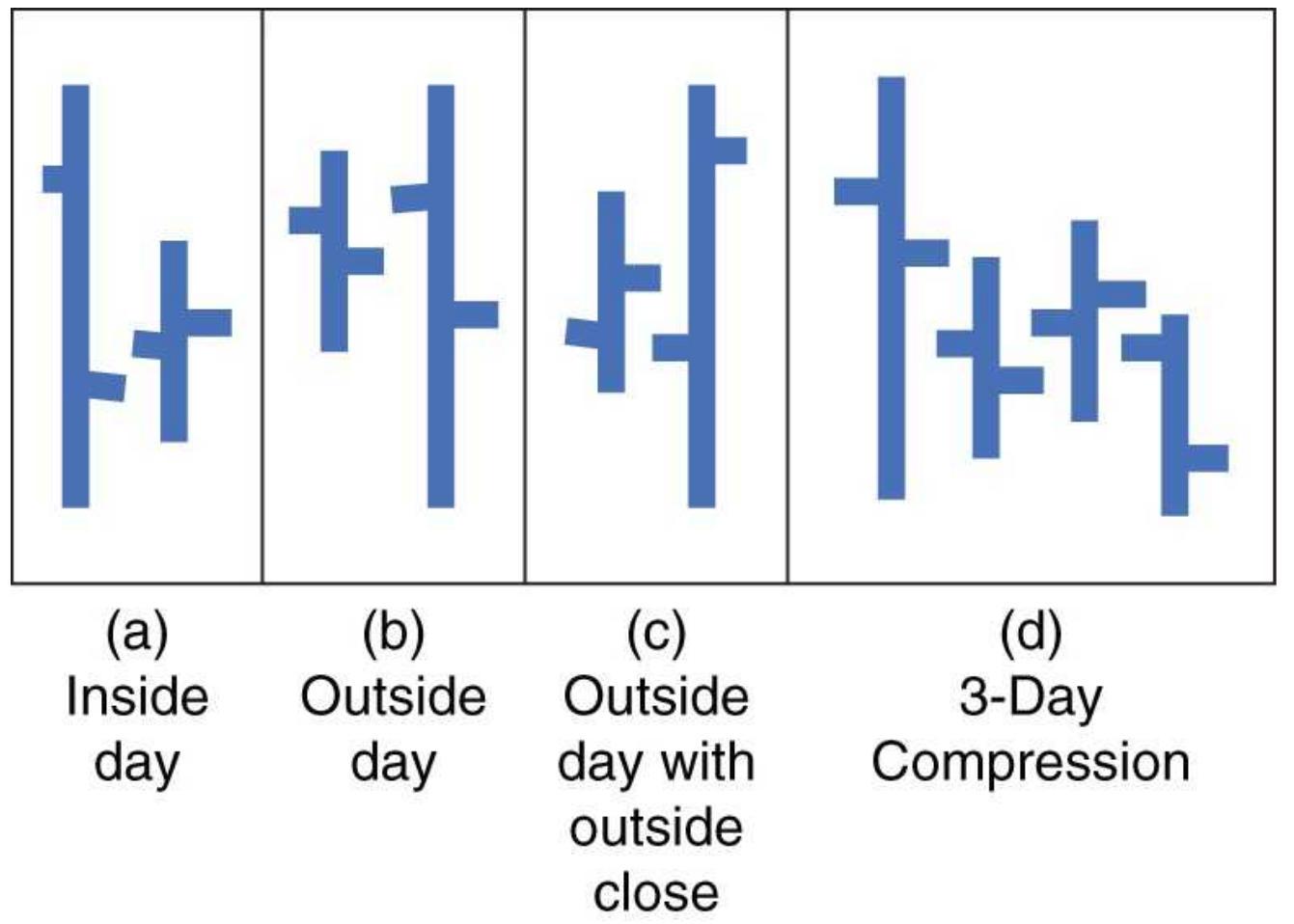

OUTSIDE DAYS AND INSIDE DAYS

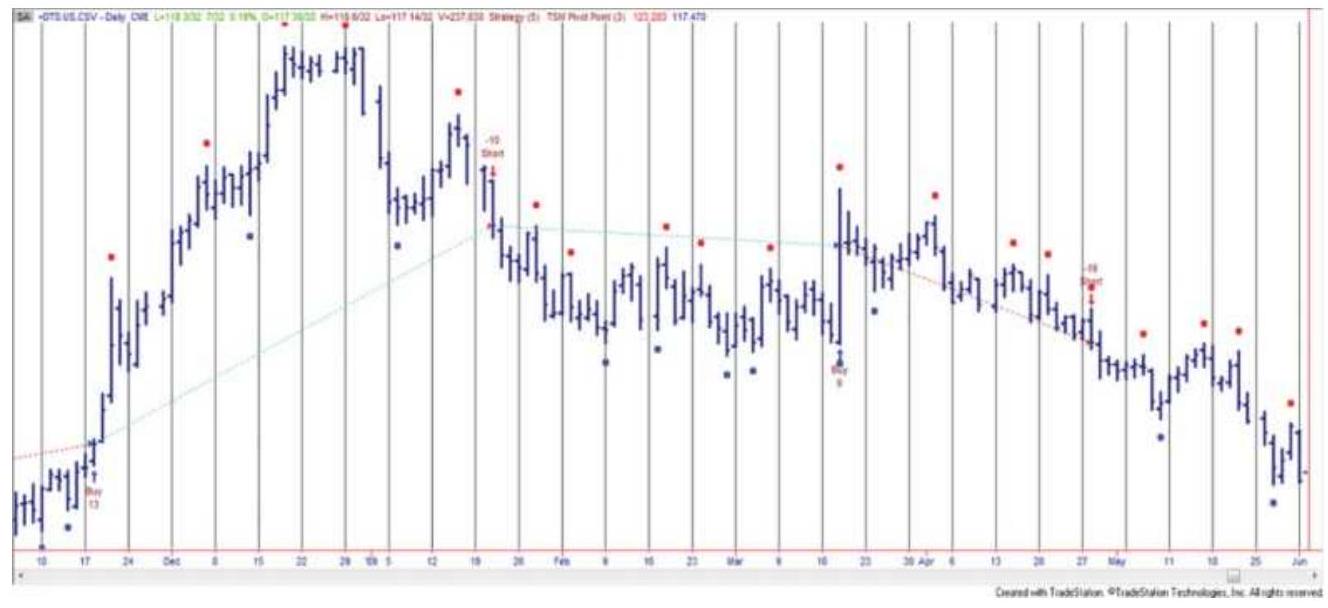

PIVOT POINTS

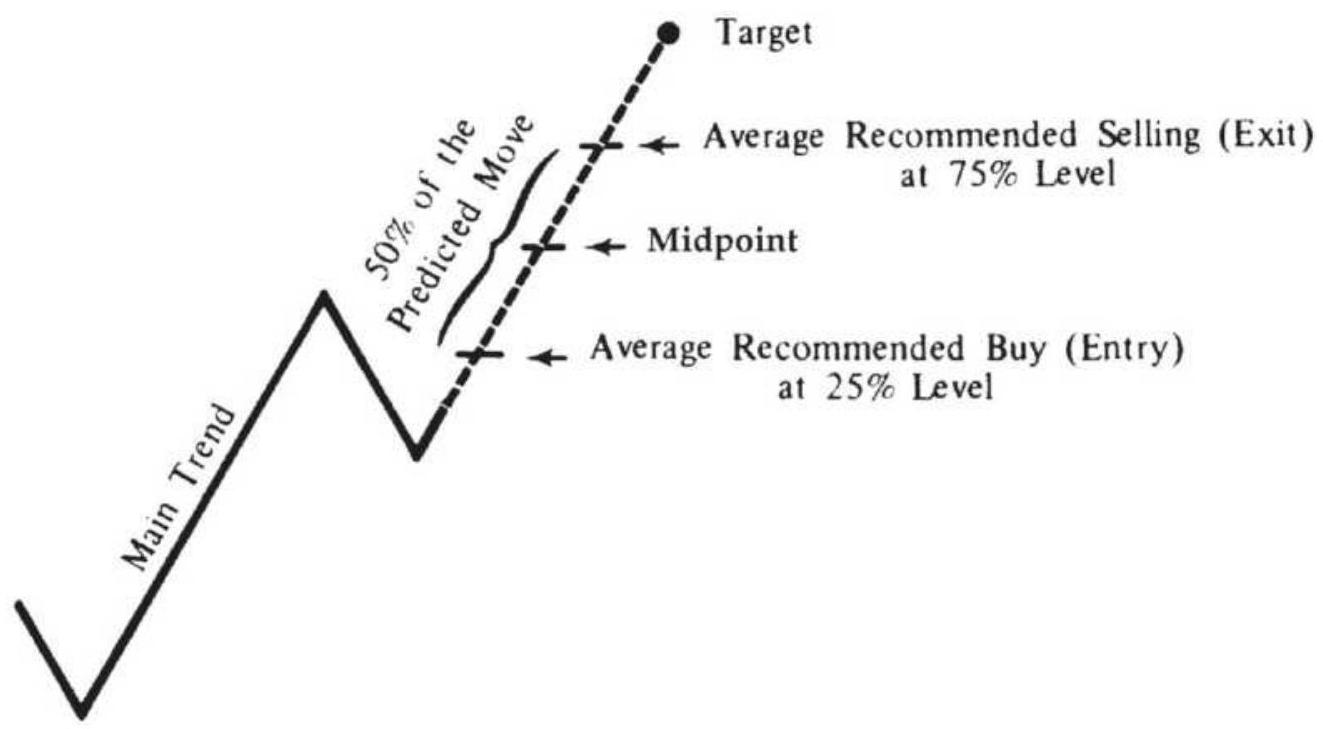

ACTION AND REACTION

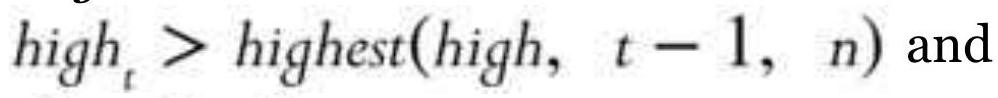

PROGRAMMING THE CHANNEL BREAKOUT

MOVING CHANNELS

COMMODITY CHANNEL INDEX

WYCKOFF'S COMBINED TECHNIQUES

COMPLEX PATTERNS

COMPUTER RECOGNITION OF CHART

PATTERNS

NOTES

CHAPTER 5: Event-Driven Trends

SWING TRADING

POINT-AND-FIGURE CHARTING

THE \(N\)-DAY BREAKOUT

NOTES

CHAPTER 6: Regression Analysis

COMPONENTS OF A TIME SERIES

CHARACTERISTICS OF THE PRICE DATA

LINEAR REGRESSION

LINEAR CORRELATION

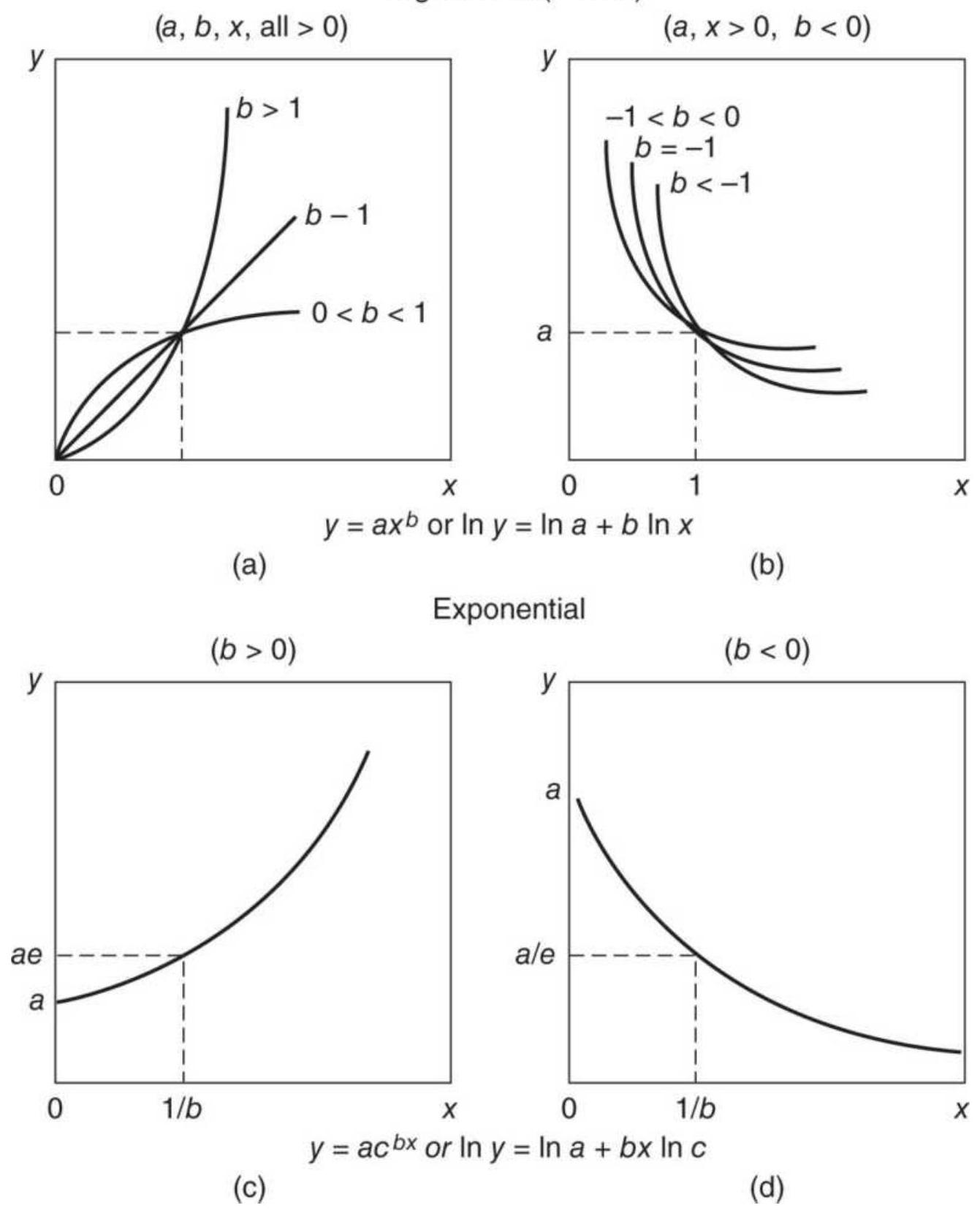

NONLINEAR APPROXIMATIONS FOR TWO

VARIABLES

TRANSFORMING NONLINEAR TO LINEAR

MULTIVARIATE APPROXIMATIONS

ARIMA

BASIC TRADING SIGNALS USING A LINEAR

REGRESSION MODEL

MEASURING MARKET STRENGTH

NOTES

CHAPTER 7: Time-Based Trend Calculations

FORECASTING AND FOLLOWING

PRICE CHANGE OVER TIME```

THE MOVING AVERAGE

THE MOVING MEDIAN

GEOMETRIC MOVING AVERAGE

ACCUMULATIVE AVERAGE

DROP-OFF EFFECT

EXPONENTIAL SMOOTHING

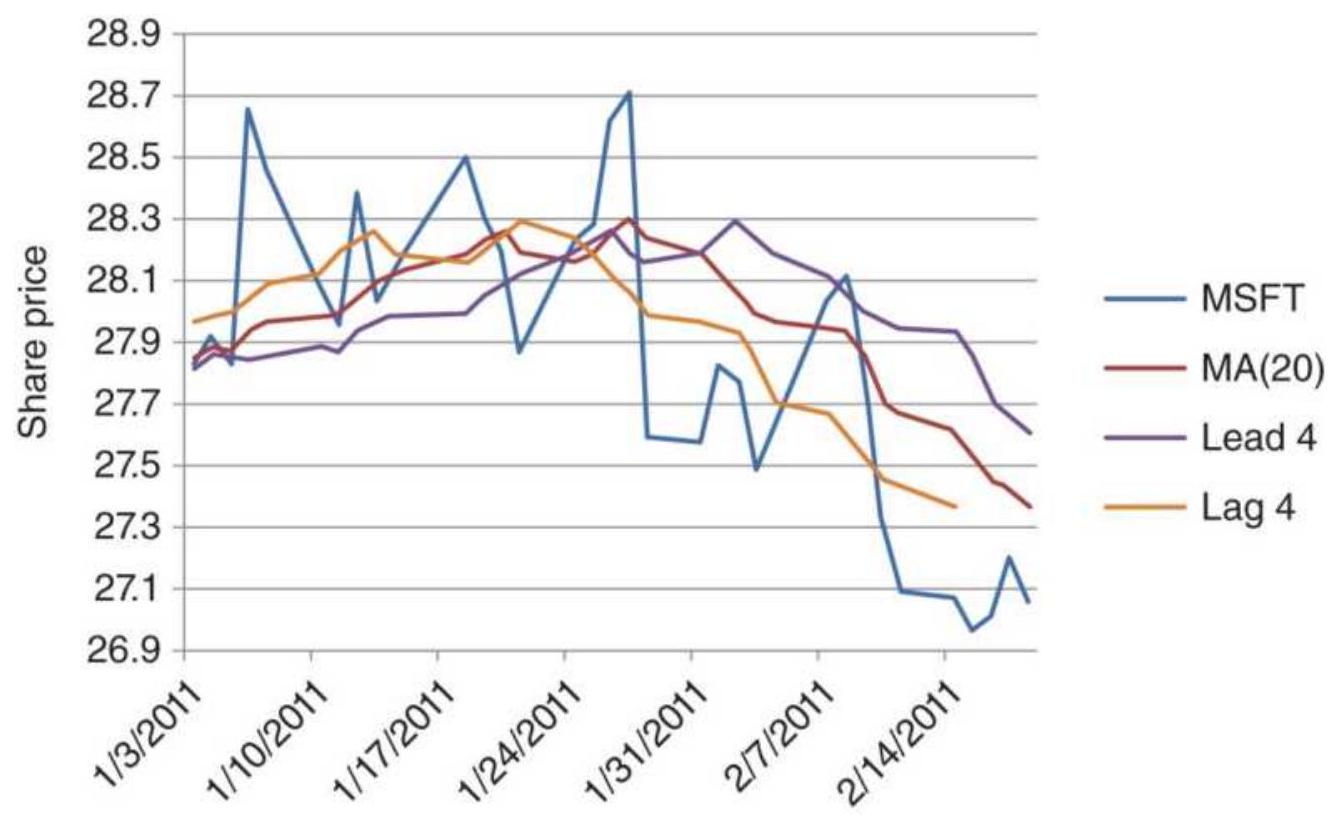

PLOTTING LAGS AND LEADS

NOTES

CHAPTER 8: Trend Systems

WHY TREND SYSTEMS WORK

BASIC BUY AND SELL SIGNALS

BANDS AND CHANNELS

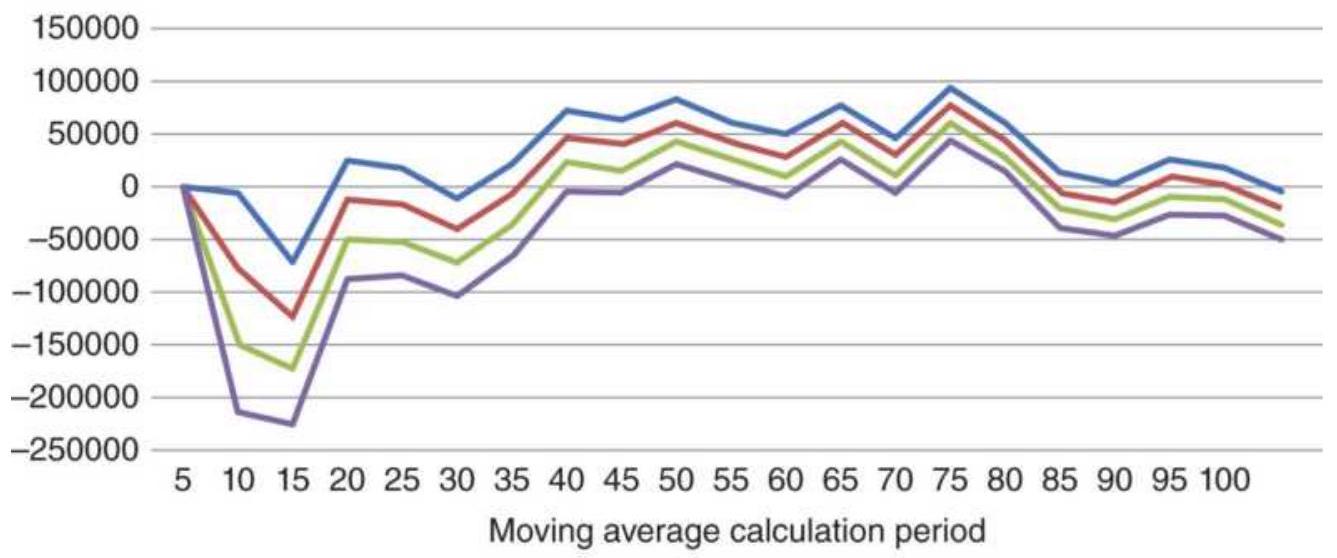

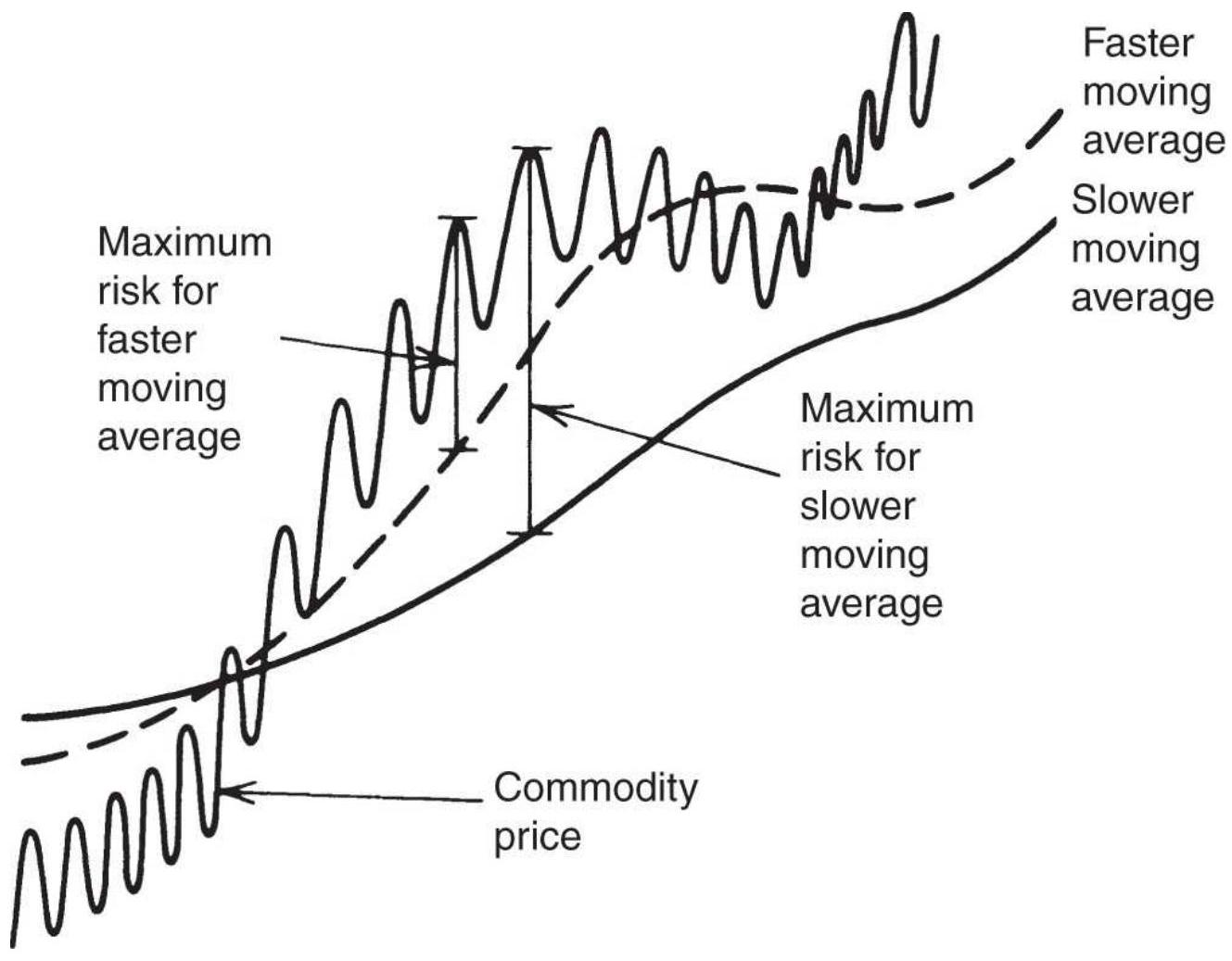

CHOOSING THE CALCULATION PERIOD

FOR THE TREND

A FEW CLASSIC SINGLE-TREND SYSTEMS

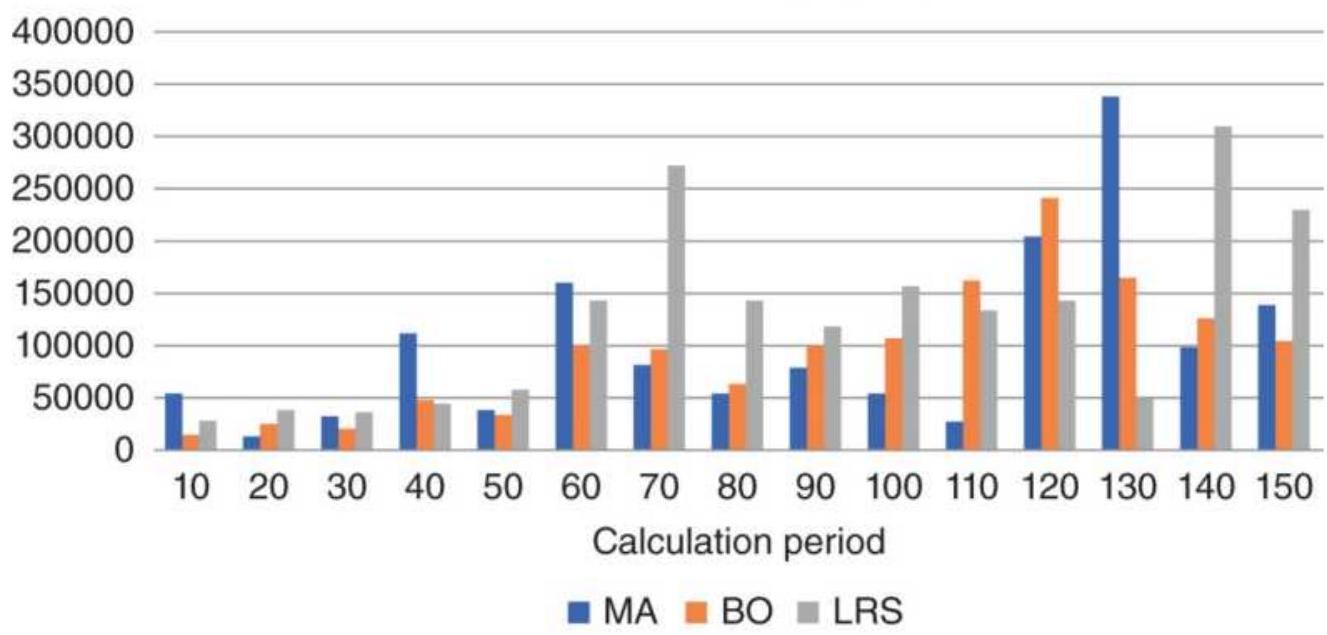

COMPARISON OF SINGLE-TREND SYSTEMS

TECHNIQUES USING TWO TRENDLINES

THREE TRENDS

COMPREHENSIVE STUDIES

SELECTING THE TREND SPEED TO FIT THE

PROBLEM

MOVING AVERAGE SEQUENCES: SIGNAL

PROGRESSION

EARLY EXITS FROM A TREND

PROJECTING MOVING AVERAGE

CROSSOVERS

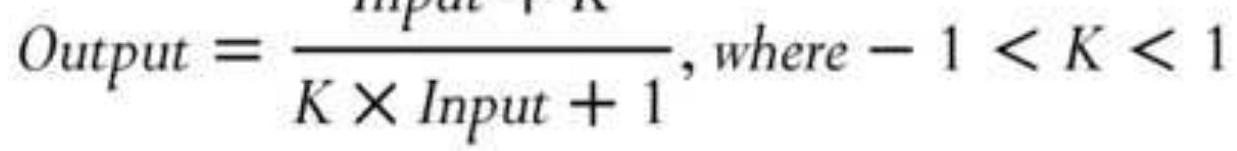

EARLY IDENTIFICATION OF A TREND 趋势的早期识别别

CHANGE 改变改变

NOTES 笔记记

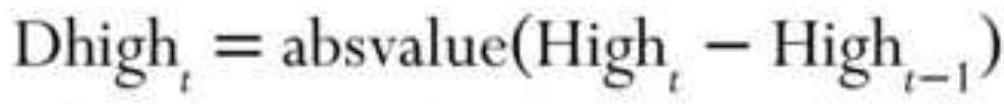

CHAPTER 9: Momentum and Oscillators 第九章:动量与振荡器器

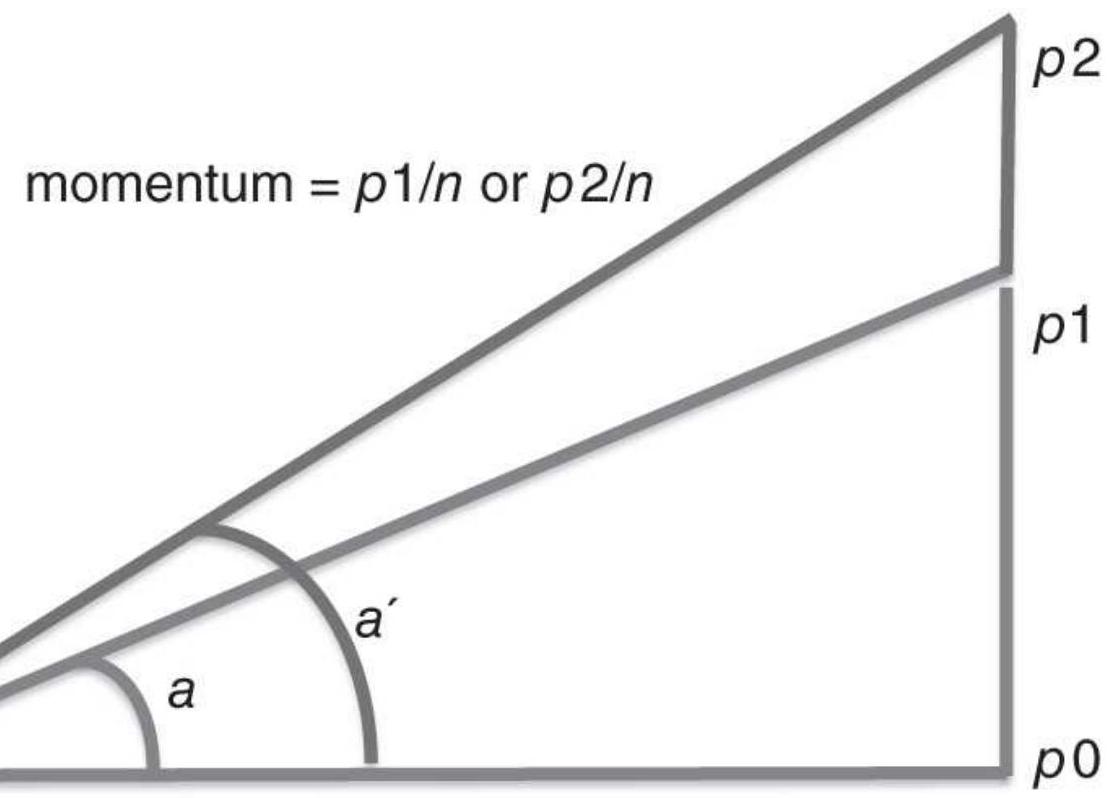

MOMENTUM 动量量

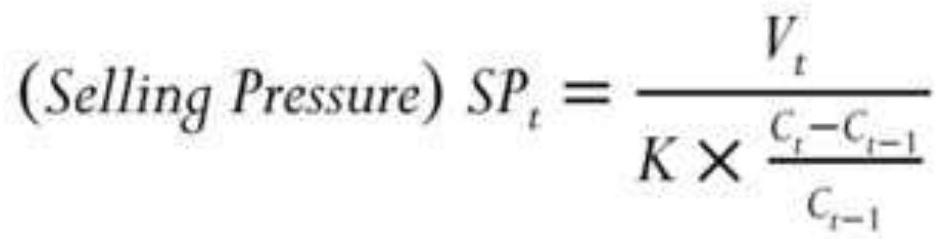

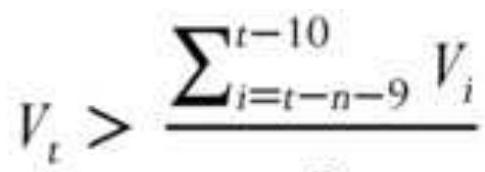

ADDING VOLUME TO MOMENTUM 添加动量到势头头

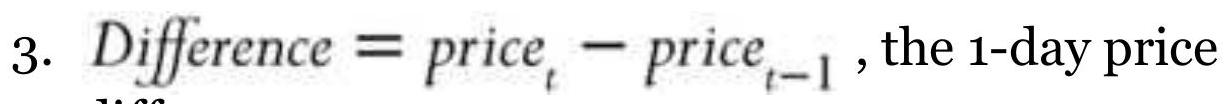

DIVERGENCE INDEX 发散指数指数

VISUALIZING MOMENTUM 可视化动量量

OSCILLATORS 振荡器器

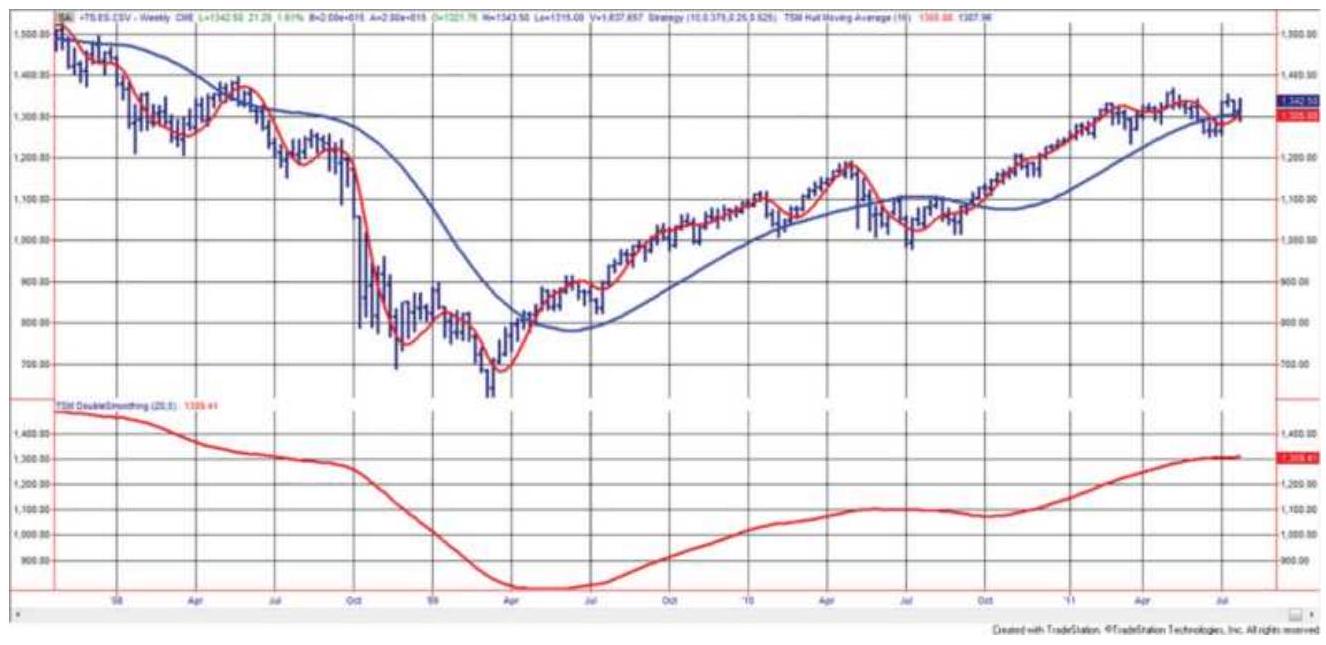

DOUBLE-SMOOTHED MOMENTUM 双重平滑动量量

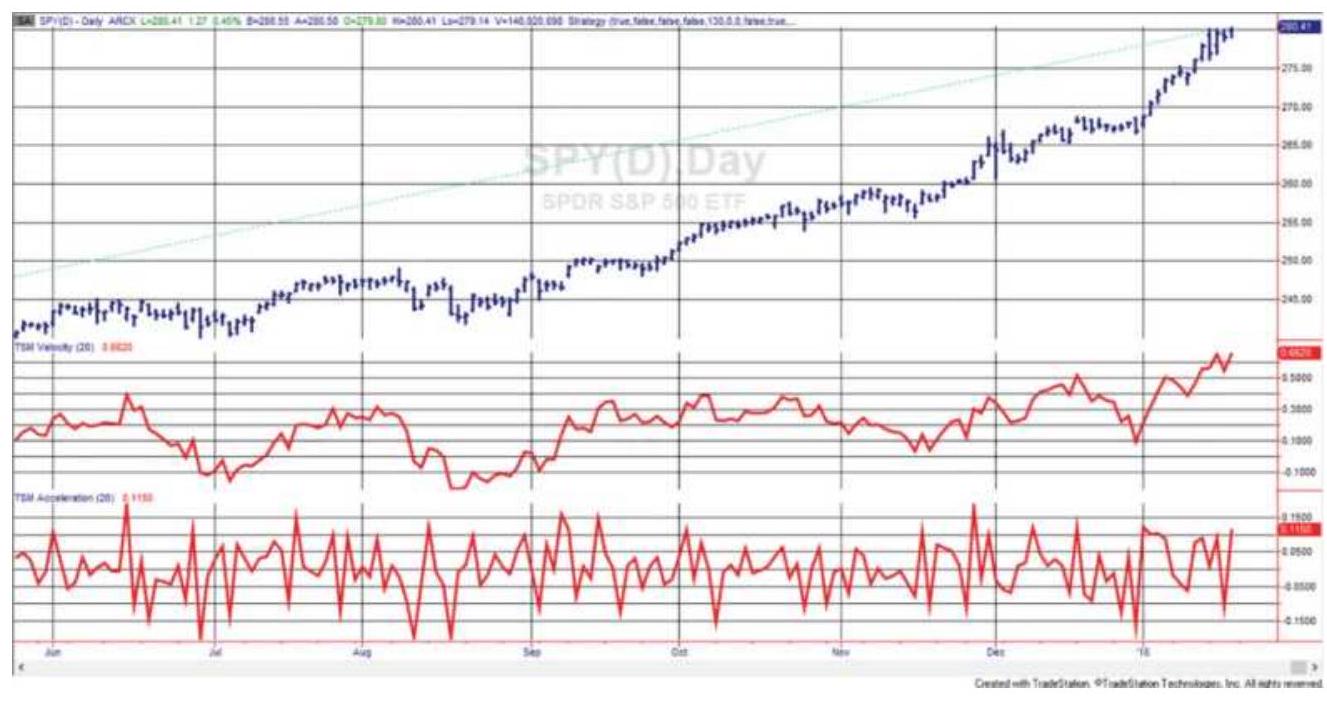

VELOCITY AND ACCELERATION 速度和加速度速度

HYBRID MOMENTUM TECHNIQUES 混合动量技术技术

MOMENTUM DIVERGENCE 动量背离离

SOME FINAL COMMENTS ON MOMENTUM 关于动量的一些最后评论评论

NOTES 笔记记

CHAPTER 10: Seasonality and Calendar Patterns 第 10 章:季节性和日历模式模式

SEASONALITY NEVER DISAPPEARS 季节性从未消失失

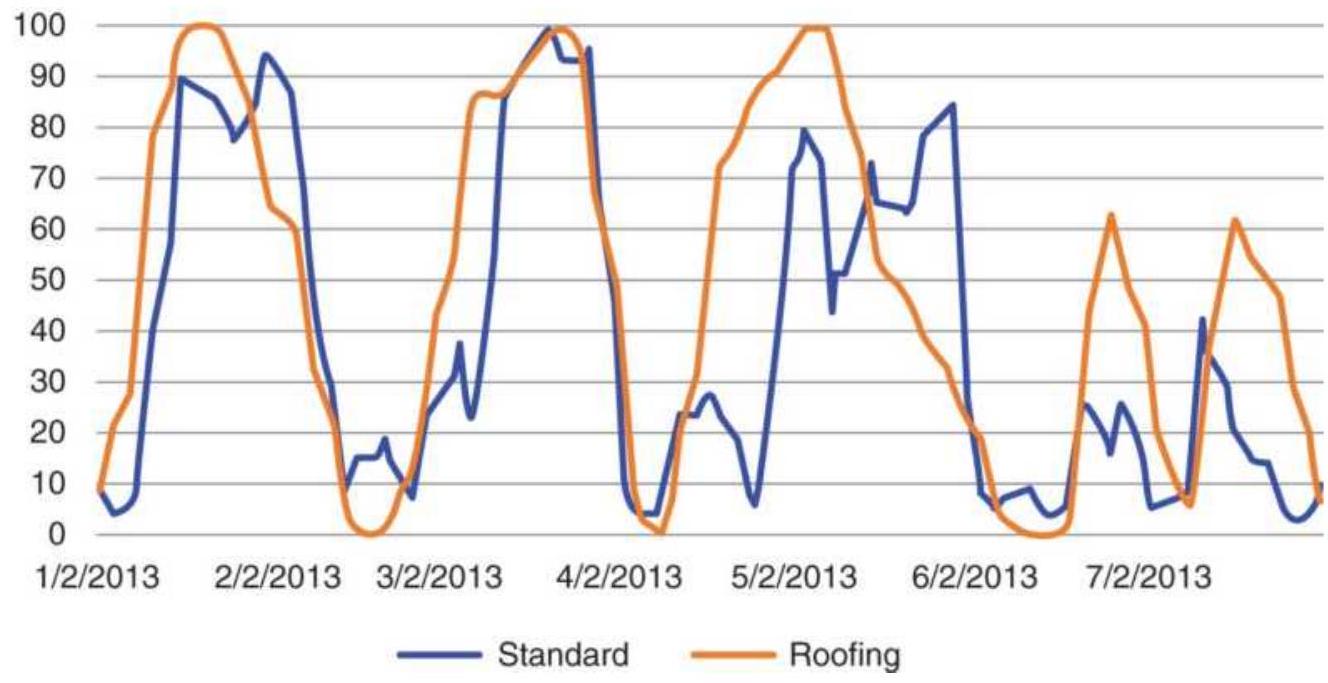

THE SEASONAL PATTERN 季节模式模式

POPULAR METHODS FOR CALCULATING 计算的流行方法方法

SEASONALITY 季节性性

CLASSIC METHODS FOR FINDING 经典查找方法方法

SEASONALITY 季节性性

WEATHER SENSITIVITY 天气敏感性性

IDENTIFYING SEASONAL TRADES 识别季节性交易交易

SEASONALITY AND THE STOCK MARKET 季节性与股市市

COMMON SENSE AND SEASONALITY 常识与季节性性

NOTES 笔记记

CHAPTER 11: Cycle Analysis 第 11 章:循环分析分析

CYCLE BASICS 循环基础知识知识

UNCOVERING THE CYCLE 揭开循环循环

MAXIMUM ENTROPY 最大熵熵

SHORT CYCLE INDICATOR 短周期指示器器

PHASING 相位调整调整

NOTES 笔记记

CHAPTER 12: Volume, Open Interest, and Breadth 第十二章:成交量、未平仓合约和市场广度度

FUTURES VOLUME AND OPEN INTEREST 期货交易量和未平仓合约数数

EXTENDED HOURS AND 24-HOUR 延长营业时间和 24 小时小时

TRADING 交易交易

VARIATIONS FROM THE NORMAL 偏离正常值值

PATTERNS 图案案

STANDARD INTERPRETATION 标准解释解释

VOLUME INDICATORS 音量指示器器

BREADTH INDICATORS 广度指标指标

IS ONE VOLUME OR BREADTH INDICATOR BETTER THAN ANOTHER? 是否有一种交易量或广度指标优于其他指标??

MORE TRADING METHODS USING 更多交易方法方法

VOLUME AND BREADTH 成交量和广度度

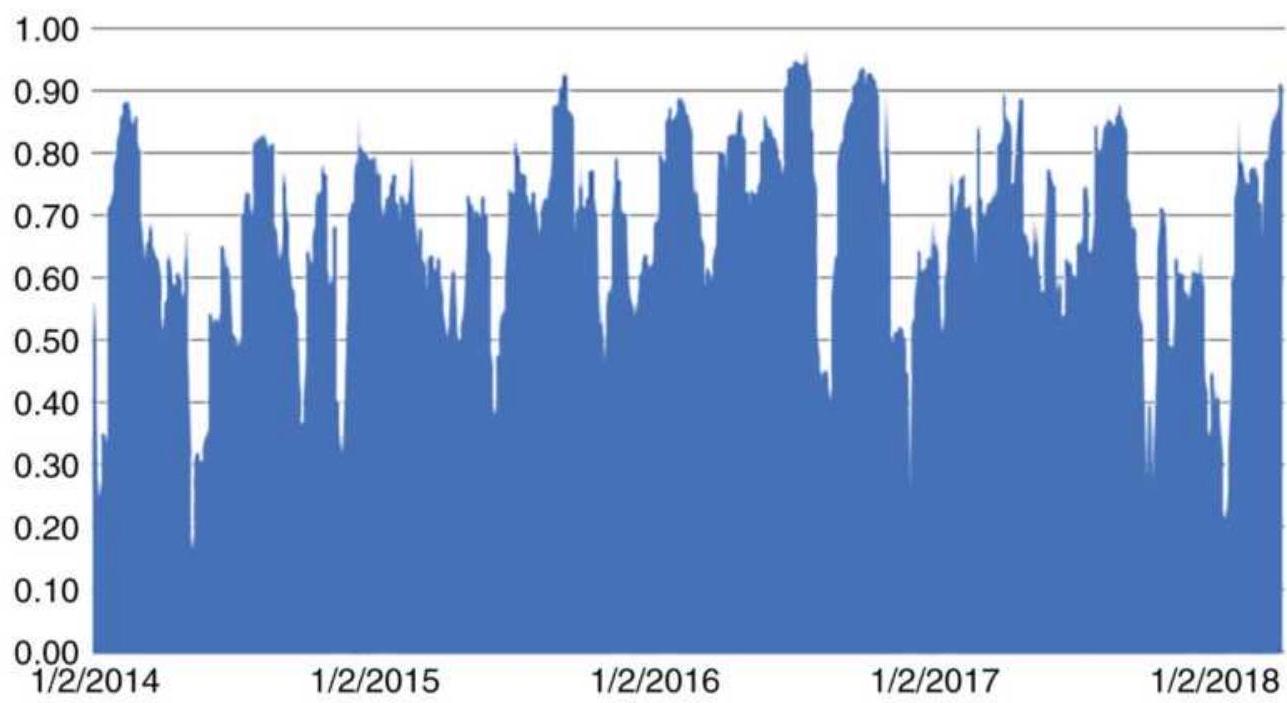

AN INTEGRATED PROBABILITY MODEL 集成概率模型模型

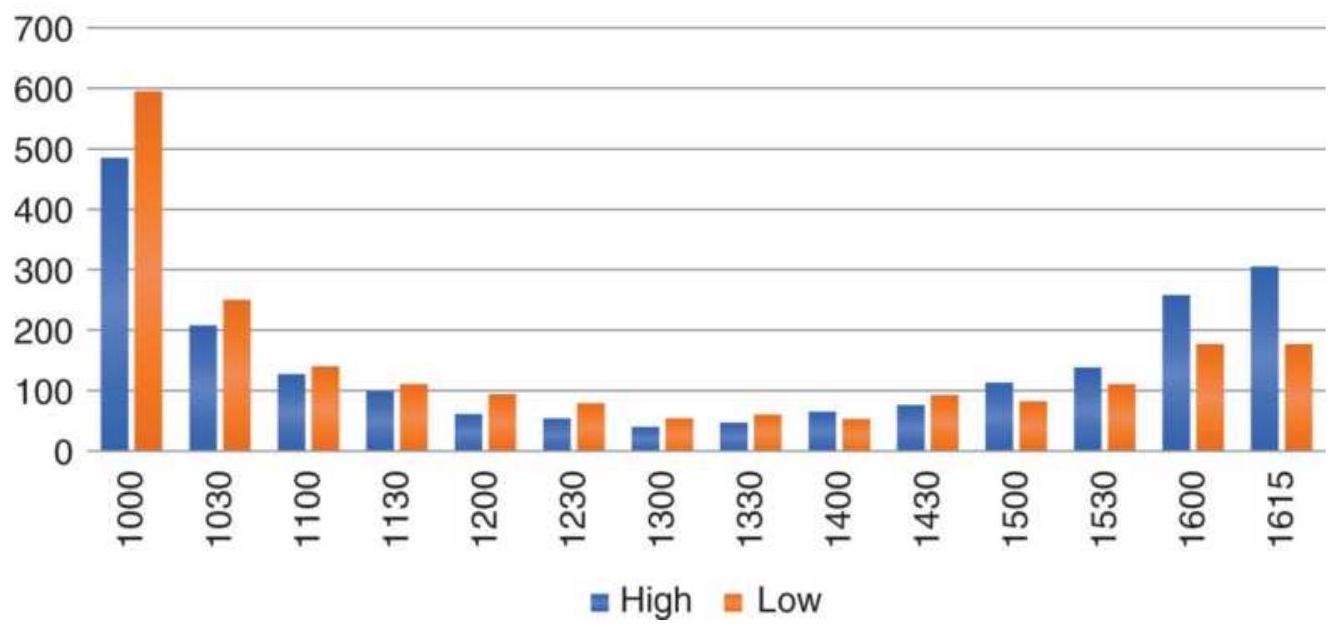

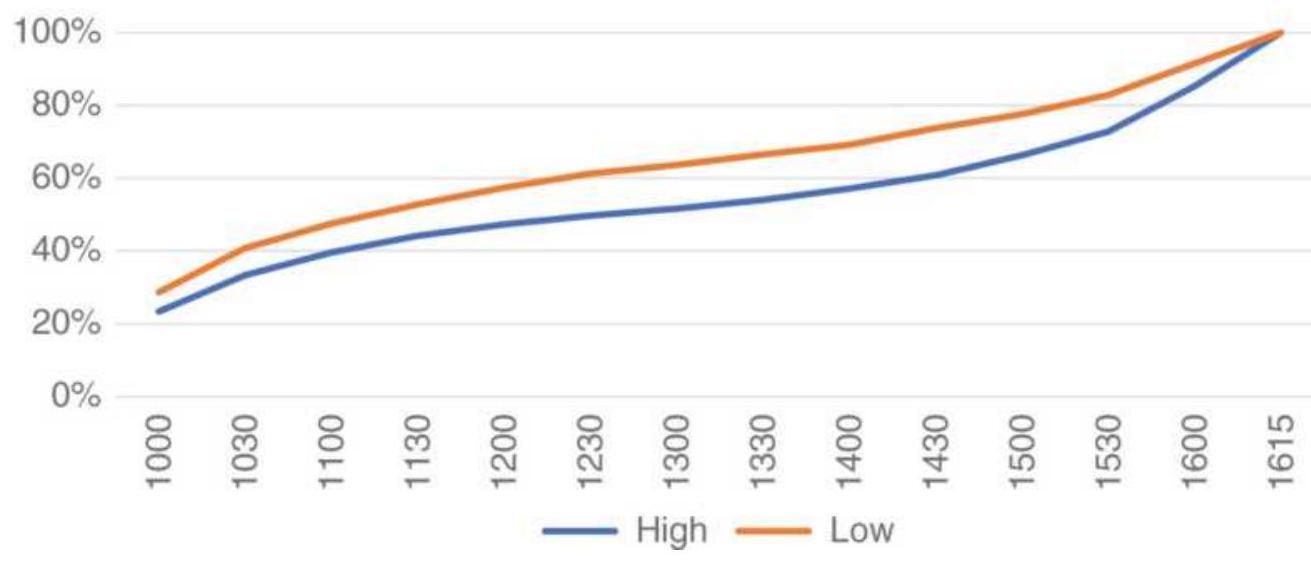

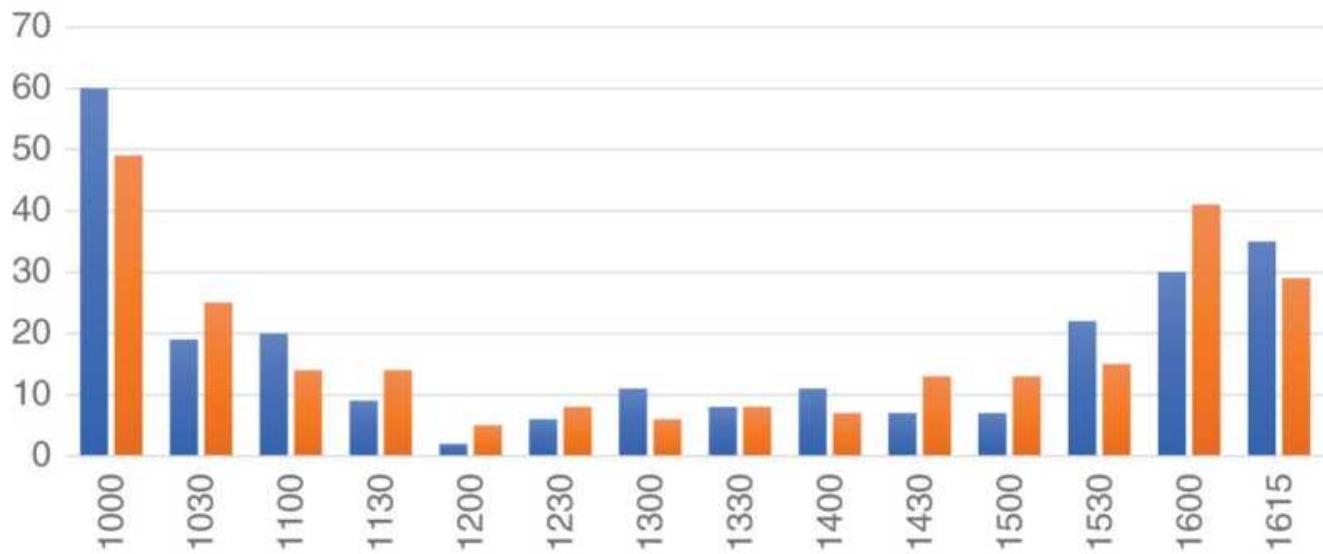

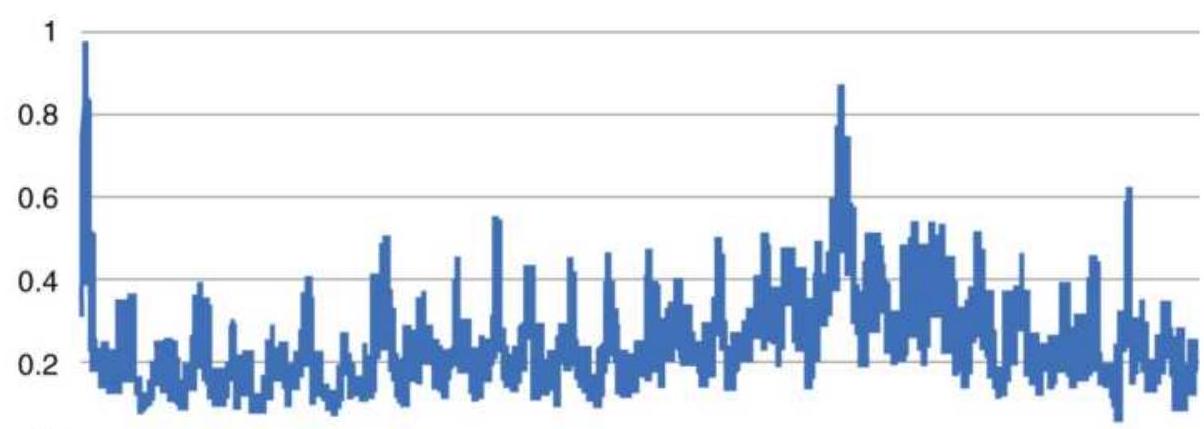

INTRADAY VOLUME PATTERNS 当日成交量模式模式

FILTERING LOW VOLUME 过滤低音量量

MARKET FACILITATION INDEX 市场促进指数指数

NOTES 笔记记

CHAPTER 13: Spreads and Arbitrage 第十三章:价差与套利套利

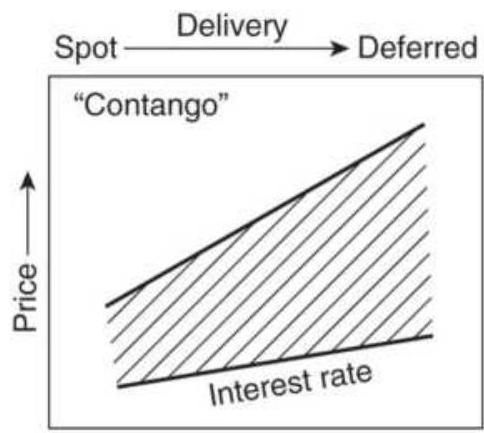

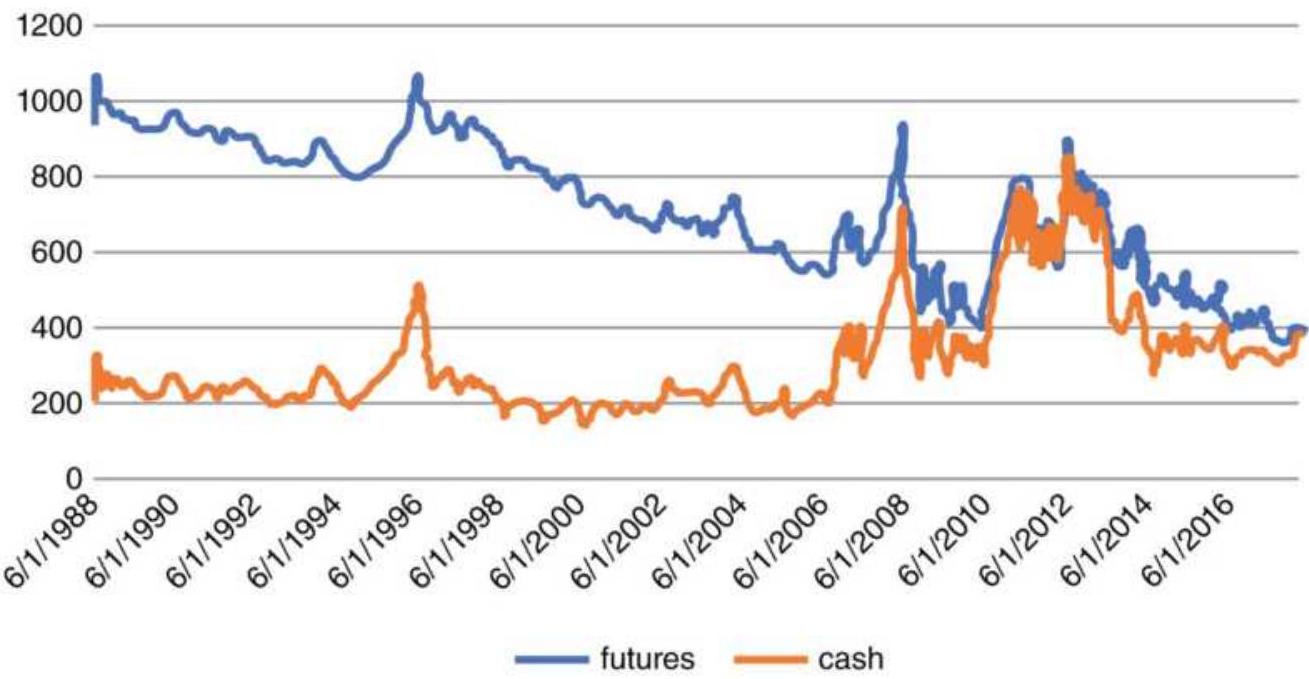

DYNAMICS OF FUTURES INTRAMARKET SPREADS 期货市场内部价差动态动态

CARRYING CHARGES SPREADS IN STOCKS 股票持有成本价差差

SPREAD AND ARBITRAGE RELATIONSHIPS RISK REDUCTION IN SPREADS ARBITRAGE 价差和套利关系在价差套利中的风险降低降低

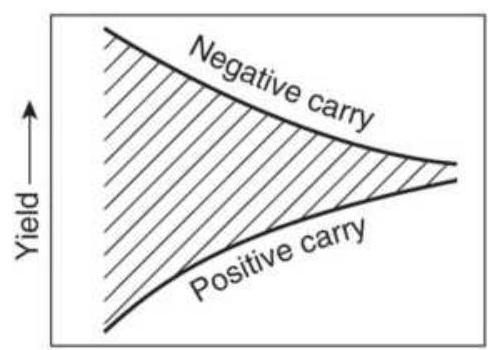

THE CARRY TRADE 套息交易交易

IMPLIED VERSUS HISTORIC VOLATILITY 隐含波动率与历史波动率率

CHANGING SPREAD RELATIONSHIPS 变动的点差关系关系

INTERMARKET SPREADS 跨市场价差差

NOTES 笔记记

CHAPTER 14: Behavioral Techniques 第 14 章:行为技术技术

MEASURING THE NEWS 衡量新闻新闻

EVENT TRADING 事件交易交易

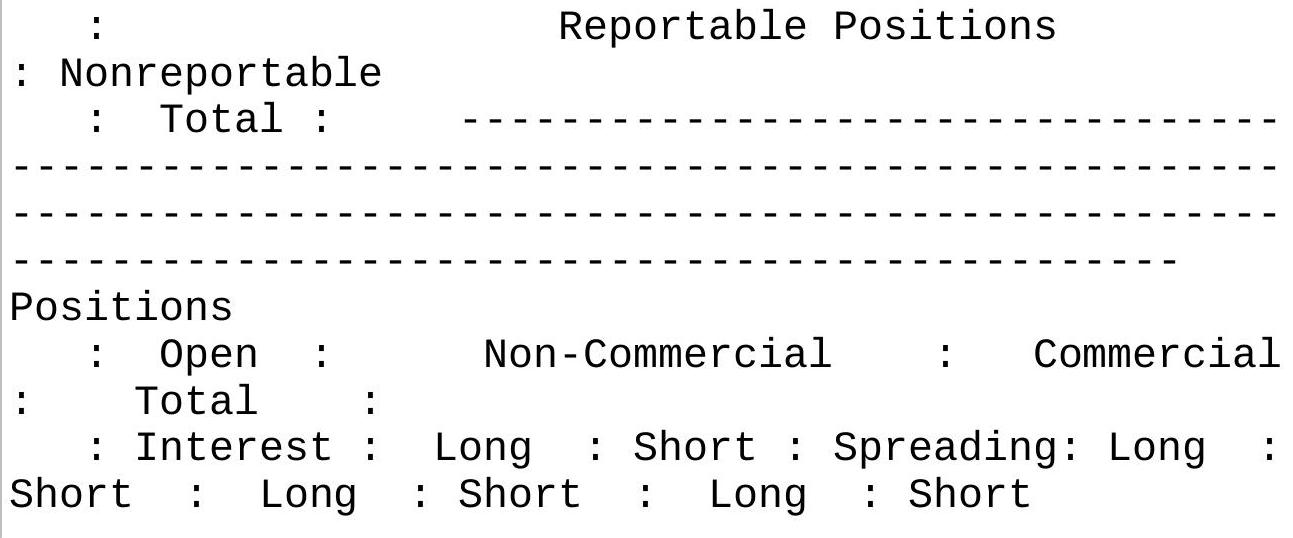

COMMITMENT OF TRADERS REPORT 交易者承诺报告报告

OPINION AND CONTRARY OPINION 意见与反对意见意见

FIBONACCI AND HUMAN BEHAVIOR 斐波那契与人类行为行为

ELLIOTT'S WAVE PRINCIPLE 艾略特波浪理论理论

PRICE TARGET CONSTRUCTIONS USING 价格目标构造使用使用

THE FIBONACCI RATIO 斐波那契比率率

FISCHER'S GOLDEN SECTION COMPASS 费希尔的黄金分割比例圆规规

SYSTEM 系统系统

W. D. GANN: TIME AND SPACE W.D.甘恩:时间与空间空间

FINANCIAL ASTROLOGY 金融占星术术

NOTES 笔记记

CHAPTER 15: Short-Term Patterns 第 15 章:短期模式模式

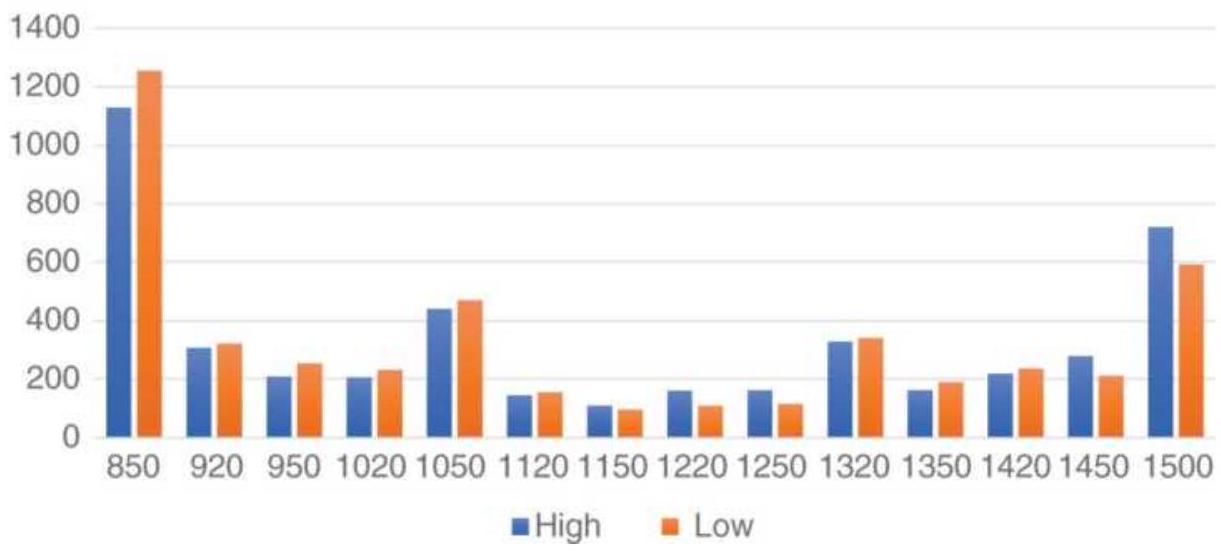

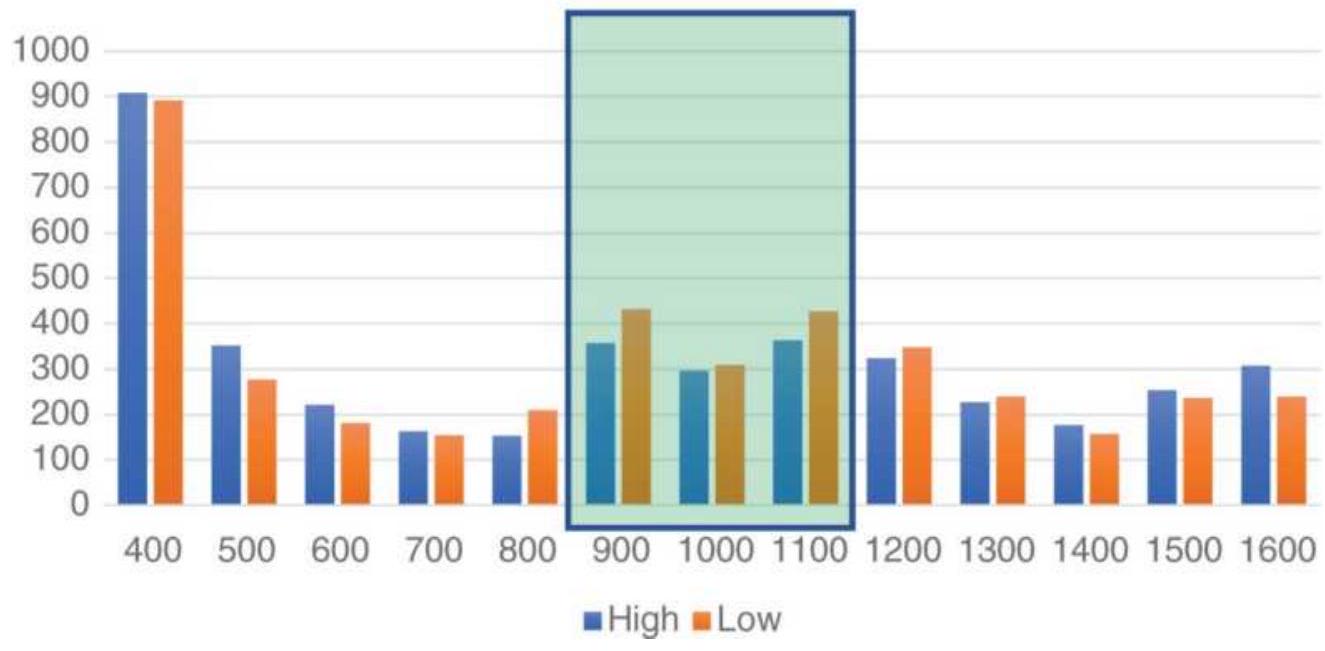

PROJECTING DAILY HIGHS AND LOWS 预测每日高低温温

TIME OF DAY 一天中的时间时间

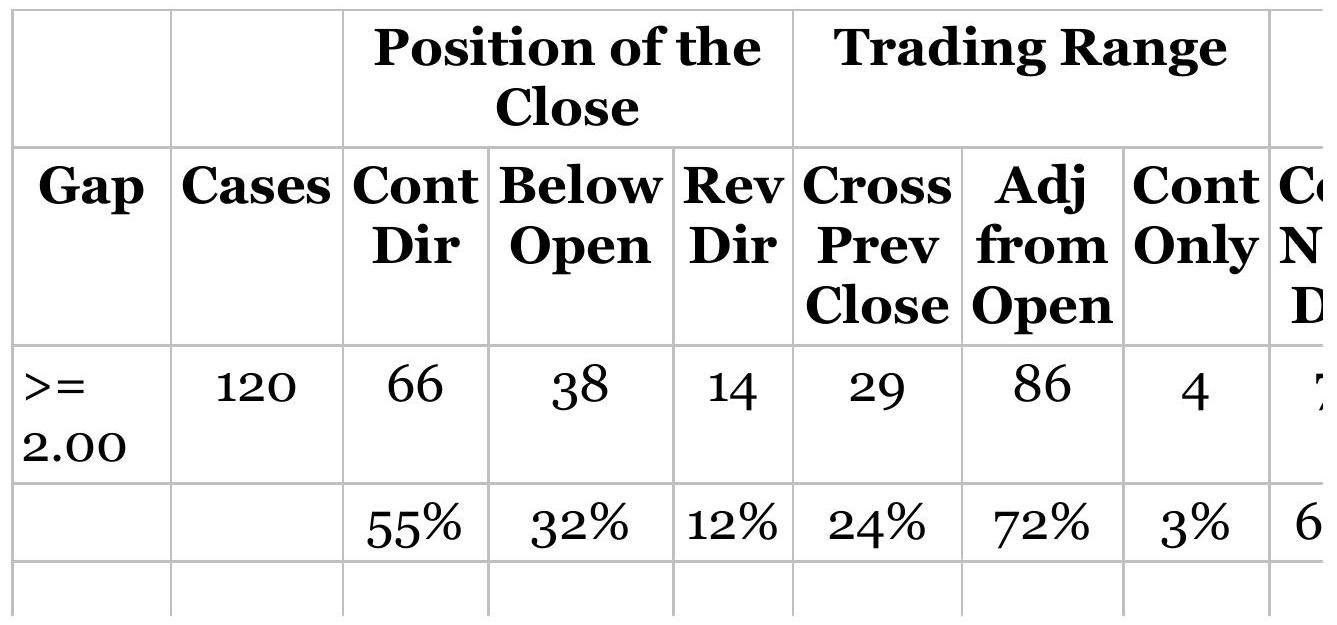

OPENING GAPS 打开间隙隙

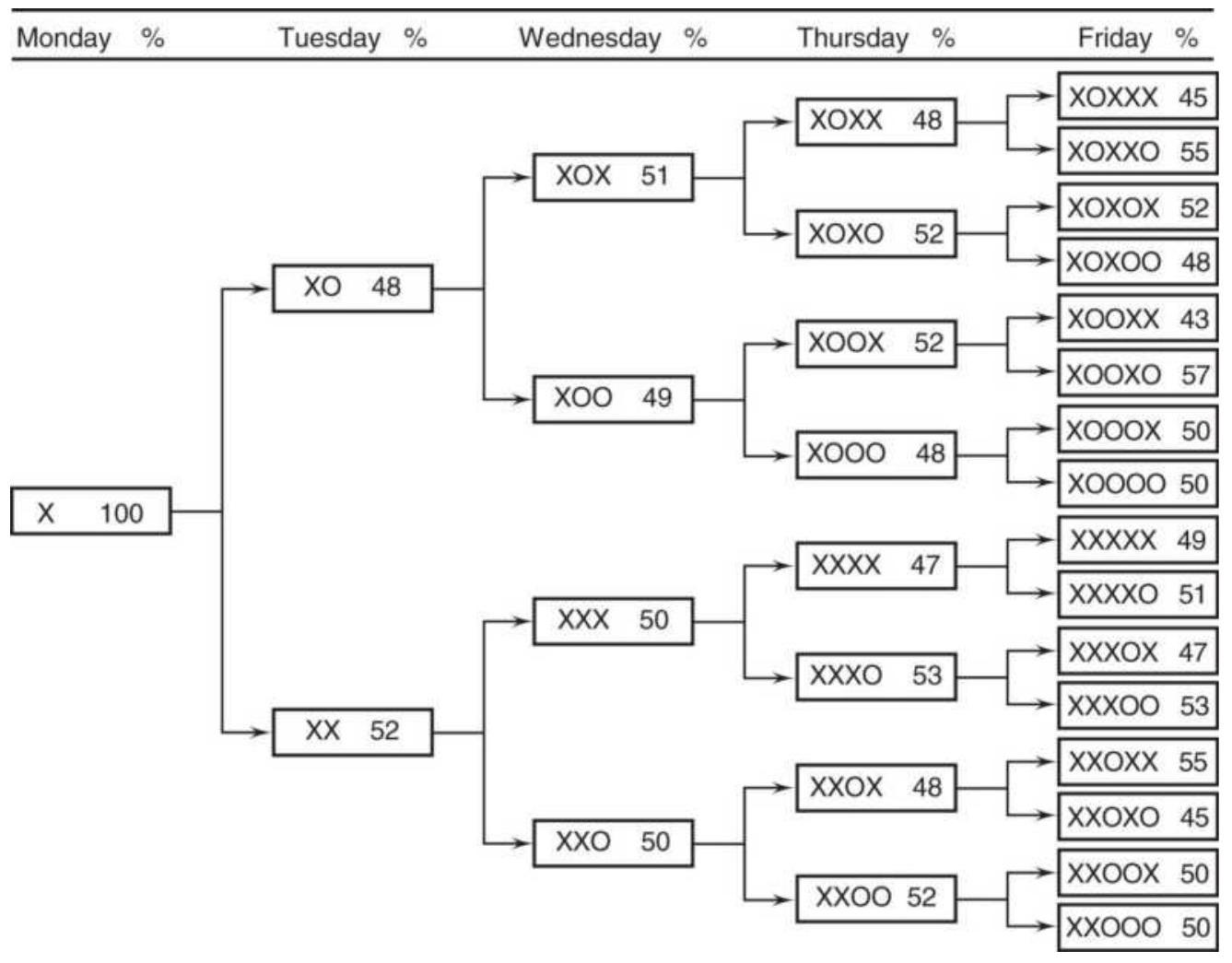

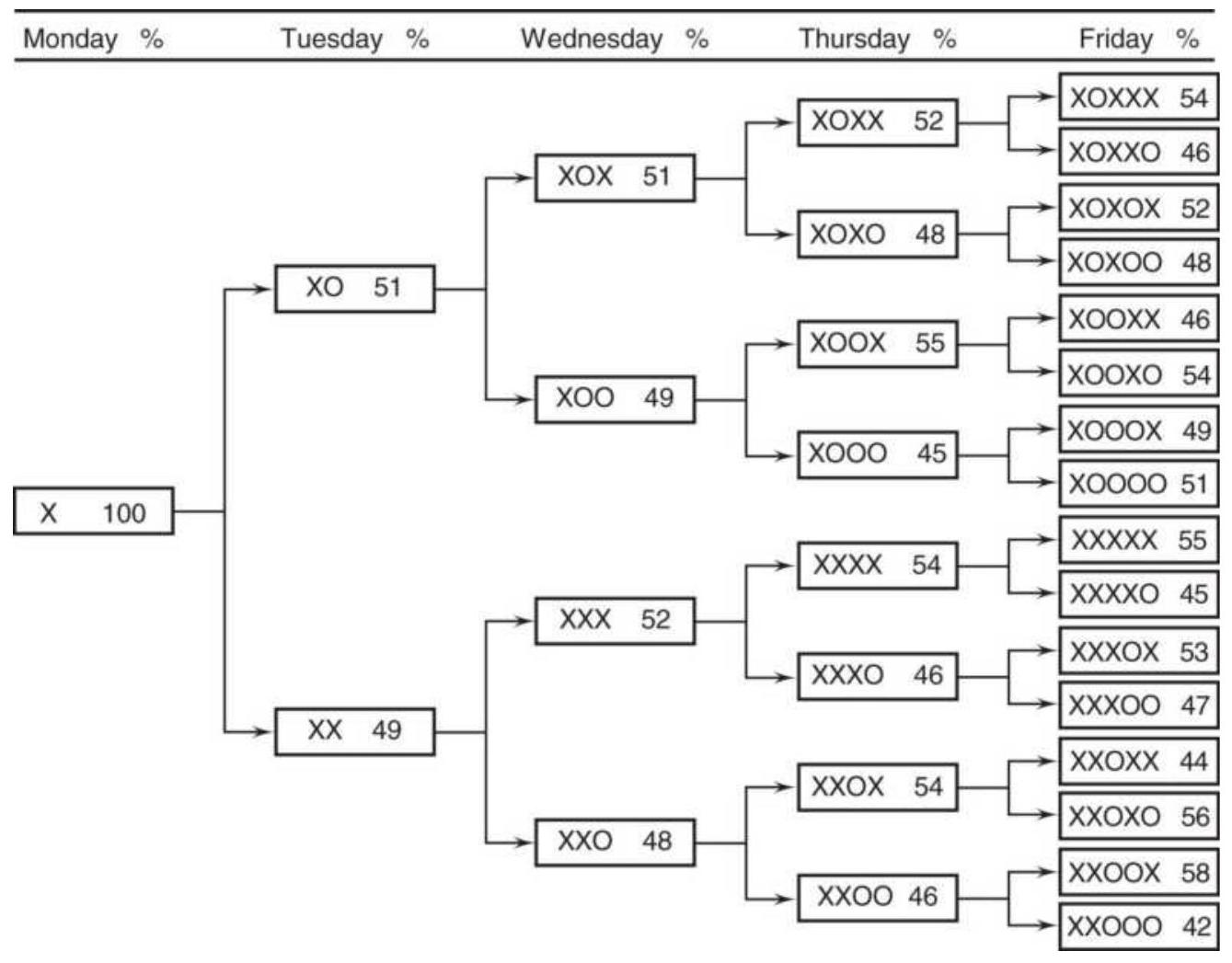

WEEKDAY, WEEKEND, AND REVERSAL PATTERNS 工作日、周末和反转模式模式

COMPUTER-BASED PATTERN RECOGNITION 基于计算机的模式识别别

ARTIFICIAL INTELLIGENCE METHODS 人工智能方法方法

NOTES 笔记记

CHAPTER 16: Day Trading 第十六章:日间交易交易

IMPACT OF TRANSACTION COSTS 交易成本的影响影响

SLIPPAGE AND LIQUIDITY 滑点与流动性性

KEY ELEMENTS OF DAY TRADING 日内交易的关键要素素

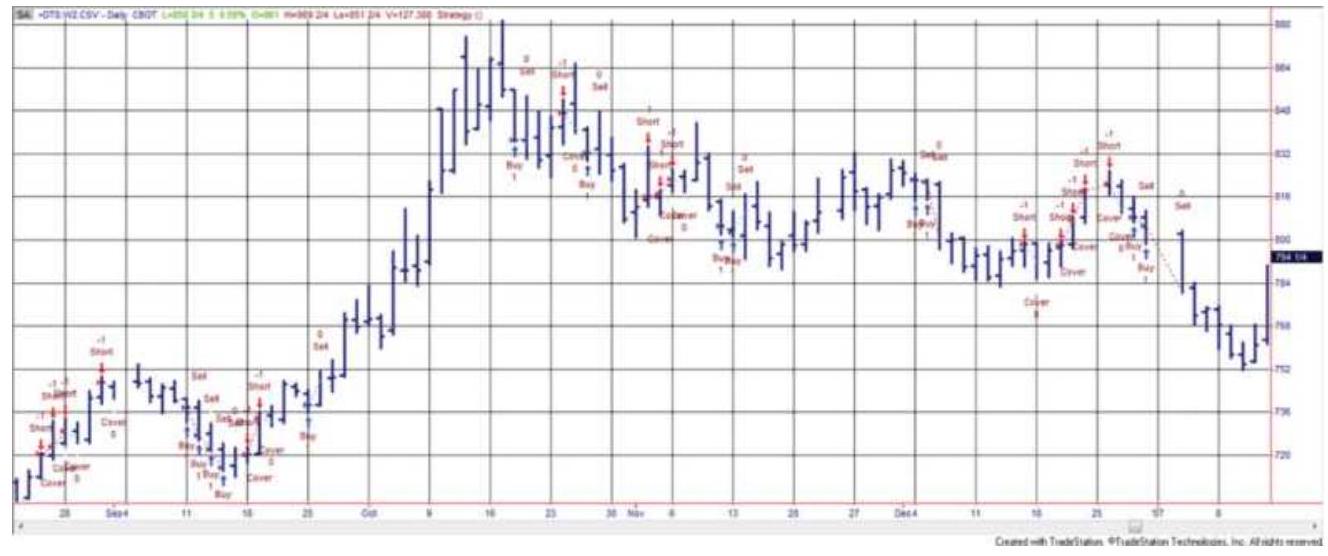

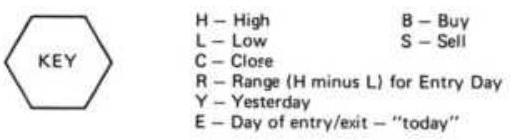

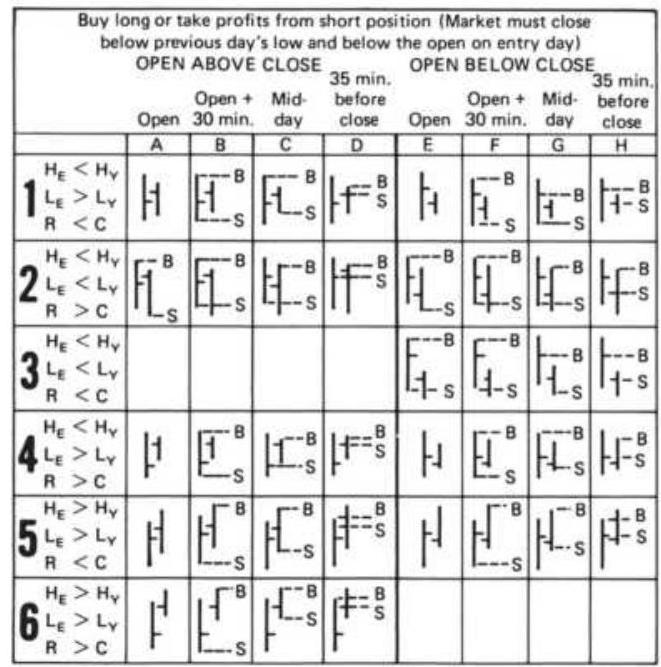

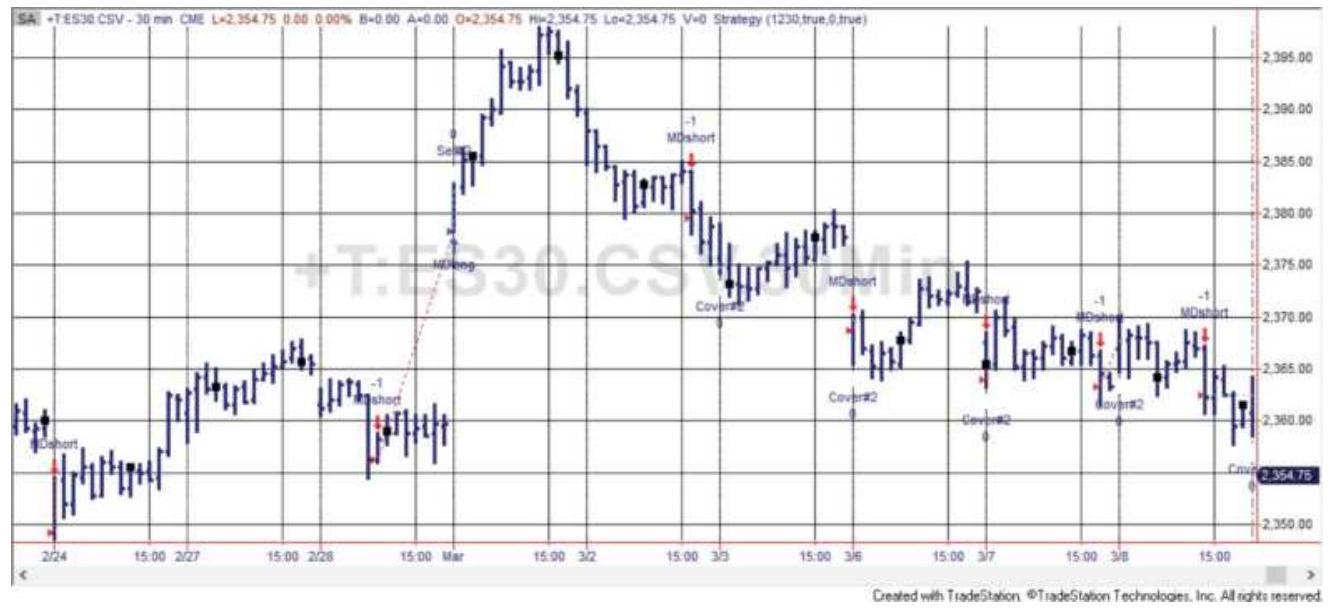

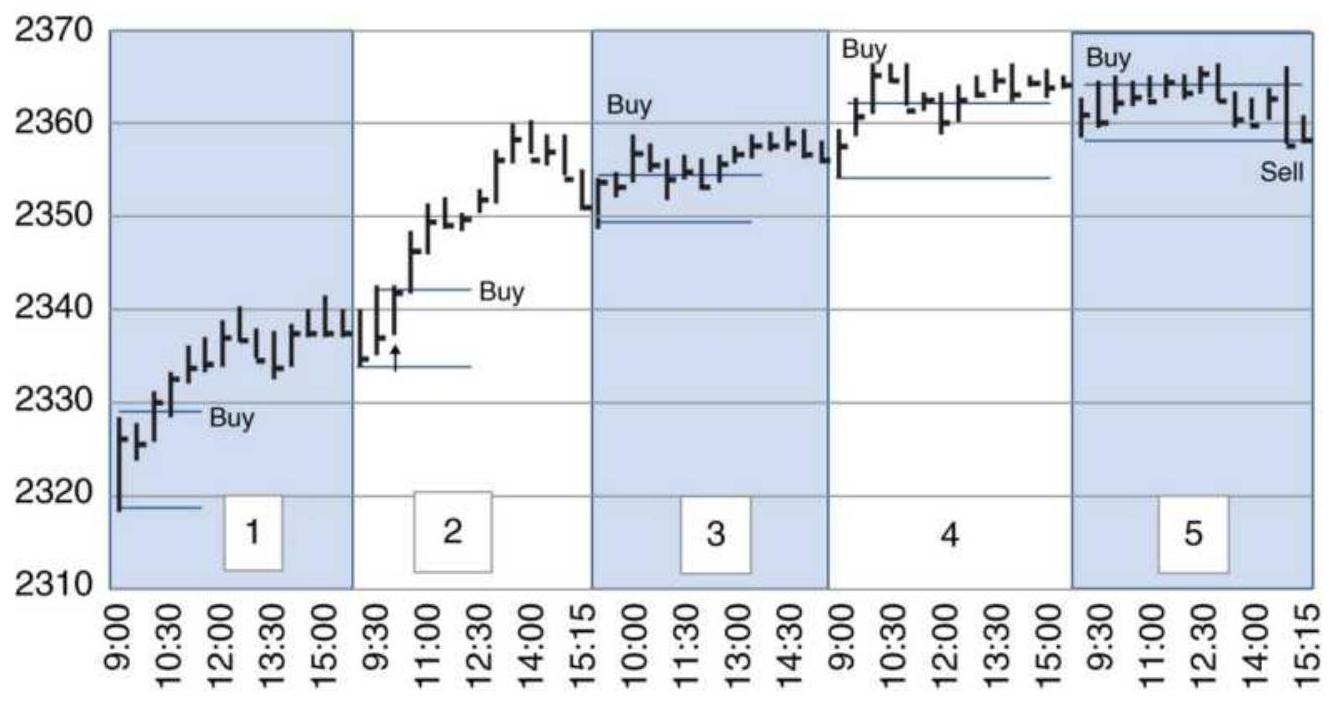

TRADING USING PRICE PATTERNS 使用价格模式进行交易交易

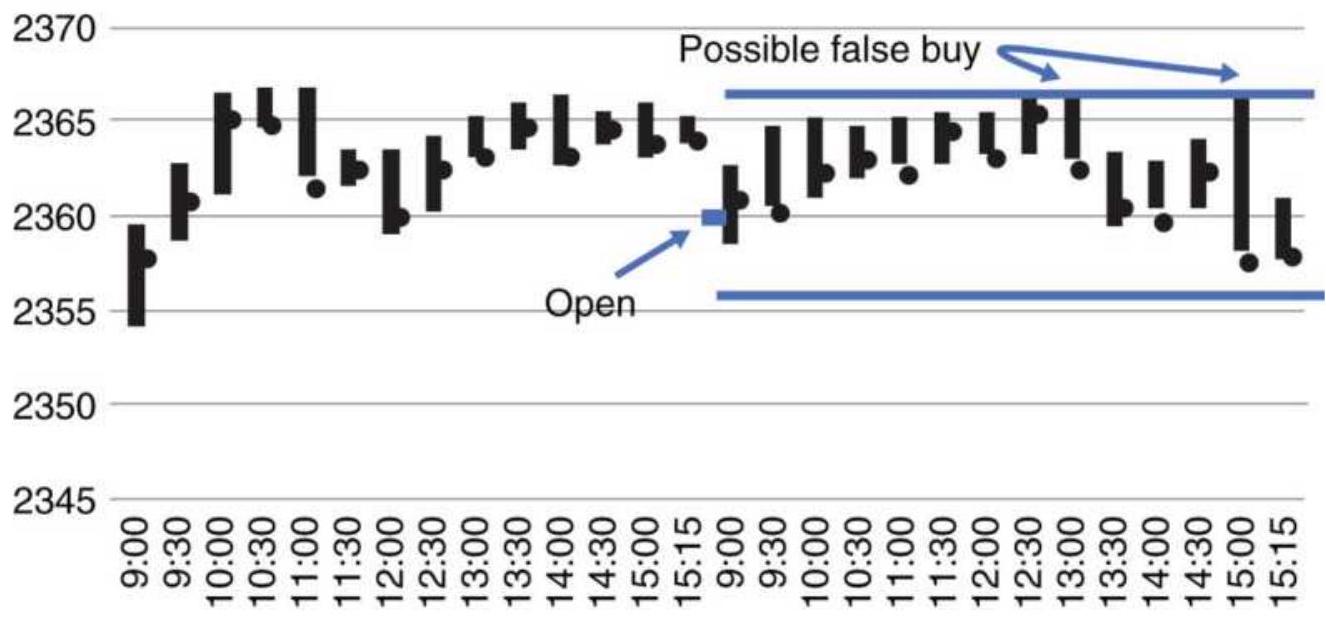

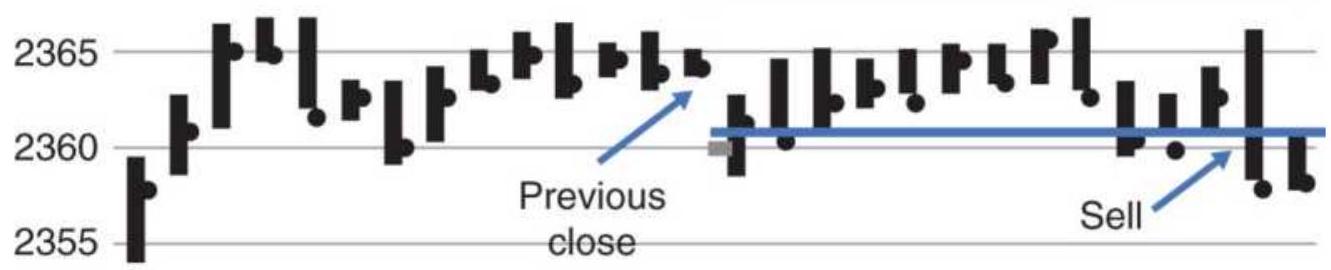

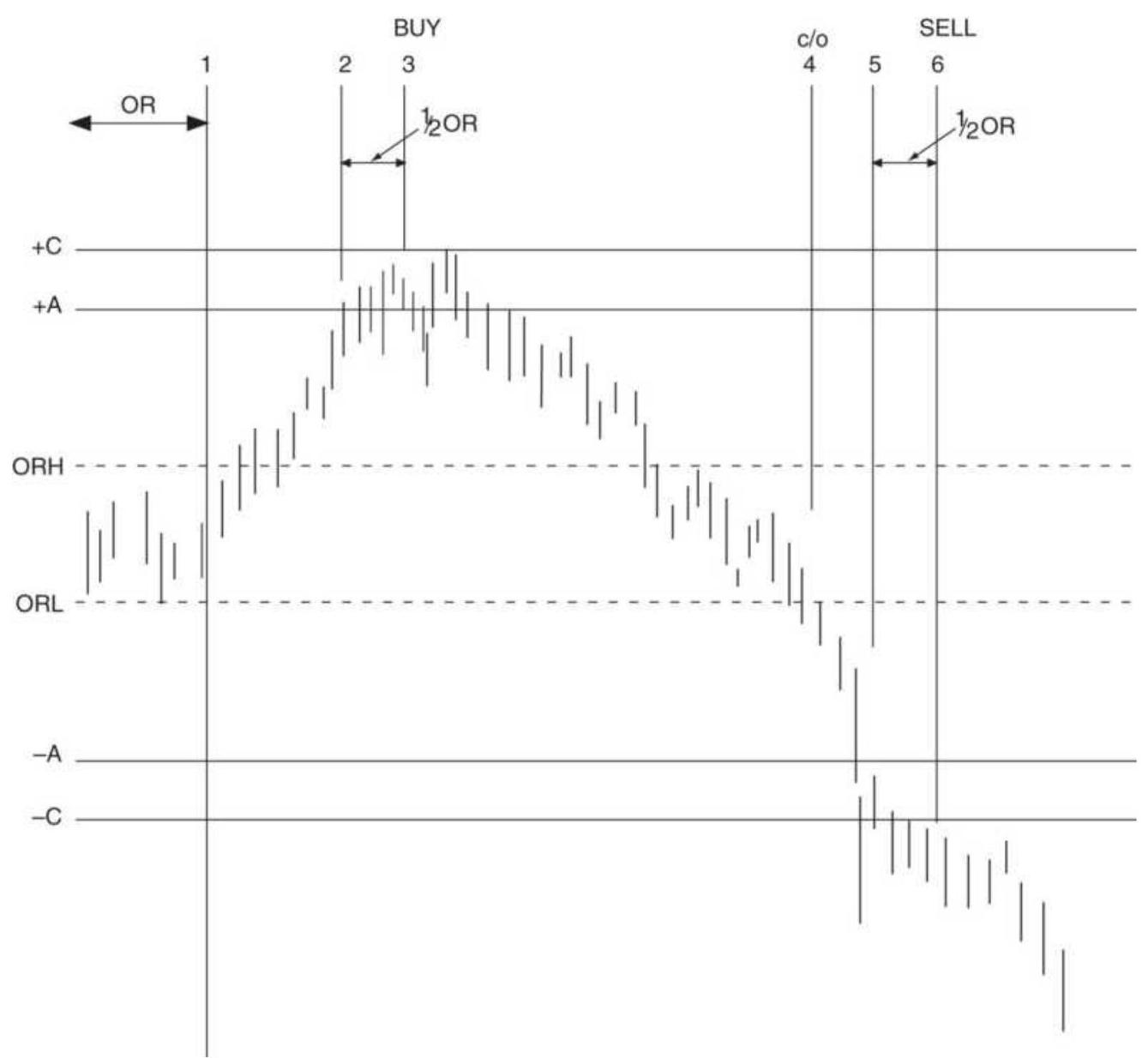

INTRADAY BREAKOUT SYSTEMS 日内突破系统系统

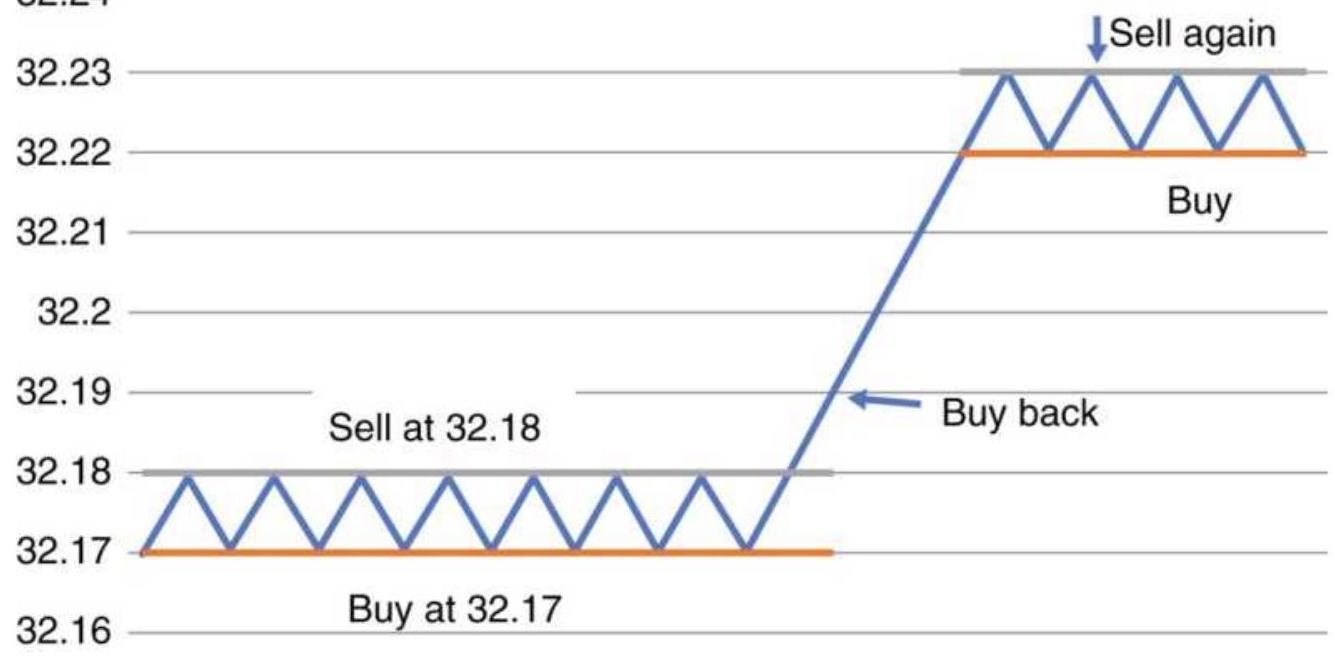

HIGH-FREQUENCY TRADING 高频交易交易

INTRADAY VOLUME PATTERNS 当日成交量模式模式

INTRADAY PRICE SHOCKS 盘中价格冲击击

NOTES 笔记记

CHAPTER 17: Adaptive Techniques 第十七章: 自适应技术技术

ADAPTIVE TREND CALCULATIONS 自适应趋势计算计算

ADAPTIVE VARIATIONS 适应性变化变化

OTHER ADAPTIVE MOMENTUM 其它自适应动量量

CALCULATIONS 计算计算

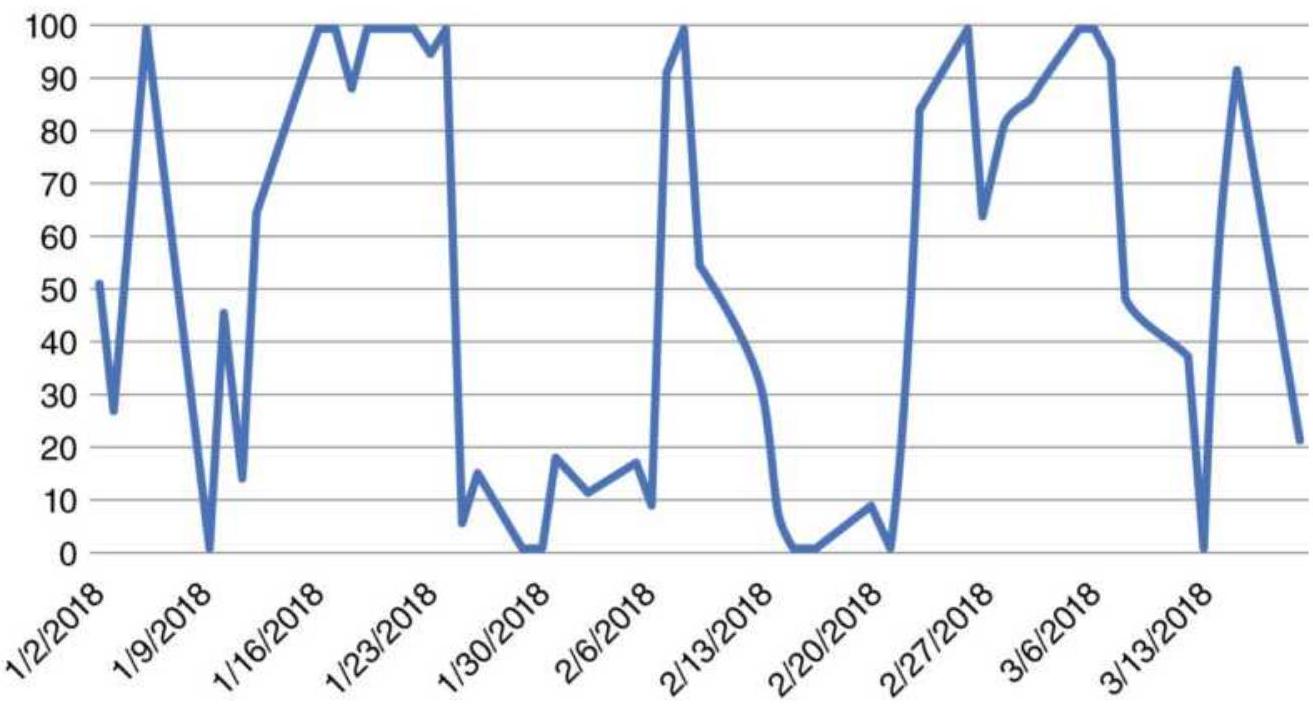

ADAPTIVE INTRADAY BREAKOUT SYSTEM 自适应日内突破系统系统

AN ADAPTIVE PROCESS 适应性过程过程

NOTES 笔记记

CHAPTER 18: Price Distribution Systems 第 18 章:价格分配系统系统

ACCURACY IS IN THE DATA 准确性就在数据中中

USE OF PRICE DISTRIBUTIONS AND 价格分布的使用和和

PATTERNS TO ANTICIPATE MOVES 预判动作的模式模式

THE IMPORTANCE OF THE SHAPE OF THE 形状的重要性性

DISTRIBUTION 分配配

A PURCHASER'S INVENTORY MODEL 一个购买者的库存模型模型

A PRODUCER'S SELLING MODEL 生产者的销售模式模式

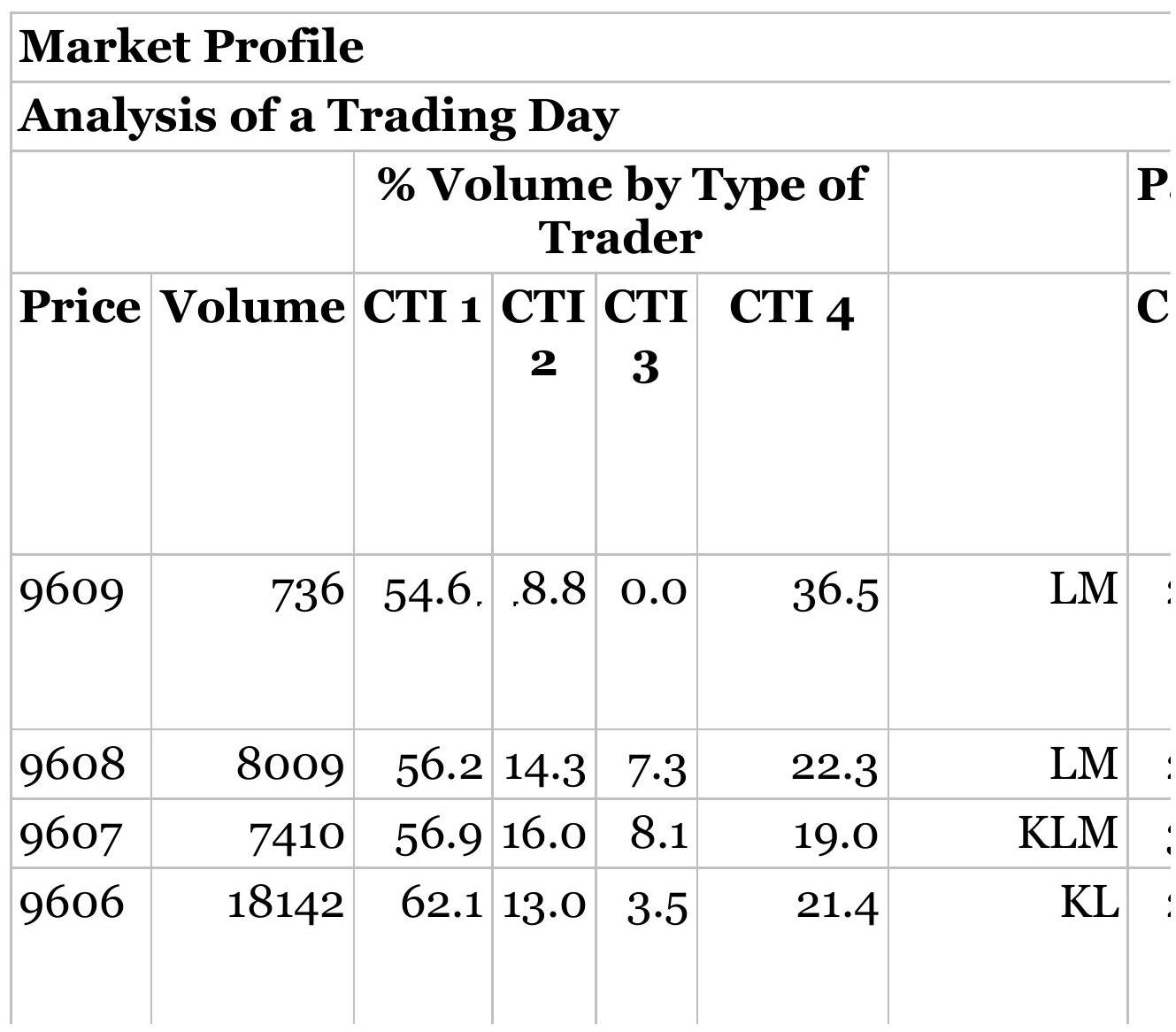

STEIDLMAYER'S MARKET PROFILE 斯泰德迈尔的市场轮廓廓

A FAST VERSION OF MARKET PROFILE 市场剖析的快速版本版本

NOTES 笔记记

CHAPTER 19: Multiple Time Frames 第 19 章:多重时间框架架

TUNING TWO TIME FRAMES TO WORK 调节两个时间框架以工作工作

TOGETHER 在一起一起

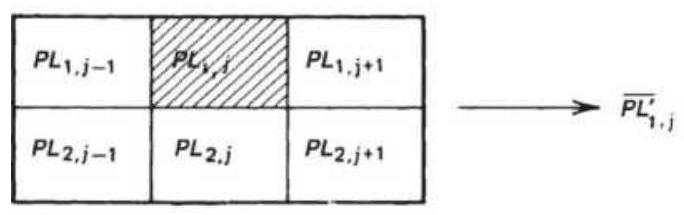

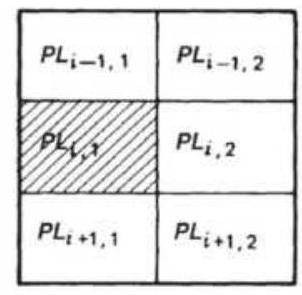

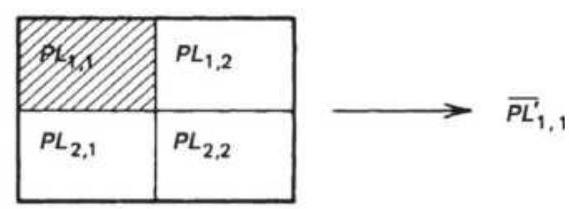

DISPLAYING TWO OR THREE TIME FRAMES 显示两个或三个时间框架架

ELDER'S TRIPLE SCREEN TRADING 老人的三重屏幕交易交易

SYSTEM 系统系统

ROBERT KRAUSZ'S MULTIPLE TIME 罗伯特·克劳斯的多重时间时间

FRAMES 帧帧

MARTIN PRING'S KST SYSTEM 马丁·普林格的 KST 系统系统

NOTES 笔记记

CHAPTER 20: Advanced Techniques 第 20 章:高级技术技术

MEASURING VOLATILITY 测量波动率率

THE PRICE-VOLATILITY RELATIONSHIP 价格与波动性之间的关系关系

USING VOLATILITY FOR TRADING 利用波动性进行交易交易

LIQUIDITY 流动性性

TRENDS AND PRICE NOISE 趋势和价格噪音音

TRENDS AND INTEREST RATE CARRY 趋势和利率差额额

FUZZY LOGIC 模糊逻辑辑

EXPERT SYSTEMS 专家系统系统

GAME THEORY 博弈论论

FRACTALS, CHAOS, AND ENTROPY 分形、混沌和熵熵

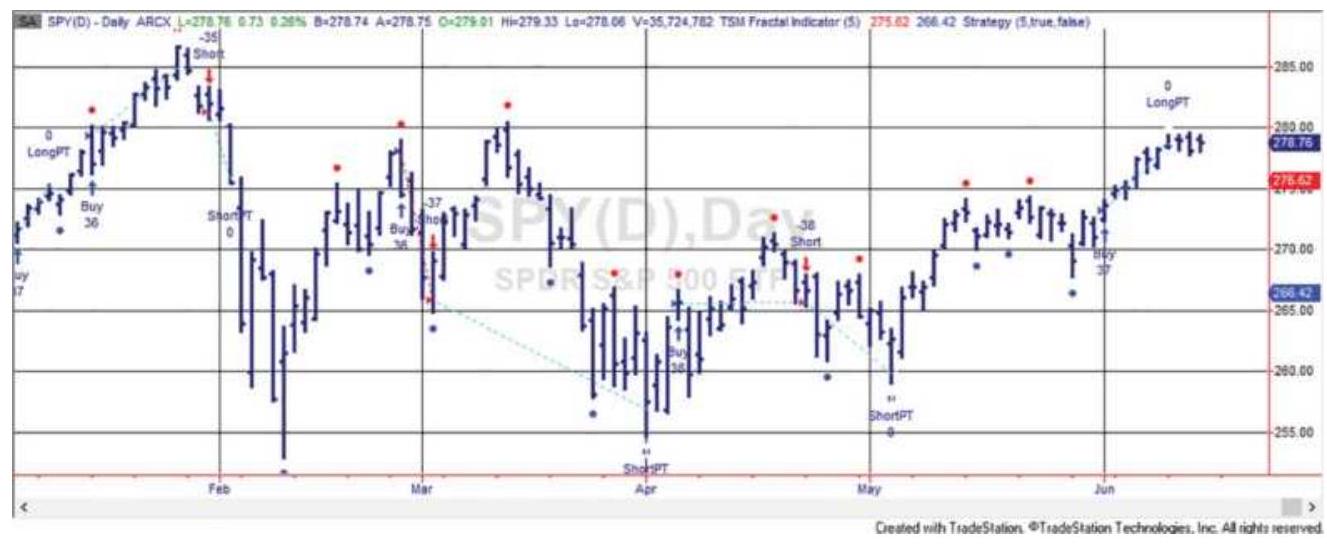

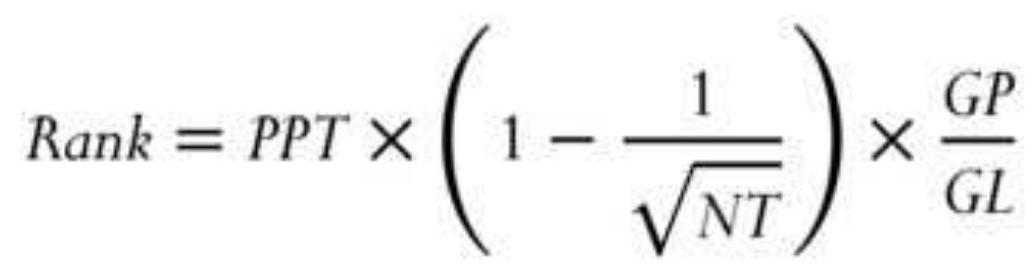

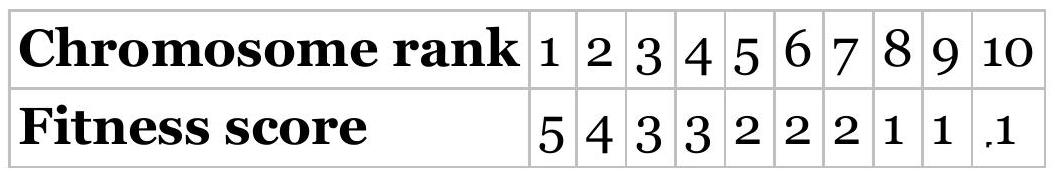

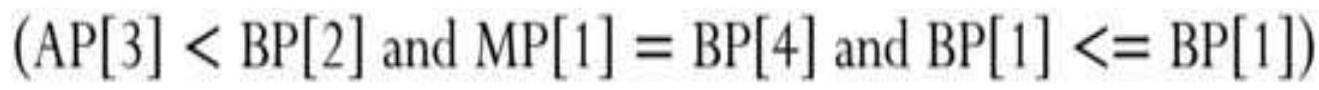

GENETIC ALGORITHMS 遗传算法算法

NEURAL NETWORKS 神经网络网络

MACHINE LEARNING AND ARTIFICIAL 机器学习与人工智能智能

INTELLIGENCE 智力力

REPLICATION OF HEDGE FUNDS 对冲基金的复制复制

NOTES 笔记记

CHAPTER 21: System Testing 第 21 章:系统测试测试

EXPECTATIONS 期望望

SELECTING THE TEST DATA 选择测试数据数据

TESTING INTEGRITY 测试完整性性

IDENTIFYING THE PARAMETERS 识别参数参数

SEARCHING FOR THE BEST RESULT 寻找最佳结果结果

TOO LARGE TO TEST EVERYTHING 太大而无法测试所有内容内容

VISUALIZING AND INTERPRETING TEST 可视化与解释测试测试

RESULTS 结果结果

THE IMPACT OF COSTS``` 成本的影响影响

REFINING THE STRATEGY RULES 精炼战略规则规则

ARRIVING AT VALID TEST RESULTS 得出有效的测试结果结果

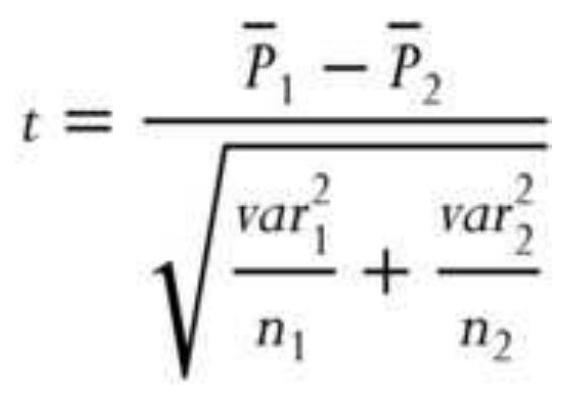

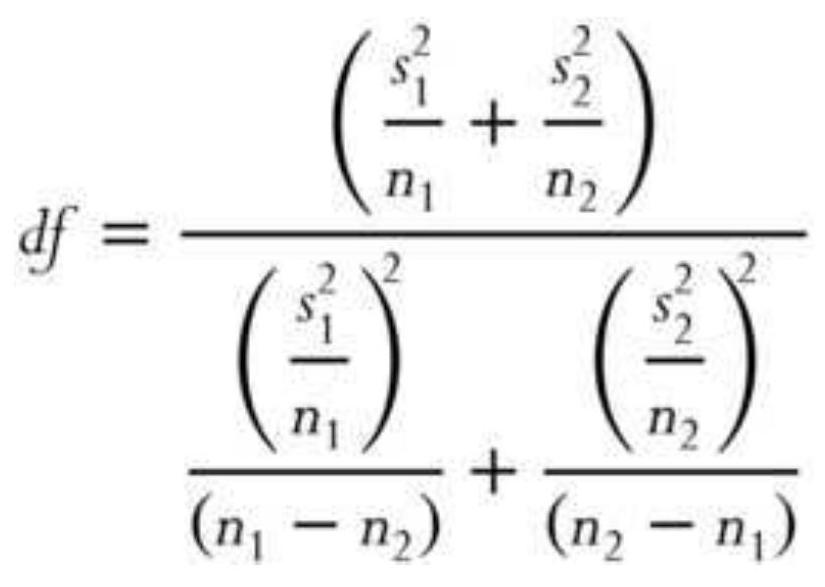

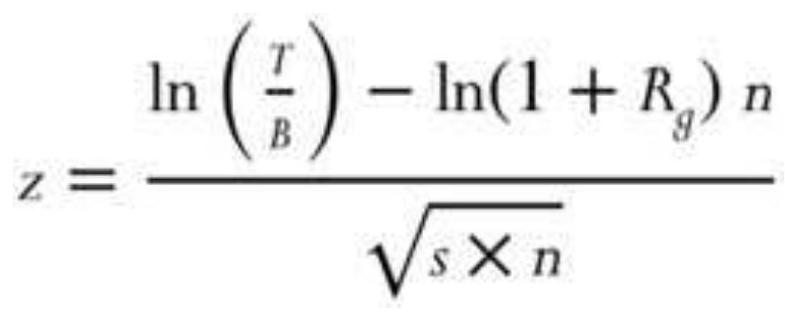

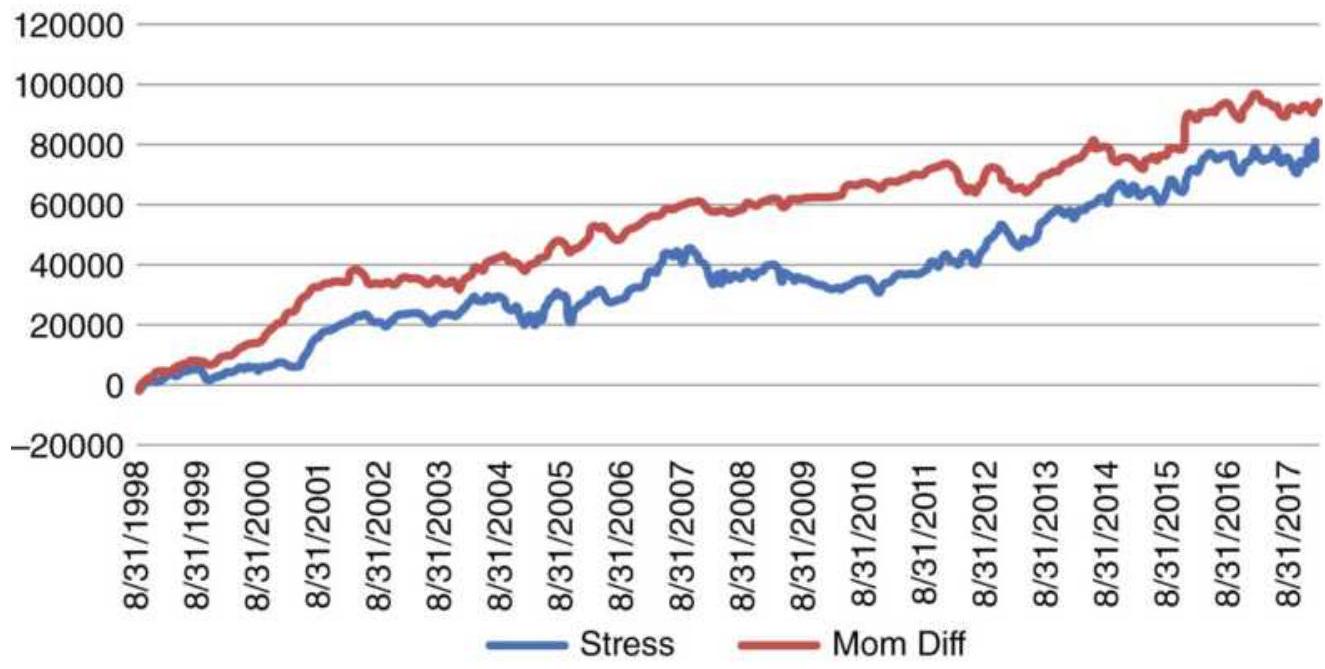

COMPARING THE RESULTS OF TWO TREND 比较两种趋势的结果结果

SYSTEMS 系统系统

RETESTING TO STAY CURRENT 重新测试以保持最新最新

PROFITING FROM THE WORST RESULTS 从最差的结果中获利利

TESTING ACROSS A WIDE RANGE OF 对广泛范围的测试测试

MARKETS 市场市场

PRICE SHOCKS 价格冲击击

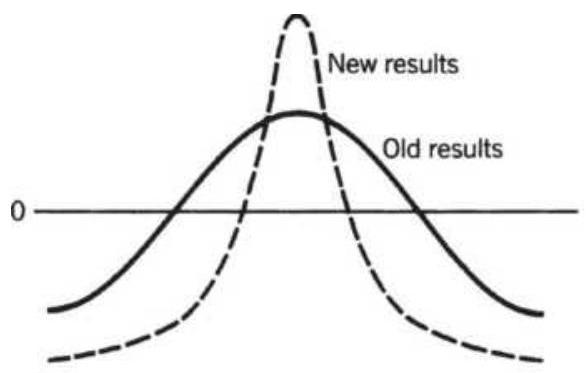

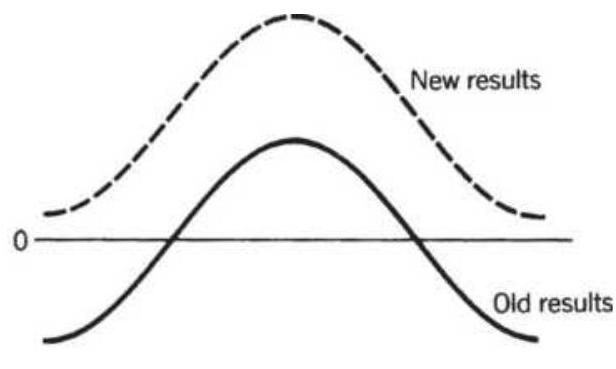

ANATOMY OF AN OPTIMIZATION 优化的解剖图图

SUMMARIZING ROBUSTNESS 总结稳健性性

NOTES 笔记记

CHAPTER 22: Adding Reality 第 22 章:加入现实现实

SOME COMPUTER BASICS 一些计算机基础知识知识

THE ABUSE OF POWER 权力滥用用

FINAL STEPS BEFORE LAUNCH 最后的发射前步骤步骤

EXTREME EVENTS 极端事件事件

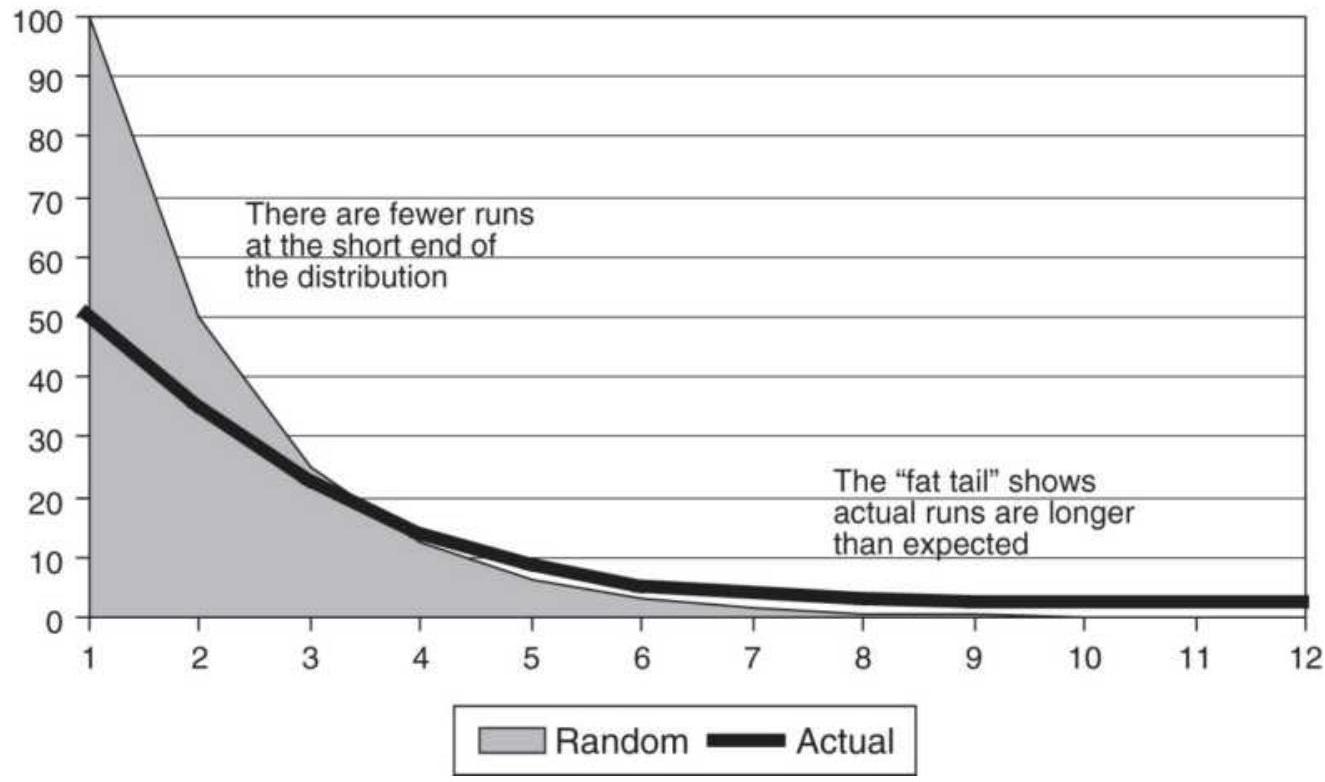

GAMBLING TECHNIQUES: THE THEORY OF 赌博技巧:理论理论

RUNS 运行运行

SELECTIVE TRADING 选择性交易交易

SYSTEM TRADE-OFFS 系统权衡衡

SILVER AND AMAZON: TOO GOOD TO BE 银和亚马逊:好得令人难以置信置信

TRUE 真真

SIMILARITY OF SYSTEMATIC TRADING 系统交易的相似性性

SIGNALS 信号号

NOTES 笔记记

CHAPTER 23: Risk Control

MISTAKING LUCK FOR SKILL

RISK AVERSION

LIQUIDITY

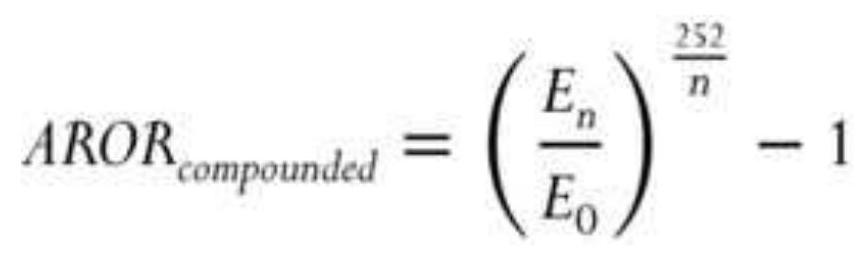

MEASURING RETURN AND RISK

POSITION SIZING

INDIVIDUAL TRADE RISK

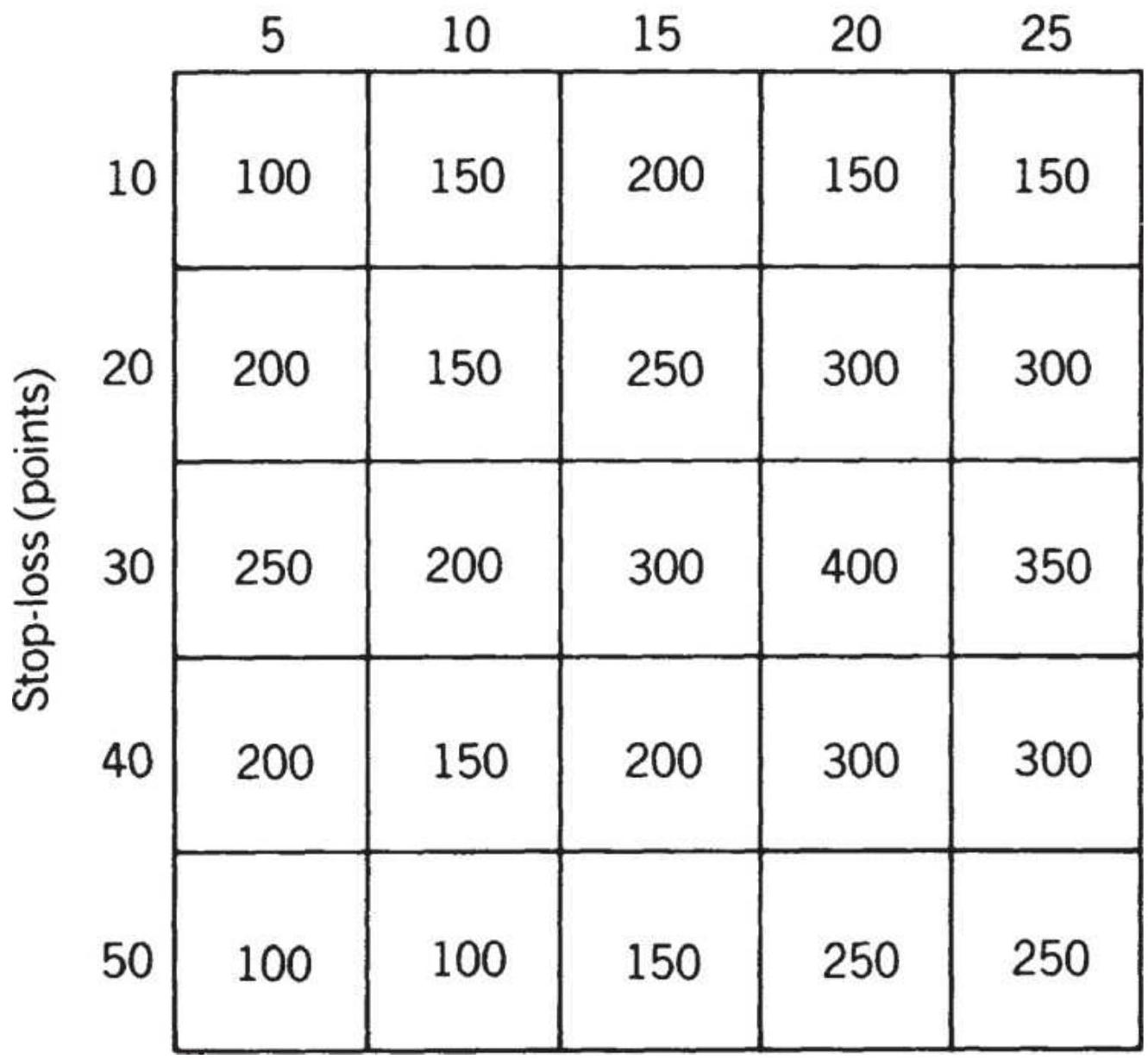

KAUFMAN ON STOPS AND PROFIT-TAKING

ENTERING A POSITION

LEVERAGE

COMPOUNDING A POSITION

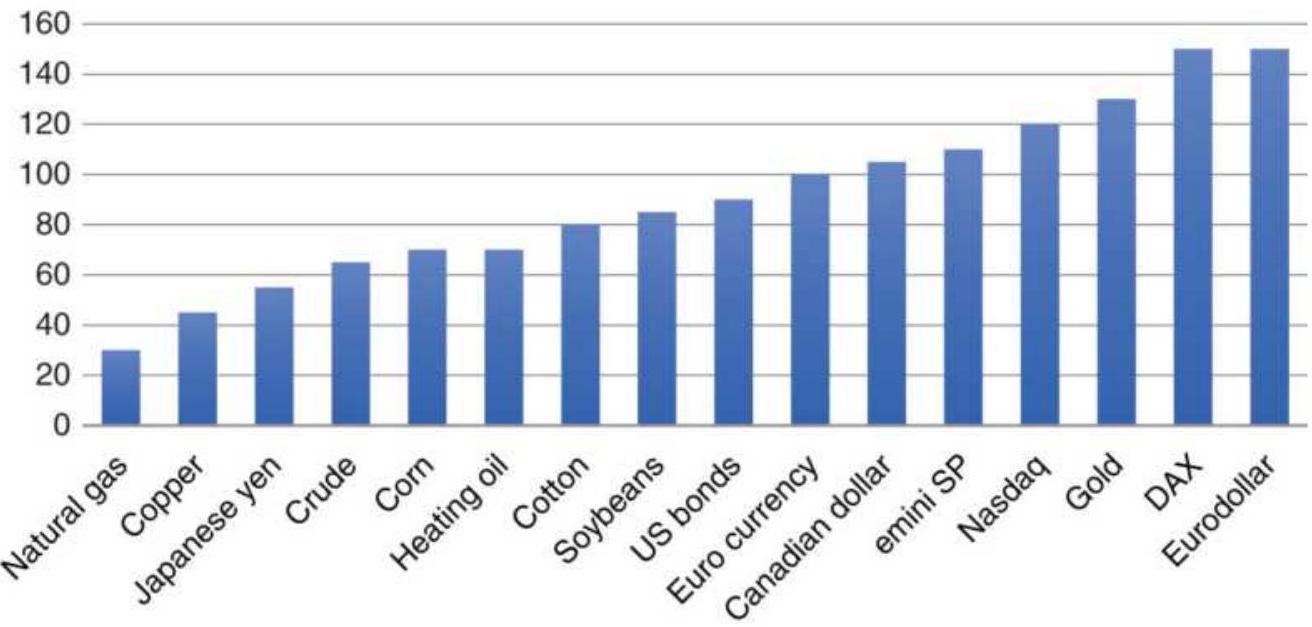

SELECTING THE BEST MARKETS

PROBABILITY OF SUCCESS AND RUIN

MANAGING EQUITY RISK

IDEAL LEVERAGE USING OPTIMAL f

COMPARING EXPECTED AND ACTUAL

RESULTS

```

NOTES

CHAPTER 24: Diversification and Portfolio Allocation

DIVERSIFICATION

TYPES OF PORTFOLIO MODELS

CLASSIC PORTFOLIO ALLOCATION

CALCULATIONS

FINDING OPTIMAL PORTFOLIO

ALLOCATION USING EXCEL'S SOLVER

\section*{KAUFMAN'S GENETIC ALGORITHM SOLUTION TO PORTFOLIO ALLOCATION (GASP) \\ VOLATILITY STABILIZATION \\ NOTES \\ ABOUT THE COMPANION WEBSITE \\ INDEX \\ END USER LICENSE AGREEMENT}

\section*{List of Tables}

Chapter 1

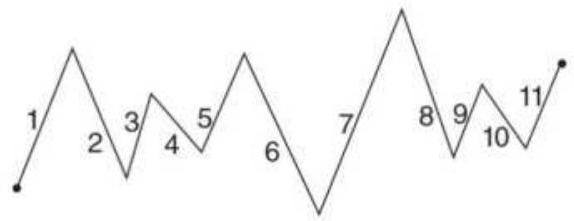

TABLE 1.1 These price changes, reflecting the patterns in Figure 1.4, S...

Chapter 2

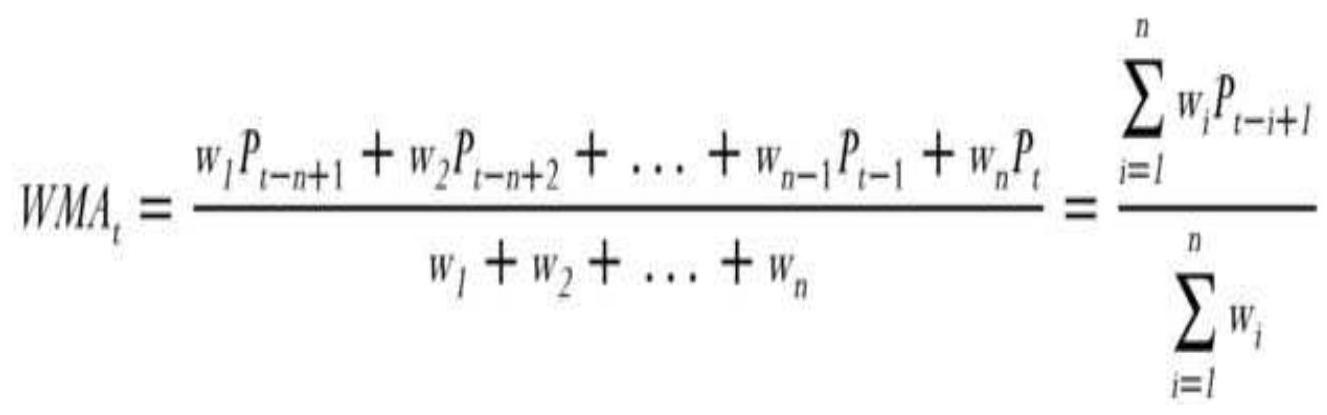

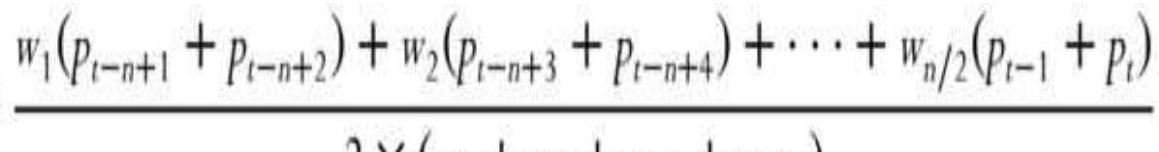

TABLE 2.1 Weighting an average.

TABLE 2.2 Values of \(t\) corresponding to the upper tail probabilit.

TABLE 2.3 Calculation of returns and NAVs

from daily profits and losses.

TABLE 2.4 Marginal probability.

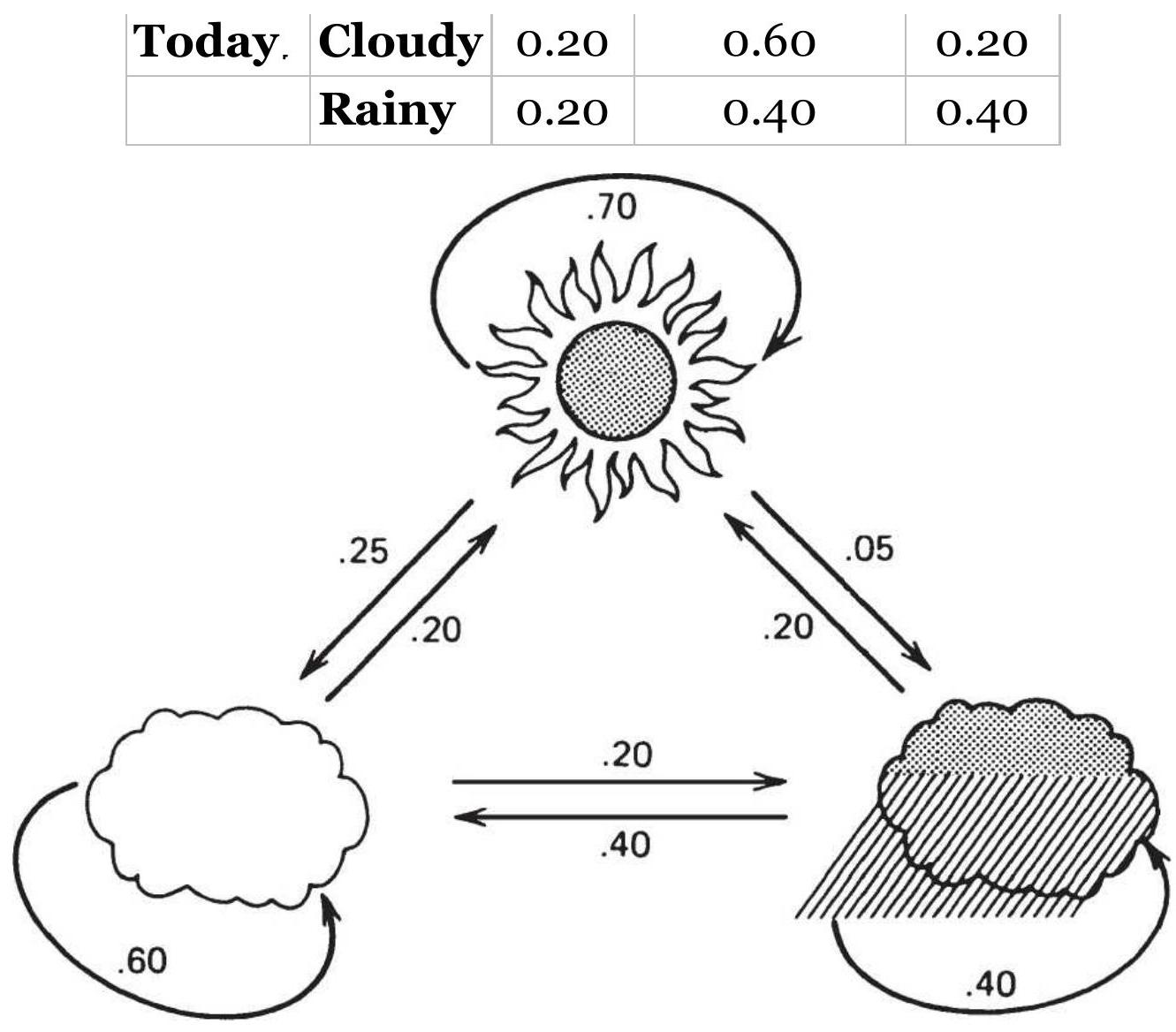

TABLE 2.5 Transition Matrix

TABLE 2.6a Counting the occurrences of up and down days.

TABLE 2.6b Starting transition matrix.

Chapter 3

TABLE 3.1 Percentage of time gaps are closed within 1 week, based on a...

TABLE 3.2 Average upward gaps, pullbacks, and close for 275 active stocks.

TABLE 3.3 Average downward gaps, pullbacks, and close for 275 active stocks.

\section*{Chapter 5}

TABLE 5.1 Point-and-figure box sizes.

TABLE 5.2 The box size with the best

performance of the point-and-figur...

TABLE 5.3 Breakout test results using data from 2000 through November 2017.

\section*{Chapter 6}

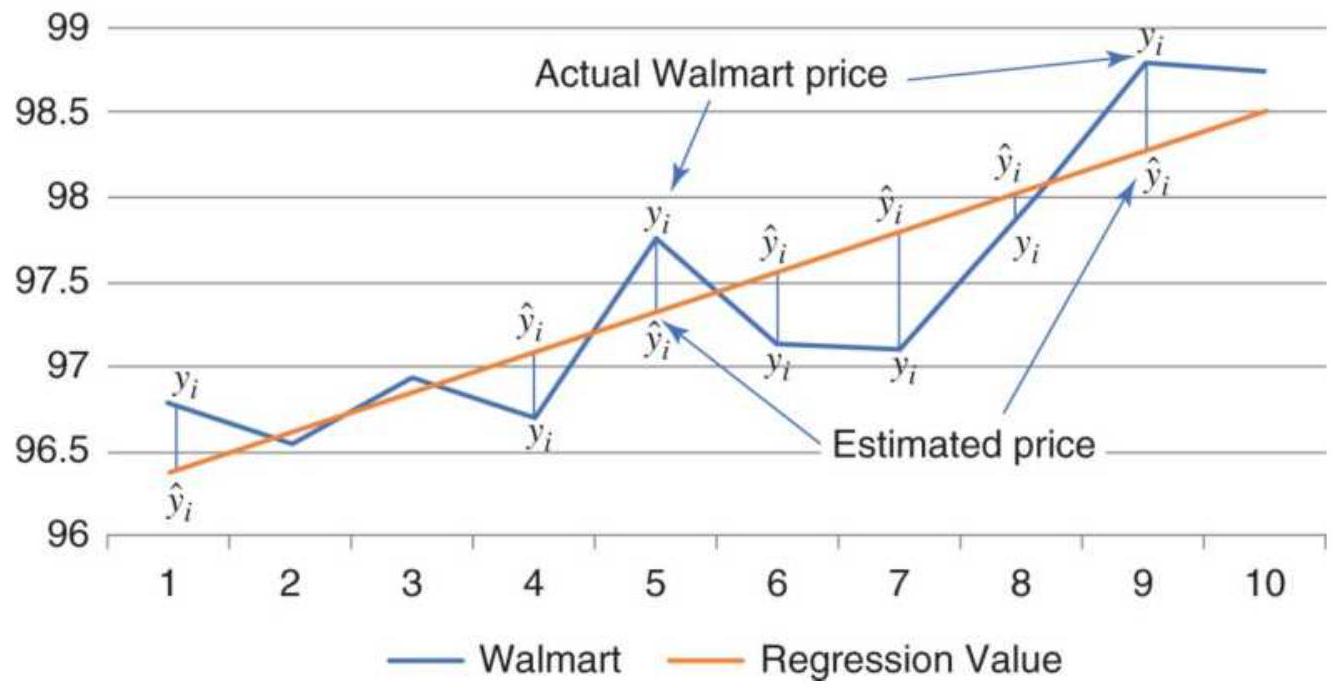

TABLE 6.1 Calculations for the Walmart best fit.

TABLE 6.2 Output from Excel's regression function.

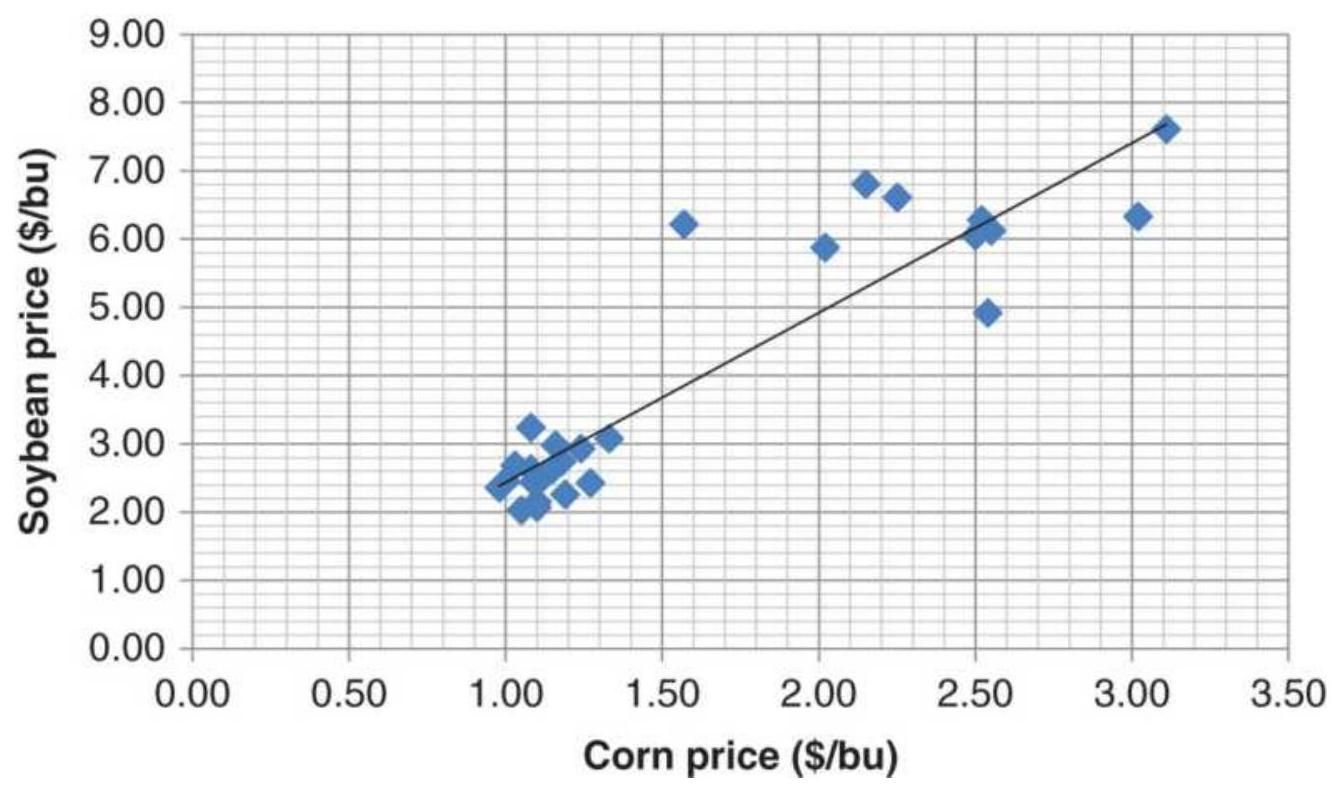

TABLE 6.3 Calculations for the corn-soybean regression.

TABLE 6.4 Excel solution for the corn-soybean regression.

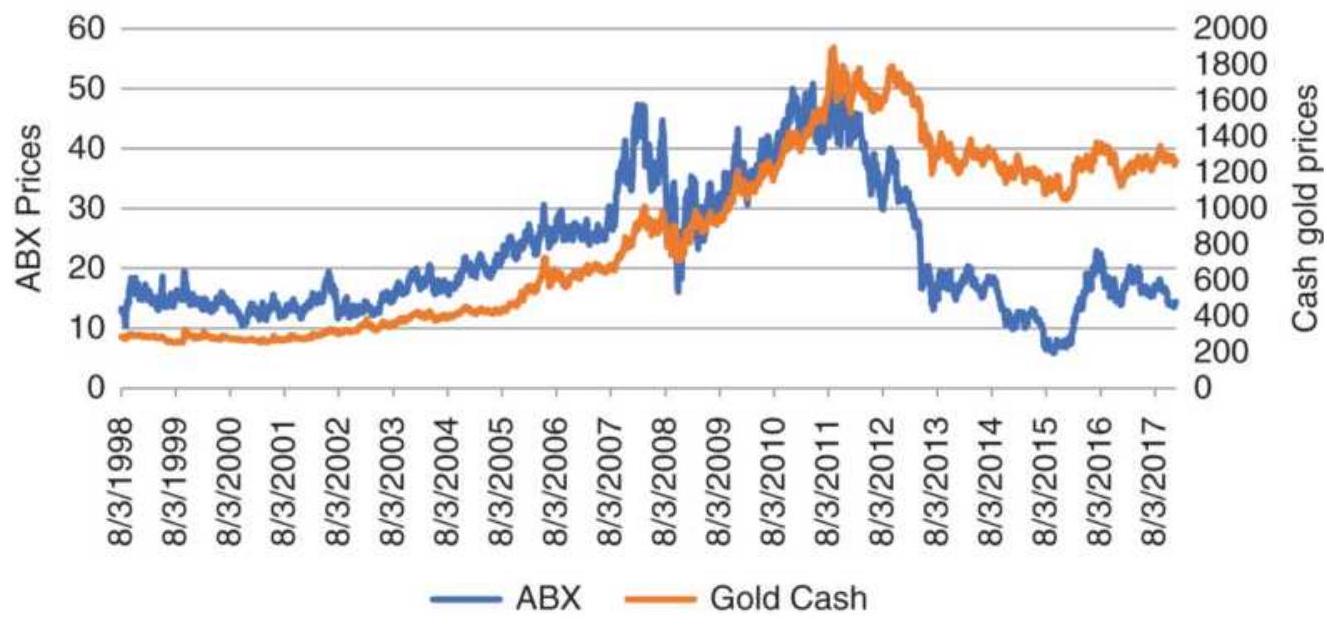

TABLE 6.5 Spreadsheet for ABX-gold regression.

TABLE 6.6 ABX \(=f\) (gold) solution for 1st-, 2nd, and 3rd-order polynomials.

TABLE 6.7 Spreadsheet setup for linear,

logarithmic, and exponential regre.

TABLE 6.8 Spreadsheet for the curvilinear (2nd-order) solution.

TABLE 6.9 Wheat prices and set-up for Solver solution.

TABLE 6.10 Ranking of pharmaceutical companies.

Chapter 7

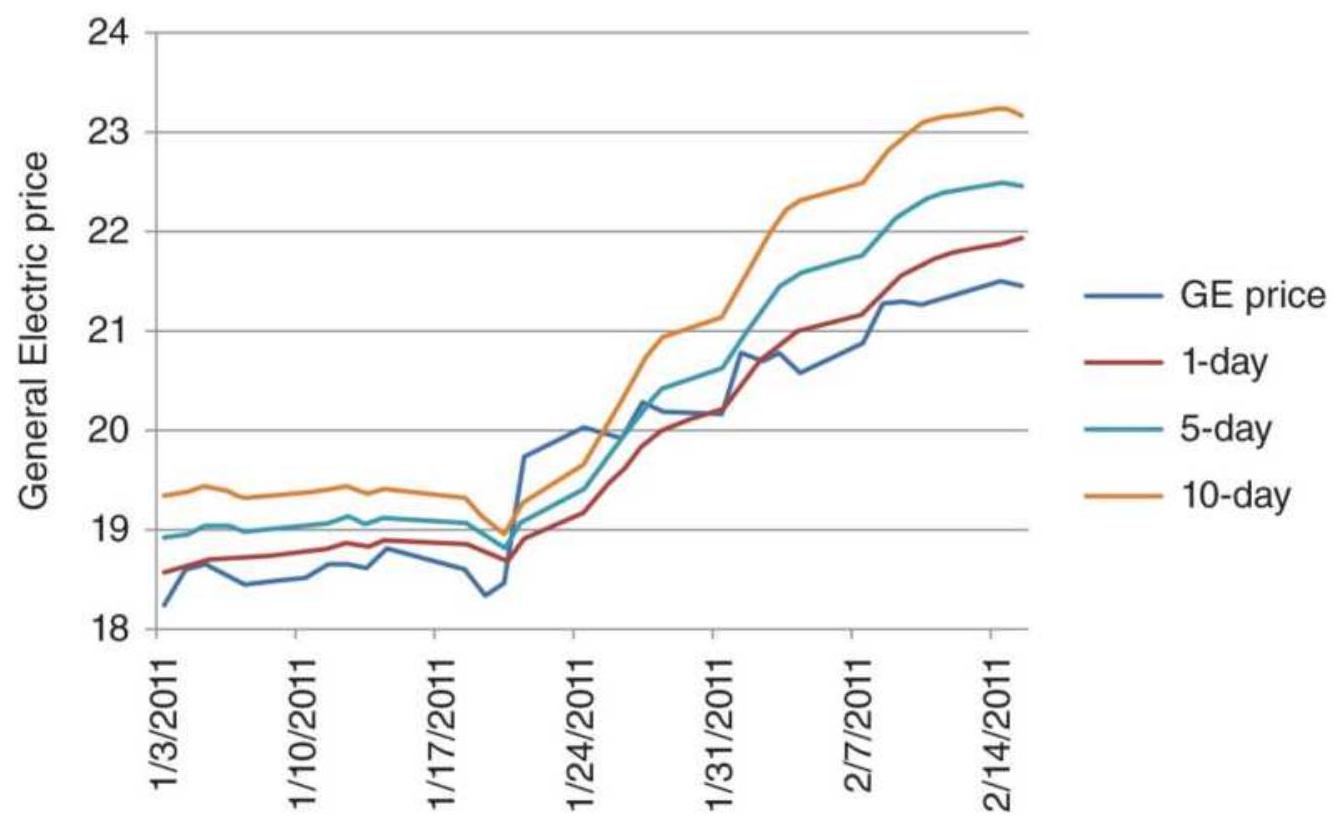

TABLE 7.1 General Electric analysis of

regression error based on a 20-day...

TABLE 7.2 The standard deviation of errors for different "days ahead" forecasts....

TABLE 7.3 Comparison of exponential smoothing values.

TABLE 7.4 Comparison of exponential smoothing residual impact.

TABLE 7.5 Equating standard moving averages to exponential smoothing.

TABLE 7.6 Equating exponential smoothing to standard moving averages.

TABLE 7.7 Comparison of exponential

smoothing techniques applied to Microsoft.

Chapter 8

TABLE 8.1 Frequency distribution for a sample of five diverse markets, sho...

TABLE 8.2 Comparison of entry methods for 10

years of Amazon (AMZN). Signa...

TABLE 8.3 Comparison of entries on the close, next open, and next close. ...

TABLE 8.4 Performance statistics for NASDAQ futures, 1998-June 2018.

TABLE 8.5 Results of using a moving average of the highs and lows, compa..

TABLE 8.6 MPTDI Variables for gold.

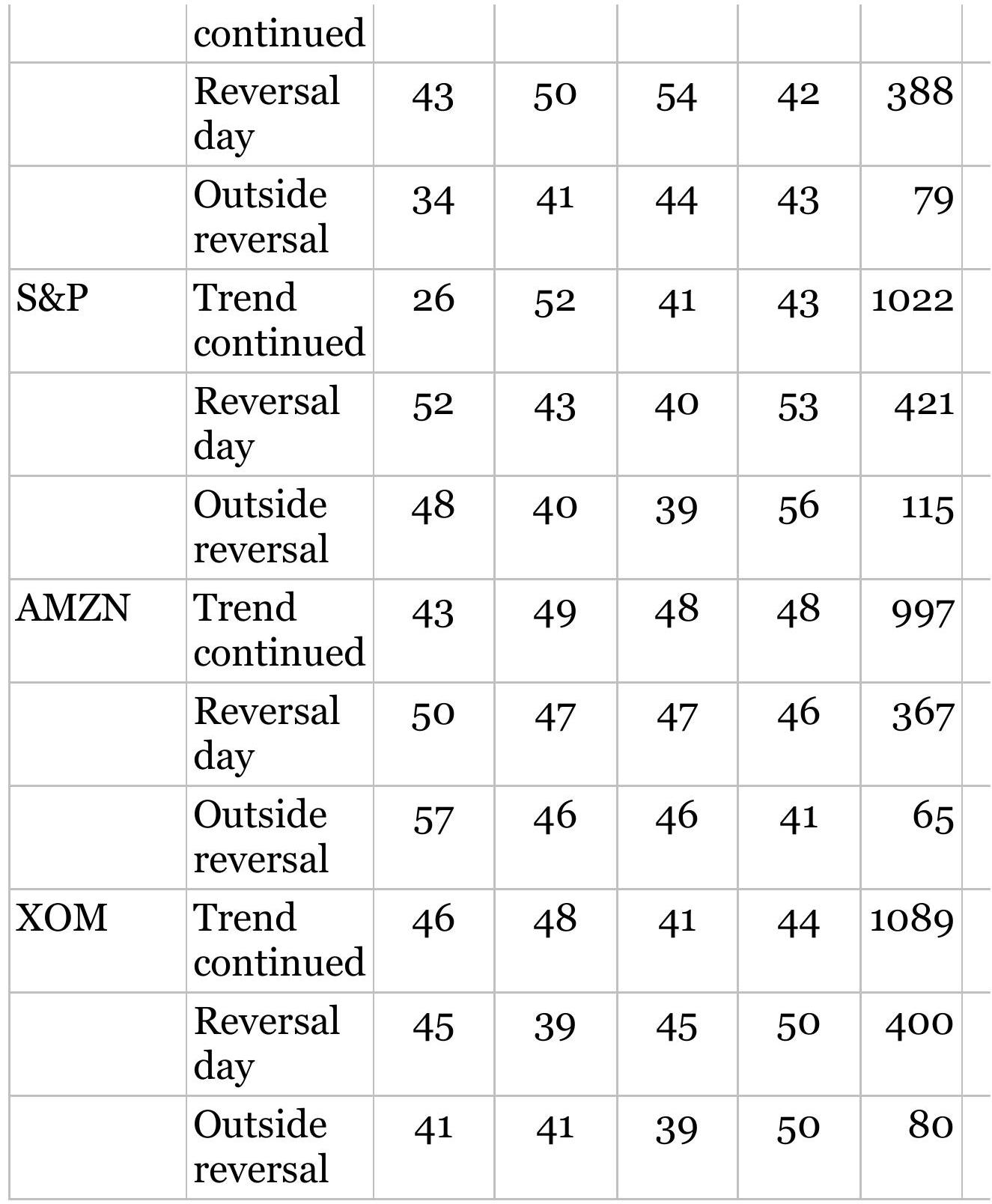

TABLE 8.7 Summary of futures market results.

TABLE 8.8 Summary of stock market results.

TABLE 8.9 Summary of system net profits for stocks.

TABLE 8.10 Average results of the three trend strategies for four sample...

TABLE 8.11 Comparison of a 120-day single moving average with a 100- and...

TABLE 8.12 Results of a 2-trend system using futures, 1991-2017.

TABLE 8.13 Adding a short-term trend to the 2trend crossover system.

Chapter 9

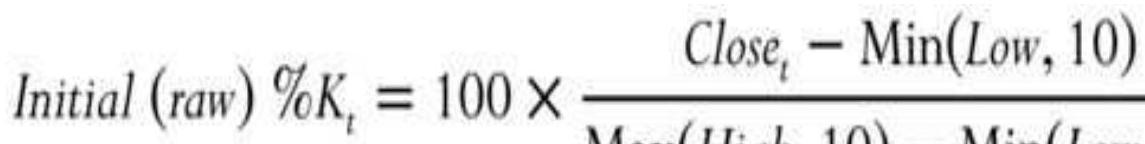

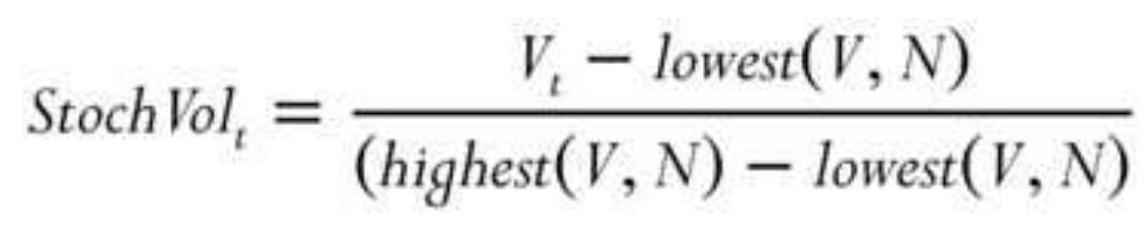

TABLE 9.1 Excel example of 10-day stochastic for Hewlett-Packard (HPQ).

TABLE 9.2 A/D Oscillator and trading signals, soybeans, January 25,...

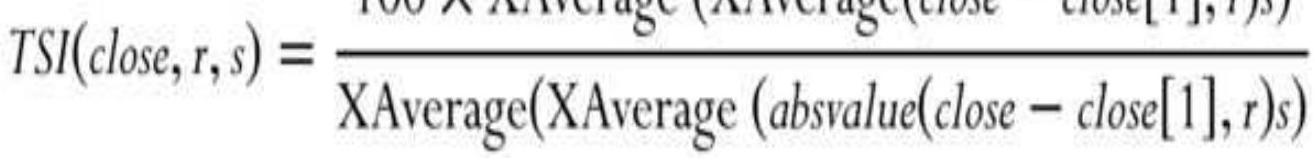

TABLE 9.3 TSI calculations using two 20-day smoothing periods.

TABLE 9.4 Equations for velocity and acceleration.

Chapter 10

TABLE 10.1 Average monthly cash wheat prices.

TABLE 10.2 Monthly returns based on wheat cash prices. Average and m...

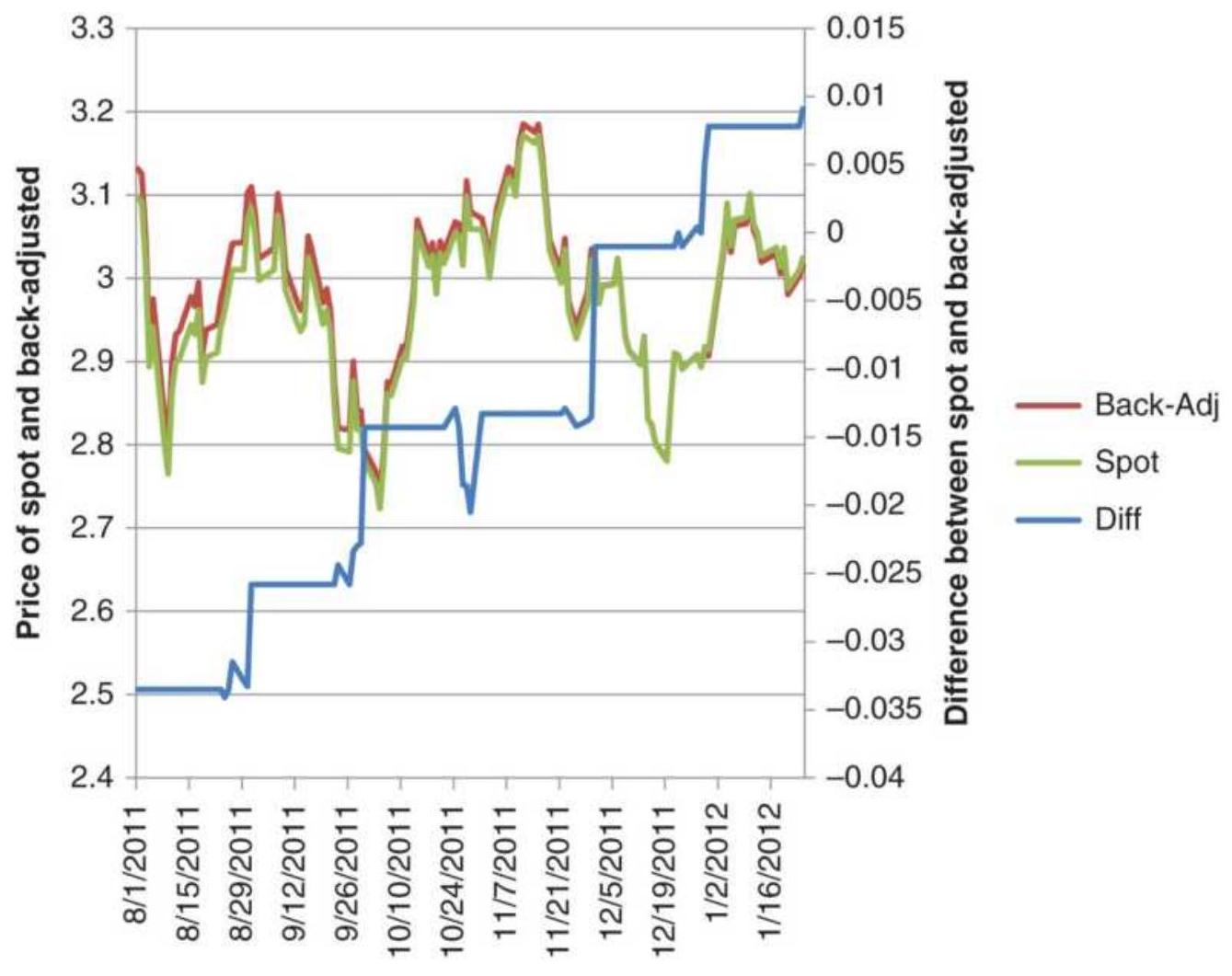

TABLE 10.3 (Top) Back-adjusted wheat futures prices, 1978-1985....

TABLE 10.4 Original cash wheat prices (top) and returns adjusted by...

TABLE 10.5 Wheat prices expressed as link relatives.

TABLE 10.6 Calculations for the moving average method.

TABLE 10.7 Weather-related events in the southern and northern hemispheres.

TABLE 10.8 Corn cash prices with seasonal buy and sell signals.

TABLE 10.9 Results of Bernstein's study, ending 1985.

TABLE 10.10 Seasonal calendar.

TABLE 10.11 Merrill's holiday results.

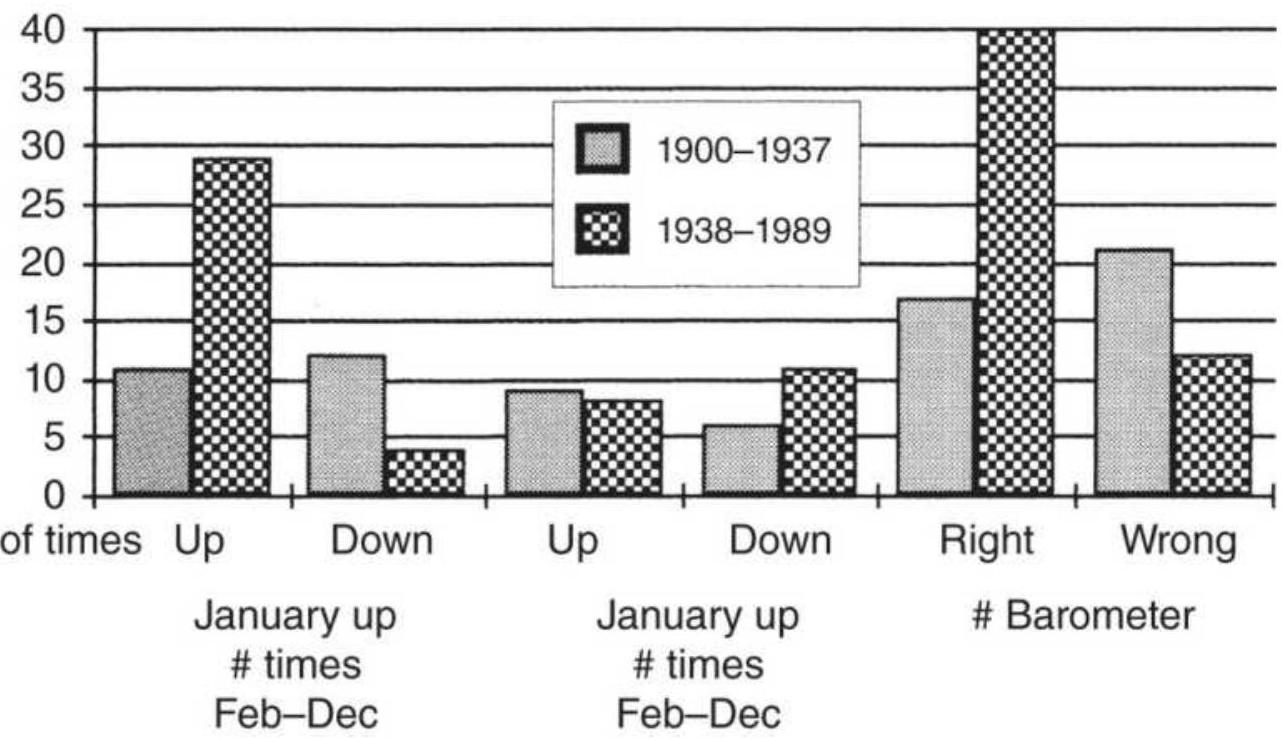

TABLE 10.12 The January barometer patterns, \(1938-1989\).

\section*{Chapter 11}

TABLE 11.1 Dates of the peaks and valleys in Figure 11.2.

TABLE 11.2 Dates of the combined observed and estimated peaks and valleys.

TABLE 11.3 Election year analysis for years in which the stock market...

TABLE 11.4 Corn setup for Solver solution.

Chapter 12

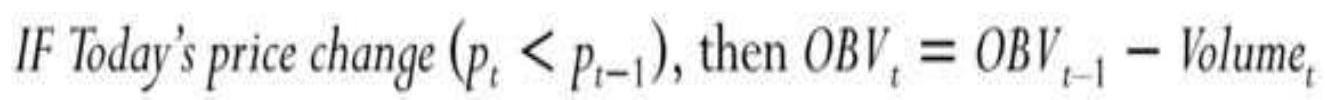

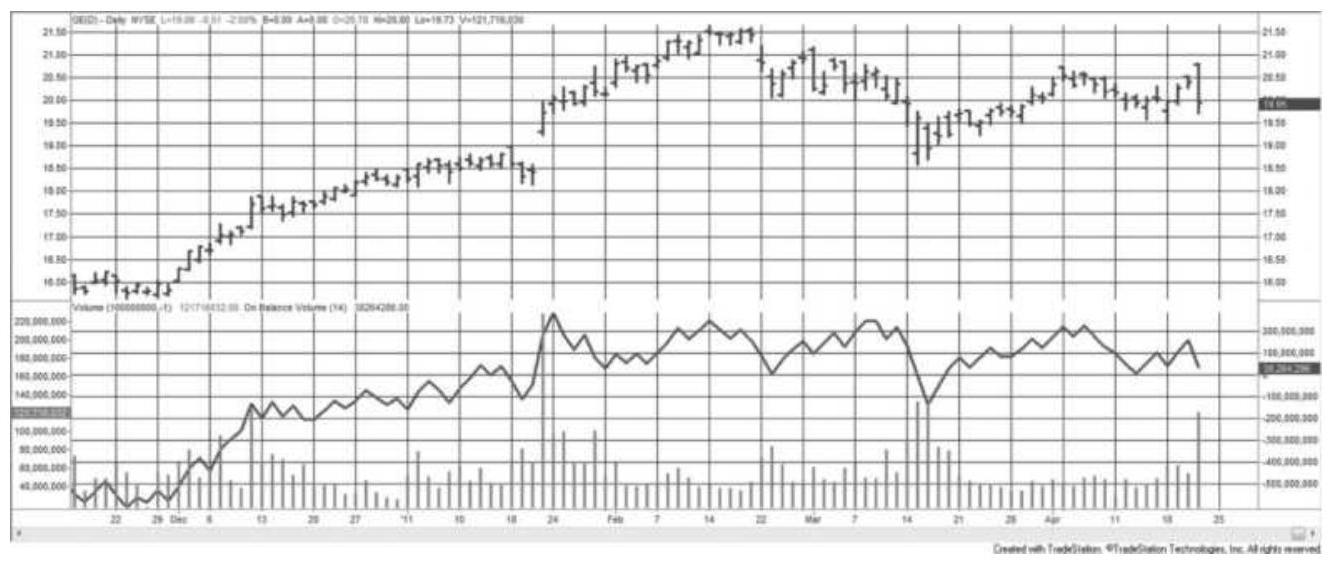

\section*{TABLE 12.1 Calculating On-Balance Volume. \\ TABLE 12.2 Interpreting On-Balance Volume.}

Chapter 13

TABLE 13.1 Major crossrates as of March 14,

2018.

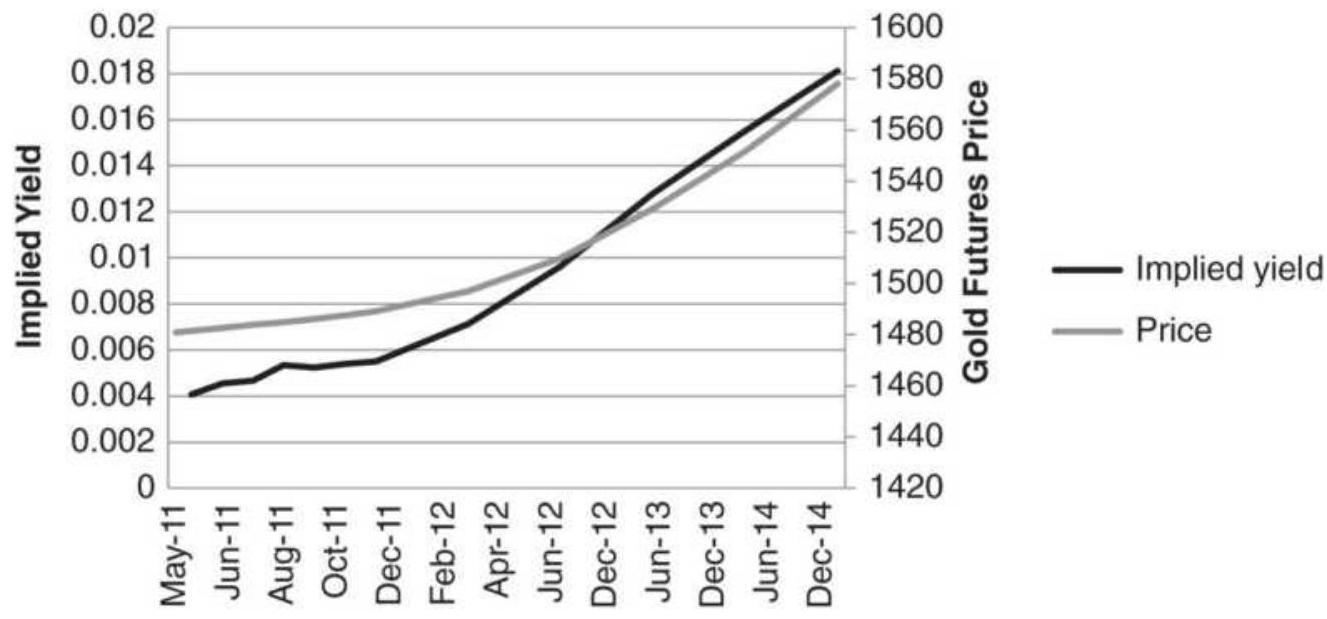

TABLE 13.2 Gold prices and delivery months, implied yield, and total days.

TABLE 13.3 Key values of crossrates and yields, March 31, 2009.

Chapter 14

TABLE 14.1 Results of upward breakout of U.S. bonds futures (left) and...

TABLE 14.2 Results of downward breakout of U.S. bonds (left) and S\&P...

TABLE 14.3 Size and position of the planets and Earth's moon.

TABLE 14.4 Solar eclipses, 2010-2020.

TABLE 14.5 Lunar eclipses, 2011-2020.

TABLE 14.6 Dates on which Jupiter and Saturn go retrograde \((\underline{R})\) and direct \((\underline{D}) \ldots\).

TABLE 14.7 New moon and full moon

occurrences, 2018-2021.

\section*{Chapter 15}

TABLE 15.1 Merrill's hourly stock market patterns.

TABLE 15.2 Time pattern for 30-year bond futures, \(1998-2017\).

TABLE 15.3 S\&P time intervals for the three periods shown in Figure 15.4.

TABLE 15.4 Detail for crude oil time patterns.

TABLE 15.5 Results of the gap test using emini S\&P prices from 8:30...

TABLE 15.6 Summary of S\&P gap test results.

TABLE 15.7 U.S. 30-year bond futures, 19992017.

TABLE 15.8 Crude oil futures, September 2014-April 2017.

TABLE 15.9 Euro currency futures, April 2001April 2017.

```

TABLE 15.10 Amazon (AMZN) gap analysis,

2000-April 2018.

```

TABLE 15.11 General Electric (GE) gap analysis, 2000-April 2018.

TABLE 15.12 Micron (MU) gap analysis, 2000April 2018.

TABLE 15.13 Boeing (BA) gap analysis, 2000April 2018.

TABLE 15.14 Tesla (TSLA) gap analysis, 2000April 2018.

TABLE 15.15 Average upward gaps, pullbacks, and close for 275 liquid stocks.

TABLE 15.16 Average downward gaps, pullbacks, and close for 275 liquid stocks.

TABLE 15.17 Selected stocks, data from 20122017.

TABLE 15.18 Weekend results conditioned on the previous week's patterns.

TABLE 15.19 Reversal patterns showing the results of 2000-2011 on...

TABLE 15.20 Taylor's book, November 1975 Soybeans

Chapter 16

\section*{TABLE 16.1 Price ranges for S\&P futures.}

TABLE 16.2 Average high-low range of selected stocks, by year.

TABLE 16.3 Average dollar range of selected futures markets, by year.

\section*{TABLE 16.4 Opening range breakout, \% profitable trades.}

Chapter 17

\section*{TABLE 17.1 Comparative returns of four adaptive systems applied to f...}

Chapter 18

TABLE 18.1 Volatility distribution for SPY.

TABLE 18.2 Probability of annualized volatility using a frequency distribution.

Chapter 20

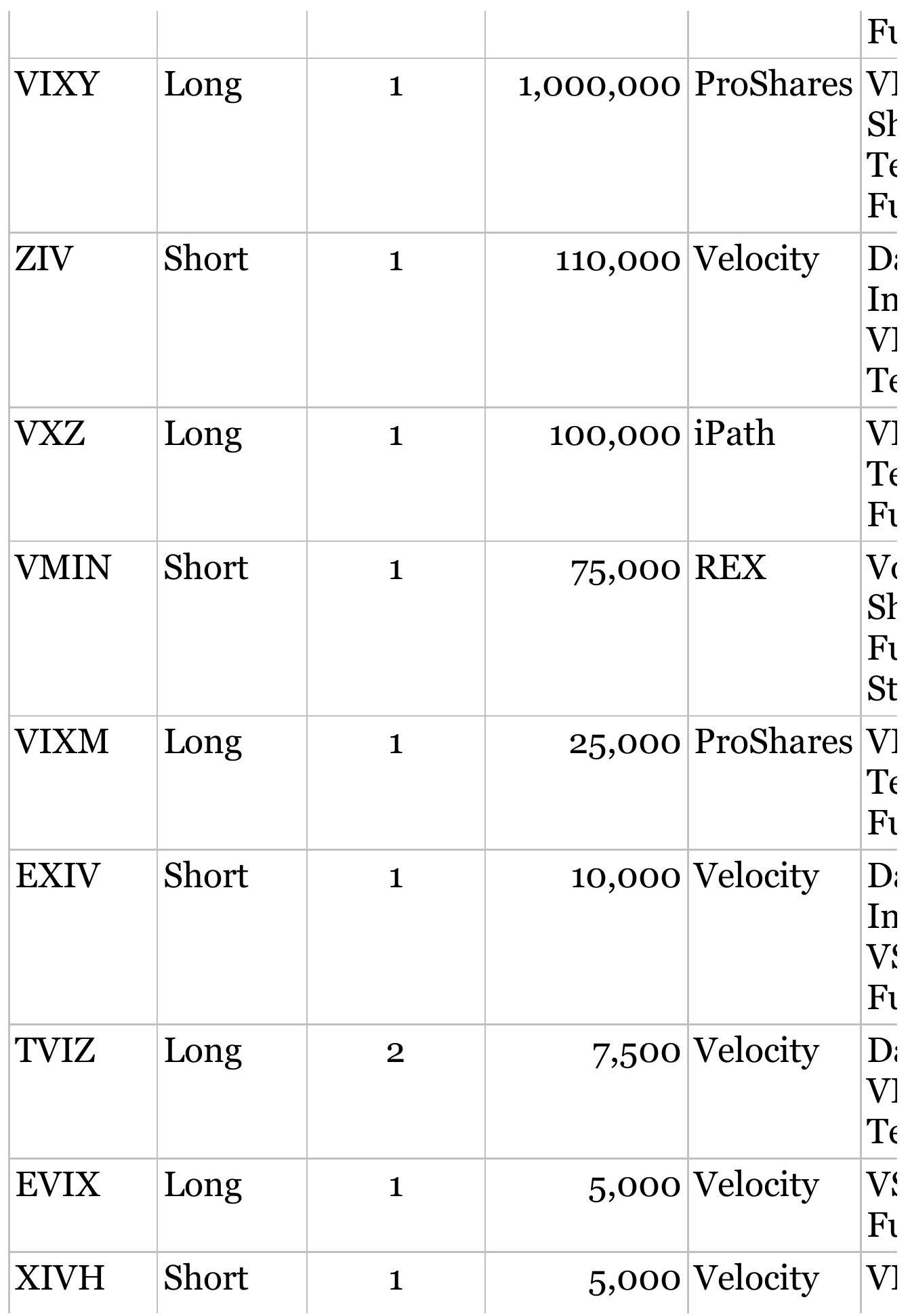

\section*{TABLE 20.1 VIX ETFs and ETNs, daily volume greater than 5,000, as of May 2018.}

\section*{TABLE 20.2 Predicting the trading range of the S\&P 500.}

TABLE 20.3 Frequency of price moves following a known pattern, including...

TABLE 20.4 Frequency of price moves following the completeness of the ch...

TABLE 20.5 Conditional probabilities of a price change given the complet...

TABLE 20.6 Functional description of the genes in chromosomes 1 and 2.

TABLE 20.7 Two training cases (initial state). TABLE 20.8 Two training cases (after mutated weighting factors).

Chapter 21

TABLE 21.1 Optimization report for a simple moving average test of QQQ....

TABLE 21.2 Statistics for the moving average and linear regression strategies.

TABLE 21.3 Reversing a losing strategy.

TABLE 21.4 Results of moving average

optimizations on futures, 1990-2017.

TABLE 21.5 Crossover tests, nearest futures,

1990-2017.

TABLE 21.6 Testing on 2007-2011 and projecting on 2012-2017.

TABLE 21.7 Test 1: Optimizing crude oil,

January 2, 1990-August 3, 1990.*

TABLE 21.8 Test 2: Optimizing crude oil,

January 2, 1990-January 16, 1991.*

TABLE 21.9 Test 3: Optimizing crude oil,

January 2, 1990-March 28, 1991.

\section*{Chapter 22}

TABLE 22.1 Summary of price shocks.

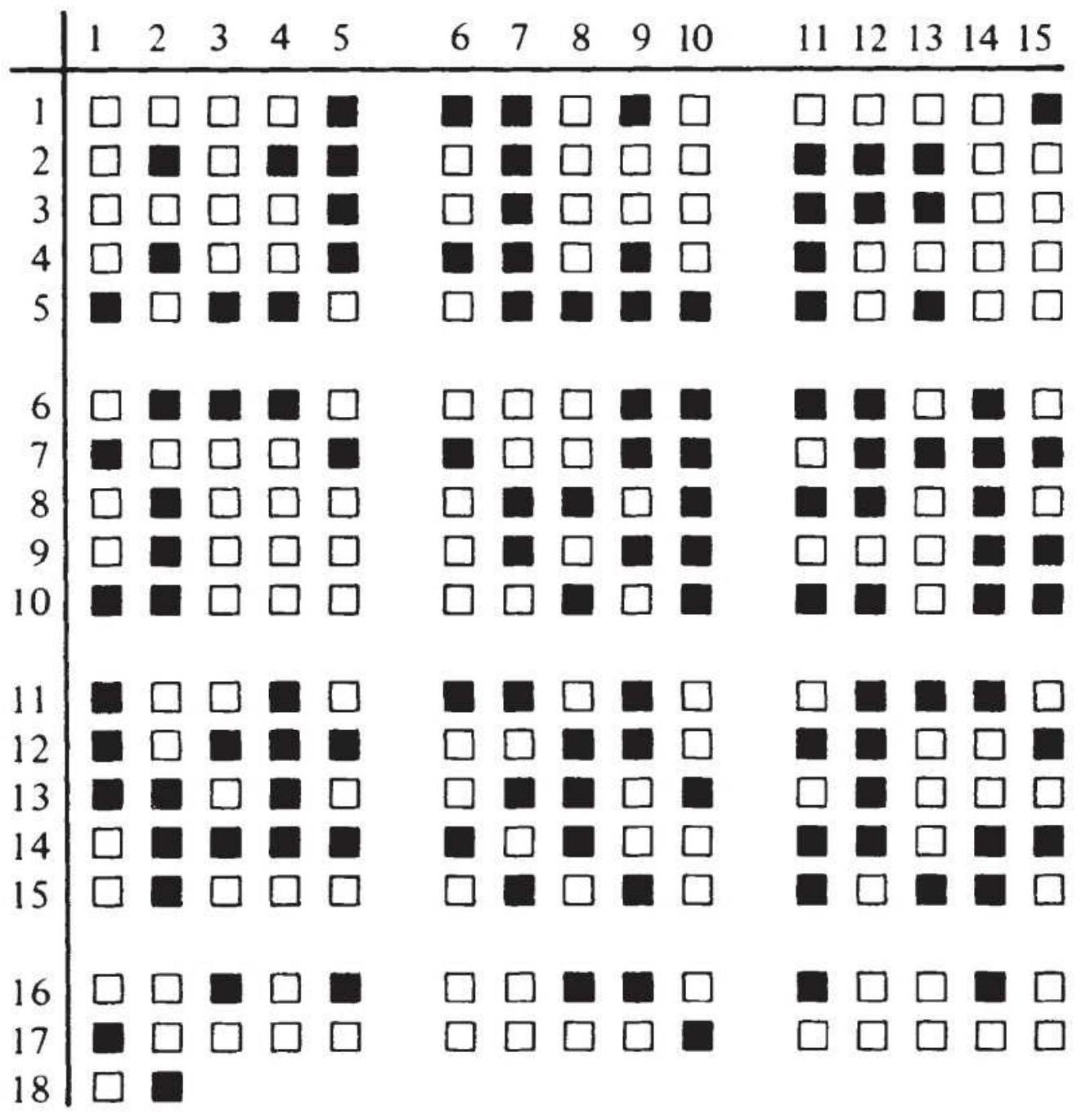

TABLE 22.2 Simulated runs.

TABLE 22.3 Frequency of up and down runs for selected markets, \(3 / 17 / 1998 \ldots\)

TABLE 22.4 Percent of trading days systems holding the same positions.

TABLE 22.5 SPY Moving average correlations, 1998-June 2018.

TABLE 22.6 SPY Similarity of positions using different moving average c...

TABLE 22.7 Correlations for four trend methods using a 20-day calculati...

TABLE 22.8 Correlations for four trend methods using an 80-day calculat...

Chapter 23

\section*{TABLE 23.1 A spreadsheet to calculate the Sharpe ratio.}

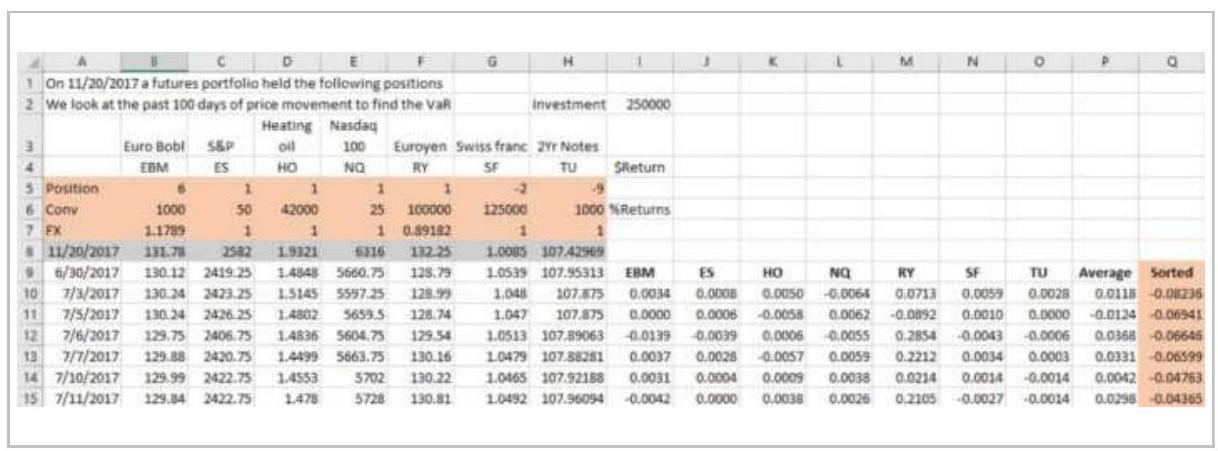

TABLE 23.2 VaR calculations on a spreadsheet. TABLE 23.3 Position sizing in futures.

TABLE 23.4 Position sizing for stocks using ATR.

TABLE 23.5 Stock allocation using annualized volatility.

TABLE 23.6 Position sizing using price.

TABLE 23.7 Stop-loss test of 30-year bonds, 2000-2011, applied to a movi...

TABLE 23.8 Results of averaging into a new position based on an 80-day m...

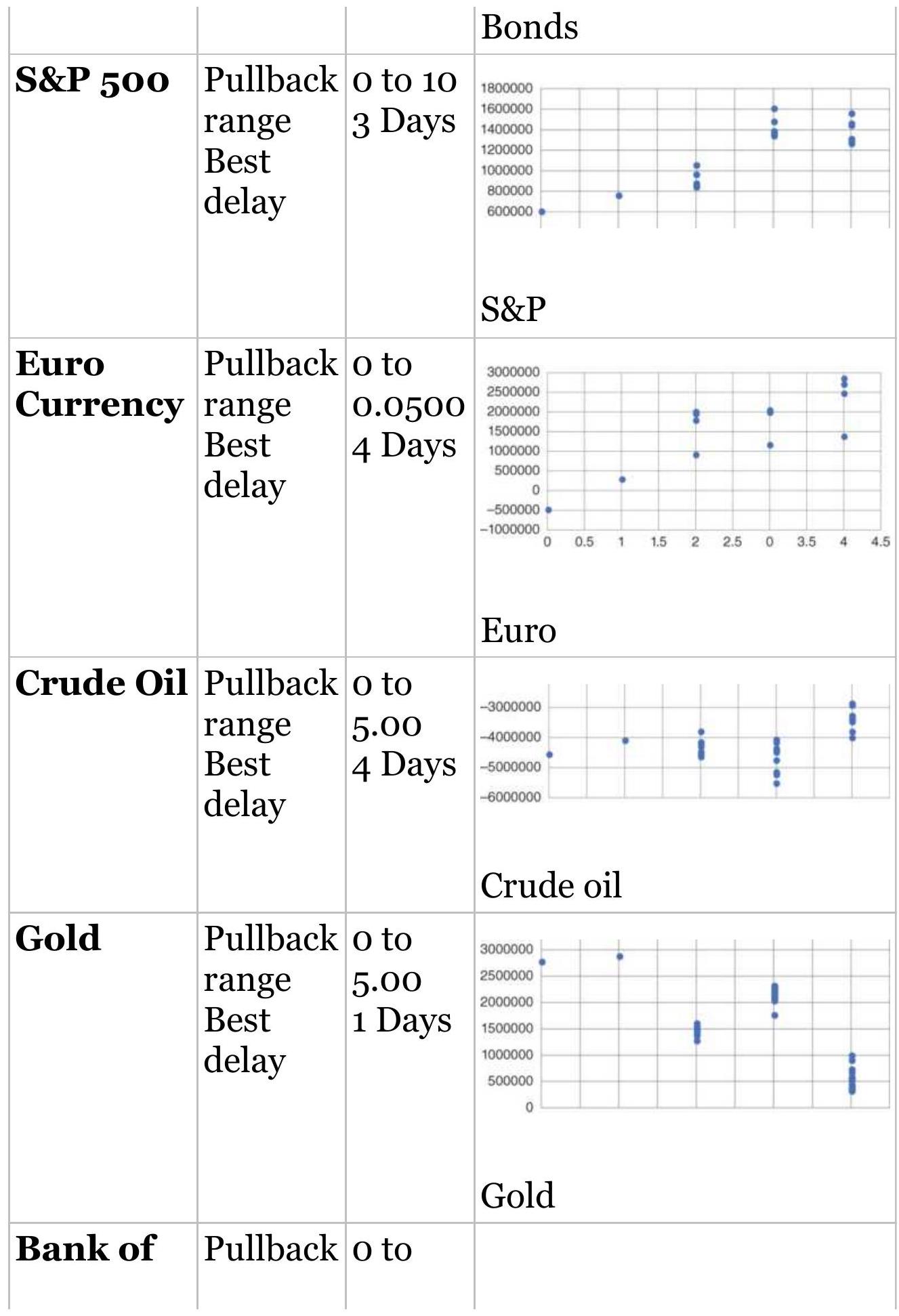

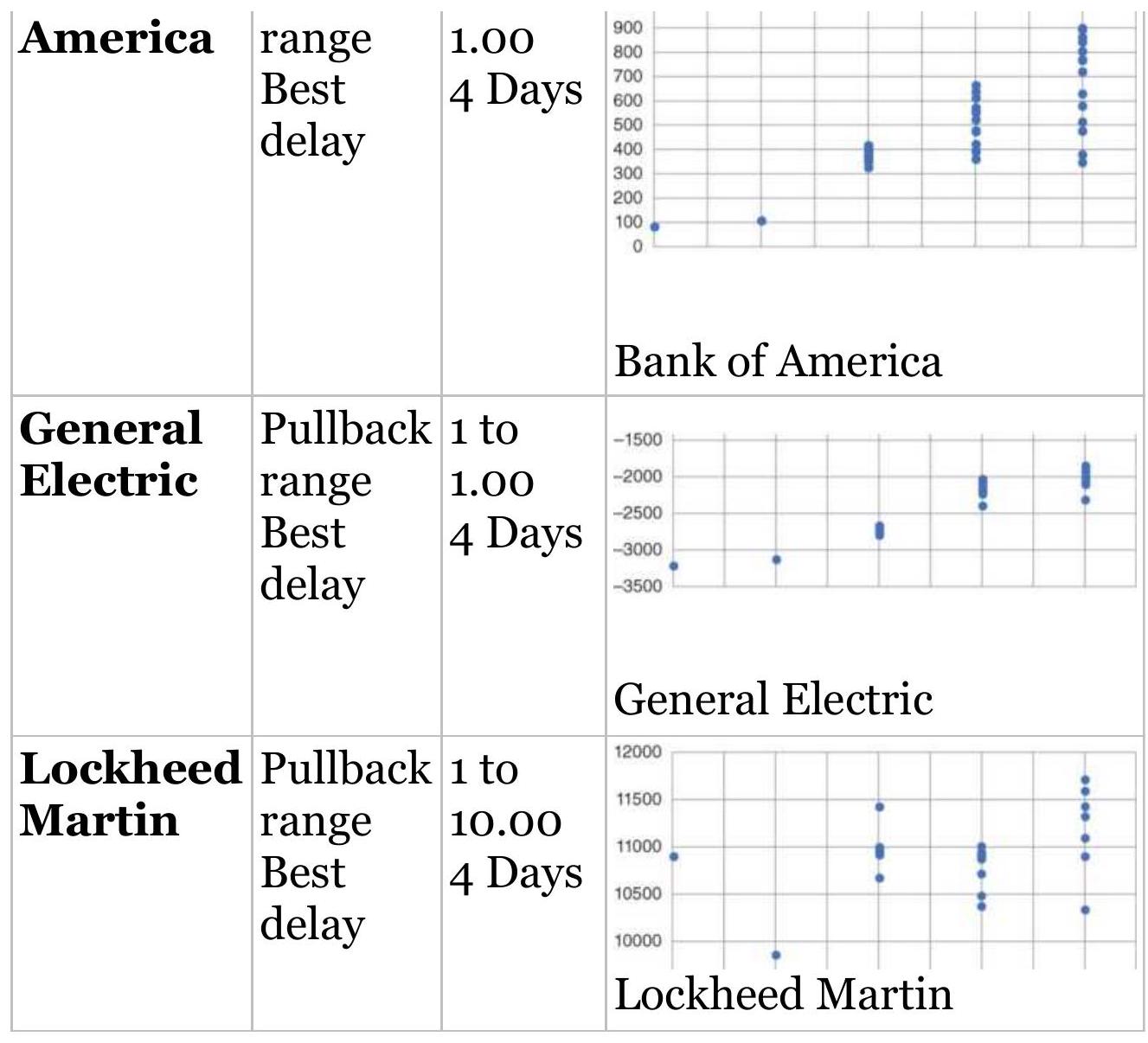

TABLE 23.9 Size of pullback and best delay for each market when waiting ...

TABLE 23.10 Results of timing the entry using an 8-day RSI, an 80-day mo...

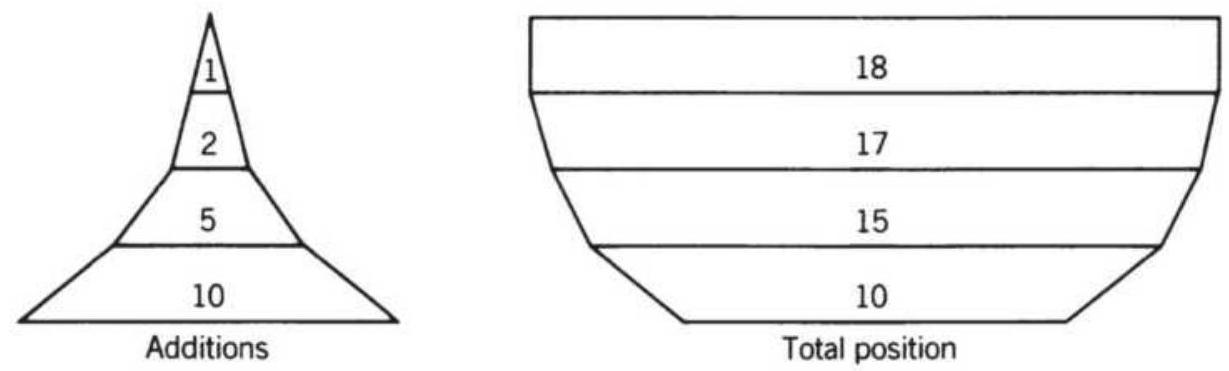

\section*{TABLE 23.11 Building a position on new high profits.}

TABLE 23.12 Adding on new highs, long-only.

TABLE 23.13 Averaging down, 1998-2008.

TABLE 23.14 Averaging down, 2009-2018.

TABLE 23.15 Examples of risk of ruin with

unequal wins and losses.

TABLE 23.16 Probability of a loss after N trades, relative to a system ...

TABLE 23.17 The probability of a specific number of losses.

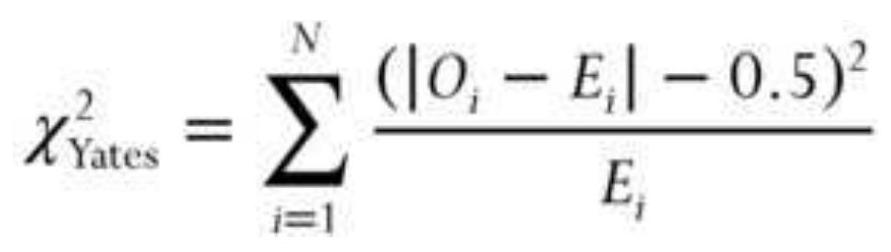

TABLE 23.18 Distribution of \(\chi^{\underline{2}}\).

TABLE 23.19 Results from Analysis of Runs

Chapter 24

TABLE 24.1 Portfolio evaluation of stocks and bonds using a spreadsheet.

\section*{TABLE 24.2 Solver Setup.}

TABLE 24.3 Returns expressed as NAVs.

TABLE 24.4 Generating random numbers to create weighting factors.

TABLE 24.5 Normalized weighting factors for each portfolio in the pool.

TABLE 24.6 Evaluating portfolio return and

\(\underline{\text { risk. }}\)

\section*{TABLE 24.7 Example of volatility stabilization.}

\section*{List of I|lustrations}

Chapter 1

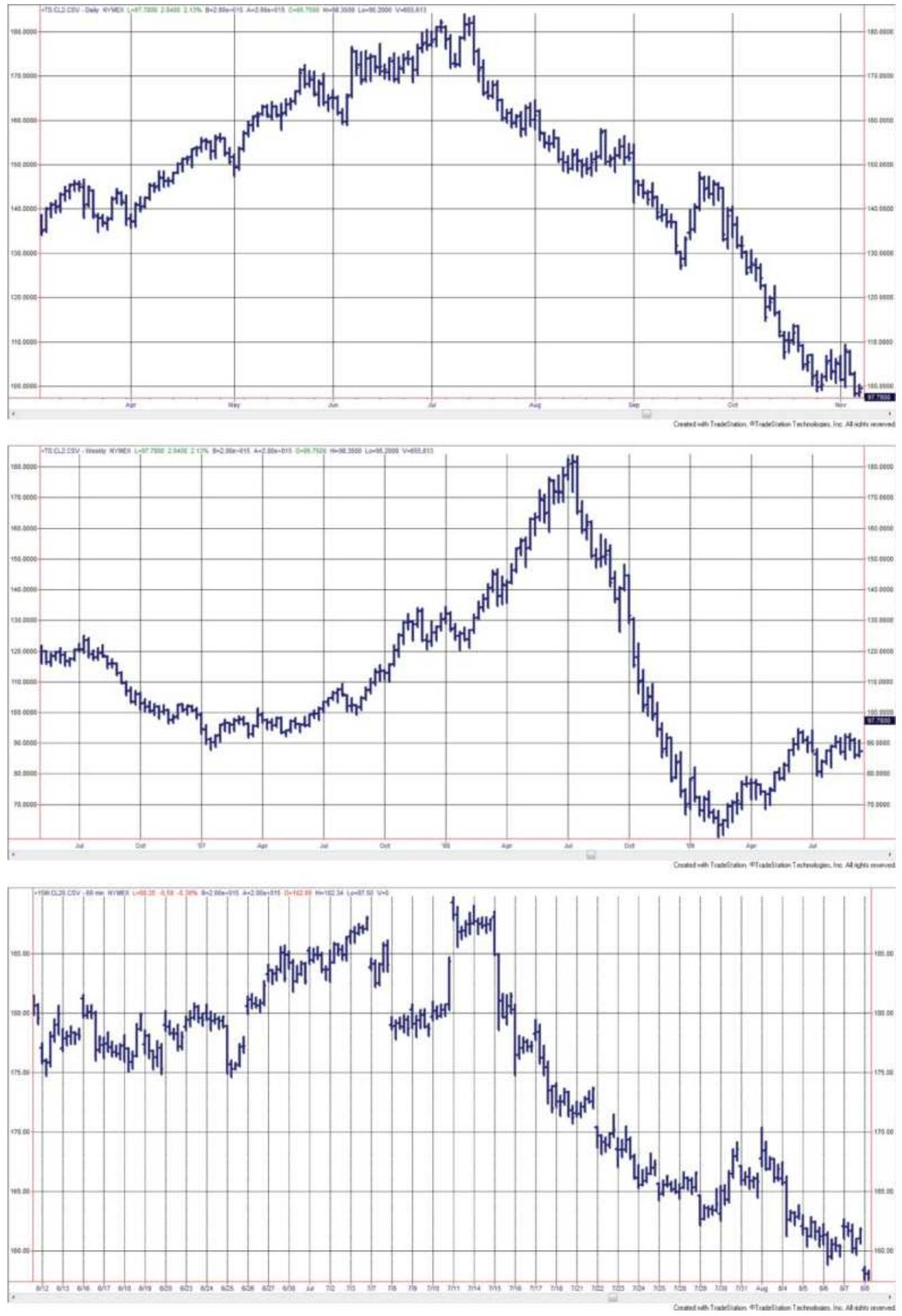

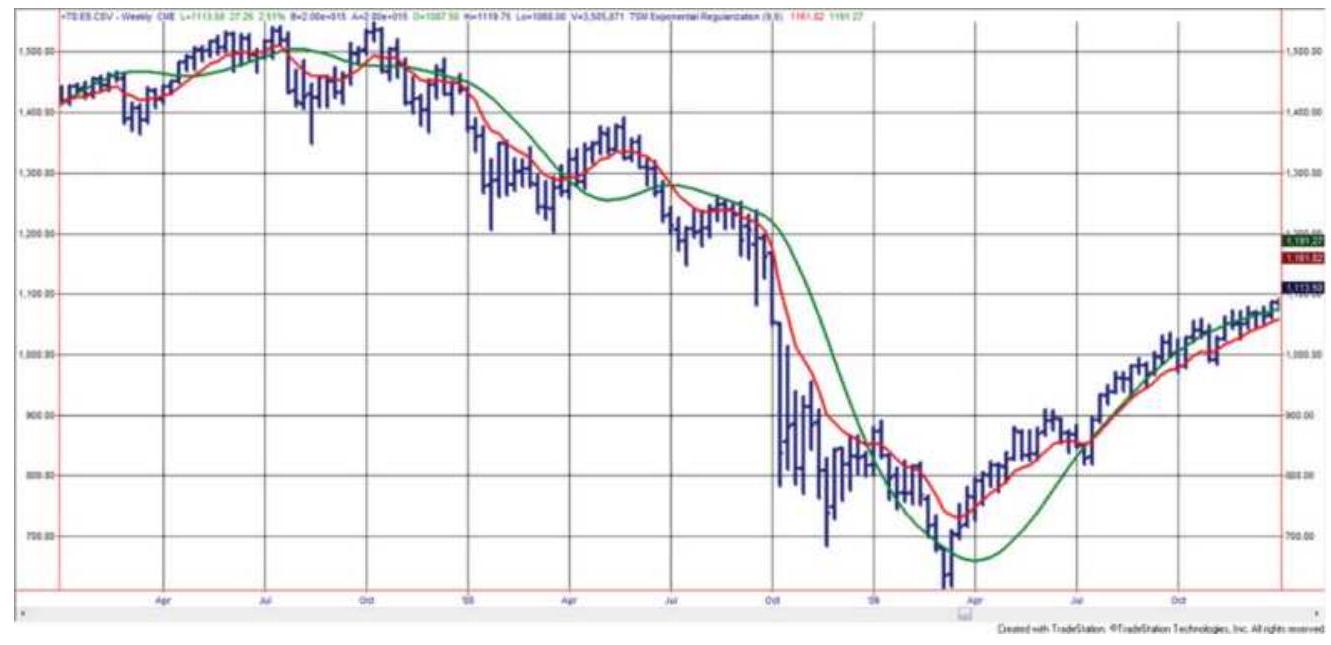

FIGURE 1.1 Crude oil prices weekly chart with July 2008 in the center (top); \(\ldots\)

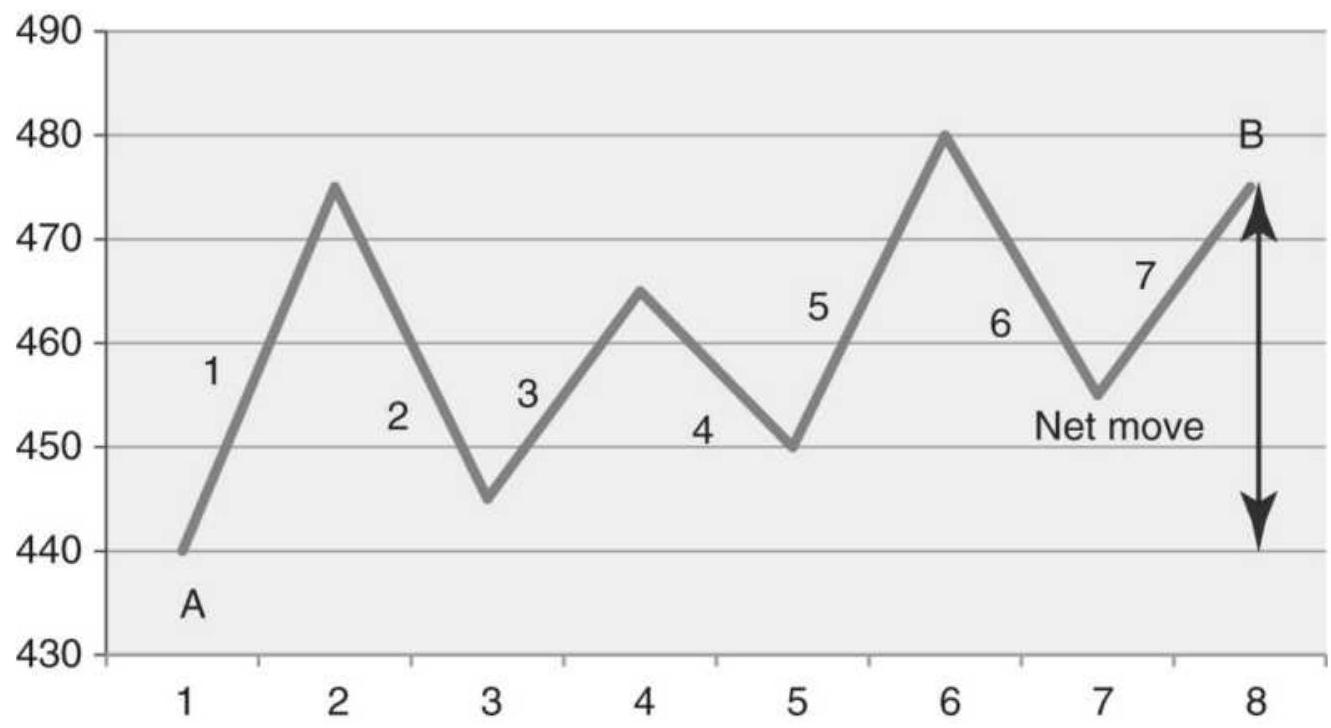

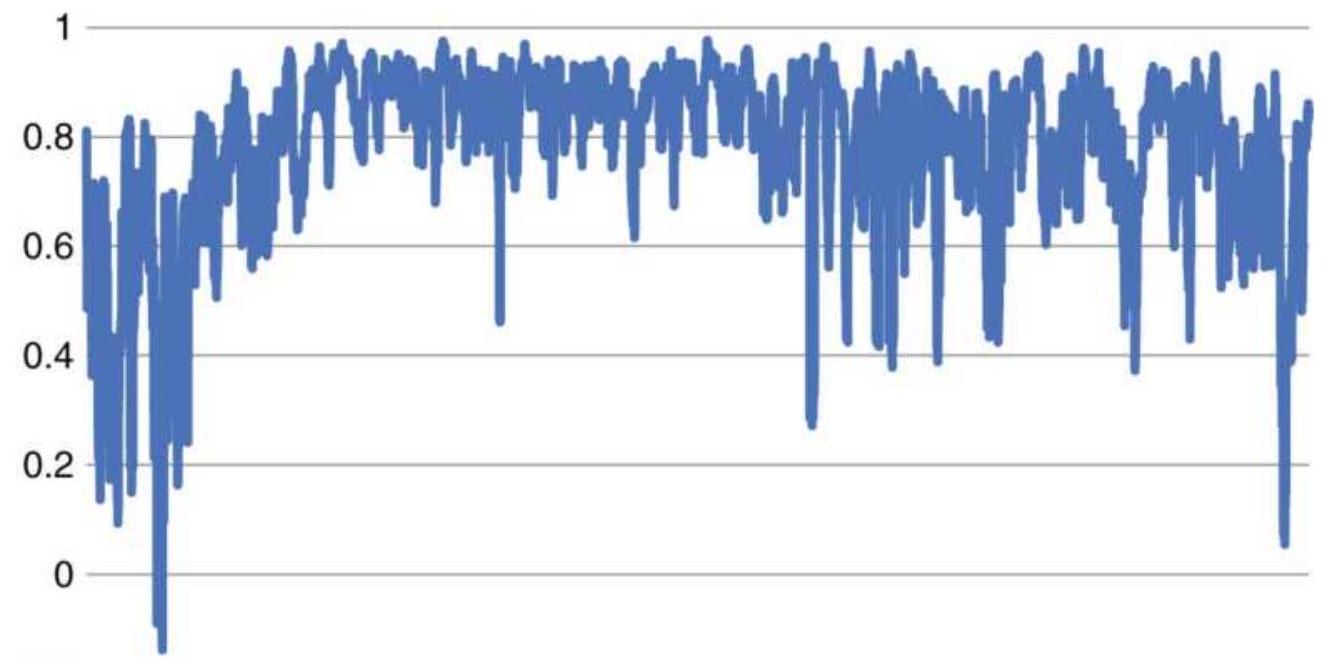

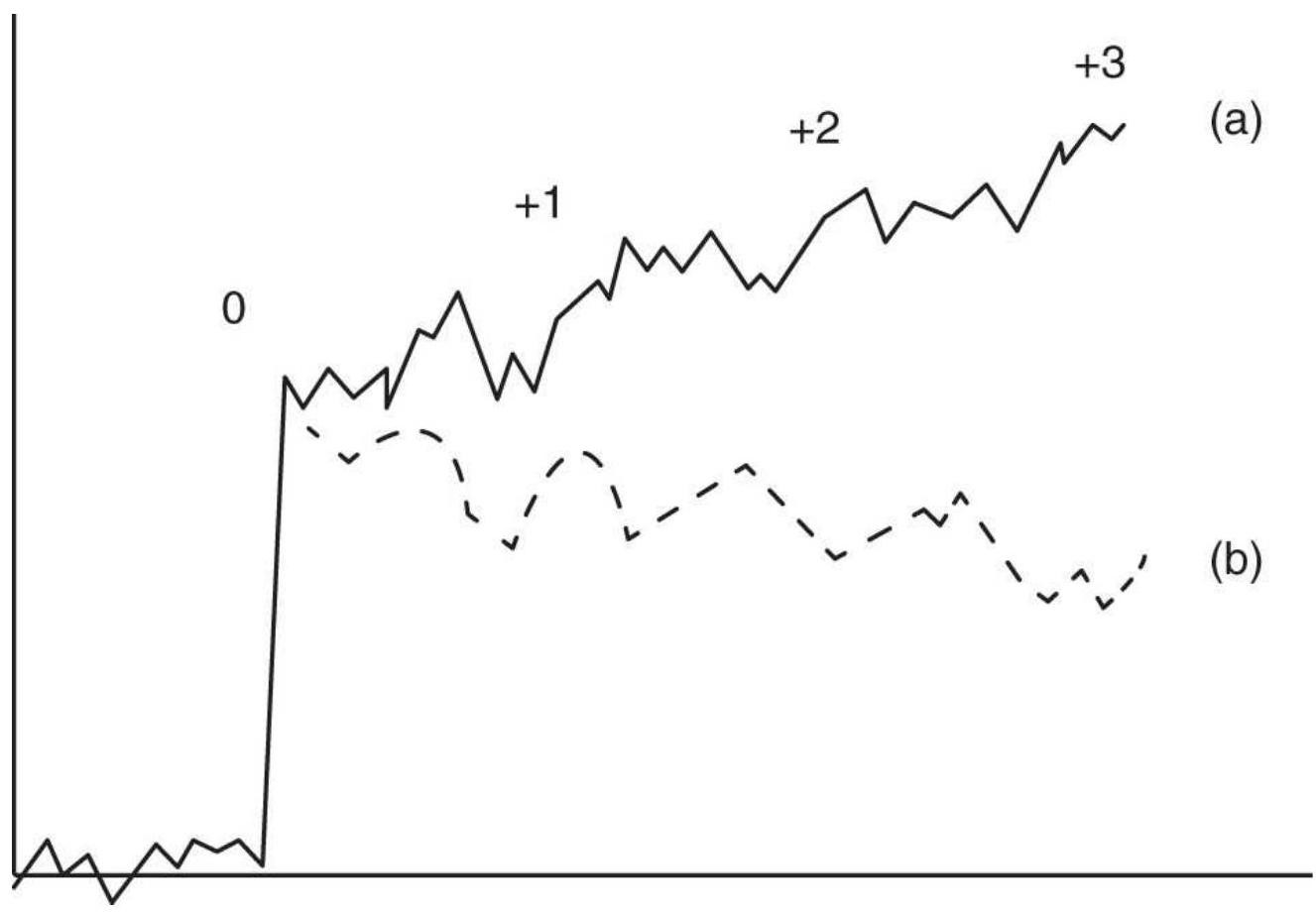

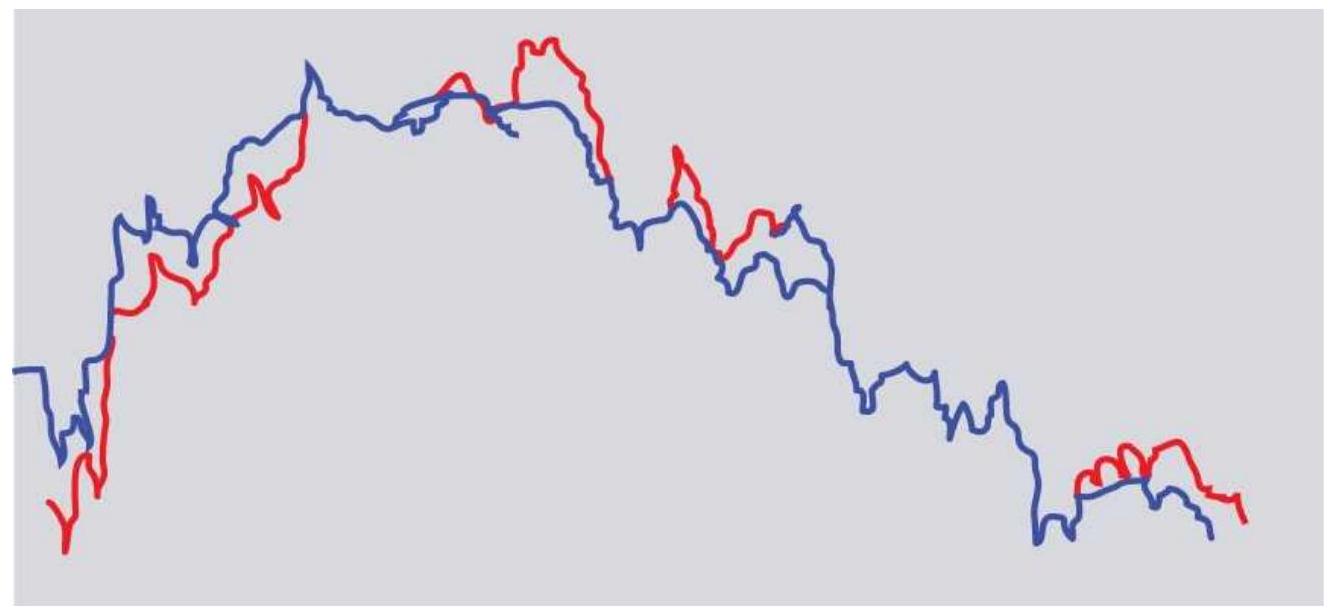

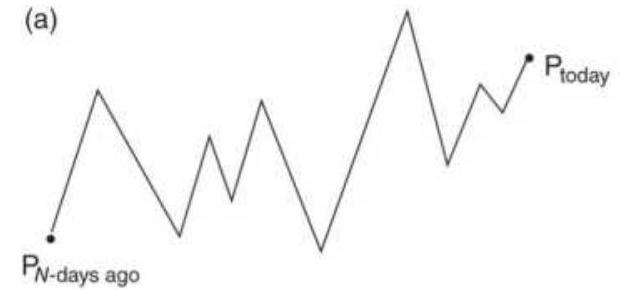

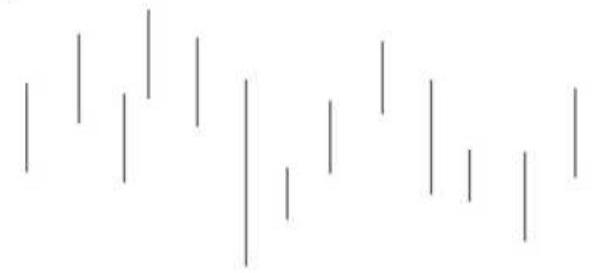

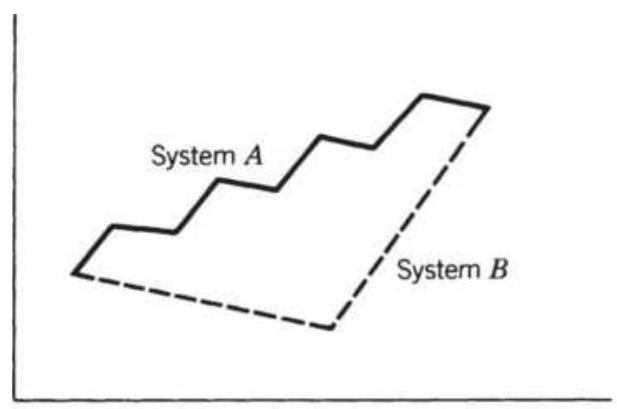

FIGURE 1.2 Basic measurement of noise using the efficiency ratio (also calle...

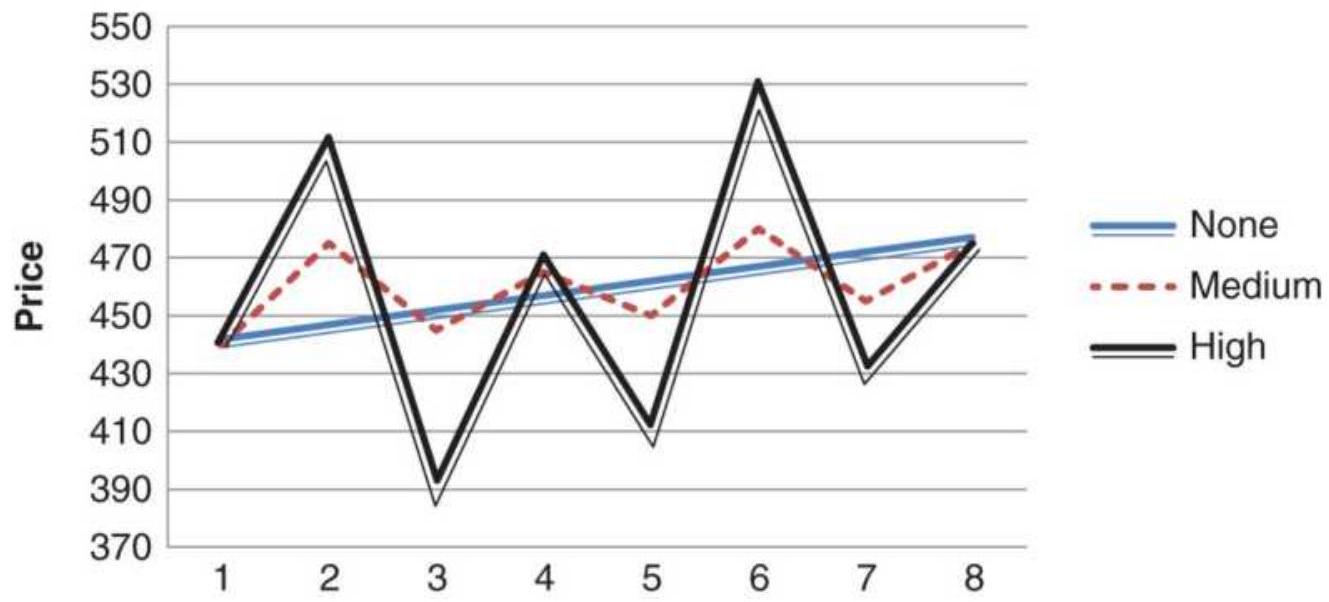

FIGURE 1.3 Three different price patterns all begin and end at the same poin...

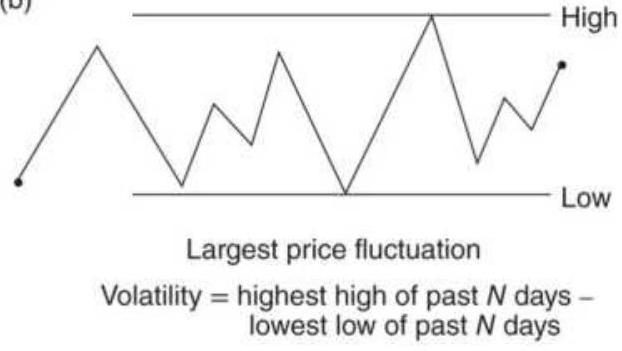

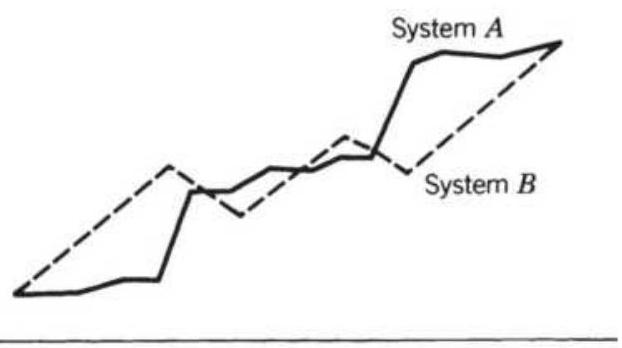

FIGURE 1.4 By changing the net price move we can distinguish between noise a...

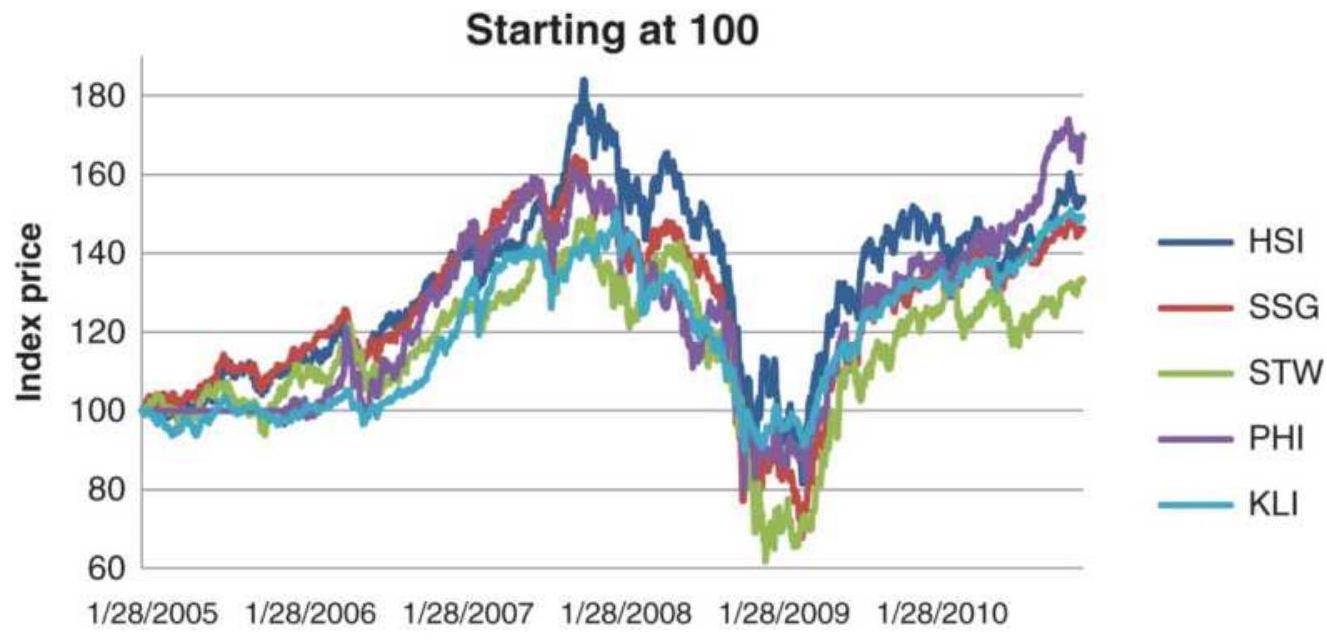

FIGURE 1.5 Relative change in maturity of world markets by region

FIGURE 1.6 Ranking of Asian Equity Index Markets, 2005-2010.

Chapter 2

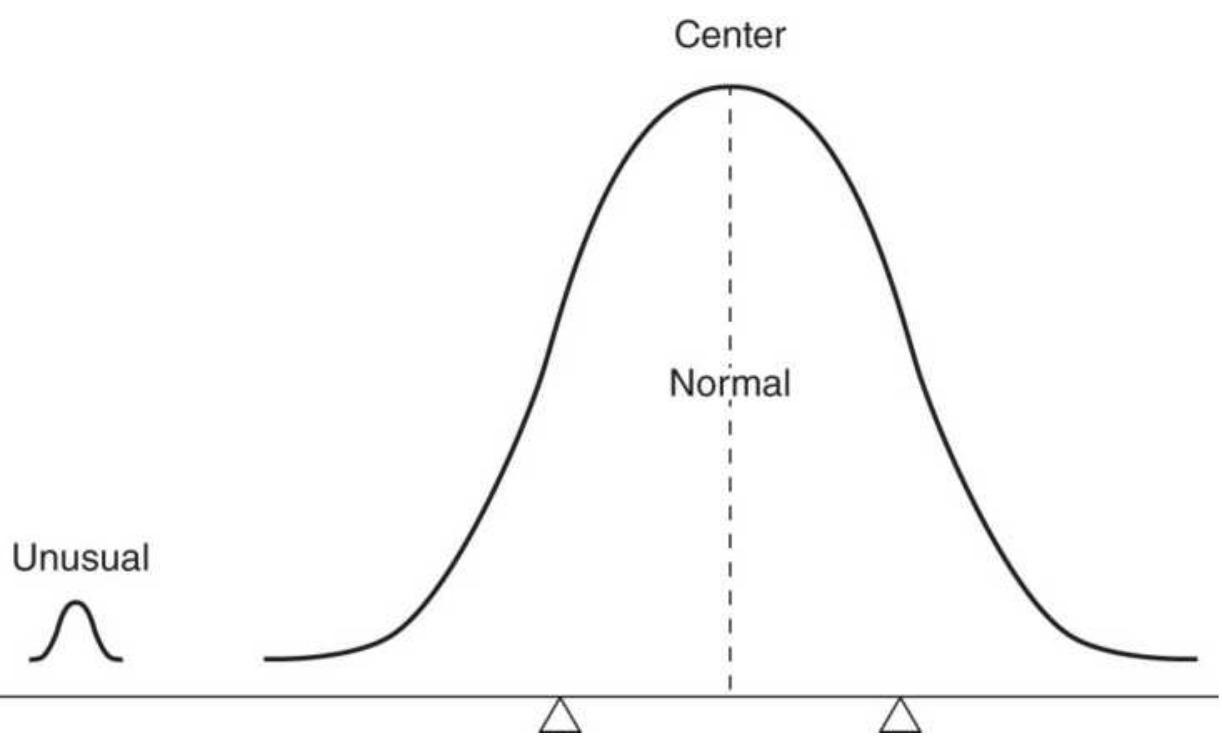

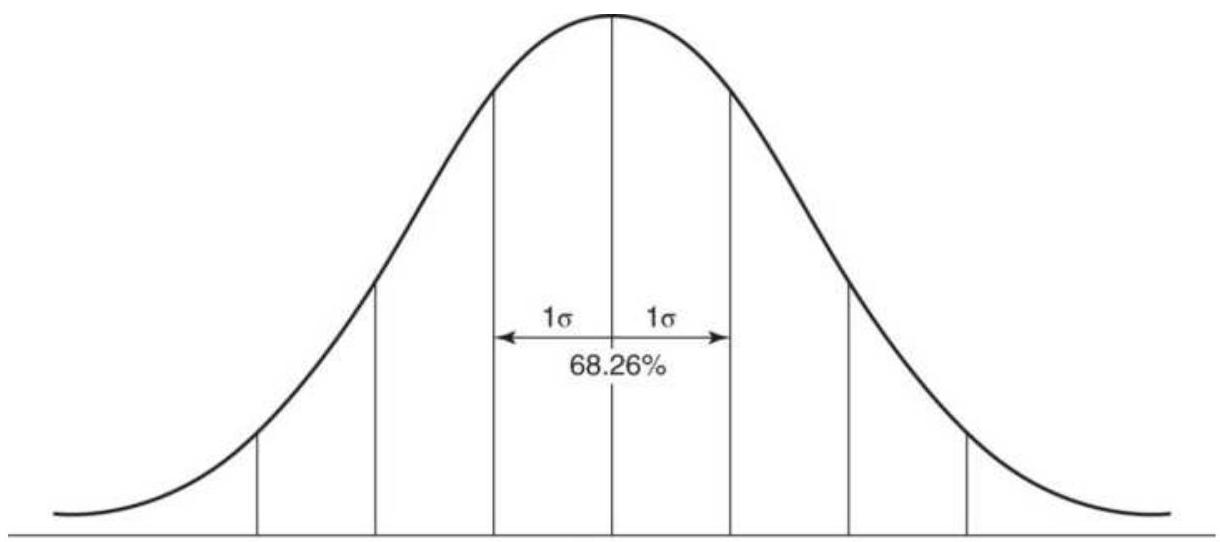

FIGURE 2.1 The Law of Averages. The normal cases overwhelm the unusual ones....

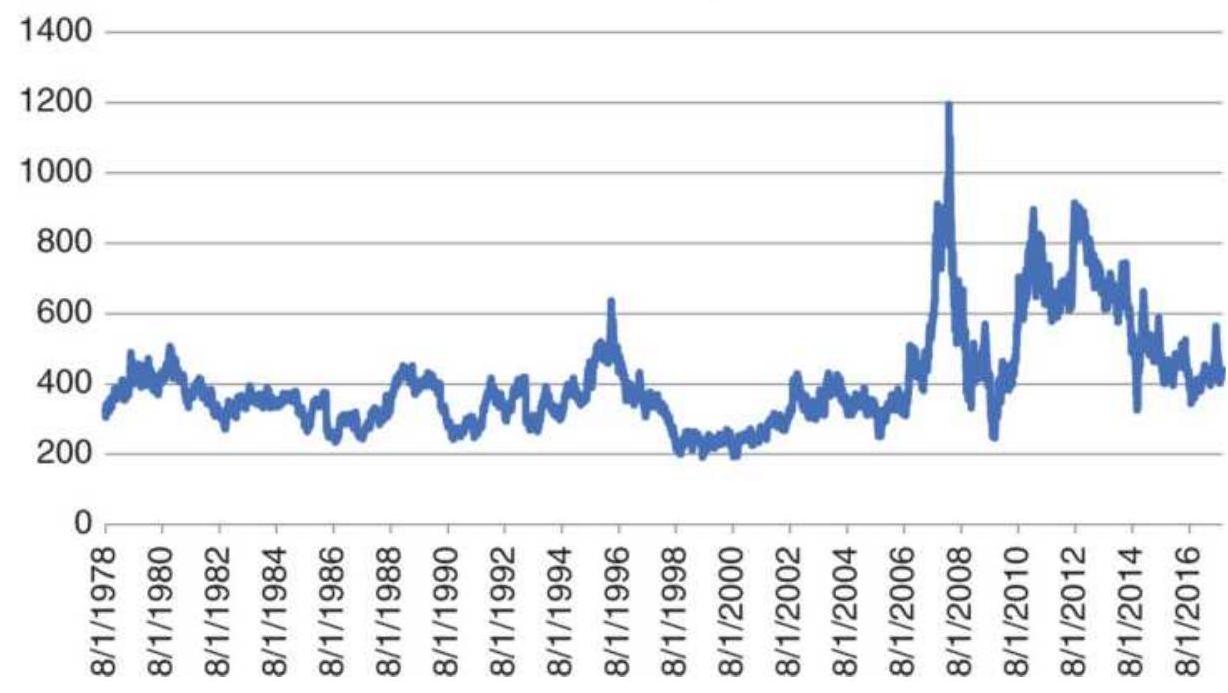

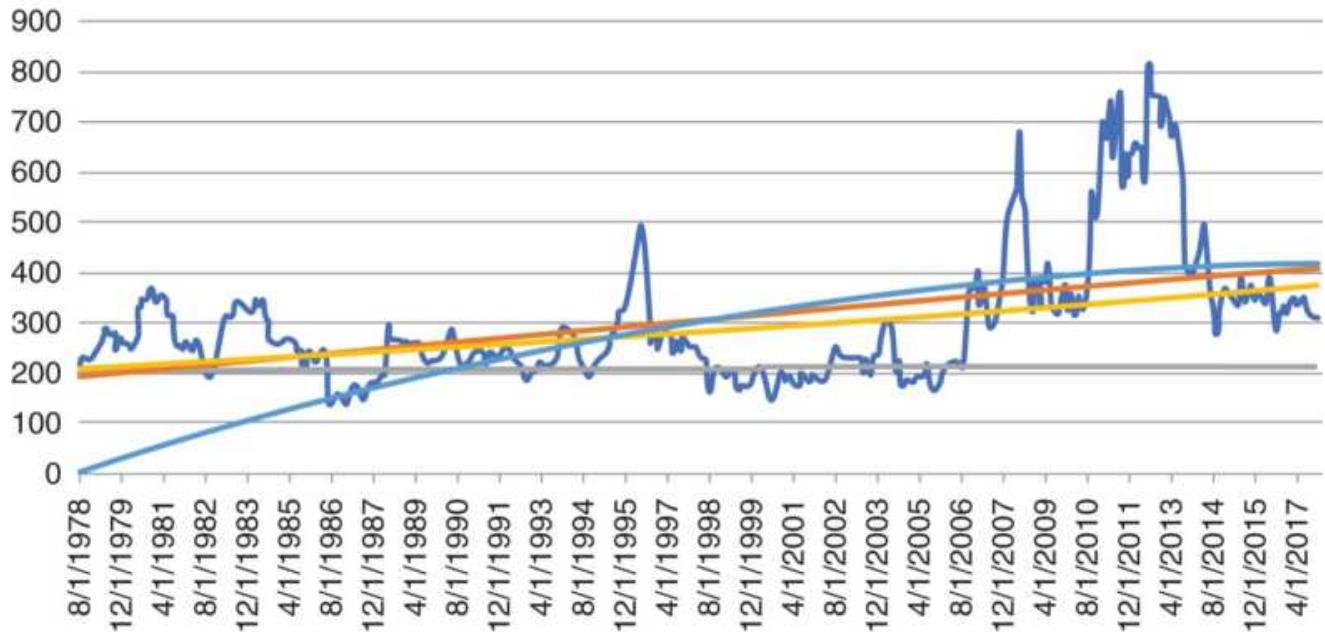

FIGURE 2.2 Wheat prices, 1978-2017.

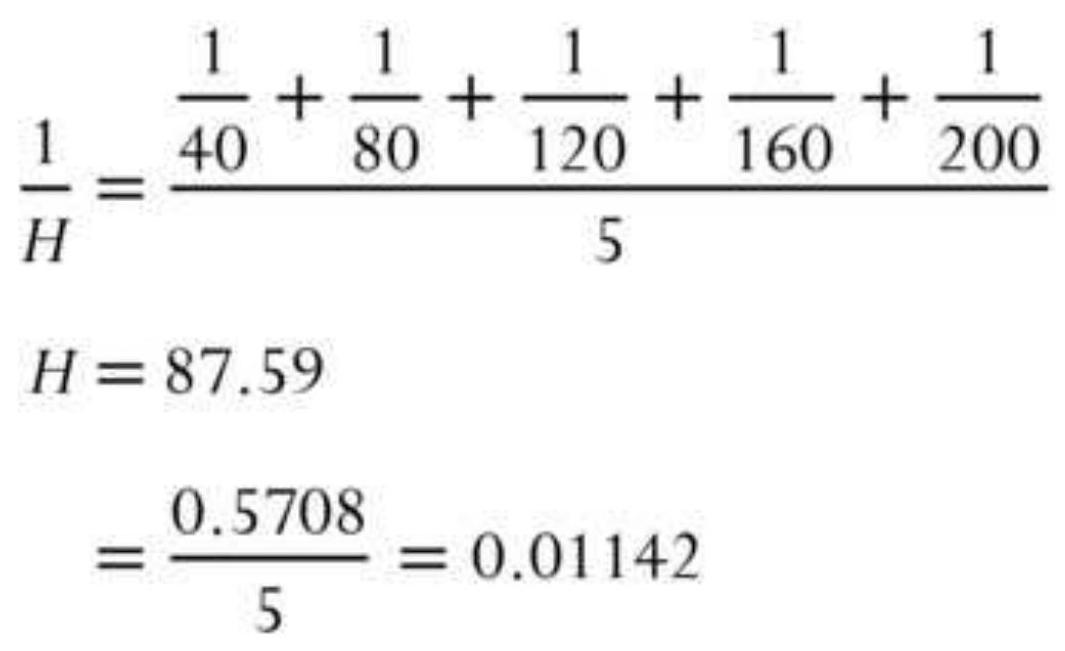

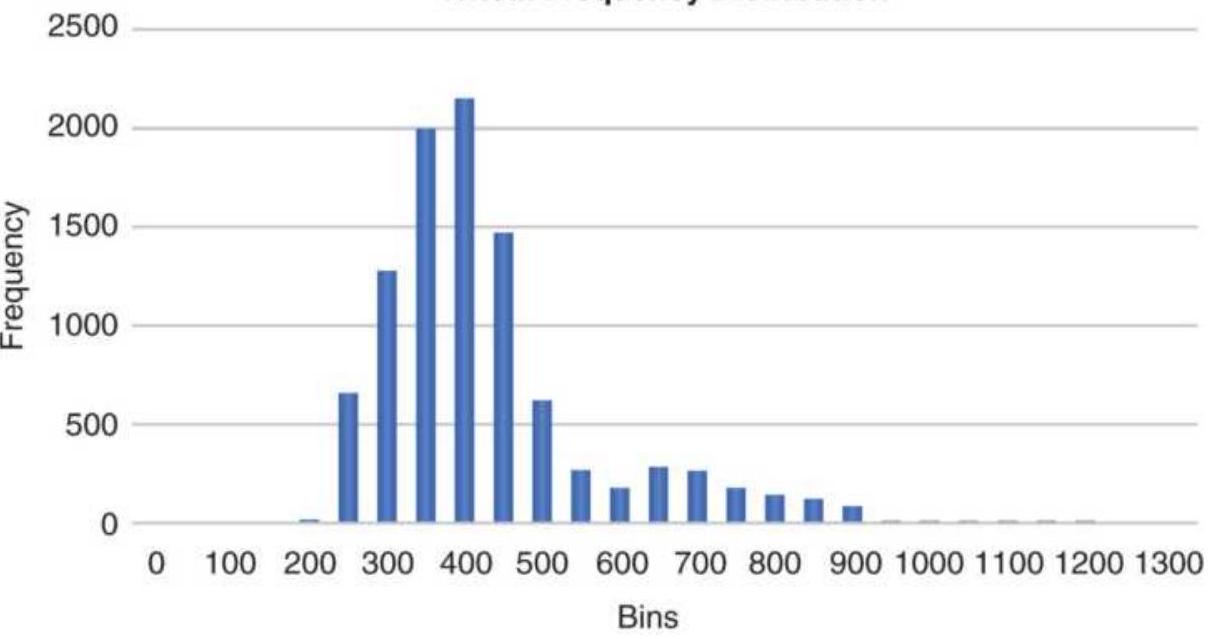

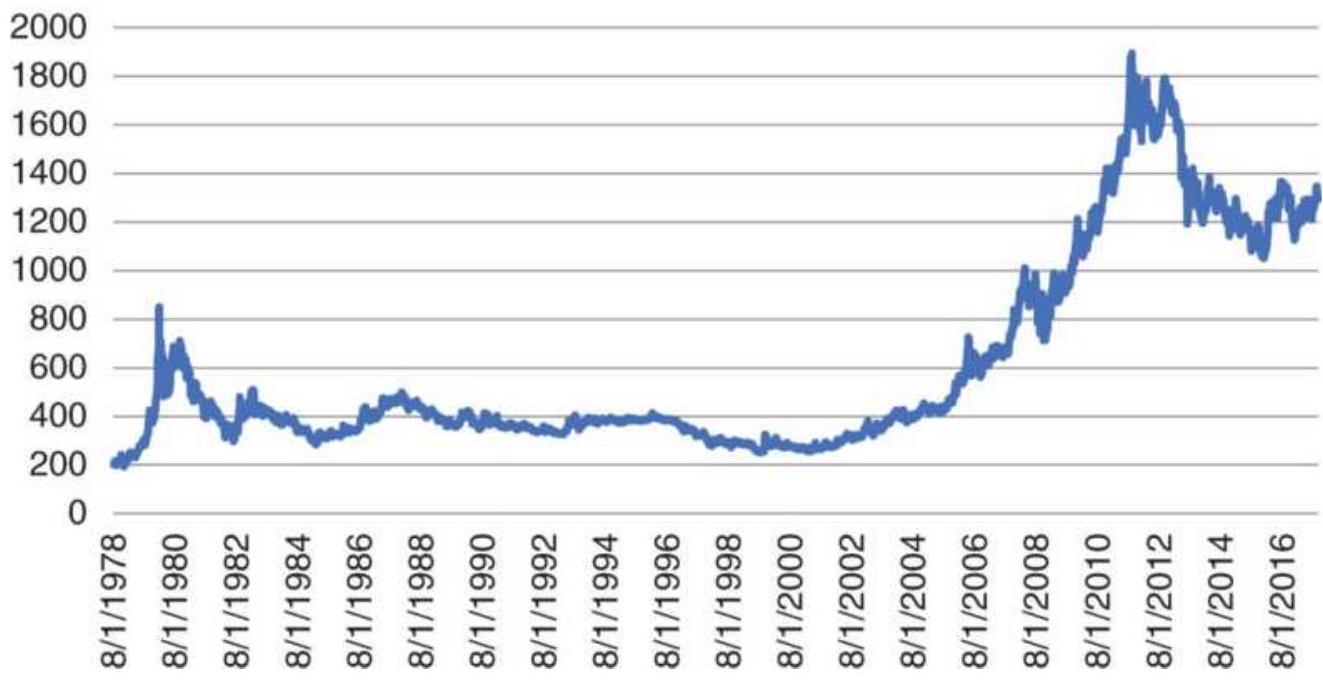

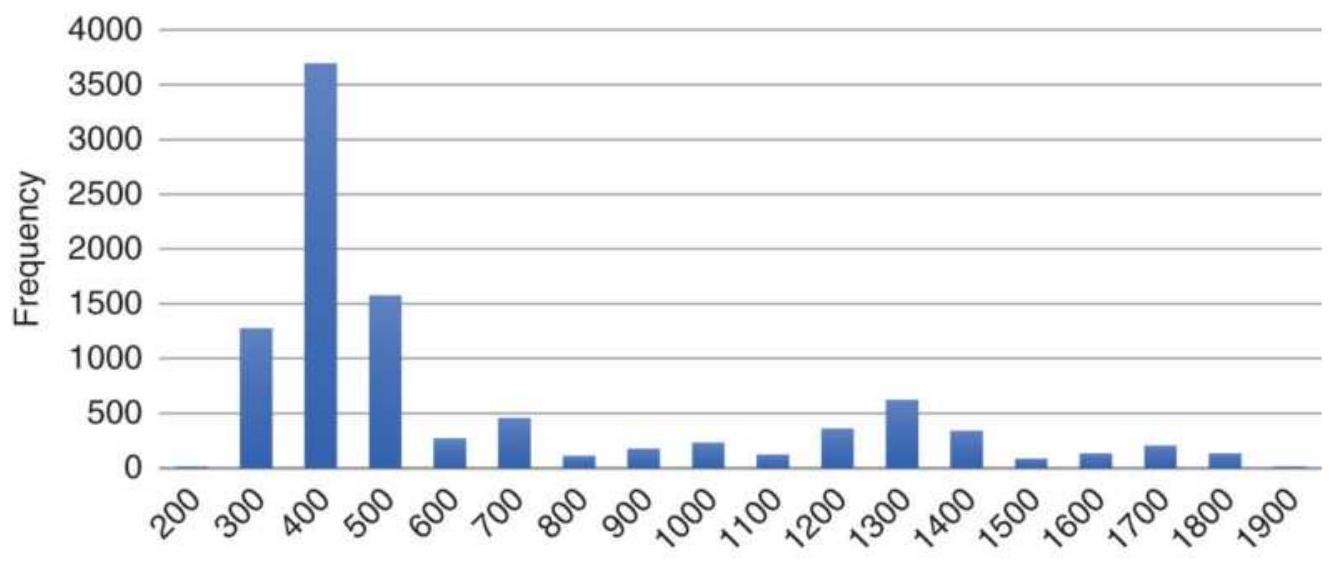

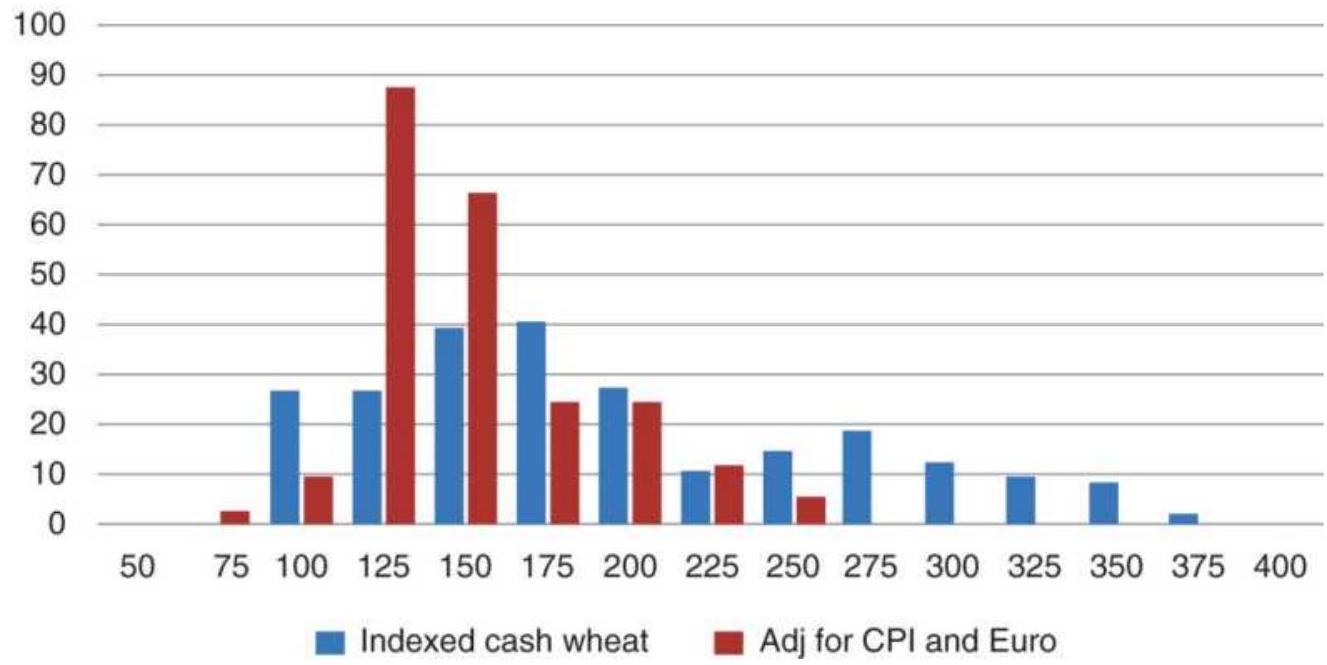

FIGURE 2.3 Wheat frequency distribution showing a tail to the right.

FIGURE 2.4 Normal distribution showing the percentage area included within o...

FIGURE 2.5 Gold cash prices.

FIGURE 2.6 Gold cash frequency distribution.

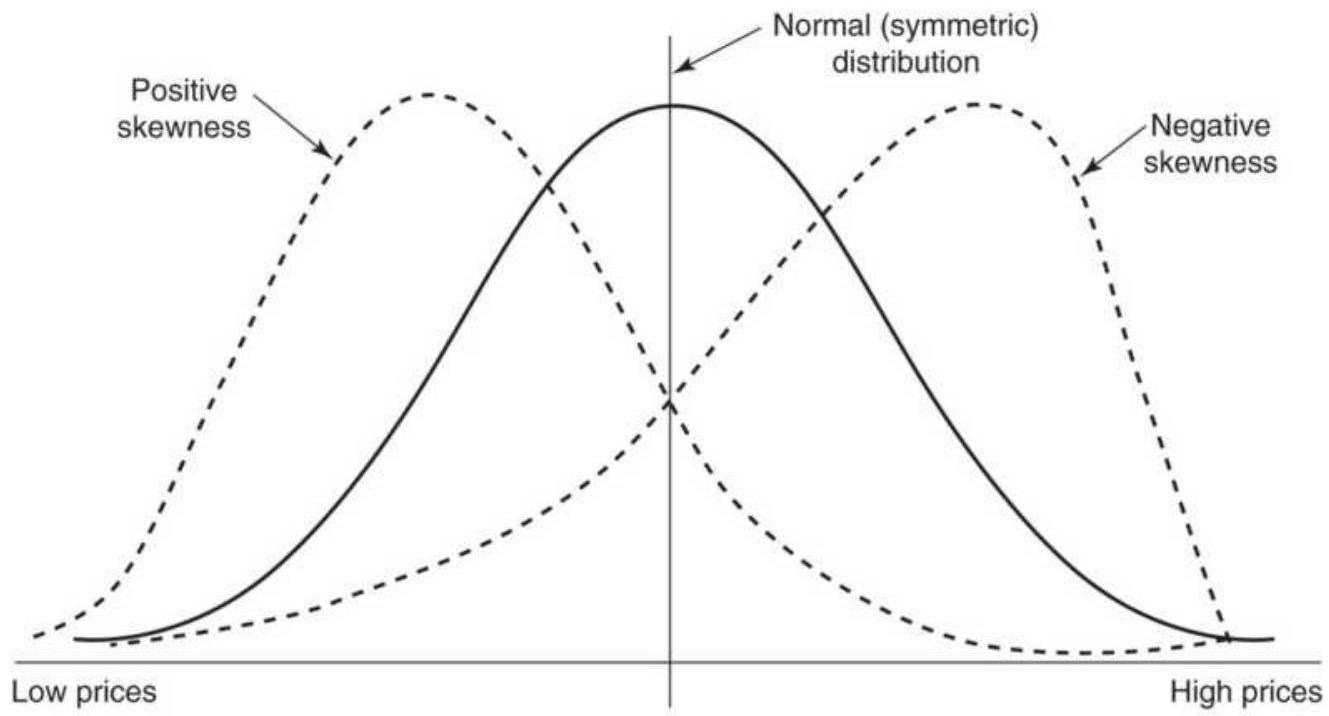

FIGURE 2.7 Skewness. Nearly all price

distributions are positively skewed, \(\mathrm{s} \ldots\)

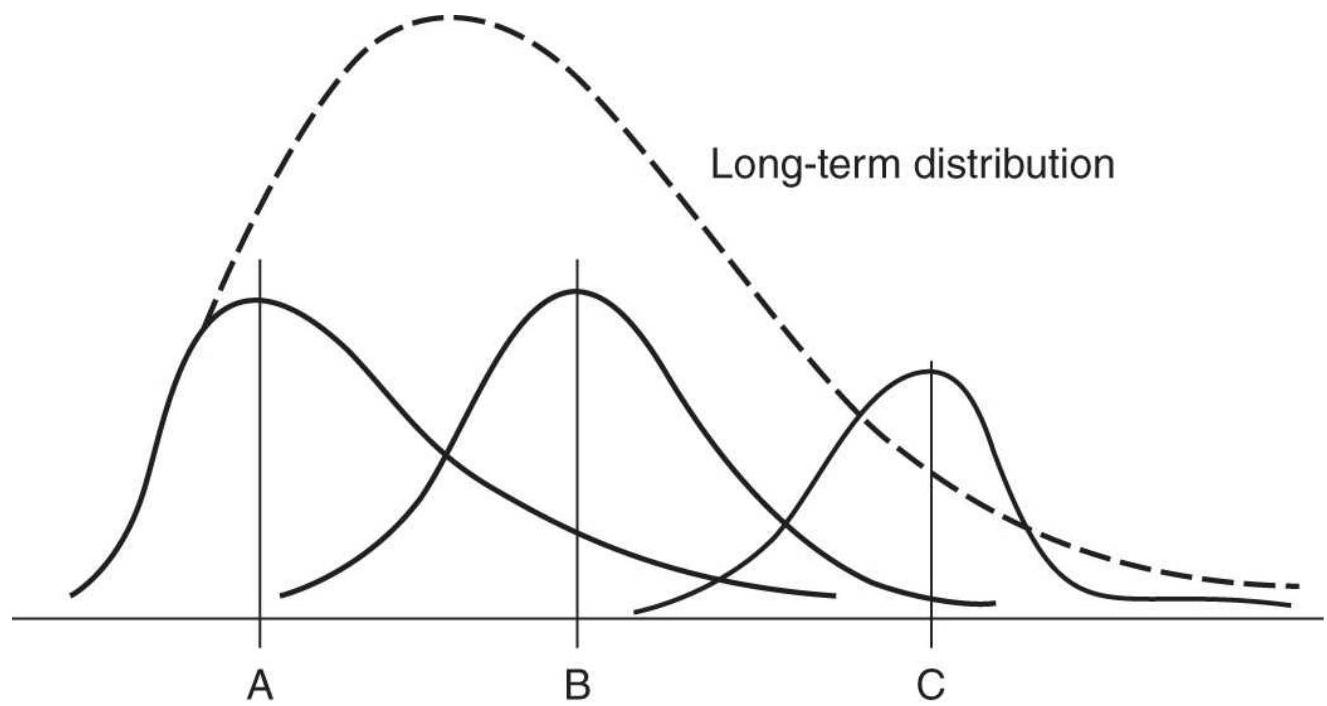

FIGURE 2.8 Changing distribution at different price levels. A, B, and C are ...

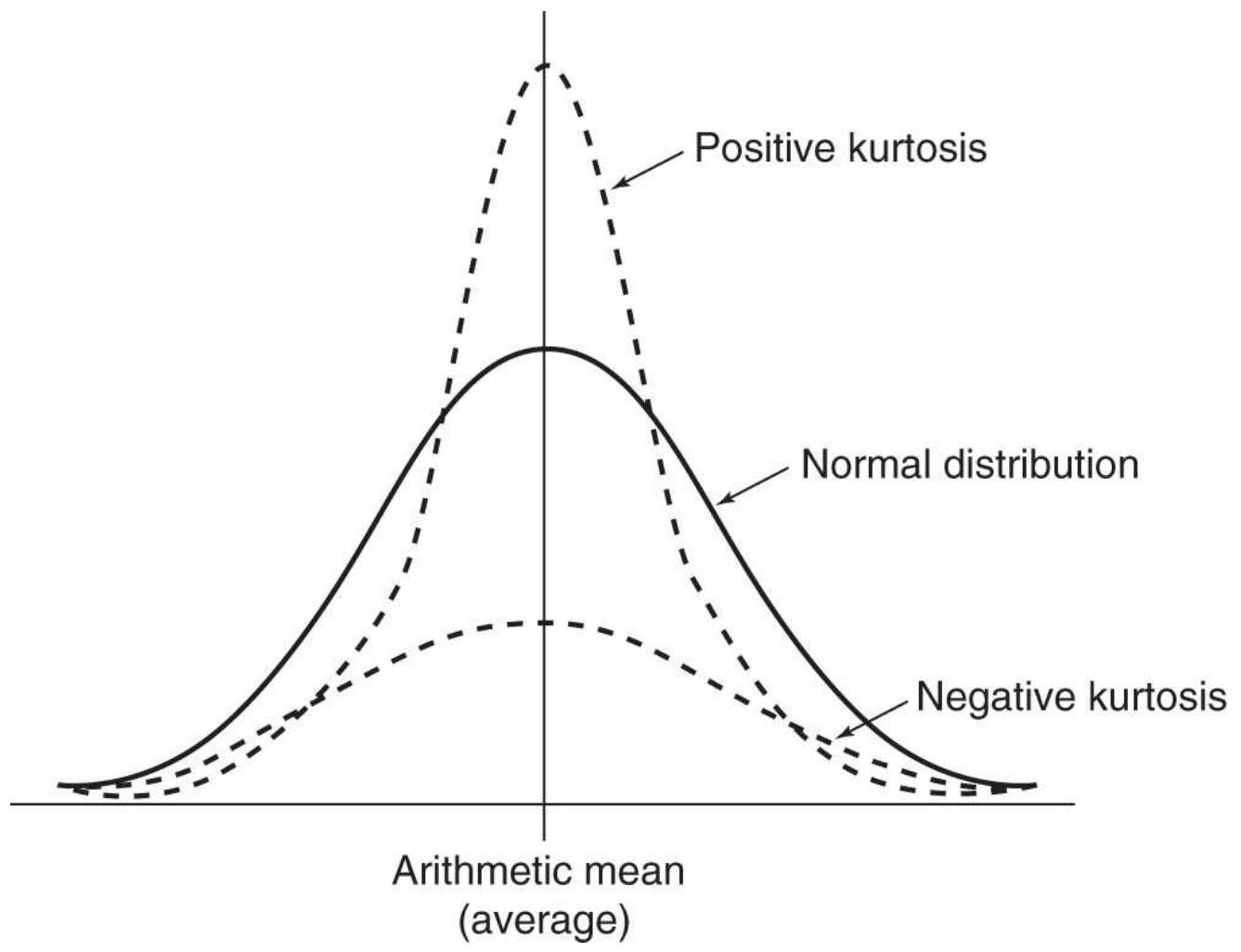

FIGURE 2.9 Kurtosis. A positive kurtosis is when the peak of the distributio...

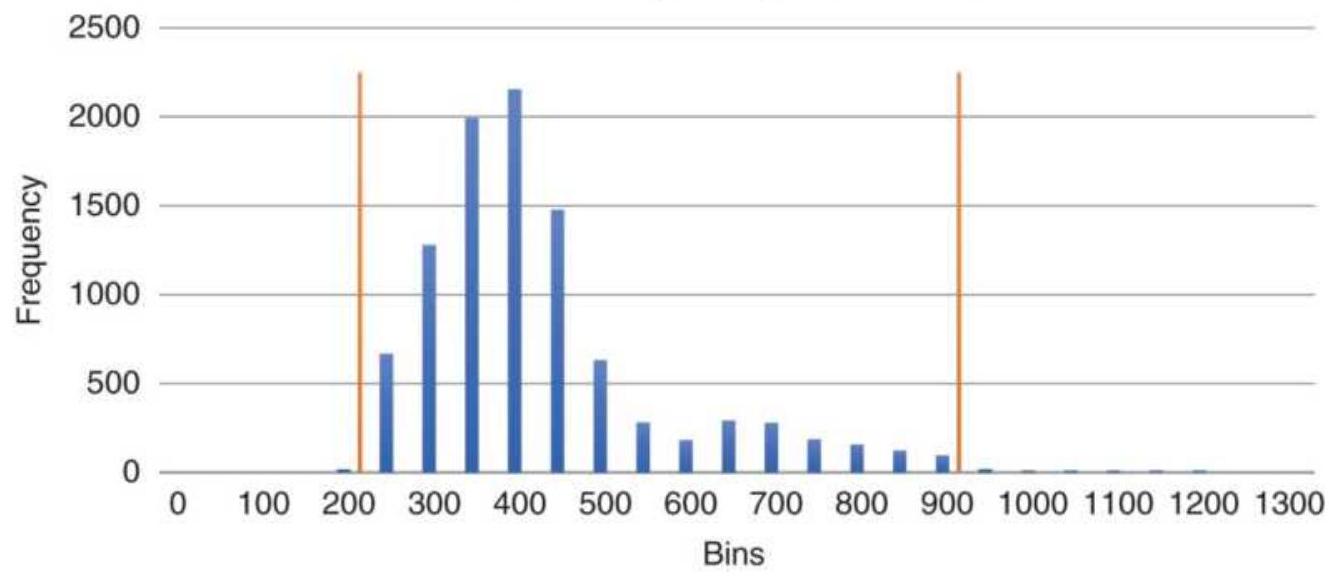

FIGURE 2.10 Measuring 10\% from each end of the frequency distribution. The d...

FIGURE 2.11 Probability network.

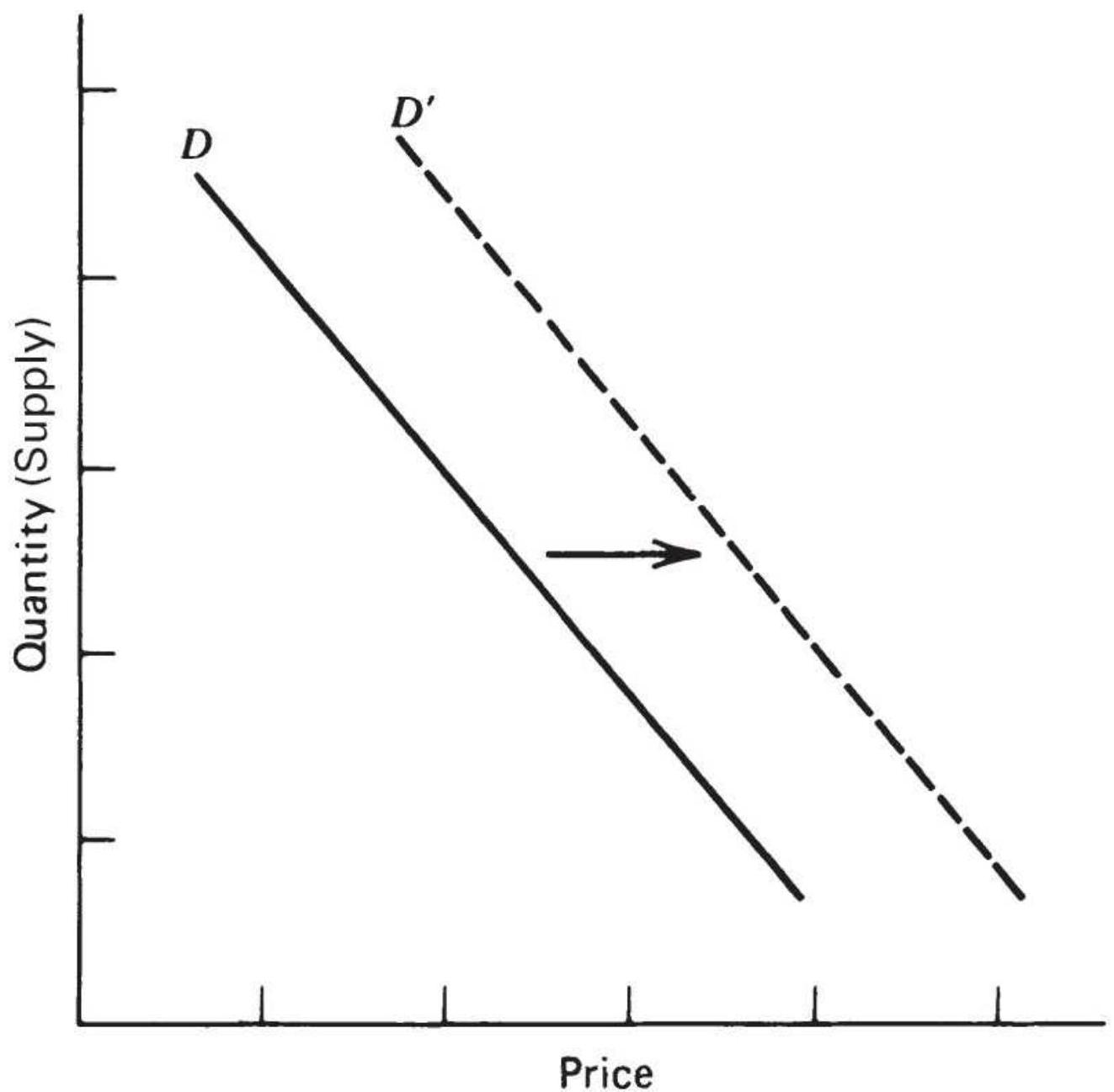

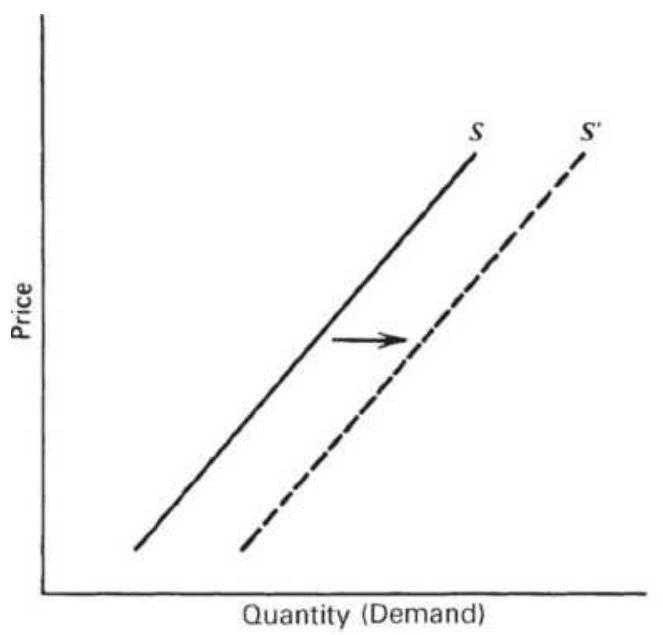

FIGURE 2.12a Shift in demand.

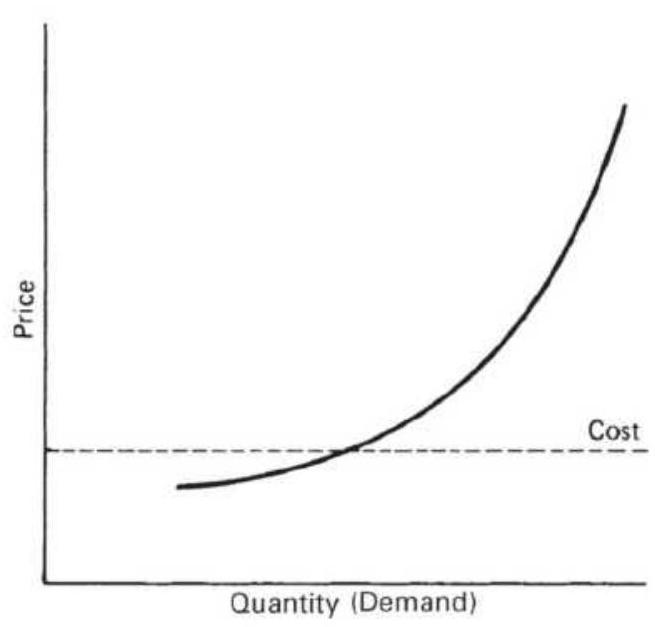

FIGURE 2.12b Demand curve, including extremes.

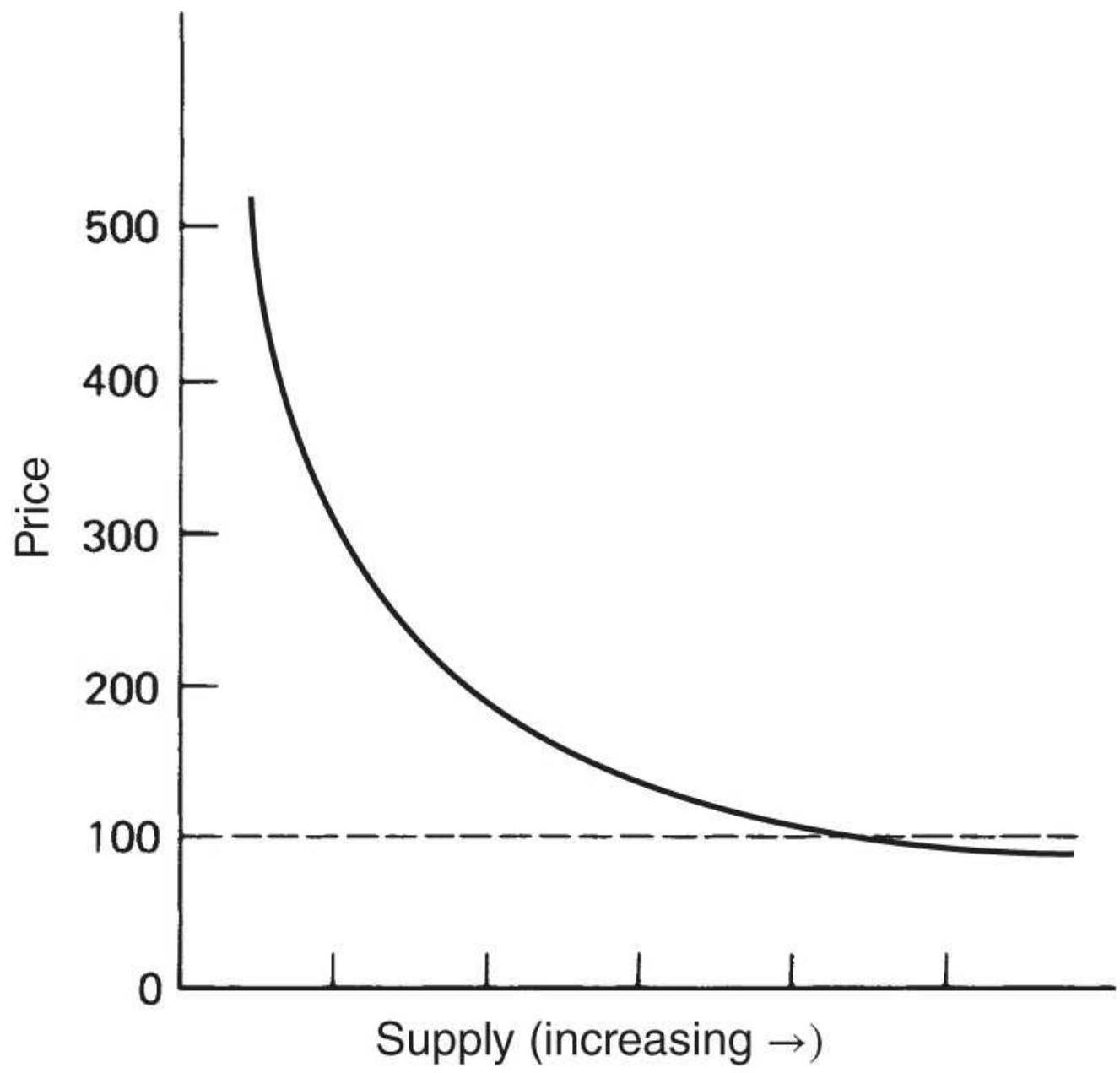

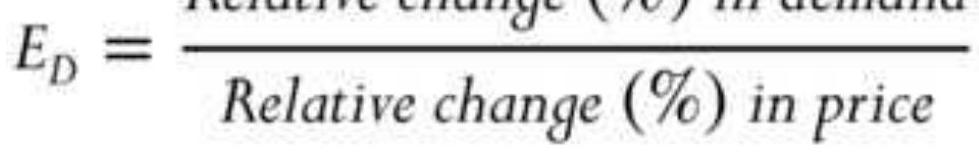

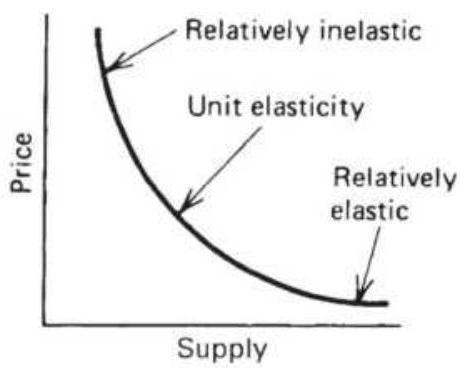

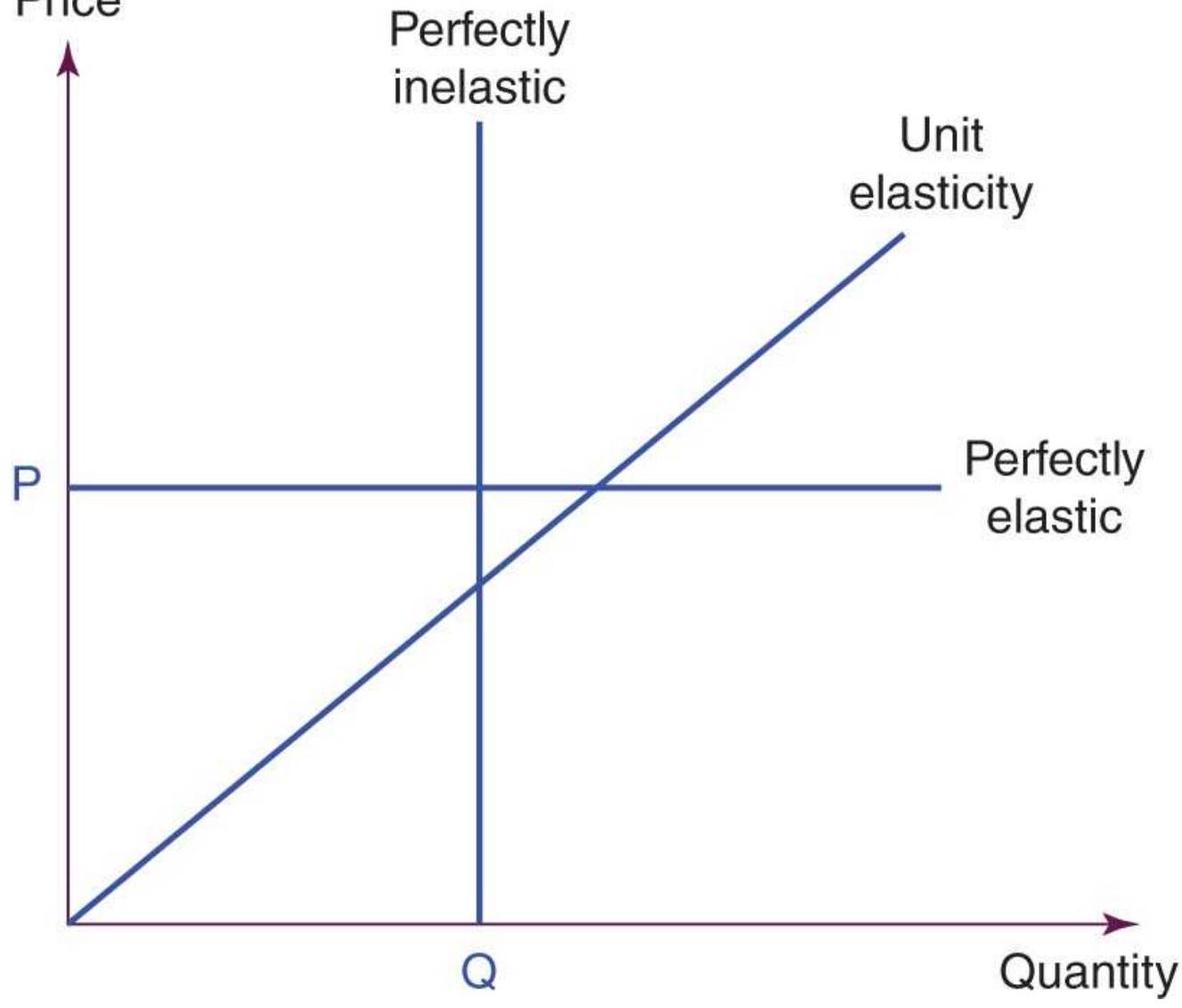

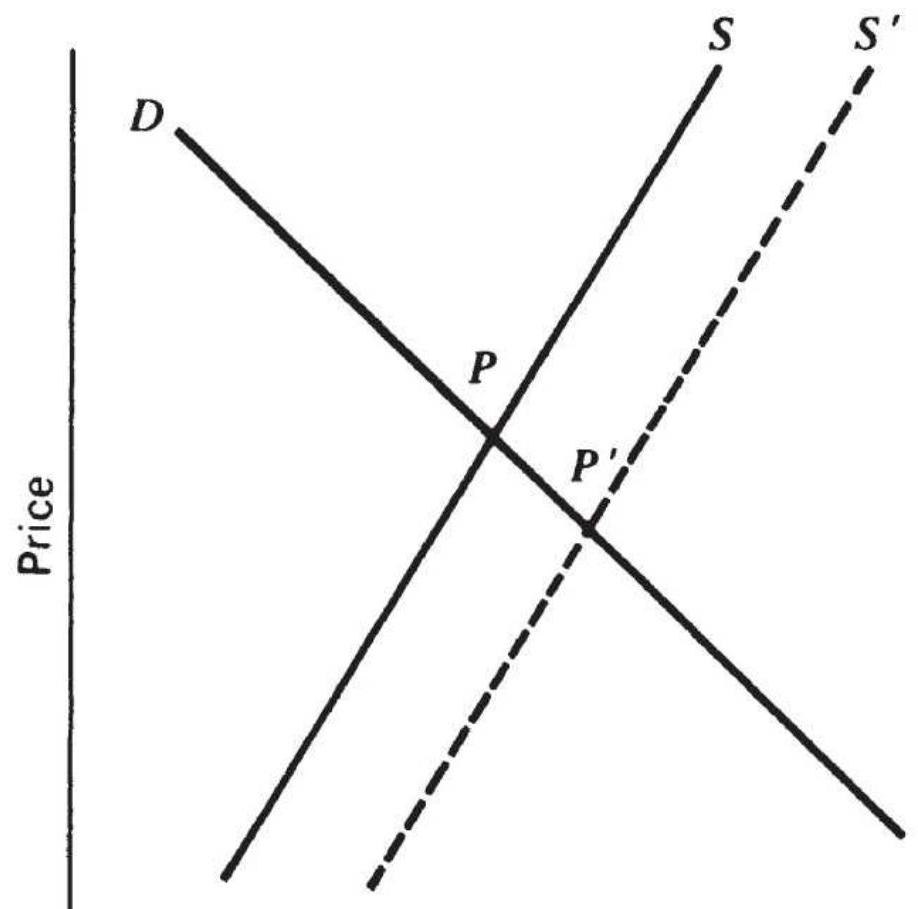

FIGURE 2.13 Demand elasticity. (a) Relatively elastic. (b) Relatively inelas...

FIGURE 2.14 Supply-price relationship. (a) Shift in supply. (b) Supply curve...

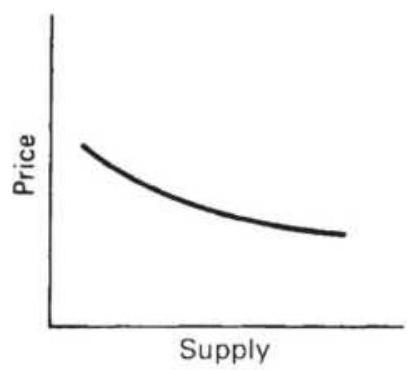

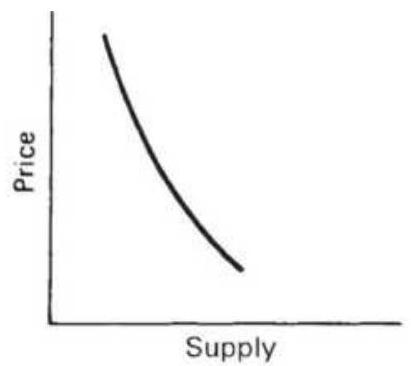

FIGURE 2.15 The three cases of elasticity of supply.

FIGURE 2.16 Equilibrium with shifting supply.

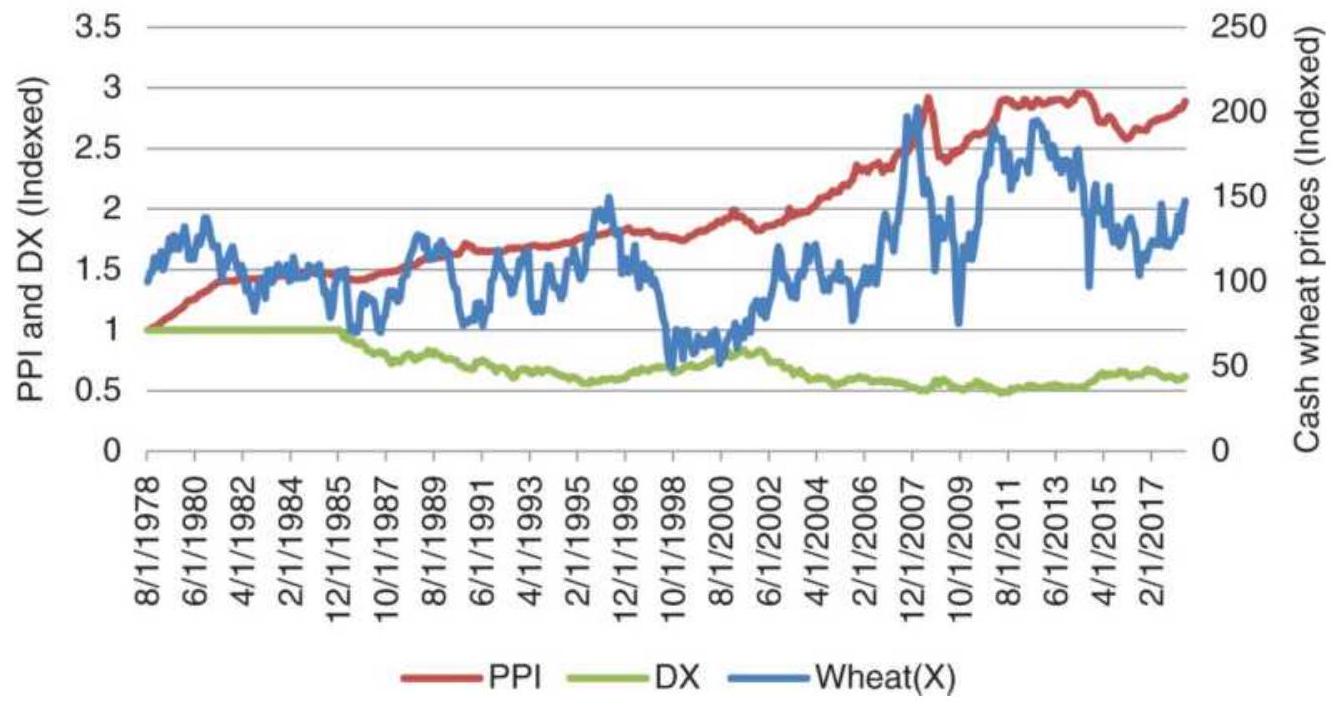

FIGURE 2.17 Cash wheat with the PPI and dollar index (DX), from 1978 through...

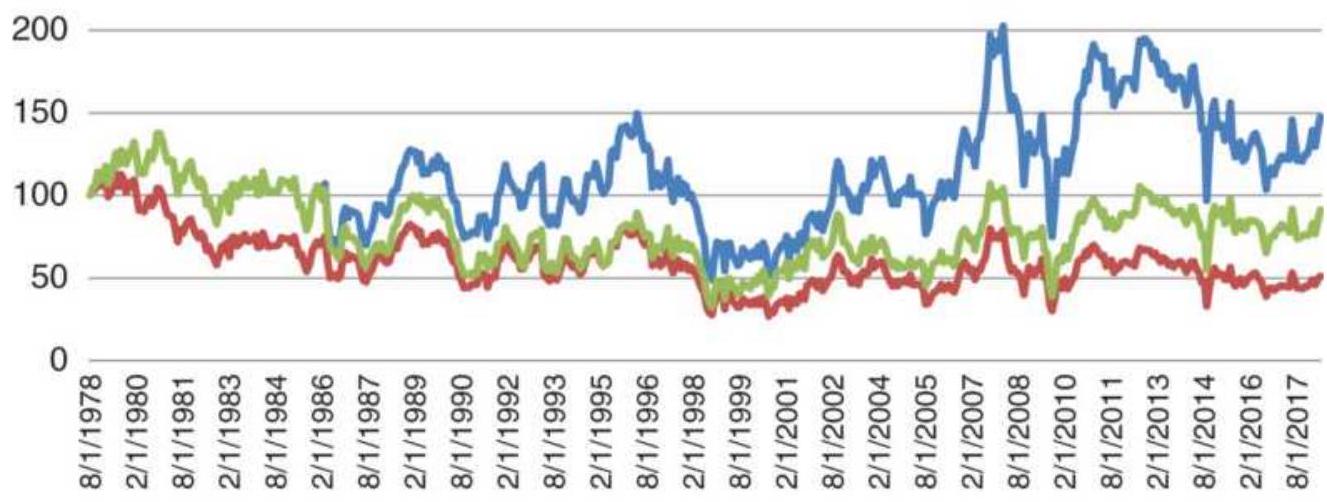

FIGURE 2.18 Wheat prices adjusted for PPI and Dollar Index (DX).

Chapter 3

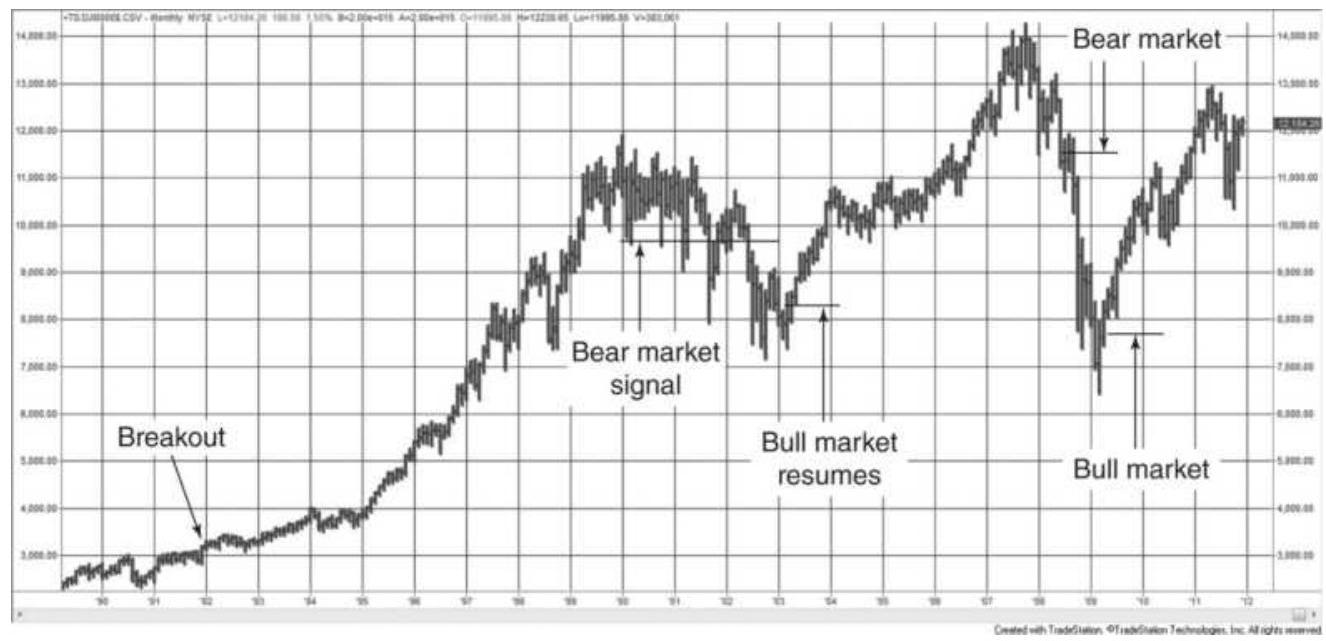

FIGURE 3.1 Dow Theory has been adapted to use the current versions of the ma...

FIGURE 3.2 Bull and bear market signals are traditional breakout signals, bu...

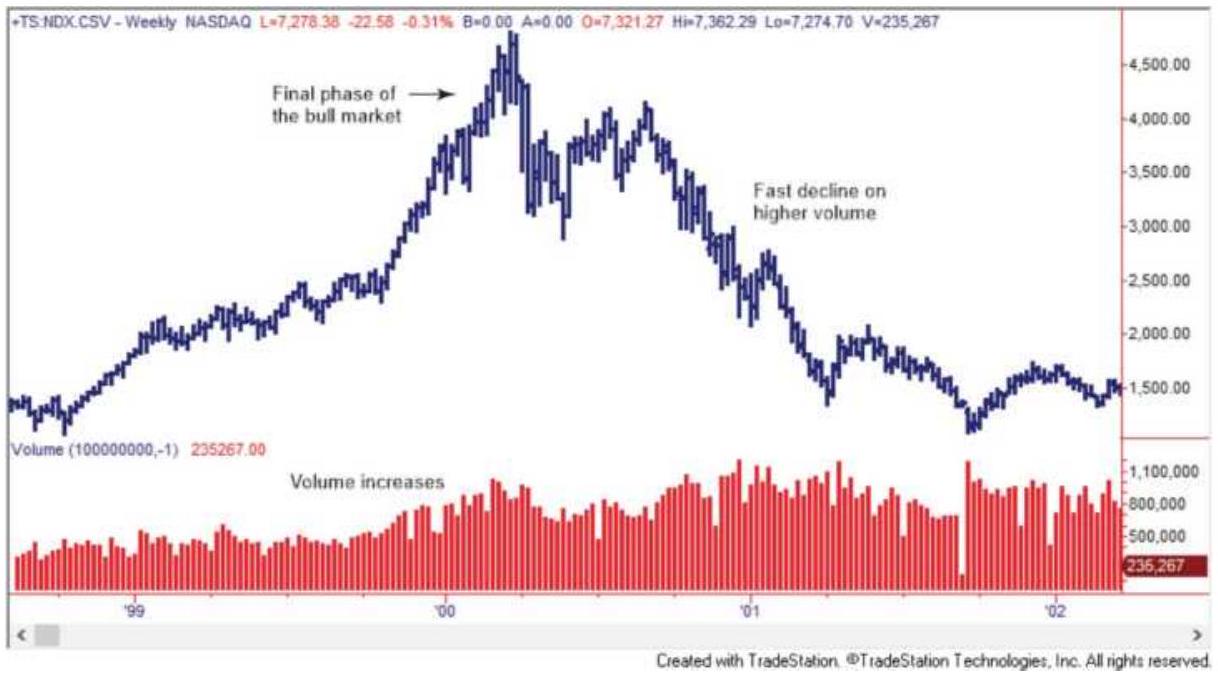

FIGURE 3.3 NASDAQ from April 1998 through June 2002. A clear example of a bu...

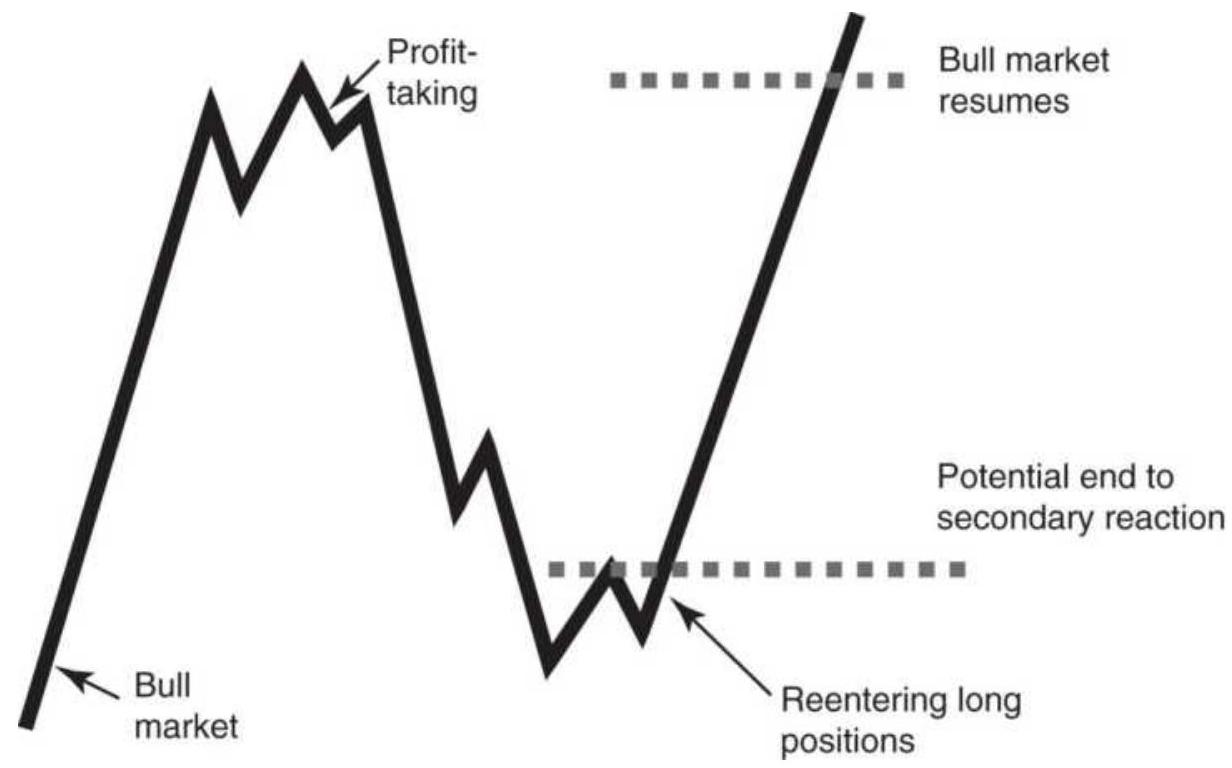

FIGURE 3.4 Secondary trends and reactions. A reaction is a smaller swing in ...

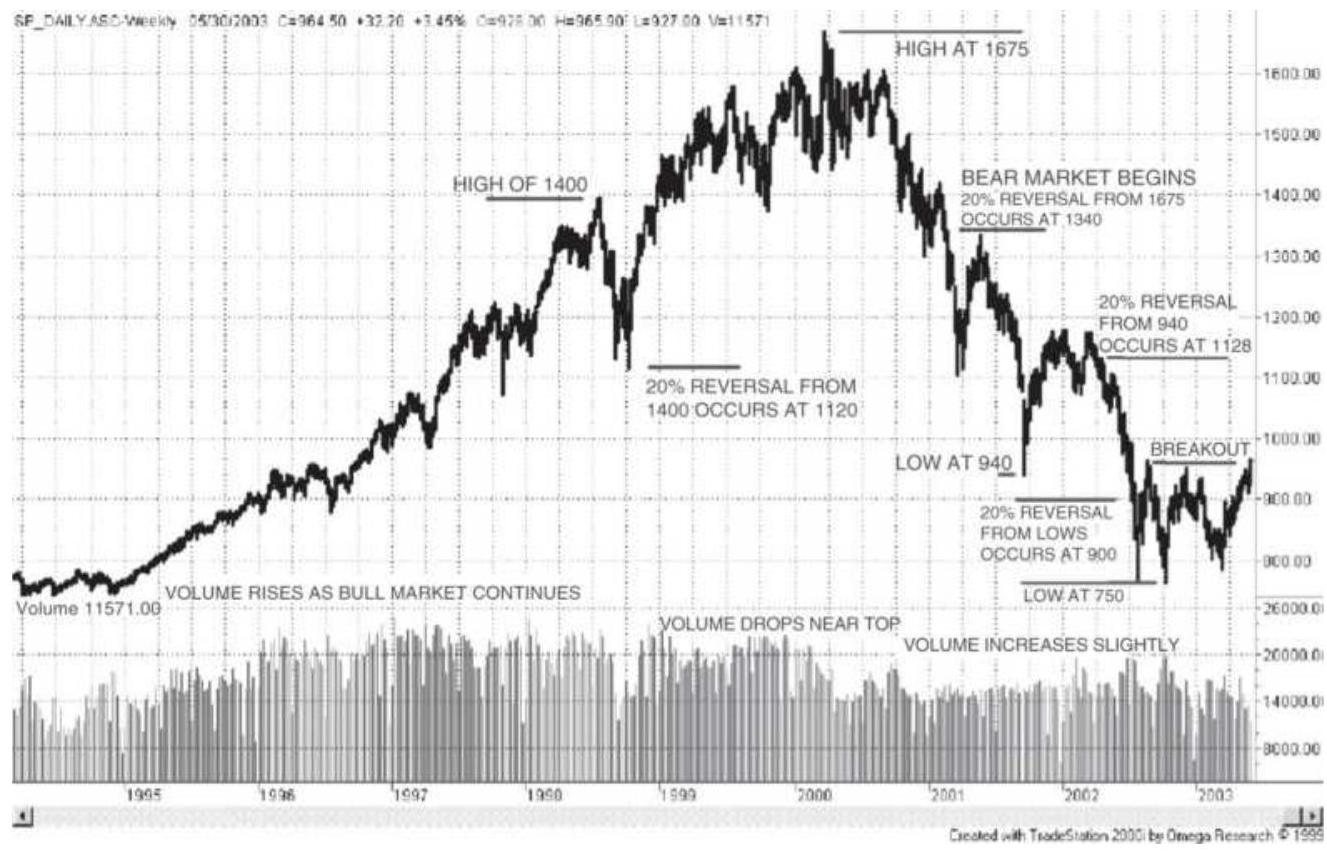

FIGURE 3.5 Dow Theory applied to the S\&P. Most of Dow's principles apply to ...

FIGURE 3.6 The trend is easier to see after it has occurred. While the upwar...

FIGURE 3.7 Upward and downward trendlines applied to Intel, November 2002 th...

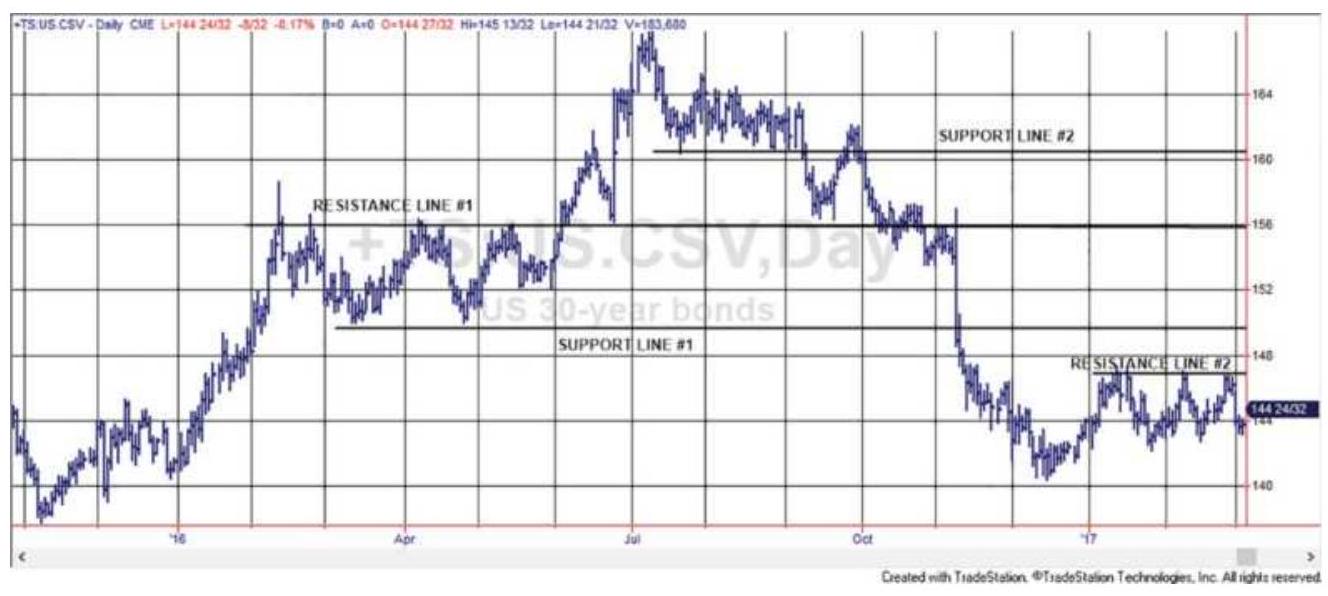

FIGURE 3.8 Horizontal support and resistance lines shown on bond futures pri...

FIGURE 3.9 Basic sell and buy signals using trendlines.

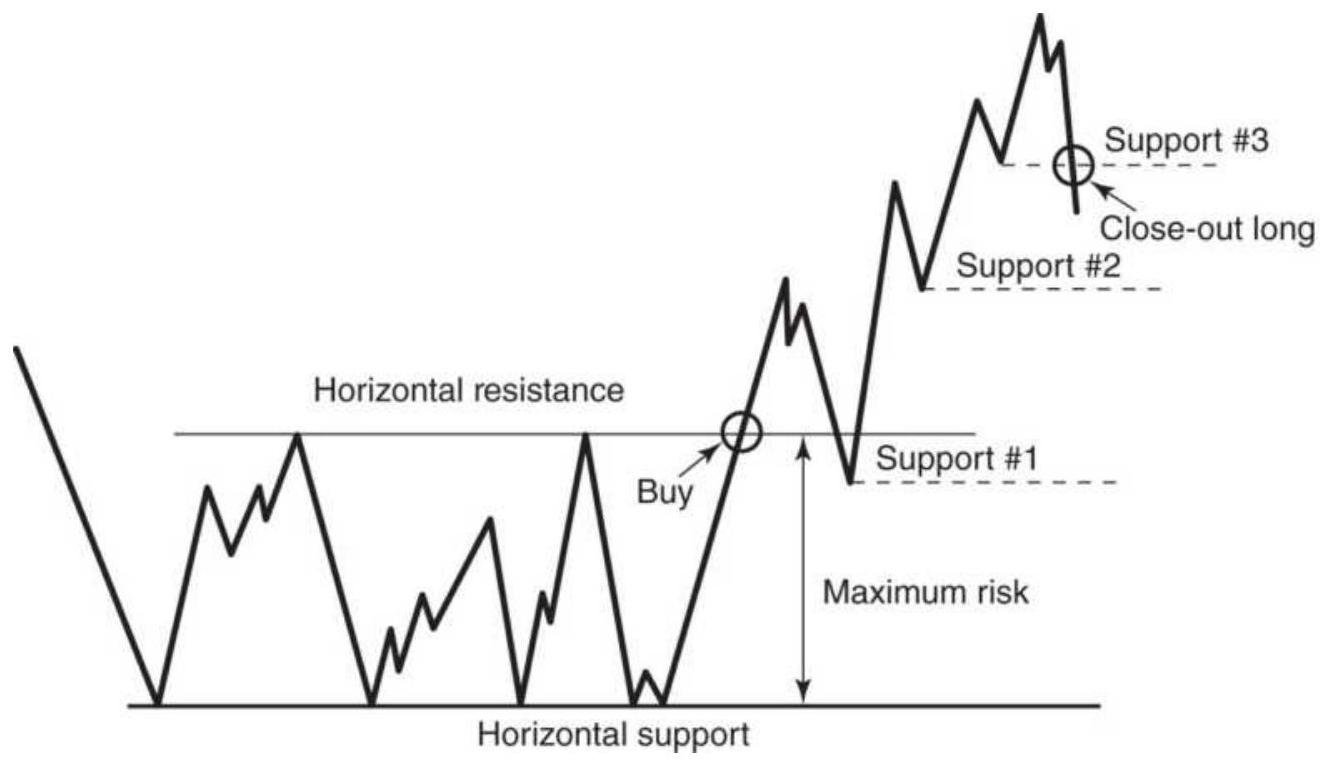

FIGURE 3.10 Trading rules for horizontal support and resistance lines.

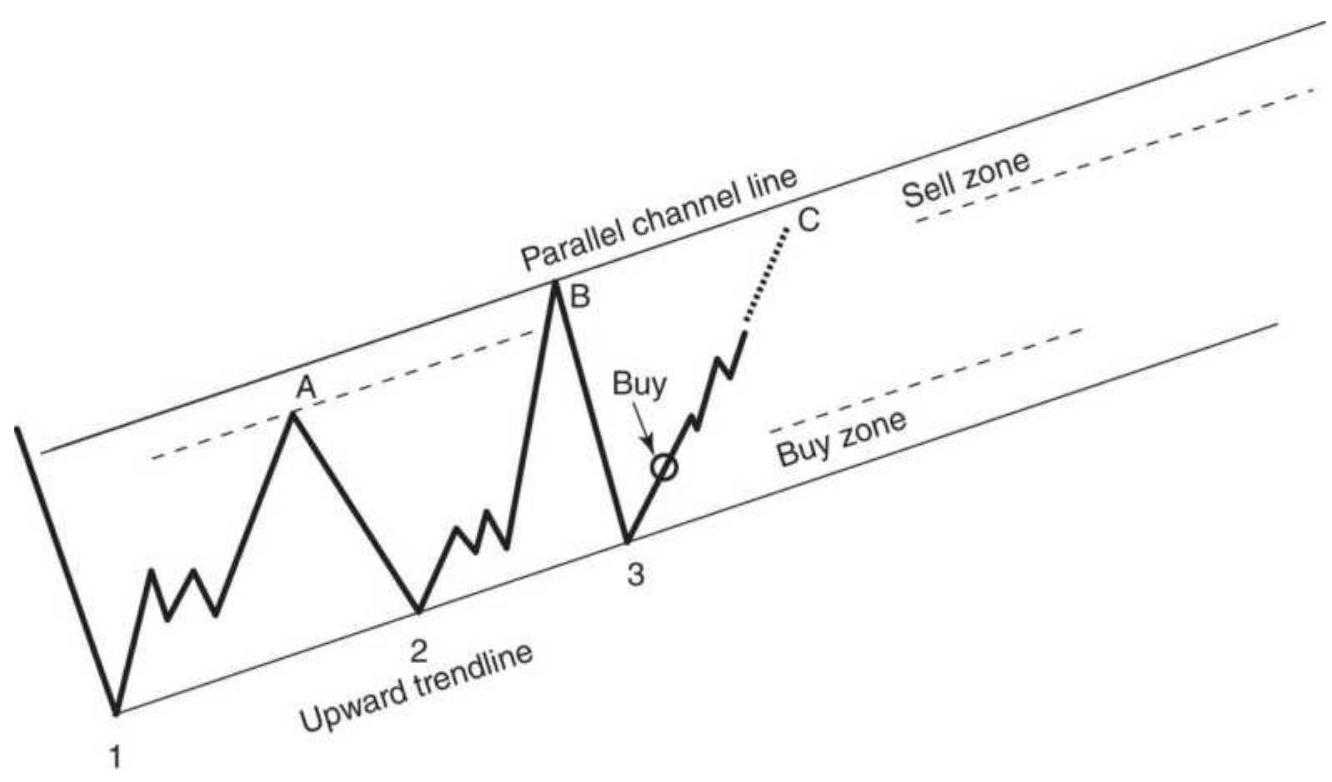

FIGURE 3.11 Trading a price channel. Once the channel has been drawn, buying...

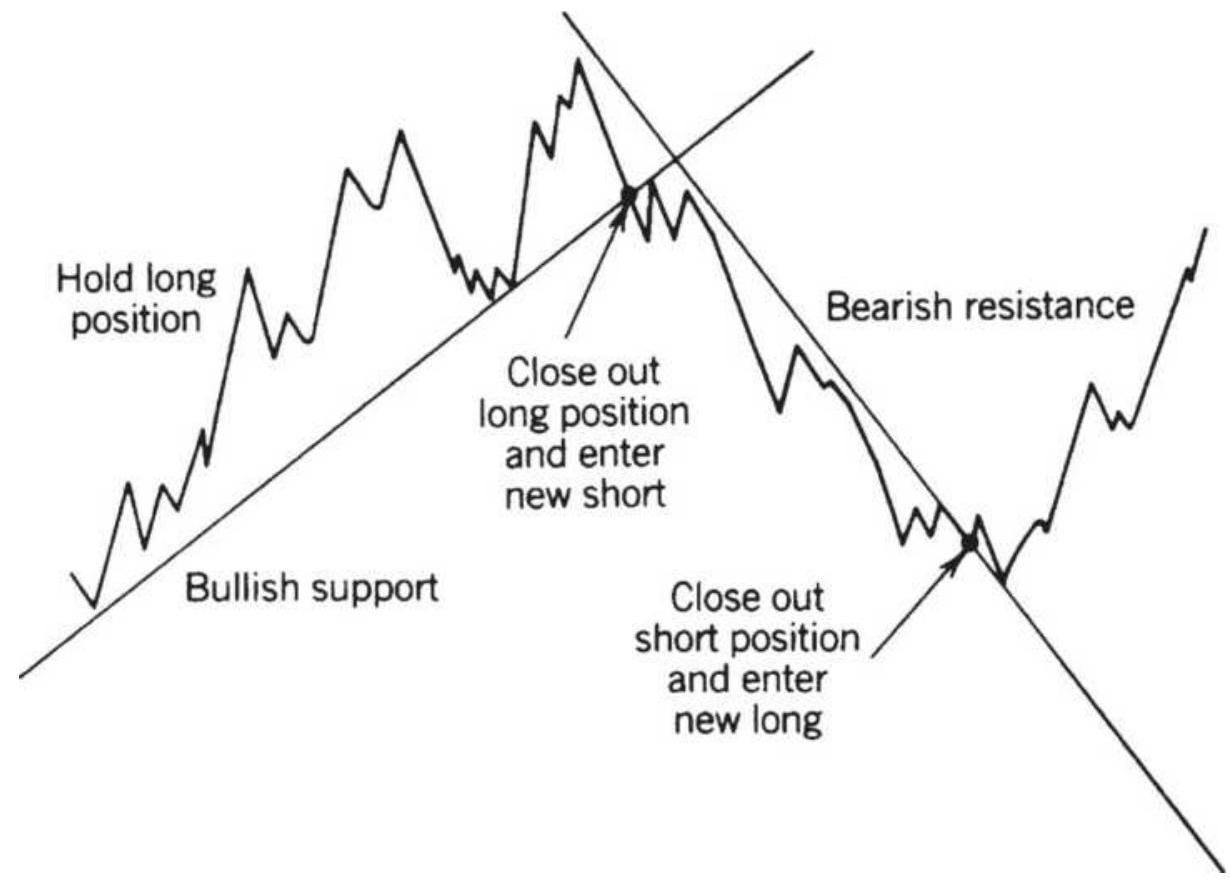

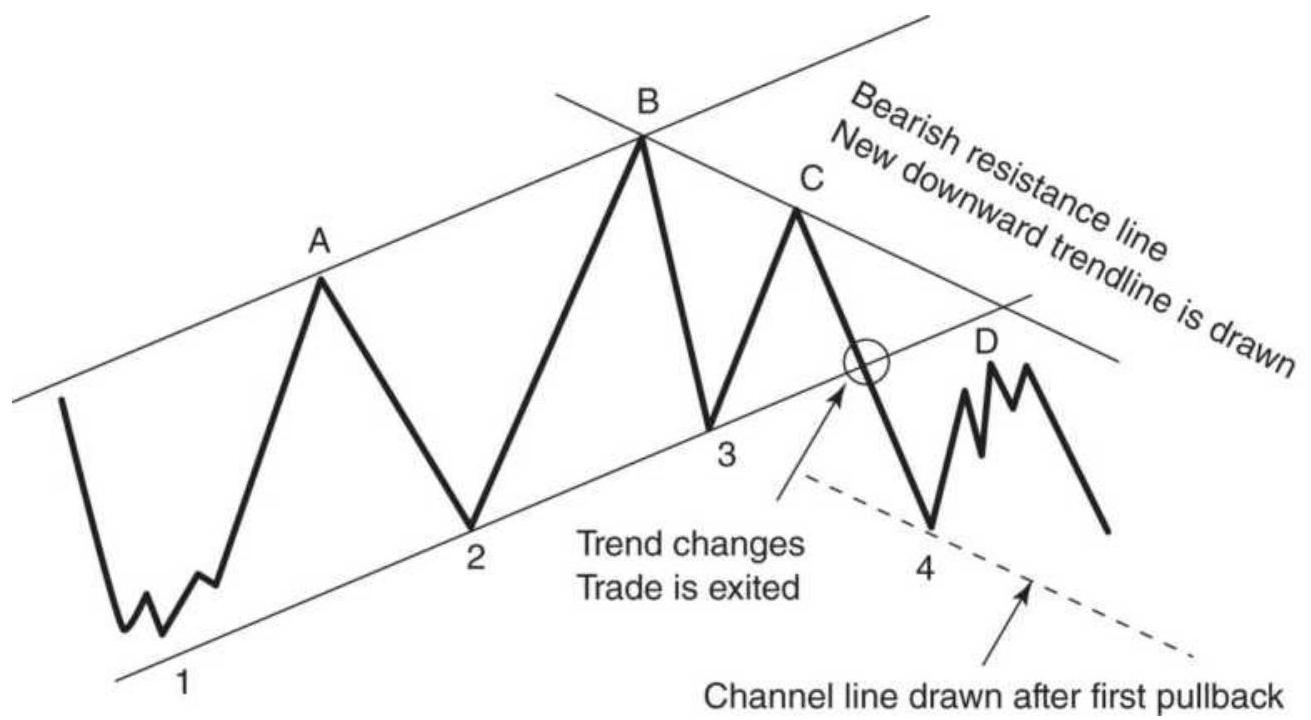

FIGURE 3.12 Turning from an upward to a downward channel. Trades are always ...

FIGURE 3.13 Price gaps shown on a chart of

Amazon.com.

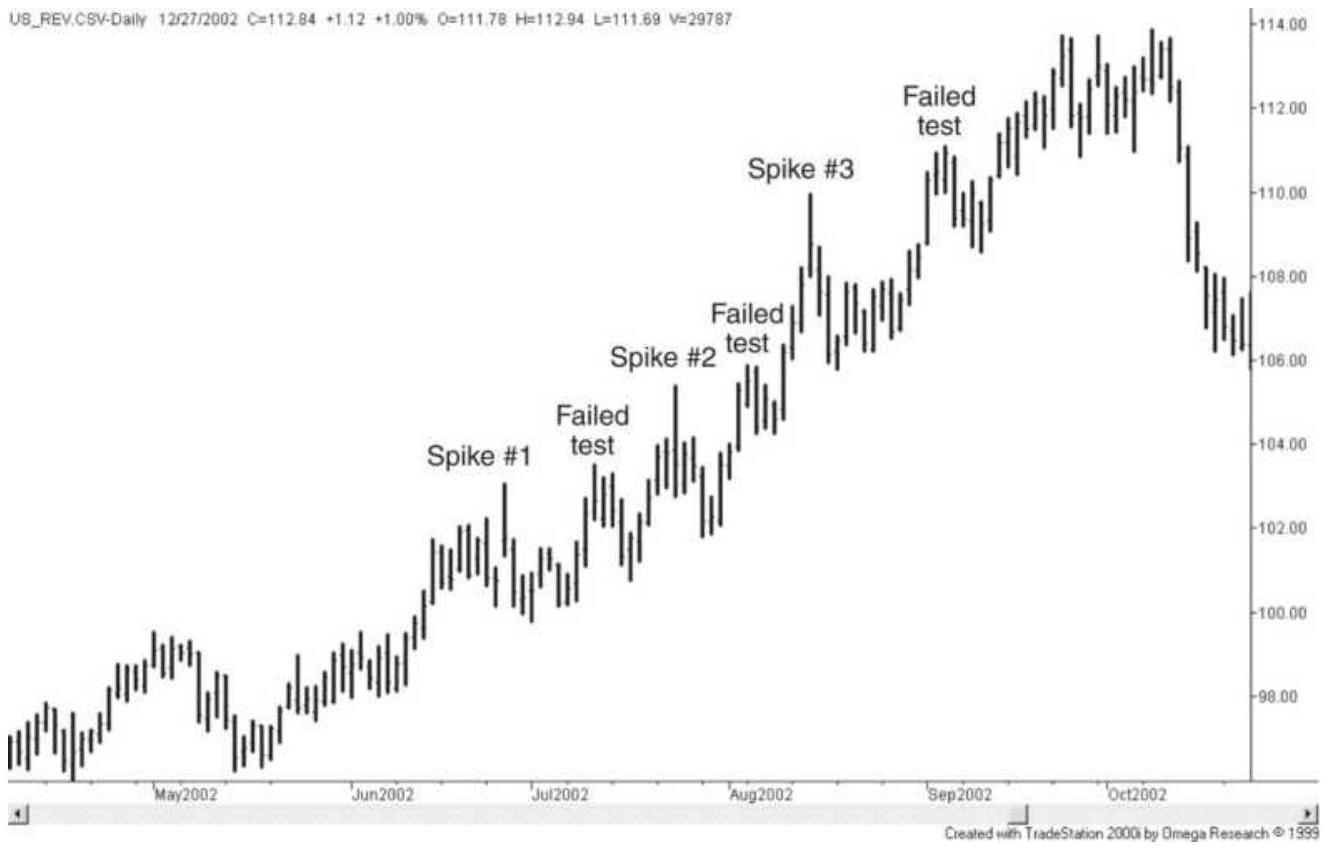

FIGURE 3.14 A series of spikes in bonds. From June through October 2002, U.S...

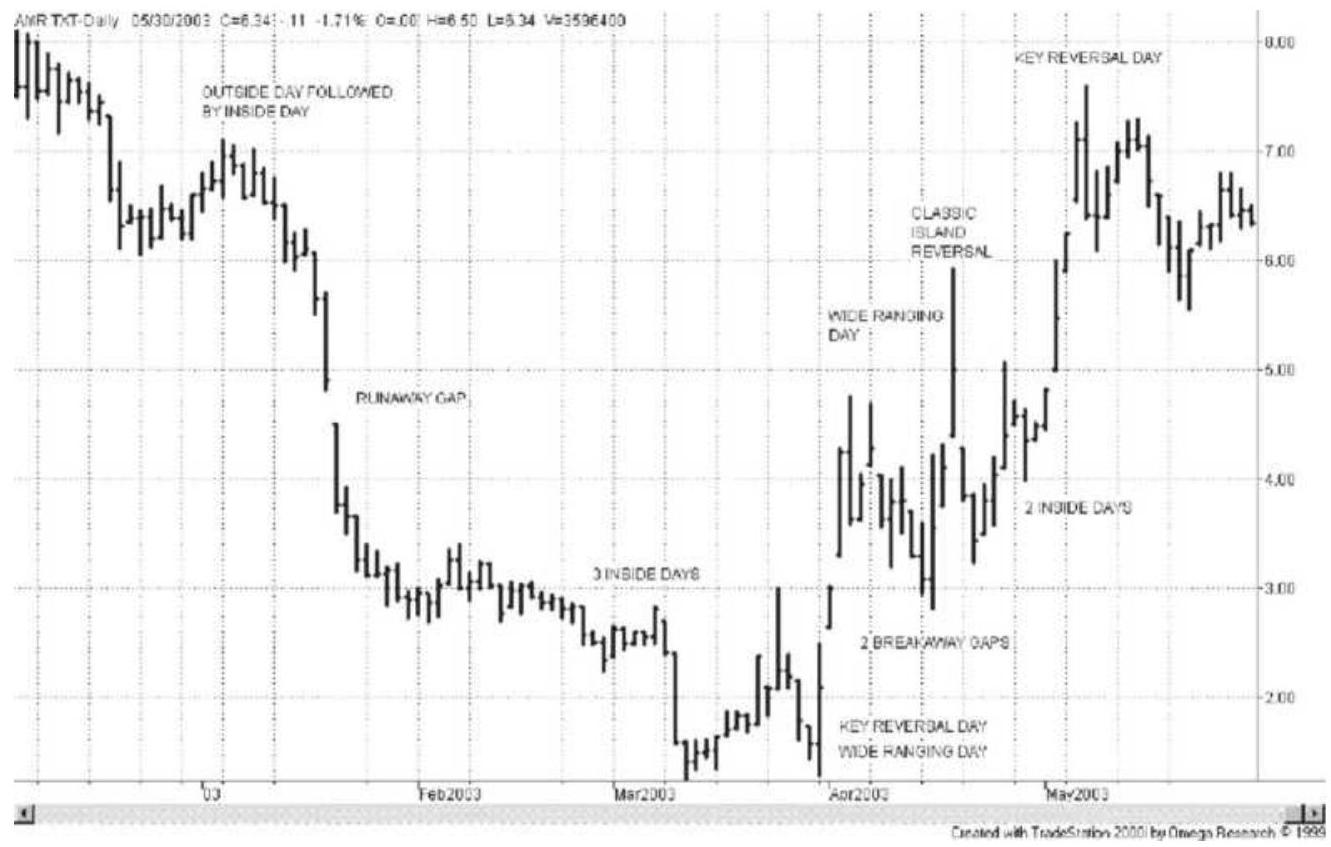

FIGURE 3.15 AMR in early 2003, showing a classic island reversal with exampl...

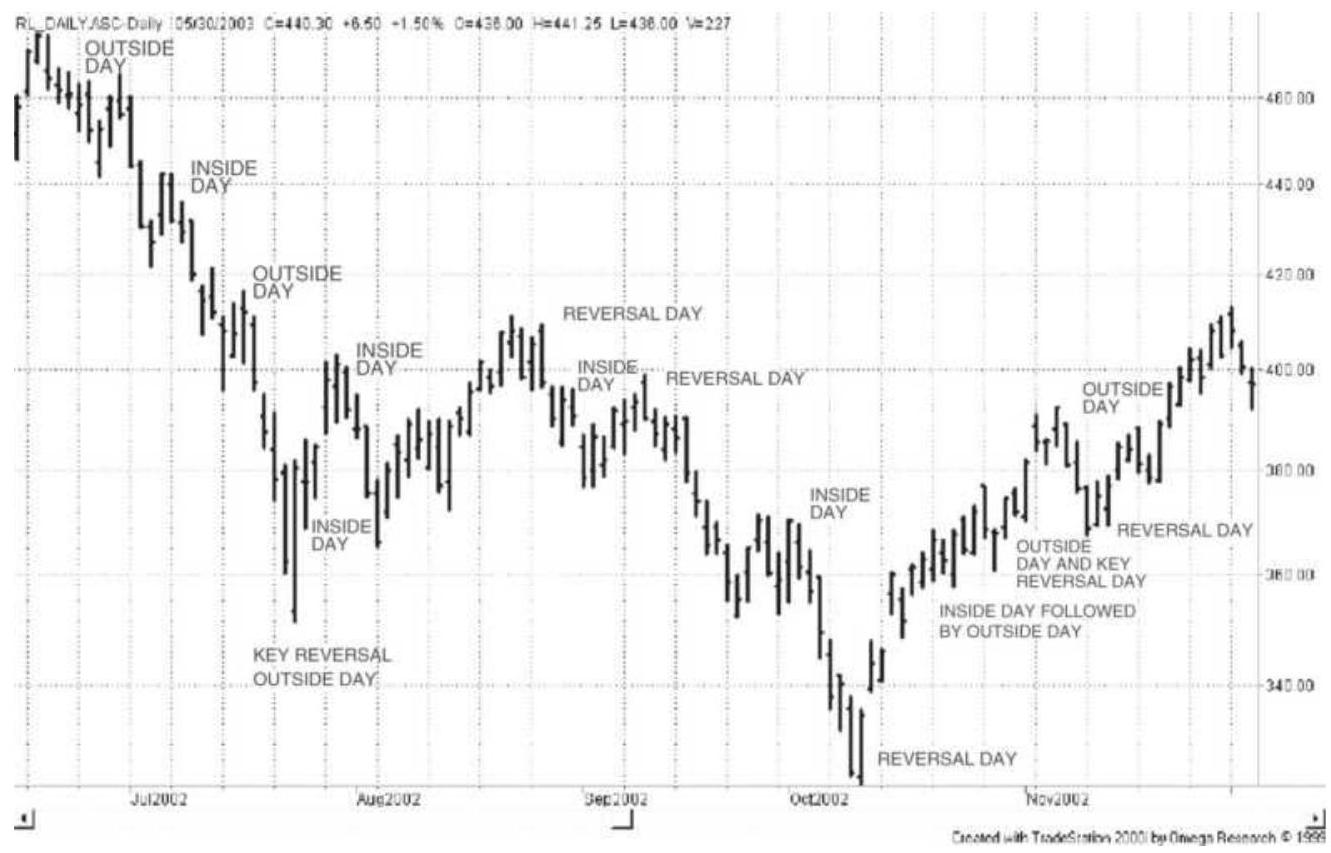

FIGURE 3.16 Russell 2000 during the last half of 2002 showing reversal days,...

FIGURE 3.17 Wide-ranging days, outside days, and inside days for Tyco.

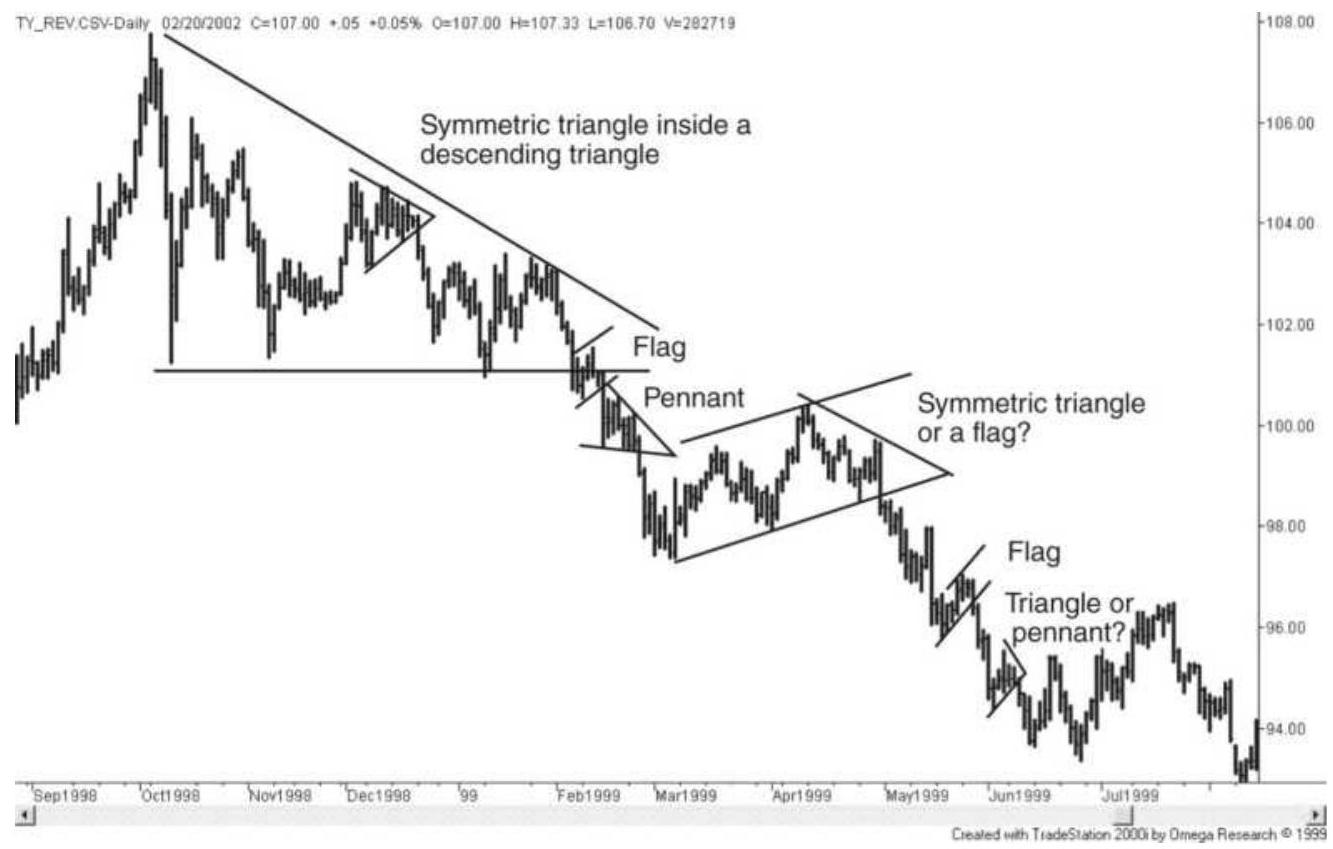

FIGURE 3.18 Symmetric and descending triangles and a developing bear market ...

FIGURE 3.19 An assortment of continuation patterns. These patterns are all r...

FIGURE 3.20 Wedge. A weaker wedge formation is followed by a strong rising w...

FIGURE \(3.21 \mathrm{~A} V\)-top in the NASDAQ index, March 2000.

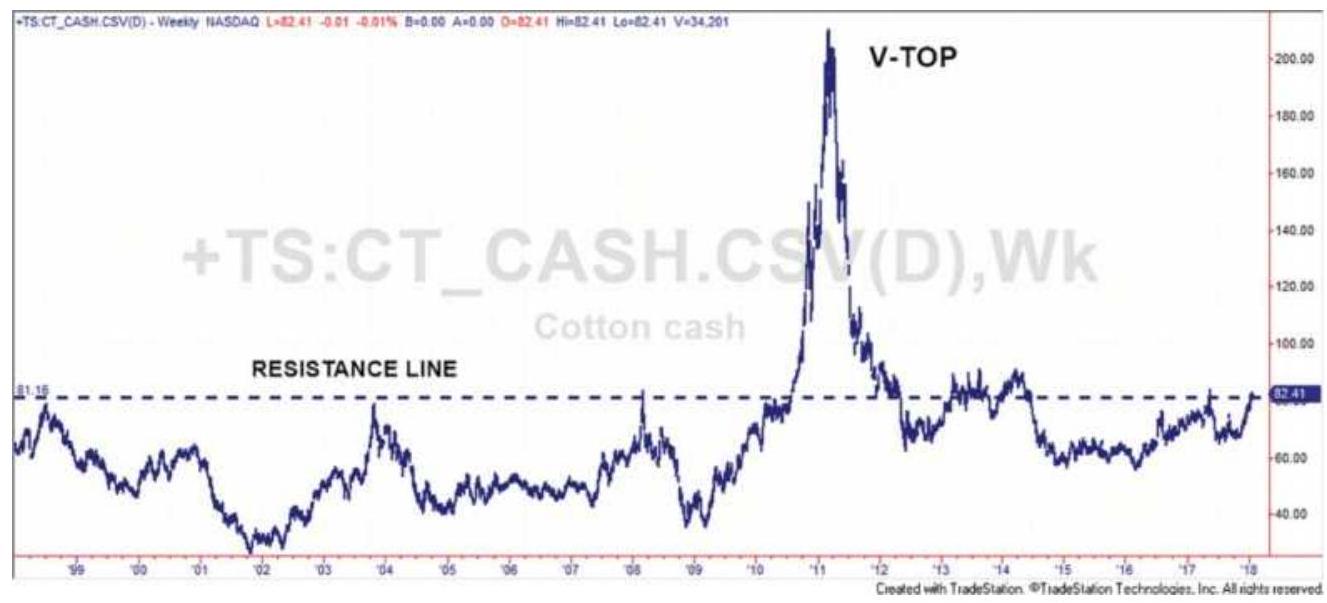

FIGURE 3.22 Cotton has frequent \(V\)-tops but nothing as extreme as in 2011.

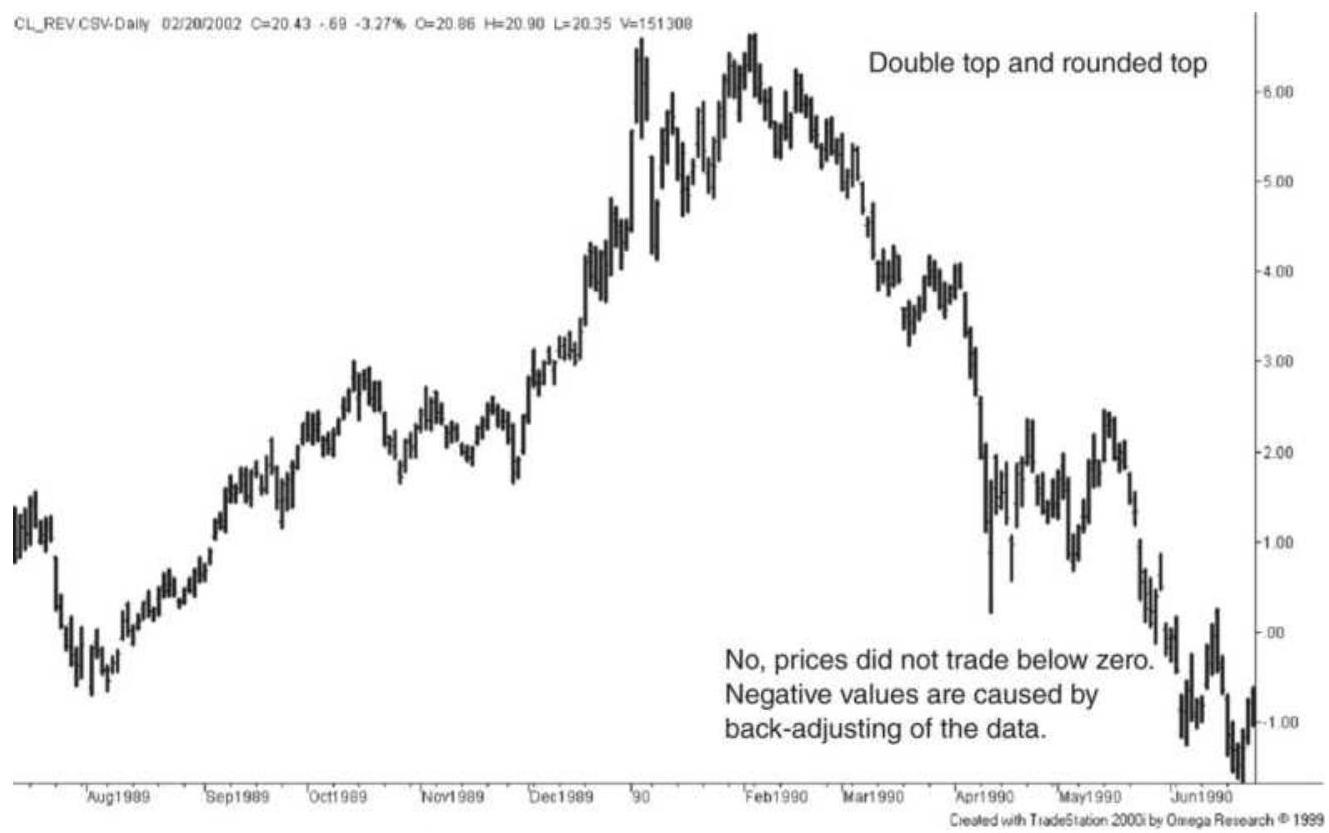

FIGURE 3.23 Two \(V\)-bottoms in crude oil, back-adjusted futures.

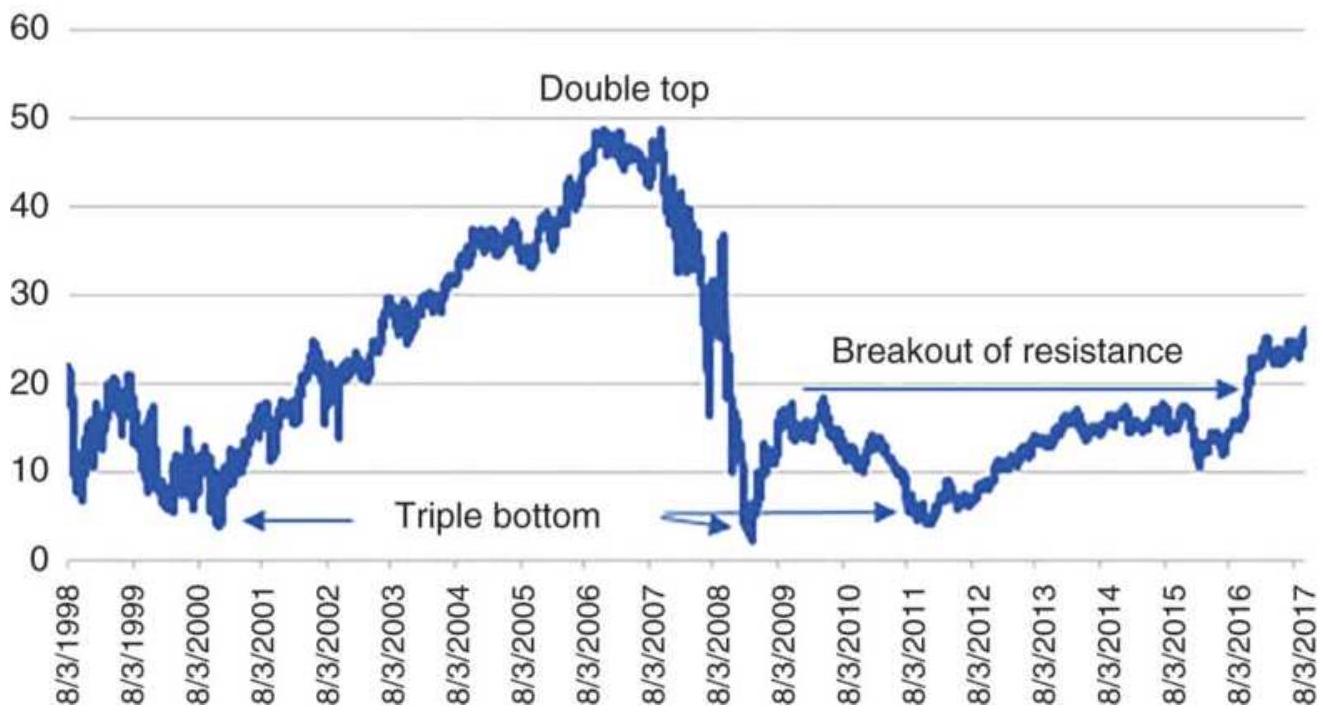

FIGURE 3.24 A double top in crude oil. FIGURE 3.25 Triple bottom in Bank of America (BAC).

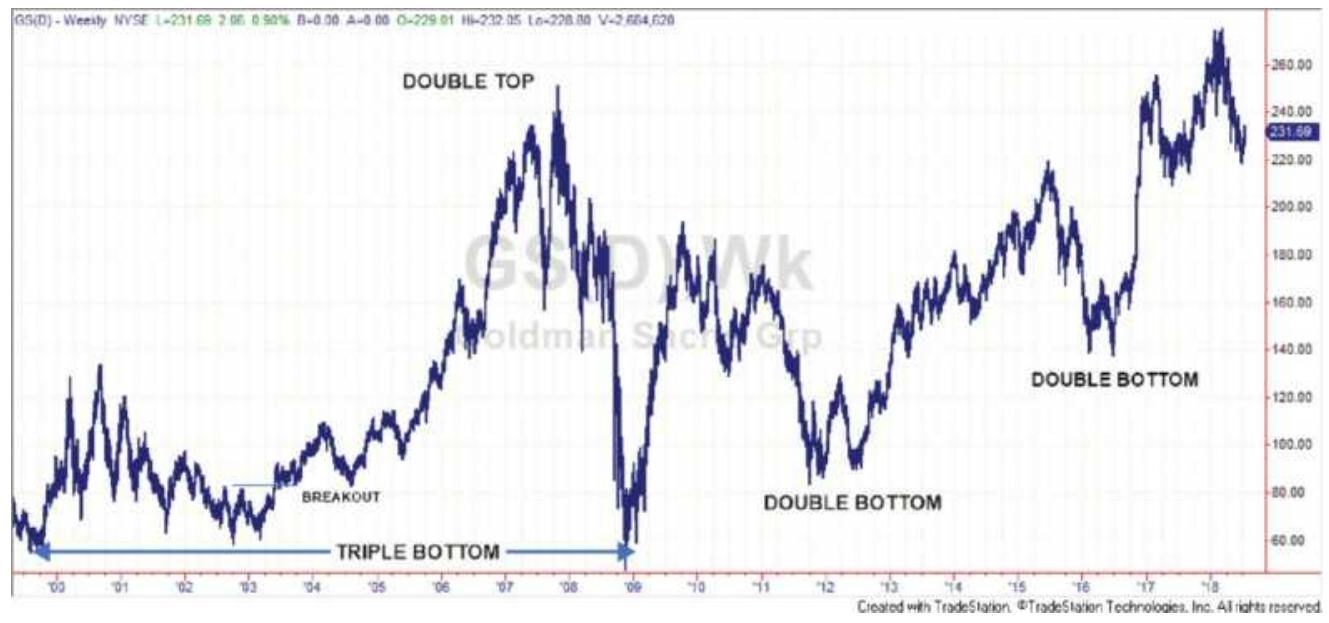

FIGURE 3.26 A triple bottom and two double bottoms in Goldman-Sachs (GS).

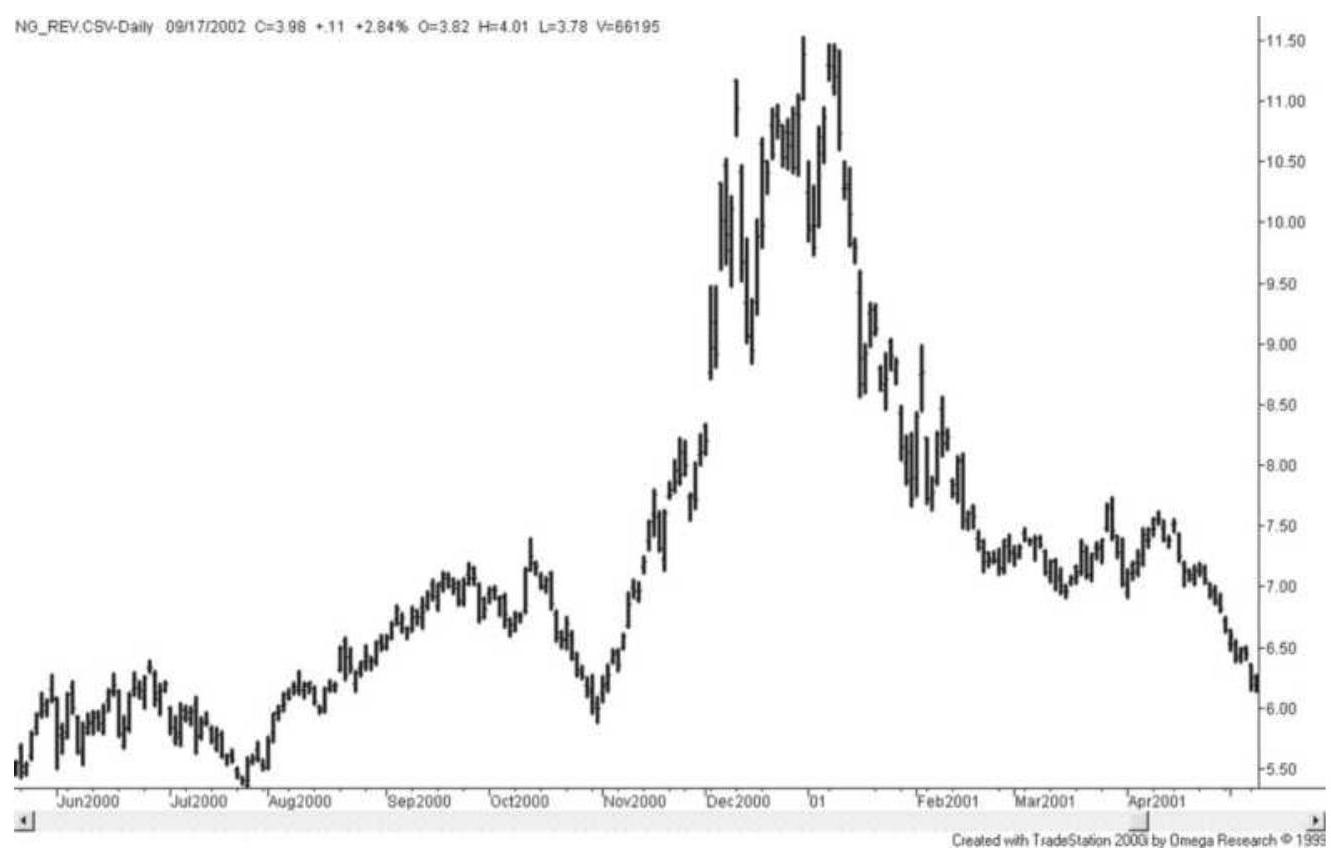

FIGURE 3.27 Natural gas shows a classic triple

top.

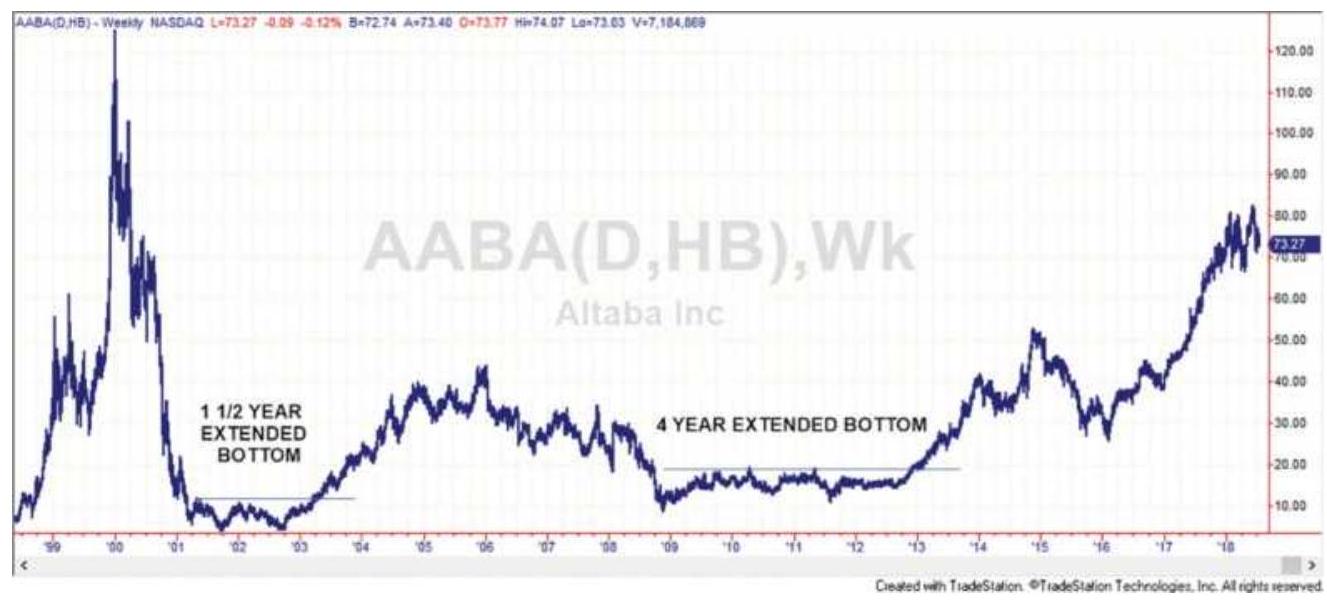

FIGURE 3.28 Two cases of a breakout of an extended bottom in Yahoo (AABA).

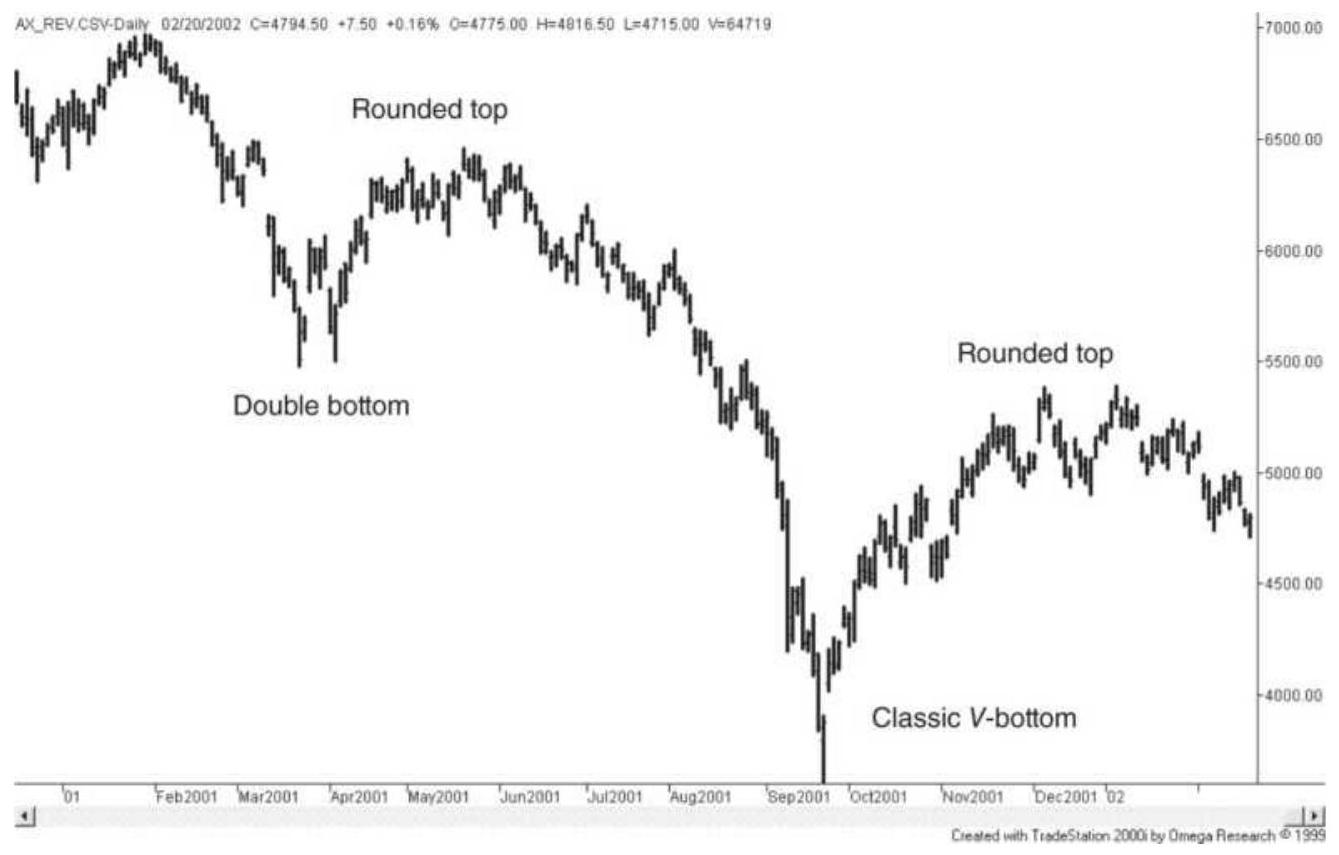

FIGURE 3.29 Two rounded tops in the German DAX stock index.

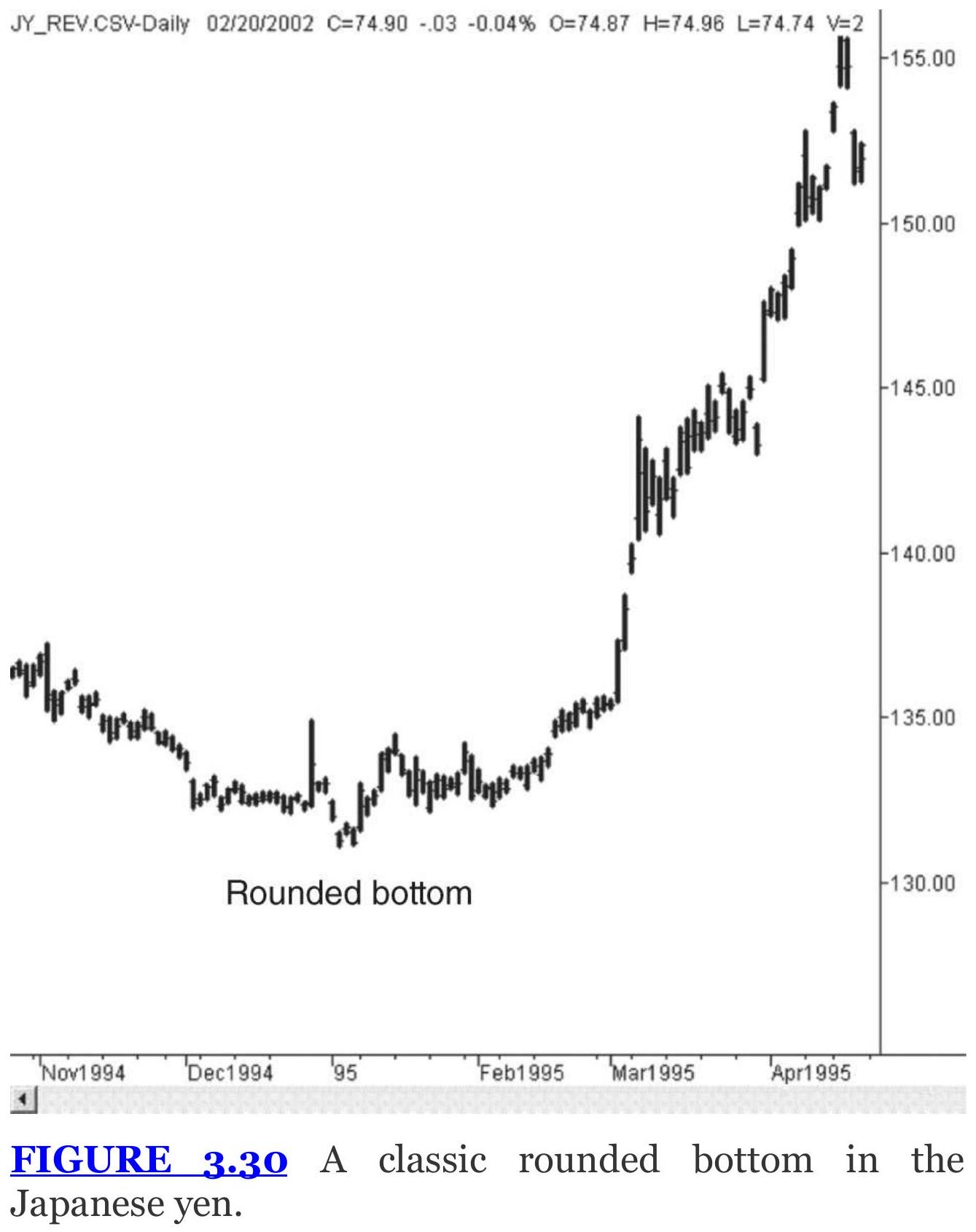

FIGURE 3.30 A classic rounded bottom in the Japanese yen.

FIGURE 3.31 A large declining wedge followed by a upside breakout in the Jap...

FIGURE 3.32 Head-and-shoulders top pattern in the Japanese Nikkei index.

FIGURE 3.33 On the left, an episodic pattern shown in an upward price shock ...

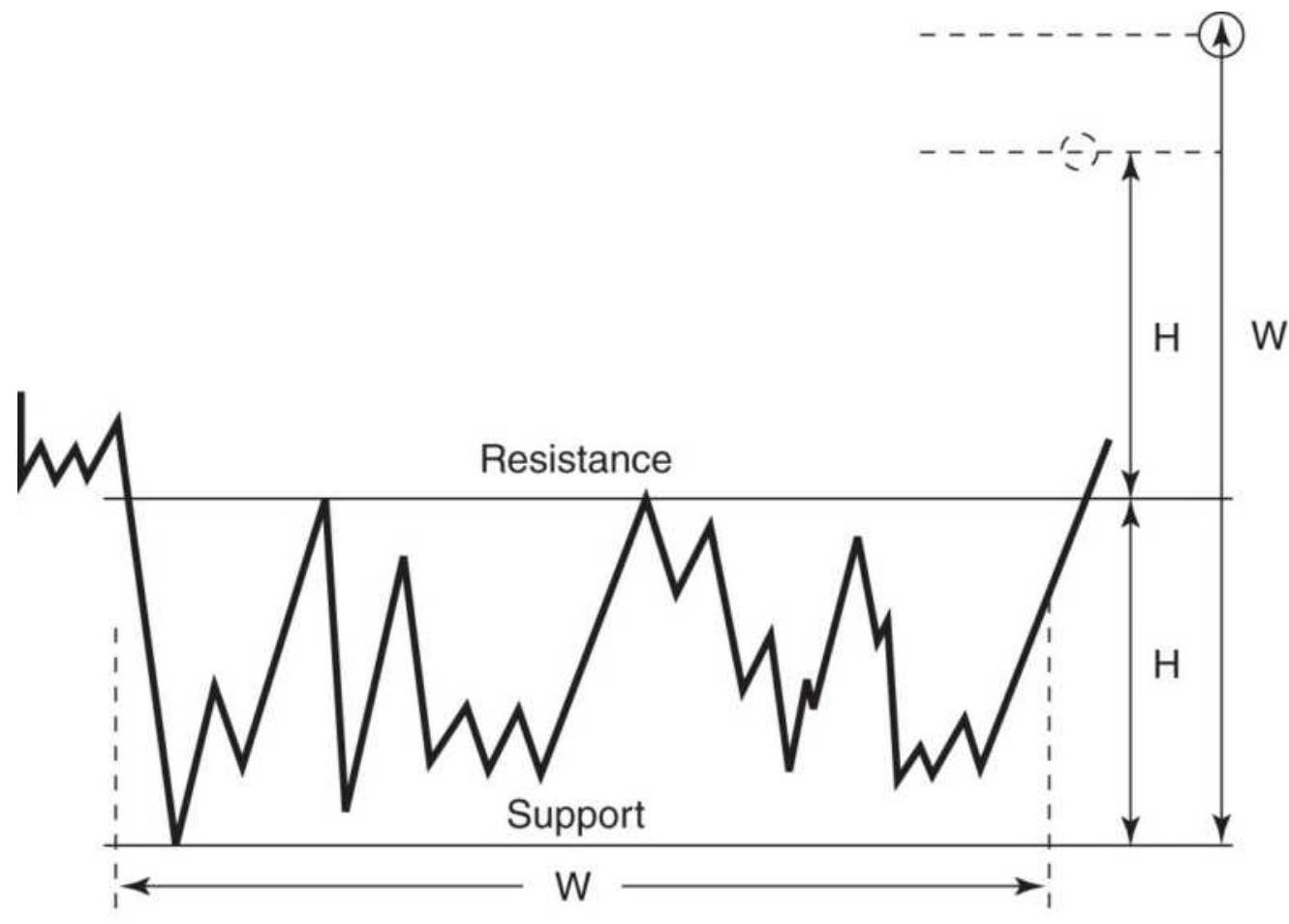

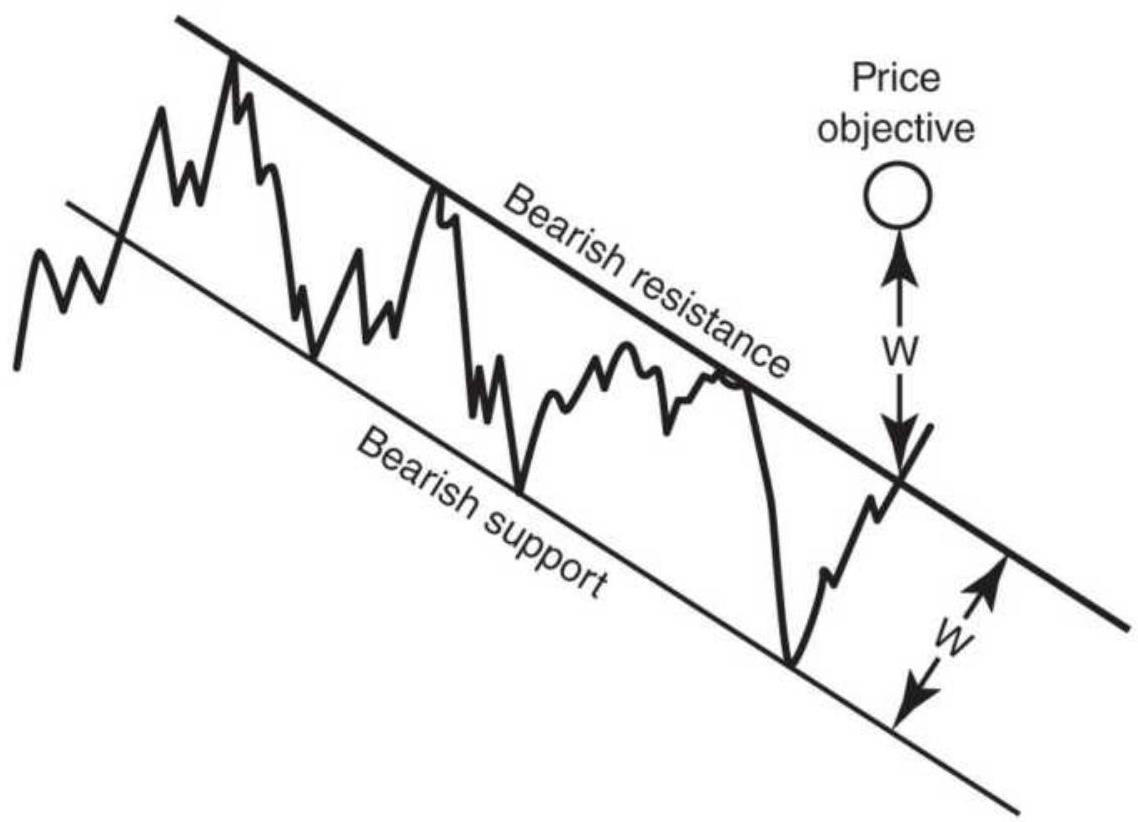

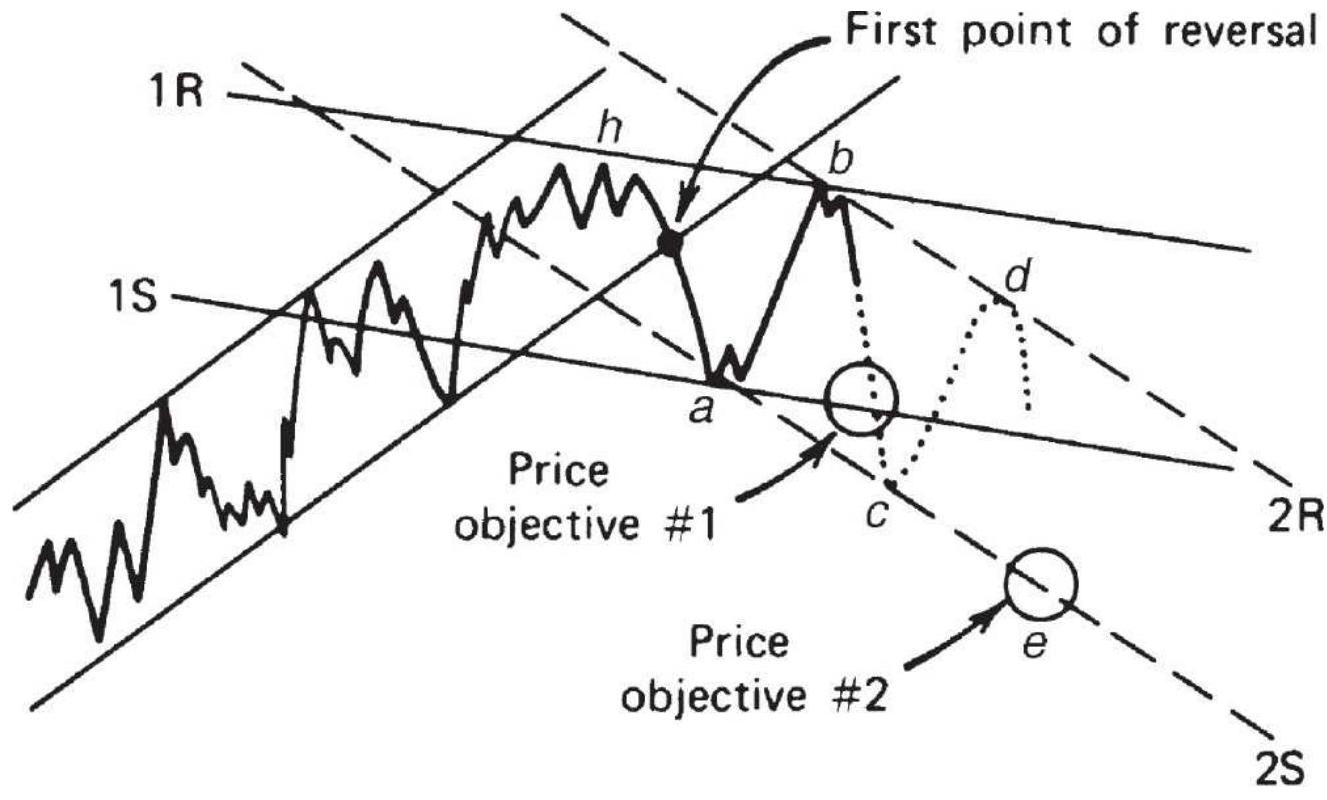

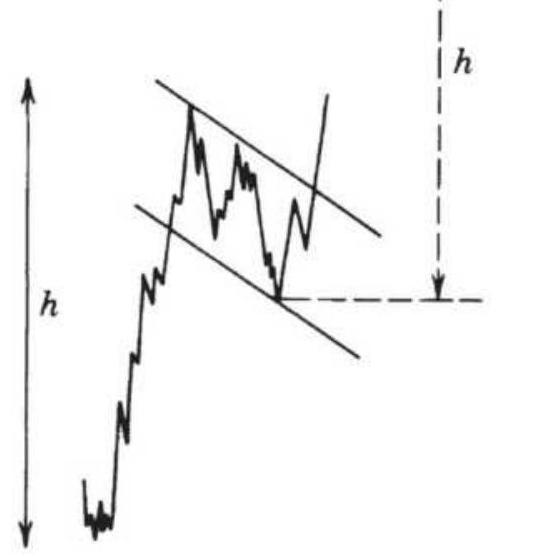

FIGURE 3.34 Price objectives for consolidation patterns and channels. (a) Tw...

\section*{FIGURE 3.35 Forming new channels to determine objectives.}

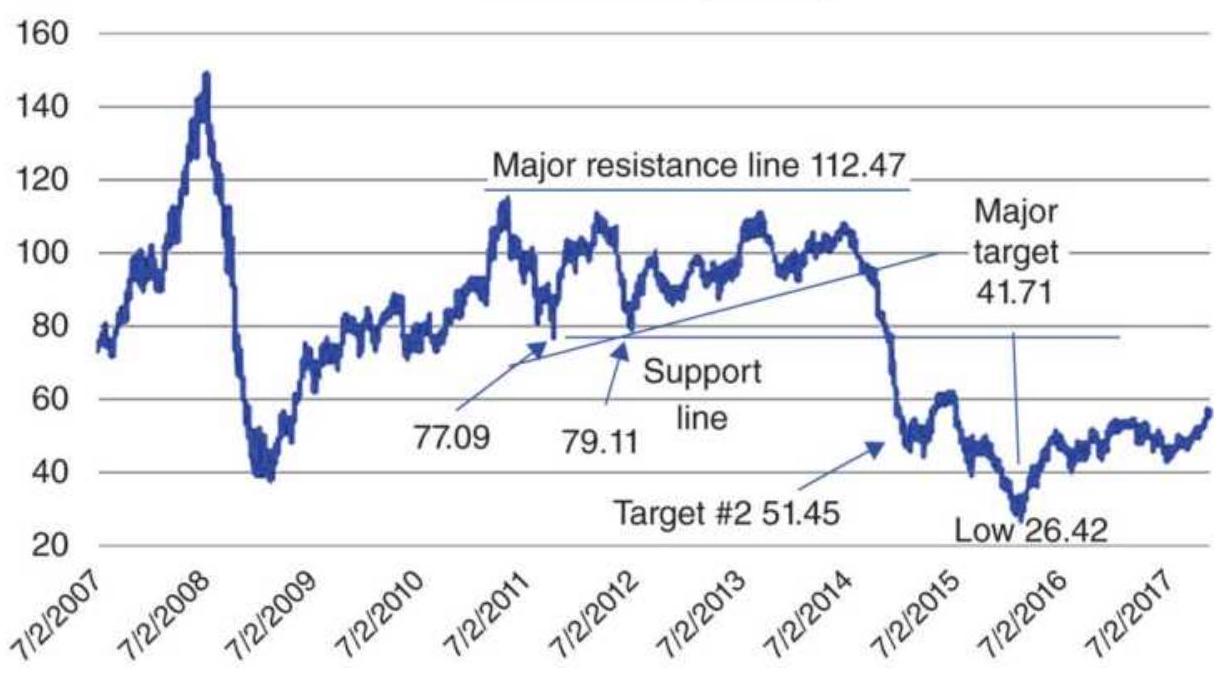

FIGURE 3.36 Two profit targets following a top formation in back-adjusted cr...

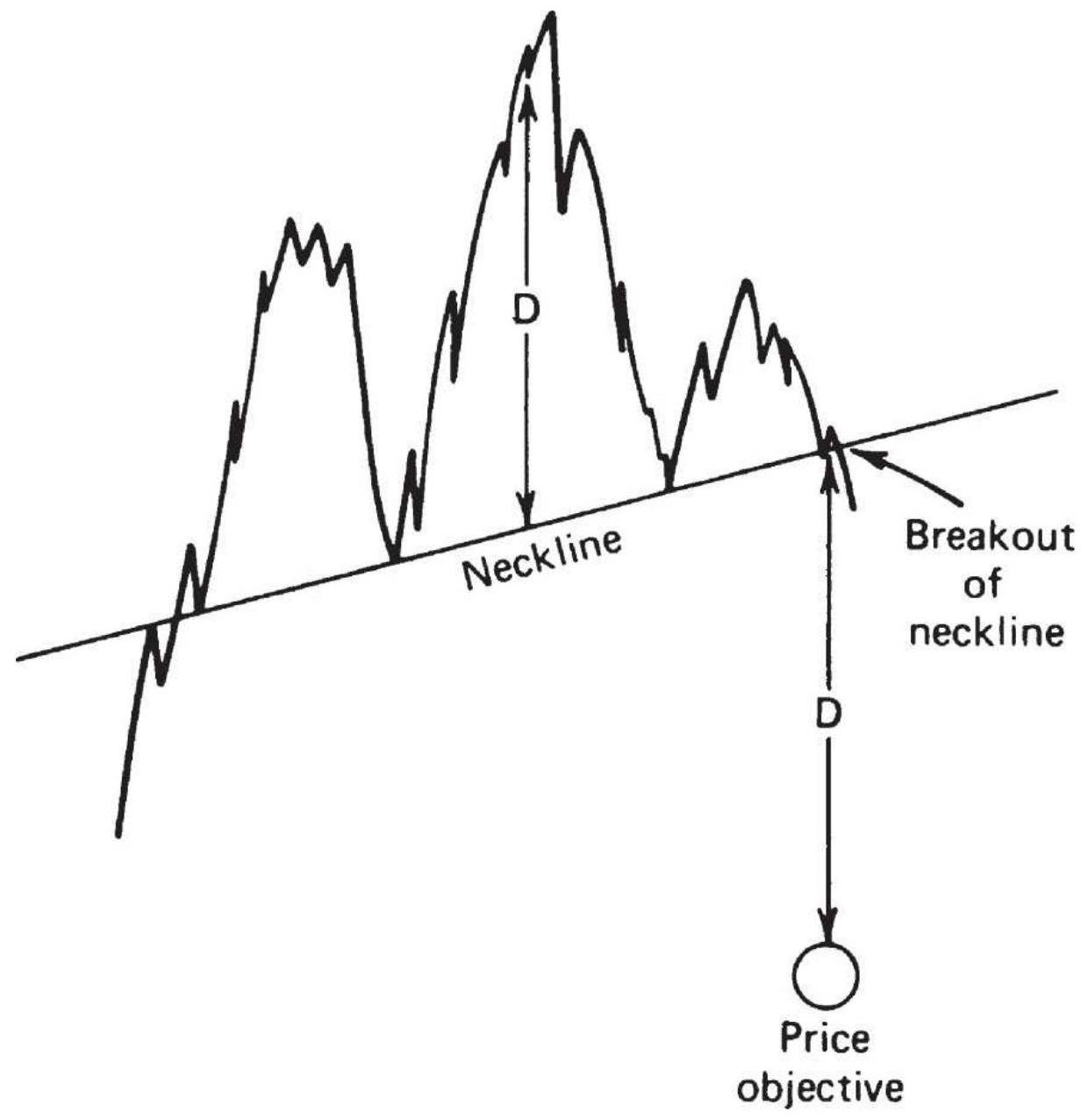

FIGURE 3.37 Head-and-shoulders top price objective.

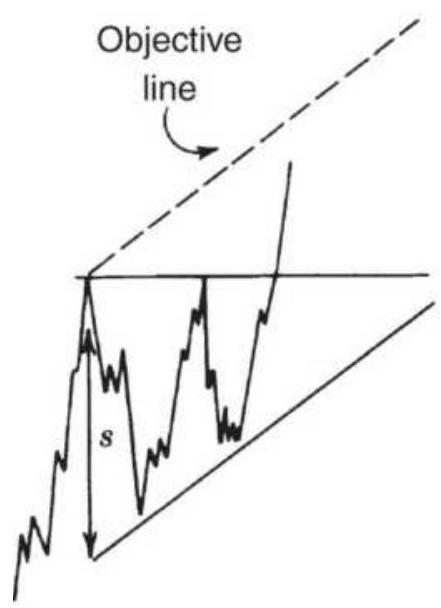

FIGURE 3.38 Triangle and flag objectives. (a) Triangle objective is based on...

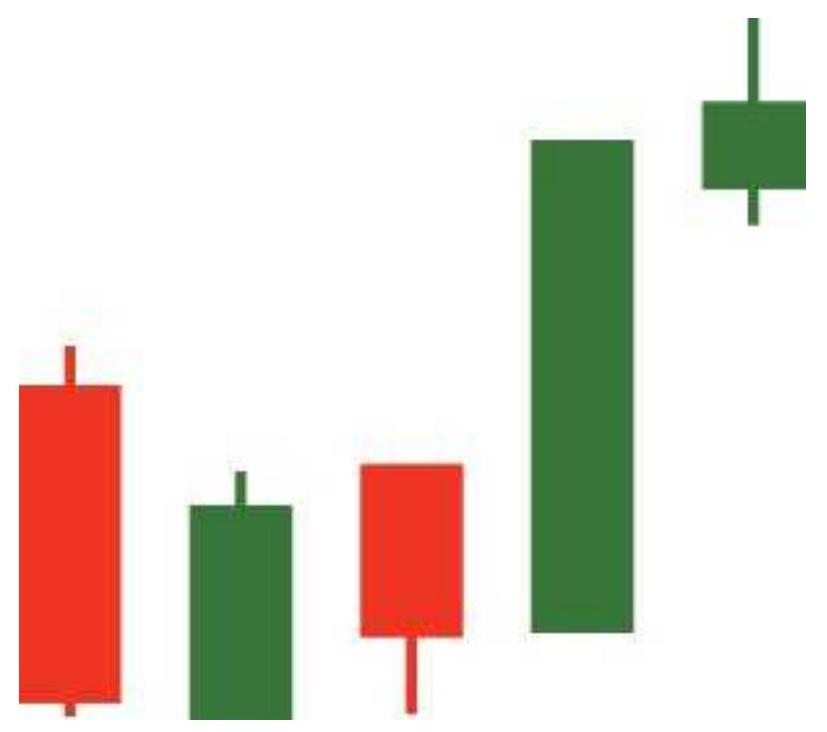

FIGURE \(3.39(a-j)\) Popular candle formations.

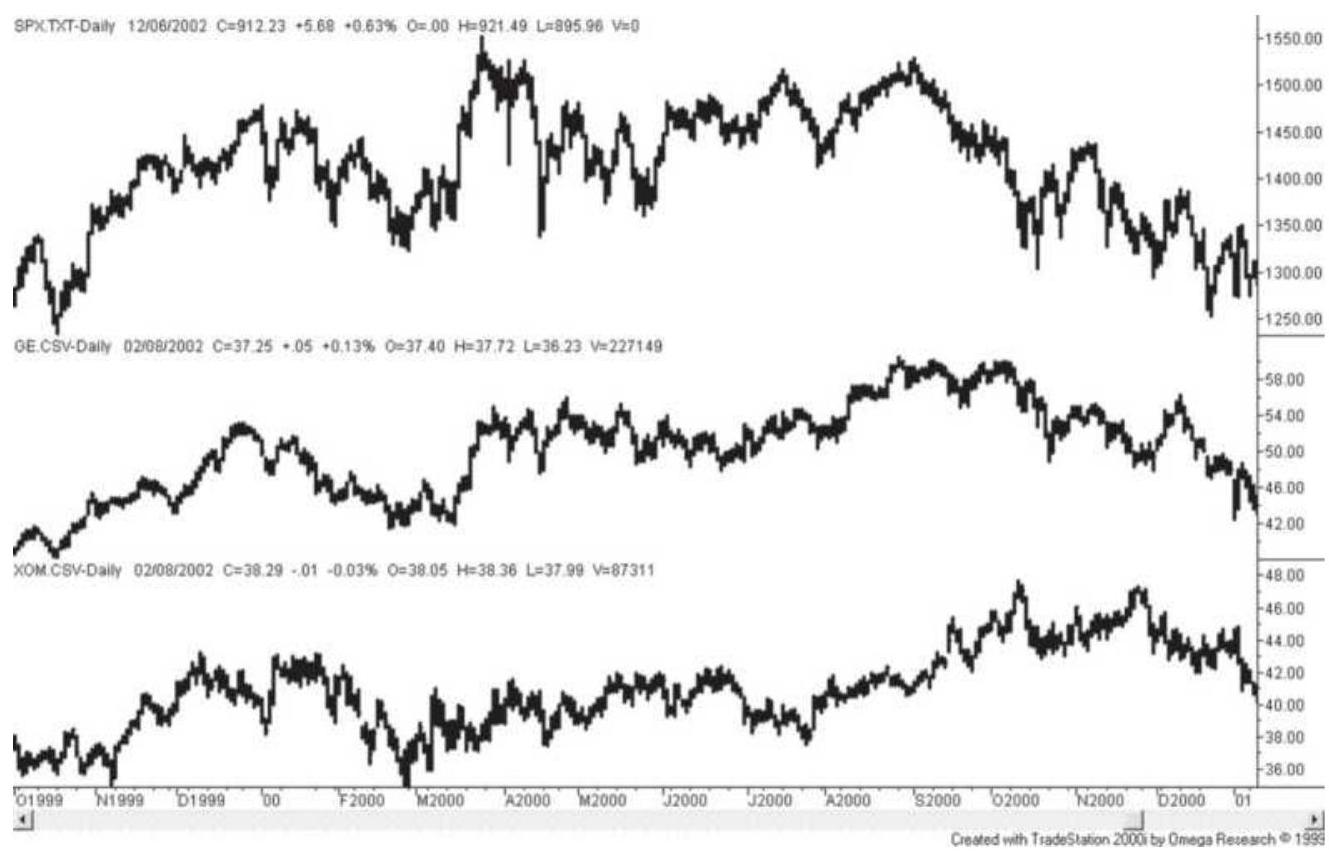

FIGURE 3.40 Similar patterns in the S\&P, GE, and Exxon.

FIGURE 3.41 Asian equity index markets adjusted to the same volatility level...

Chapter 4

FIGURE 4.1 Nofri's Congestion-Phase System applied to wheat, as programmed o...

FIGURE 4.2 Four daily patterns.

FIGURE 4.3 U.S. 30-year T-bond prices showing pivot points above and below \(t\)... FIGURE 4.4 Tubbs' Law of Proportion. FIGURE 4.5 Trident entry-exit.

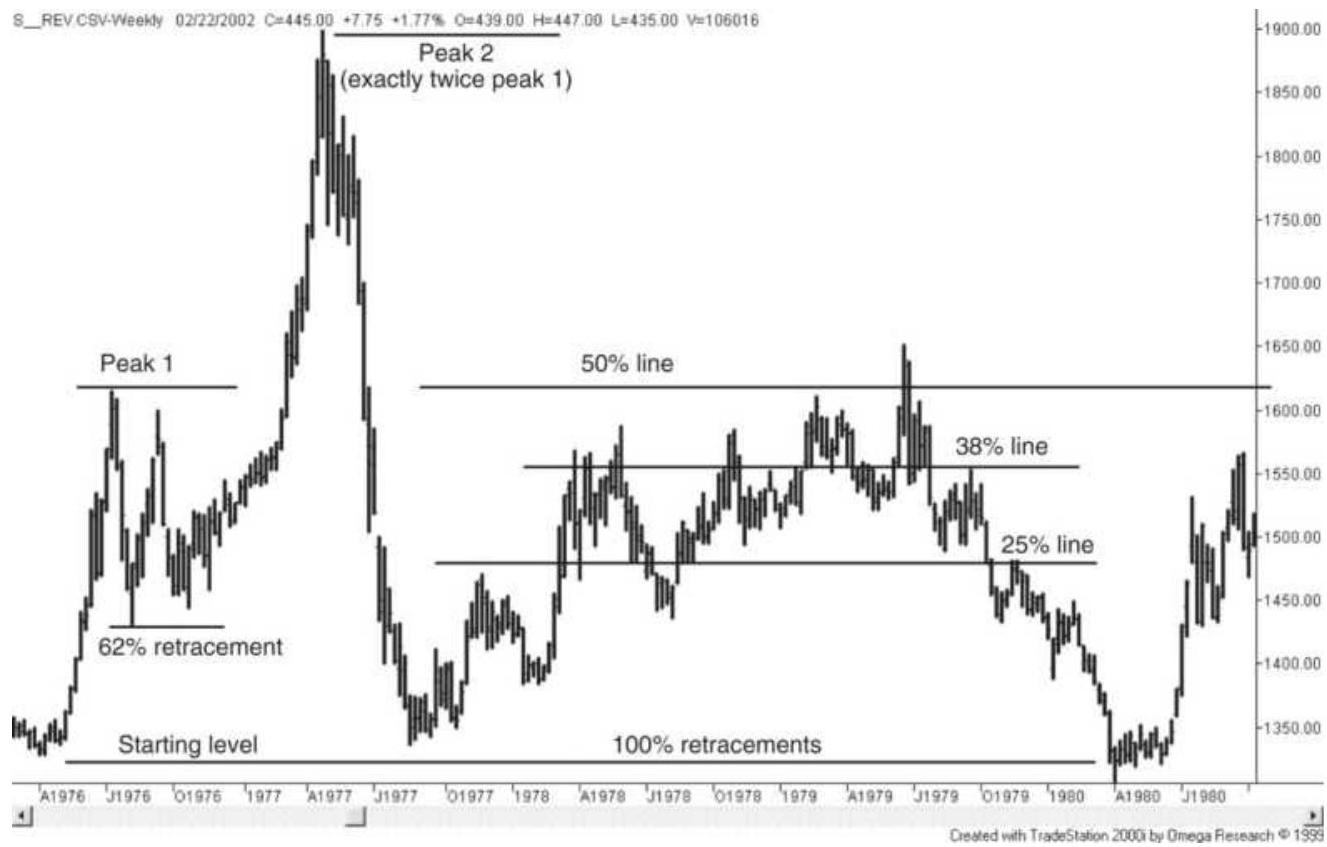

FIGURE 4.6 Soybean retracements in the late 1970 s.

FIGURE 4.7 S\&P retracement levels.

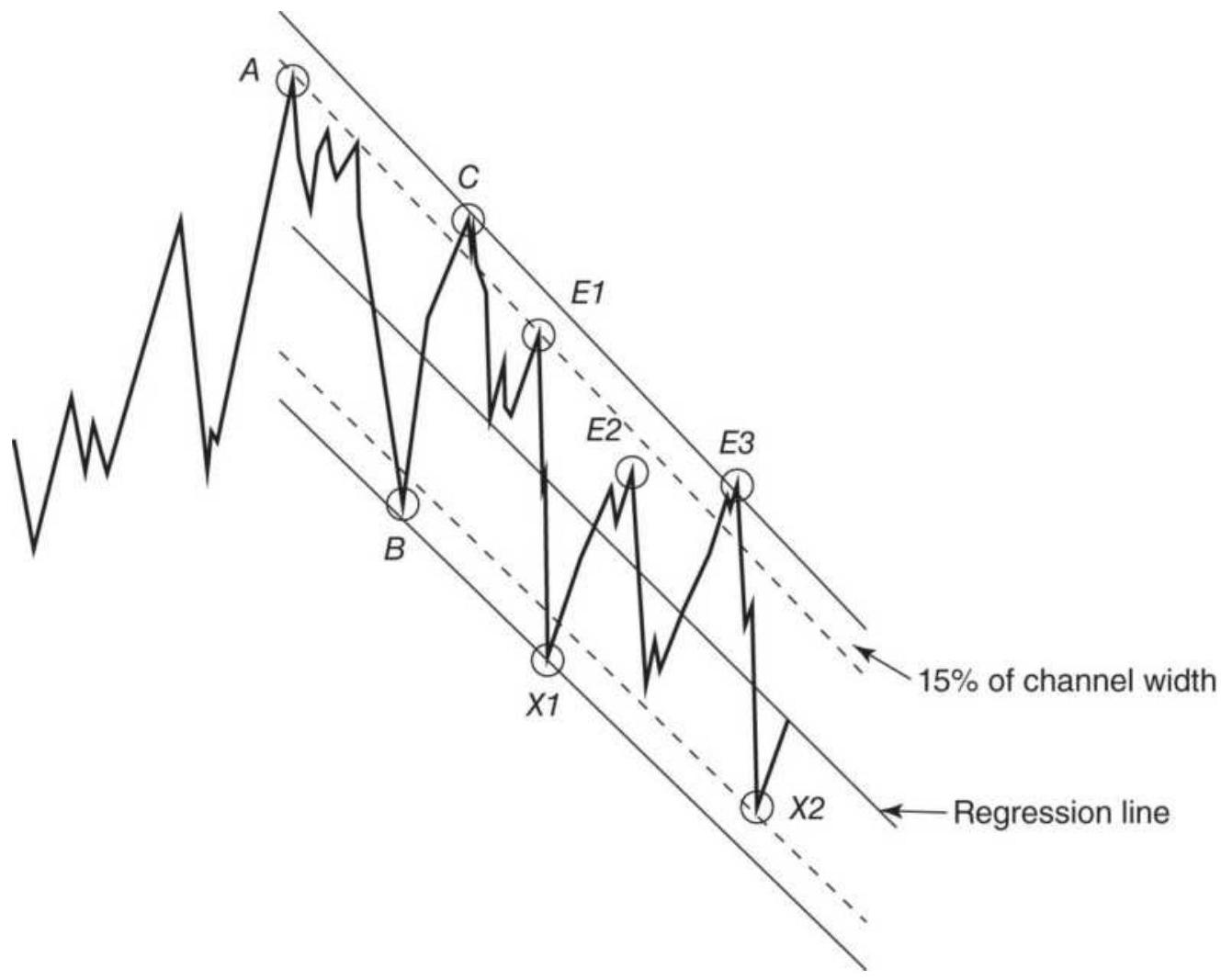

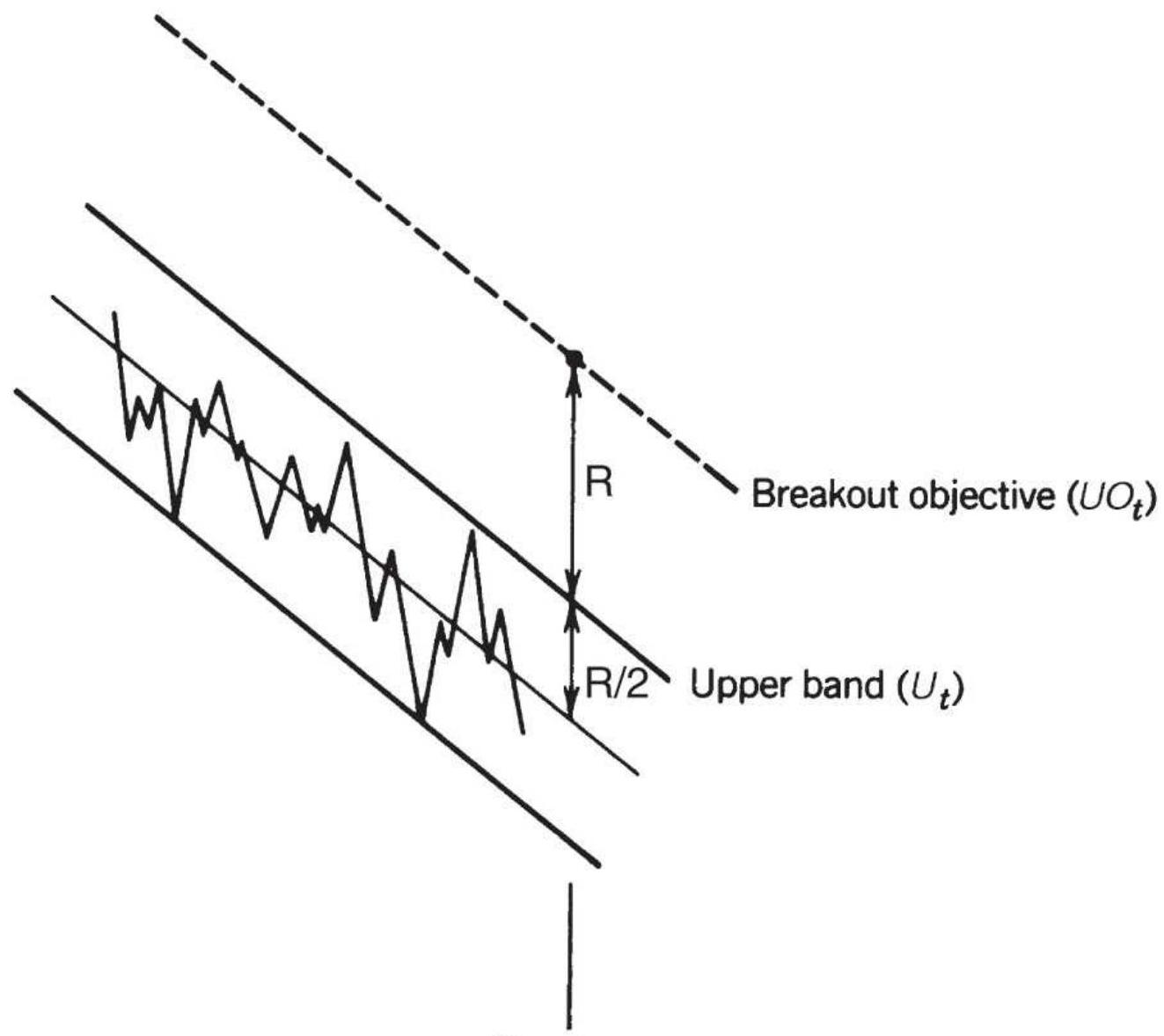

FIGURE 4.8 Trading a declining channel.

FIGURE 4.9 Channel calculation.

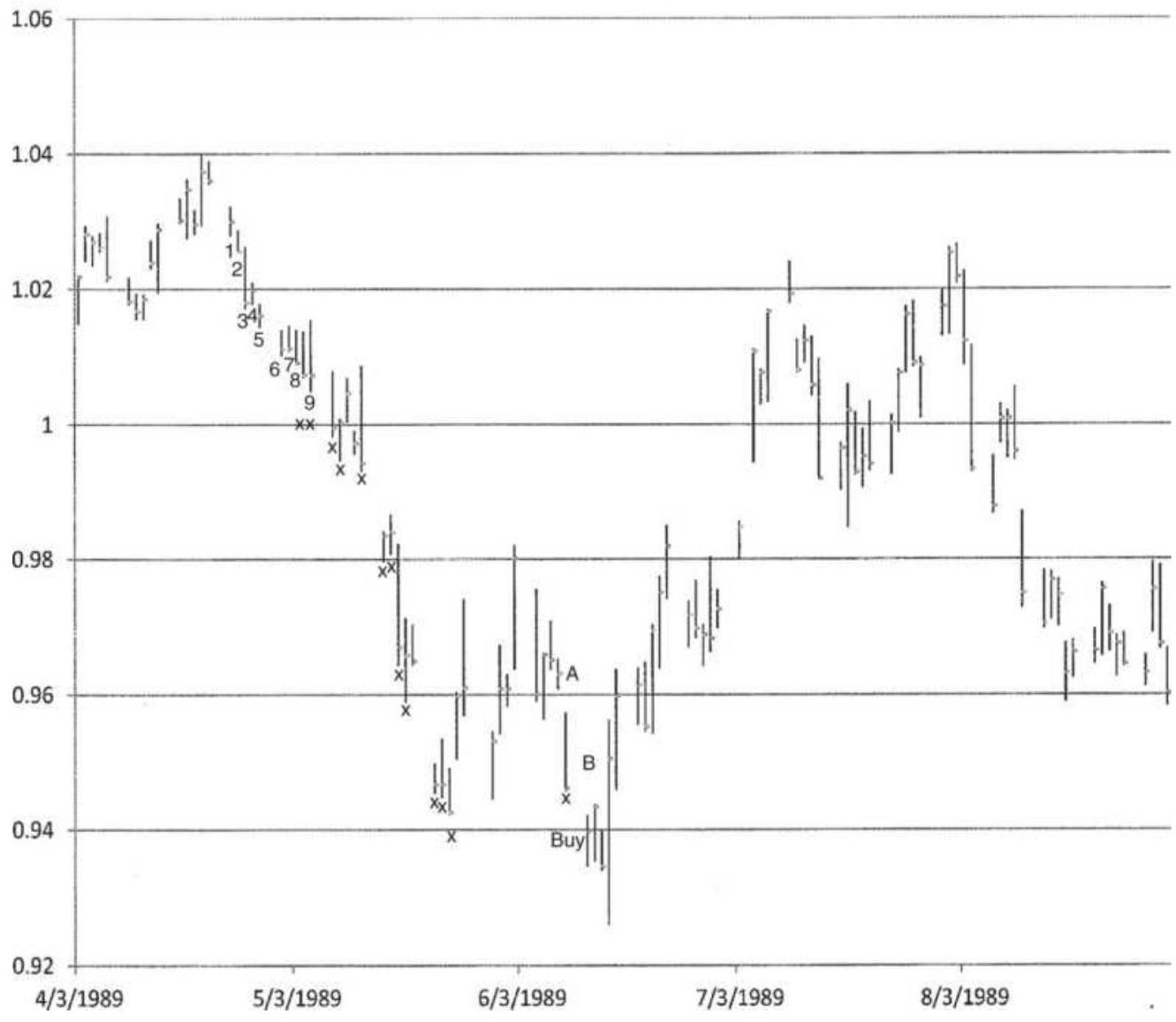

FIGURE 4.10 A sequential buy signal in the Deutsche mark.

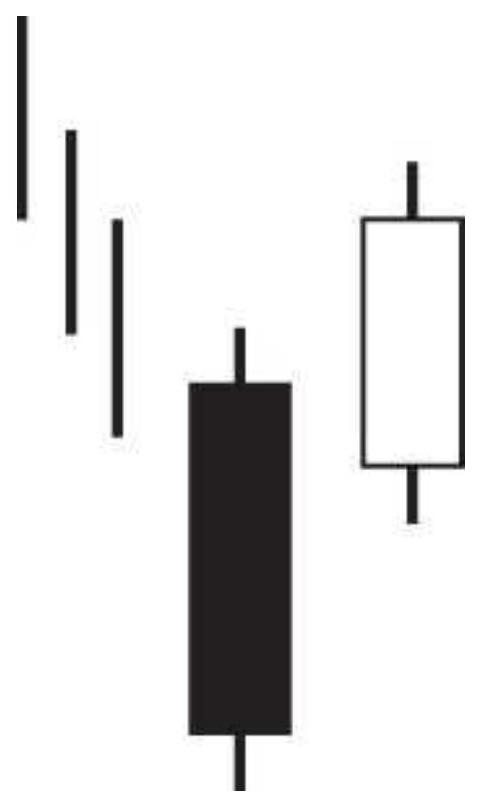

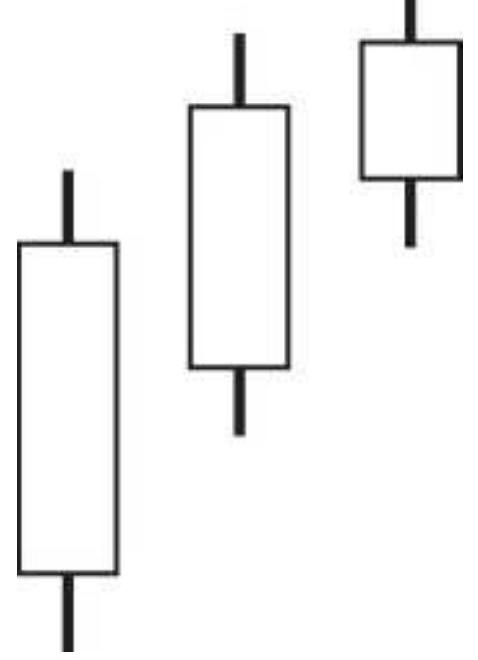

Figure 4.11a Above the stomach.

Figure 4.11b Bullish belt hold.

Figure 4.11c Deliberation.

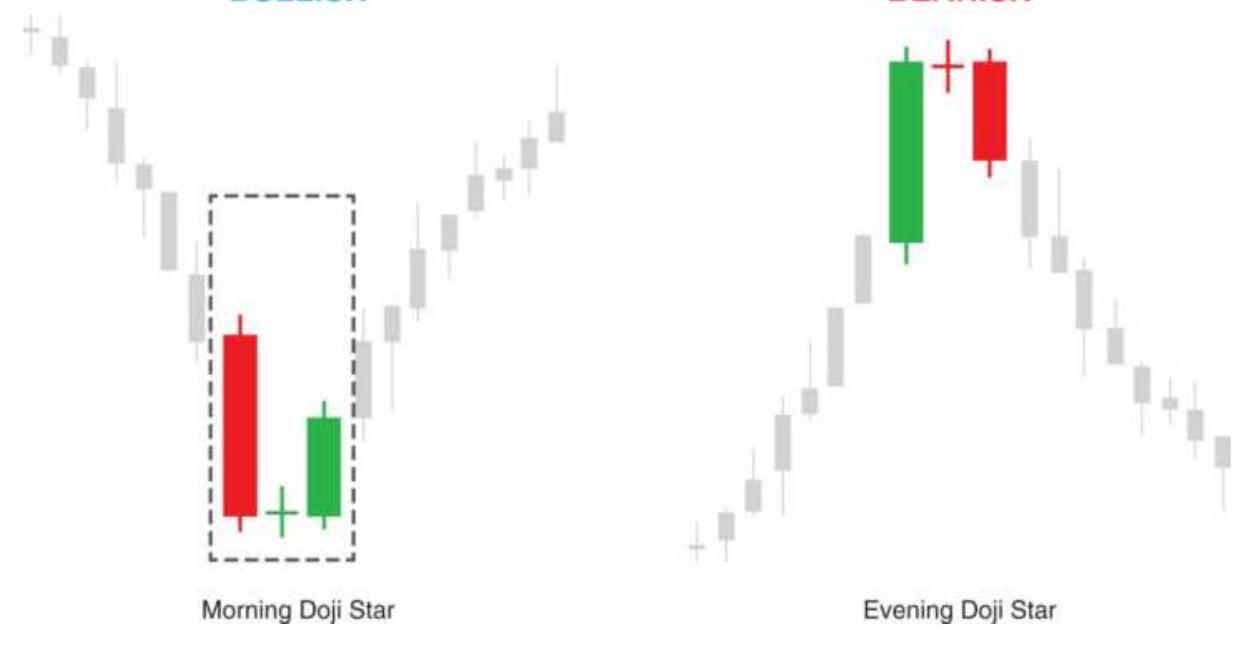

Figure 4.11d Morning doji star and evening doji star.

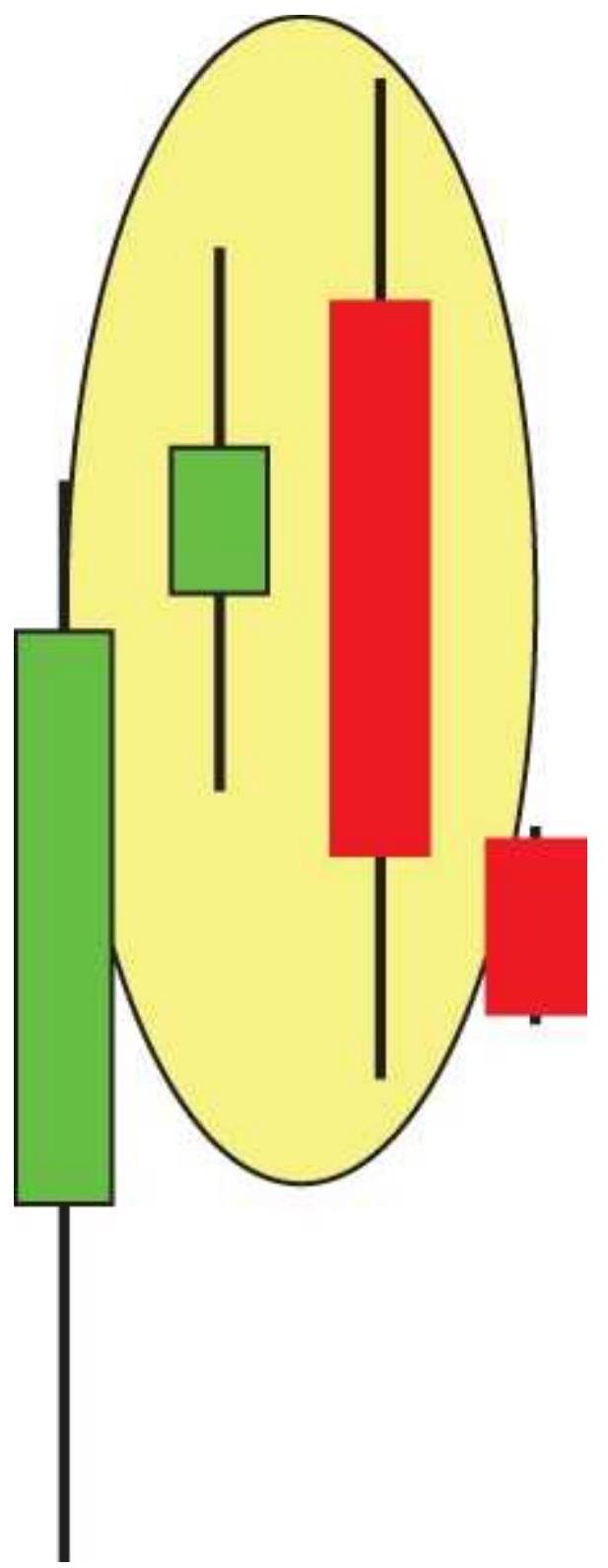

Figure 4.11e Bearish engulfing.

Figure 4.11f Last engulfing top.

Figure 4.11g Three outside up.

Figure 4.11h Two black gapping.

Figure 4.11i Rising window.

\section*{Chapter 5}

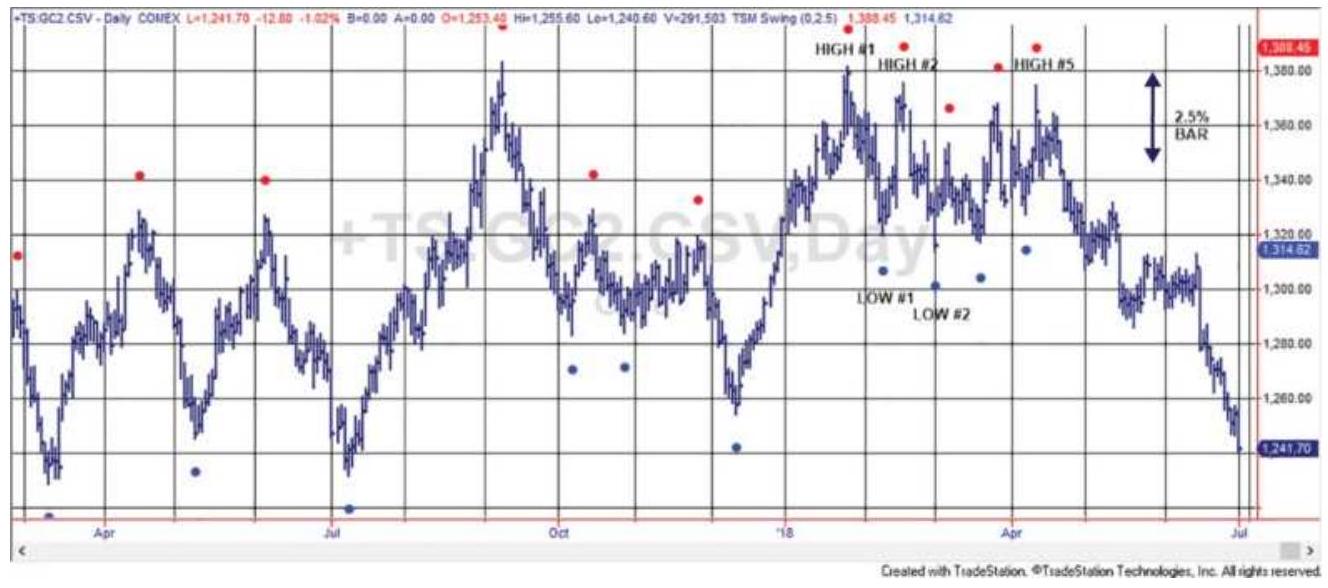

FIGURE 5.1 Gold futures with \(2.5 \%\) swing points marked.

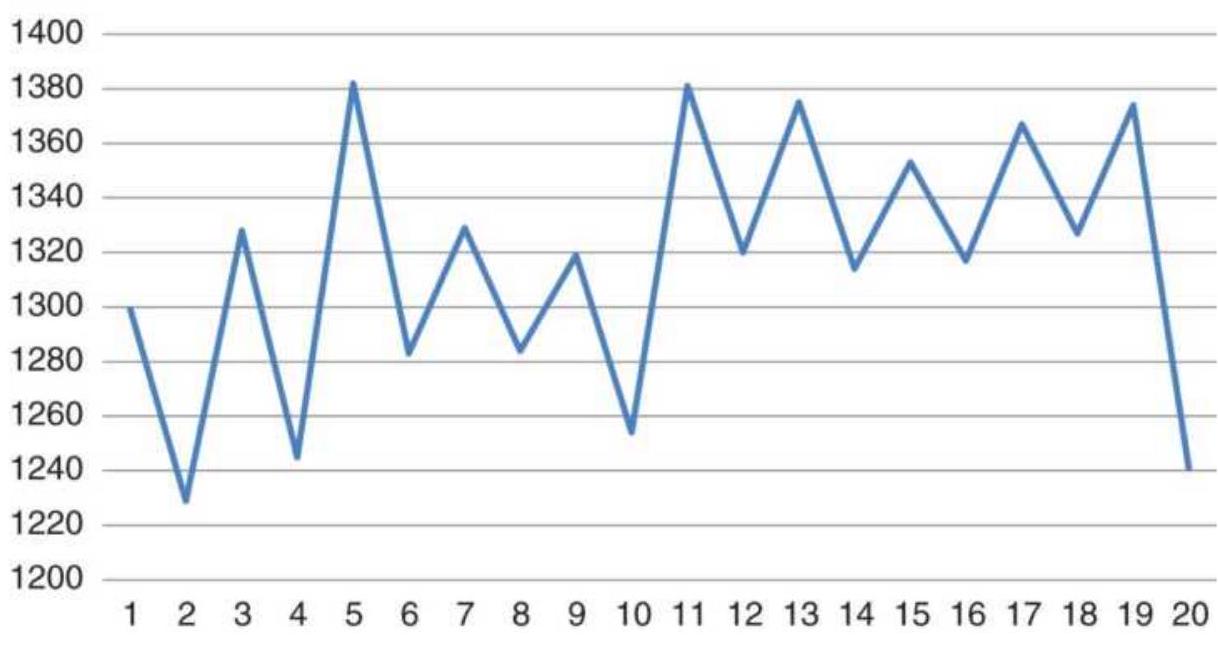

FIGURE 5.2 Corresponding swing chart of gold using a \(2.5 \%\) swing filter.

FIGURE 5.3 Recording swings by putting the dates in the first box penetrated...

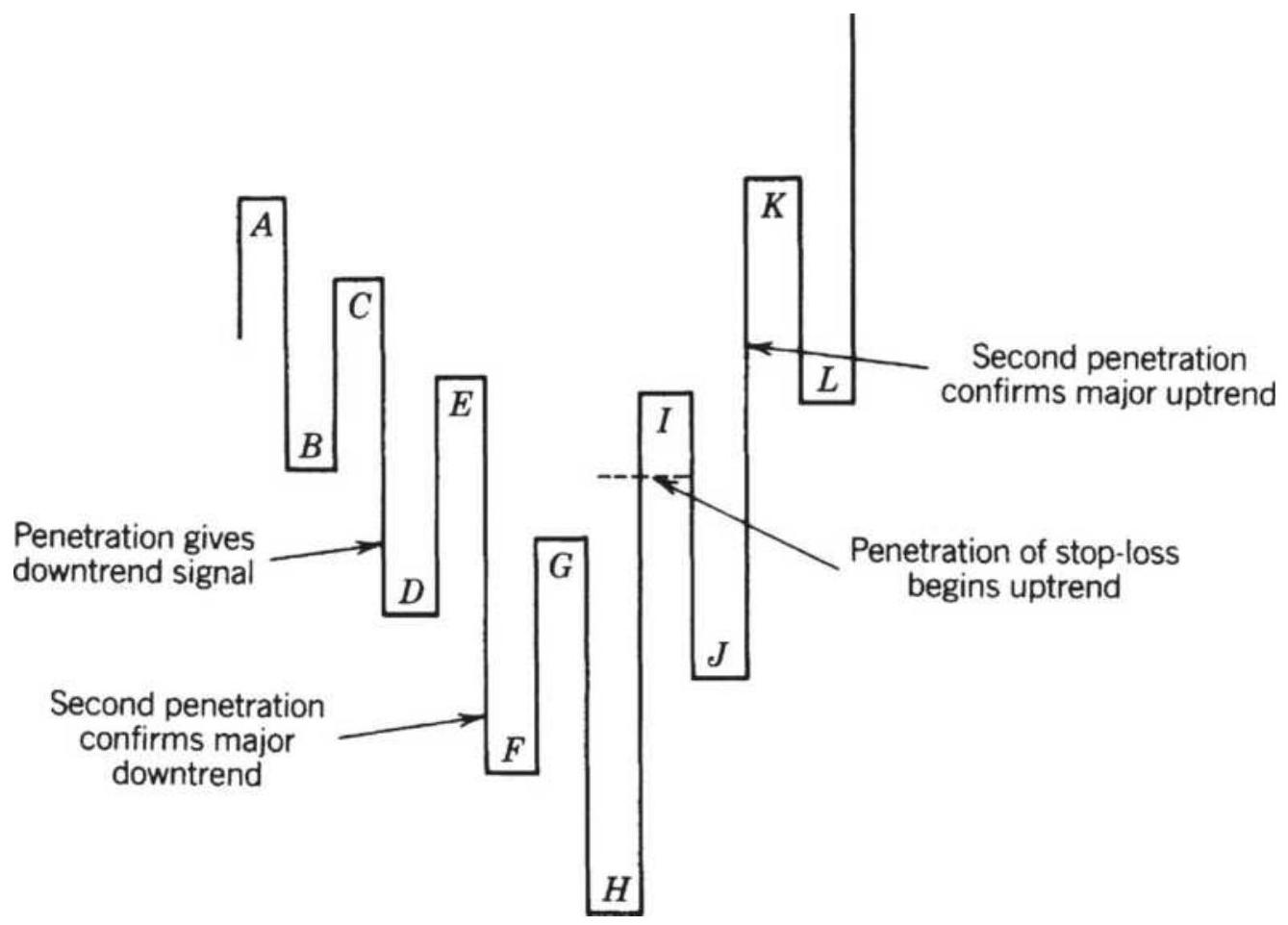

FIGURE 5.4 Livermore's trend change rules.

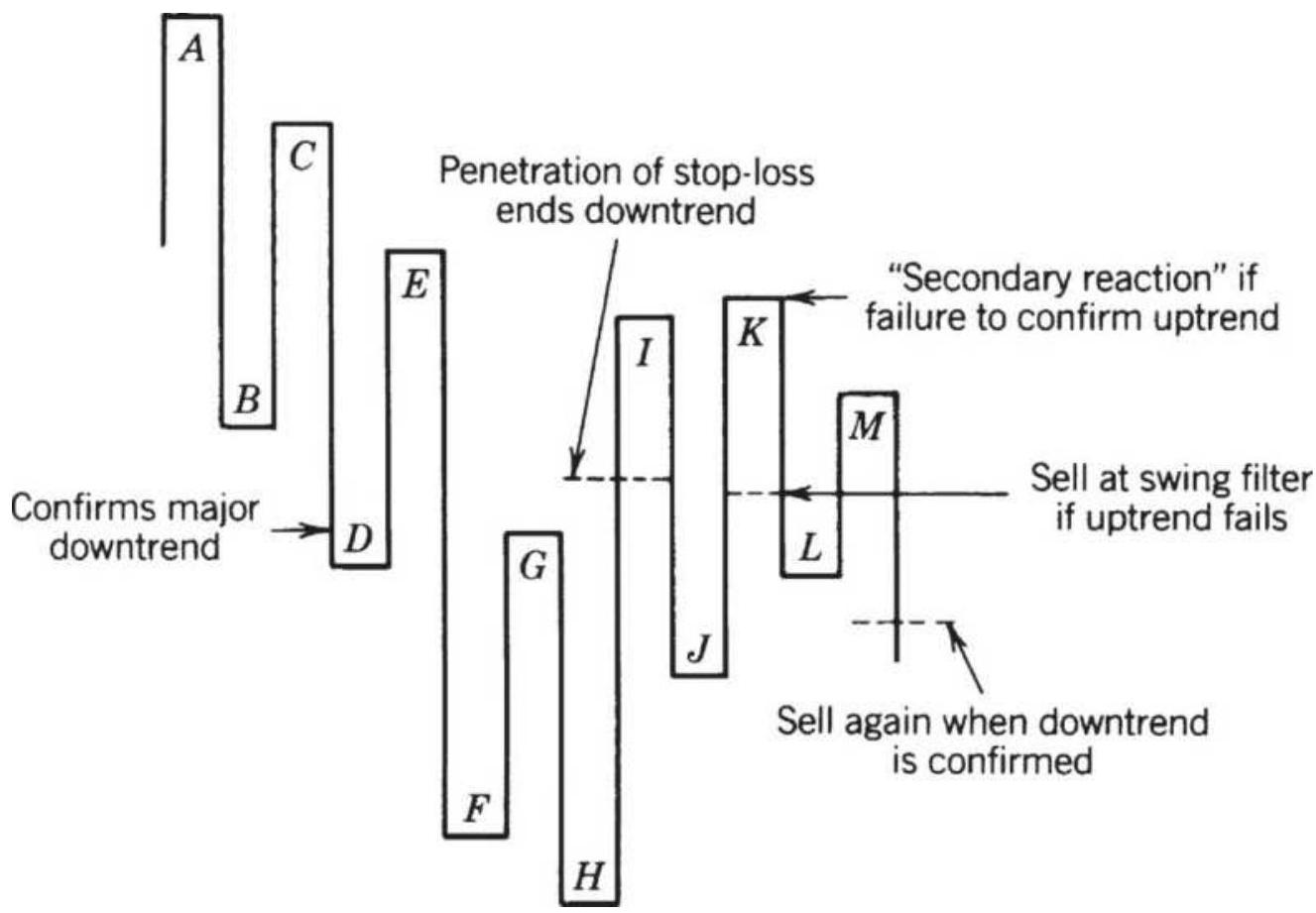

FIGURE 5.5 Failed reversal in the Livermore method.

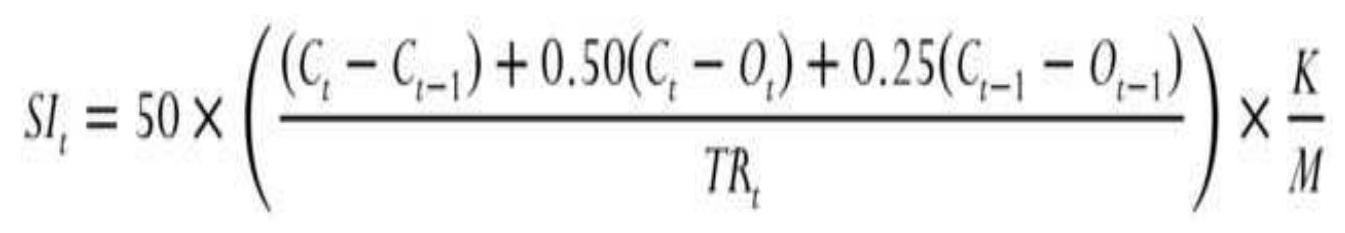

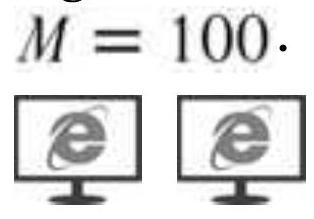

FIGURE 5.6 Wilder's Swing Index applied to Eurobund back-adjusted futures, u...

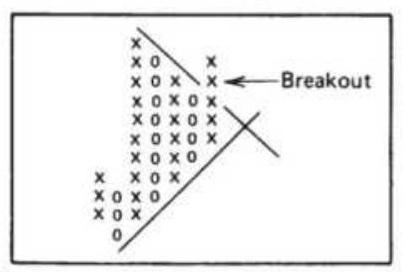

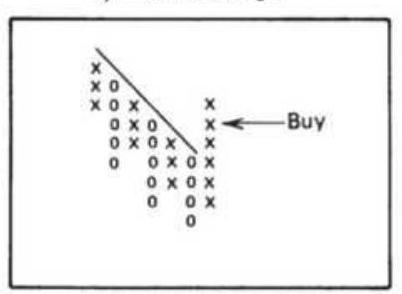

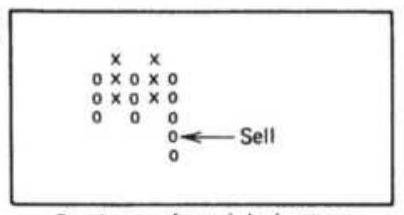

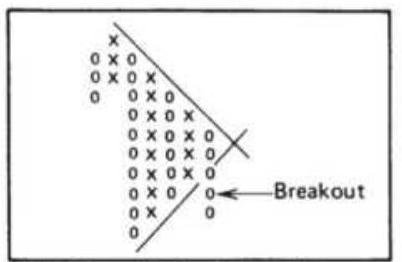

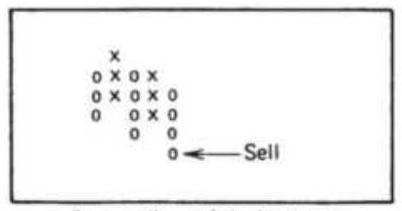

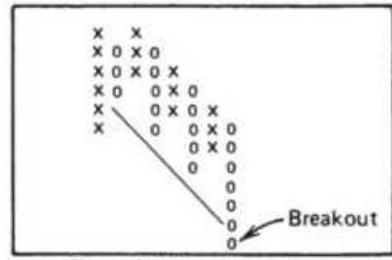

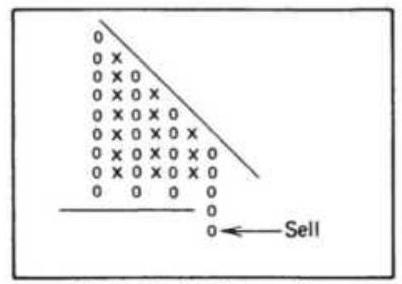

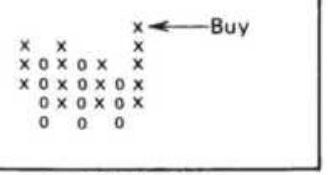

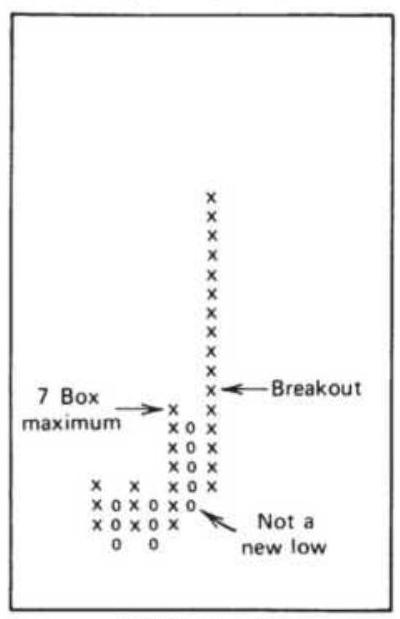

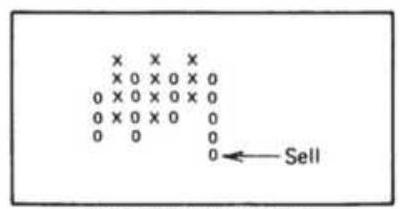

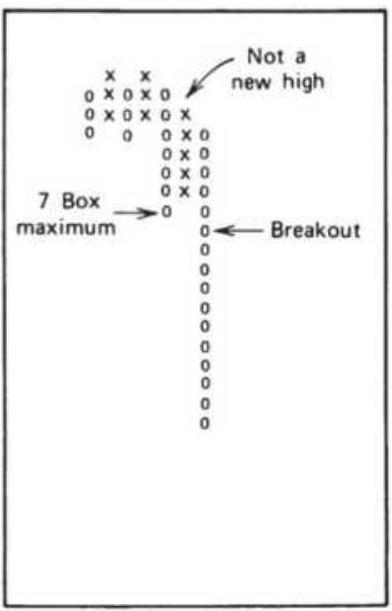

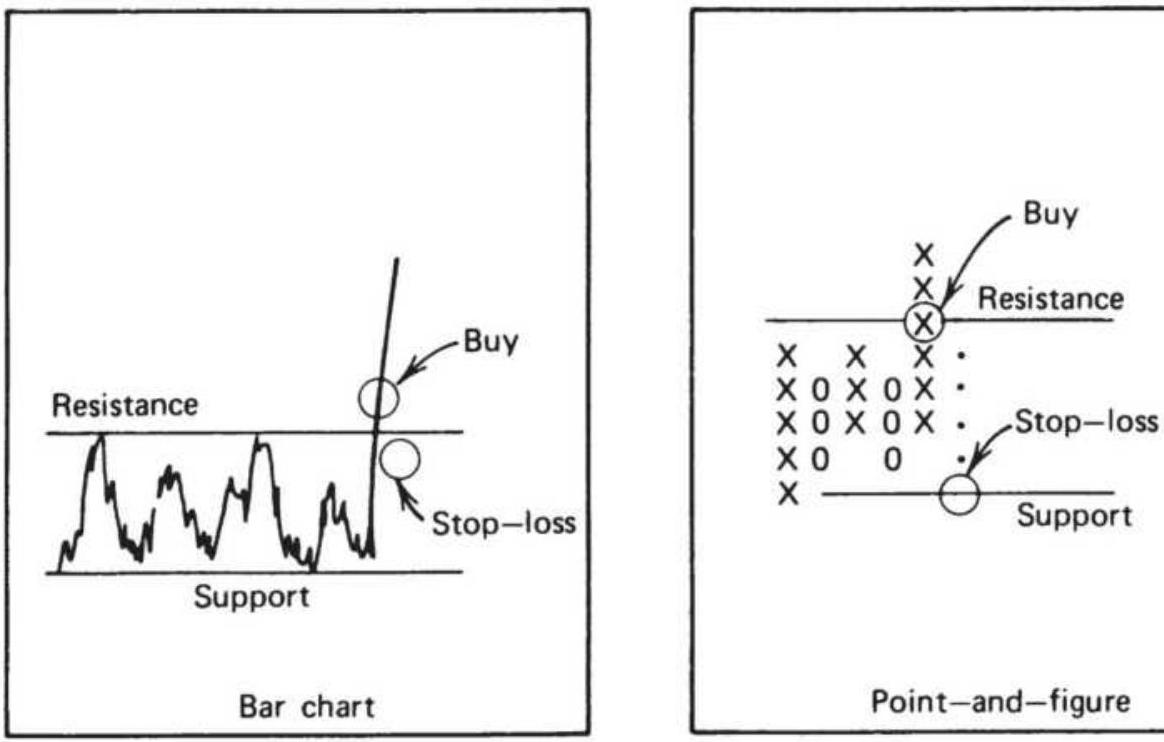

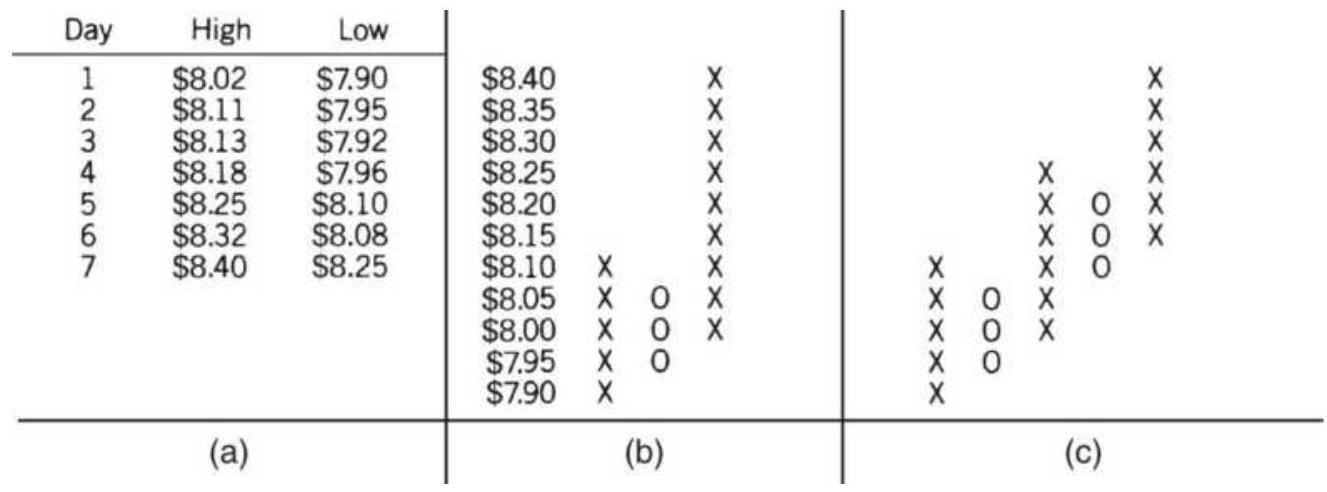

FIGURE 5.7 Point-and-figure chart.

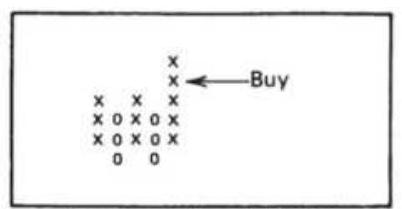

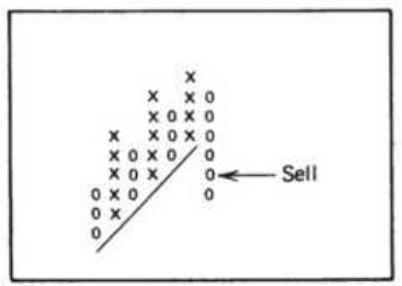

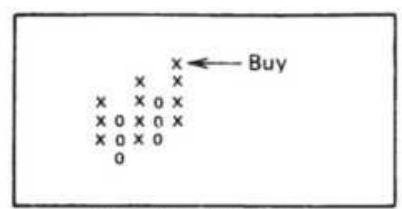

FIGURE 5.8 Best formations from Davis's study.

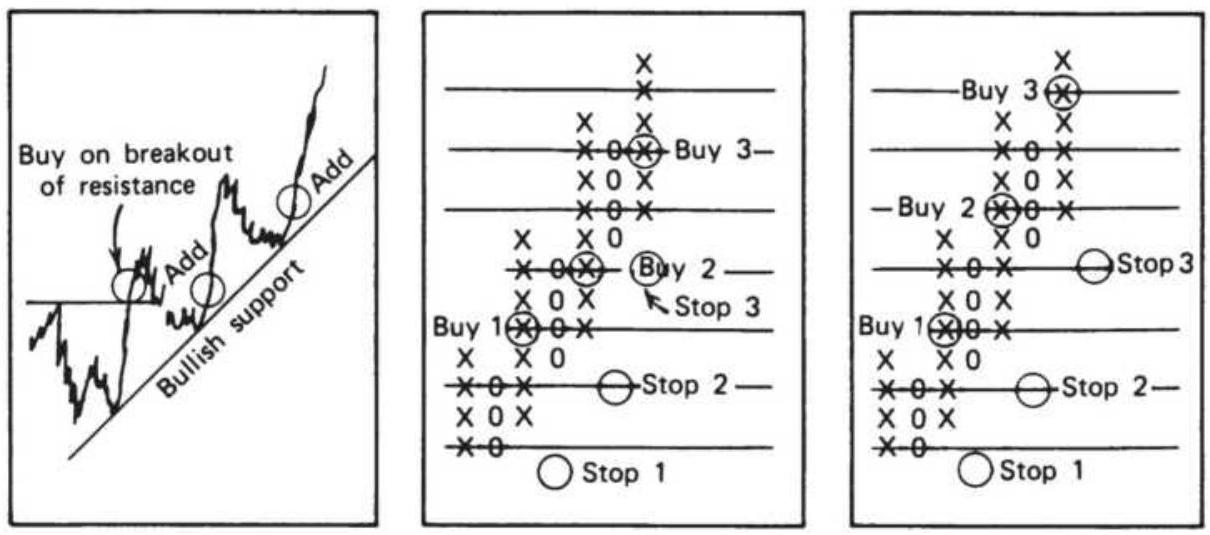

FIGURE 5.9 (a) Compound point-and-figure buy signals. (b) Compound point-and...

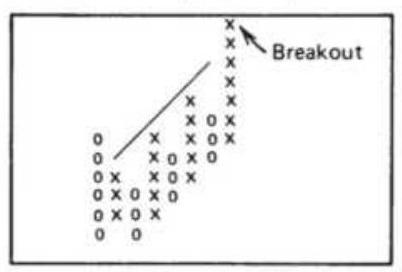

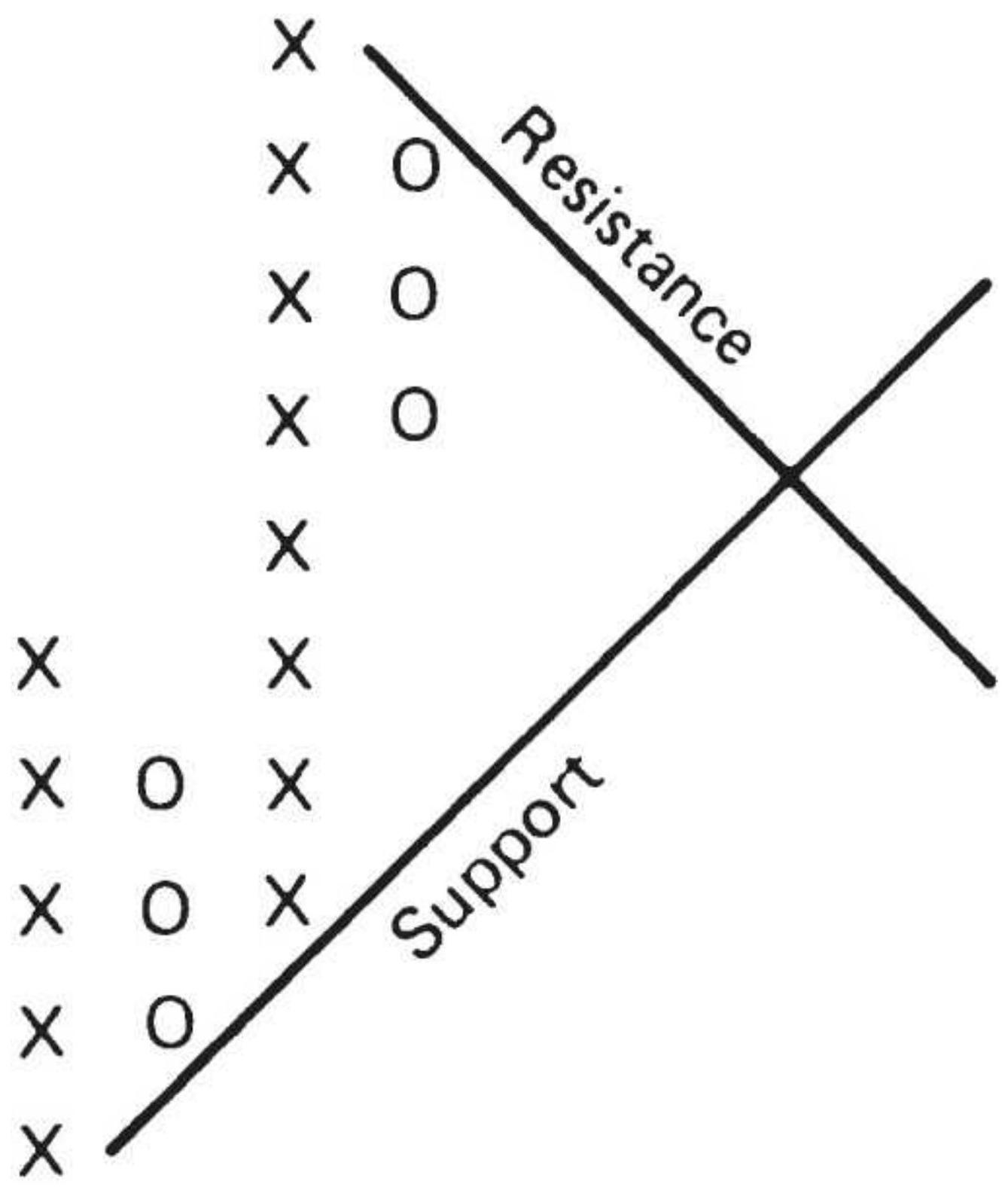

FIGURE 5.10 Point-and-figure trendlines.

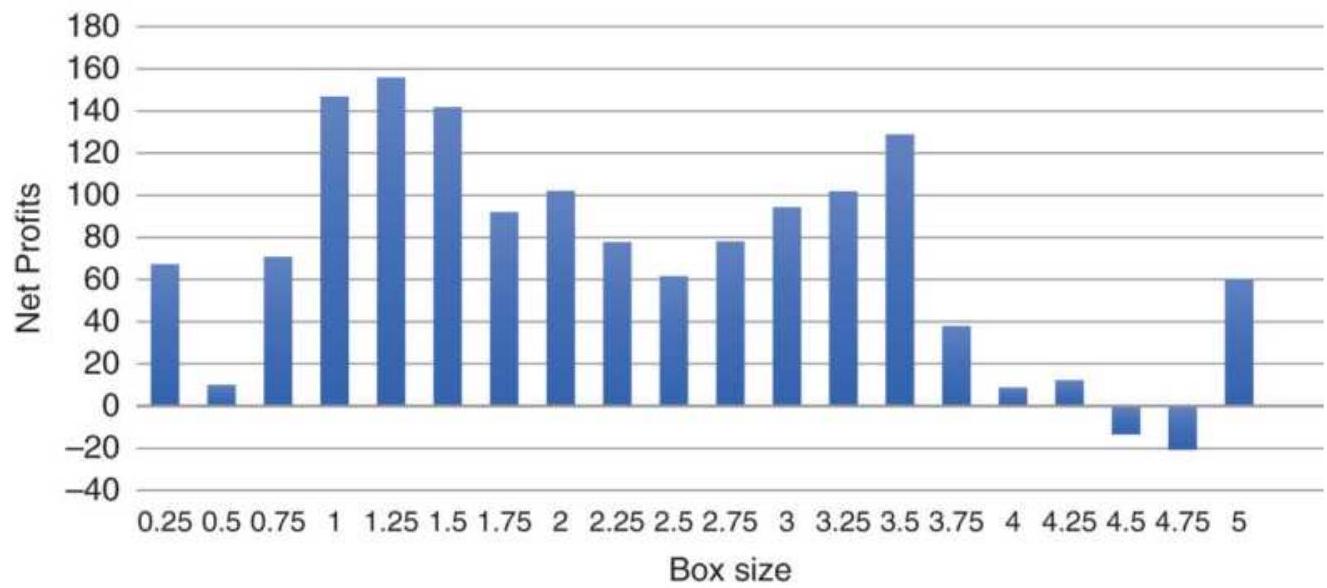

FIGURE 5.11 Tests of box size for Apple shows smaller is better.

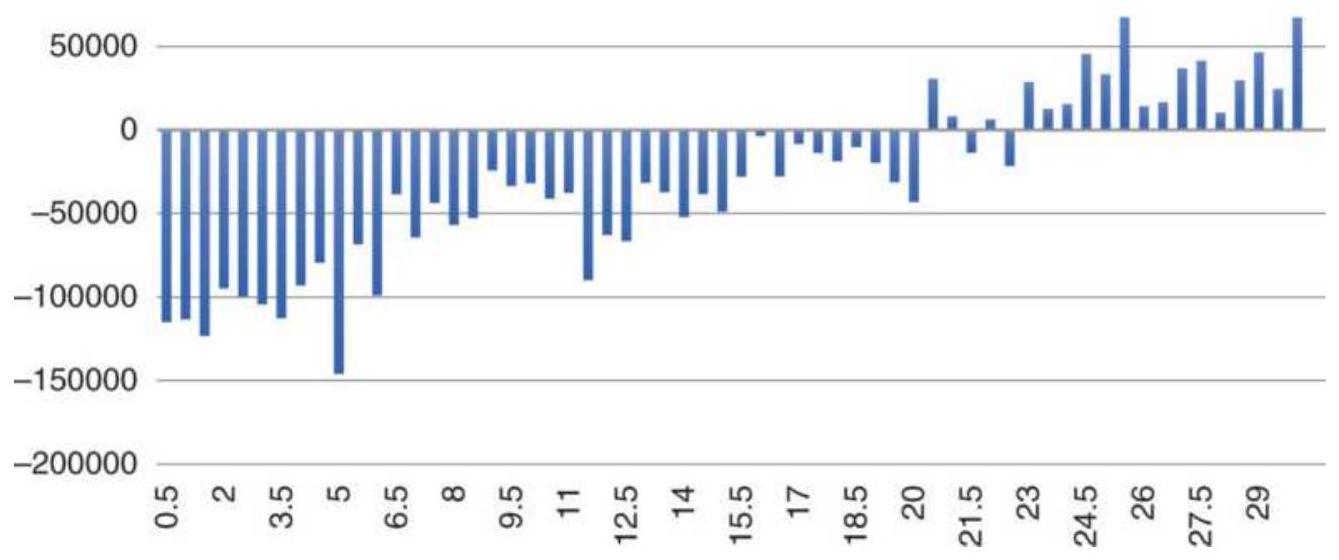

FIGURE 5.12 Tests of the S\&P futures shows results similar to moving average...

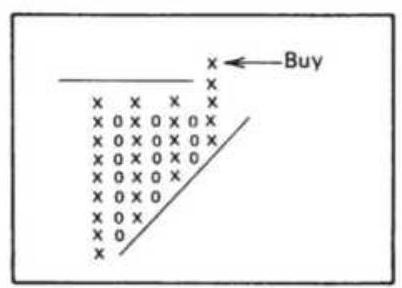

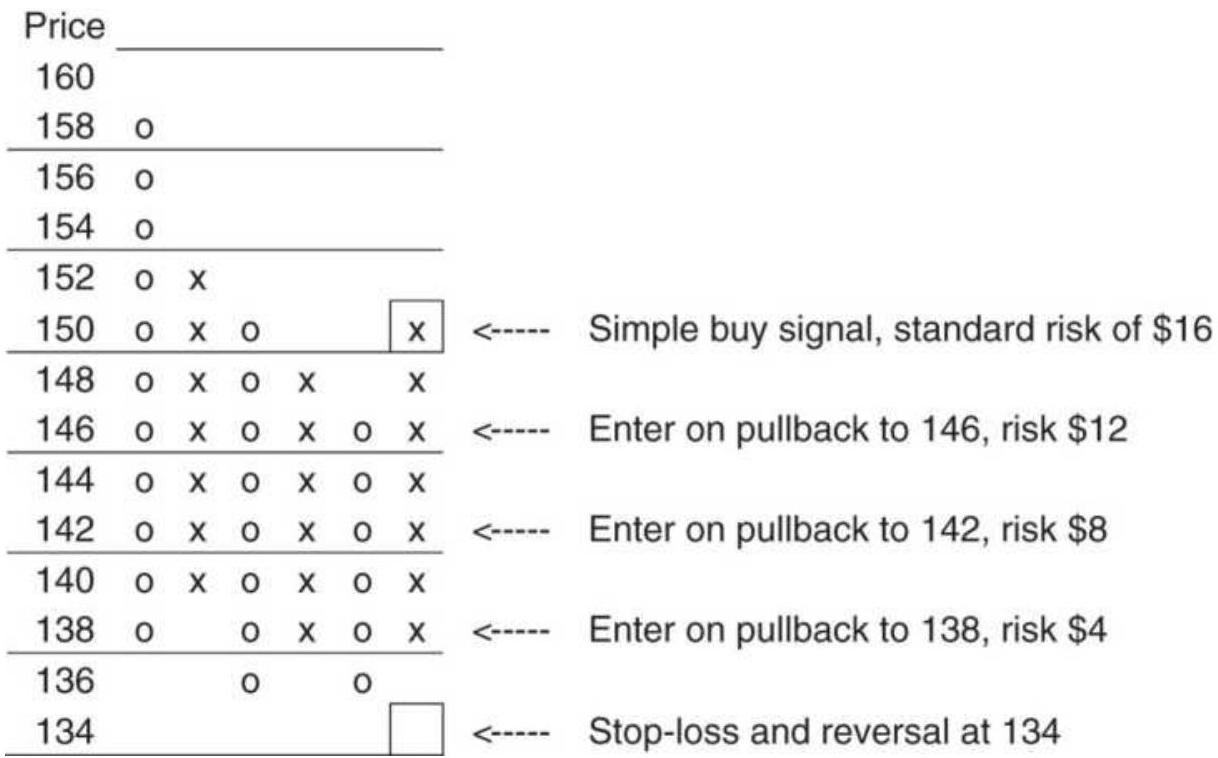

FIGURE 5.13 Entering IBM on a pullback with limited risk.

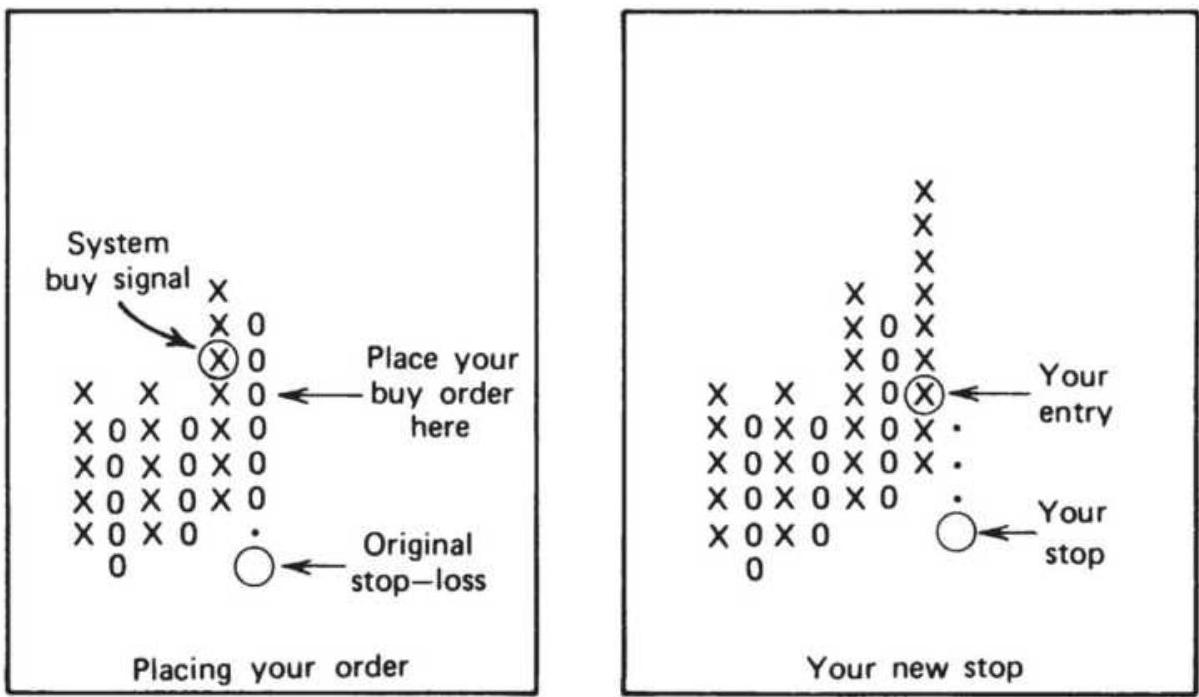

FIGURE 5.14 Entering on a confirmation of a new trend after a pullback.

FIGURE 5.15 Three ways to compound positions.

FIGURE 5.16 Placement of point-and-figure stops.

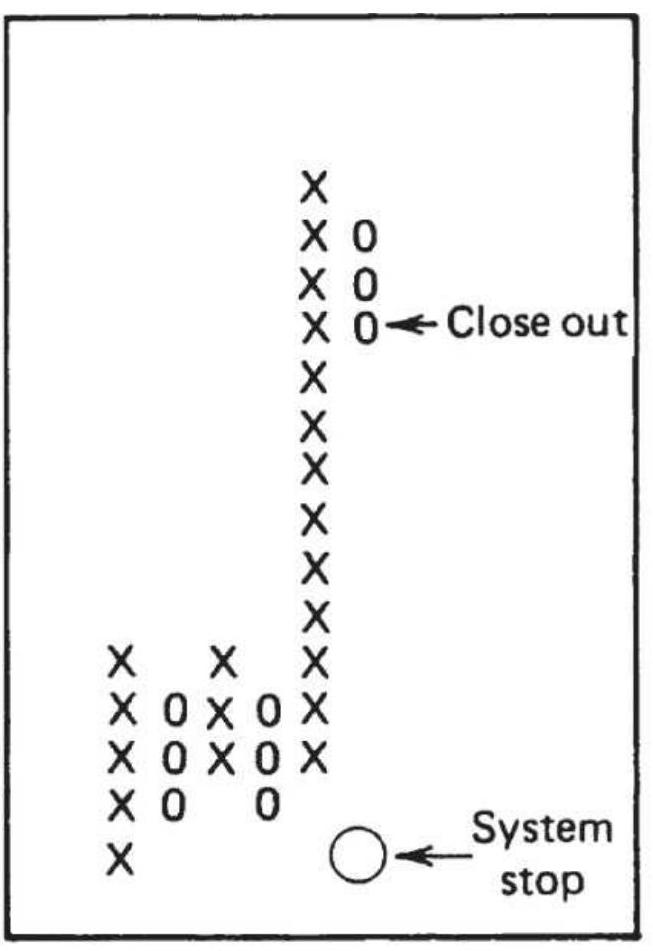

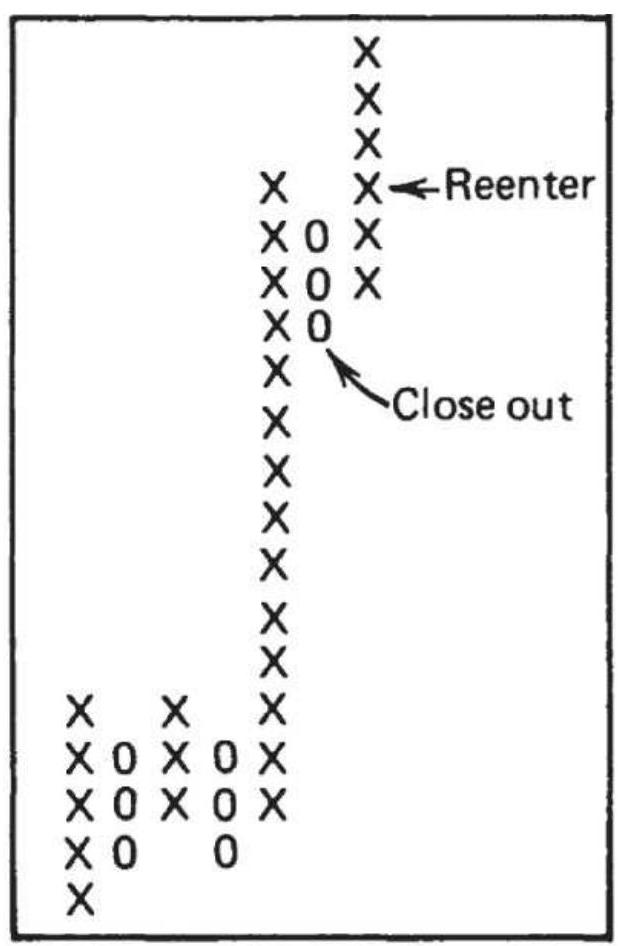

FIGURE 5.17 Cashing in on profits.

FIGURE 5.18 Alternative methods of plotting

point-and-figure reversals. (a) ...

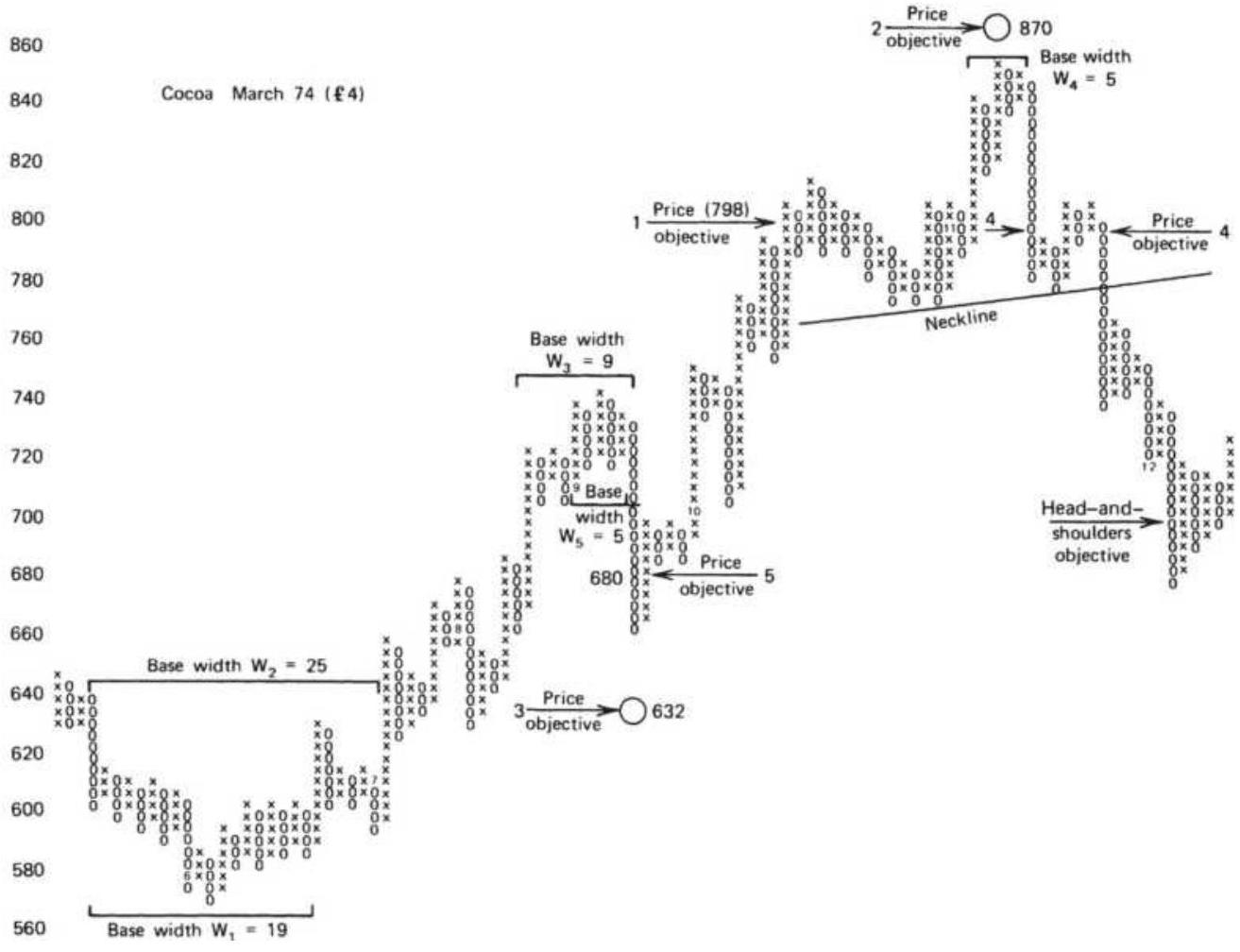

FIGURE 5.19 Horizontal count price objectives.

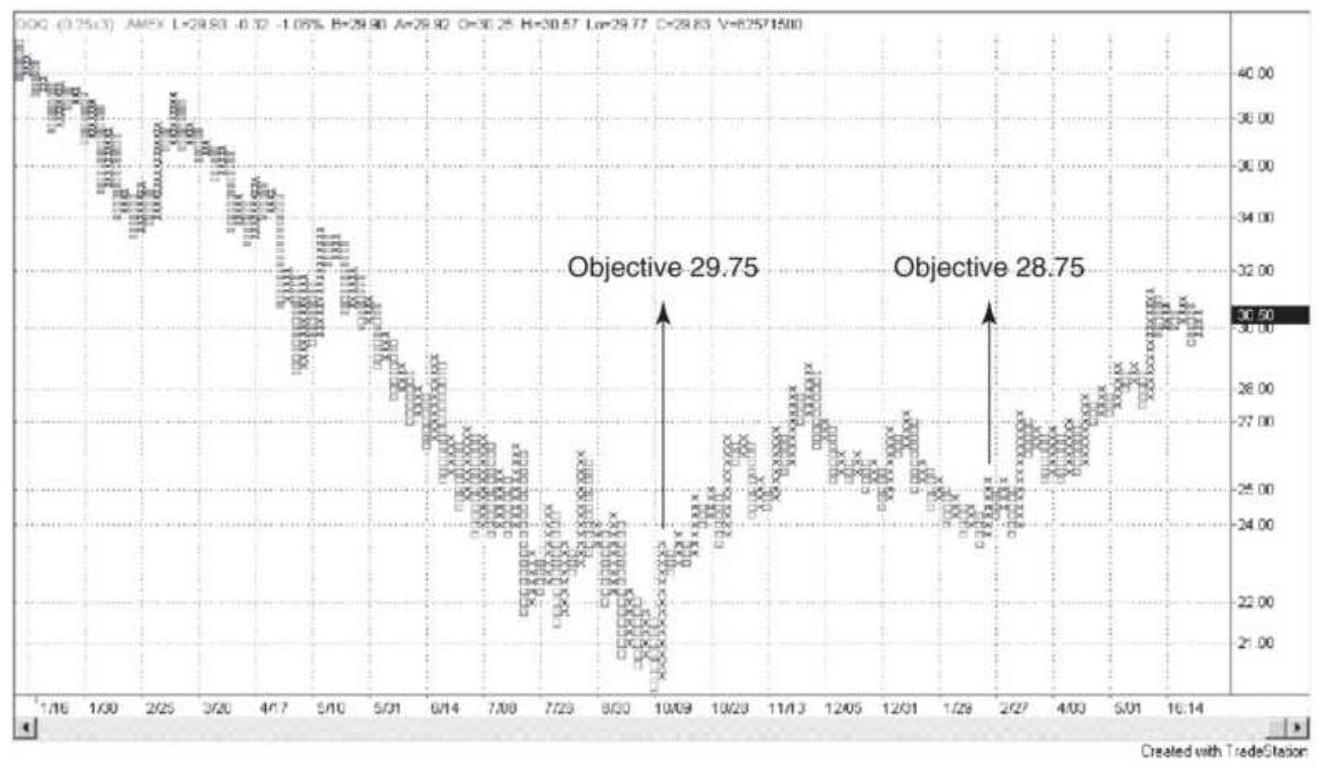

FIGURE 5.20 Point-and-figure vertical count for QQQ. The major low in Octobe...

FIGURE 5.21 Renko Bricks pattern.

FIGURE 5.22 N -Day breakout applied to

Merck, using a breakout period of 5 da...

FIGURE 5.23 Apple breakout tests.

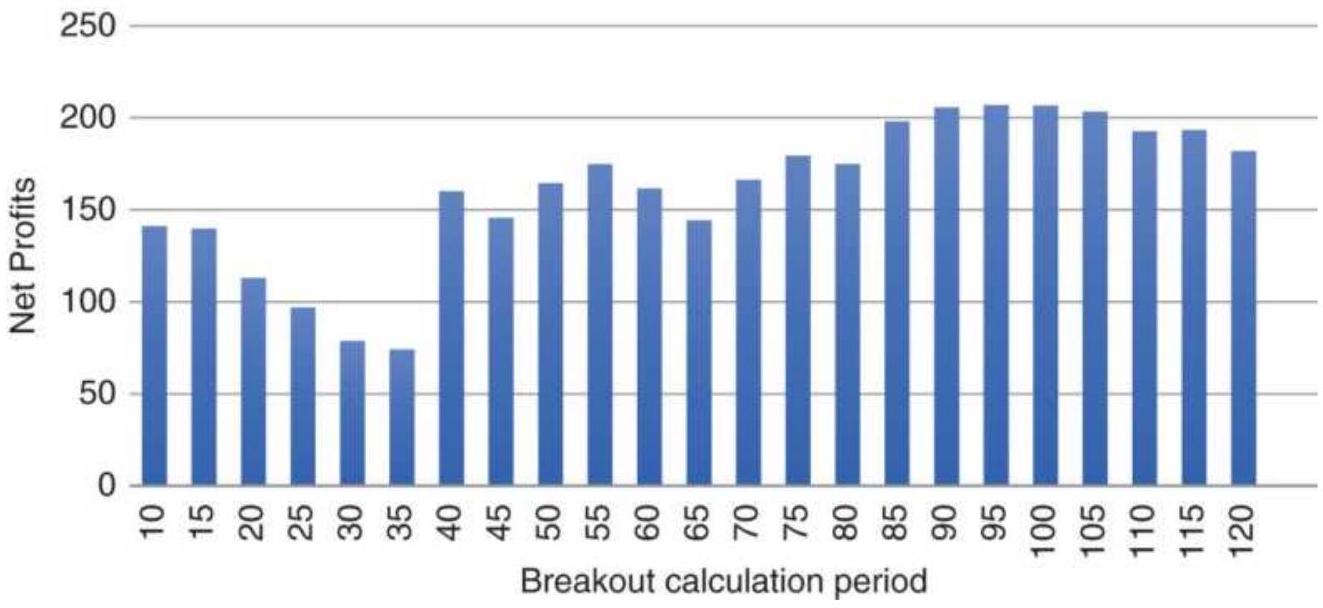

FIGURE 5.24 emini S\&P breakout tests.

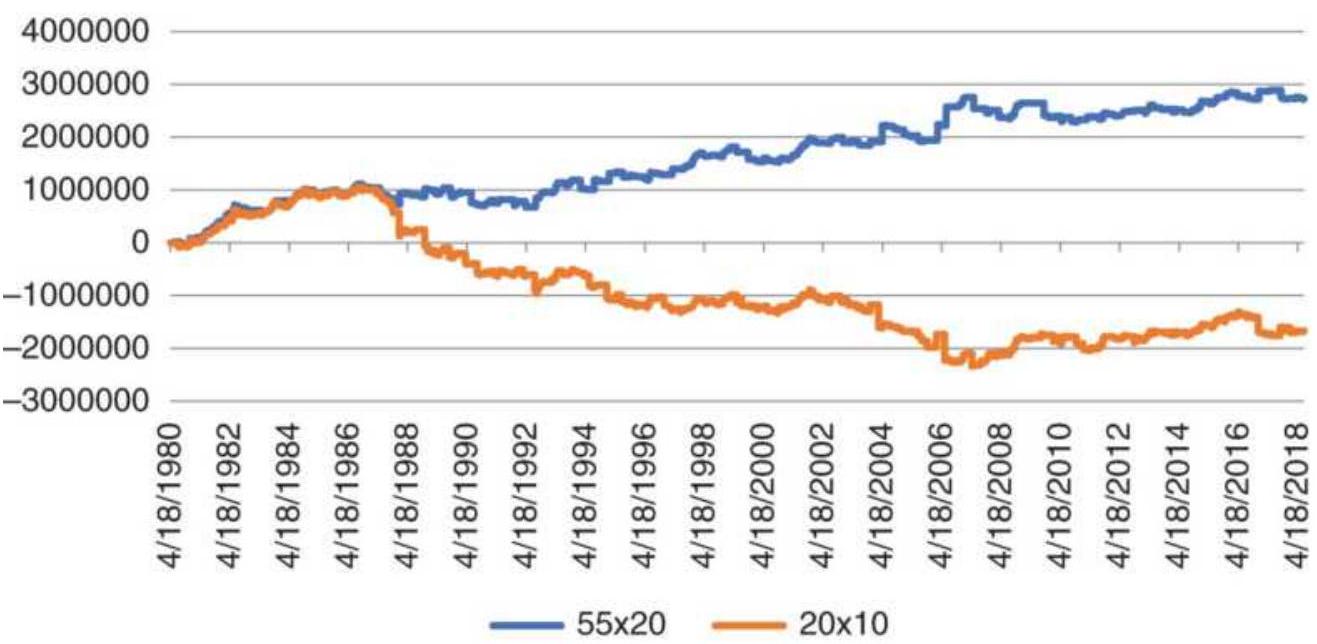

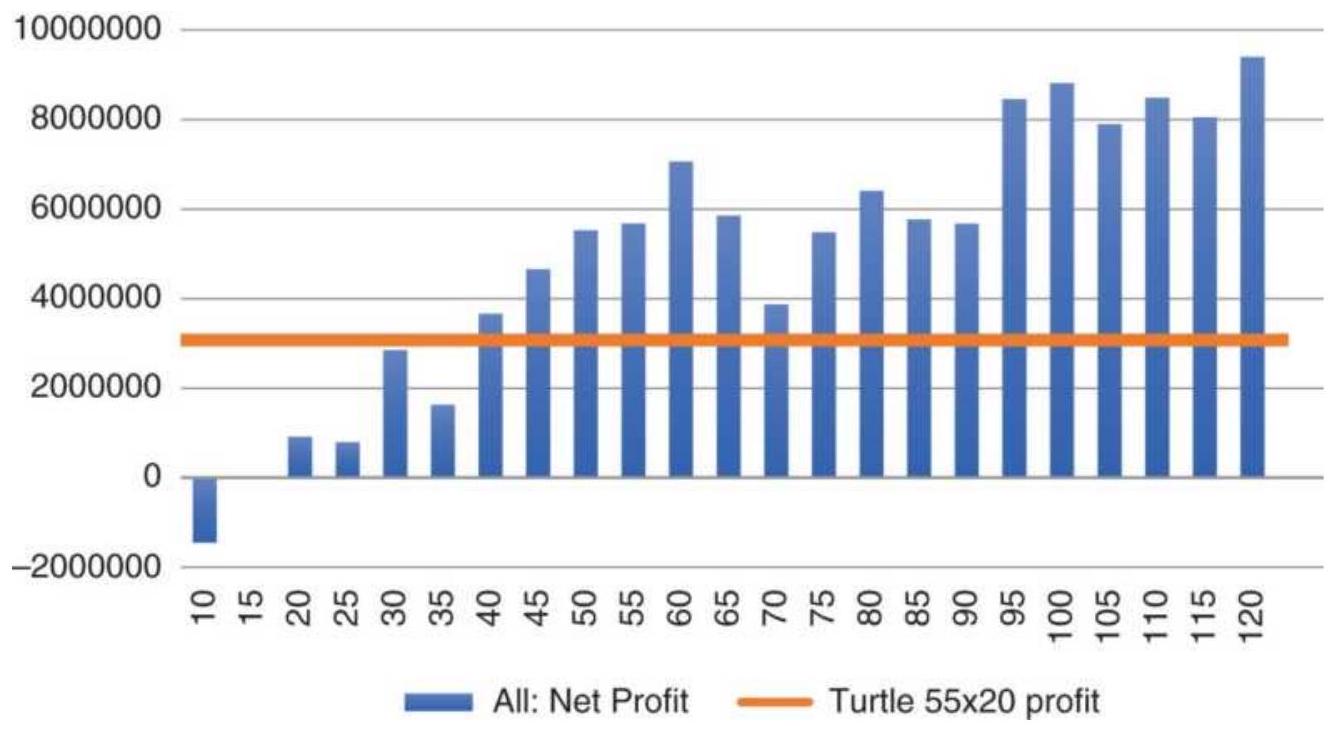

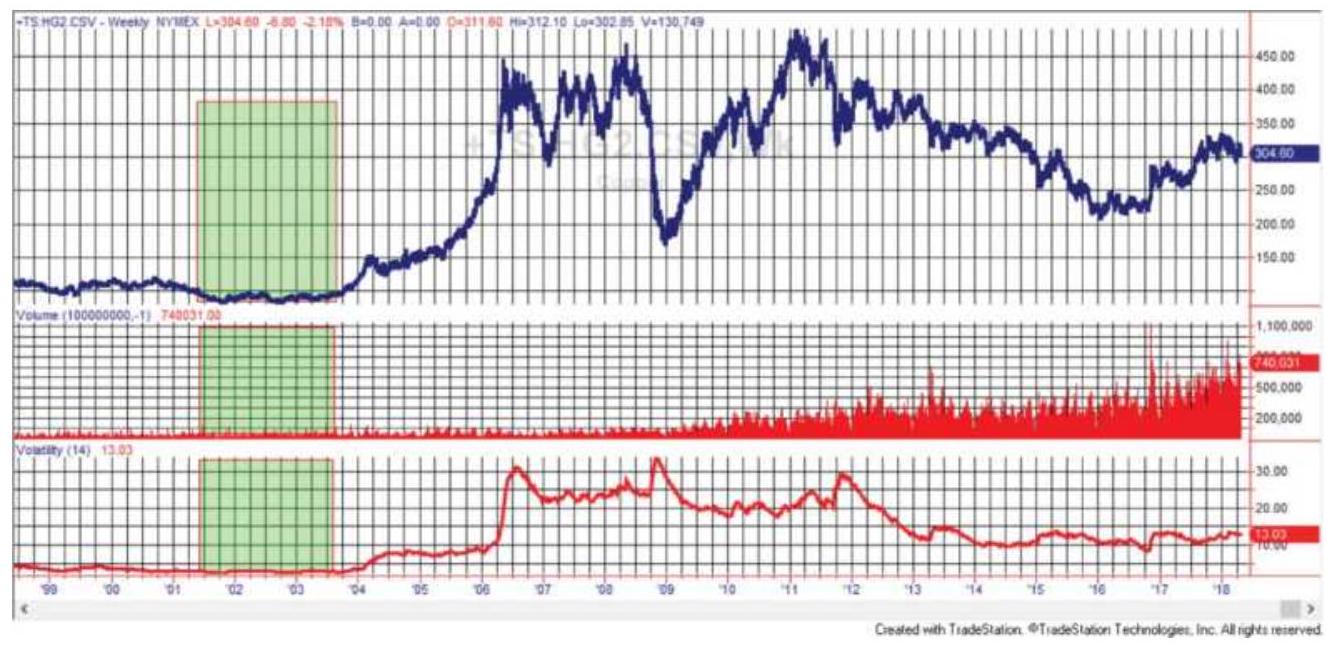

FIGURE 5.25 Copper profits for the slower and faster trends in our version o...

FIGURE 5.26 Relative performance of N -day

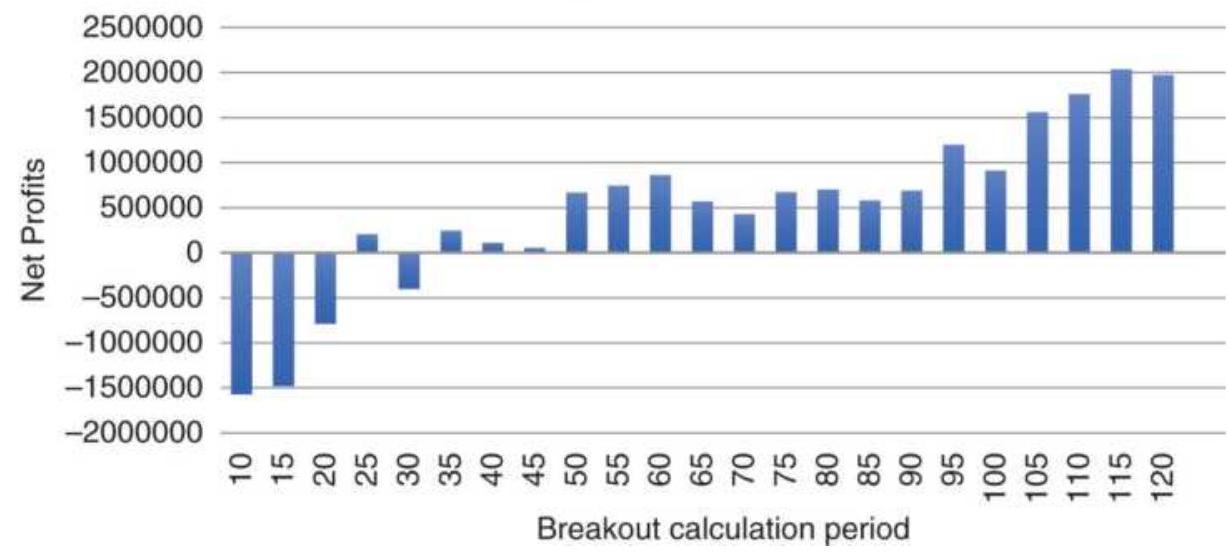

breakout, copper futures, 1980-201...

Chapter 6

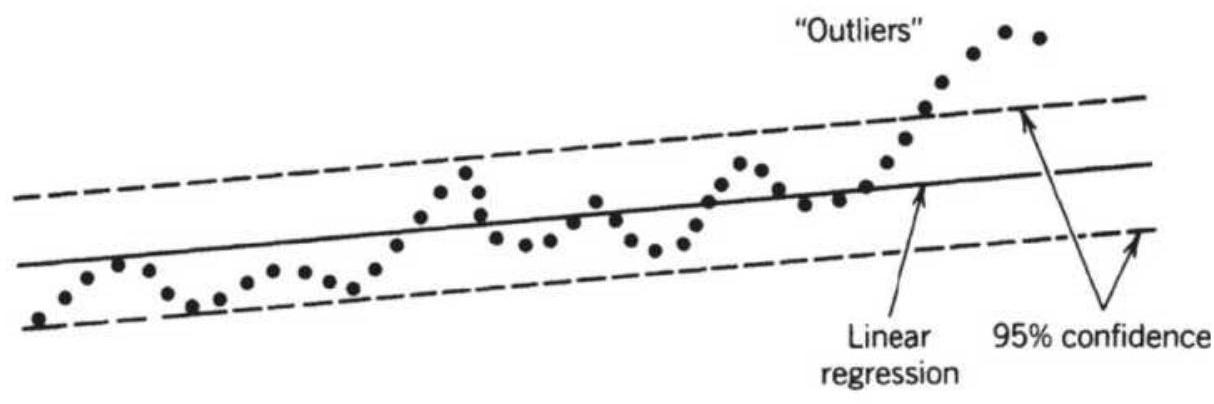

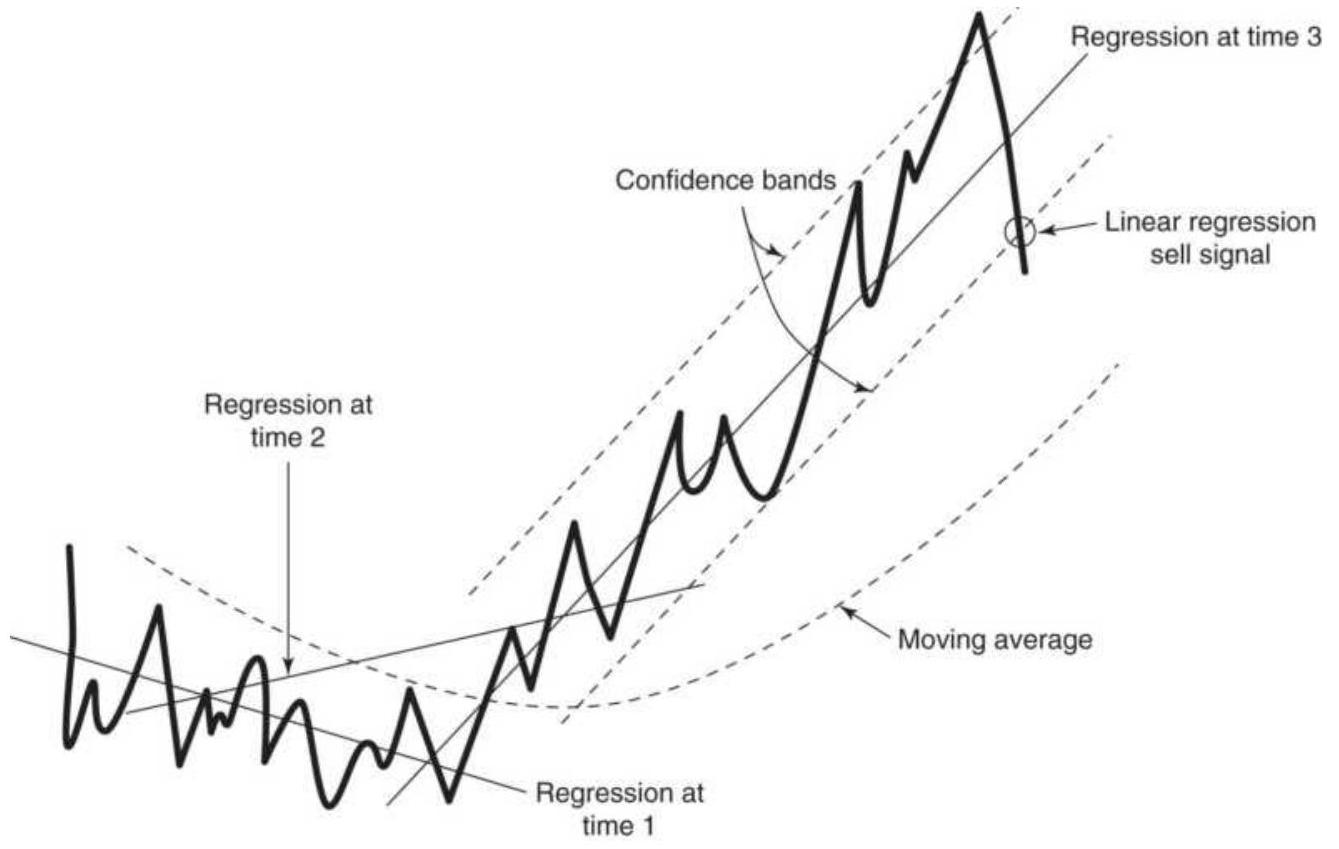

FIGURE 6.1 A basic regression analysis results

in a straight line through th...

\section*{FIGURE 6.2 Error deviation for method of least} squares.

FIGURE 6.3 Scatter diagram of corn, soybean pairs with linear regression sol...

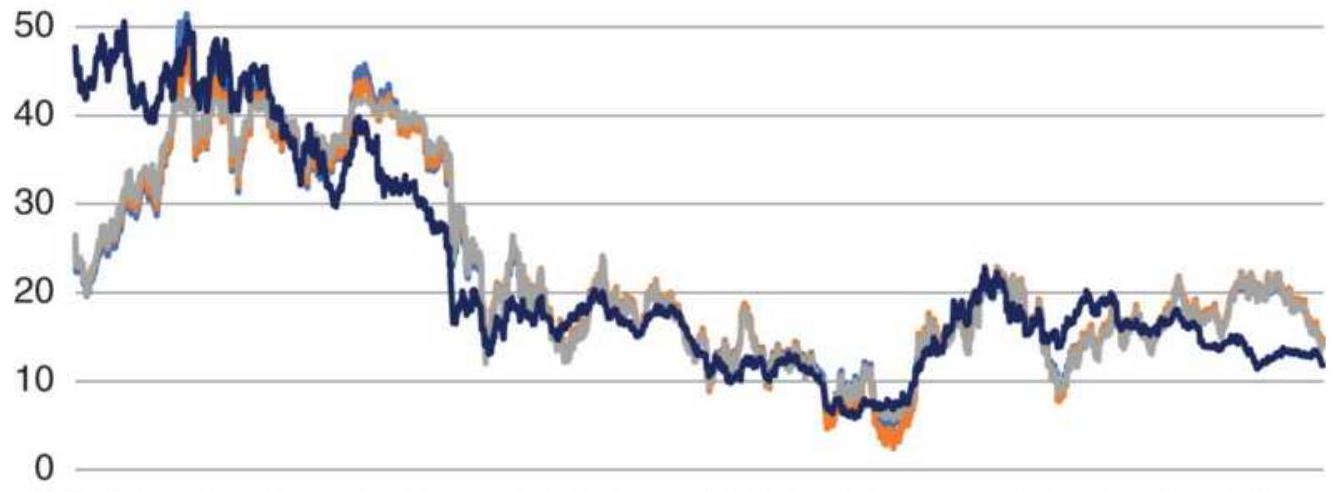

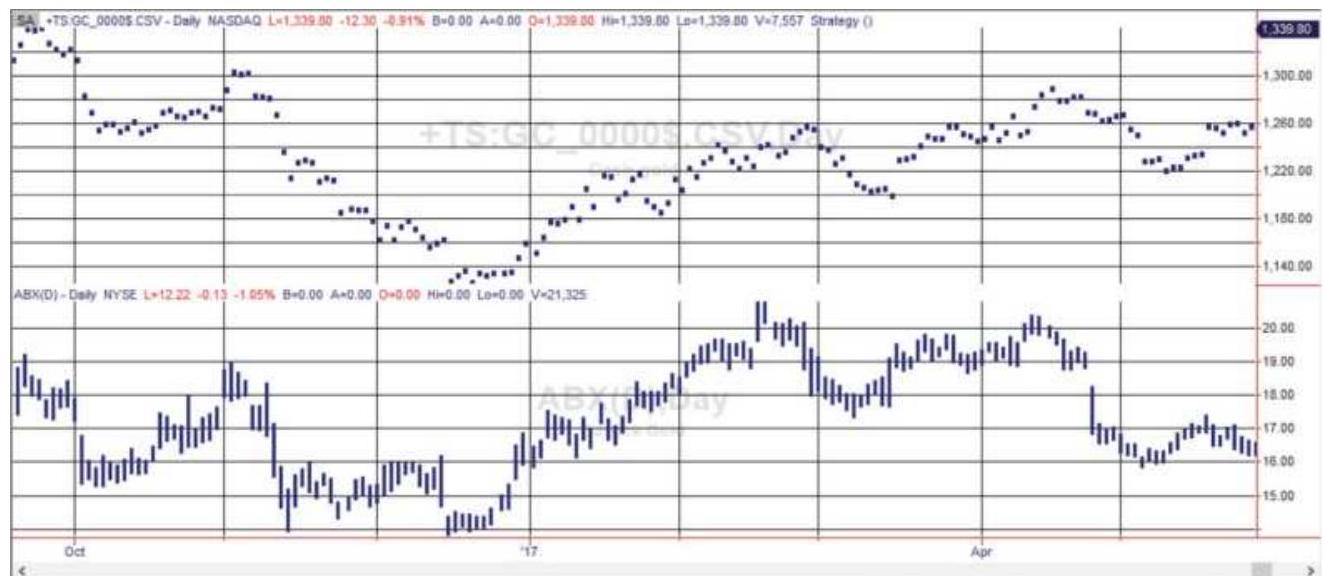

FIGURE 6.4 Prices of ABX and gold show that gold remained high while ABX dec...

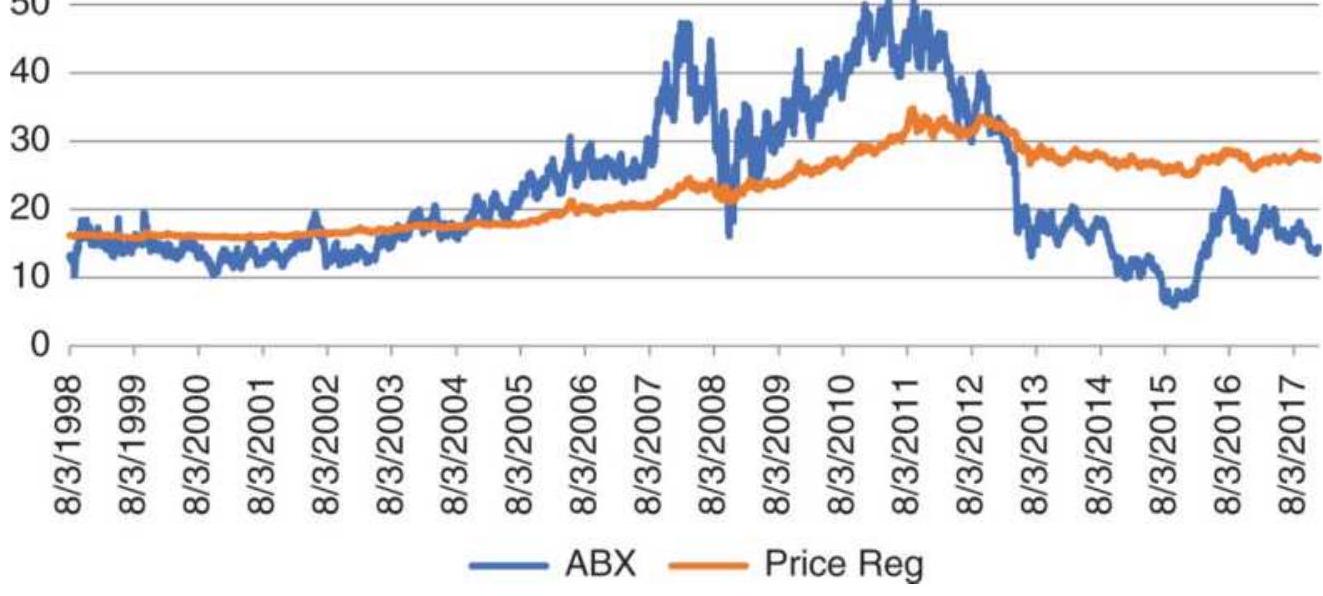

FIGURE 6.5 The best fit for ABX tracks the upward and downward move, but is ...

FIGURE 6.6 ABX-Gold regression using only the last 20-days of prices.

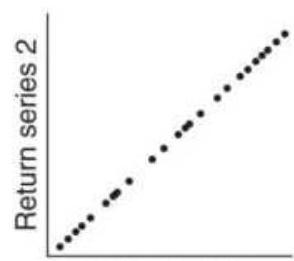

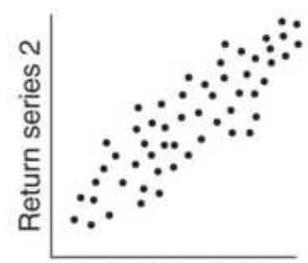

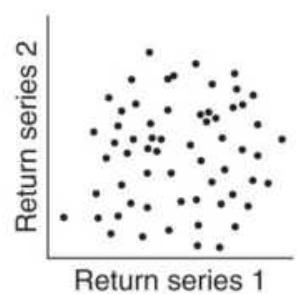

FIGURE 6.7 Degrees of correlation. (a) Perfect positive linear correlation (

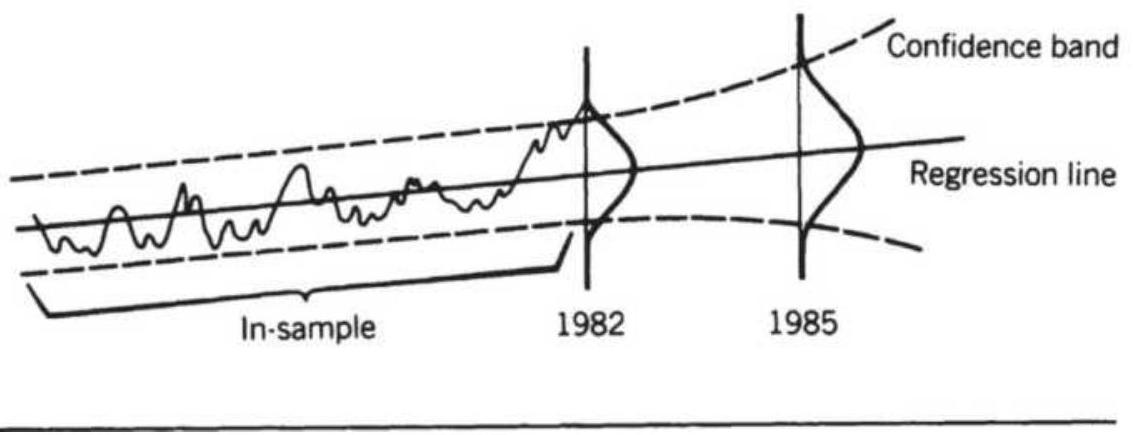

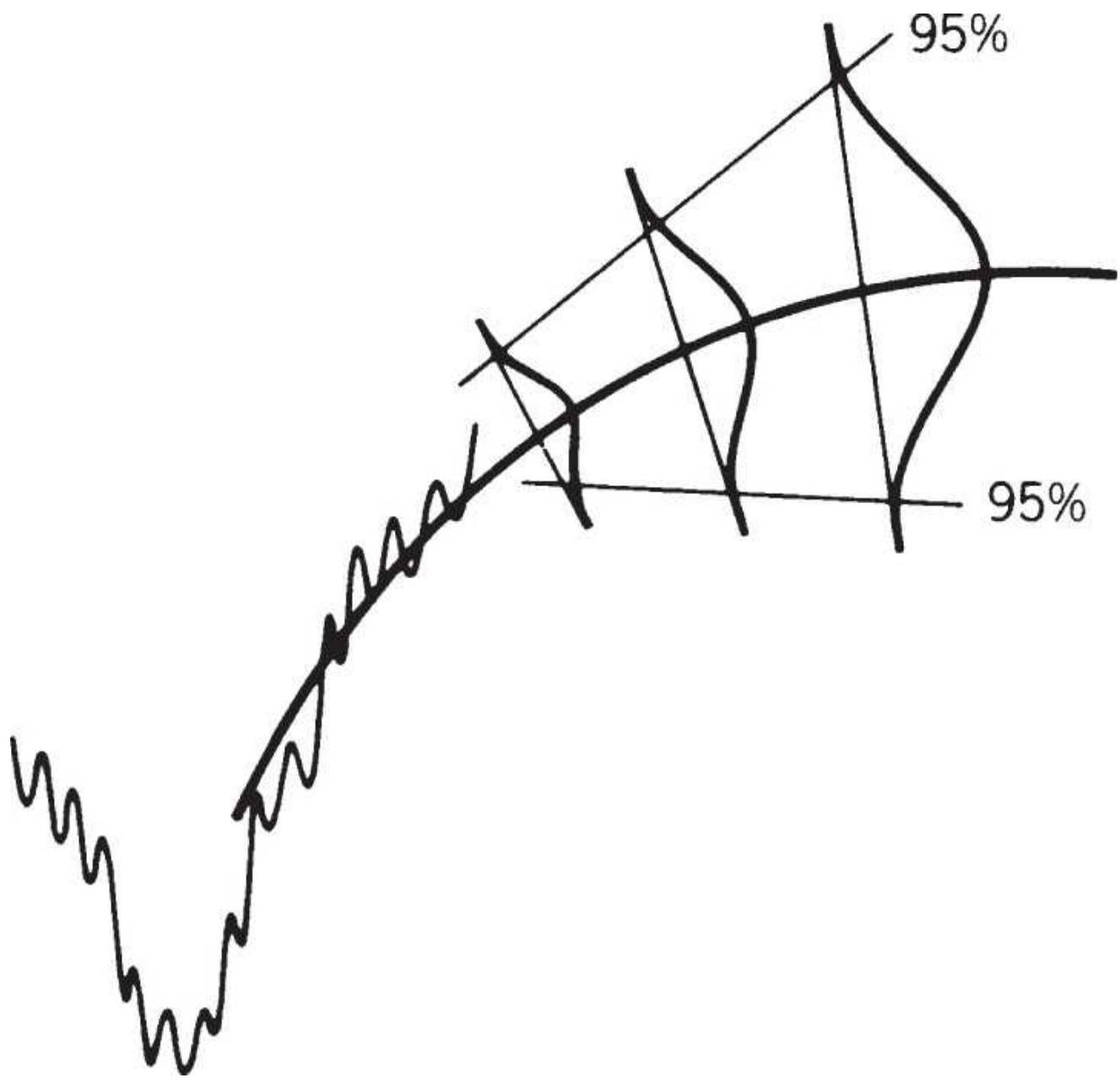

FIGURE 6.8 Confidence bands. (a) A 95\% confidence band. (b) Out-of-sample fo...

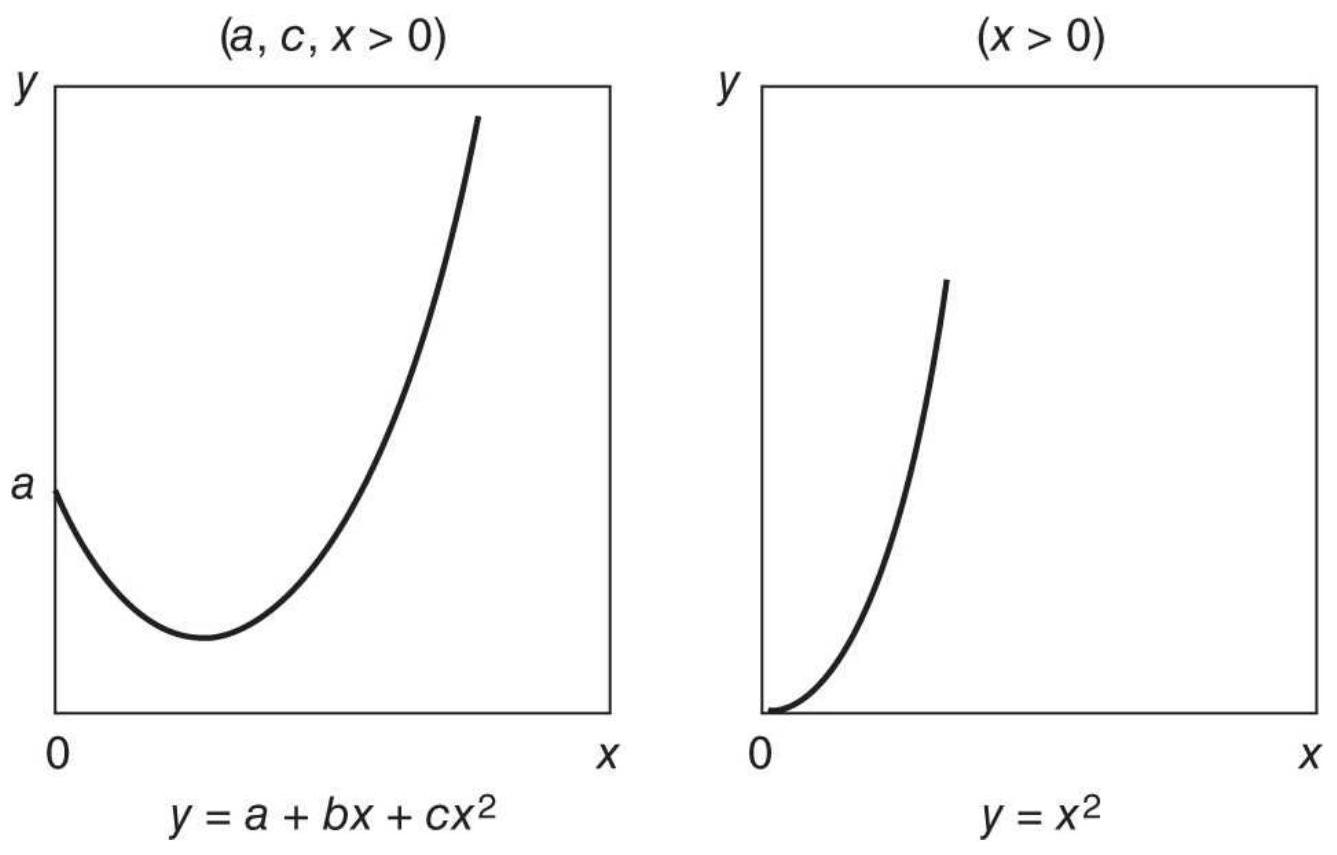

FIGURE 6.9 Curvilinear (parabolas).

FIGURE 6.10 Comparison of ABS-Gold linear, 2nd-, and 3rd-order regressions....

FIGURE 6.11 Logarithmic and exponential curves.

FIGURE 6.12 Comparison of four regression methods on weekly corn data.

FIGURE 6.13 Solver set-up page.

FIGURE 6.14 Wheat cash prices and Solver solution.

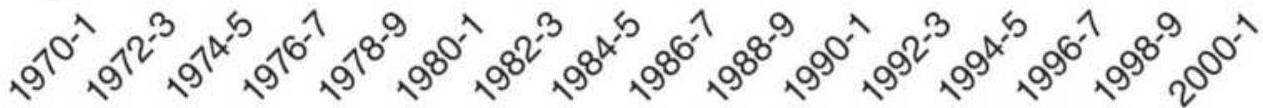

FIGURE 6.15 Correlogram of monthly corn

prices, \(1978-2017\), for 24 lags.

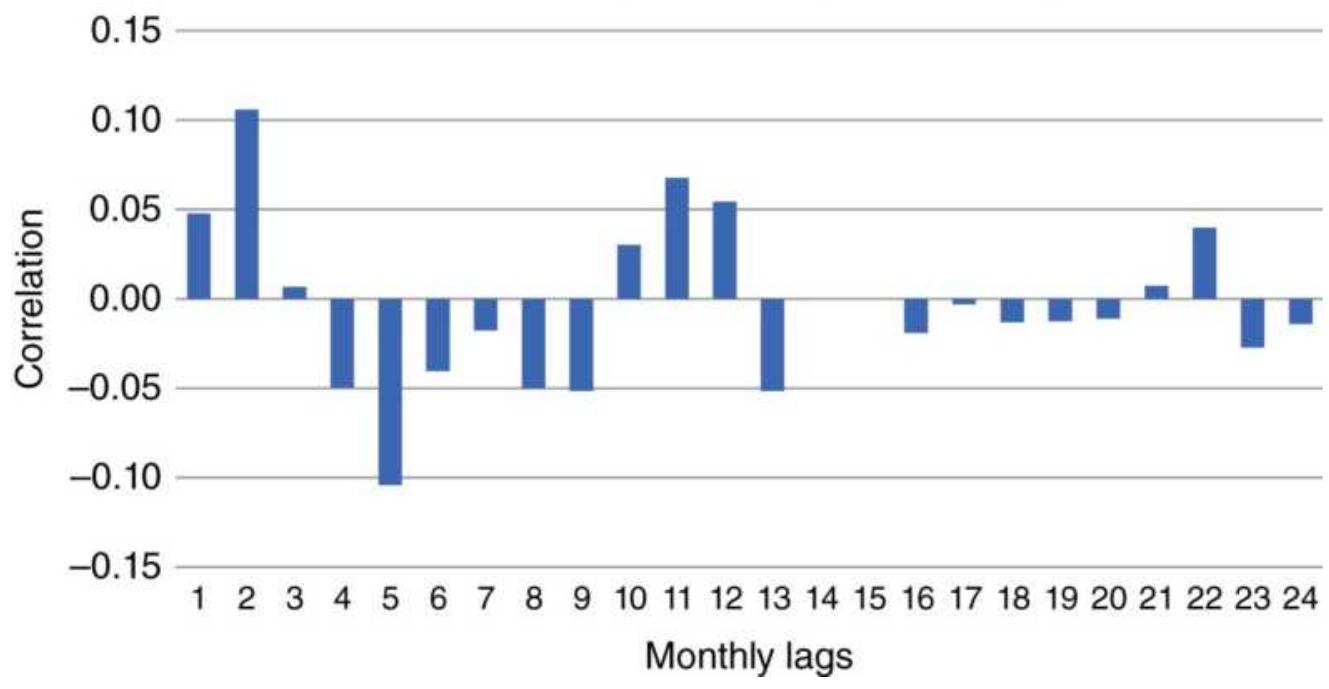

FIGURE 6.16 Correlogram of weekly returns of corn, wheat, and gold, 1978-201...

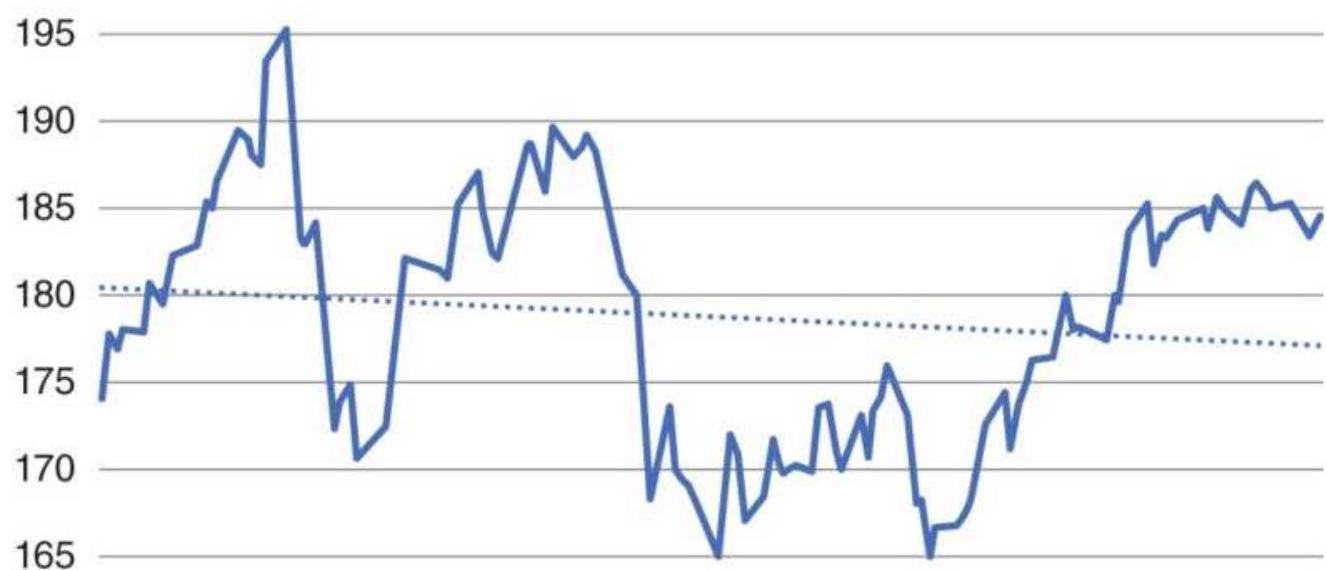

FIGURE 6.17 ARIMA forecast becomes less accurate as it is used farther ahead...

FIGURE 6.18 Linear regression model. Penetration of the confidence band turn...

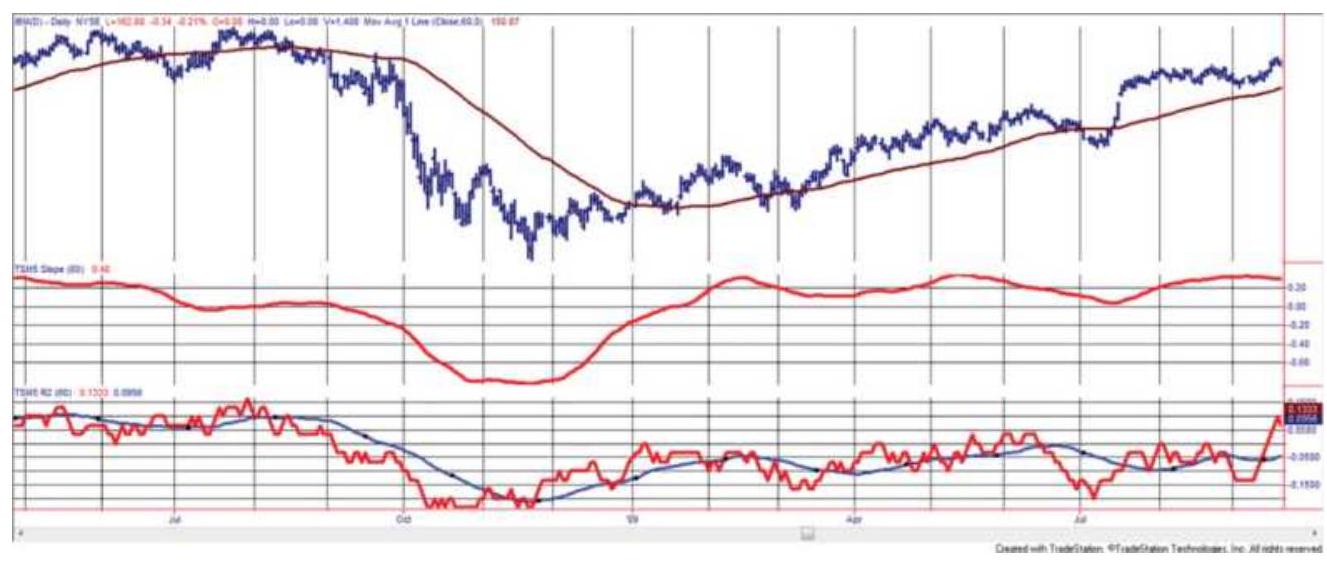

FIGURE 6.19 IBM trend using the slope and \(r\). (Top) IBM prices with 80-day mo...

FIGURE 6.20 AMGN shows a declining slope even though the price is higher.

Chapter 7

FIGURE 7.1 General Electric price from December 31, 2010, through February 1...

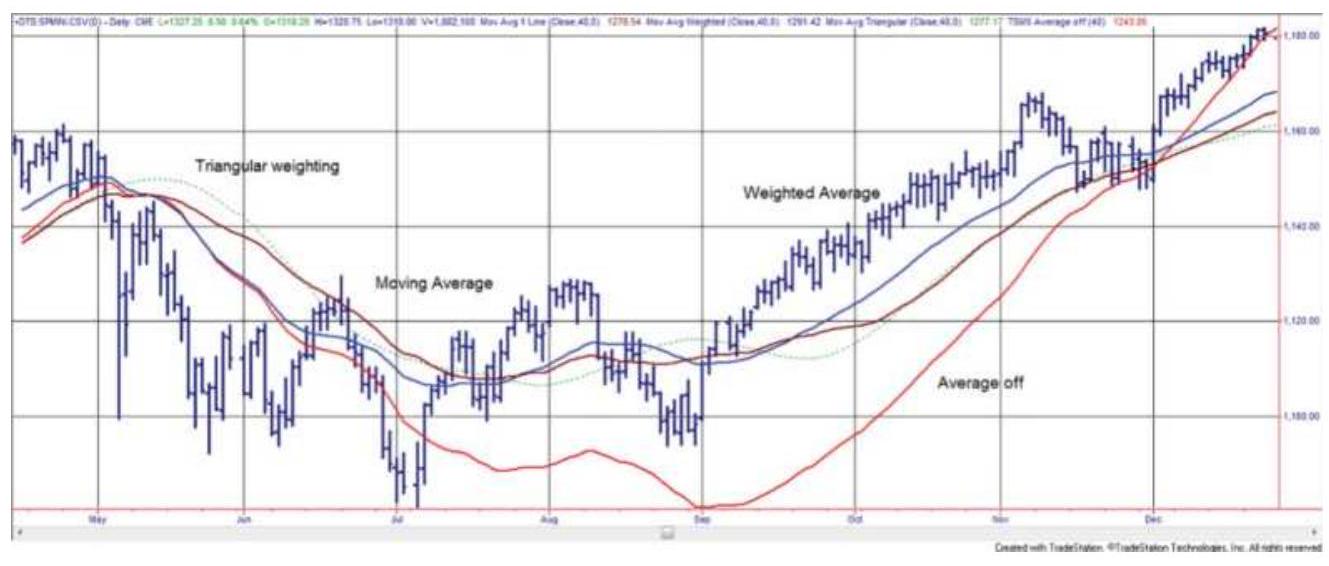

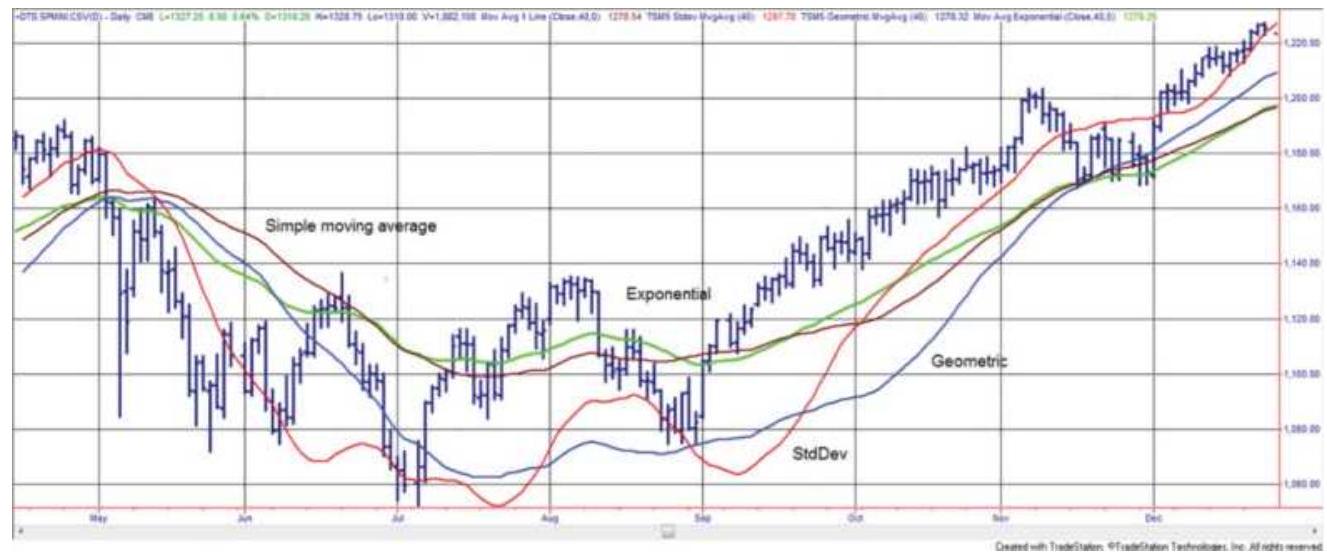

FIGURE 7.2 A comparison of moving averages.

The simple moving average, linea...

FIGURE 7.3 SP continuous futures, April through December 2010, with examples...

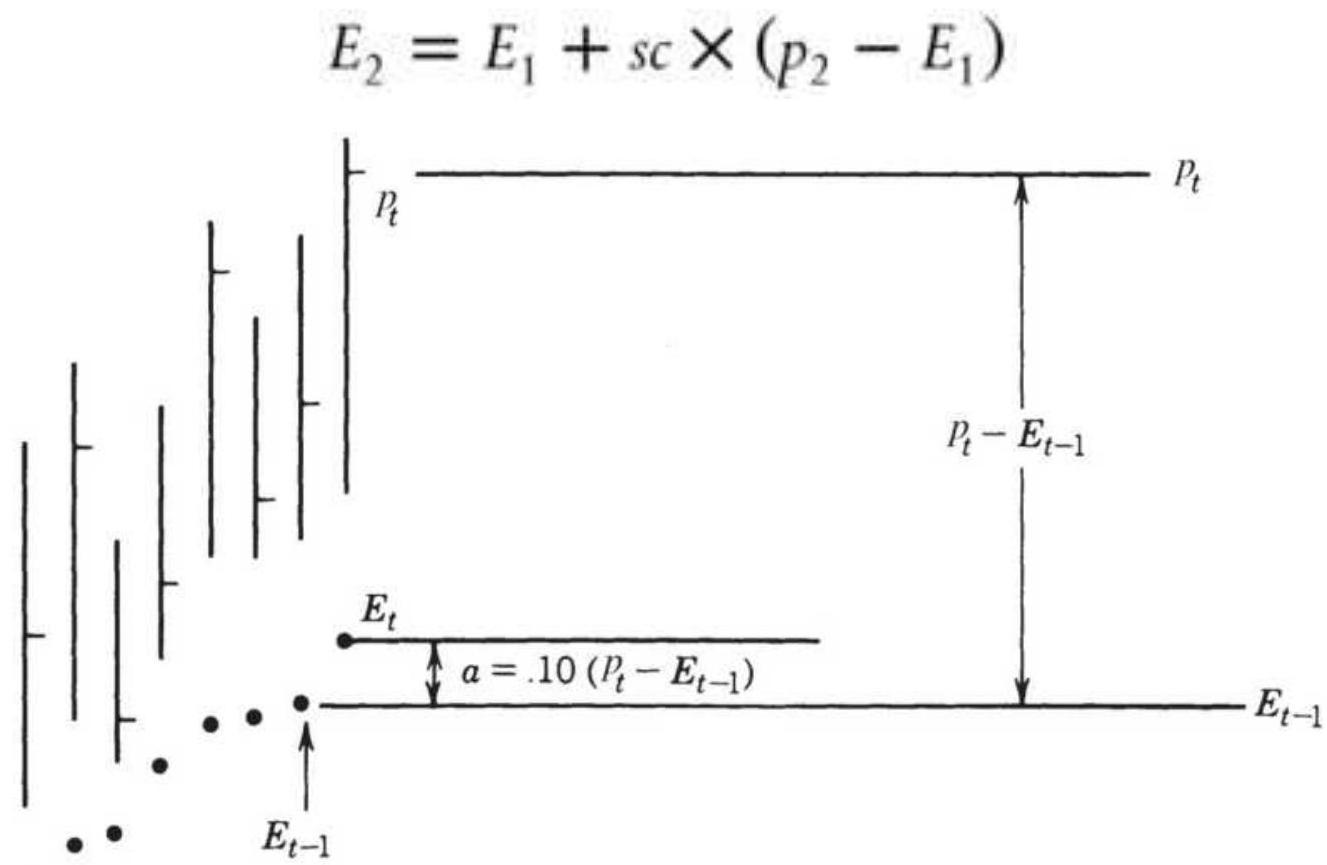

FIGURE 7.4 Exponential smoothing. The new exponential trendline value, \(E_{t}\), is...

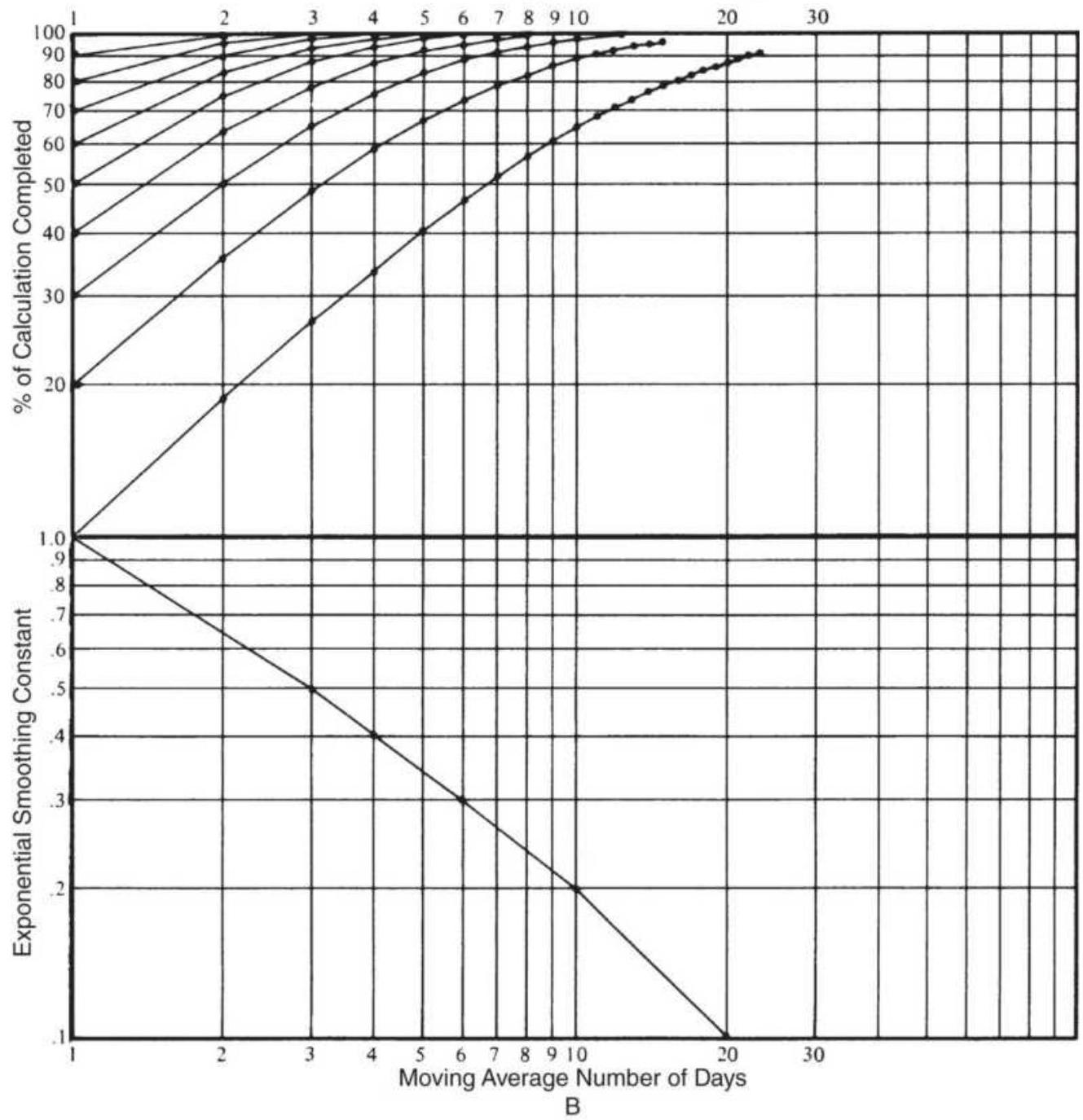

FIGURE 7.5 Graphic evaluation of exponential smoothing and moving average eq...

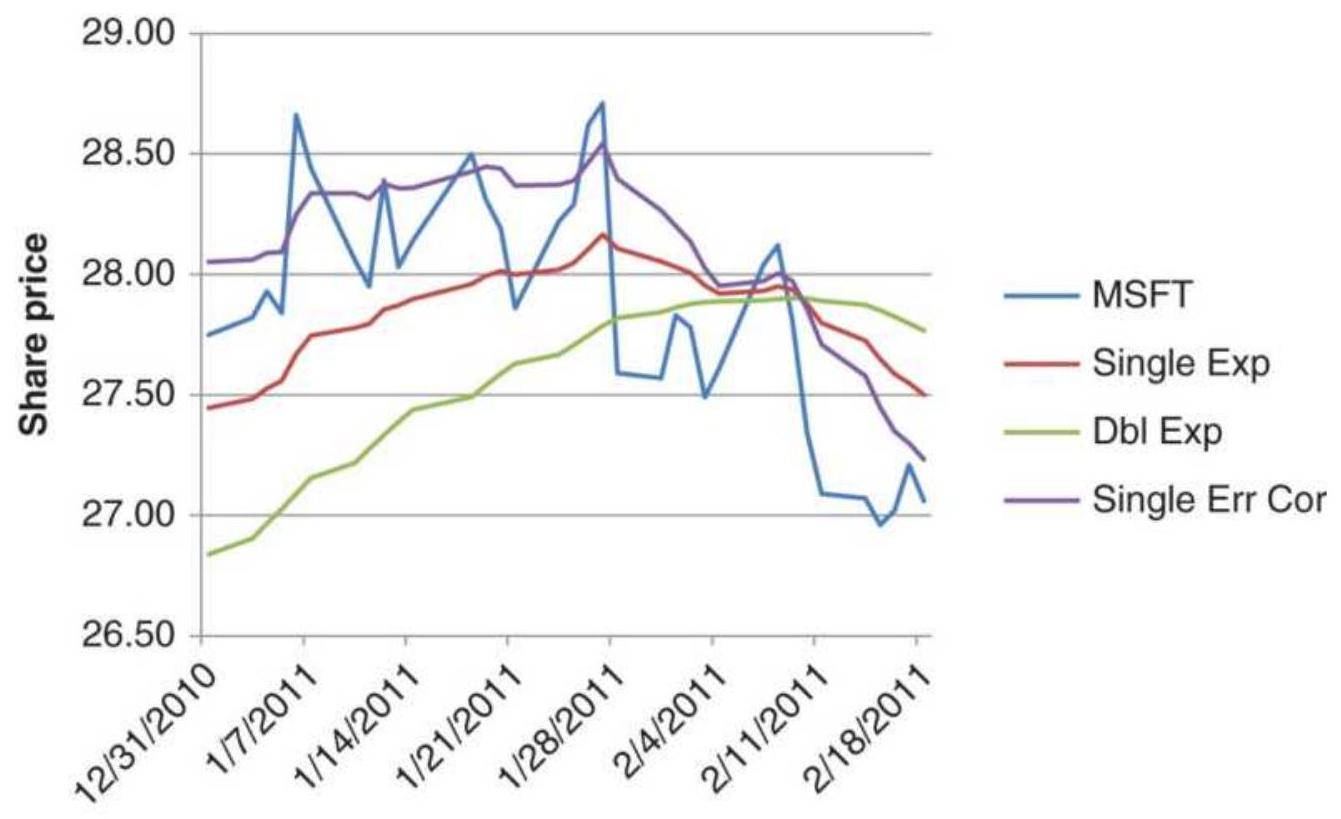

FIGURE 7.6 Comparison of exponential smoothing techniques applied to Microso...

FIGURE 7.7 Double-smoothing applied to Microsoft, June 2010 through February...

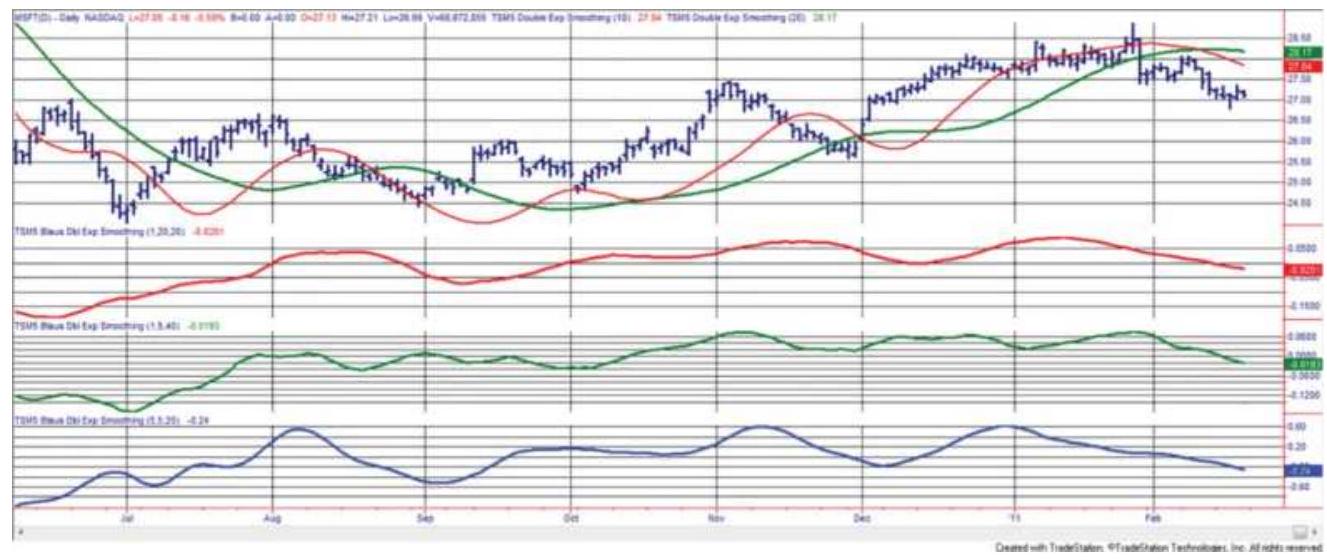

FIGURE 7.8 Comparison of a 9 -day exponential smoothing with a 9 -day exponent...

FIGURE 7.9 The Hull Moving Average (slower trend in the top panel), compared...

FIGURE 7.10 Plotting a moving average lag and lead for a short period of Mic...

\section*{Chapter 8}

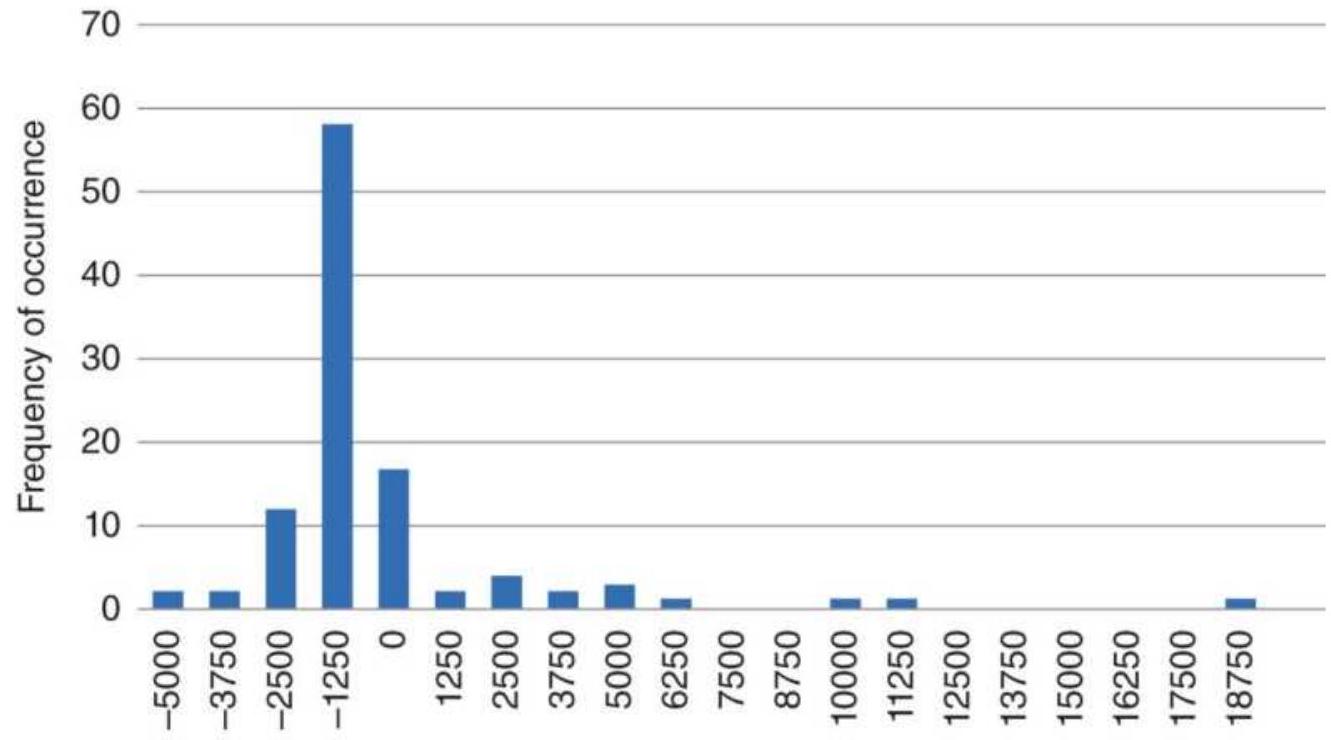

FIGURE 8.1 Distribution of runs. The shaded area shows the normal distributi...

FIGURE 8.2 Frequency distribution of returns for SP futures using a 40-day s...

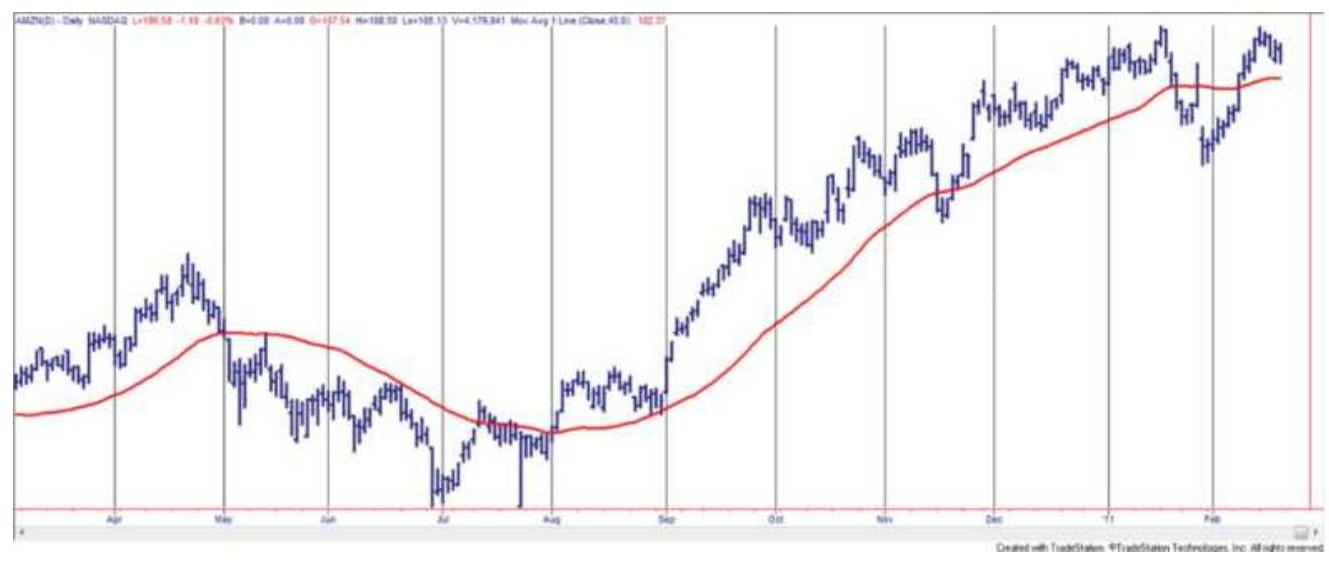

FIGURE 8.3 Amazon (AMZN) with a 40-day moving average.

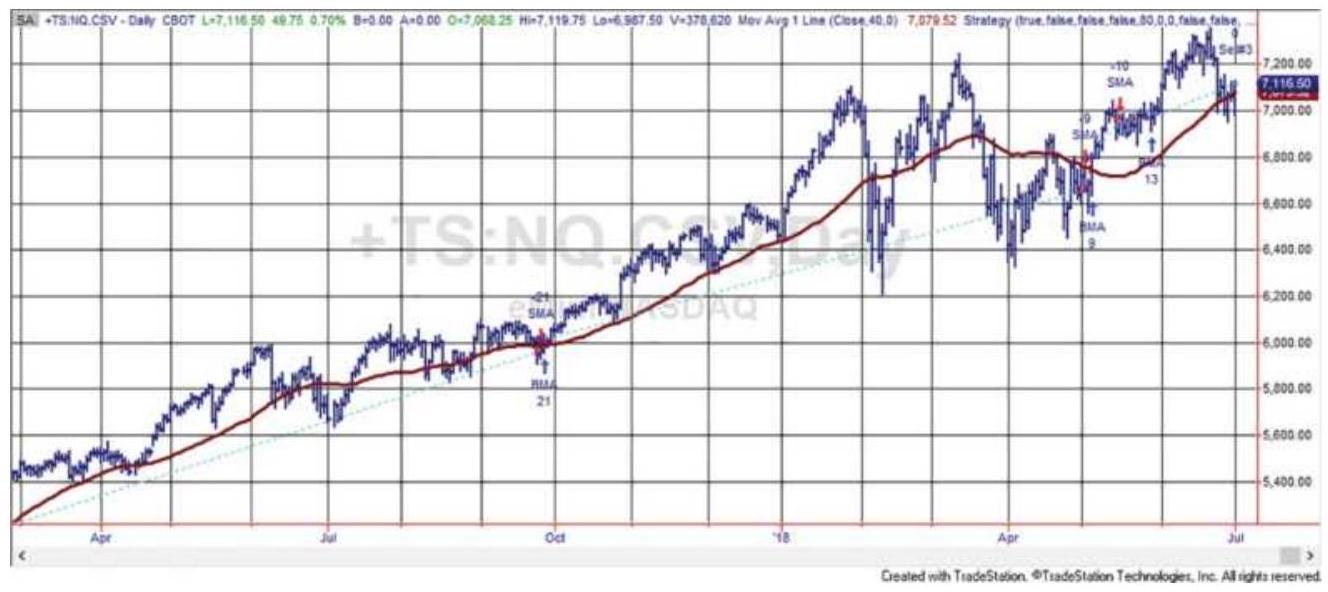

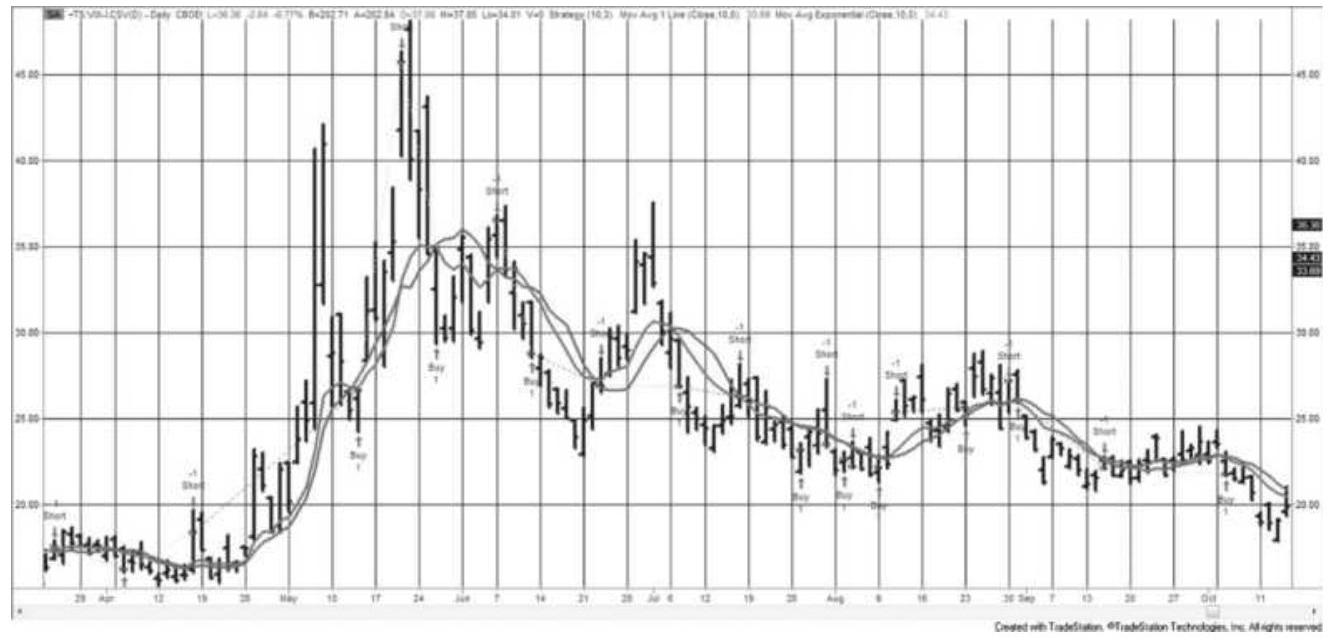

FIGURE 8.4 A trend system for NASDAQ 100 futures, using an 80 -day moving ave...

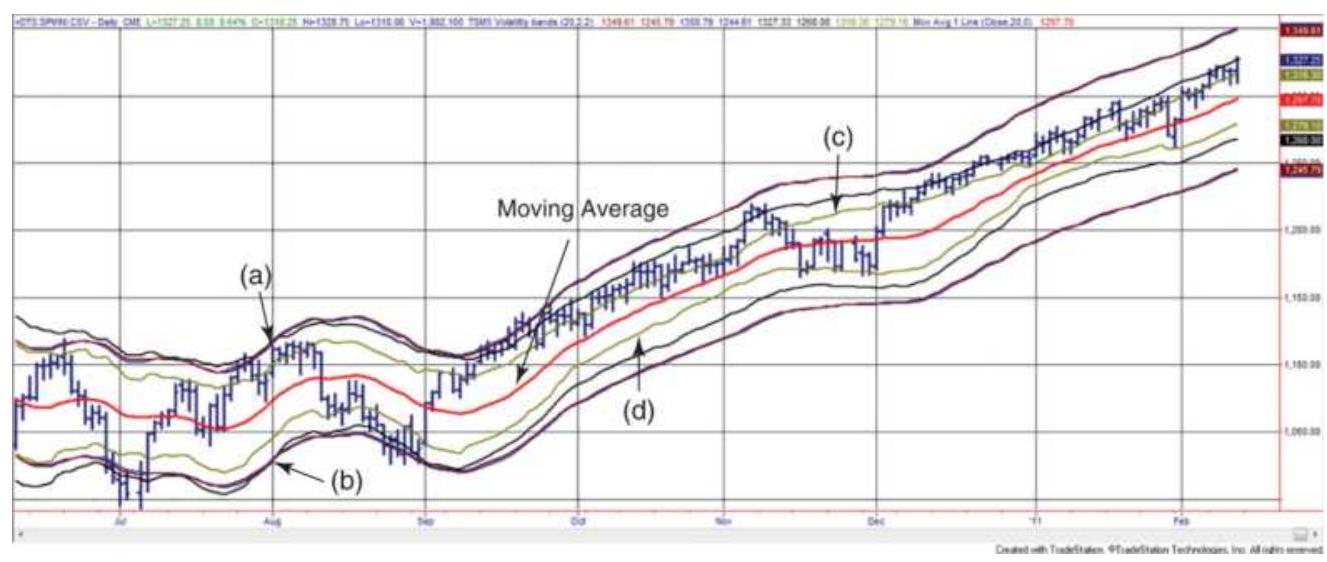

FIGURE 8.5 Four volatility bands around a 20day moving average, based on (a...

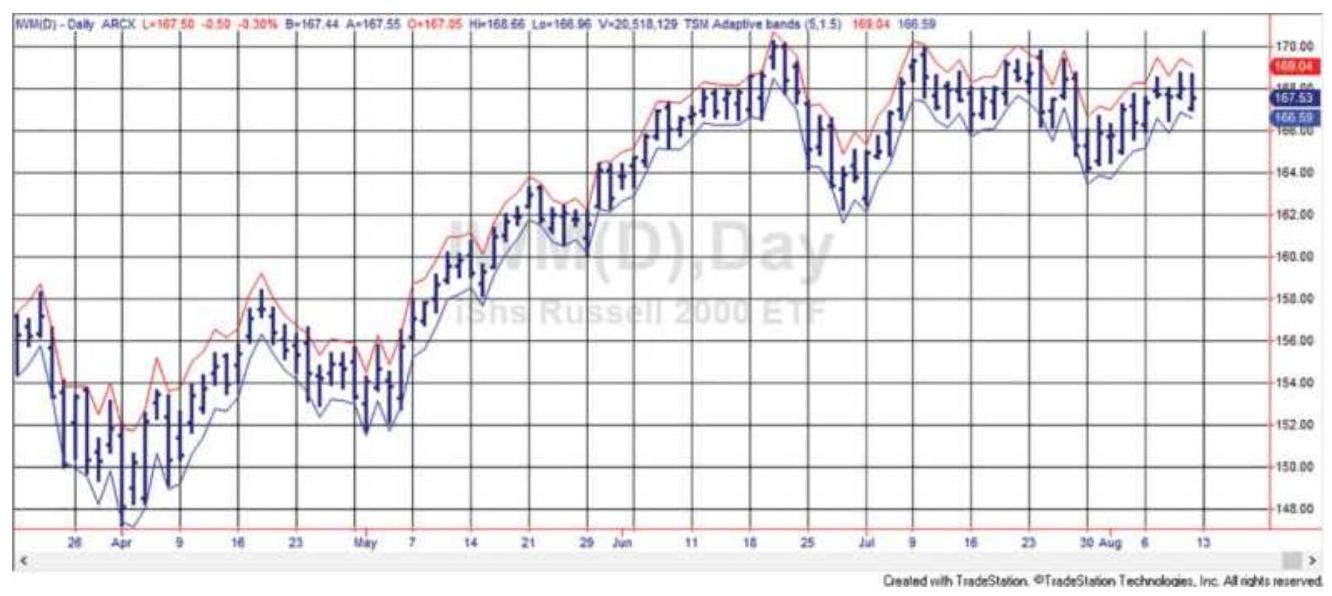

FIGURE 8.6 Adaptive bands constructed using double exponential smoothing sho...

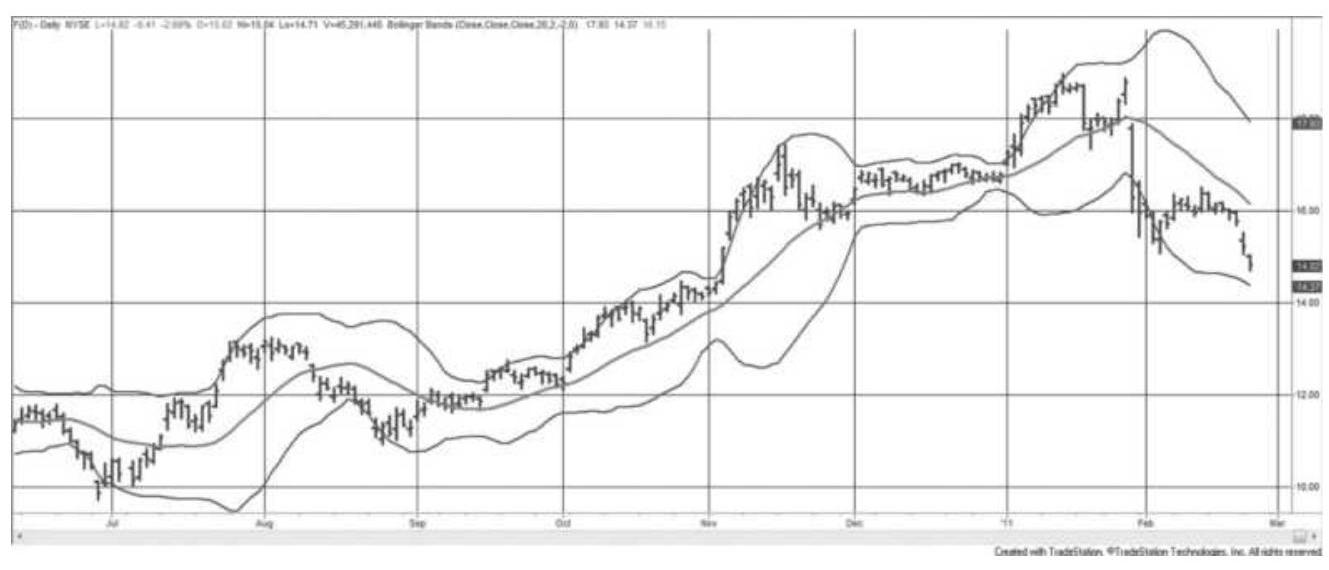

FIGURE 8.7 Bollinger bands applied to Ford.

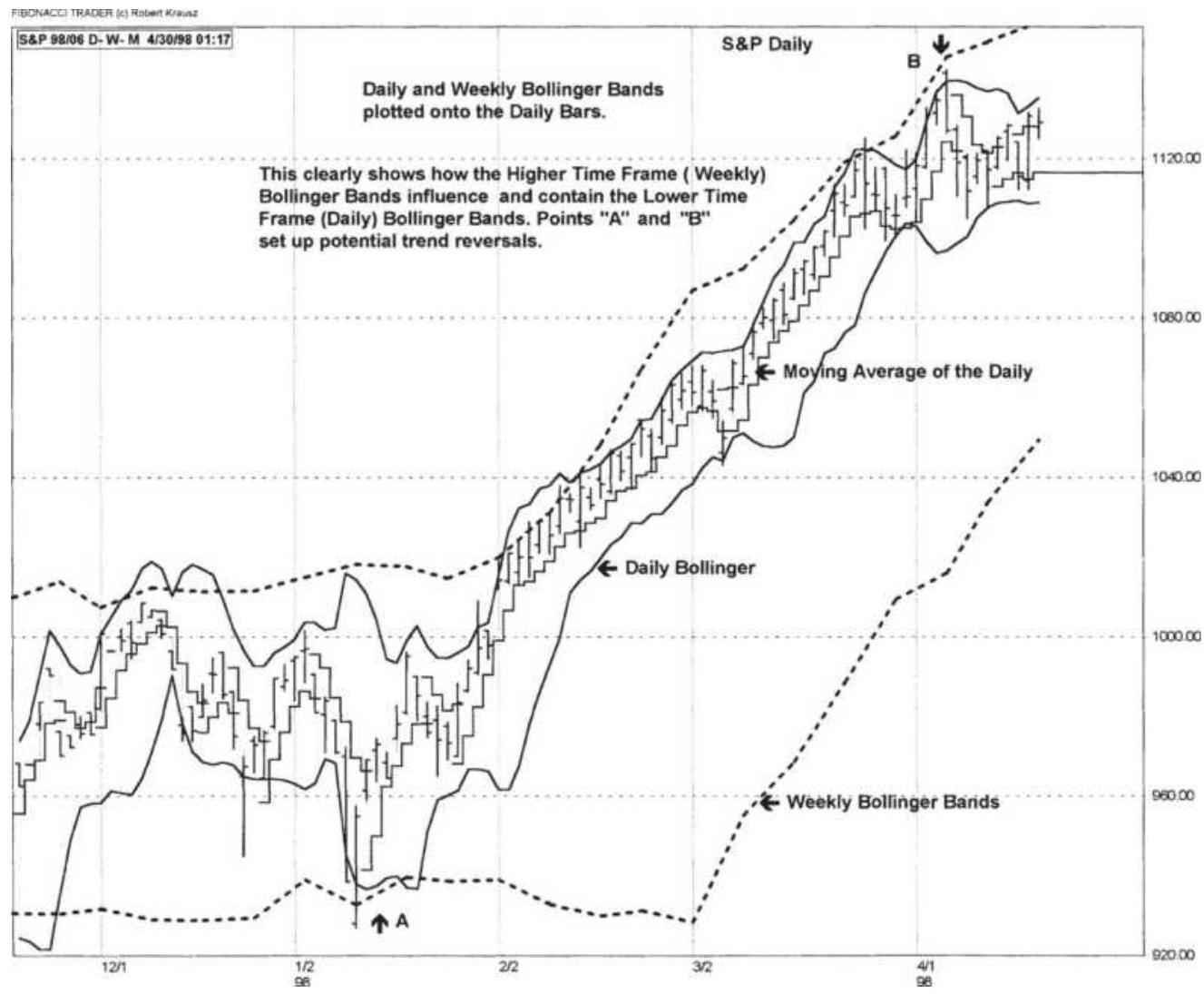

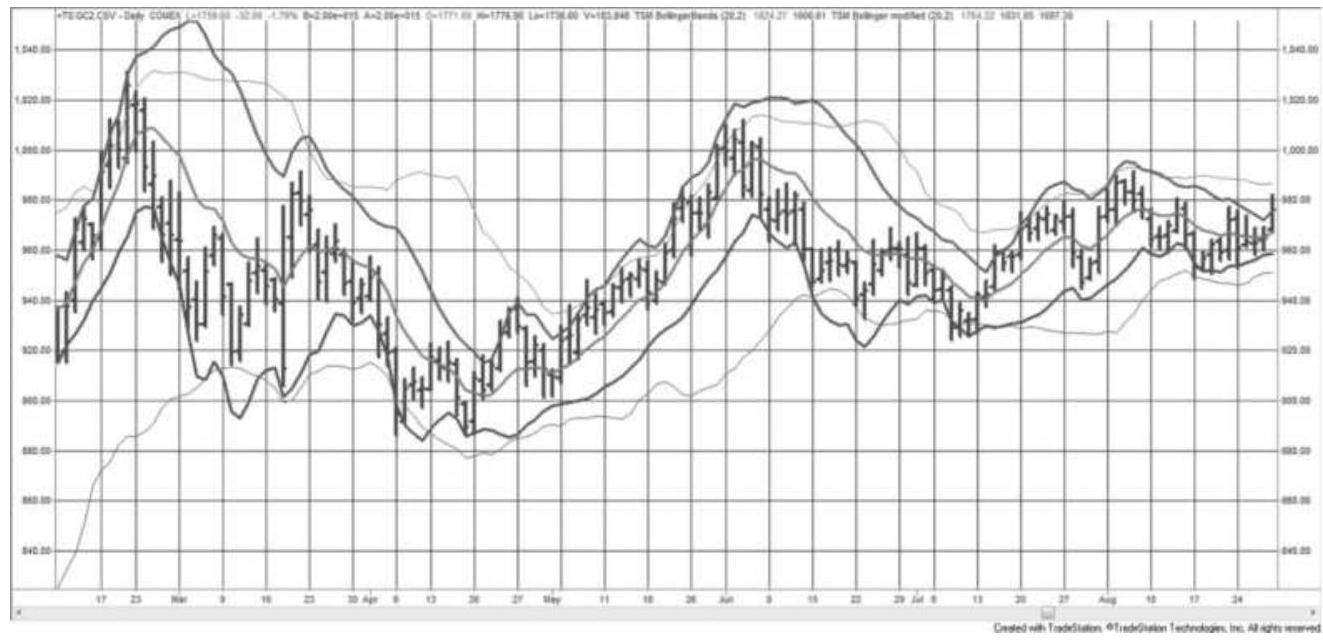

FIGURE 8.8 Combining daily and weekly Bollinger bands.

FIGURE 8.9 Modified Bollinger bands shown with original bands (lighter lines...

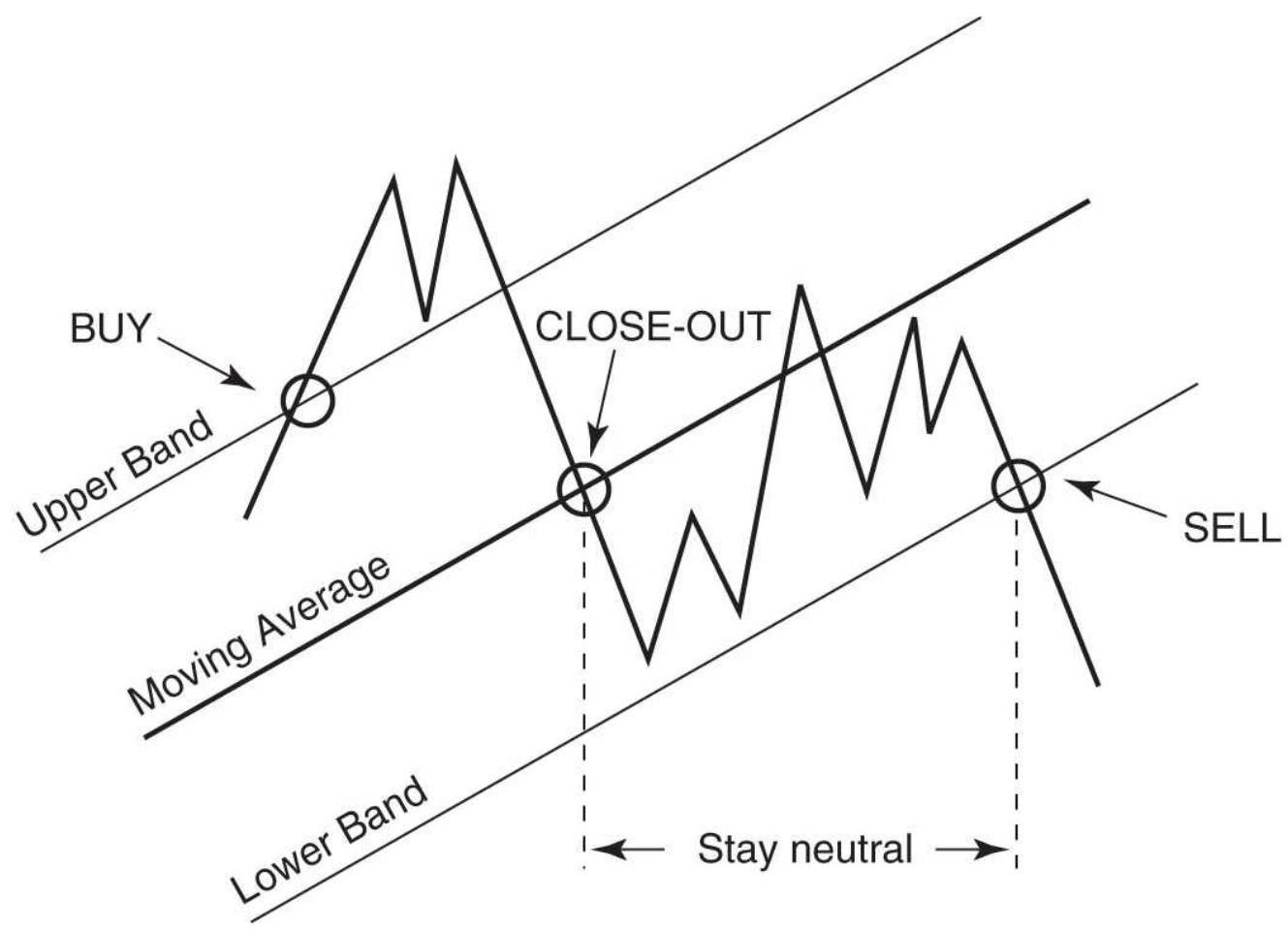

FIGURE 8.10 Simple reversal rules for using bands.

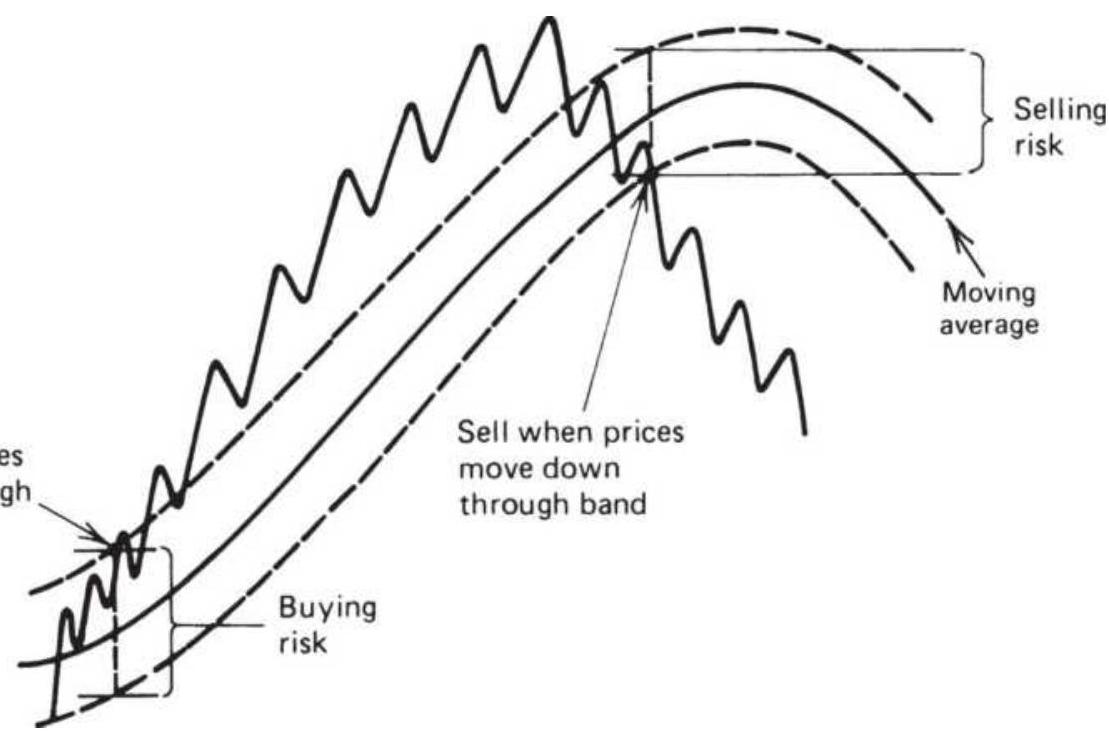

FIGURE 8.11 Basic rules for using bands.

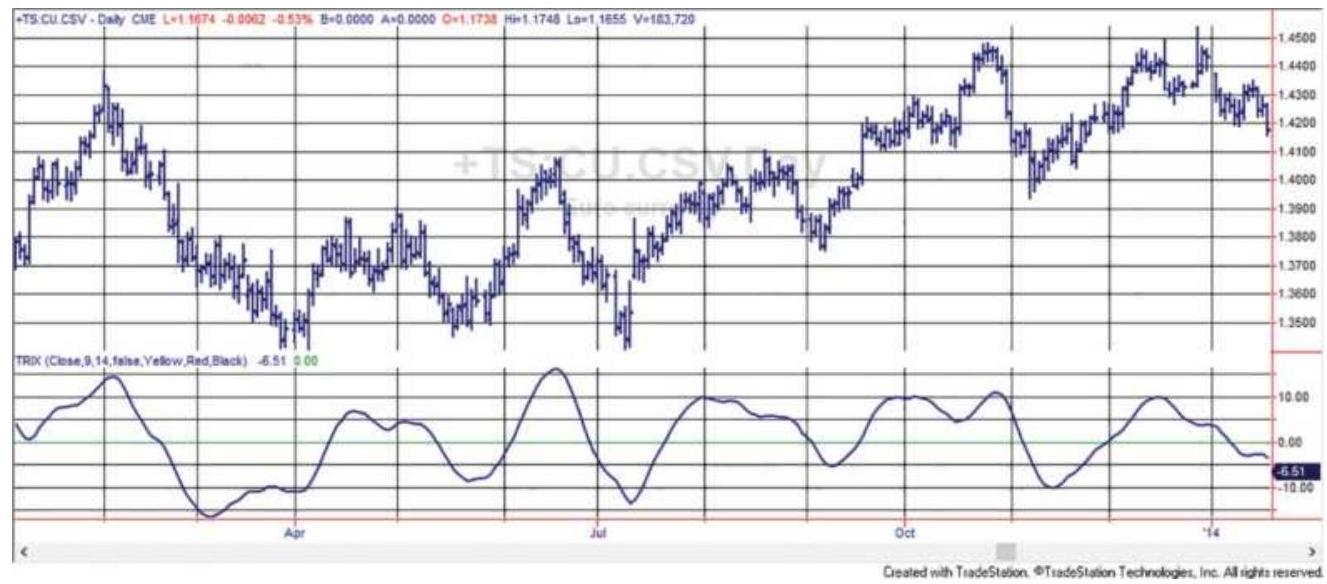

FIGURE 8.12 A g-day TRIX based on euro futures shows that a triple smoothing...

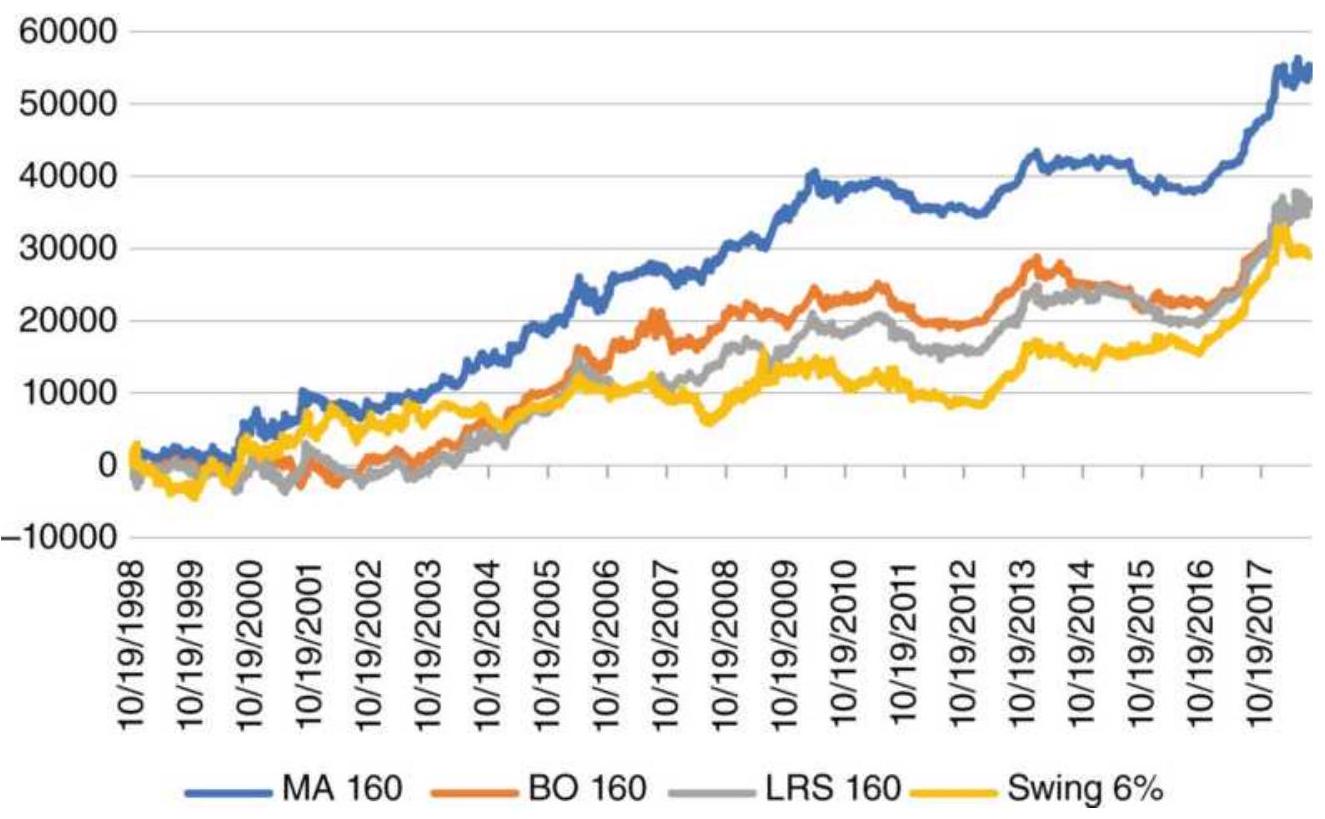

FIGURE 8.13 Cumulative profits of S\&P futures for four strategies.

FIGURE 8.14 Results of trend strategies for Boeing (BA) from 1998 through Ju...

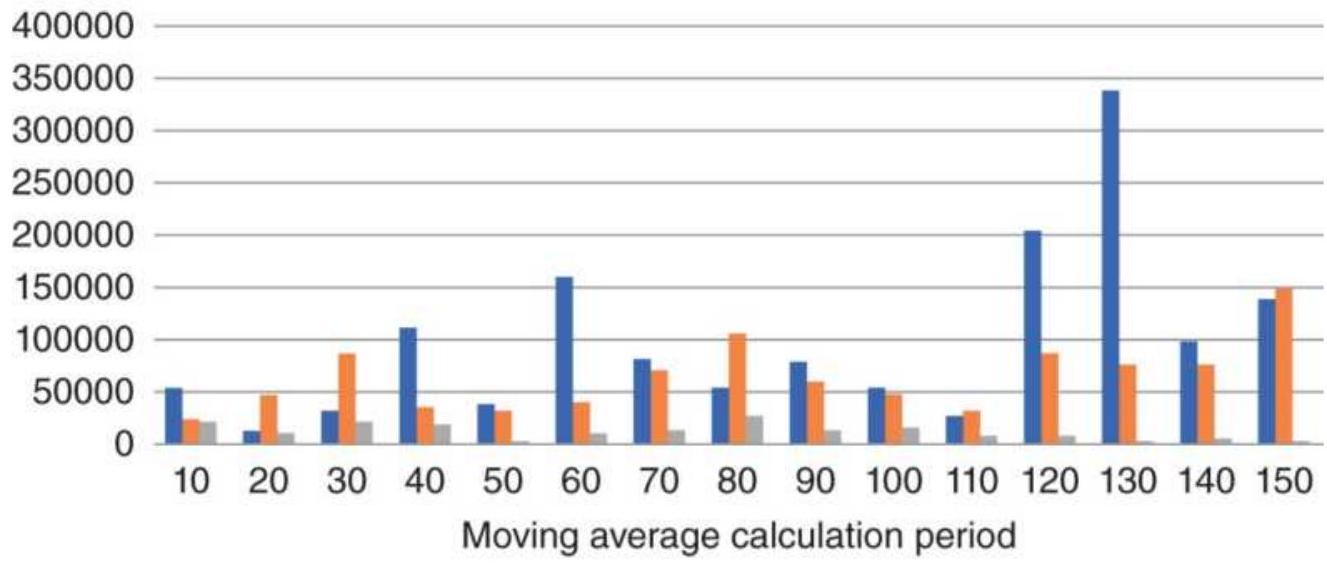

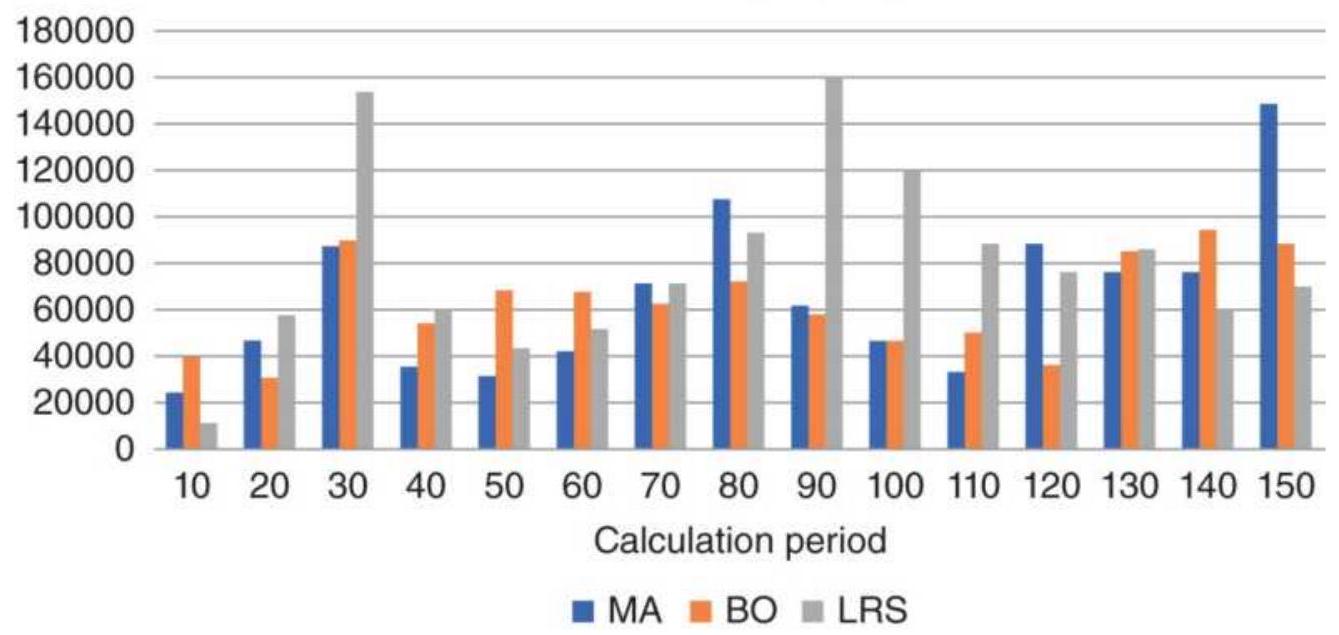

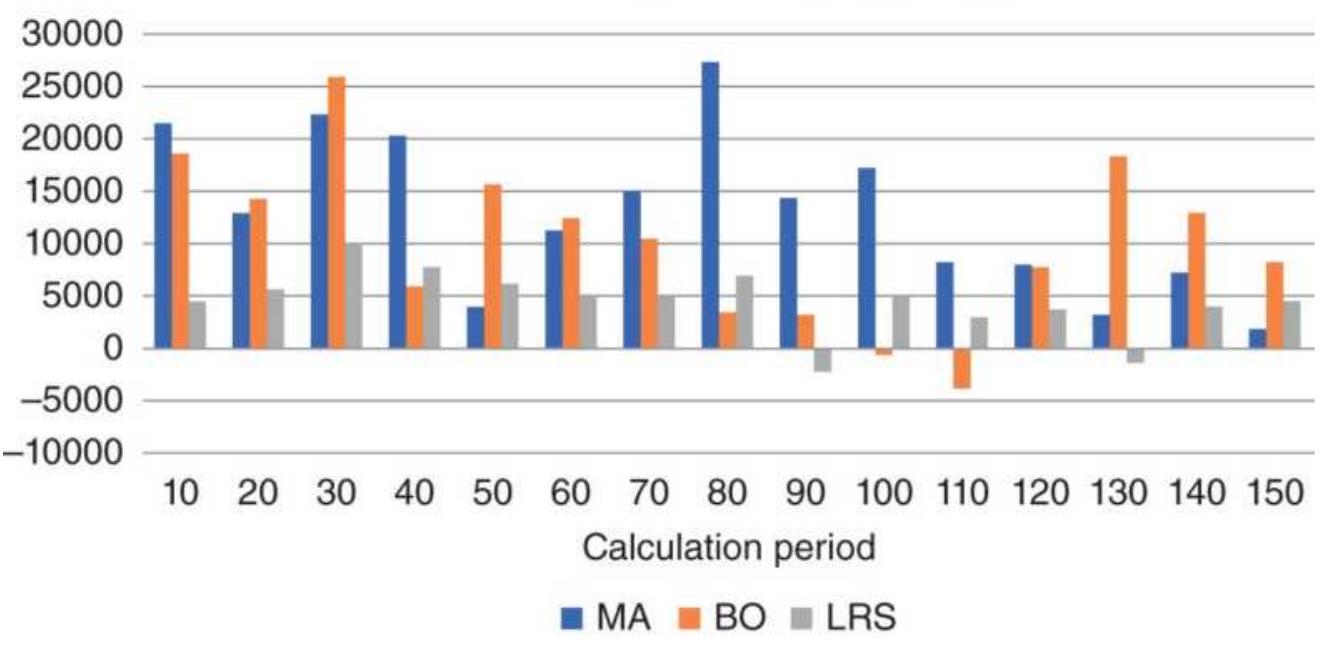

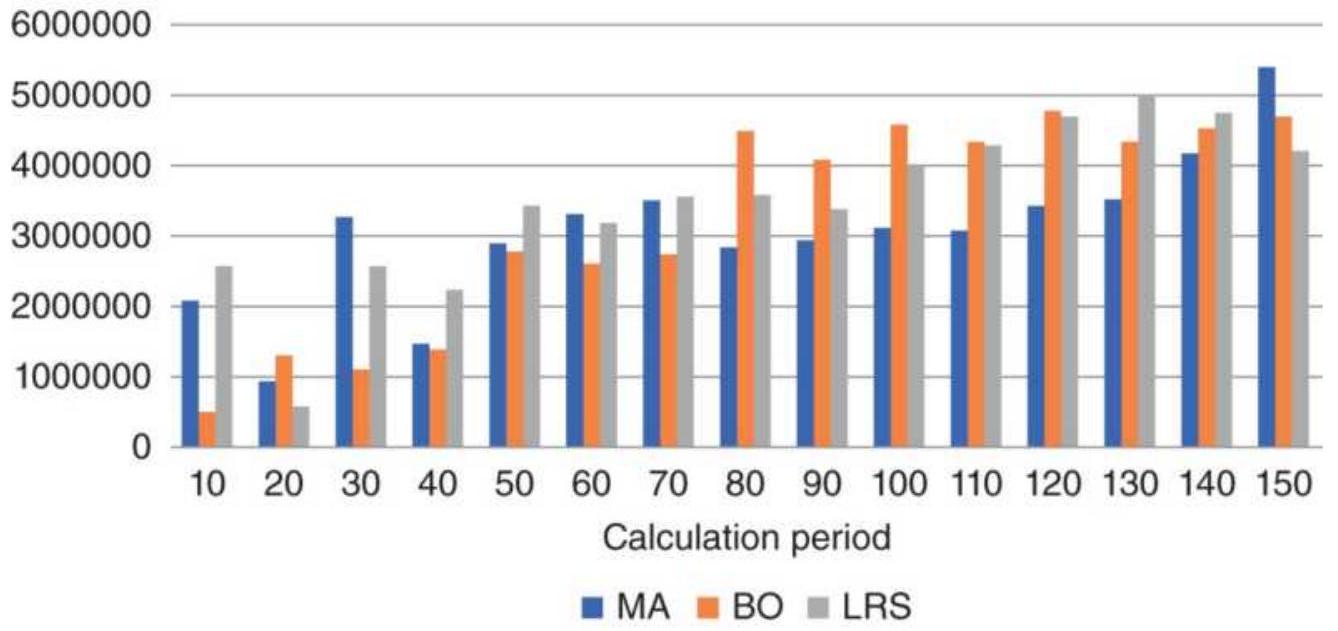

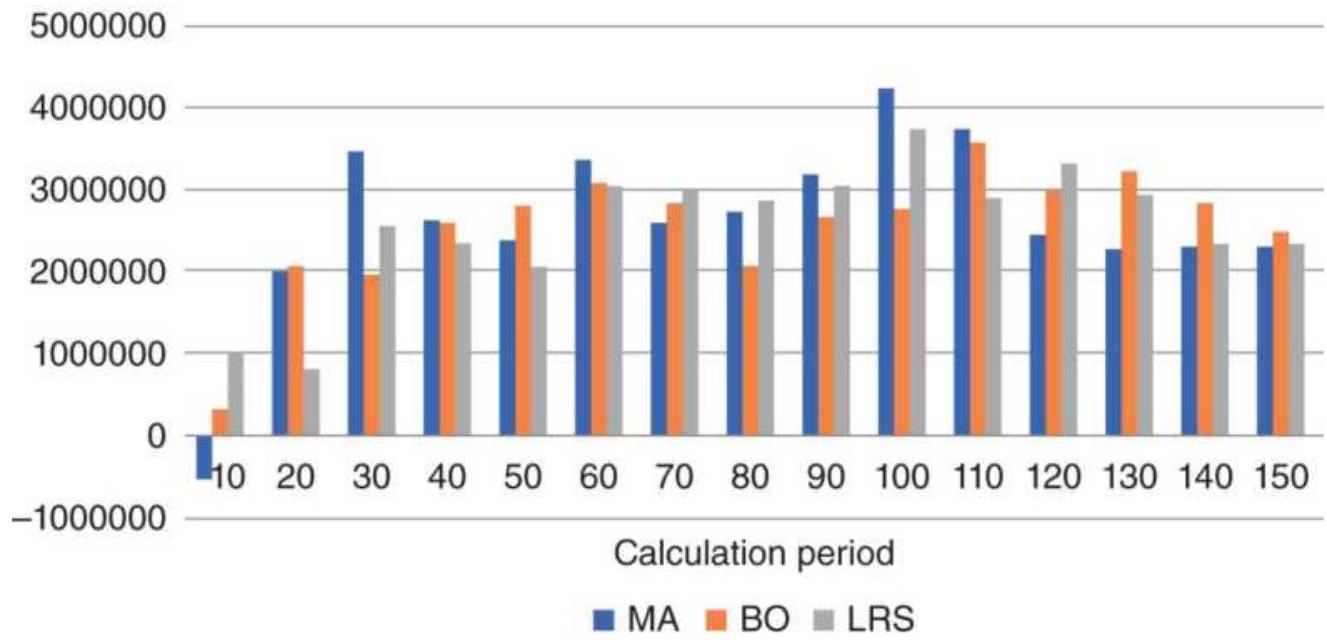

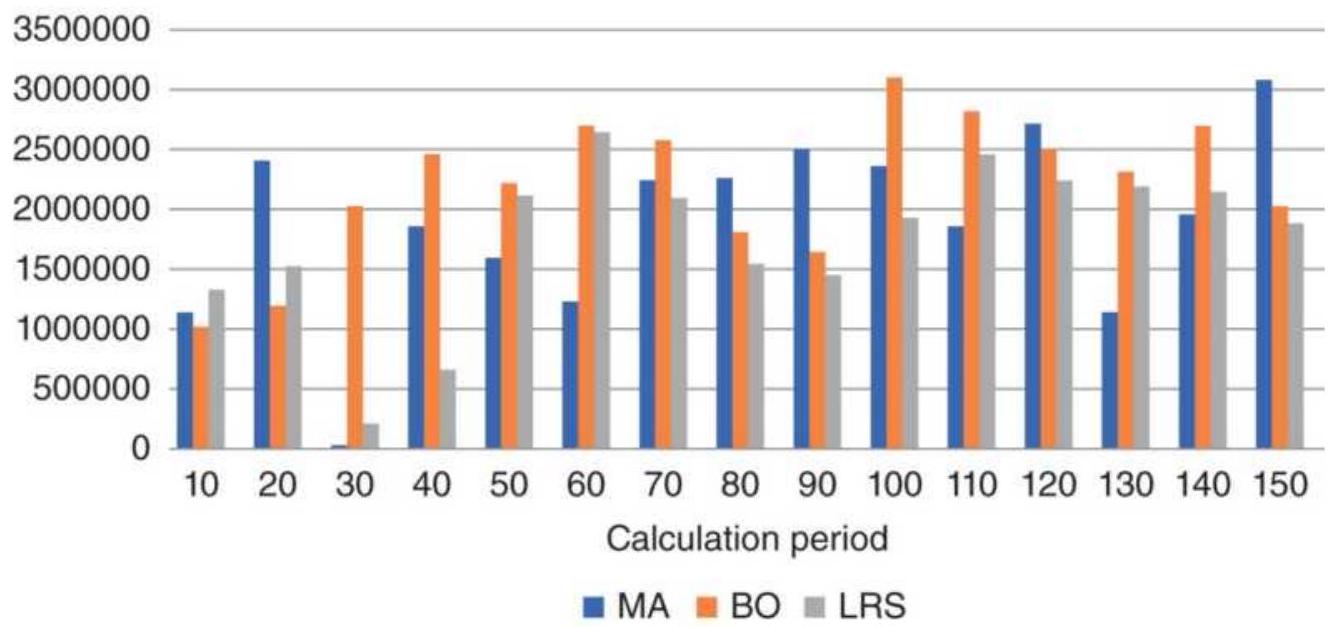

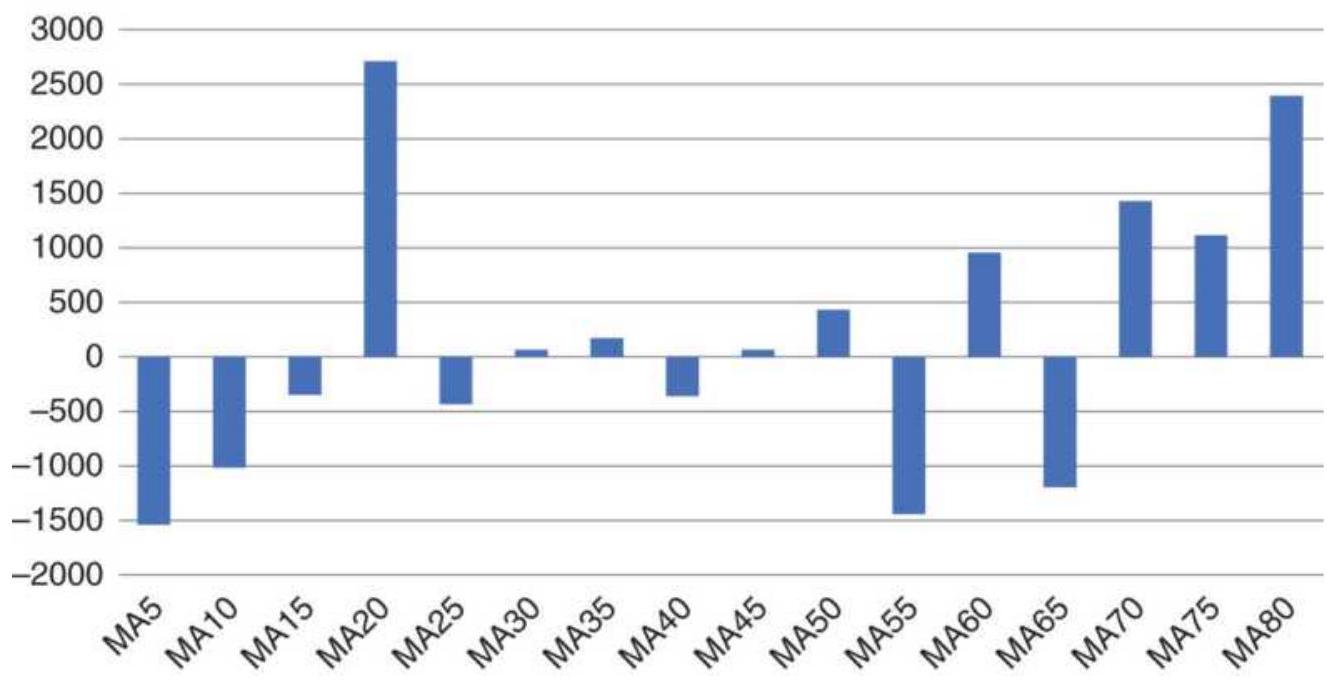

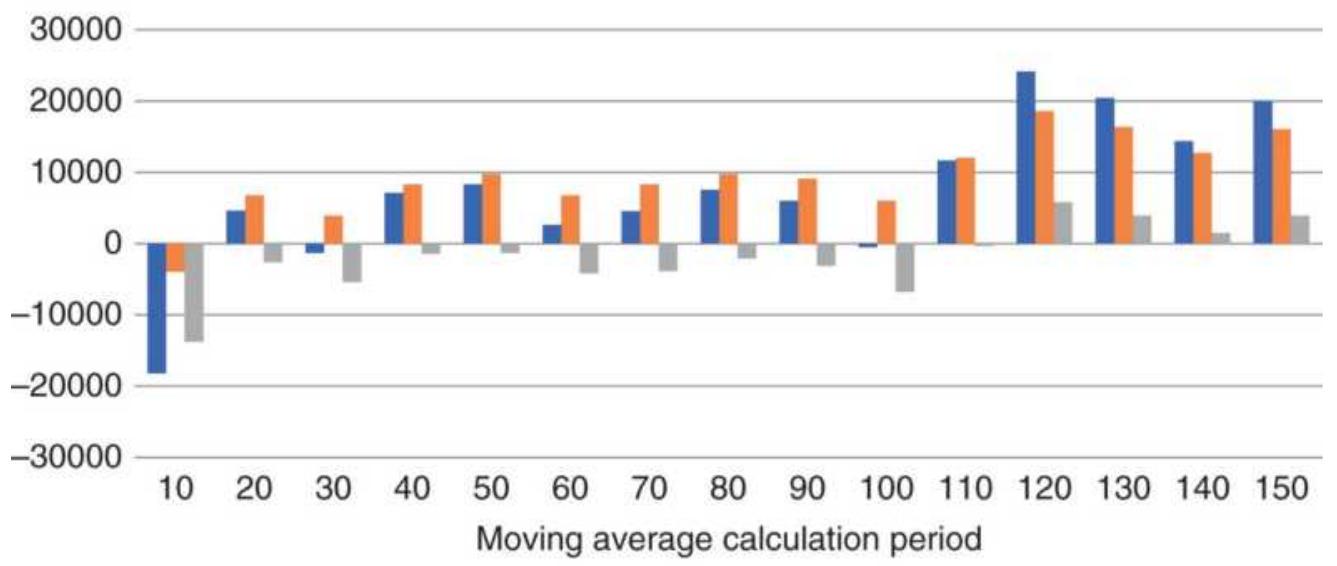

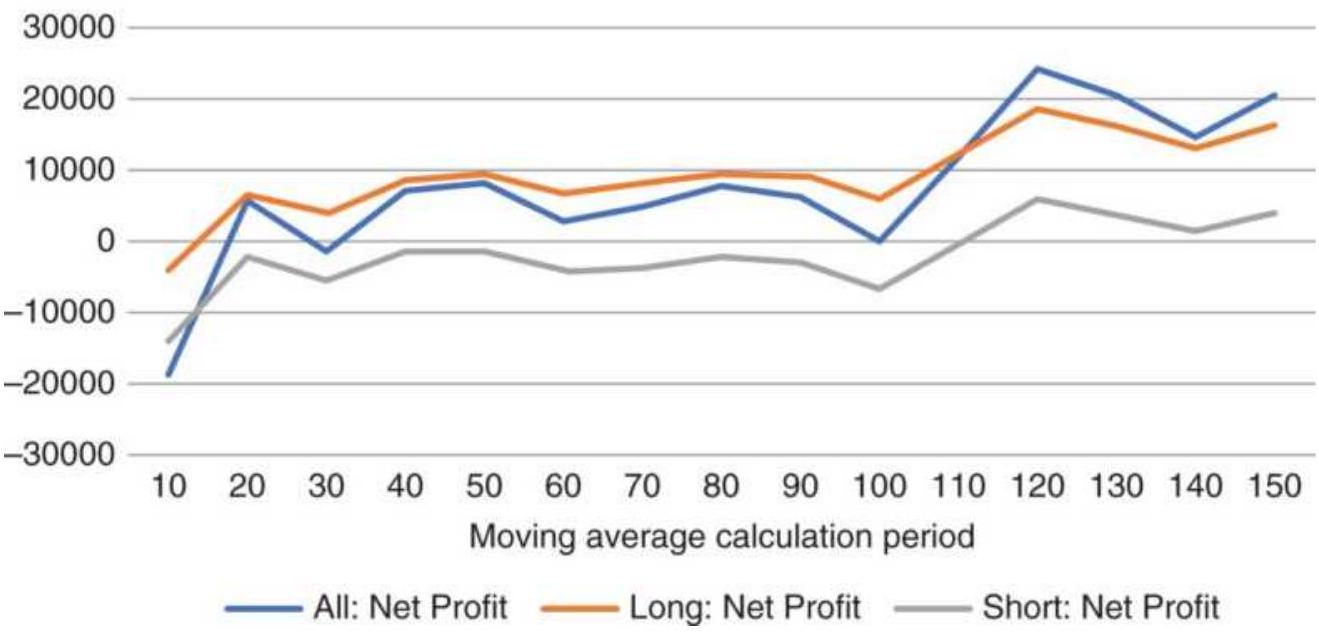

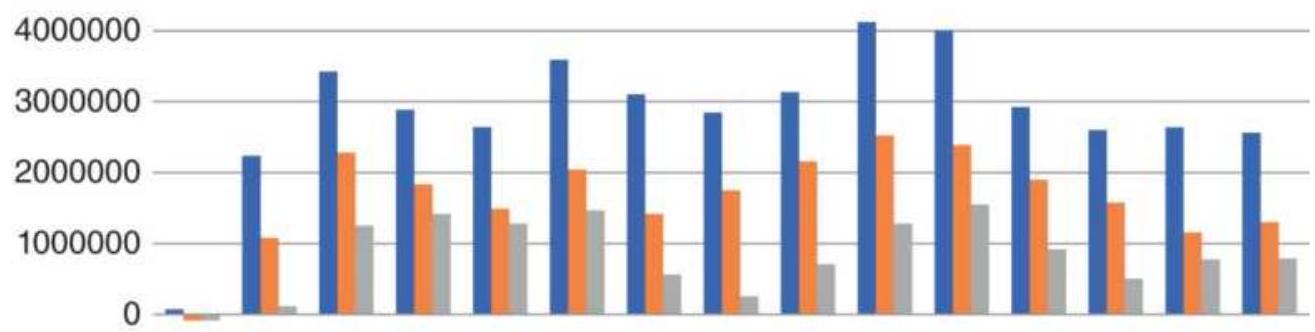

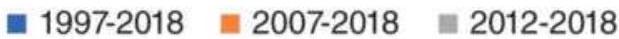

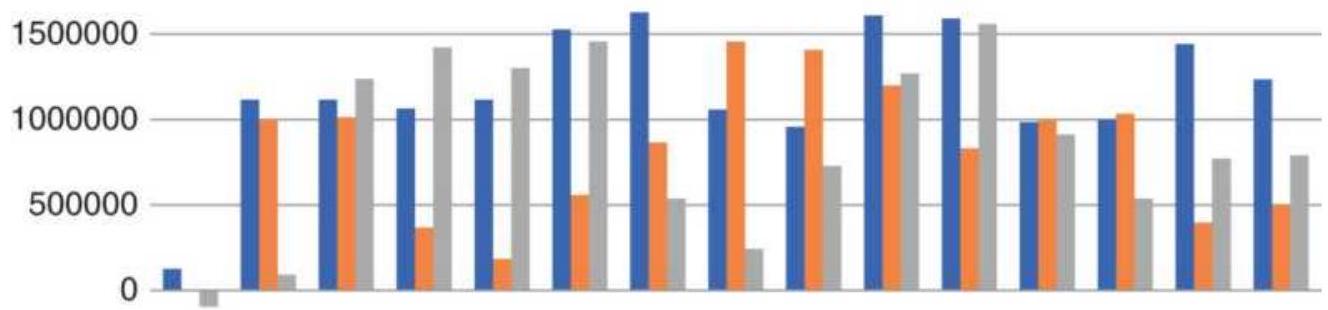

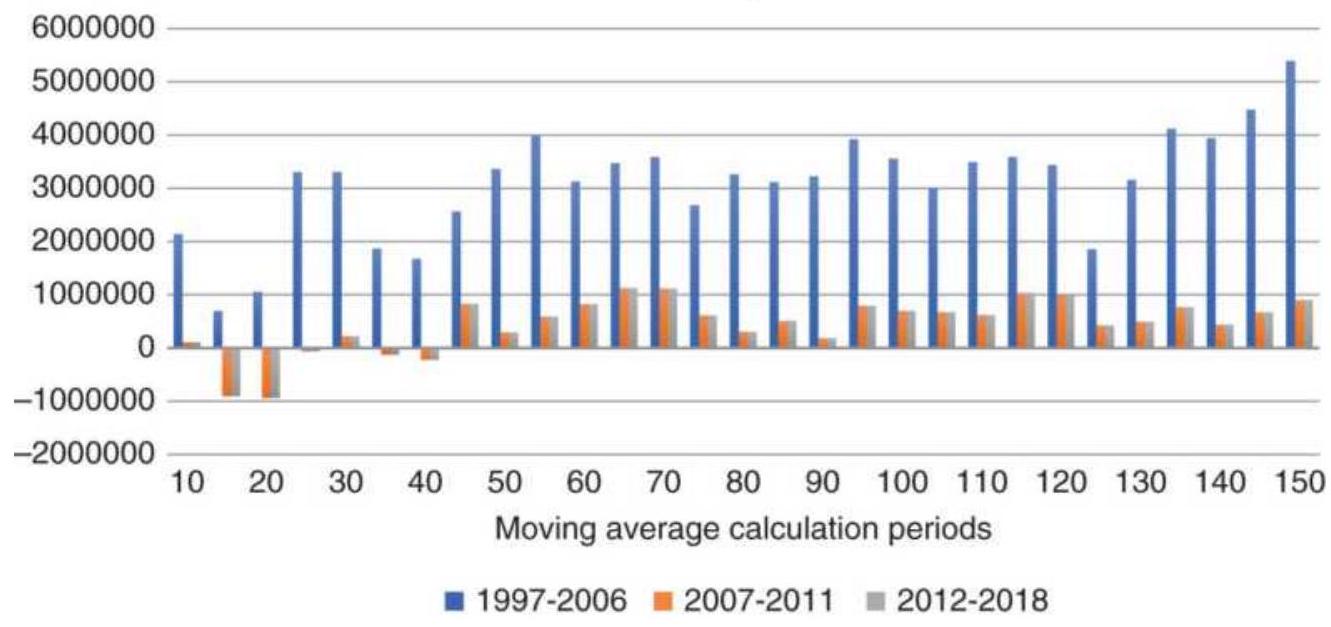

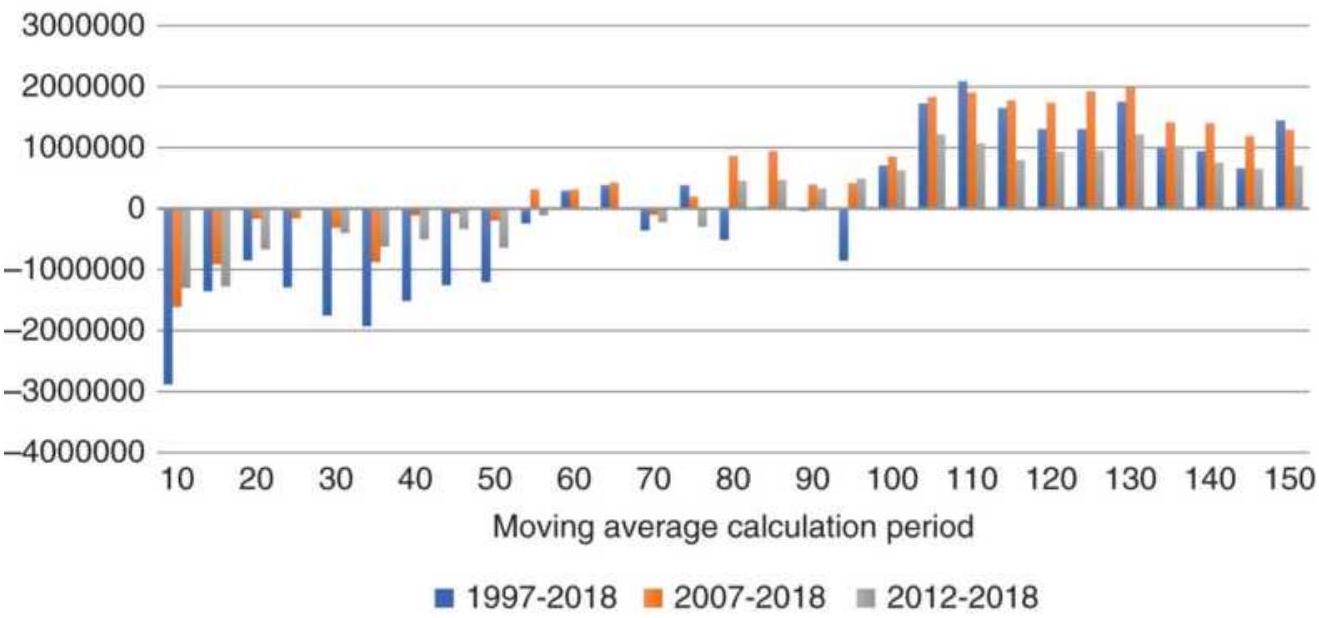

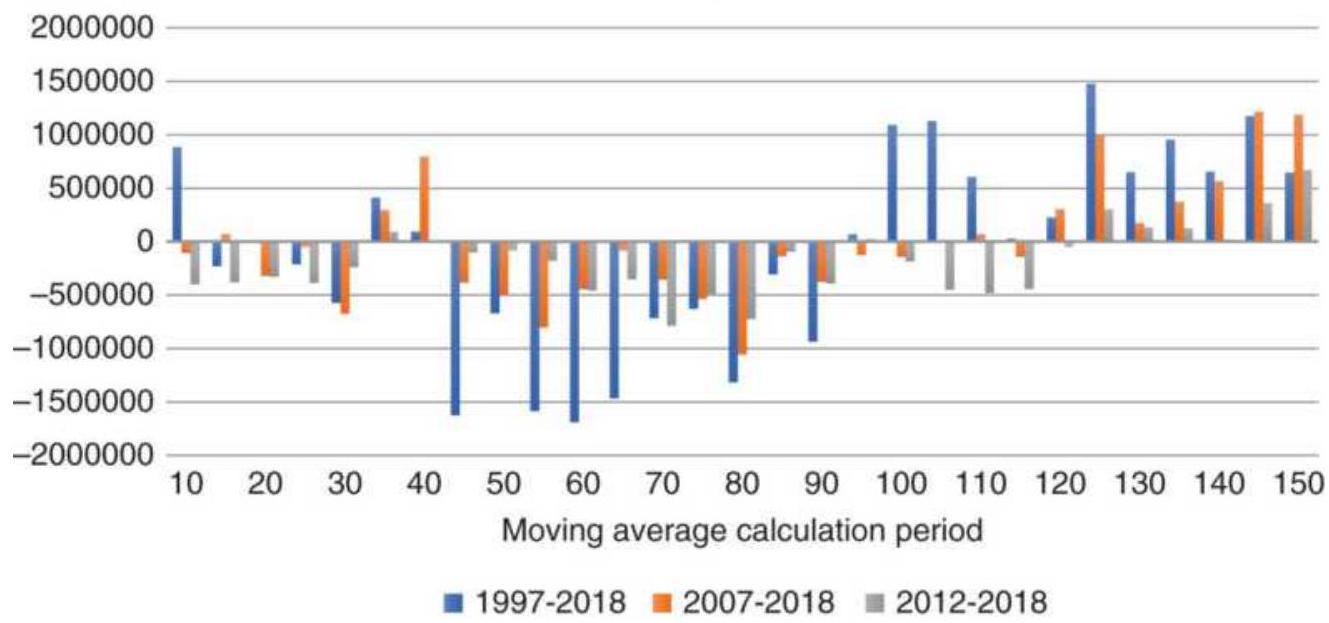

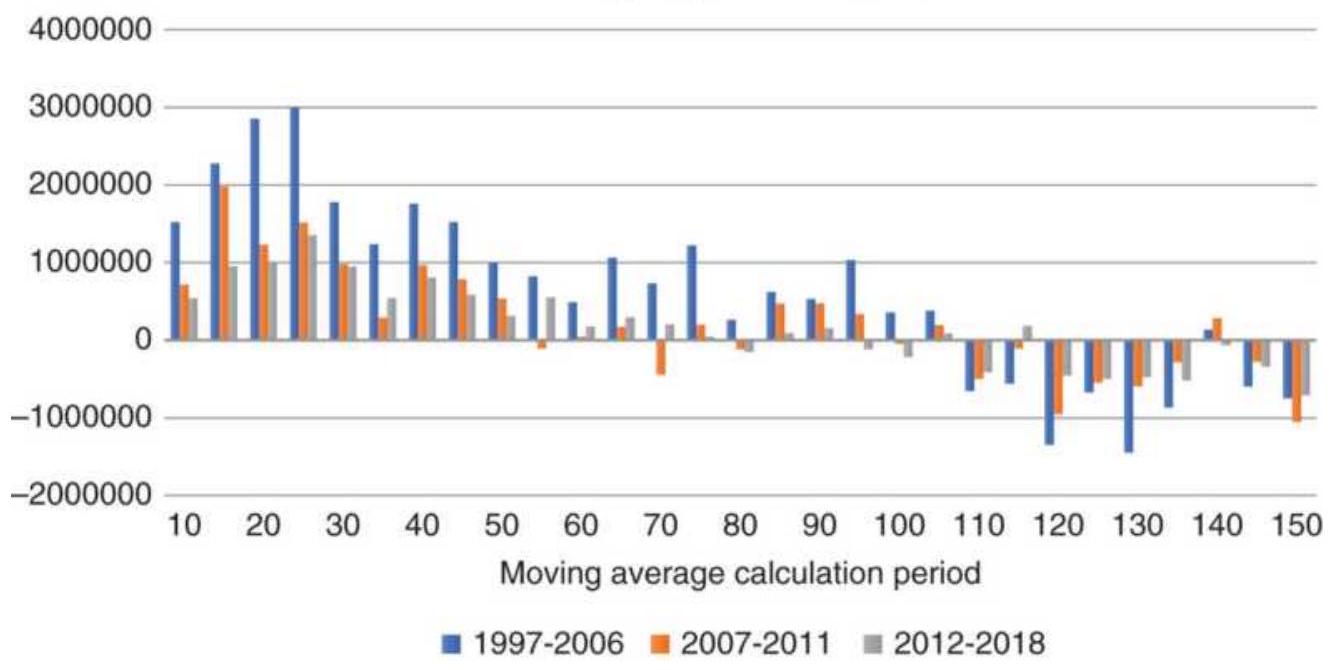

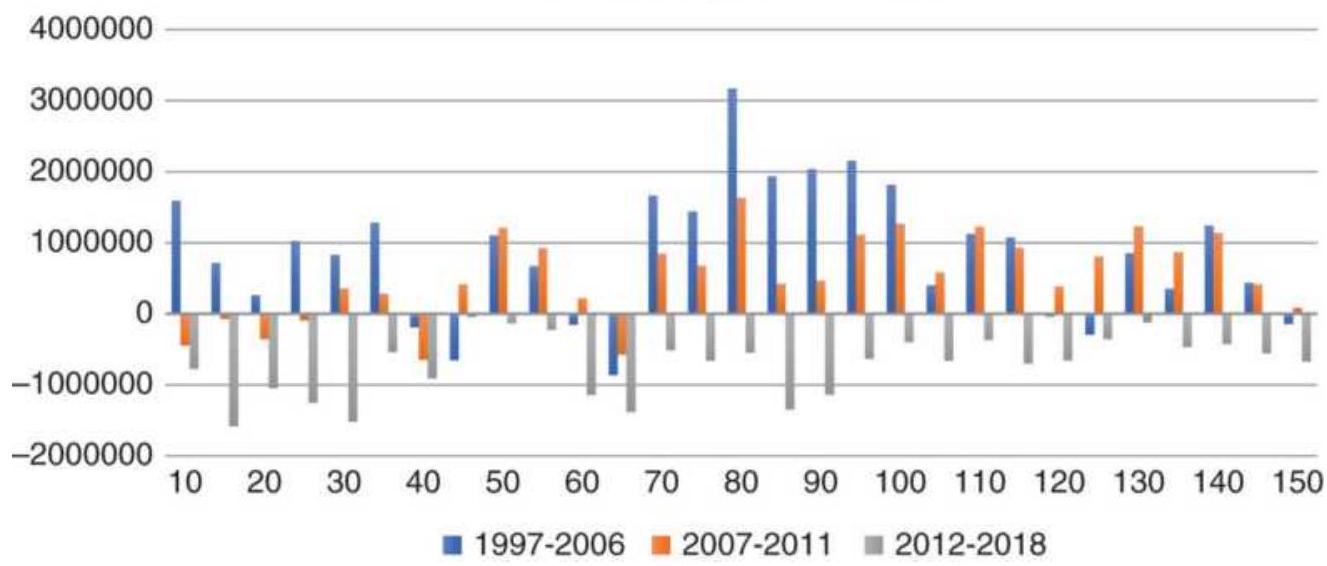

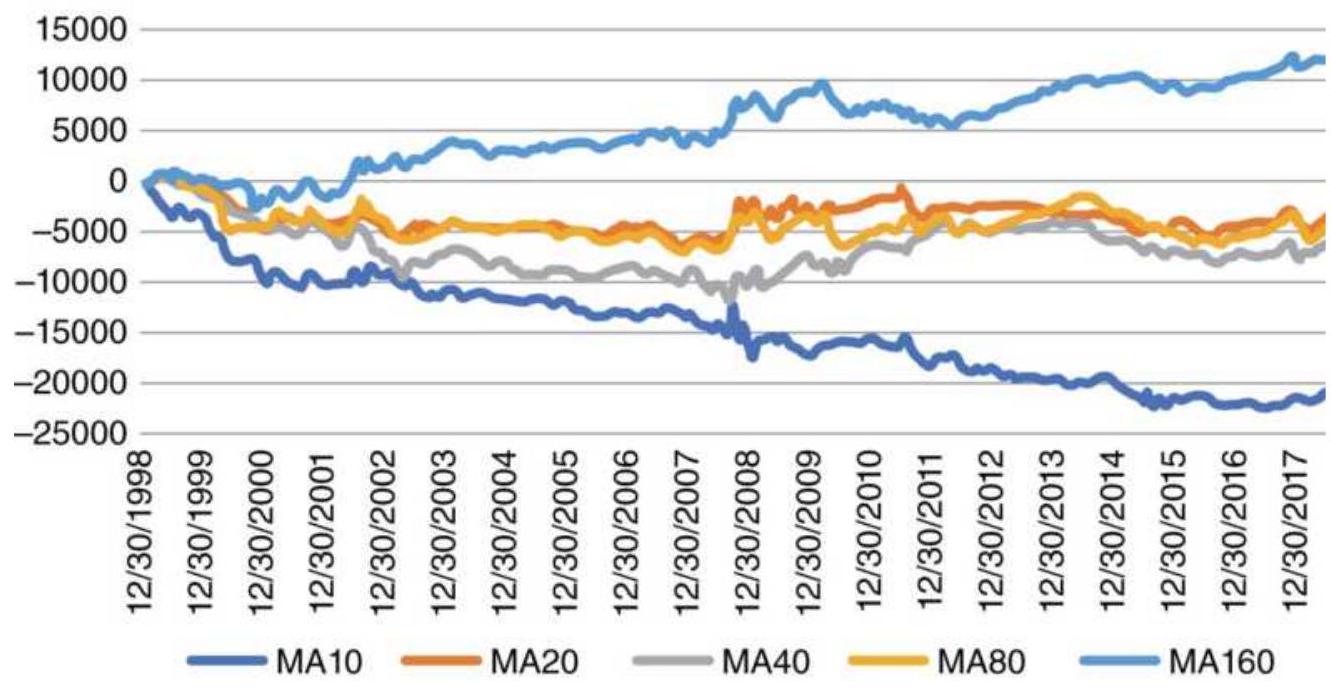

FIGURE 8.15 Moving average net profits from futures, 1998-2018.

FIGURE 8.16 Moving average net profits for selected stocks, 20 years ending ...

FIGURE 8.17 Amazon (AMZN) net profits from three systems.

FIGURE 8.18 Boeing (BA) net profits from three systems.

FIGURE 8.19 Ford (F) net profits from three systems.

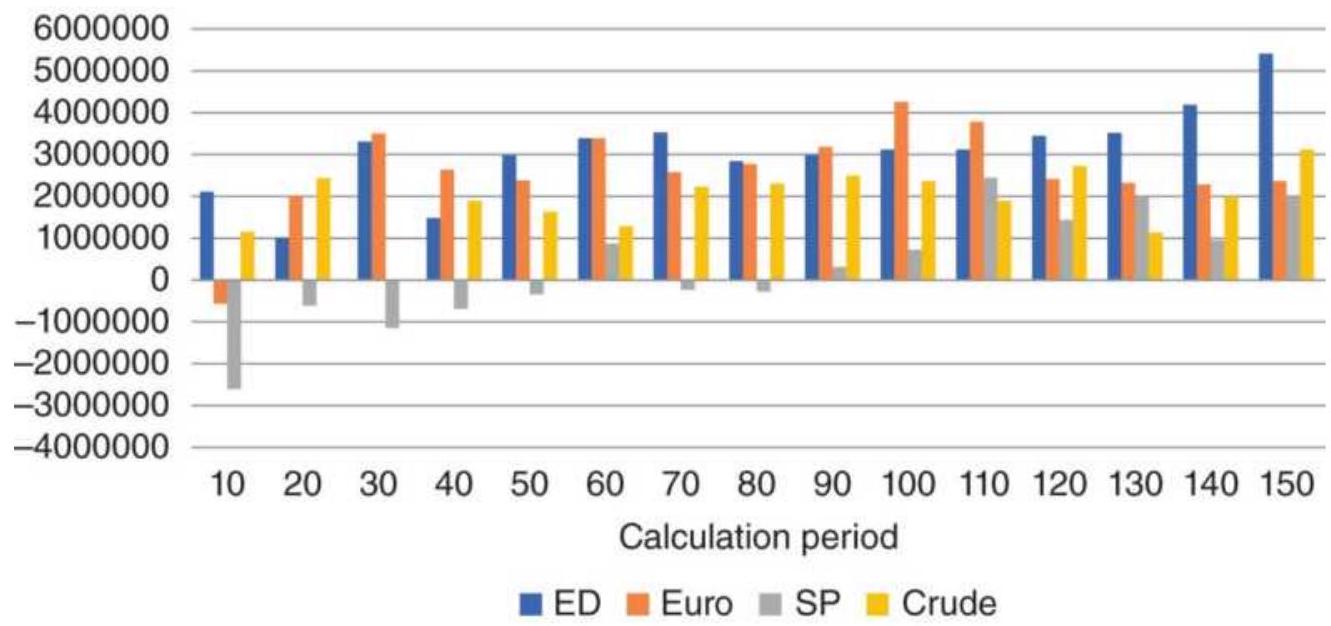

FIGURE 8.20 Eurodollar futures have a strong trend and benefit from any tren...

FIGURE 8.21 The euro currency (CU) has a strong trend but performs about the...

FIGURE 8.22 emini S\&P futures show a weak long-term trend and excessive nois...

FIGURE 8.23 Crude oil futures (CL) has had wide-ranging, volatile price move...

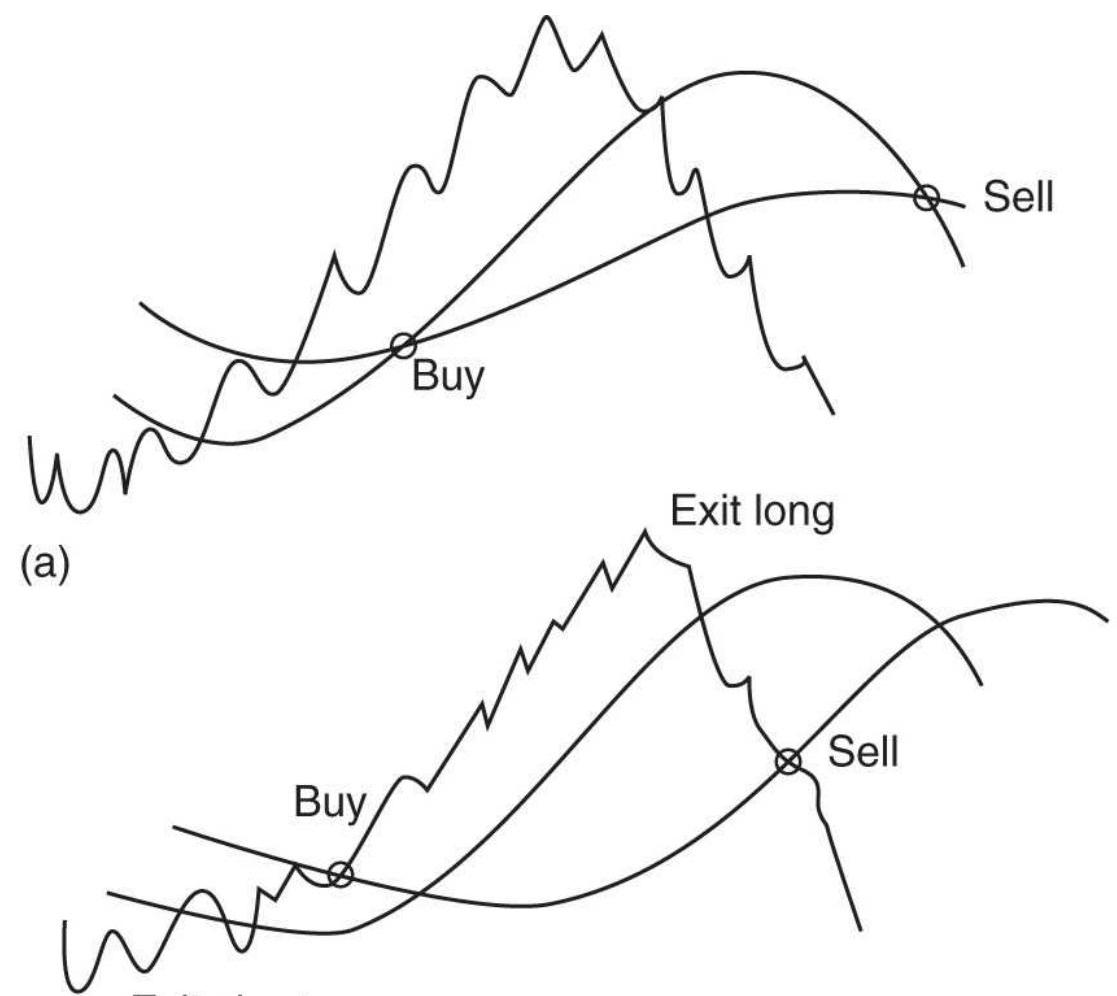

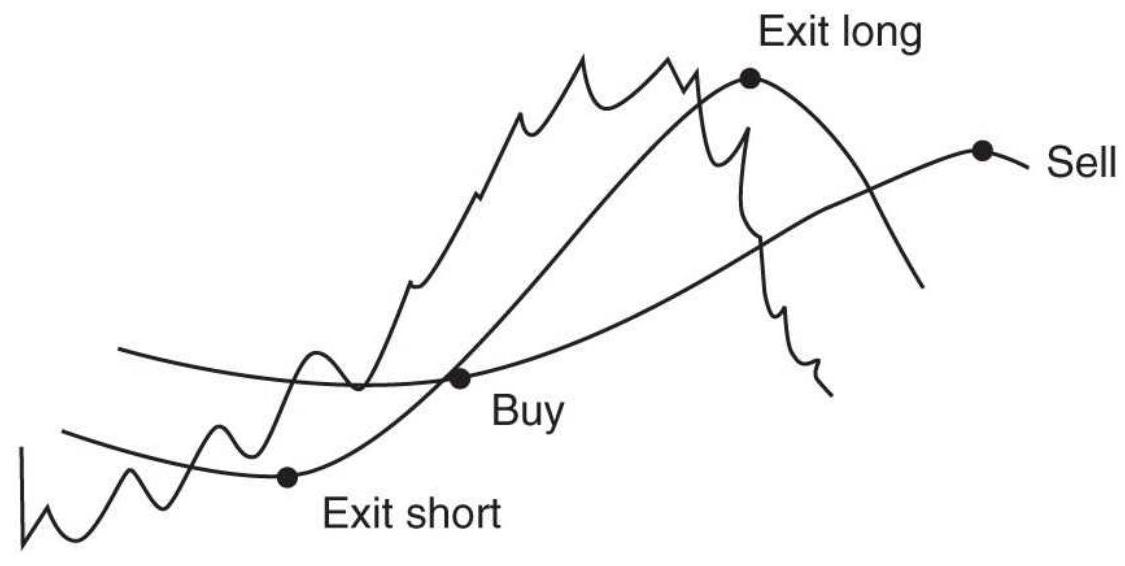

FIGURE 8.24 Three ways to trade using two

moving averages. (a) Enter and exi...

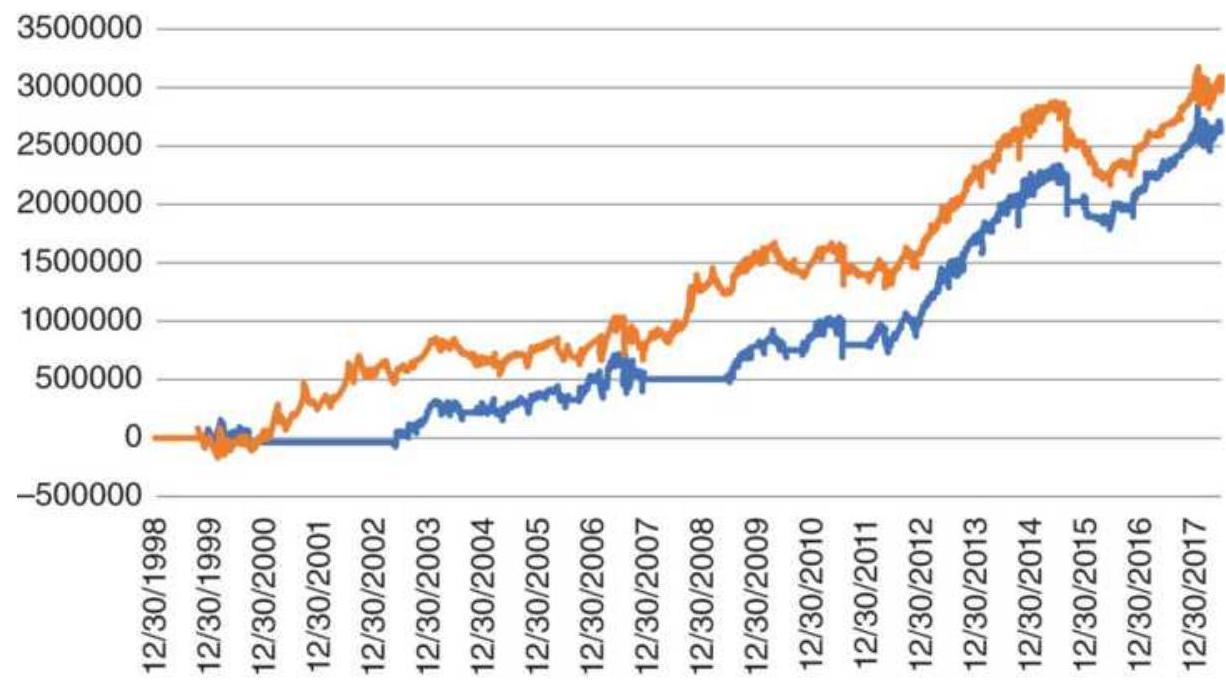

FIGURE 8.25 Moving average crossover for euro futures using 100-day and 30-d...

FIGURE 8.26 Donchian's 5- and 20-Day Moving Average System (somewhat moderni...

FIGURE 8.27 The Golden Cross applied to SPY showing both long-short and long...

FIGURE 8.28 The ROC Method applied to S\&P futures, 1998-2017.

FIGURE 8.29 The Ichimoku Cloud shown on the Dow Industrials.

FIGURE 8.30 Sequences of moving averages.

Left is the original sequence. On ...

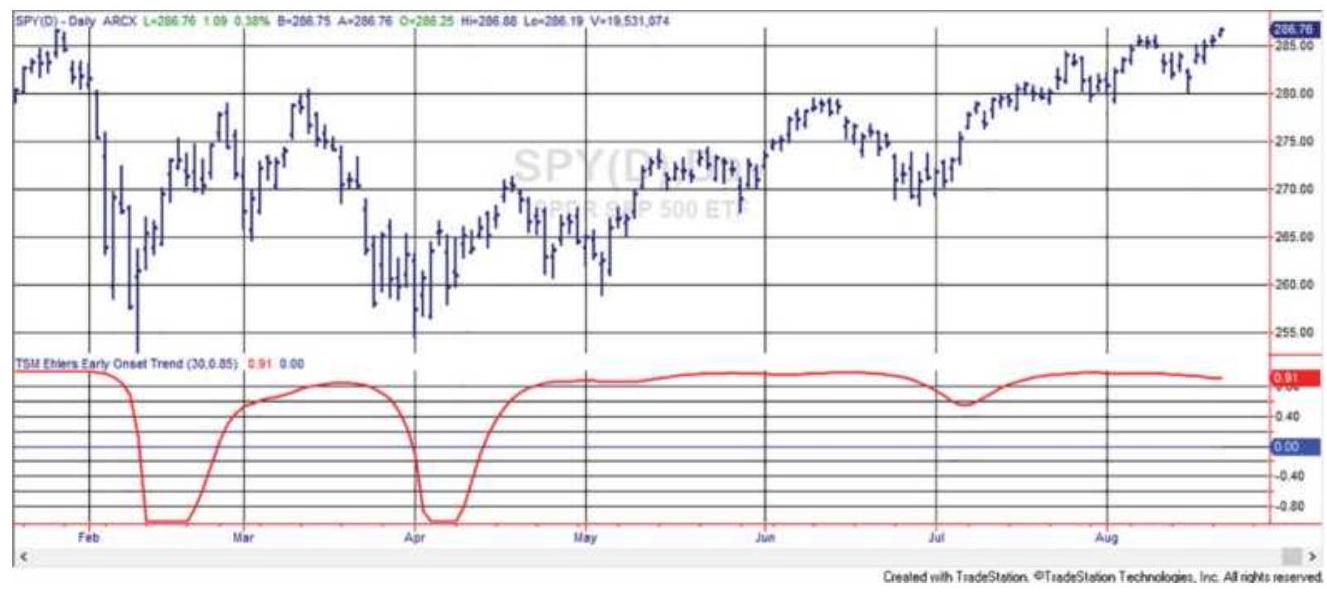

FIGURE 8.31 Ehlers' Early Onset Trend indicator for SPY during 2018.

\section*{Chapter 9}

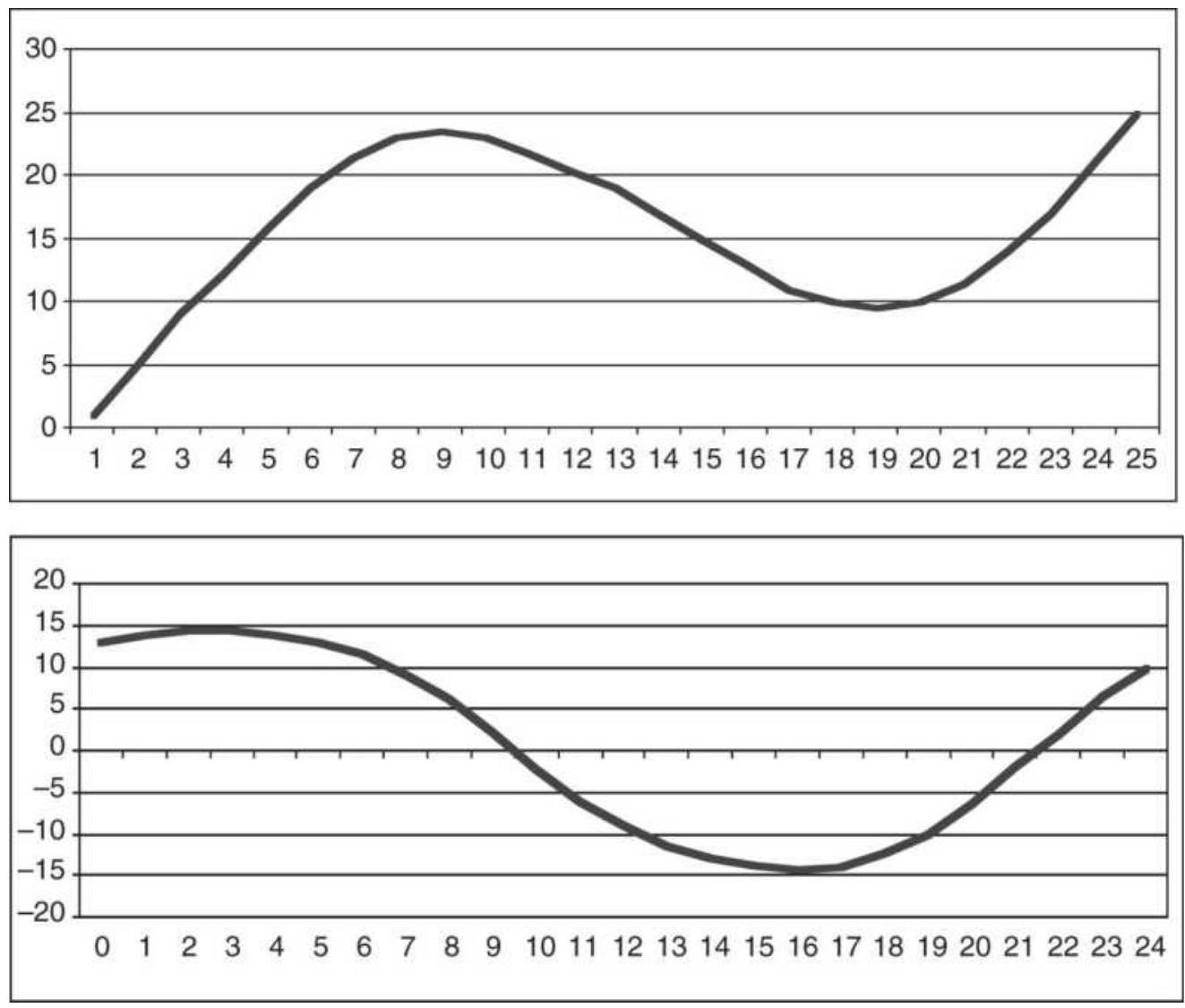

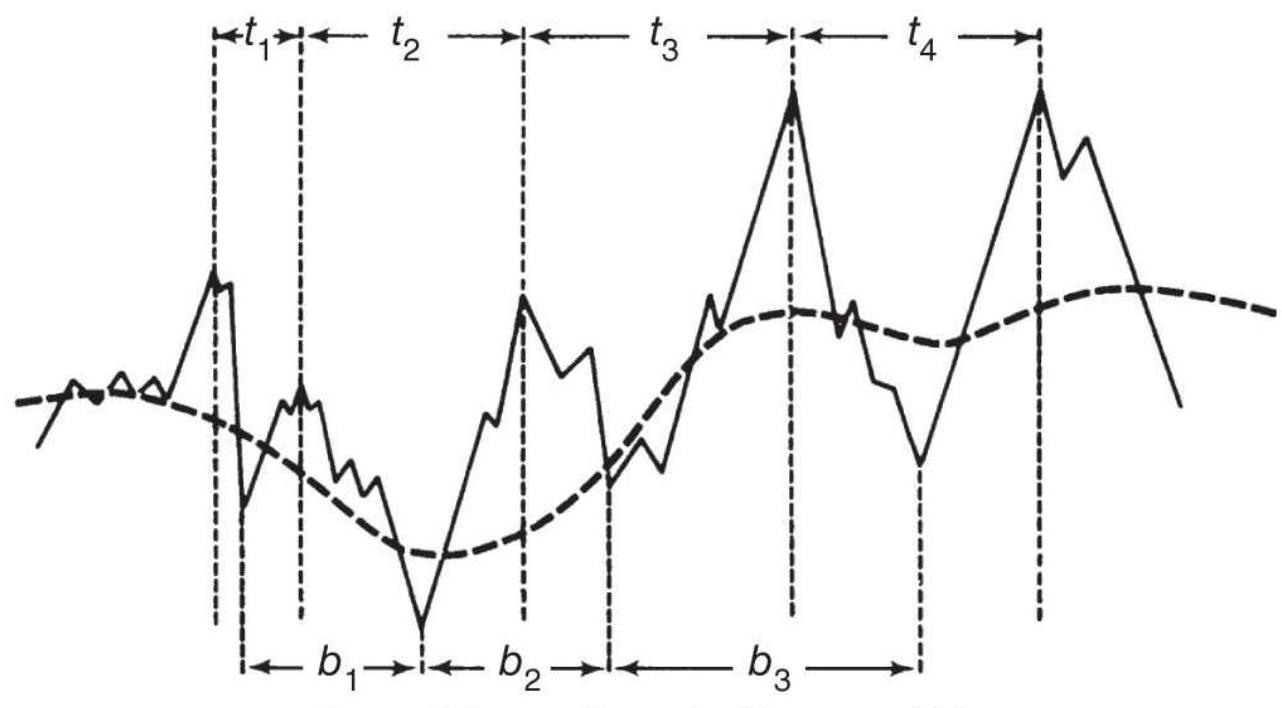

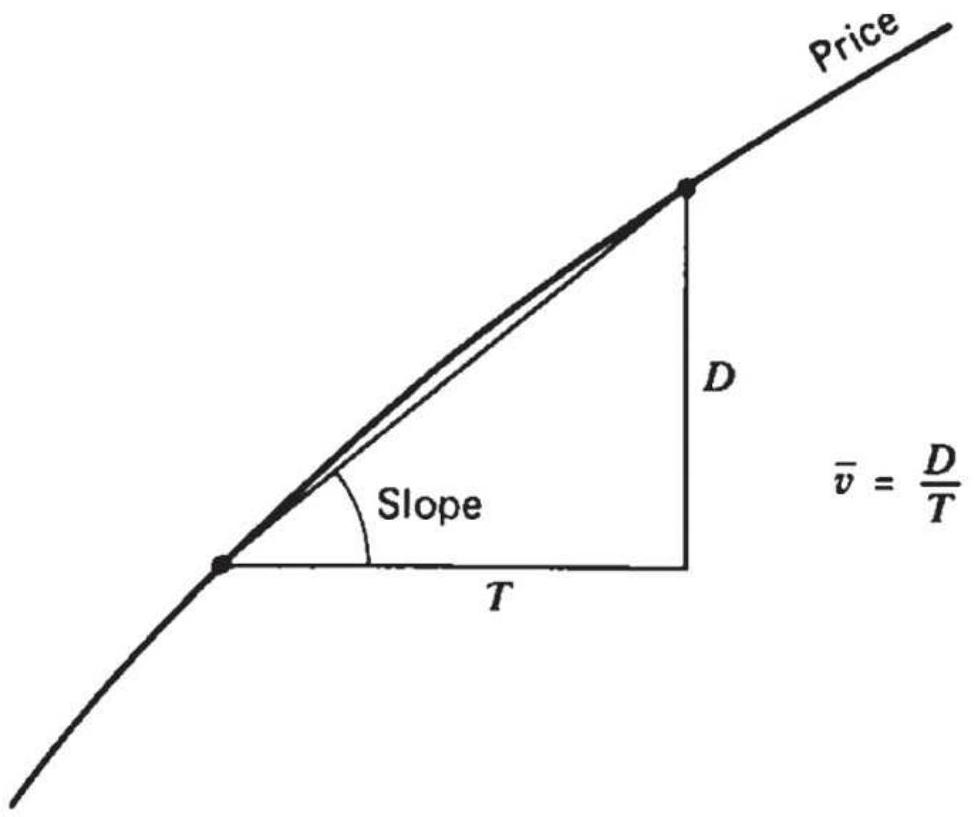

FIGURE 9.1 Geometric representation of momentum.

FIGURE 9.2 Price (top) and corresponding momentum (bottom).

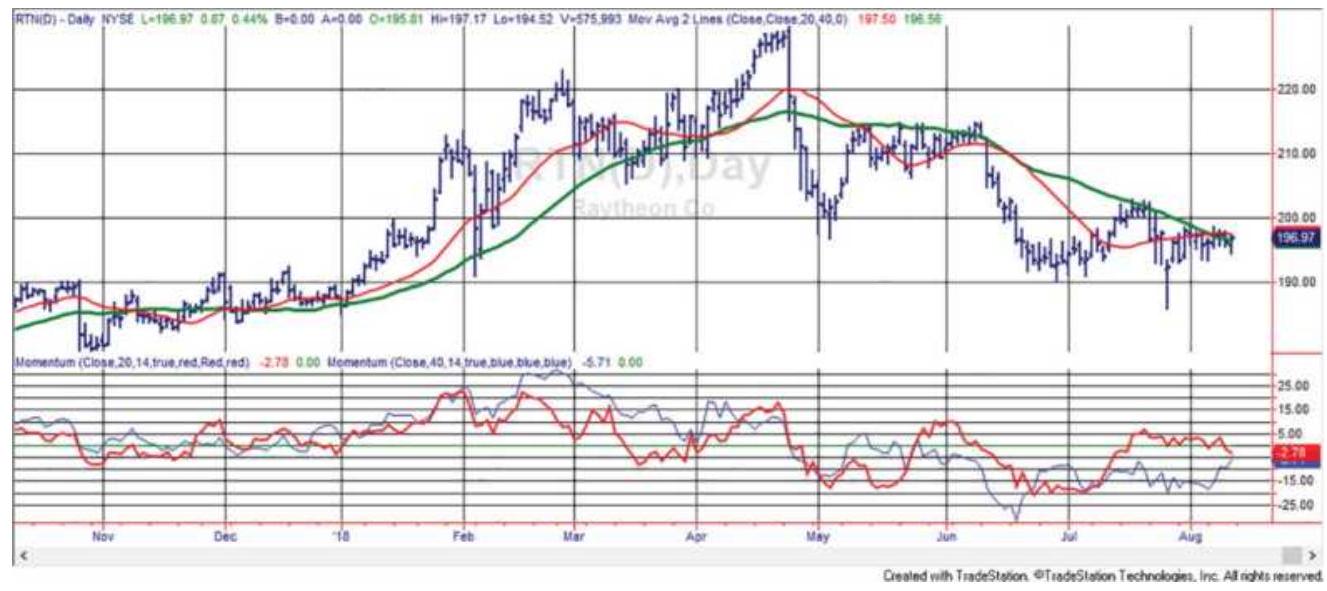

FIGURE 9.3 20- and 40-day momentum

compared to a 20- and 40-day moving avera...

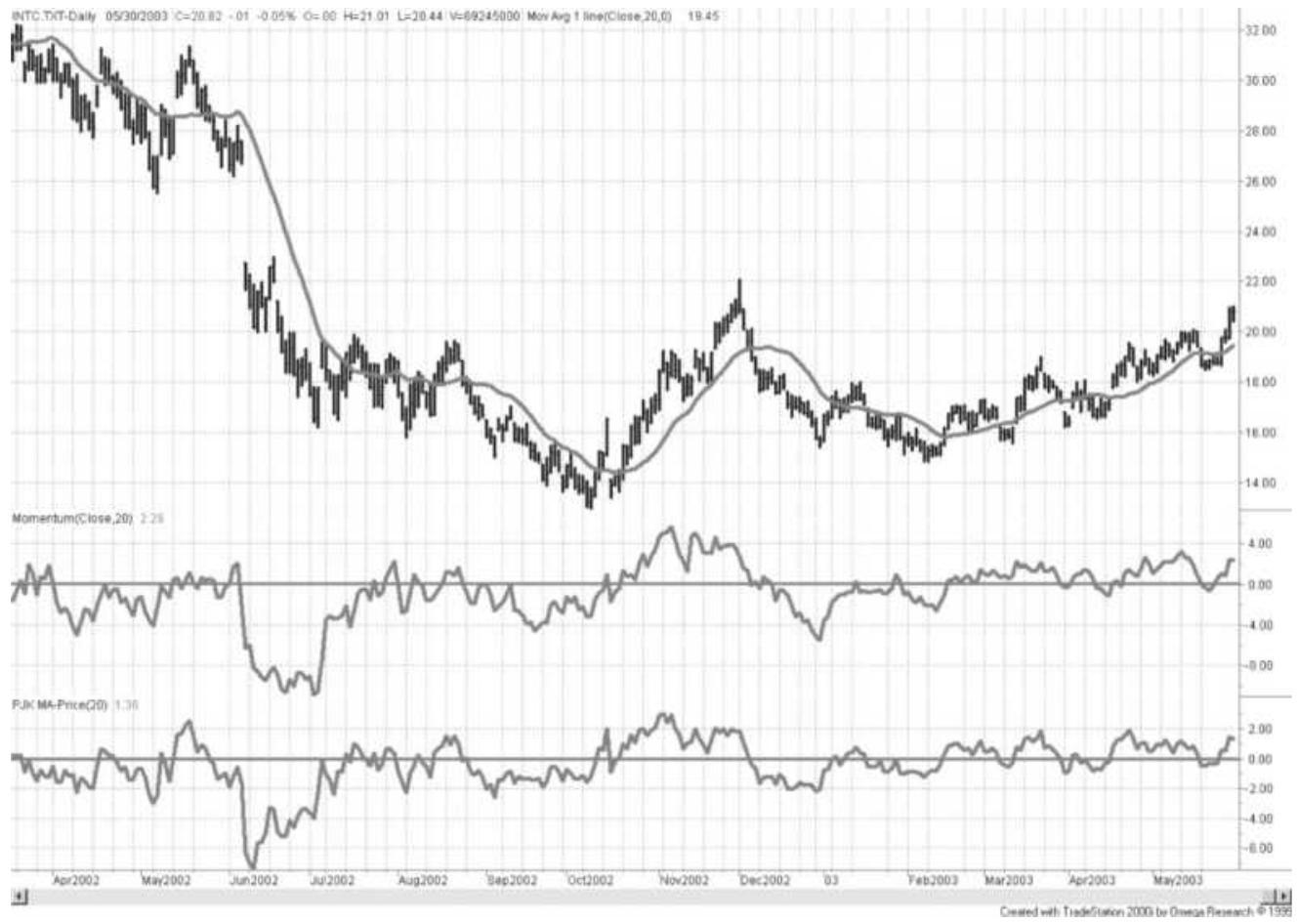

FIGURE 9.4 Momentum is also called relative strength, the difference between...

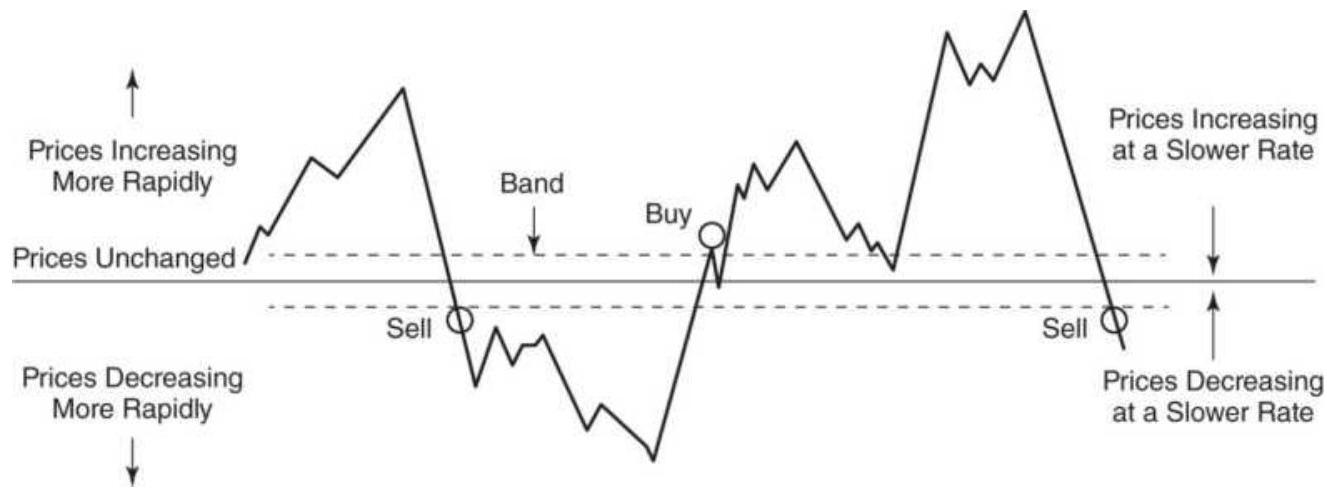

FIGURE 9.5 Trend signals using momentum.

FIGURE 9.6 Relationship of momentum to

prices. (a) Tops and bottoms determin...

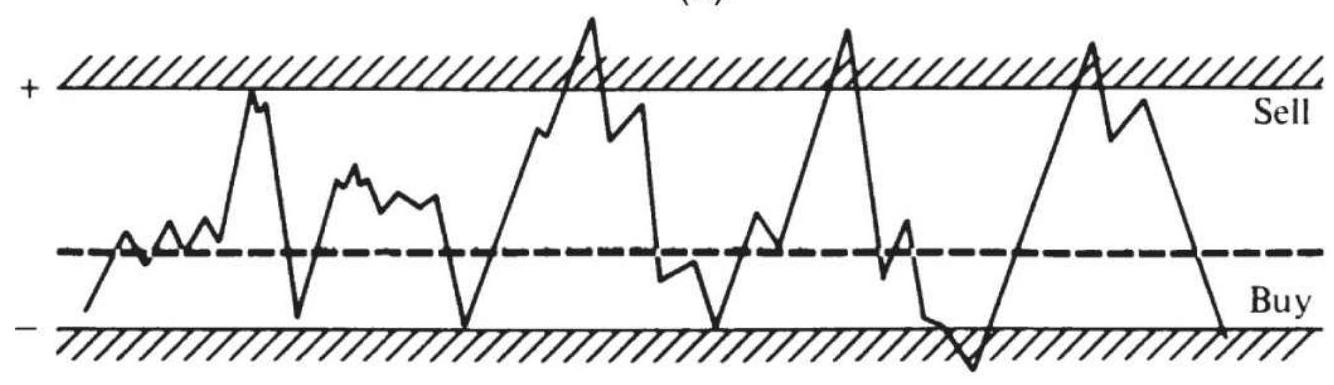

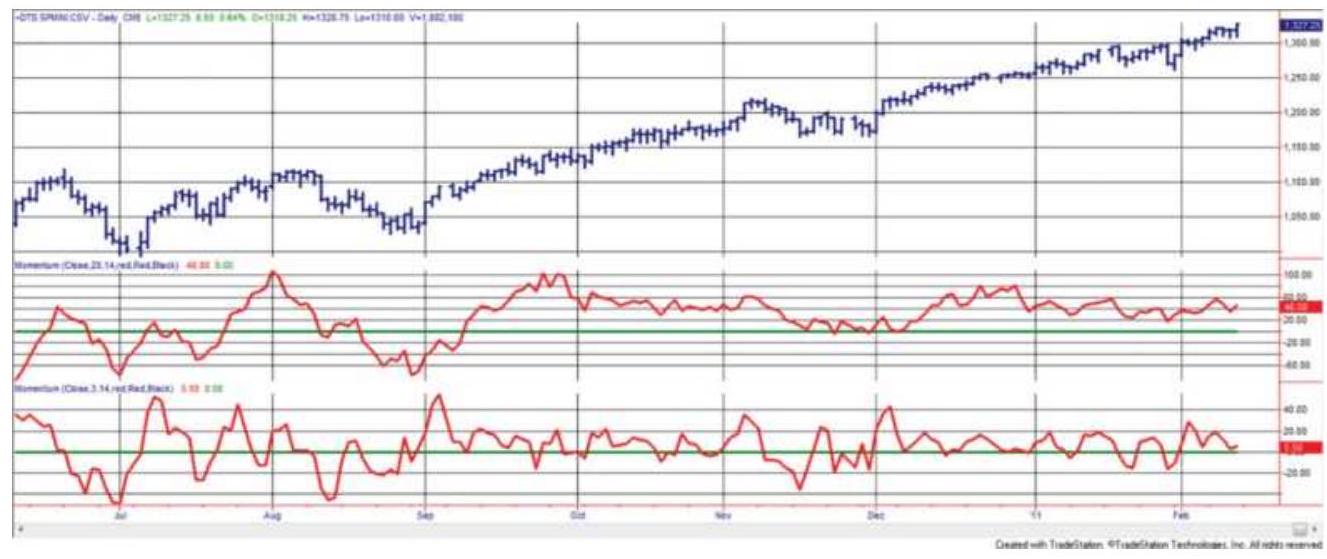

FIGURE 9.7 20-Day momentum (center panel) and 3 -day momentum (bottom panel) ...

FIGURE 9.8 A longer view of the 20- and 3-day momentum applied to the S\&P. T...

FIGURE 9.9 MACD for AOL. The MACD line is the faster of the two trendlines i...

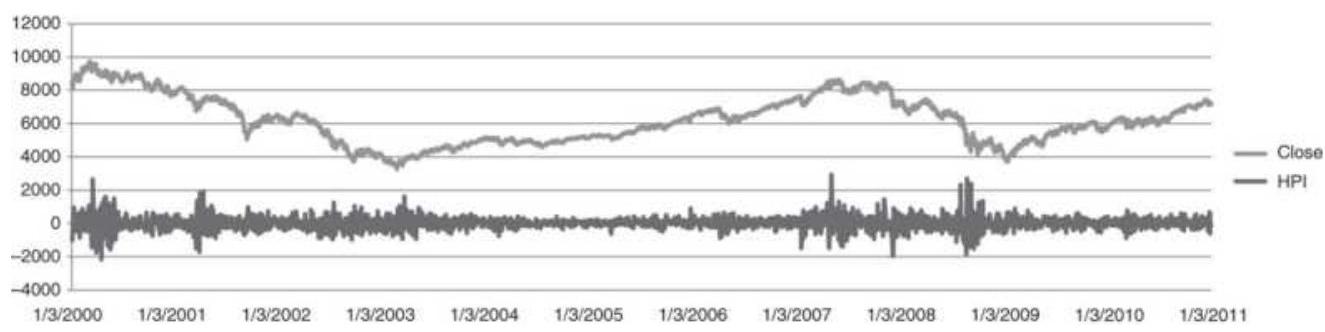

FIGURE 9.10 The Herrick Payoff Index applied to the DAX, 2000 through Februa...

FIGURE 9.11 Divergence Index applied to S\&P futures.

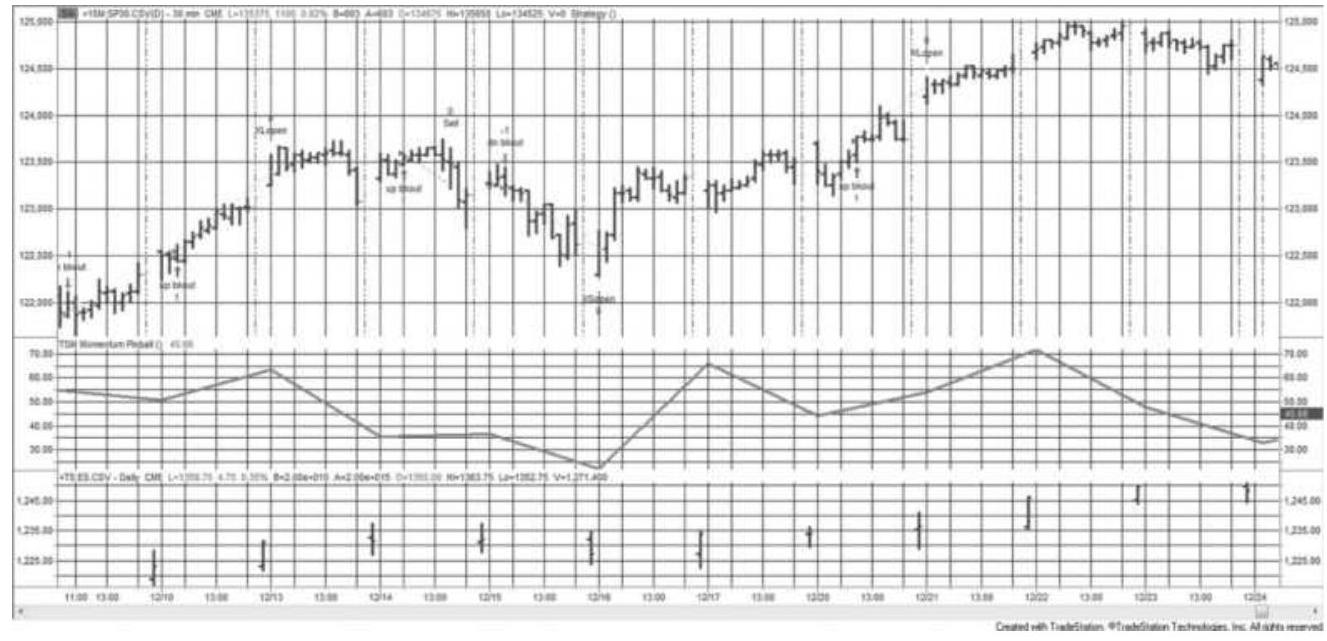

FIGURE 9.12 John Ehlers' SwamiChart showing S\&P price using 15 -minute bars a...

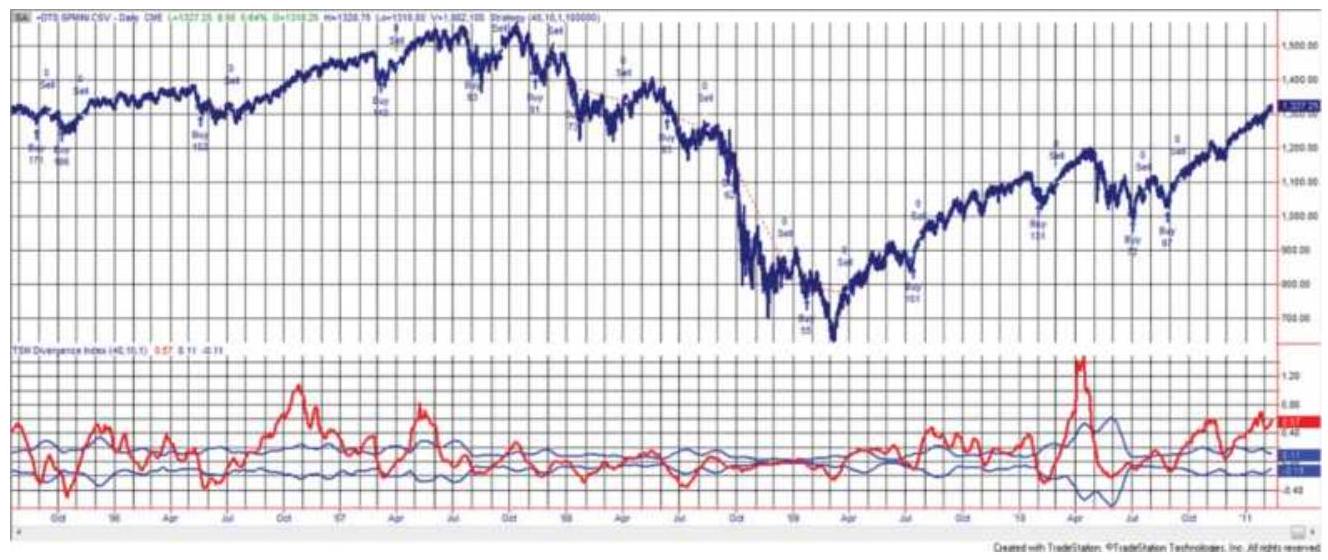

FIGURE 9.13 RSI bottom and top formations using a 20-day RSI applied to SPY....

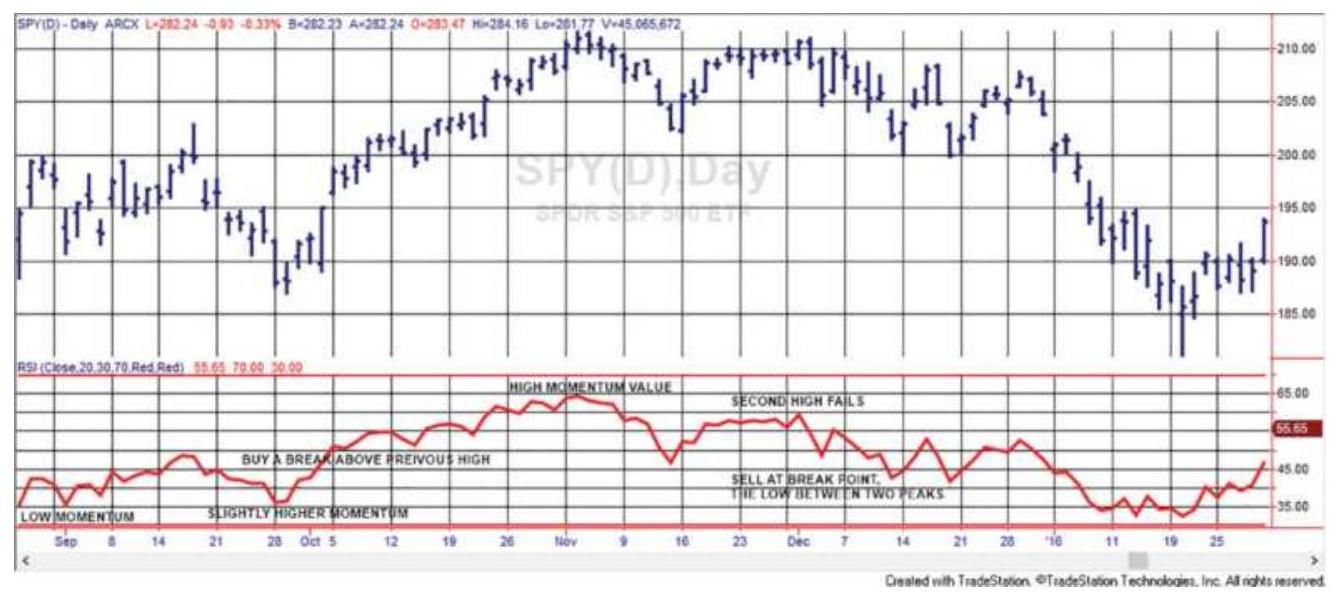

FIGURE 9.14 Performance of the 2-day RSI, MarketSci blog's favorite oscillat...

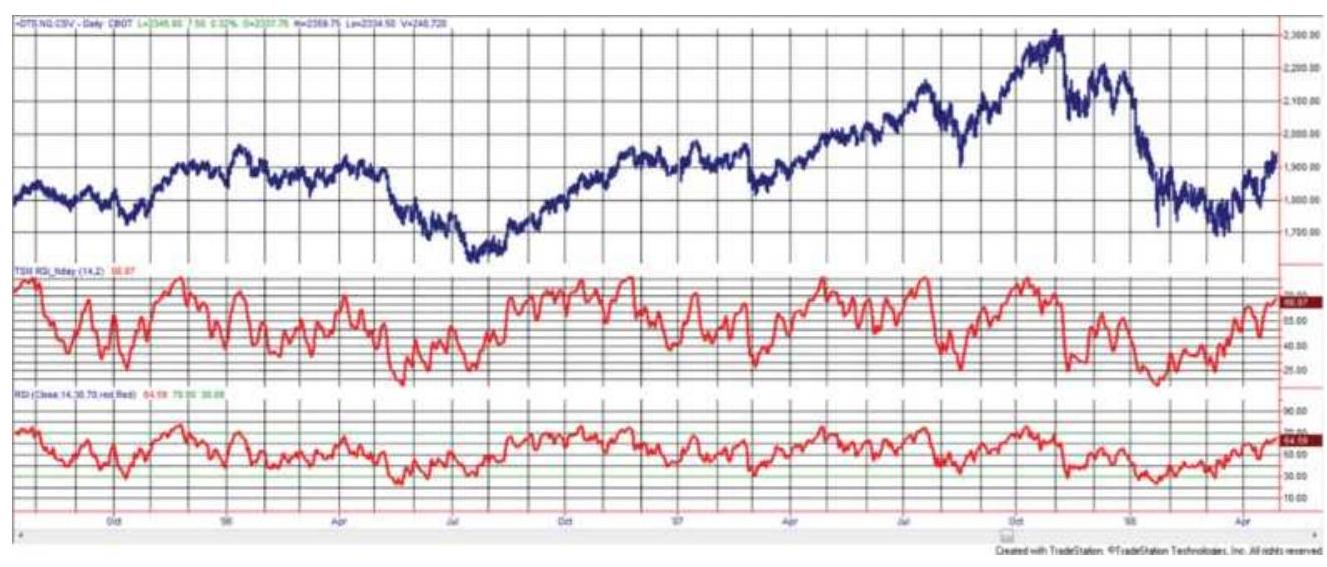

FIGURE 9.15 2-Period RSI (center panel) compared with the traditional 14-day...

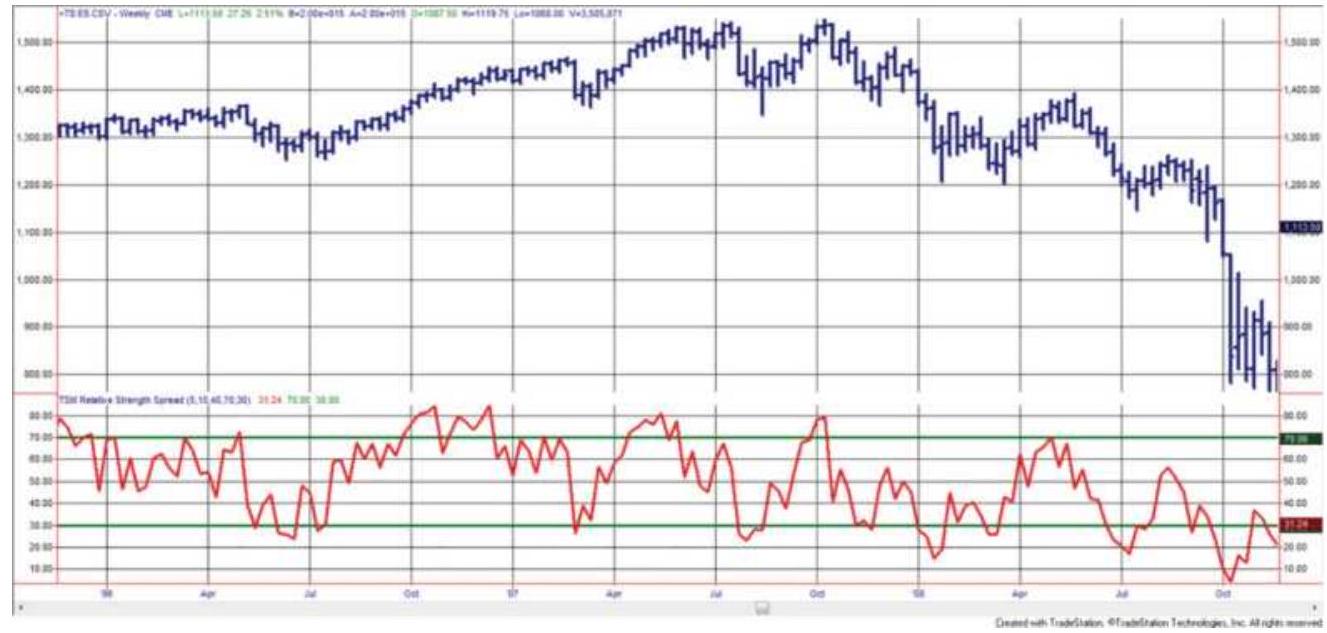

FIGURE 9.16 RSI applied to the spread between a 10 -week and 40 -week moving a...

FIGURE 9.17 Comparison of simple

momentum, RSI, and stochastic, all for 14-d...

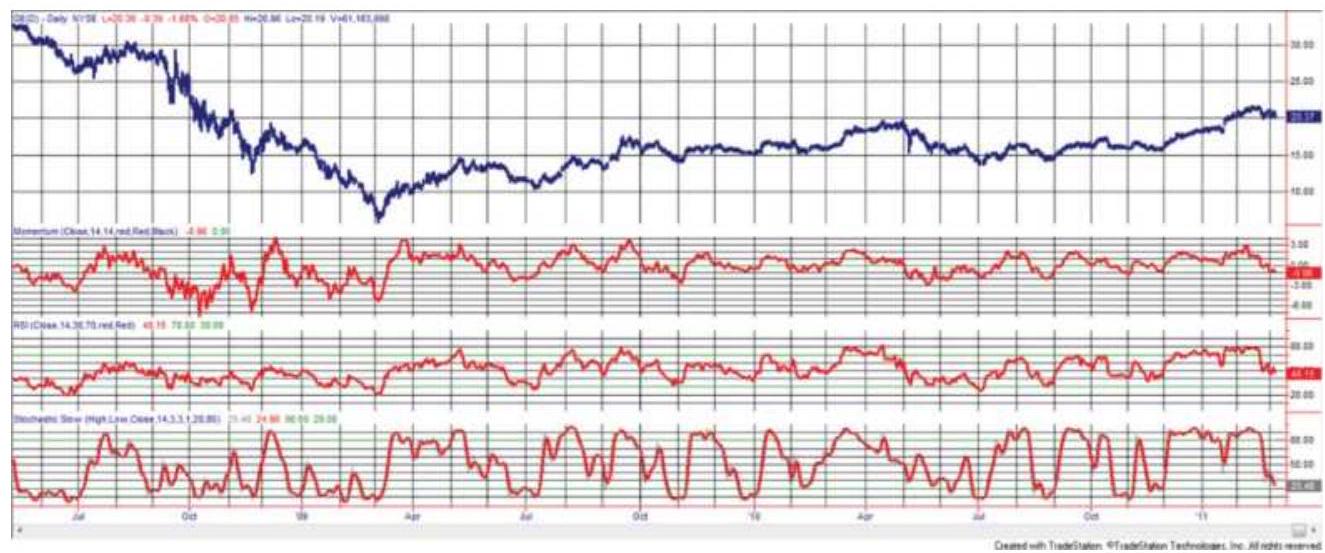

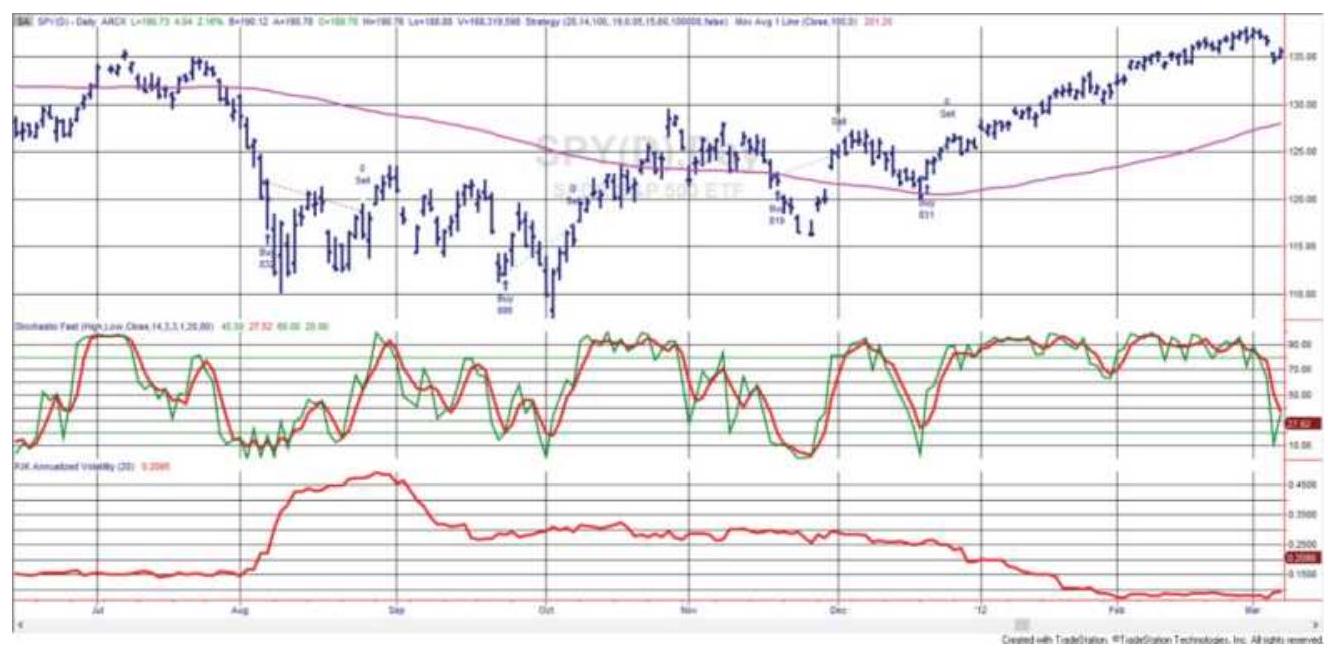

FIGURE 9.18 20-Day stochastic (bottom) and a 6o-day moving average for the S...

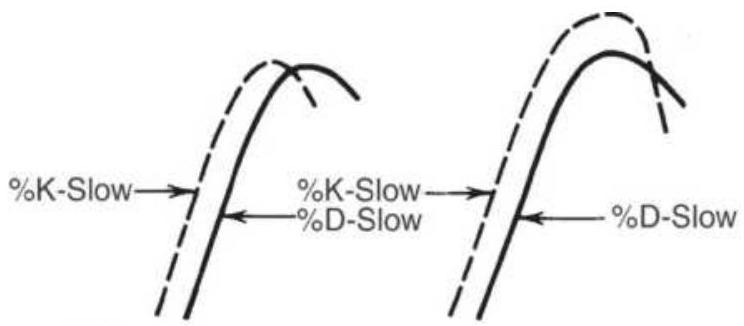

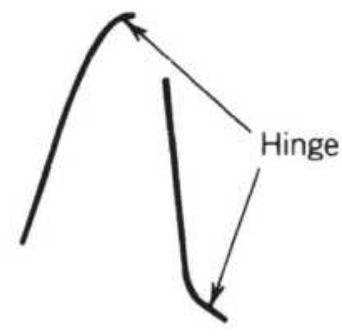

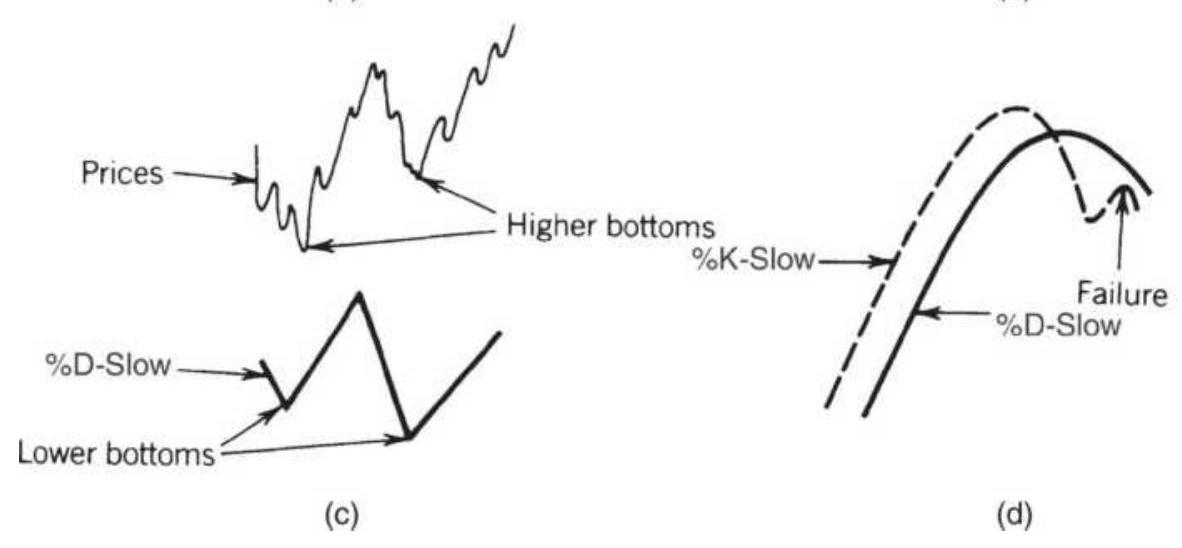

FIGURE 9.19 Lane's patterns. (a) Left and right

crossings. (b) Hinge. (c) Be...

\section*{FIGURE 9.20 Williams' A/D Oscillator.}

FIGURE 9.21 Williams' Ultimate Oscillator.

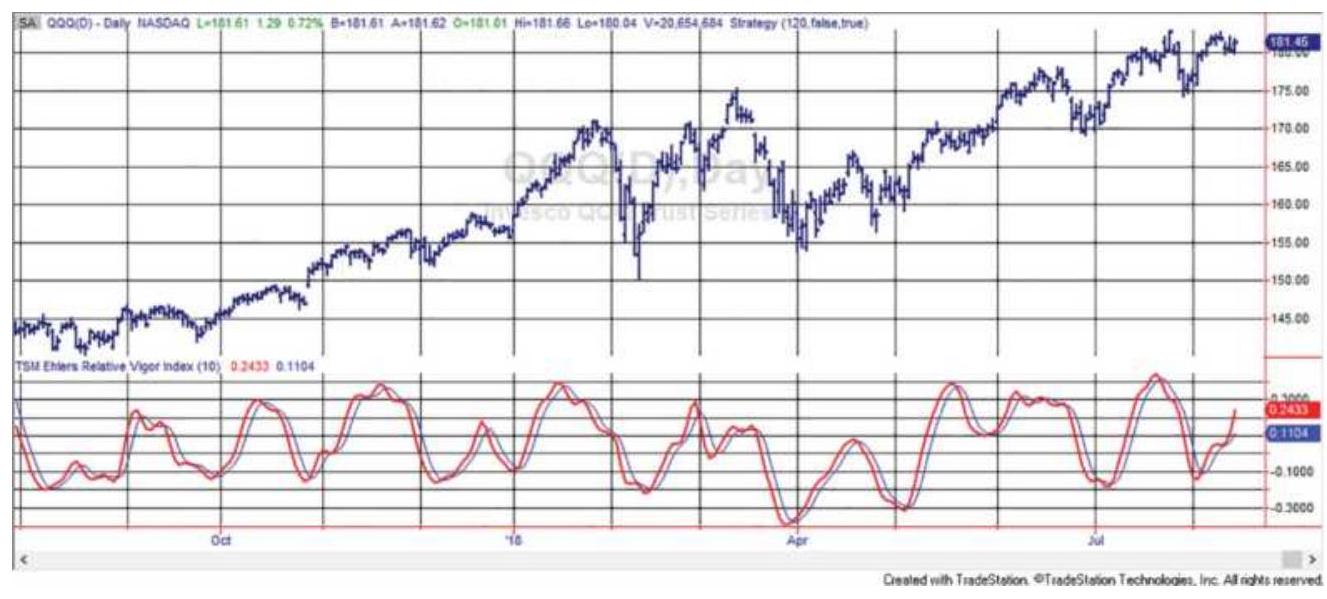

FIGURE 9.22 Ehlers' Relative Vigor Index for QQQ.

FIGURE 9.23 Comparing the TSI with 10-2020 smoothing (bottom) to a standard...

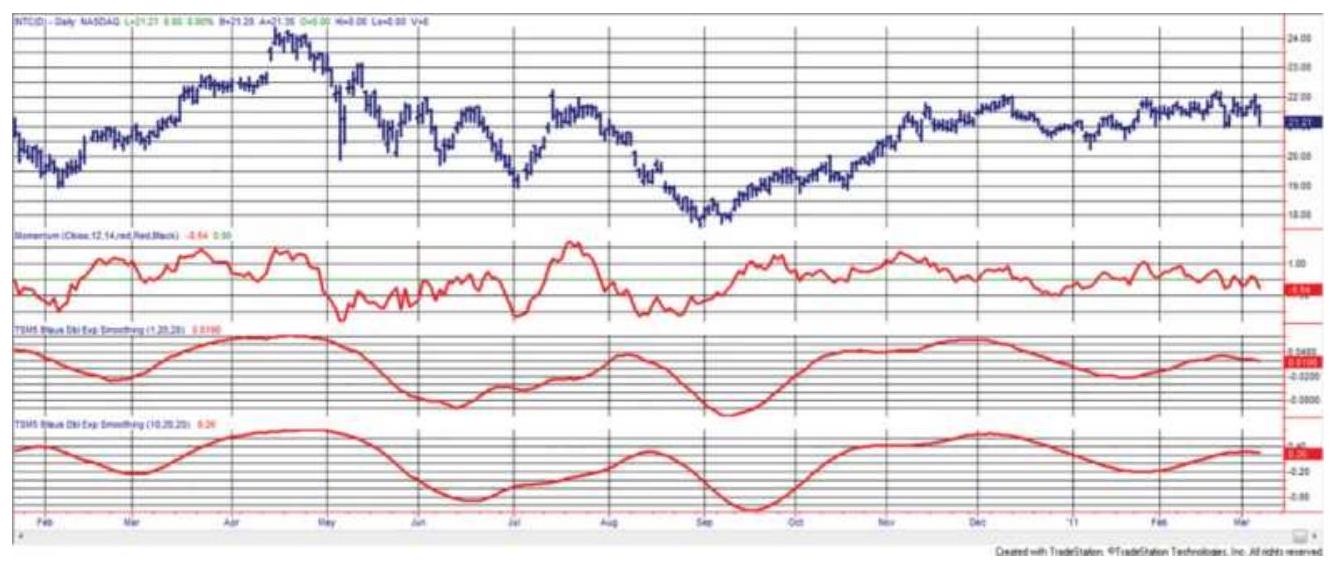

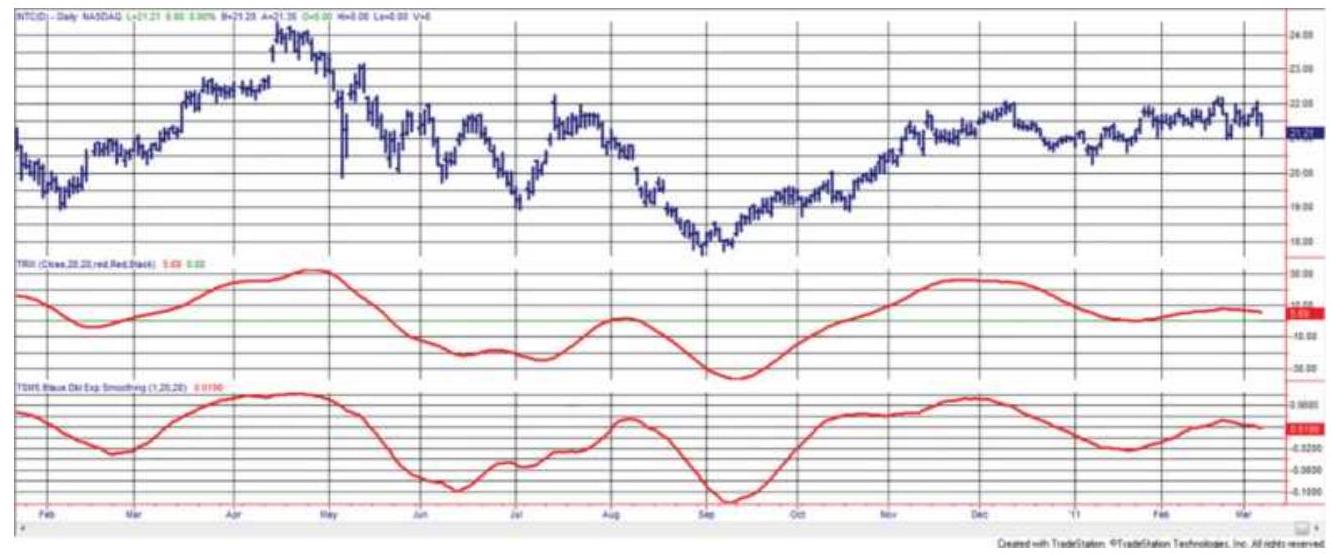

FIGURE 9.24 Comparison of TRIX (center panel) and TSI (lower panel) using tw...

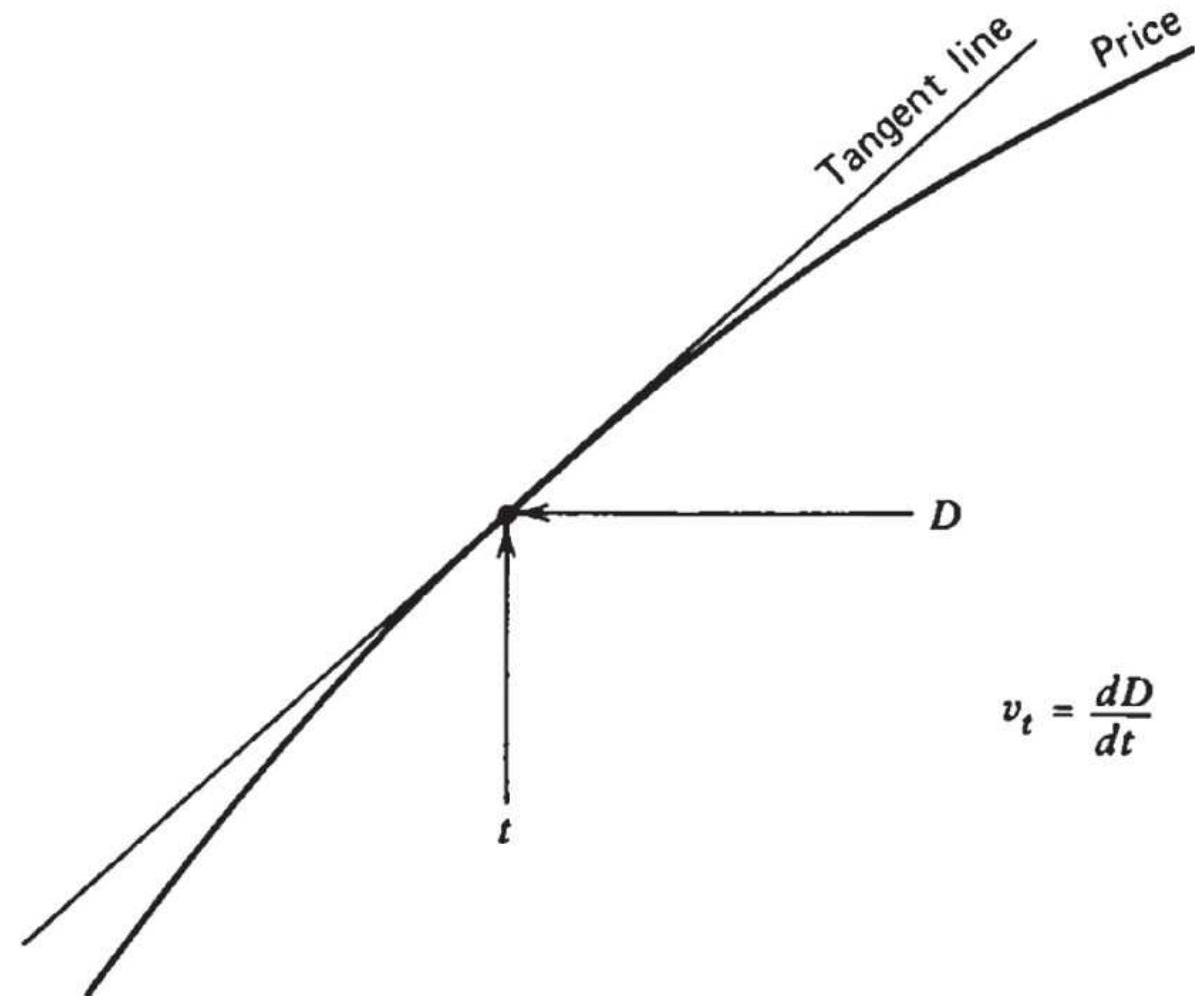

FIGURE 9.25 (a) Average velocity. (b)

Instantaneous velocity.

FIGURE 9.26 SPY prices (top) with first differences (center) and second diff...

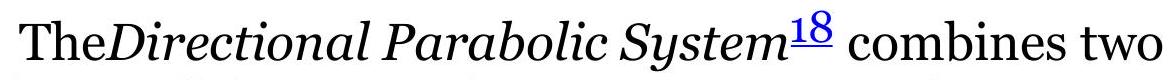

FIGURE 9.27 The Parabolic Stop-and-Reverse, similar to the Direction Parabol...

FIGURE 9.28 Momentum divergence.

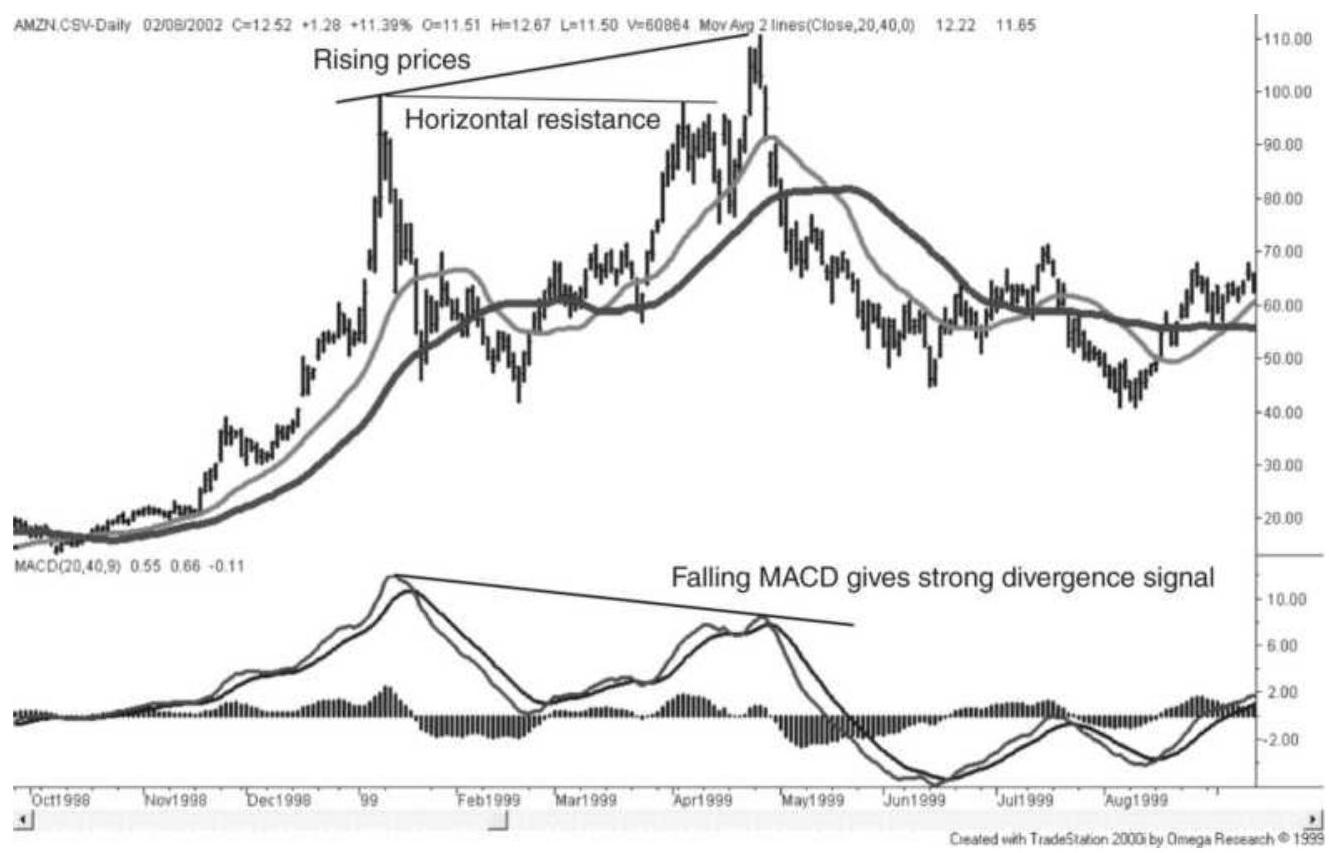

FIGURE 9.29 An example of divergence in Amazon.com.

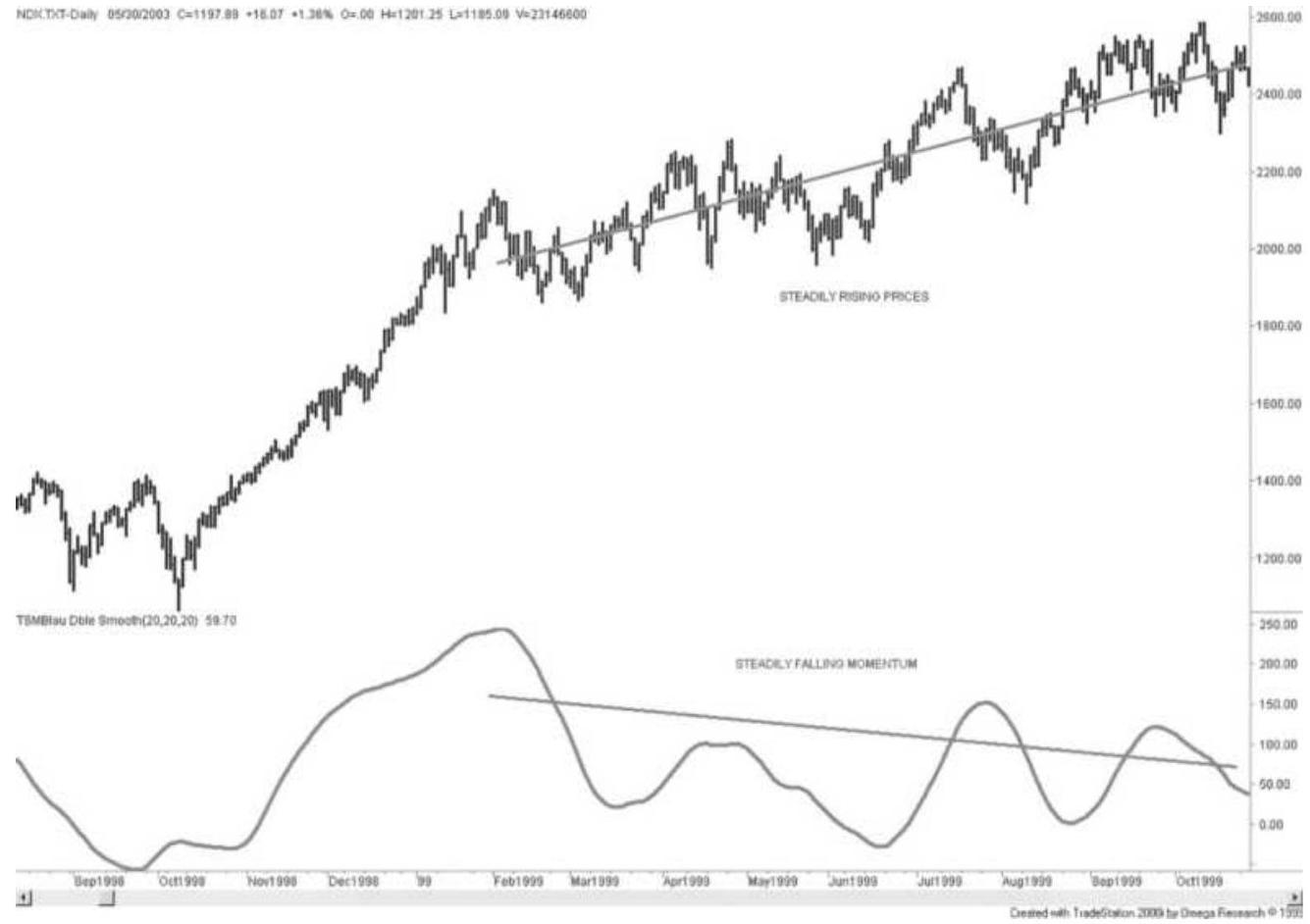

FIGURE 9.30 Slope divergence of NASDAQ 100 using double smoothing.

Chapter 10

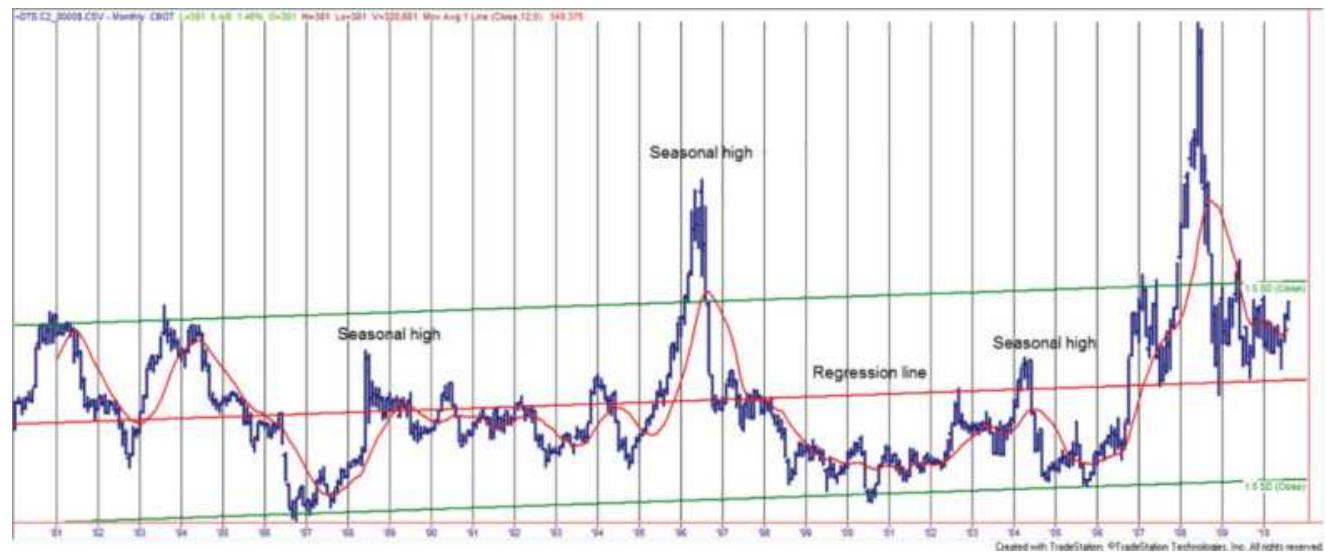

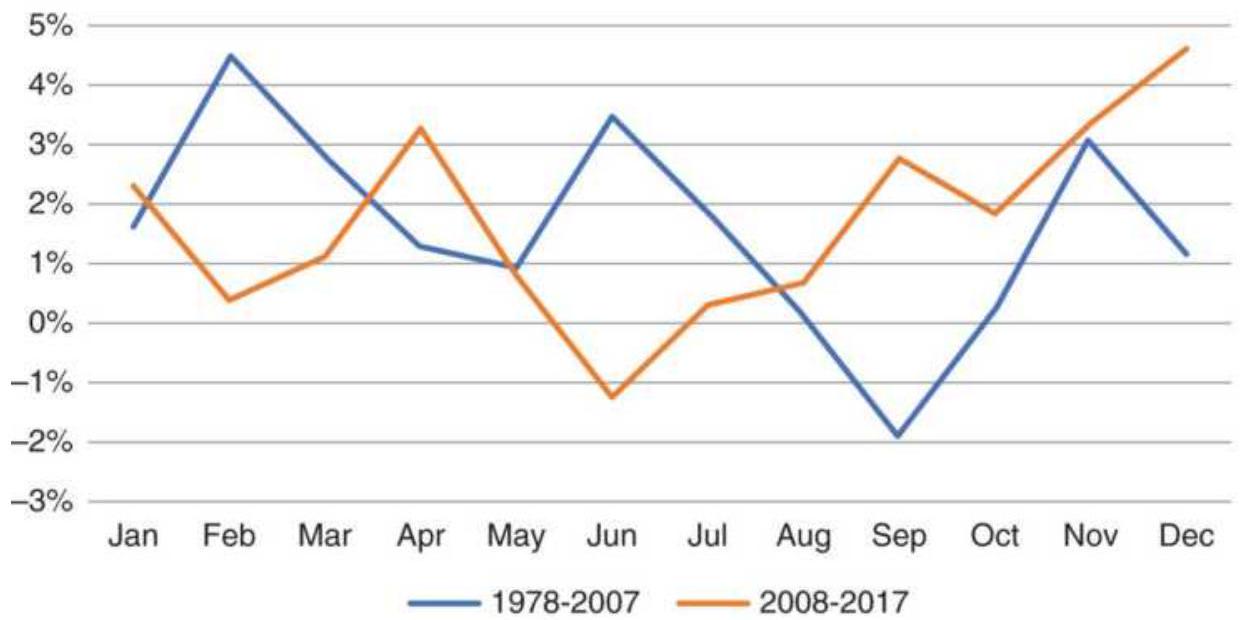

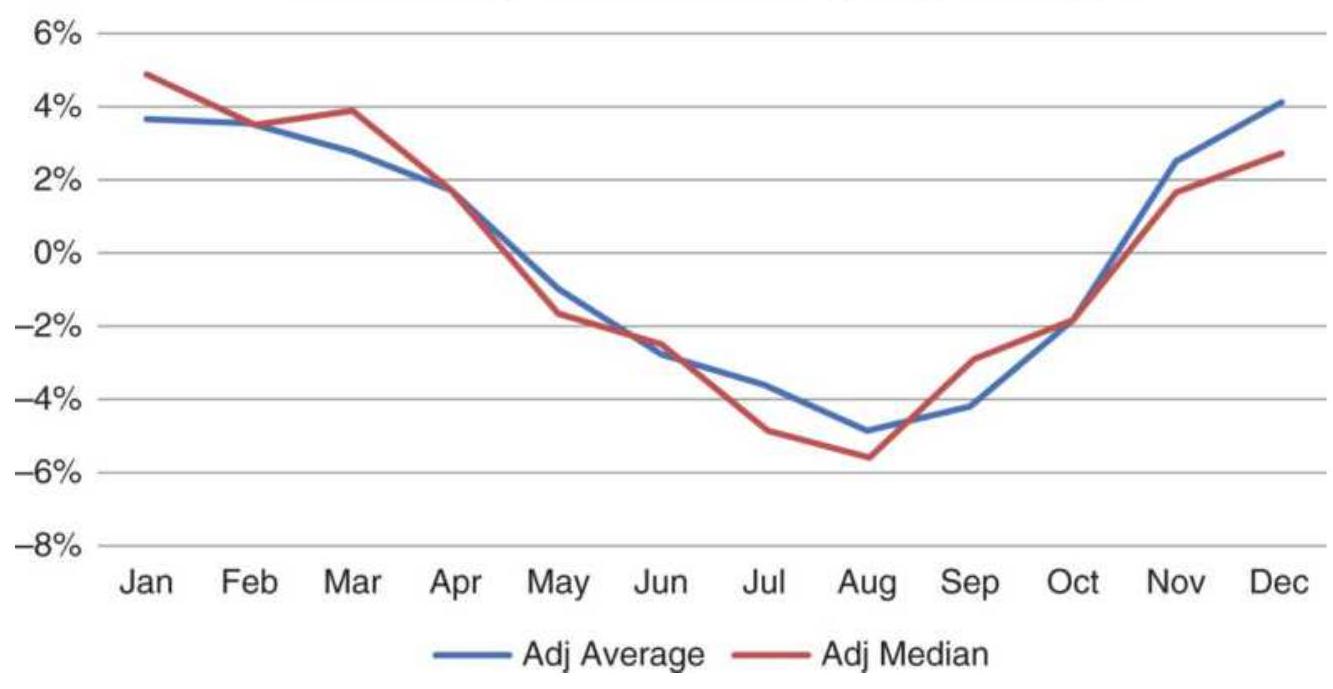

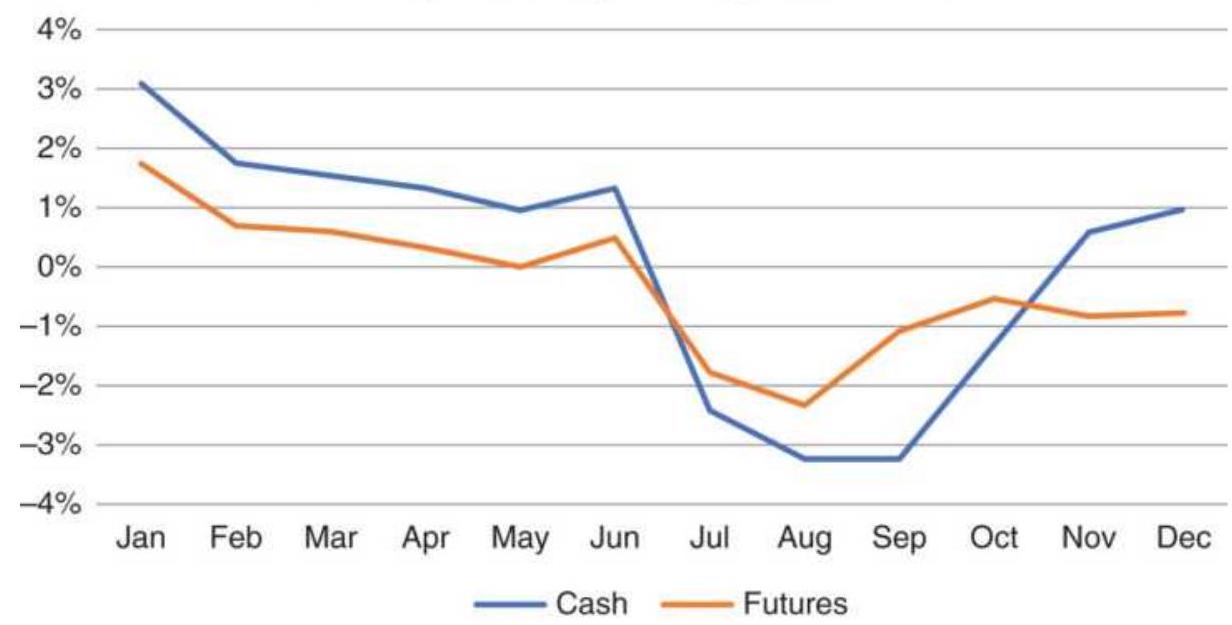

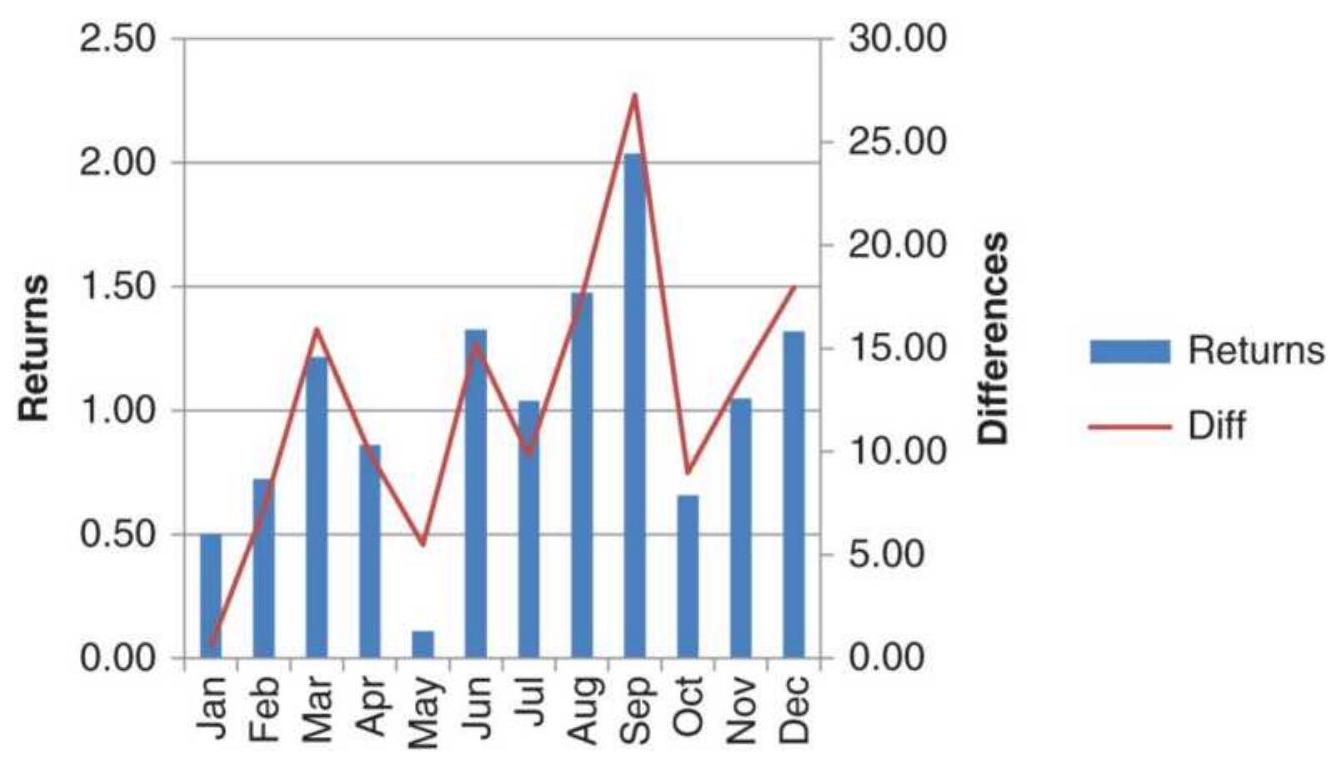

FIGURE 10.1 A classic chart of wheat seasonality using cash prices, 1978-201...

FIGURE 10.2 Wheat seasonality from backadjusted futures, using differences....

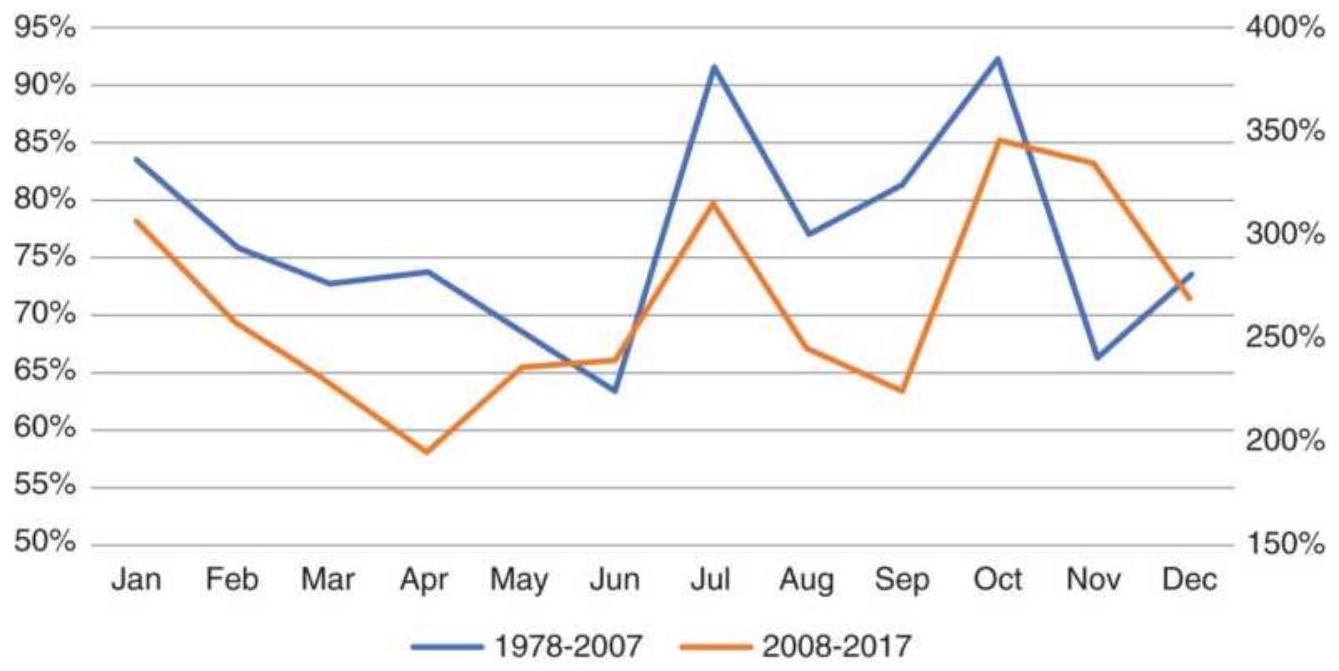

FIGURE 10.3 Percentage of profitable years for cash wheat.

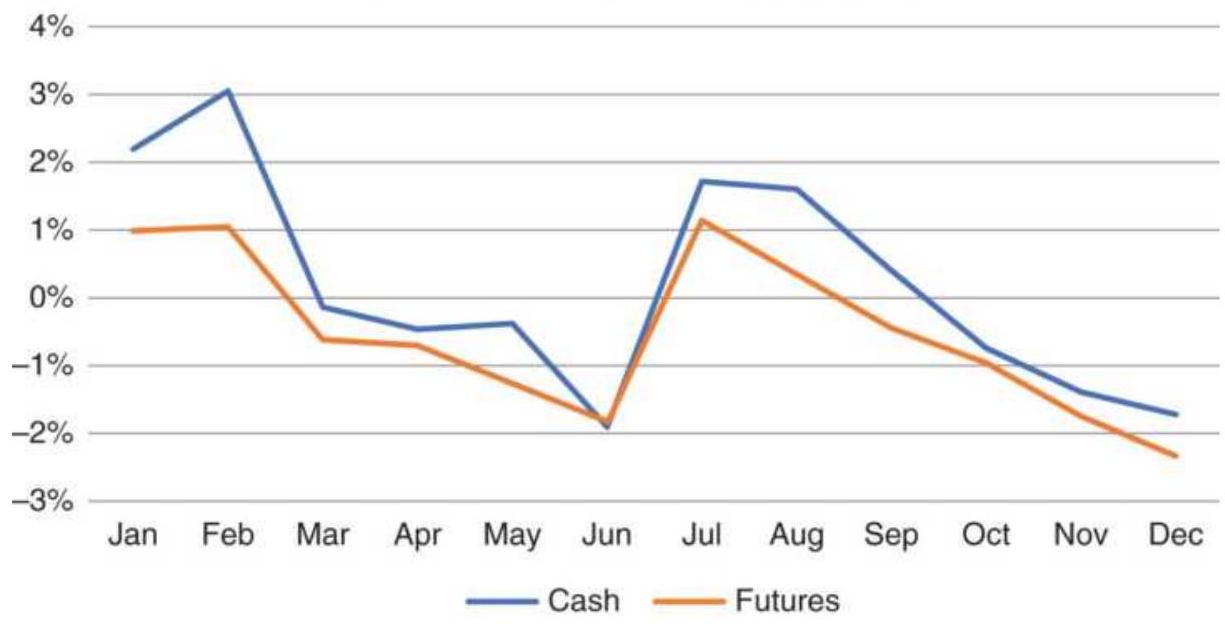

FIGURE 10.4 (a) Cash corn average monthly returns, 1978-2017. (b) Percentage...

FIGURE 10.5 (a) Cash cotton average monthly returns, 1978-2017. (b) Percenta...

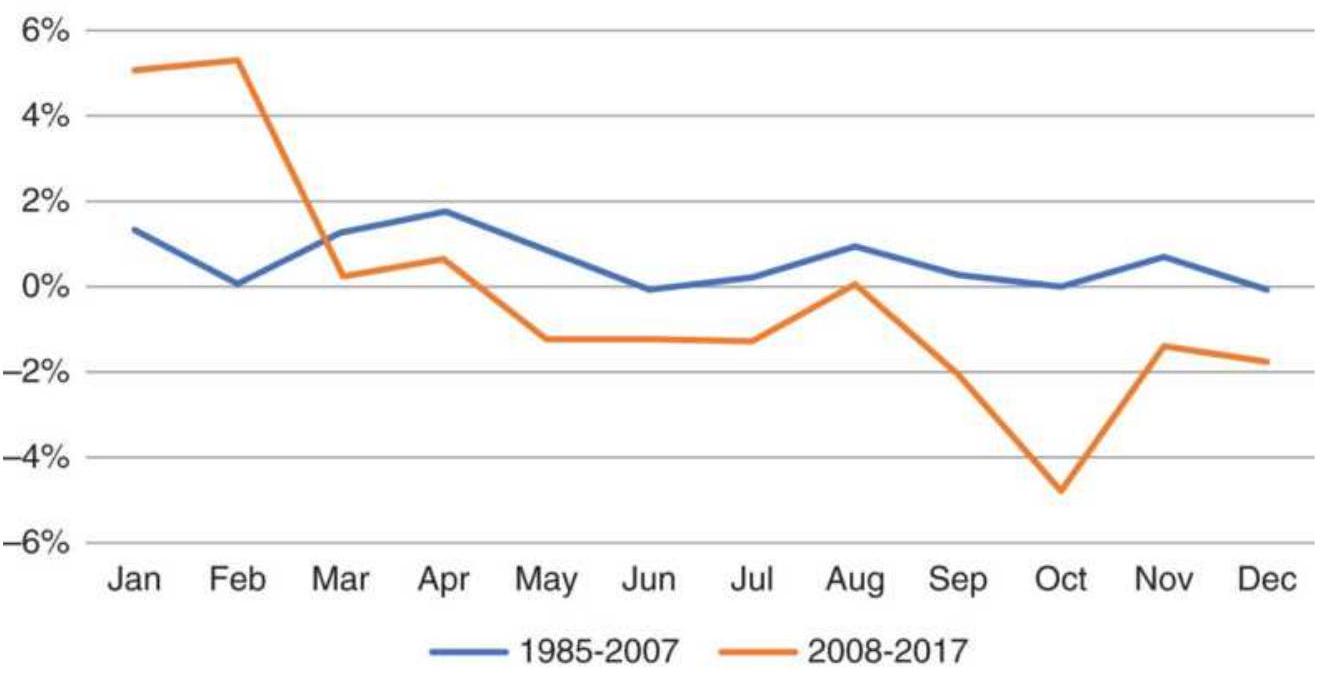

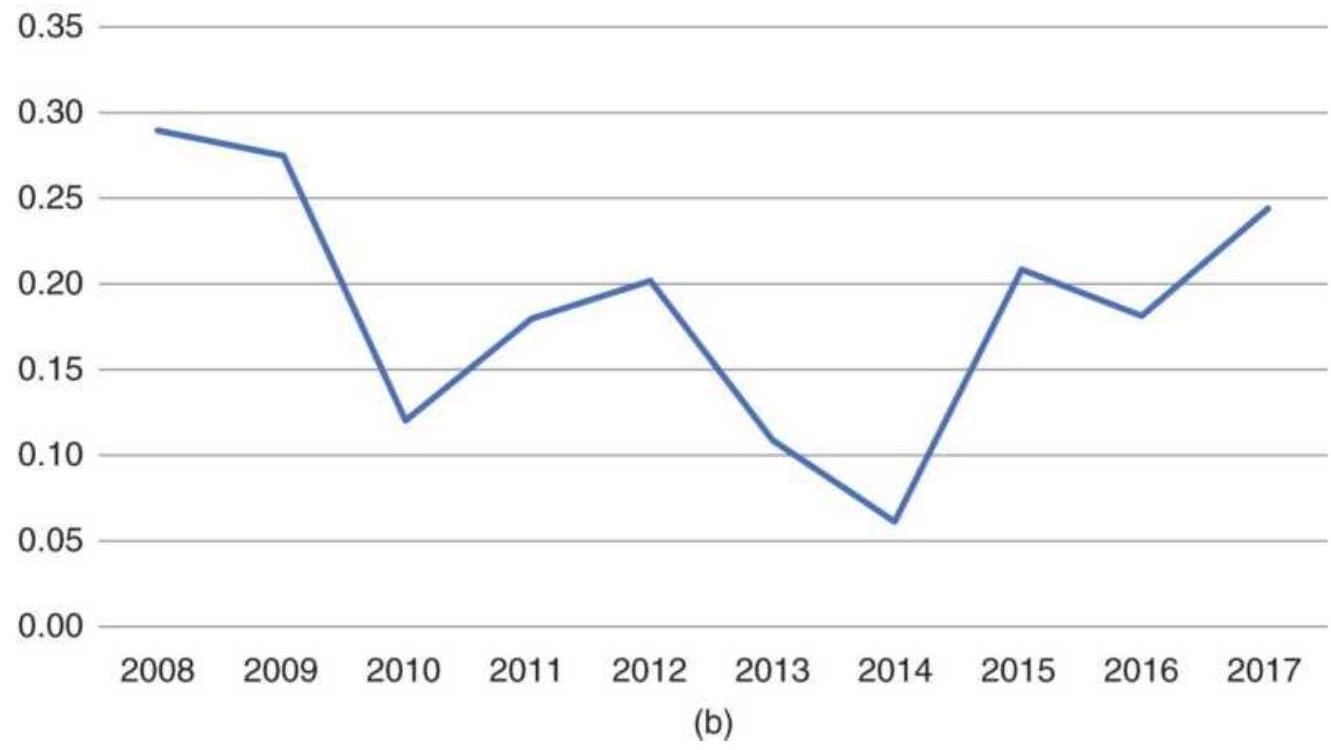

FIGURE 10.6 (a) Unleaded gasoline, average yearly percentage change, 1985-20...

FIGURE 10.7 Comparison of heating oil monthly returns, with and without, 200...

FIGURE 10.8 (a) Cotton average monthly returns. (b) High-low price range, in...

FIGURE 10.9 Cotton percentage monthly changes, sorted highest to lowest.

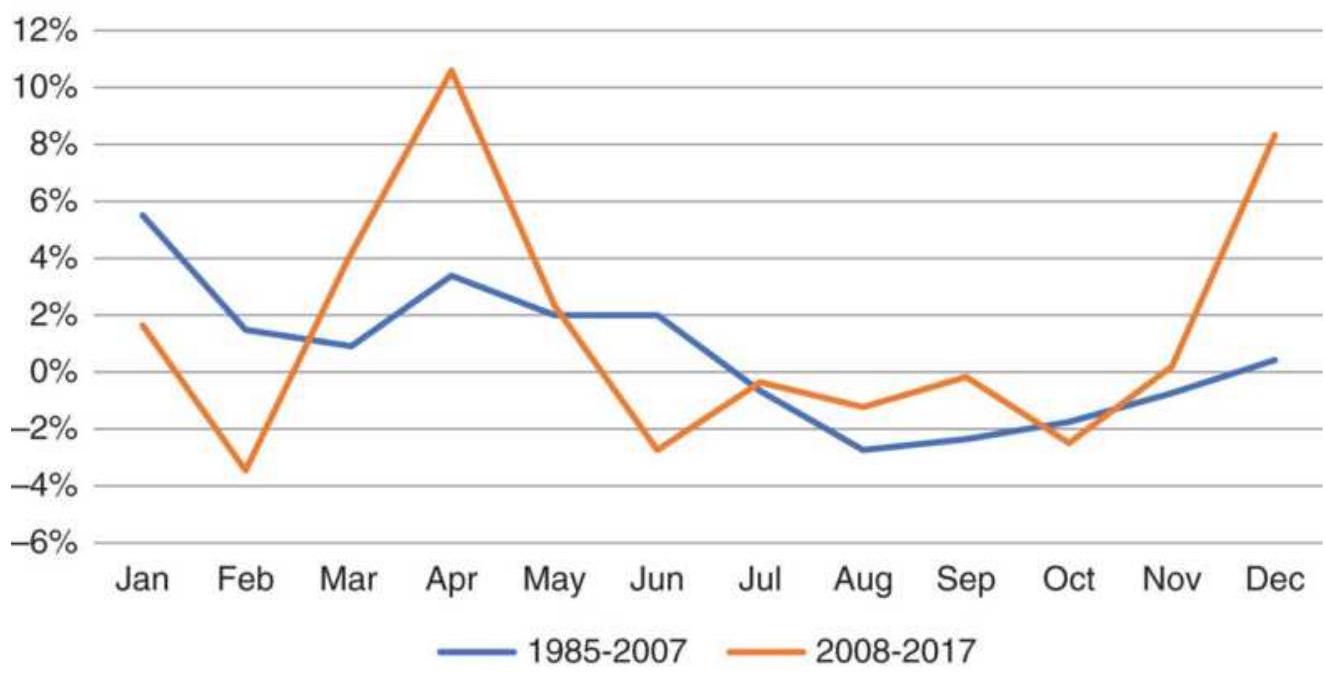

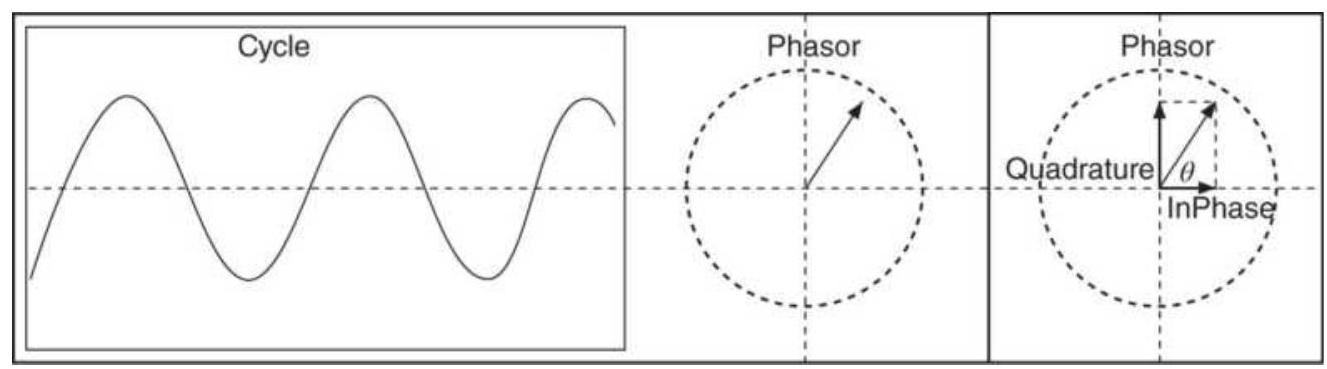

FIGURE 10.10 (a) Average returns by month for Southwest Airlines (LUV). (b) ...

FIGURE 10.11 (a) Amazon average returns by month. (b) eBay average returns b...

FIGURE 10.12 Comparing the change in percentage of positive years for Amazon... FIGURE 10.13 Platinum average returns by month shows much greater demand in ...

FIGURE 10.14 Ford returns, during the past ten years, spike in the spring an...

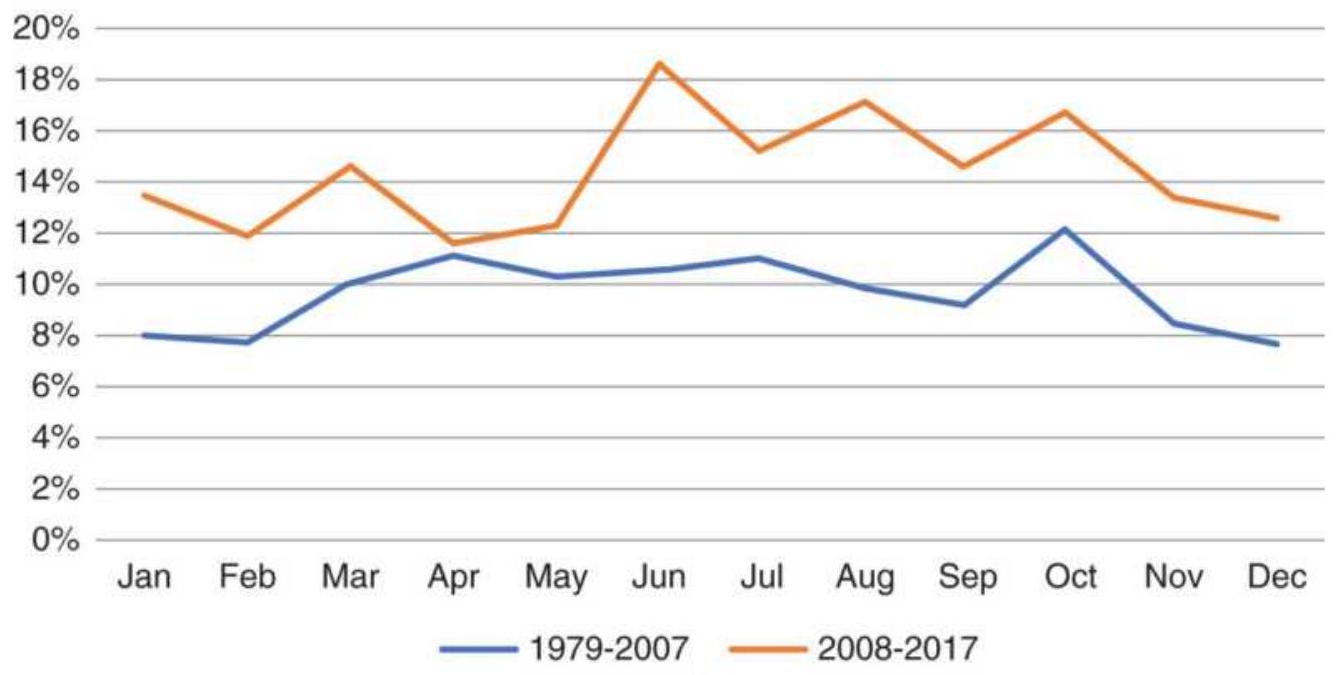

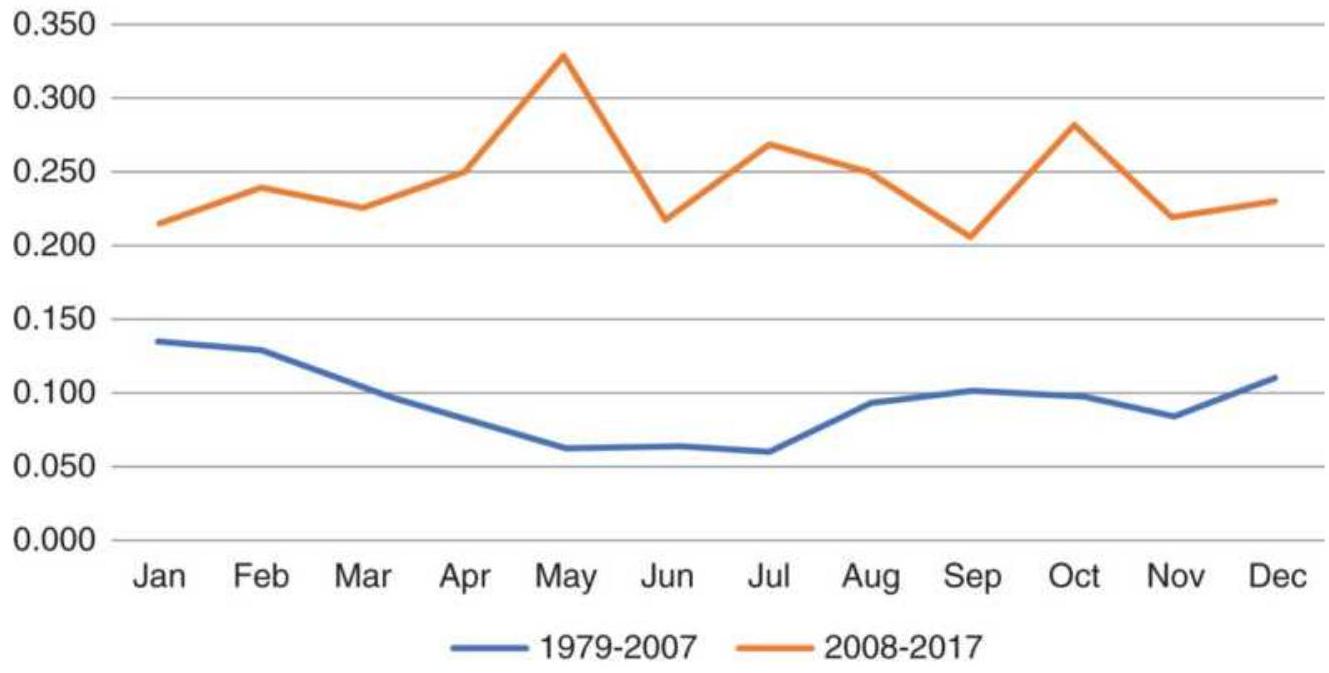

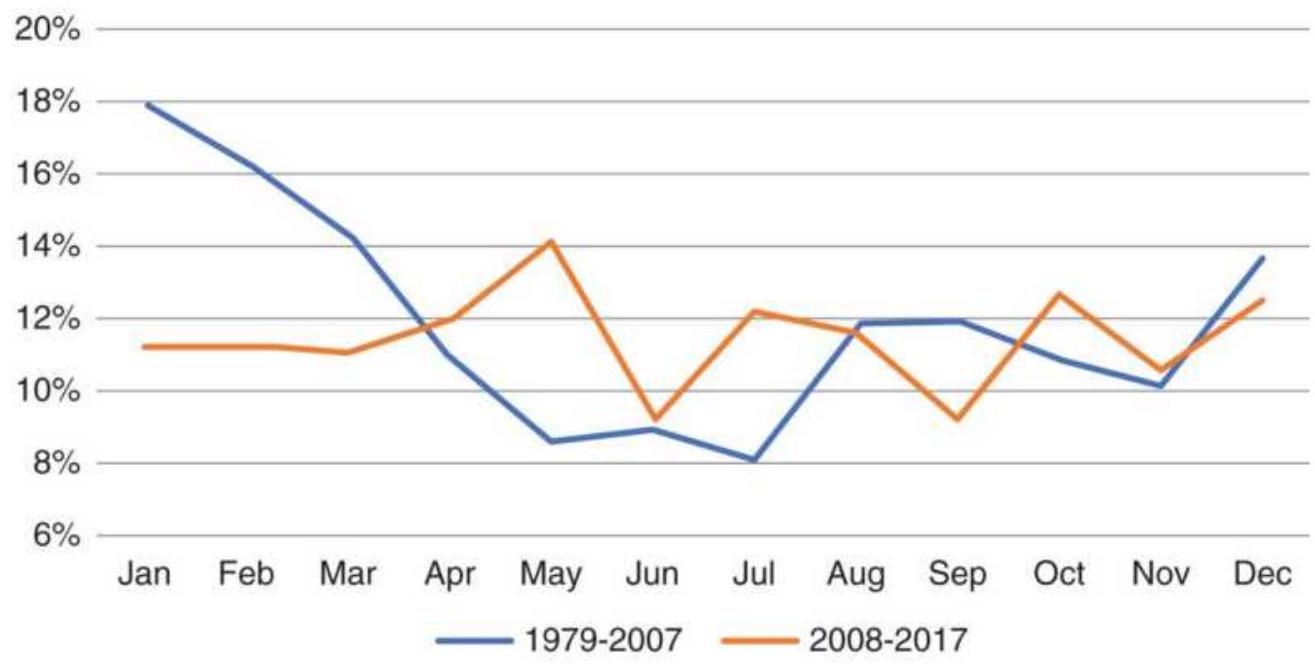

FIGURE 10.15 (a) Cash wheat volatility as a percentage of price. (b) Wheat v...

FIGURE 10.16 Southwest Airlines (LUV) (a) average monthly returns, and (b) a...

FIGURE 10.17 Heating oil volatility (a) in cents/barrel, and (b) as a percen...

FIGURE 10.18 (a) Amazon price minus a 12month moving average lagged 6 month...

FIGURE 10.19 (a) Amazon detrended prices using a 12-month MA lagged 6 months...

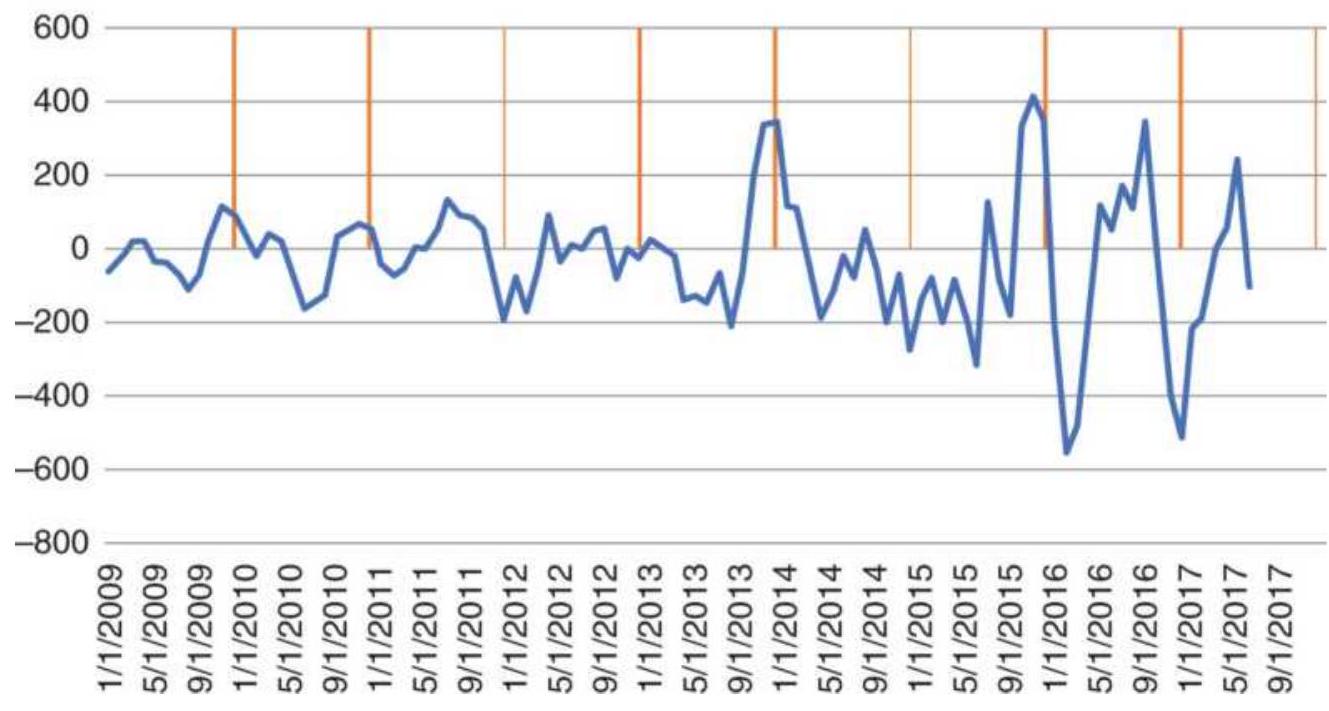

FIGURE 10.20 Amazon price detrended for the period 2009-2017. Vertical lines...

FIGURE 10.21 Seasonal pattern of wheat using the method of yearly averages....

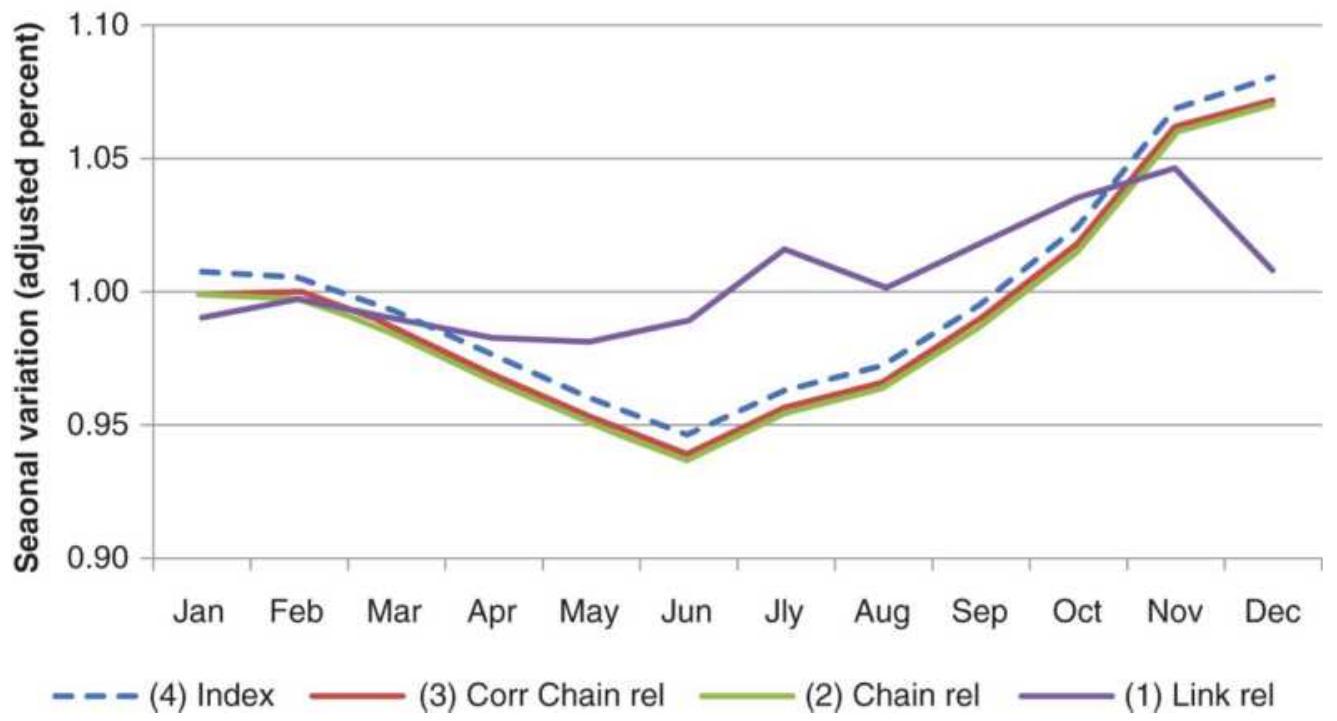

FIGURE 10.22 The four steps in creating link relatives.

FIGURE 10.23 Moving average method for wheat. (a) Moving average through mid...

FIGURE 10.24 Weather sensitivity for soybeans and corn.

FIGURE 10.25 Heating oil cash and futures average monthly returns, 2009-2017...

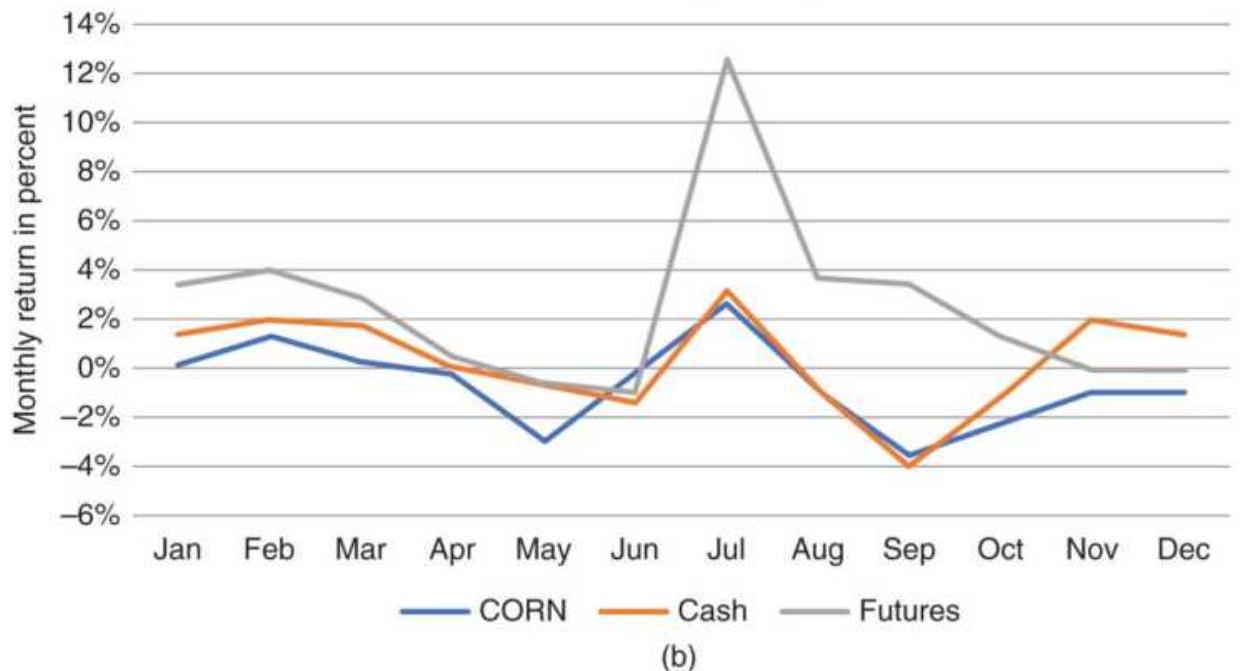

FIGURE 10.26 Cash and futures corn monthly returns, 2008-2017.

FIGURE 10.27 Coffee cash and futures monthly returns, 2008-2017.

FIGURE 10.28 (a) Corn seasonality for different years. (b) Comparison of cor...

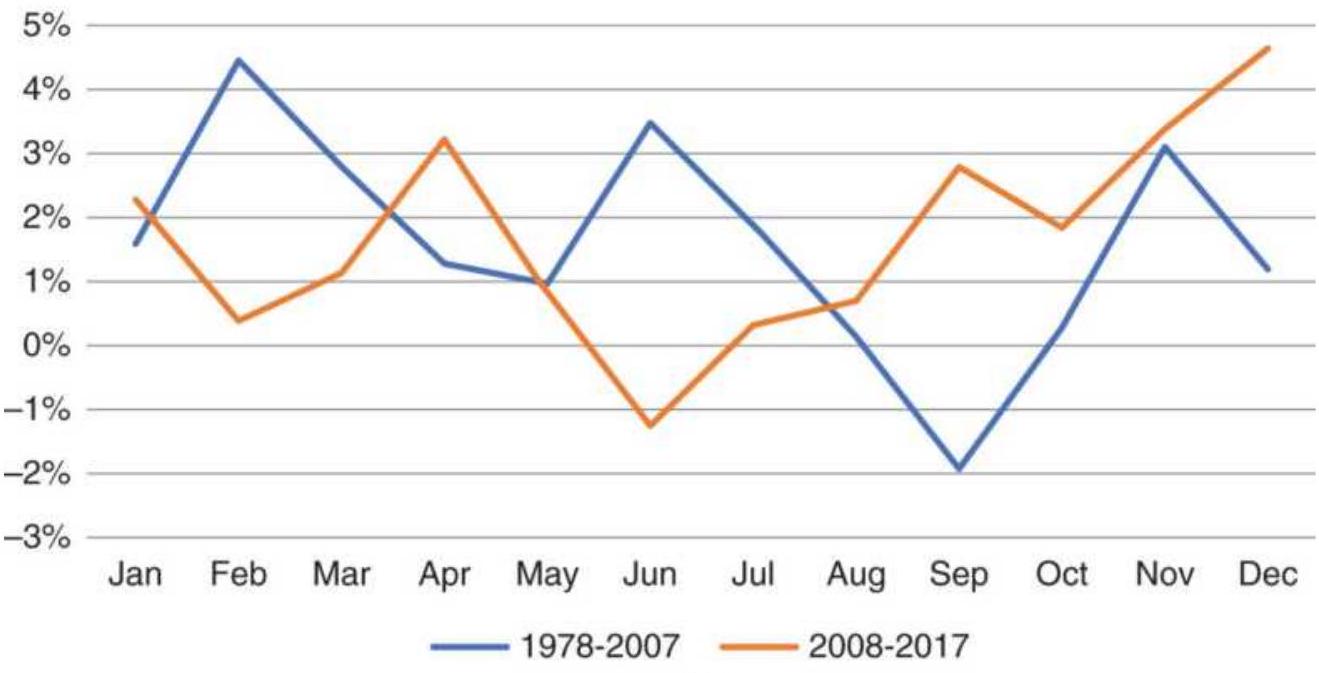

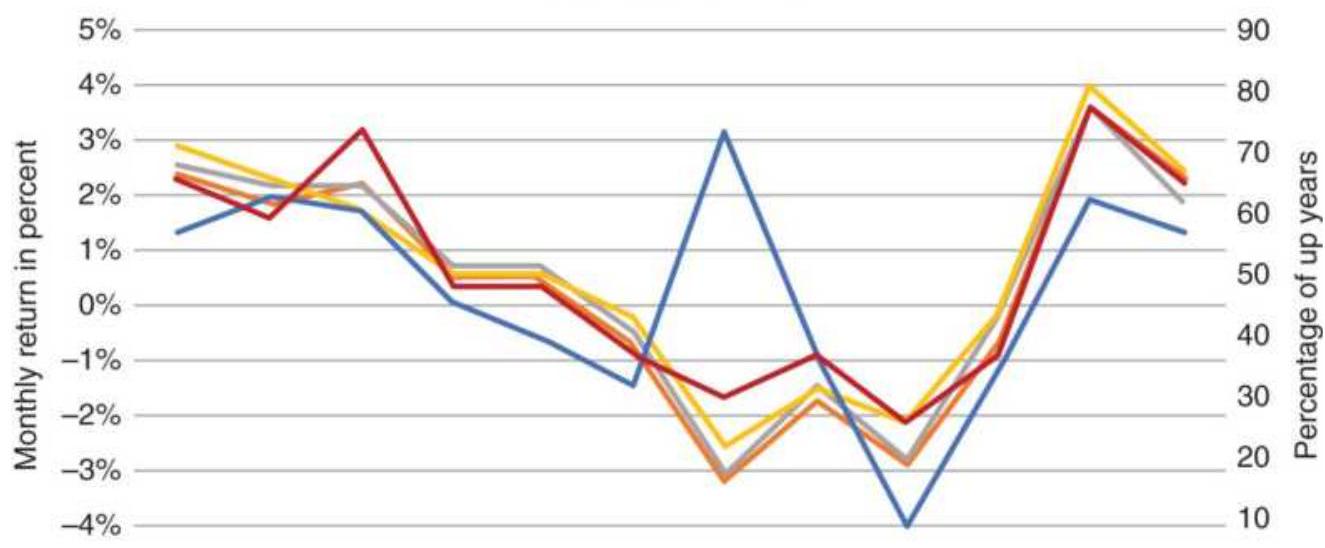

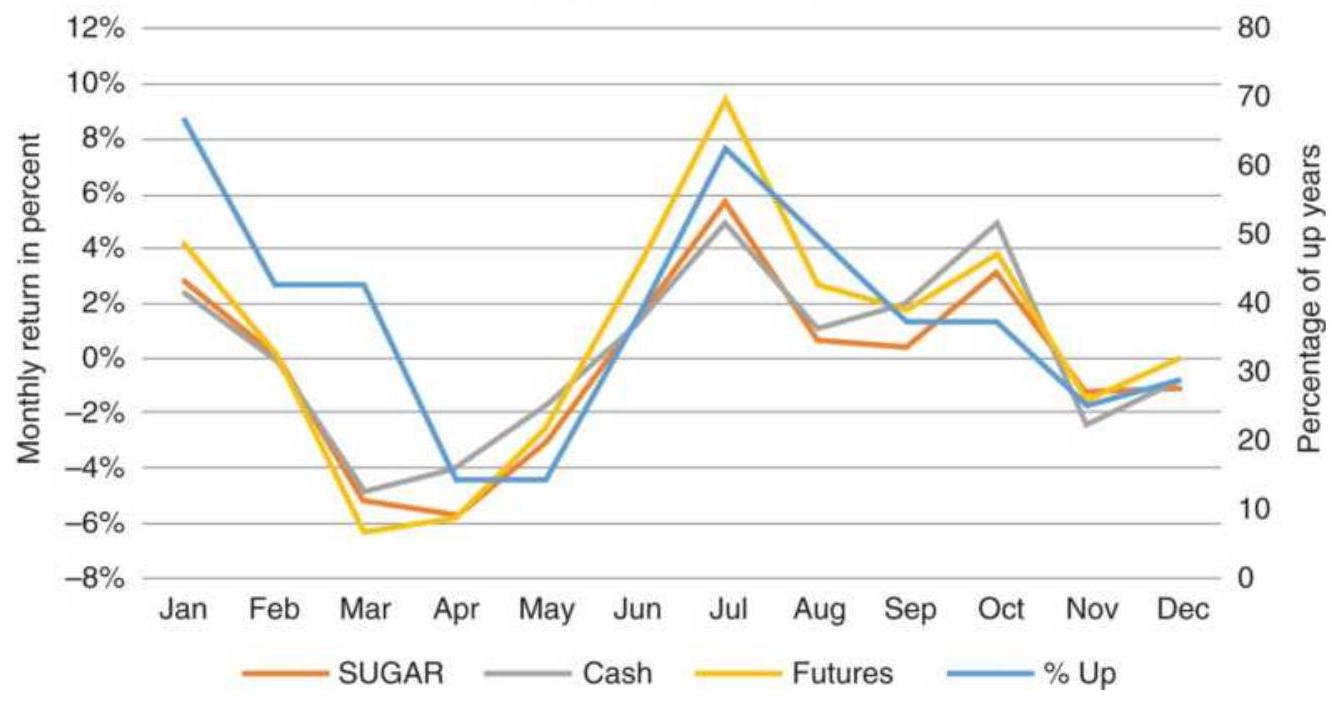

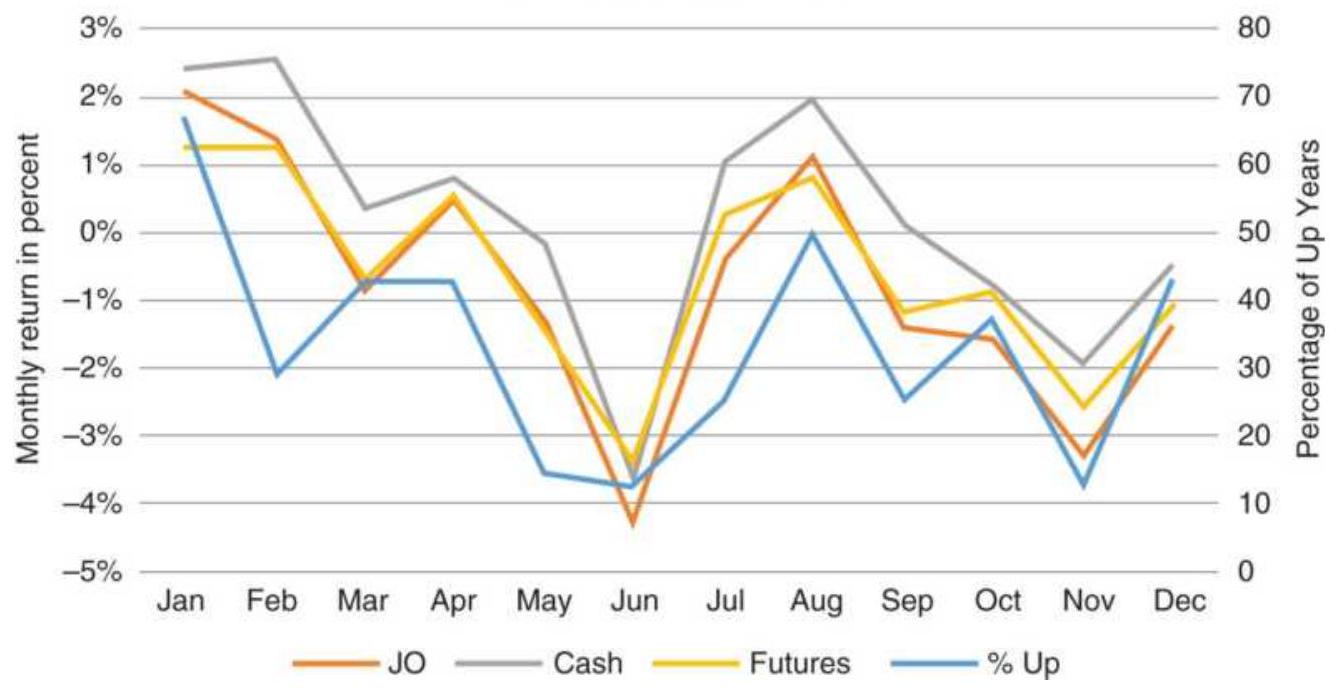

FIGURE 10.29 Comparison of wheat average monthly returns for cash, futures, ...

FIGURE 10.30 Comparison of sugar seasonality, 2008-2015 for cash, futures, a... FIGURE 10.31 Comparison of coffee seasonality using cash, futures, and the E...

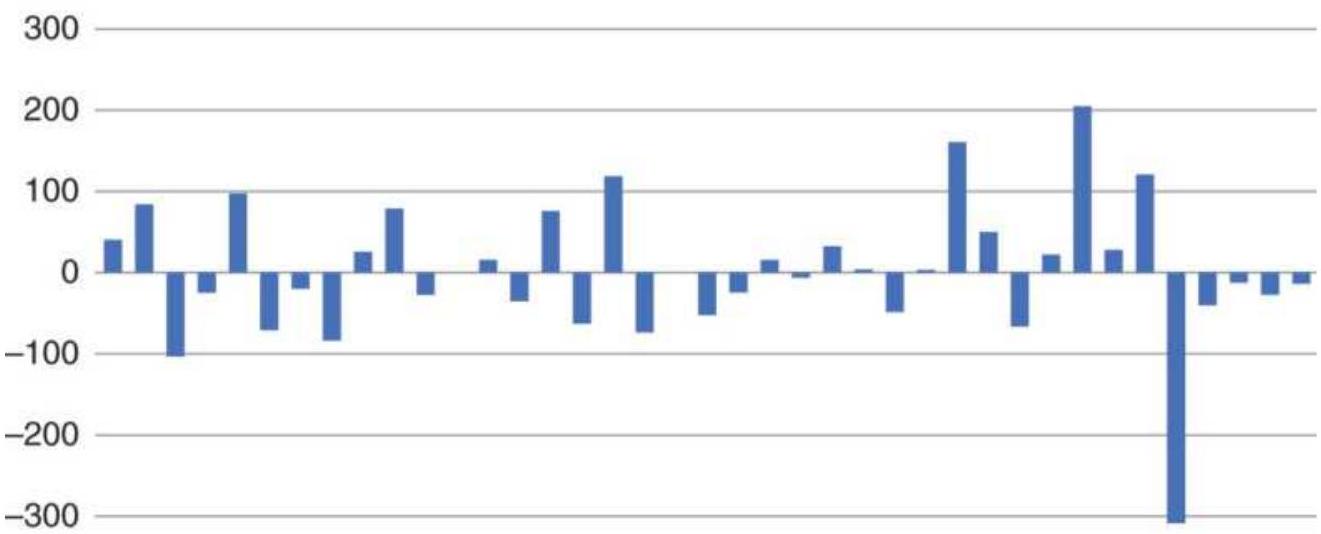

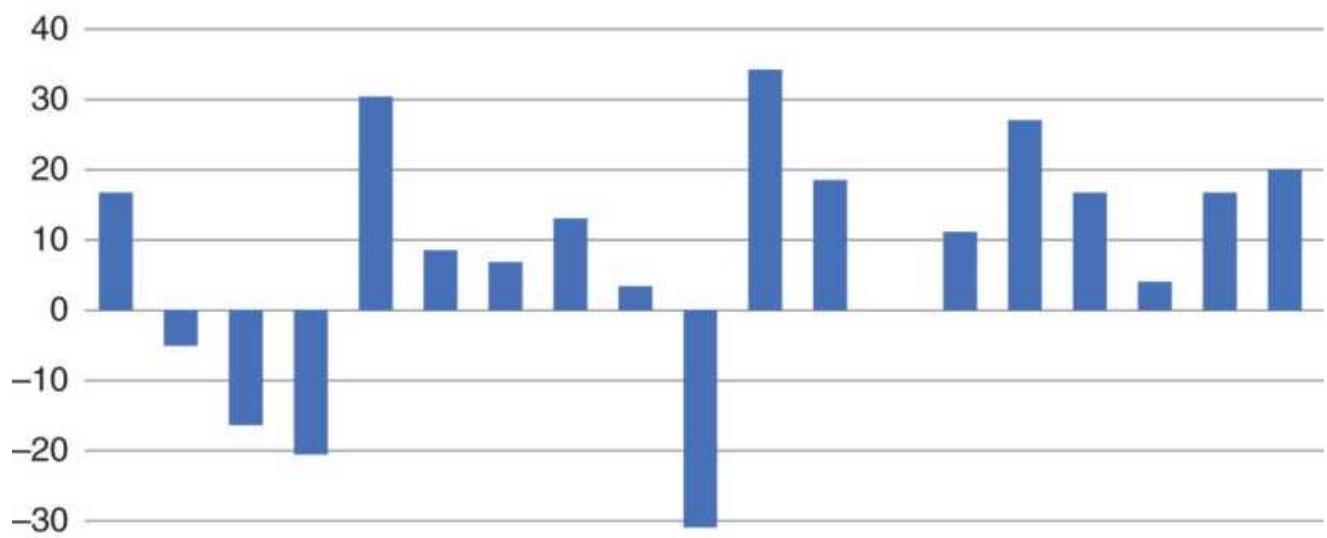

FIGURE 10.32 Corn net returns for the calendar years.

FIGURE 10.33 Entering a mean-reversion trade at the end of January in corn....

FIGURE 10.34 Pattern of bullish and bearish years by month.

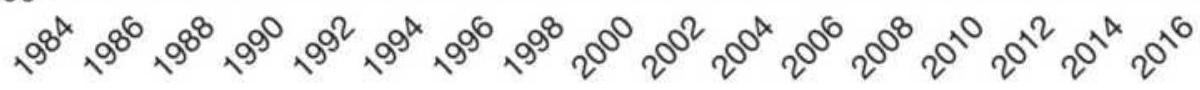

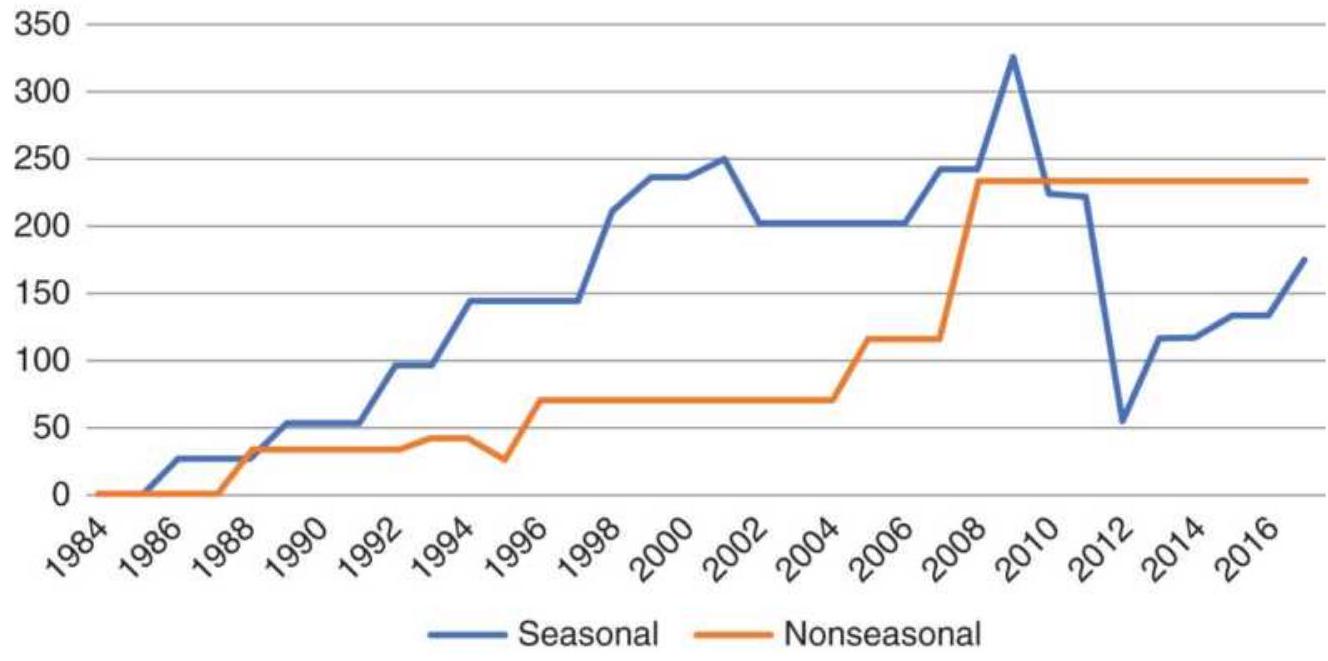

FIGURE 10.35 Results of seasonal and nonseasonal trades using cash corn.

FIGURE 10.36 Applying the cash corn strategies to corn futures.

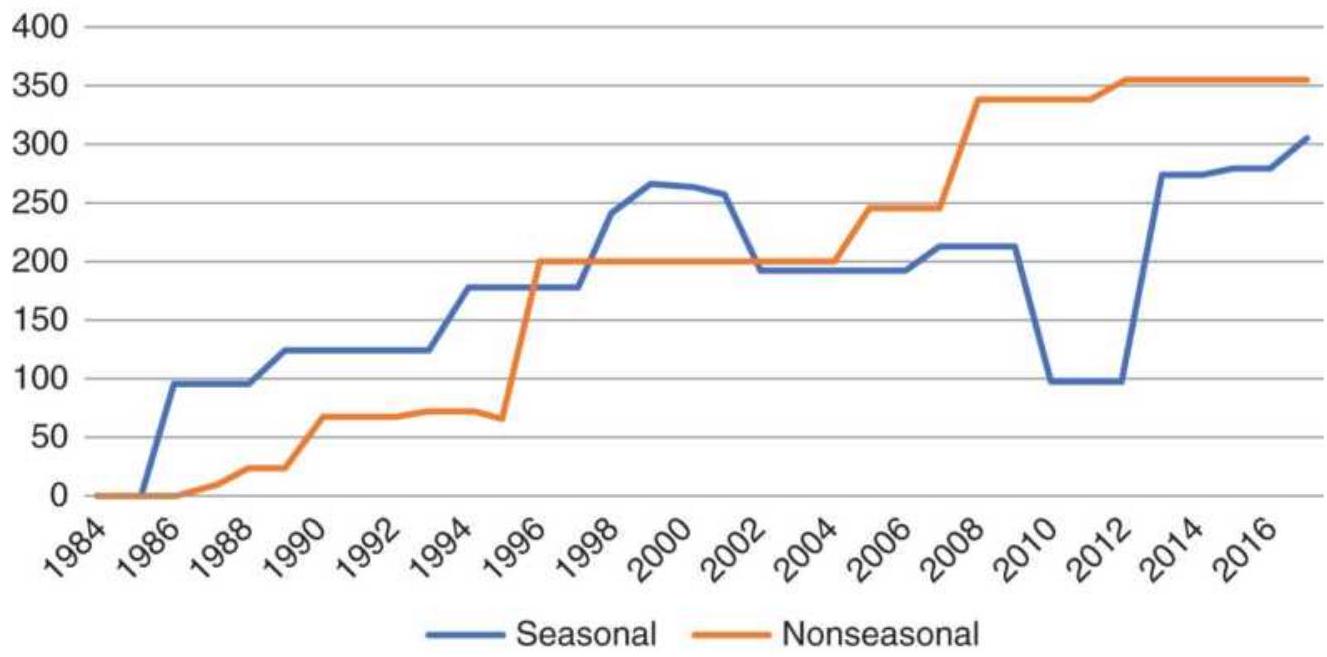

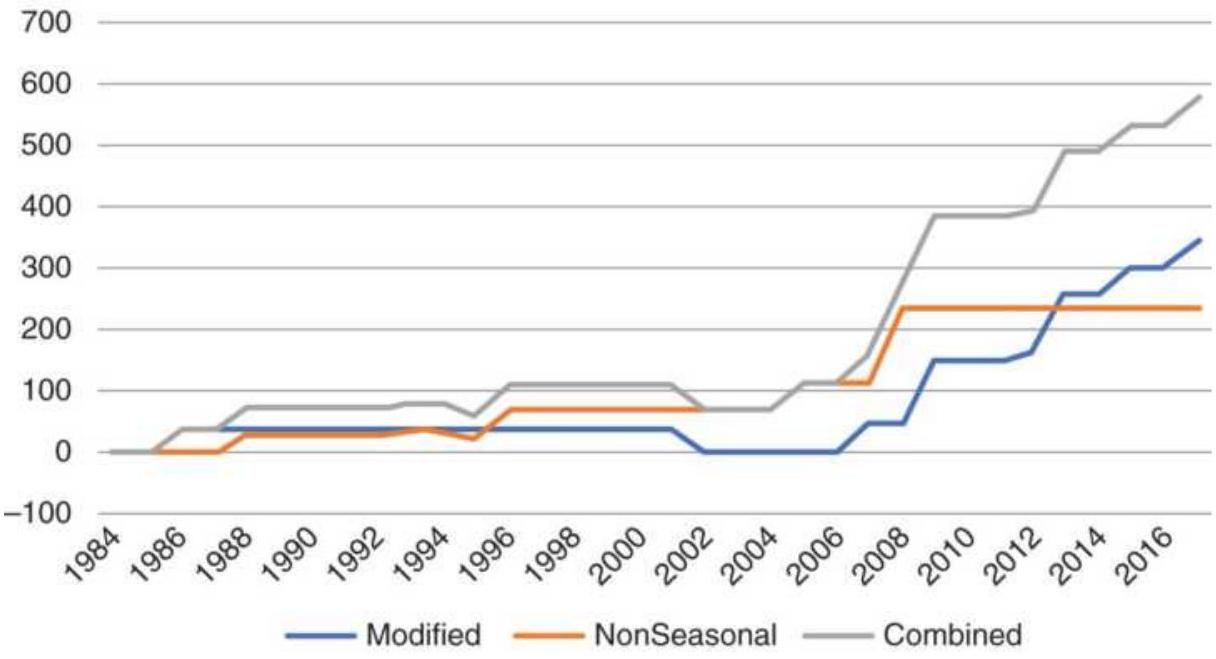

FIGURE 10.37 Results of modified seasonal strategy using corn futures.

FIGURE 10.38 Canadian dollars per U.S. dollar.

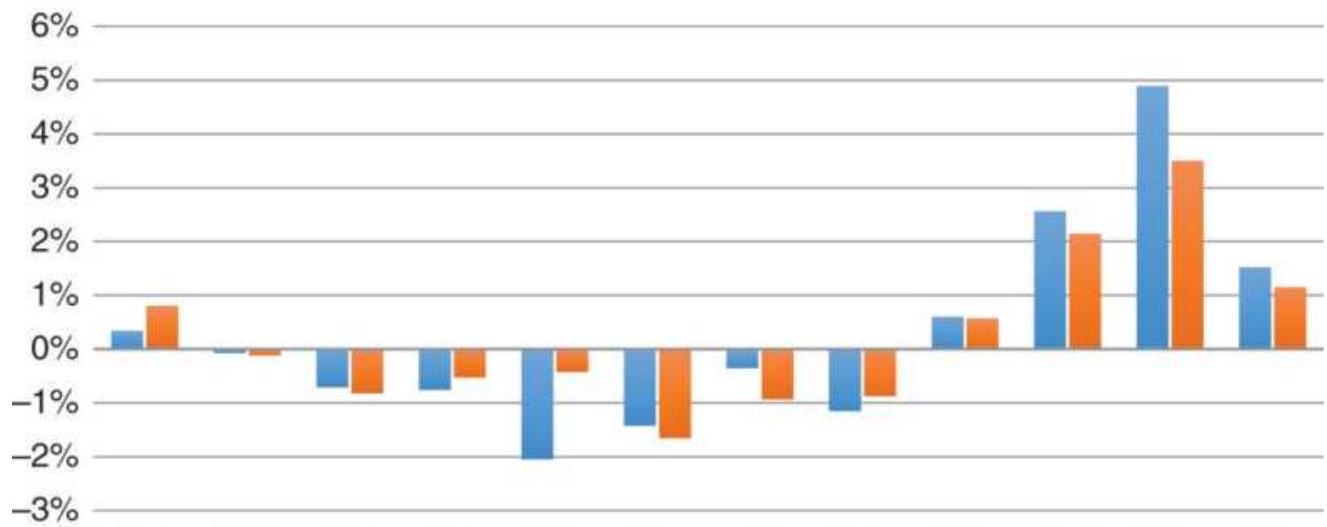

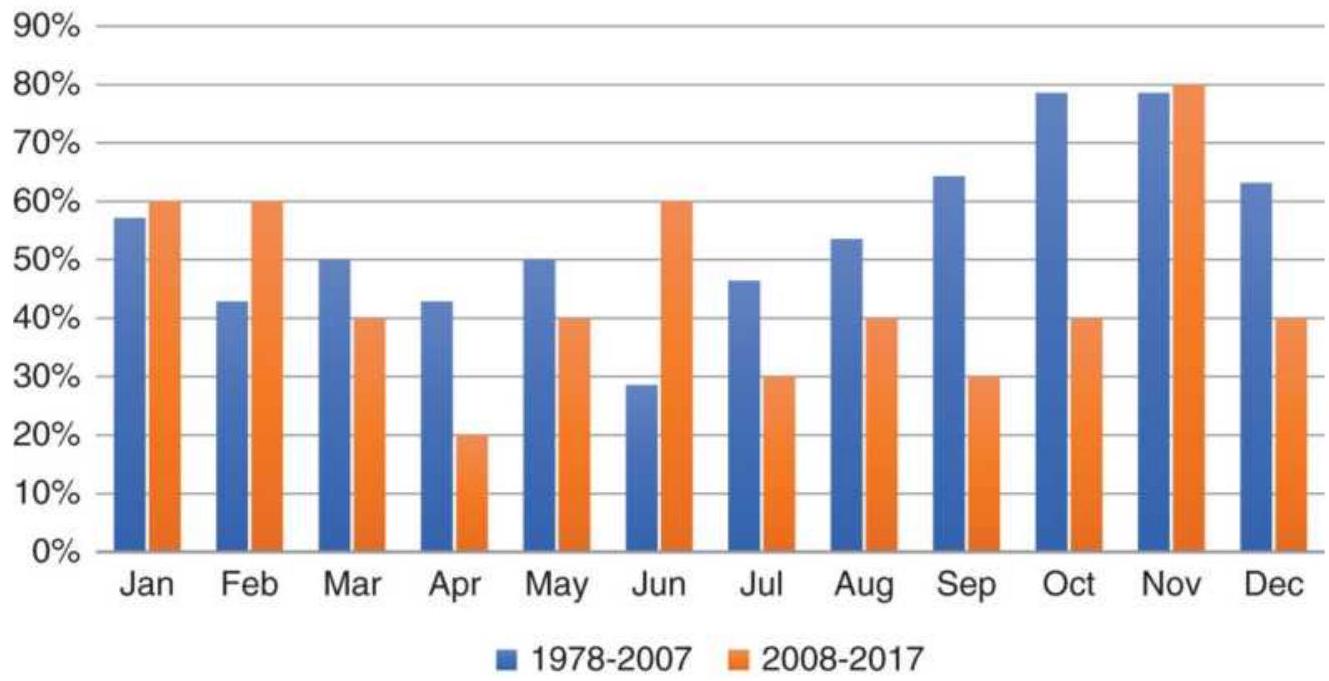

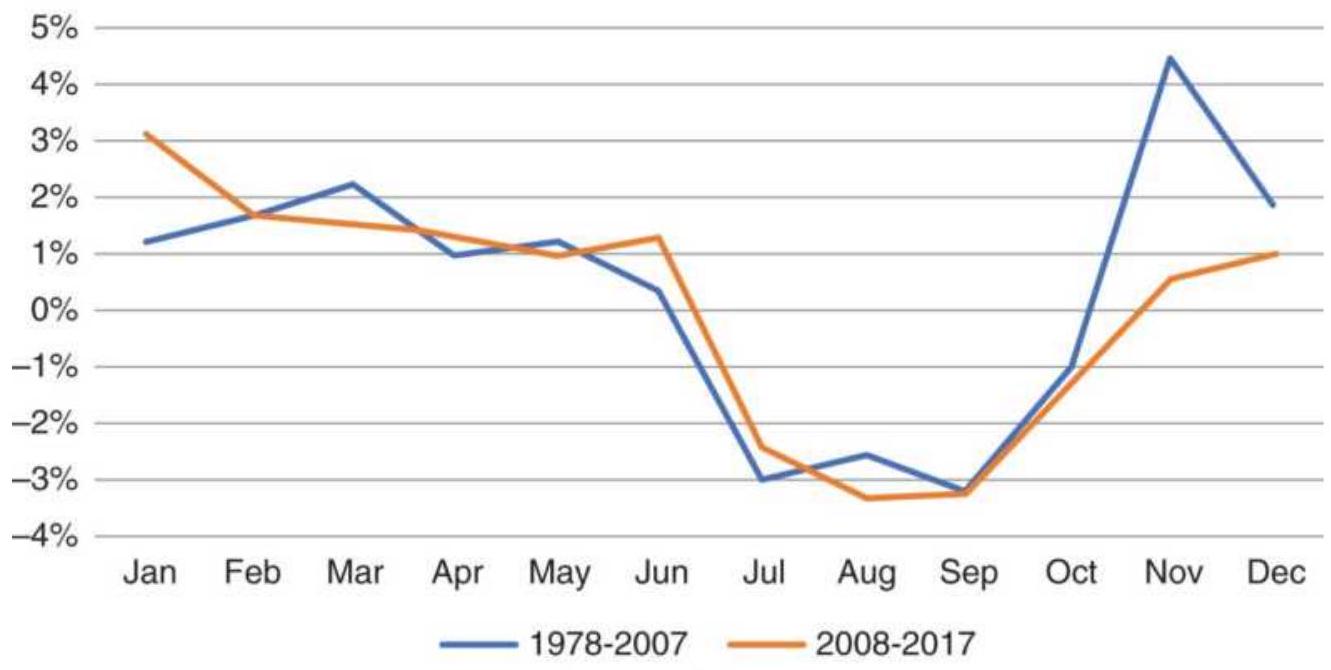

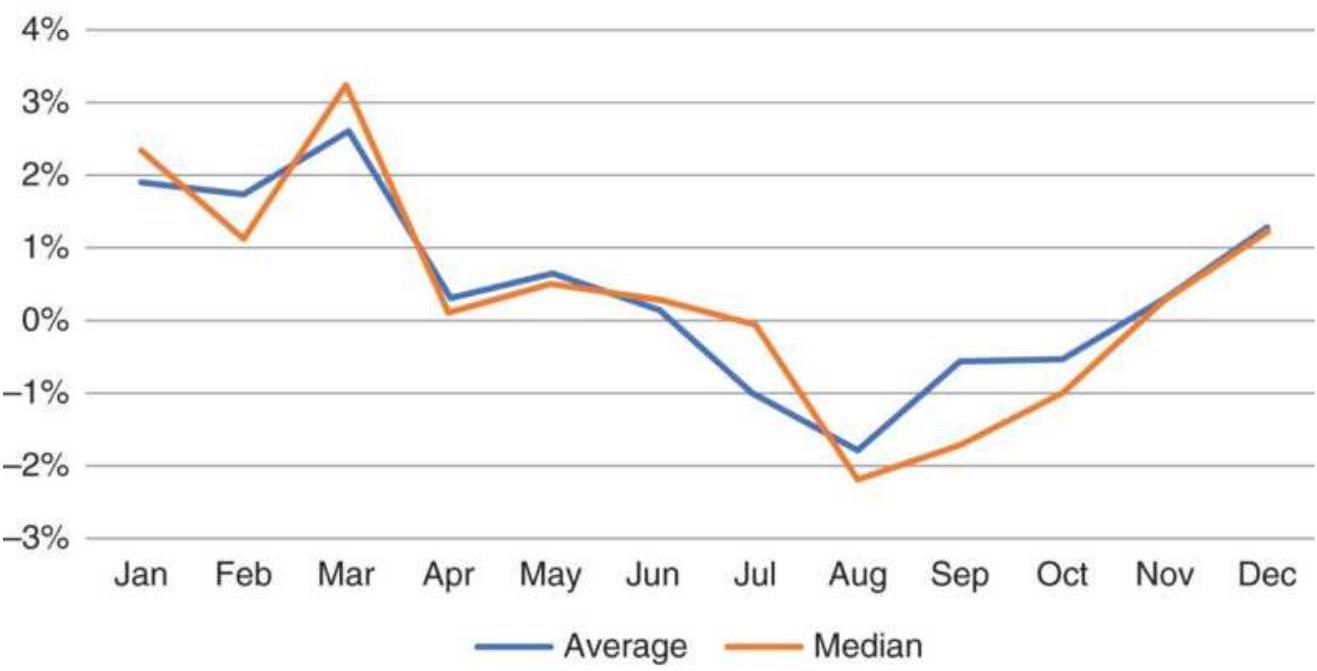

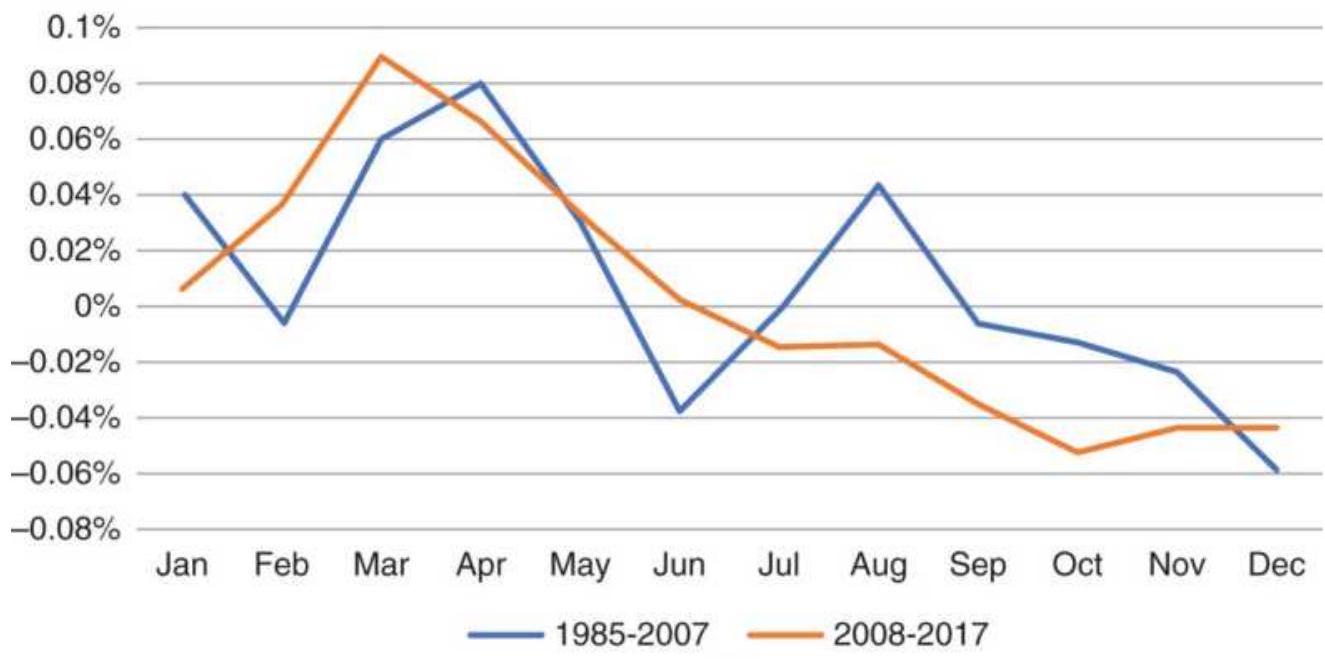

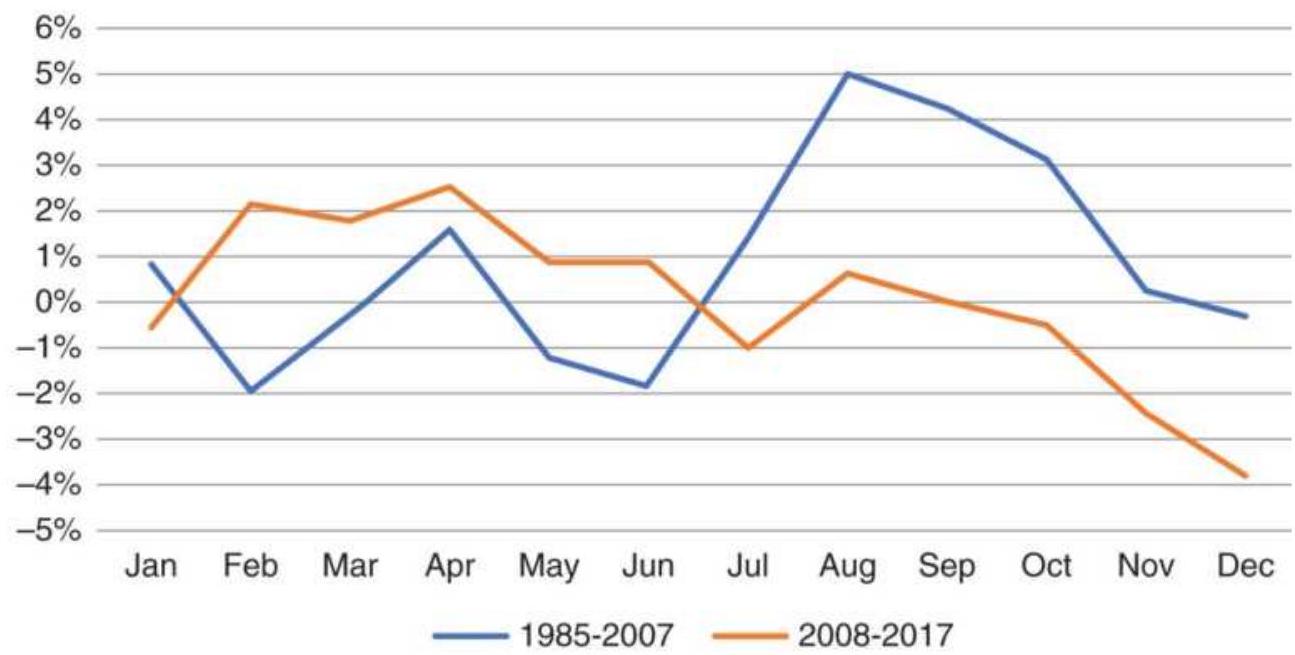

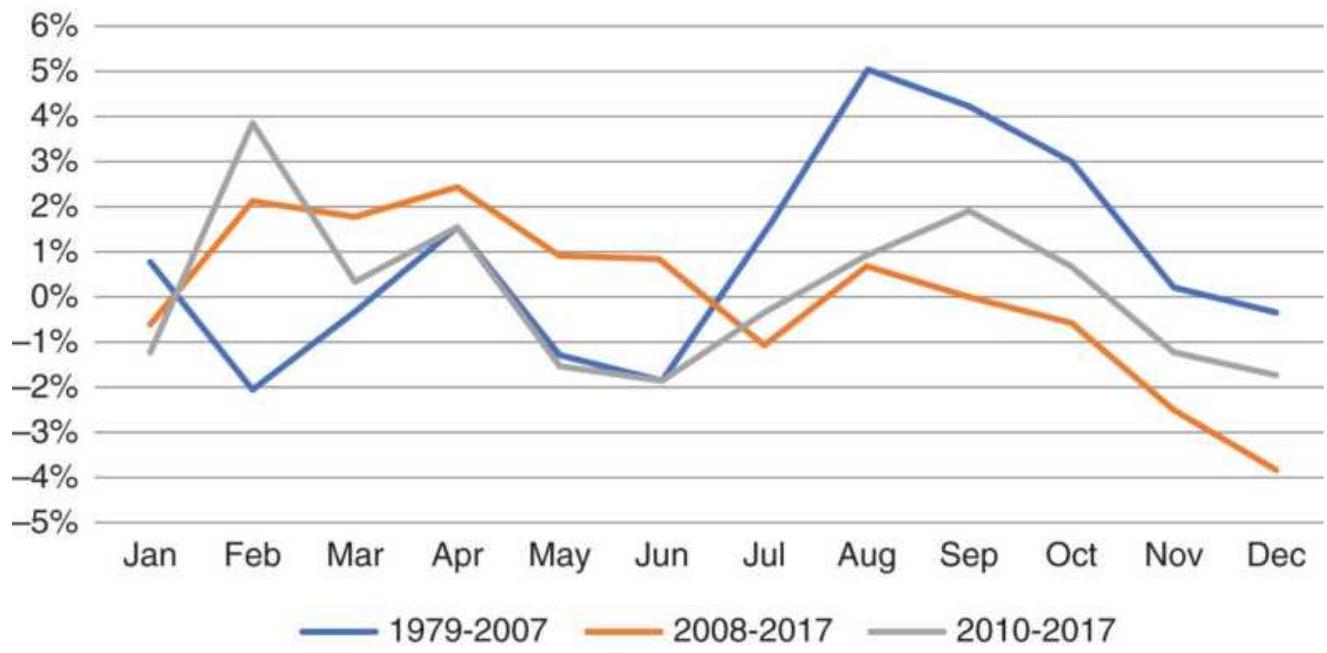

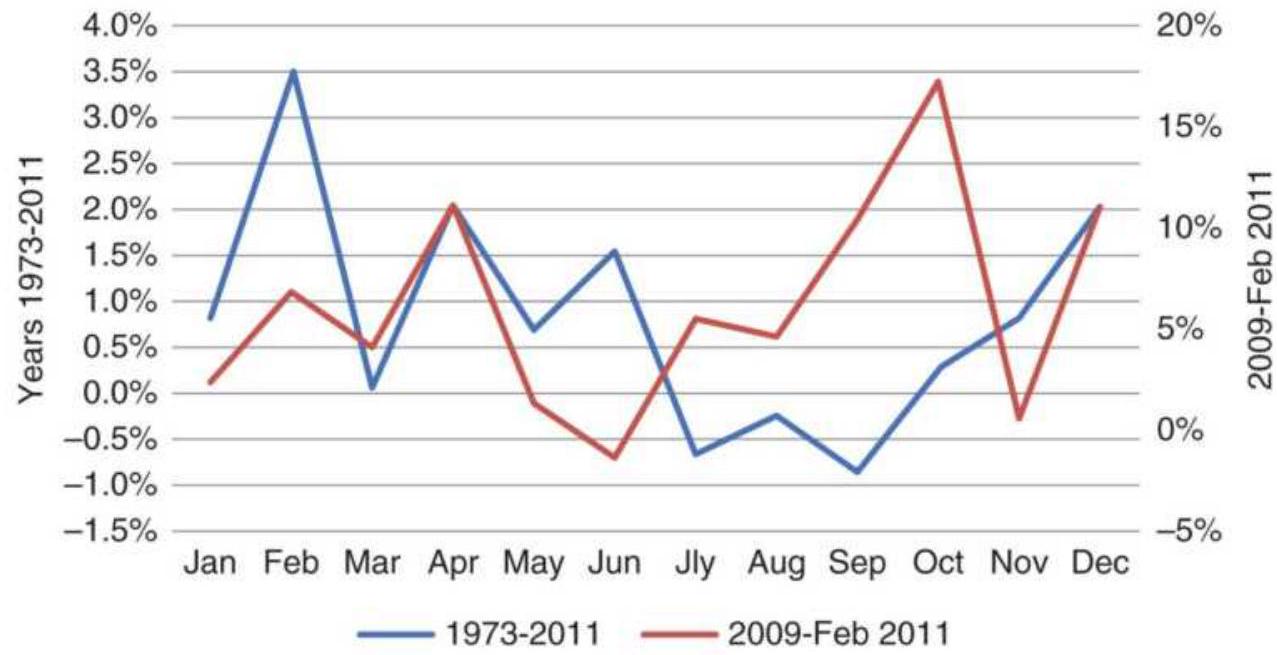

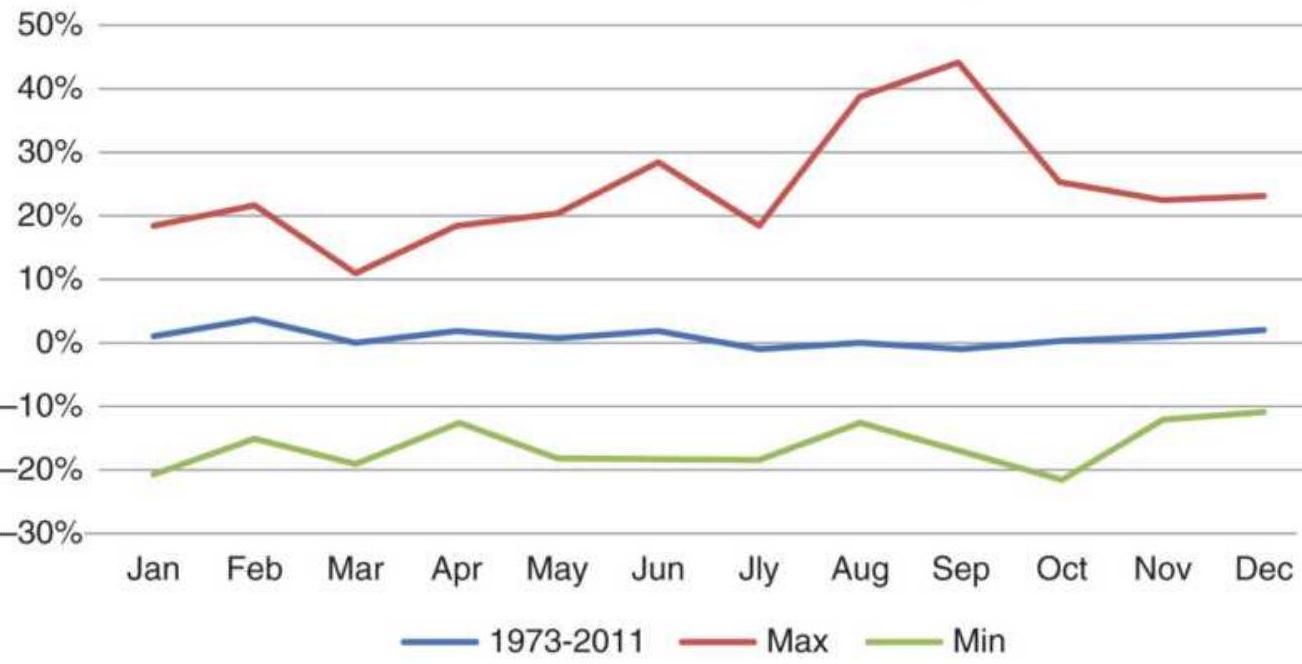

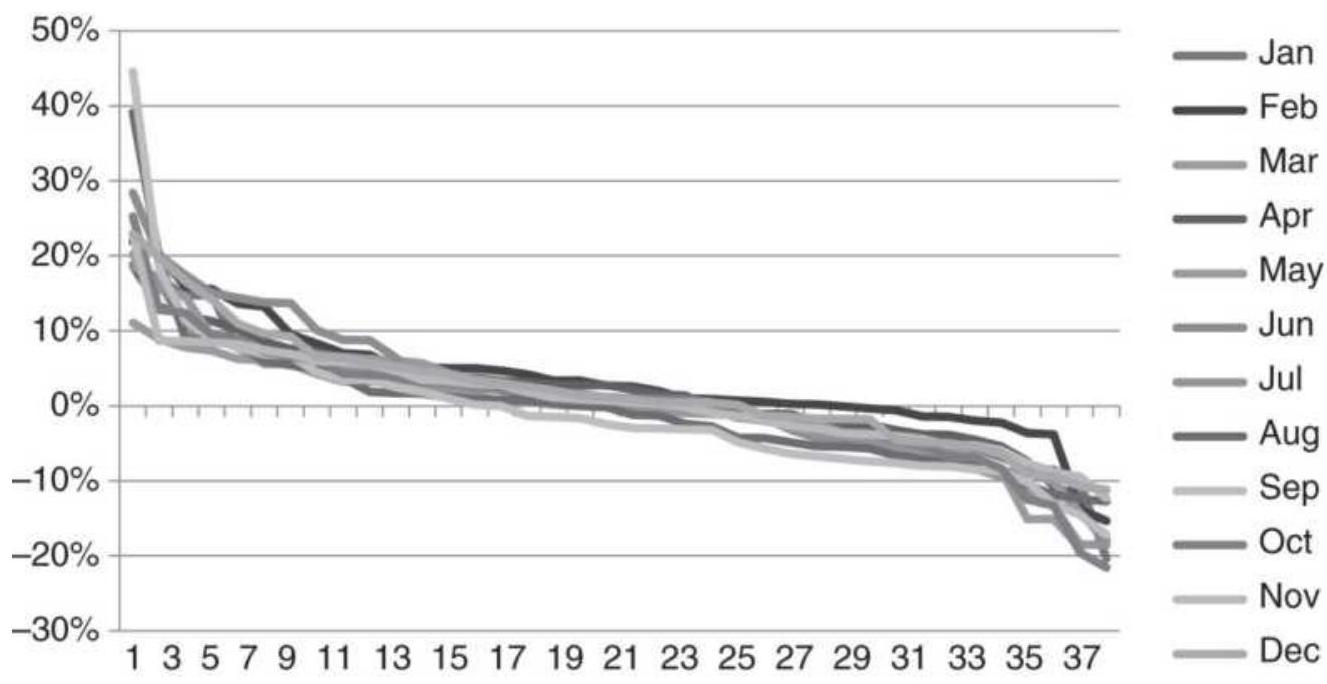

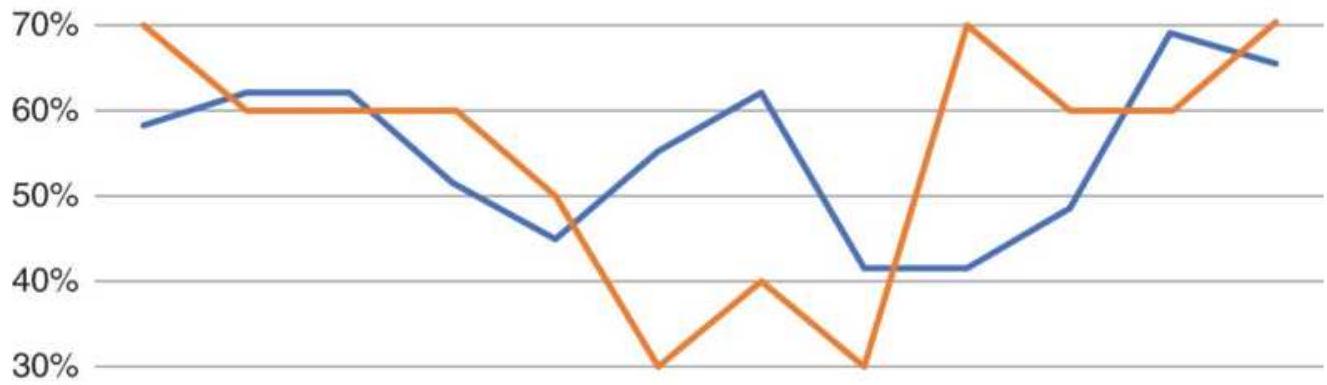

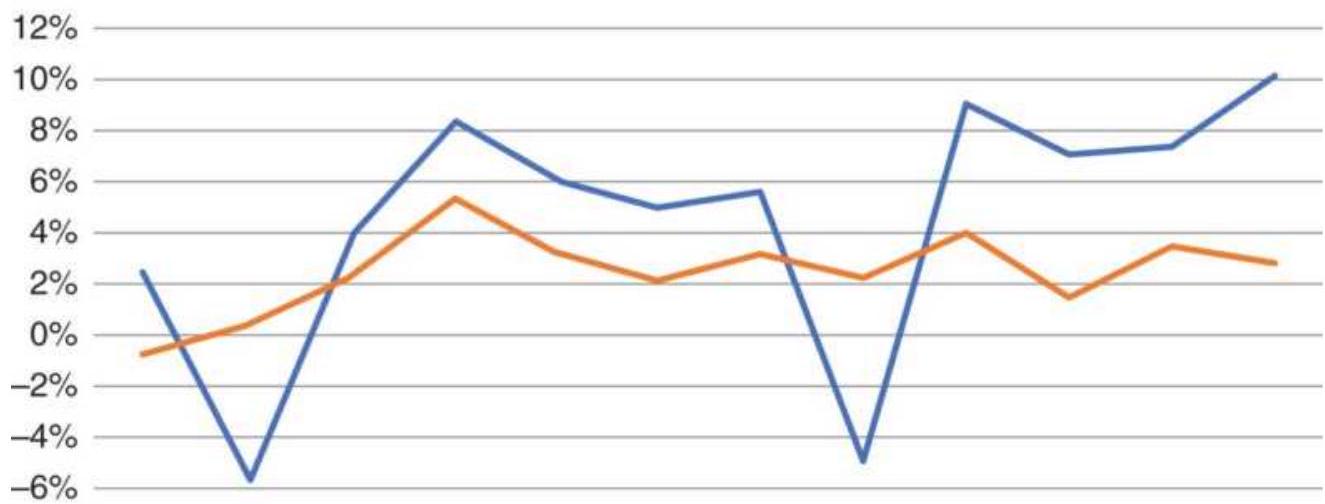

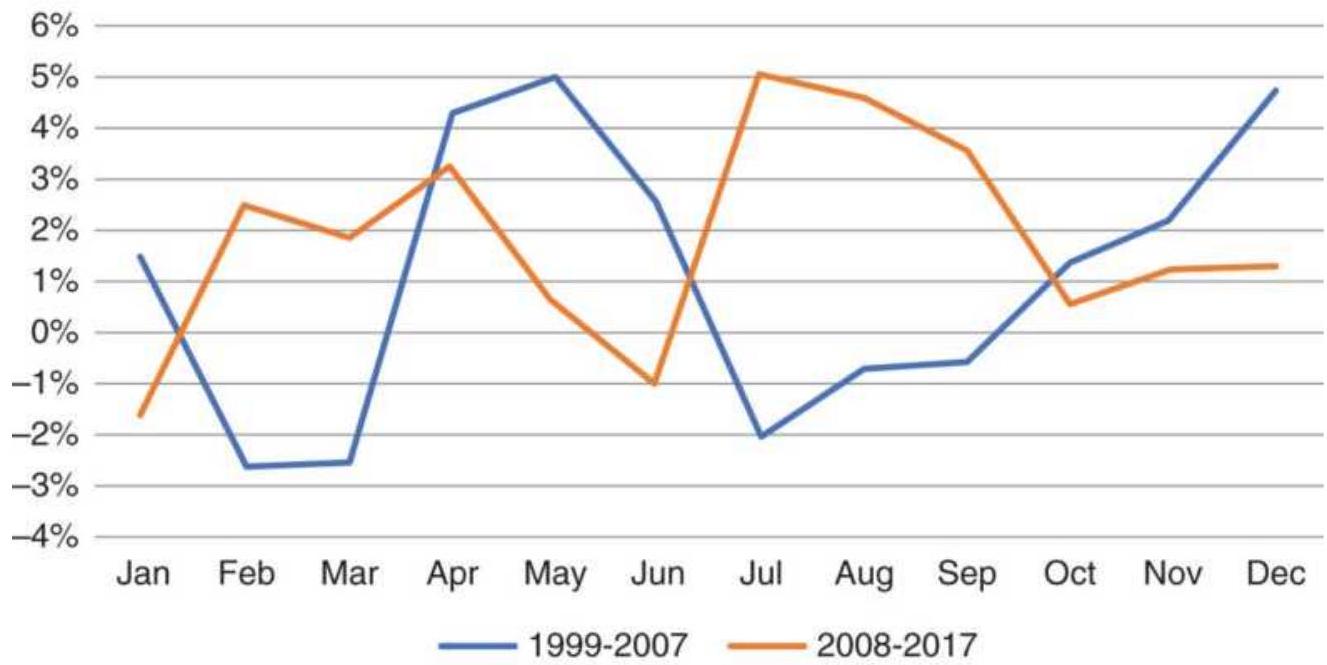

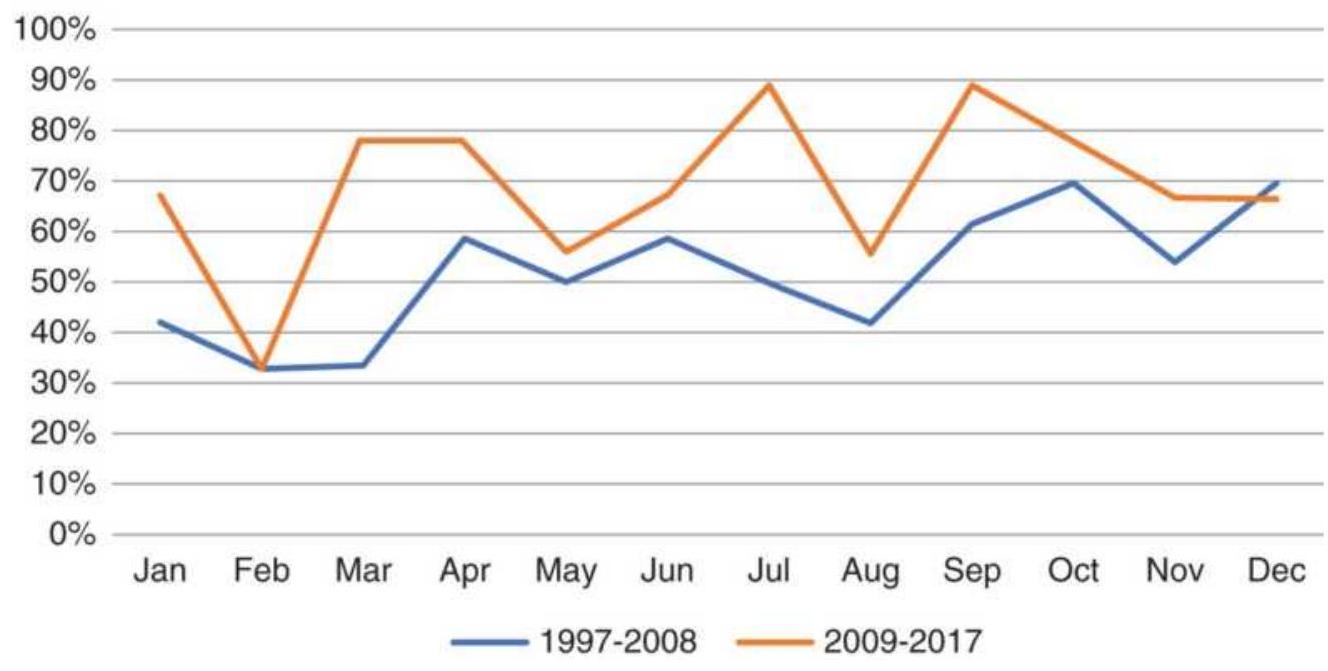

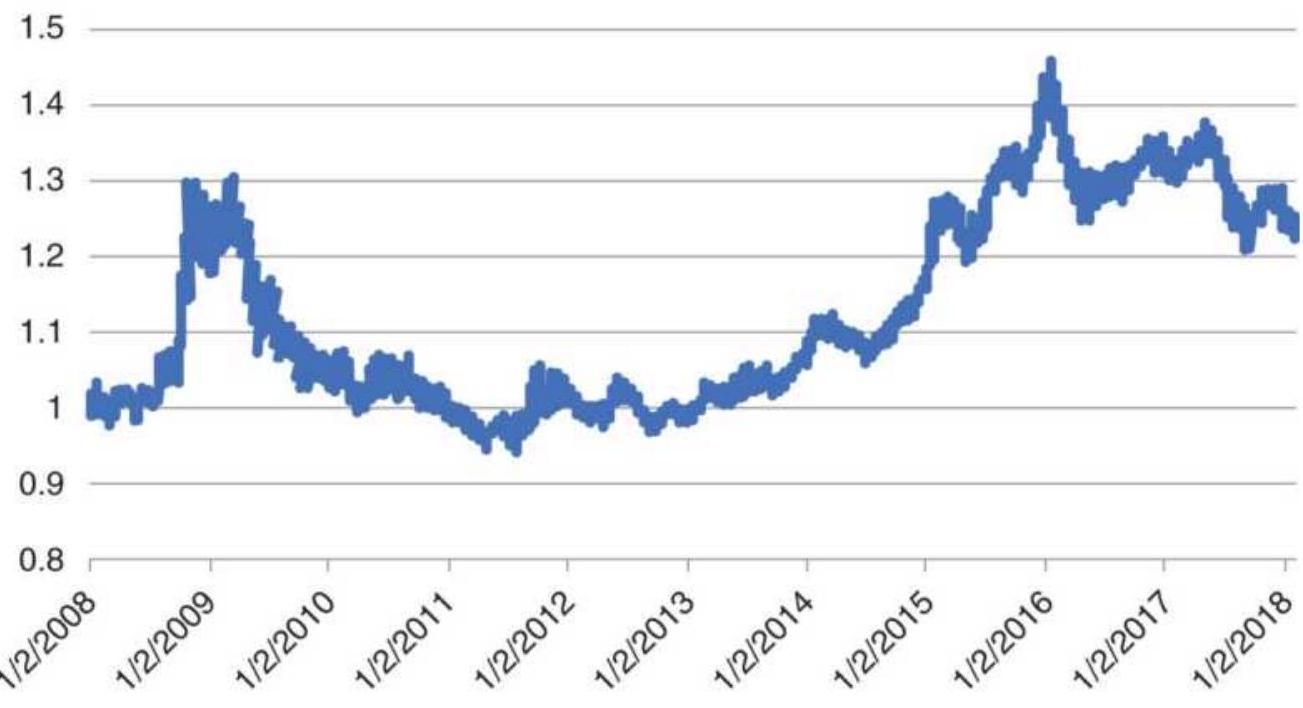

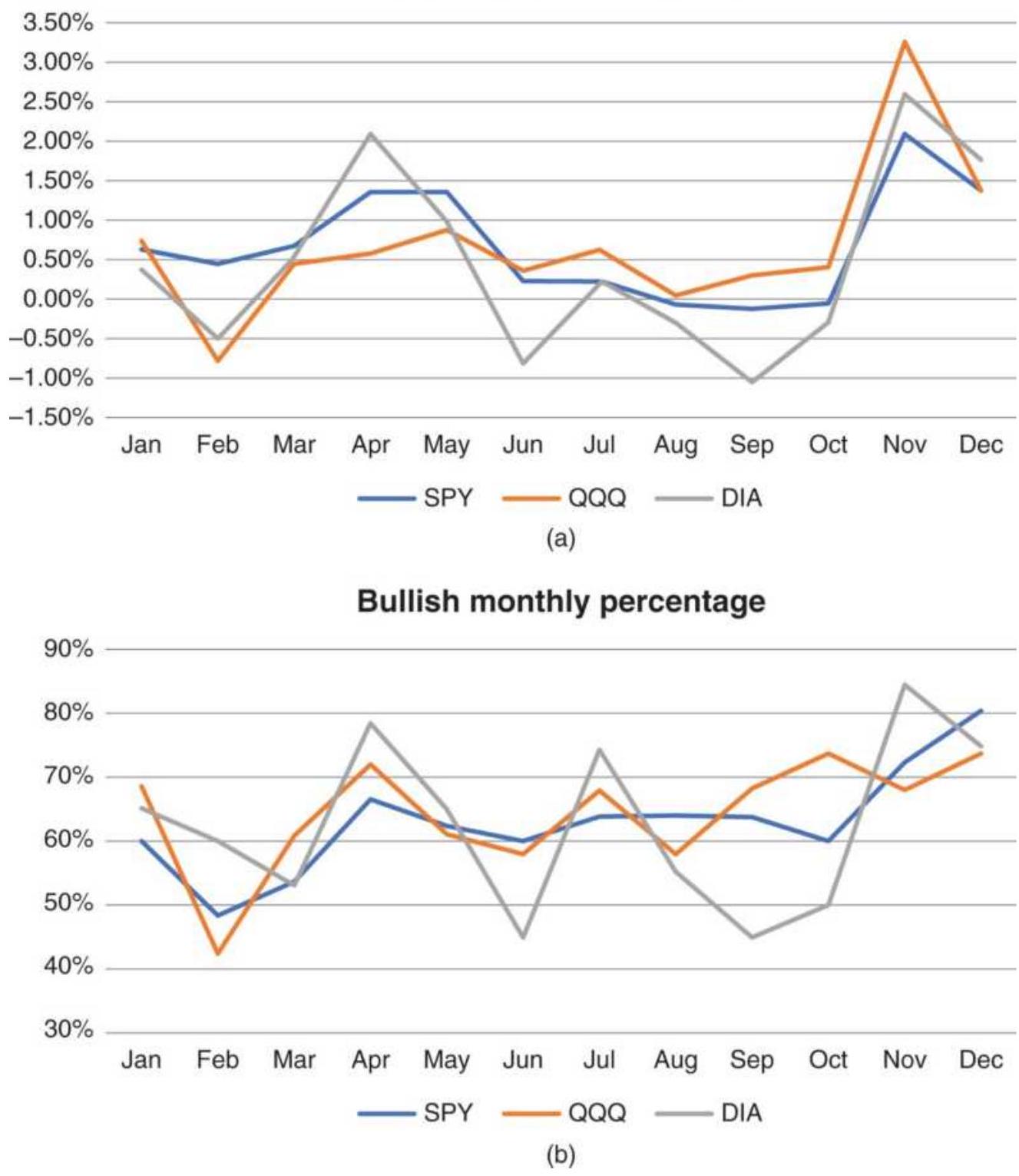

FIGURE 10.39 Seasonality in the U.S. stock market. (a) Average monthly retur...

FIGURE 10.40 Returns in December, 1998 2016 and 2011-2016, show similar patt... FIGURE 10.41 Daily returns of TNX and TN (futures).

FIGURE 10.42 Returns of bonds are declining.

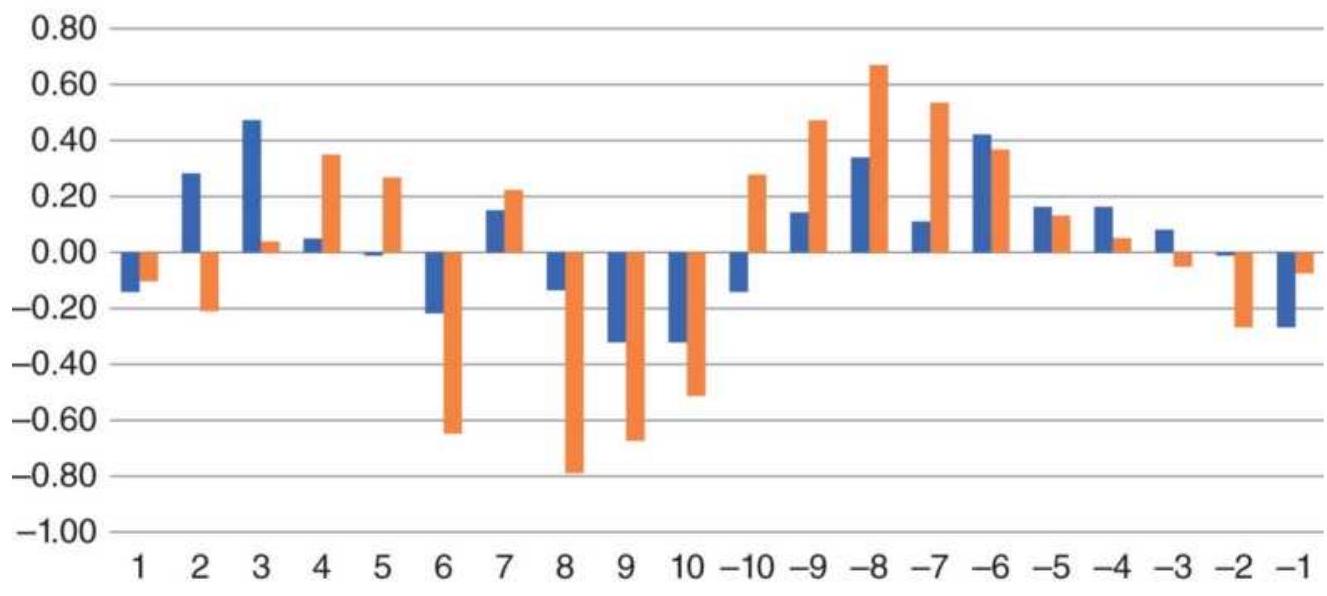

FIGURE 10.43 Best returns of SPY and SP cluster at the beginning of the mont...

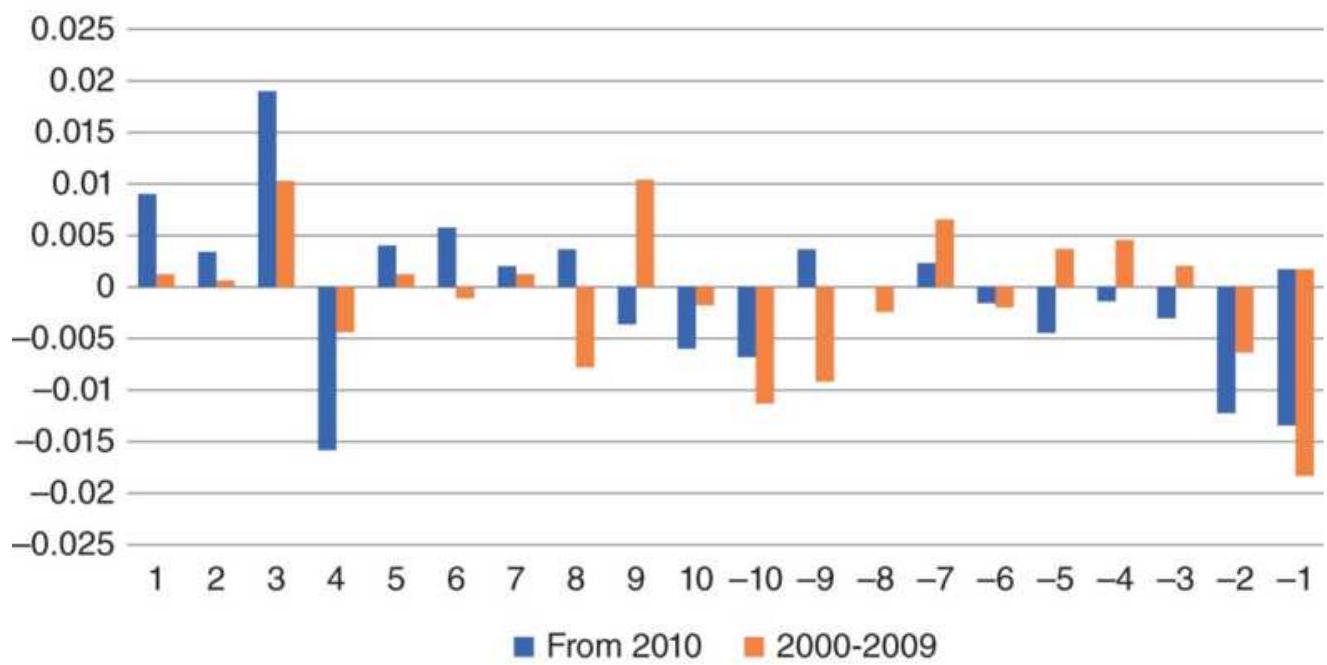

FIGURE 10.44 Returns of the equity index are increasing.

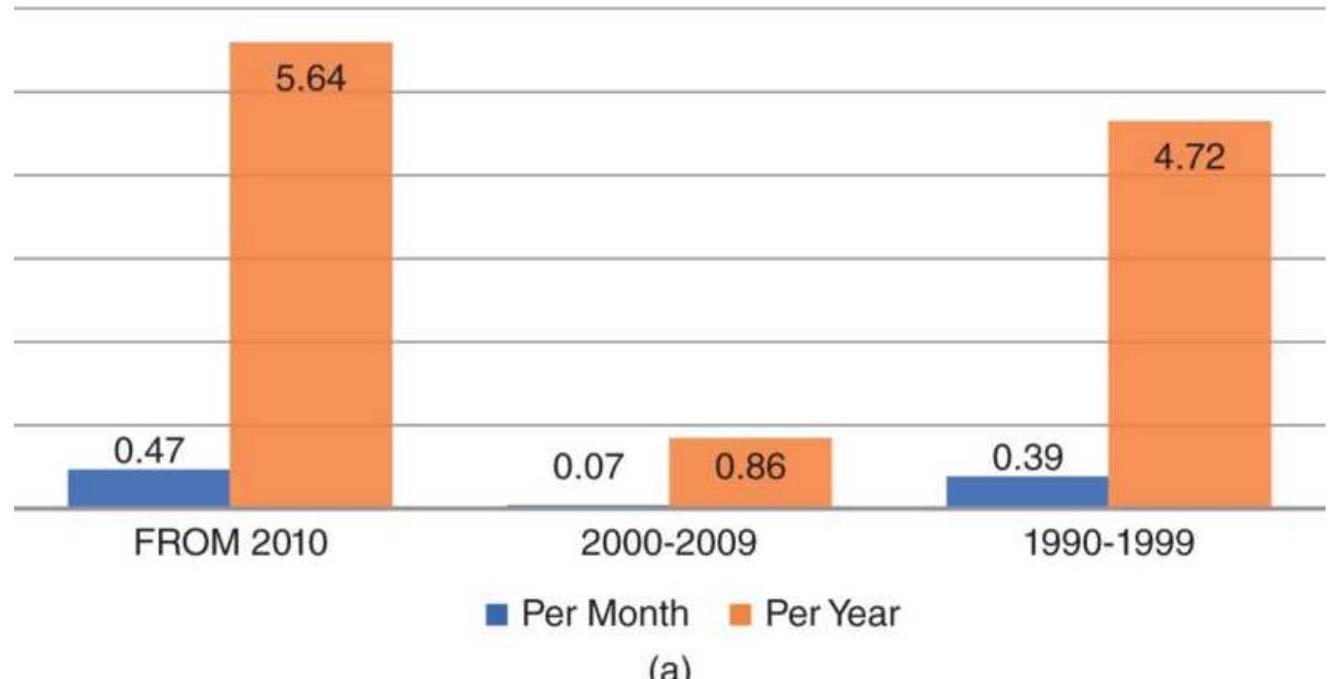

FIGURE 10.45 Results of January patterns.

FIGURE 10.46 Average returns for the year, excluding January.

FIGURE 10.47 SPY monthly volatility and returns, 1994-2017.

Chapter 11

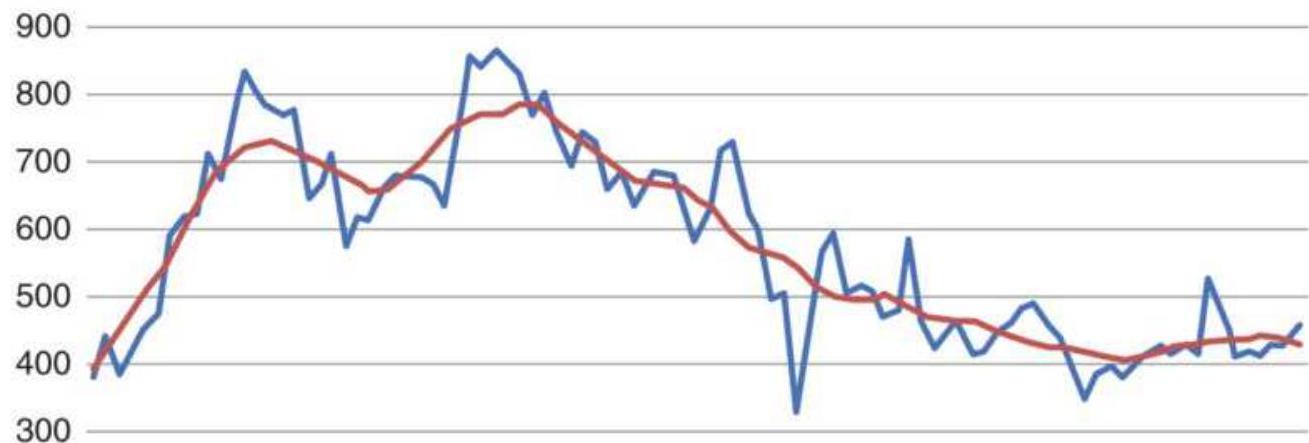

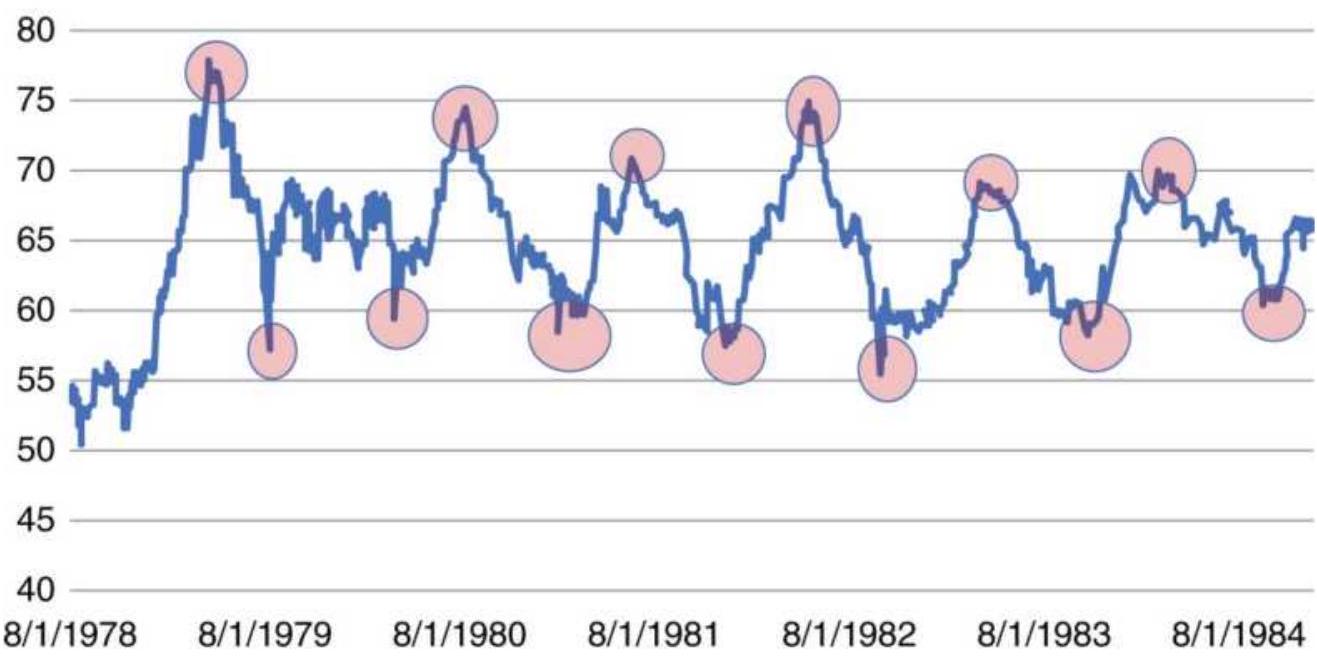

FIGURE 11.1 Cattle cash prices, 1978-2017.

FIGURE 11.2 Peaks and valleys of cattle prices, marked visually.

FIGURE 11.3 Cattle prices for 2012-2017, with circles marking the observed p...

FIGURE 11.4 Searching for the Swiss franc/U.S. dollar cycle.

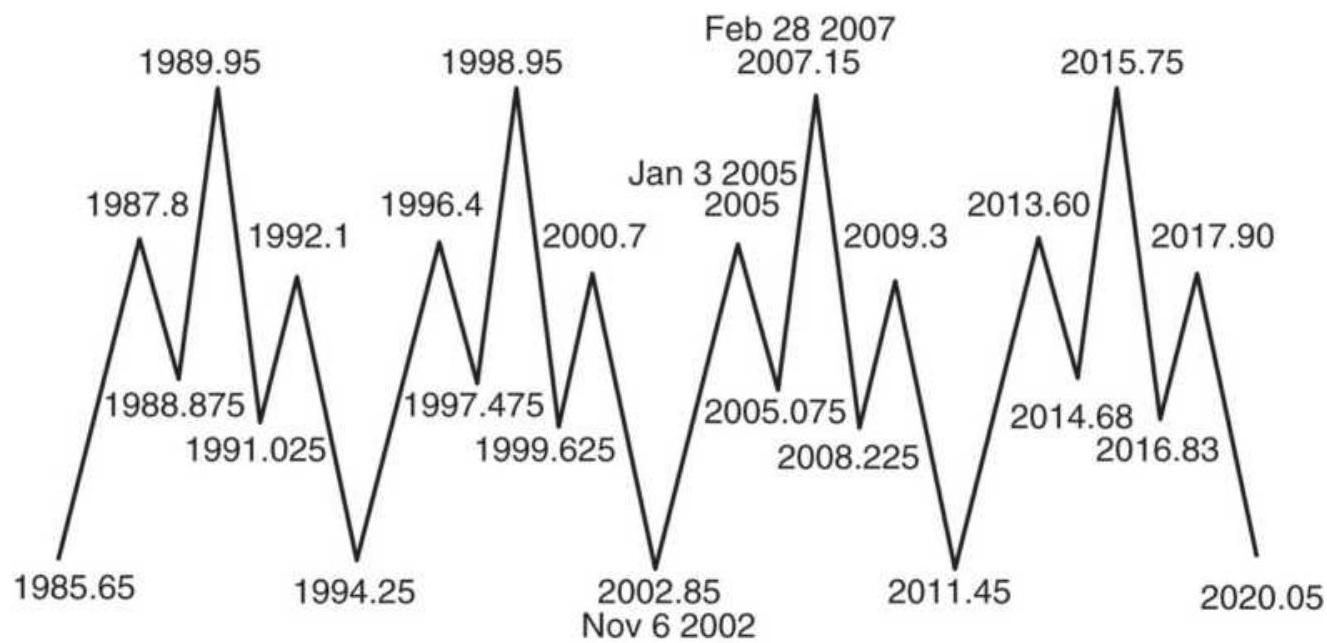

FIGURE 11.5 The 8.6-year business cycle.

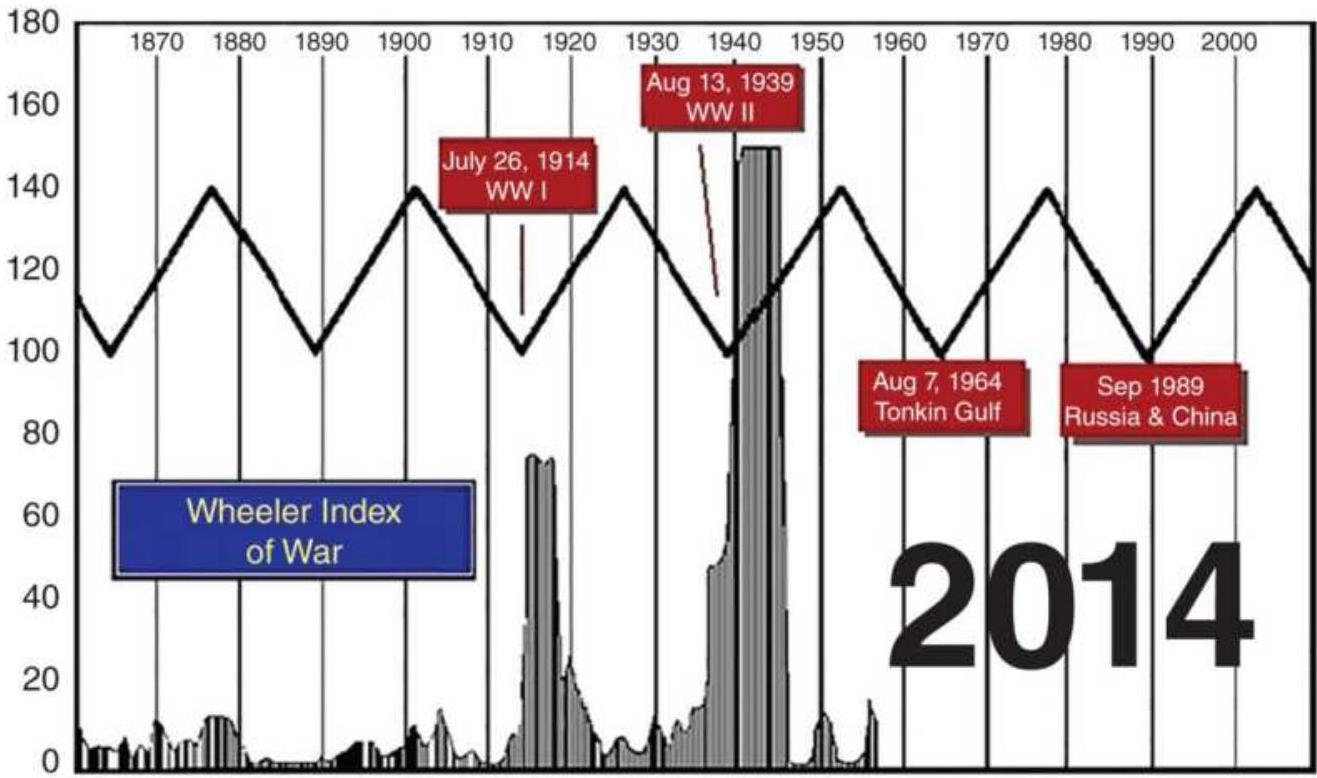

FIGURE 11.6 The Wheeler Index of War.

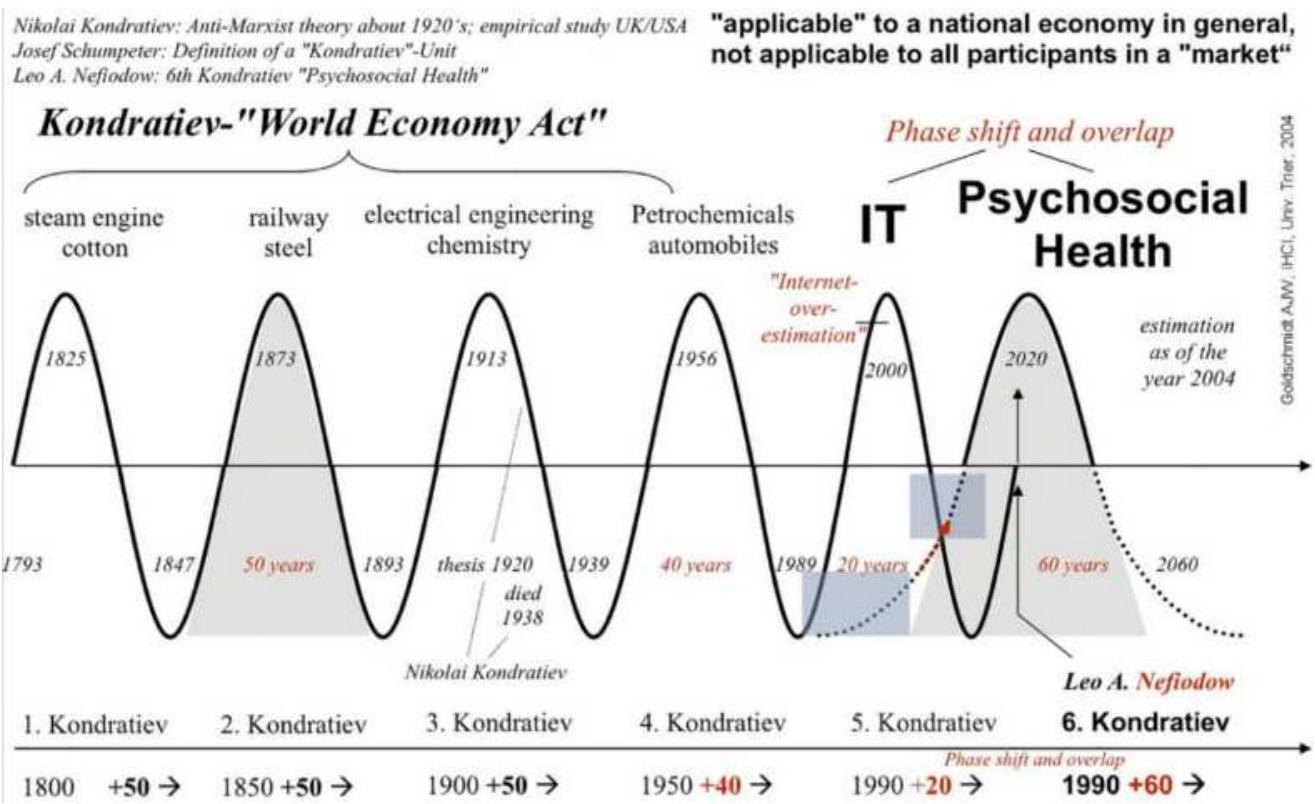

FIGURE 11.7 The Kondratieff Wave (along the

bottom), as of 2000.

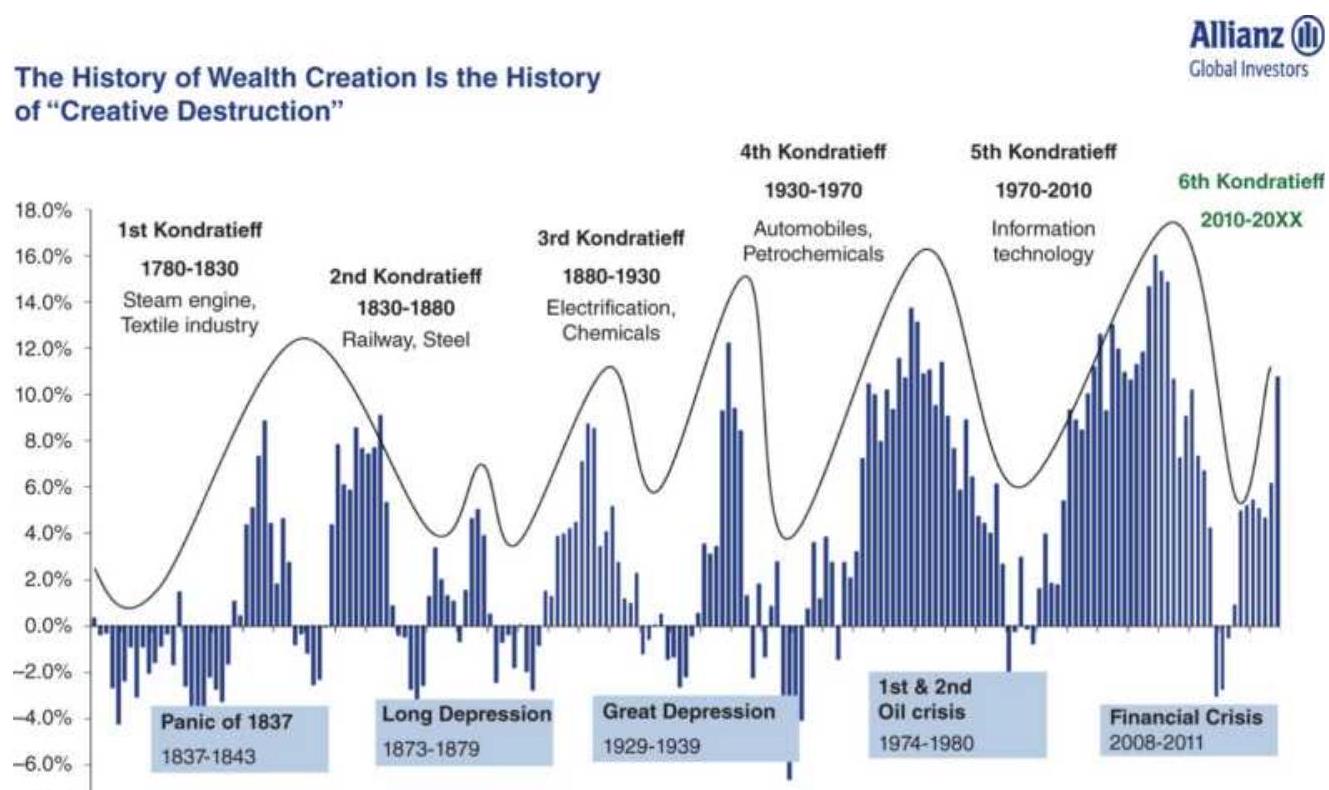

FIGURE 11.8 Another representation of the

Kondratieff Wave.

FIGURE 11.9 Returns of the Dow Jones

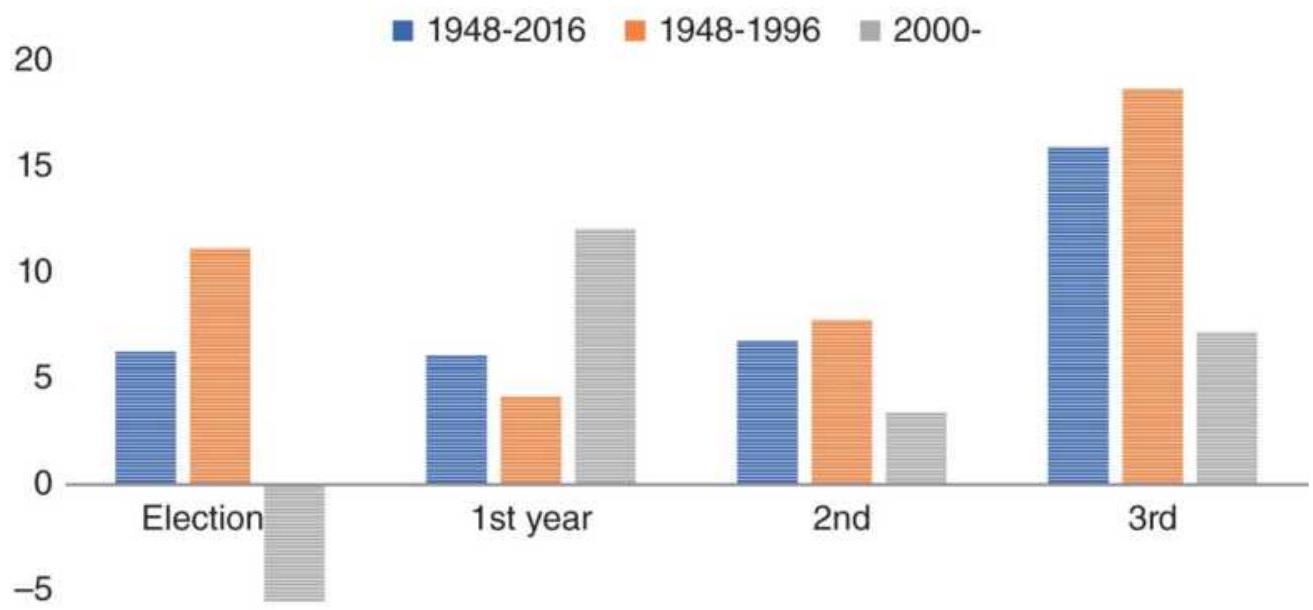

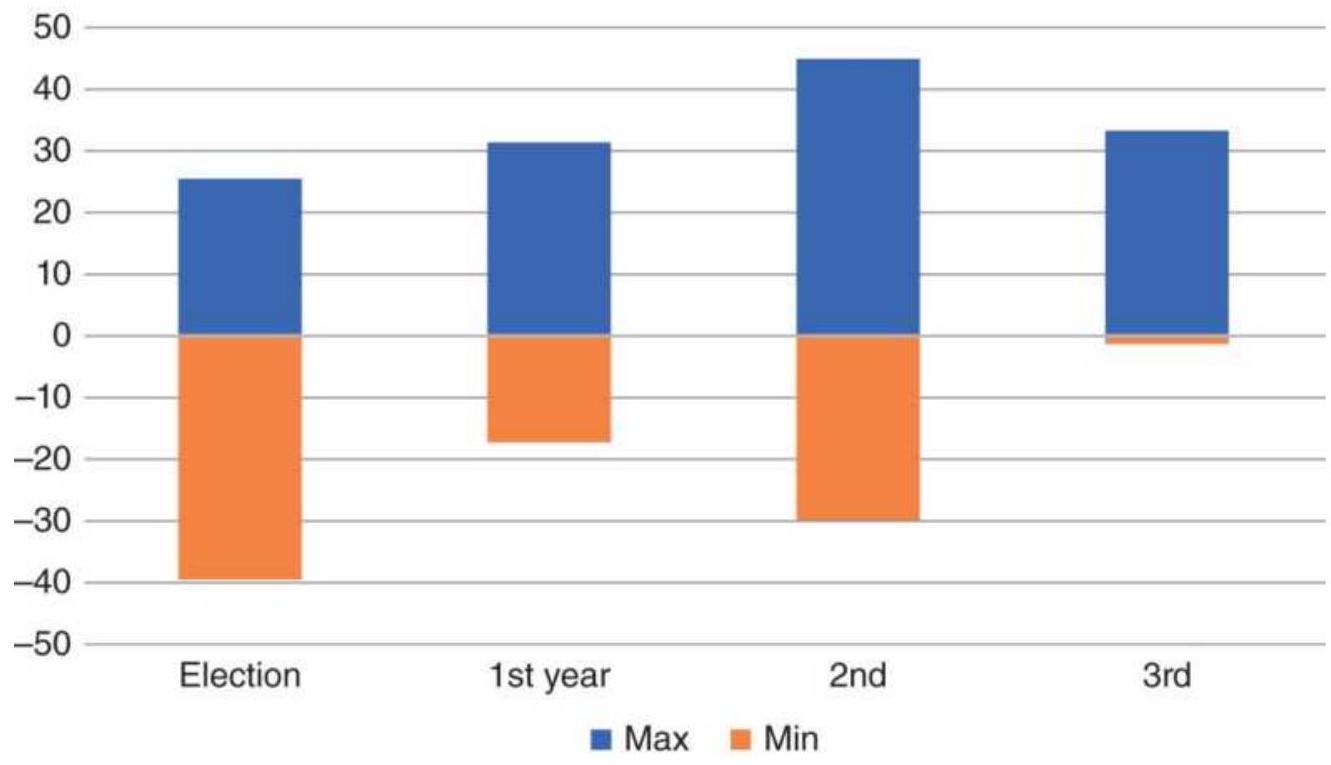

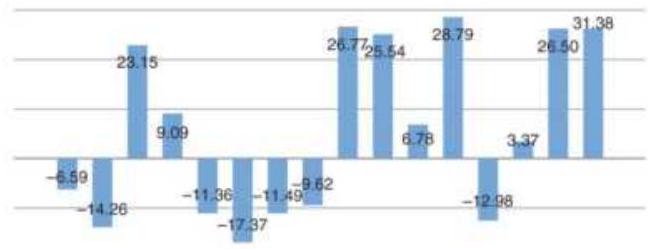

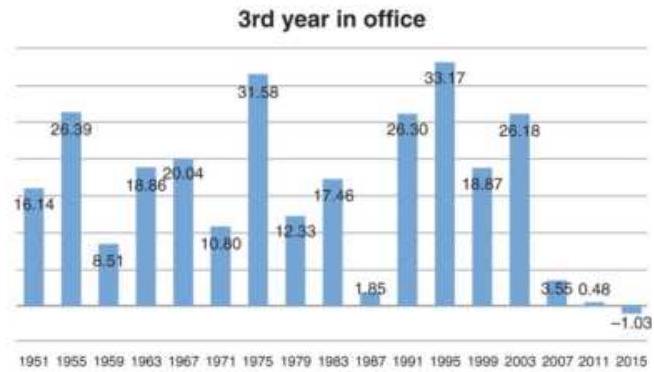

Industrials in the presidential cycle....

FIGURE 11.10 Volatility range, separated by presidential cycle, from 1948 to...

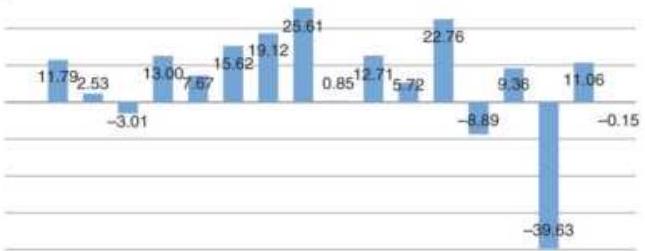

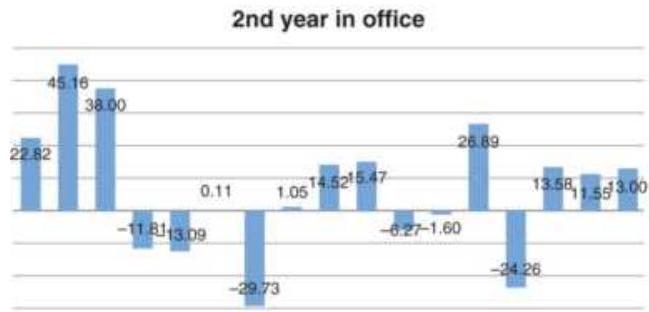

FIGURE 11.11 Detail of the election-year cycle. Each chart shows the history...

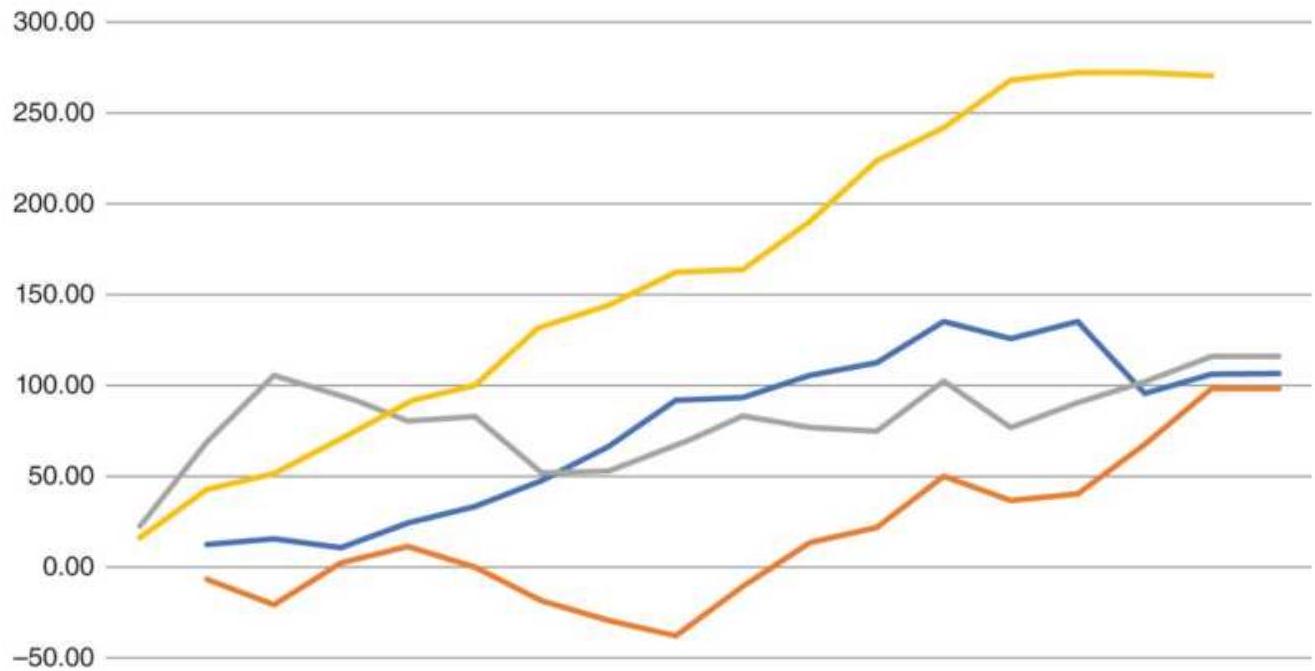

FIGURE 11.12 Cumulative returns trading each cycle year.

FIGURE 11.13 A 20-10 triangular MACD applied to IBM.

FIGURE 11.14 A 252-126 triangular MACD applied to back-adjusted corn futures...

FIGURE 11.15 Sinusoidal (sine) wave.

FIGURE 11.16 Compound sine wave.

FIGURE 11.17 Solver screen for corn solution.

FIGURE 11.18 Corn with trigonometric fit using Solver.

FIGURE 11.19 Spectral density. (a) A compound wave \(D\), formed from three prim...

FIGURE 11.20 10-, 20-, and 40-day cycles, within a 250 -day seasonal.

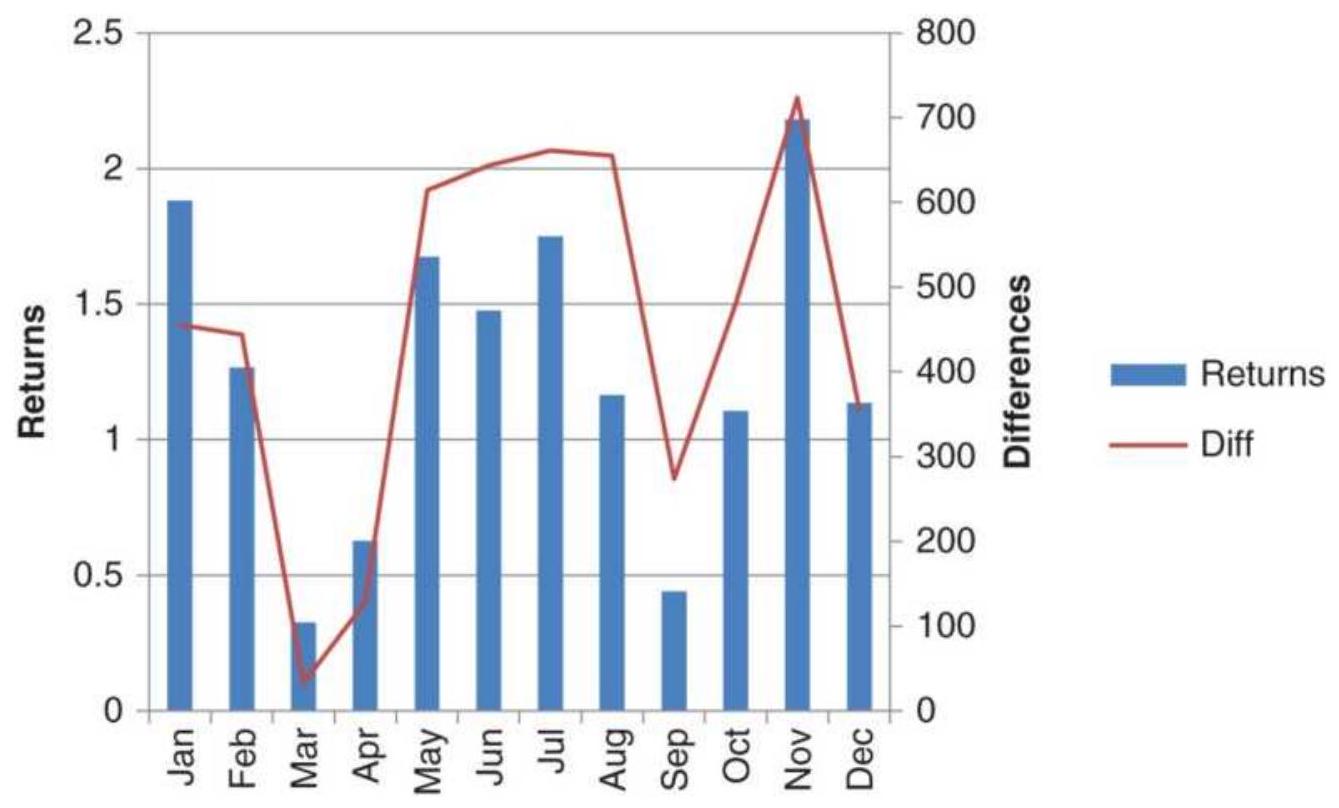

FIGURE 11.21 Output of spectral analysis program.

FIGURE 11.22 Excel spreadsheet showing input prices and output from Fourier ...

FIGURE 11.23 Results of Excel's Fourier Analysis for Southwest Airlines (LUV...

FIGURE 11.24 Cash corn cycles using Excel's

Fourier Analysis based on return...

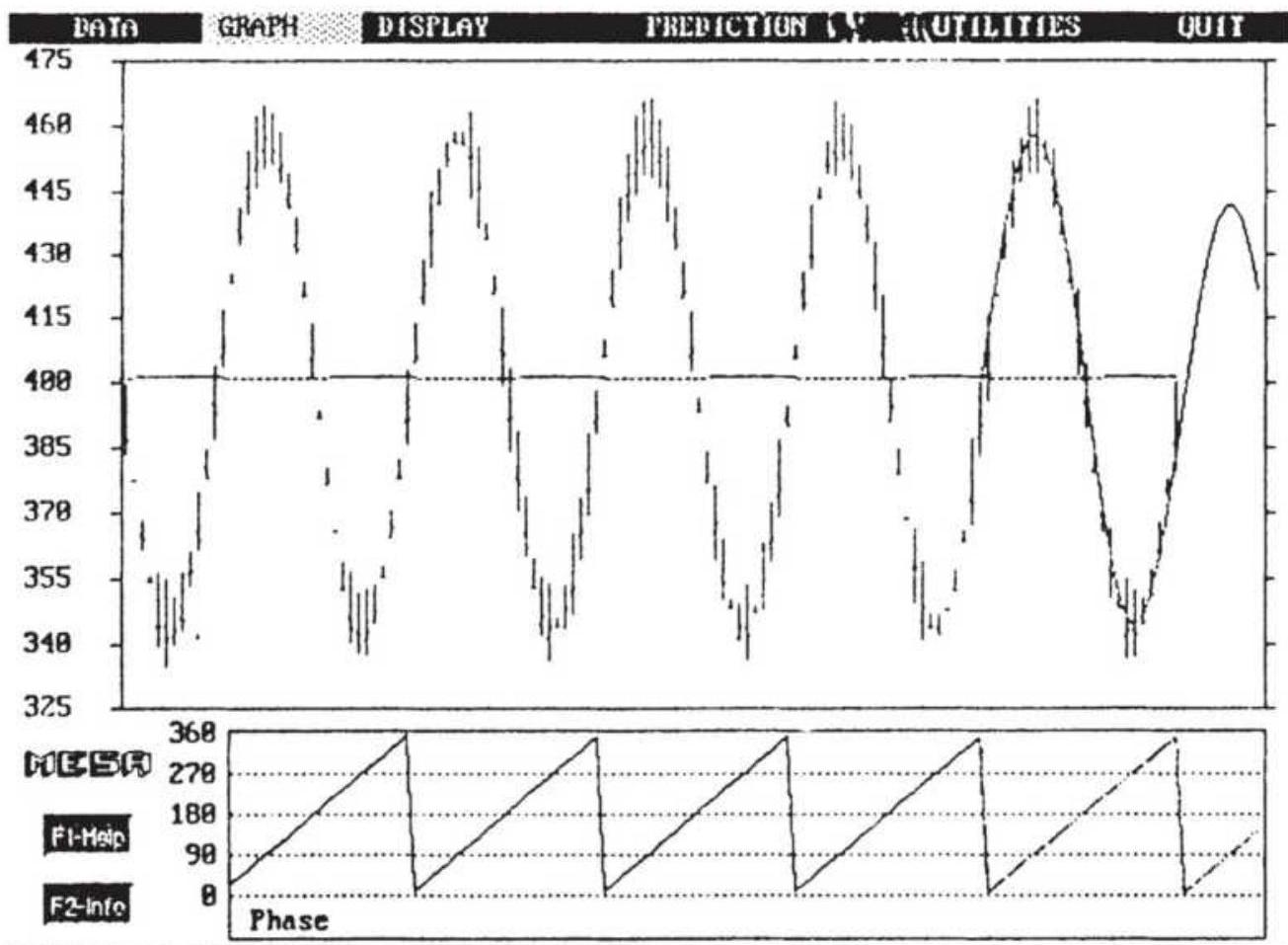

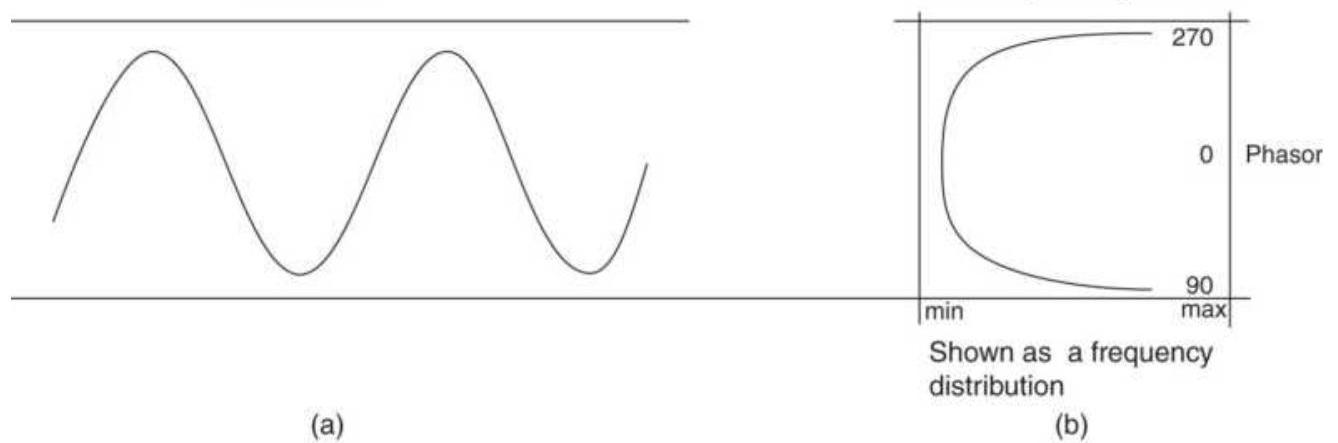

FIGURE 11.25 The phase angle forms a sawtooth pattern.

FIGURE 11.26 A cycle with the phasor and phase angle.

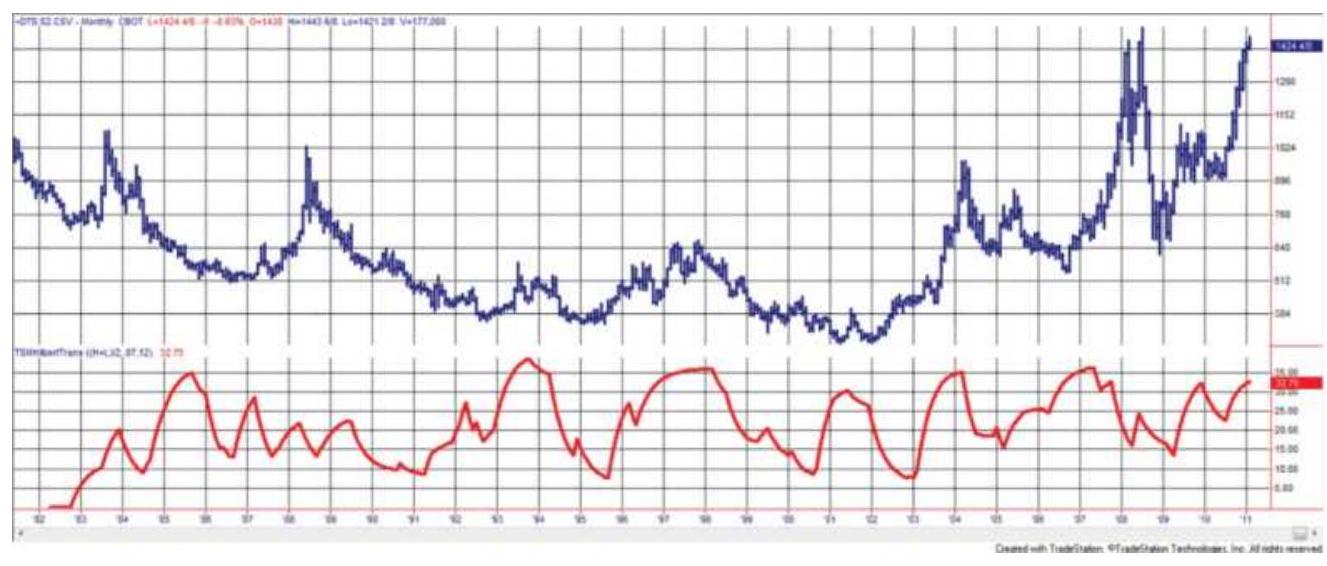

FIGURE 11.27 Back-adjusted soybean futures, 1982-2018, with the Hilbert Tran...

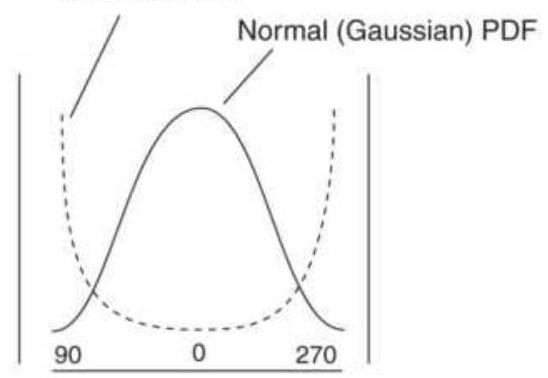

FIGURE 11.28 Probability Density Function (PDF) of a sine wave.

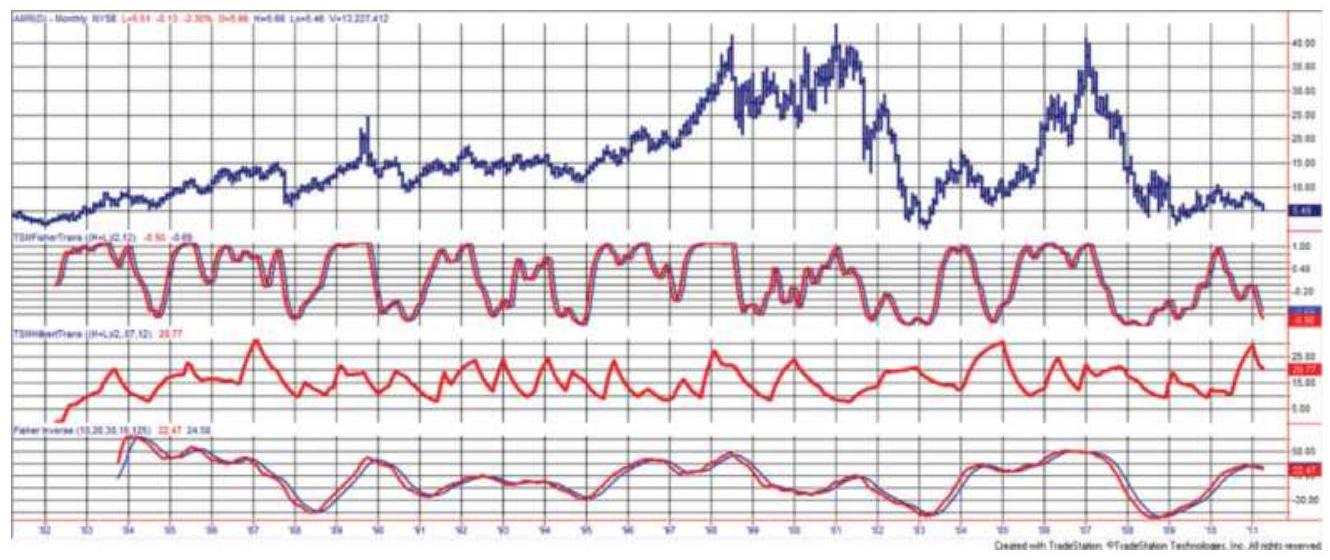

FIGURE 11.29 Monthly AMR (top) prices from 1982 with the Fisher Transform (2...

FIGURE 11.30 Ehlers' Universal Oscillator shown along the bottom of a heatin...

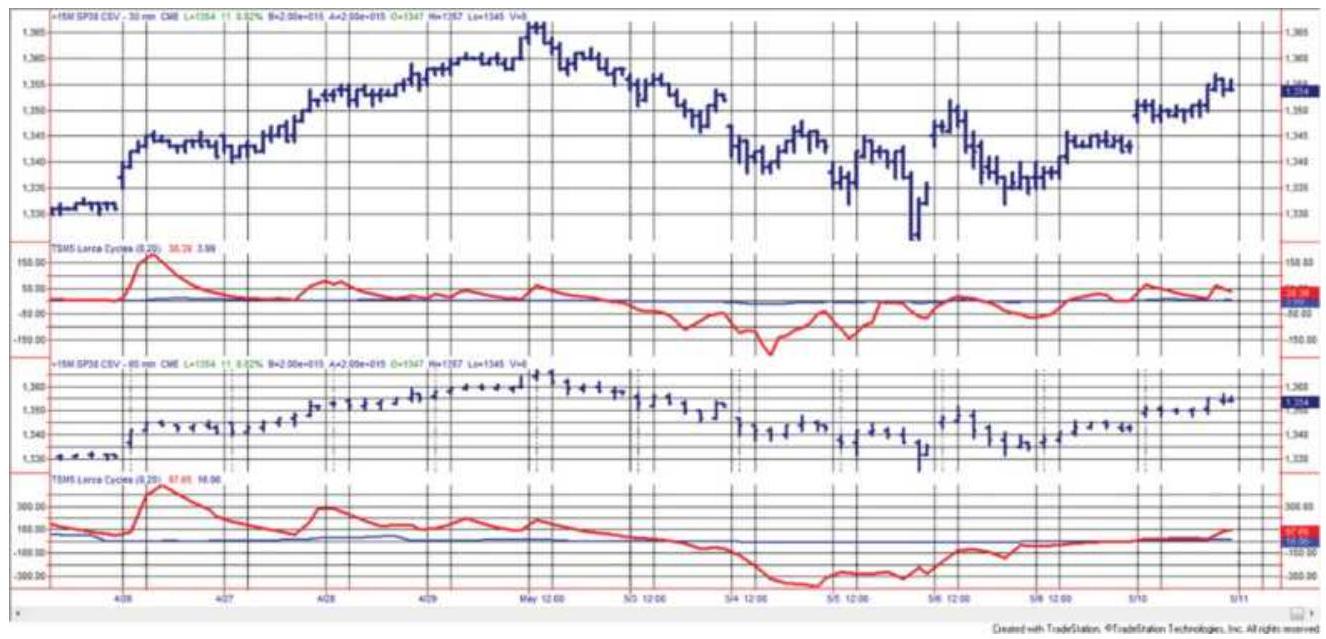

FIGURE 11.31 The Short Cycle Indicator applied to the emini S\&P 30- (top pan...

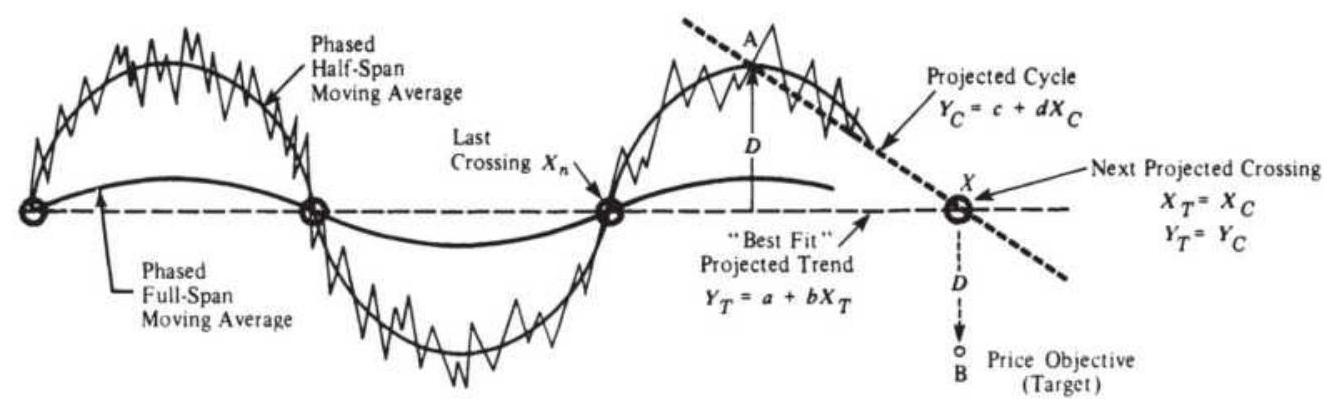

FIGURE 11.32 Hurst's phasing and target price projection.

Chapter 12

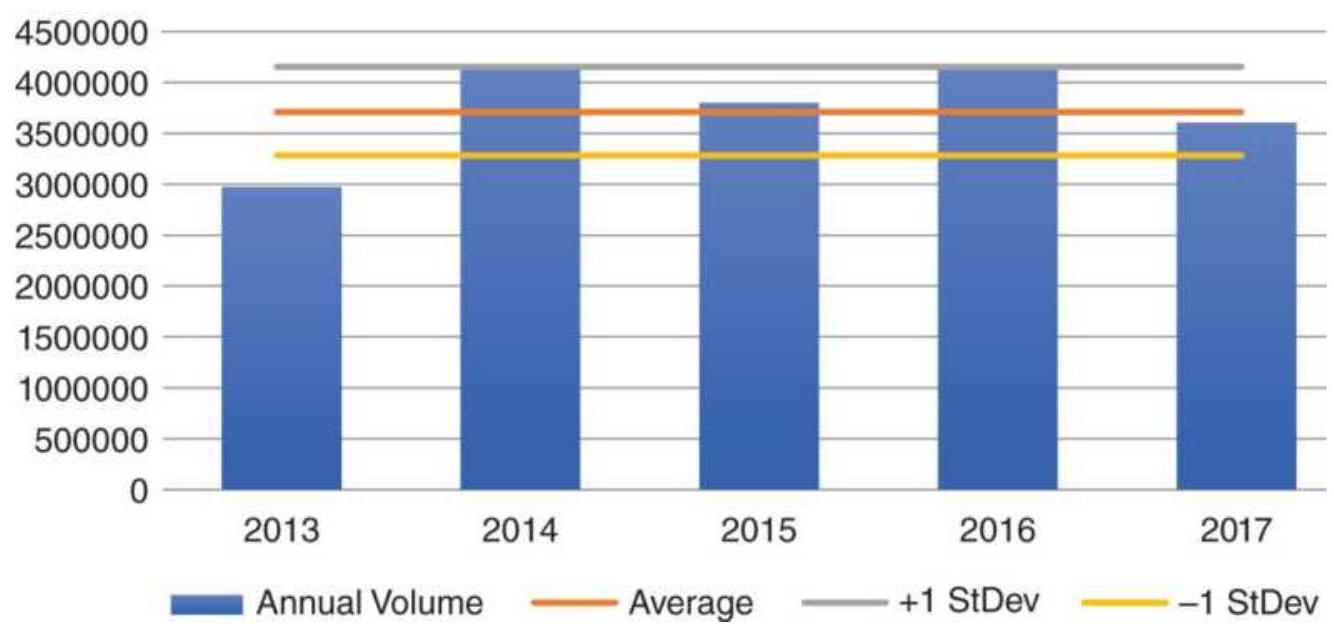

FIGURE 12.1a Average annual volume of Amazon with 1 standard deviation line....

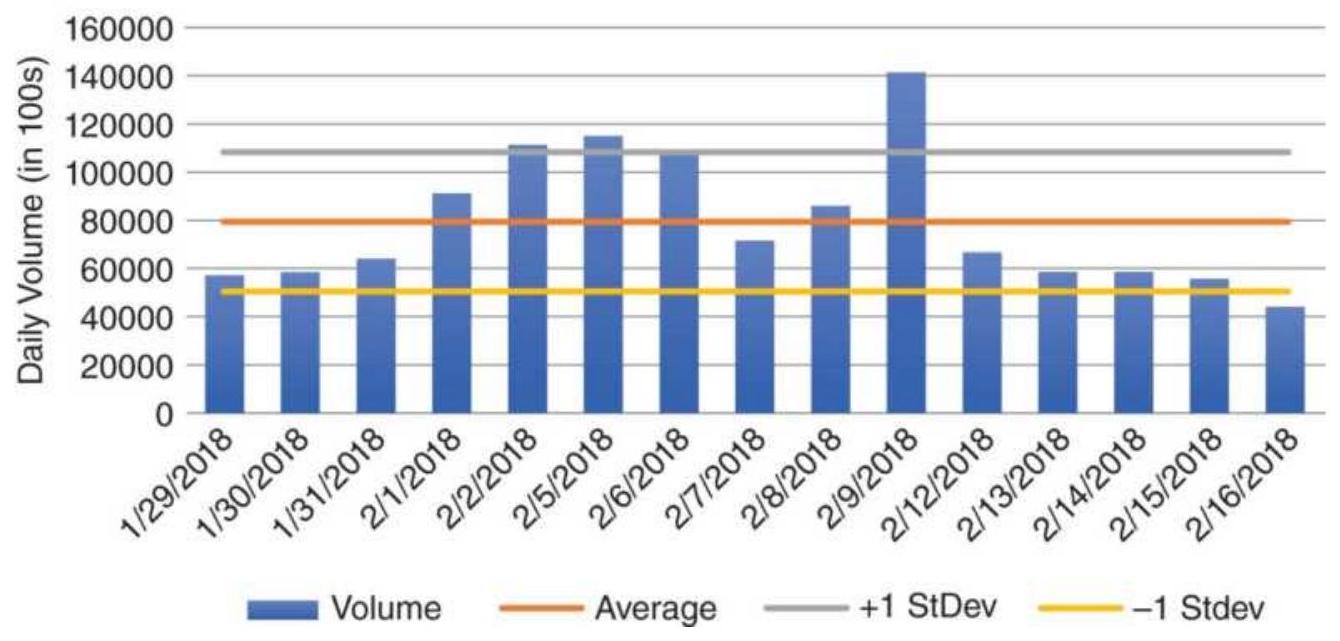

FIGURE 12.1b Amazon average daily volume from January 29 through February 16...

FIGURE 12.2 Tesla price and volume showing numerous spikes on price drops.

FIGURE 12.3 Gold futures with multiple volume spikes, not as extreme or as w...

FIGURE 12.4 Caterpillar (CAT) chart plotting as Equivolume.

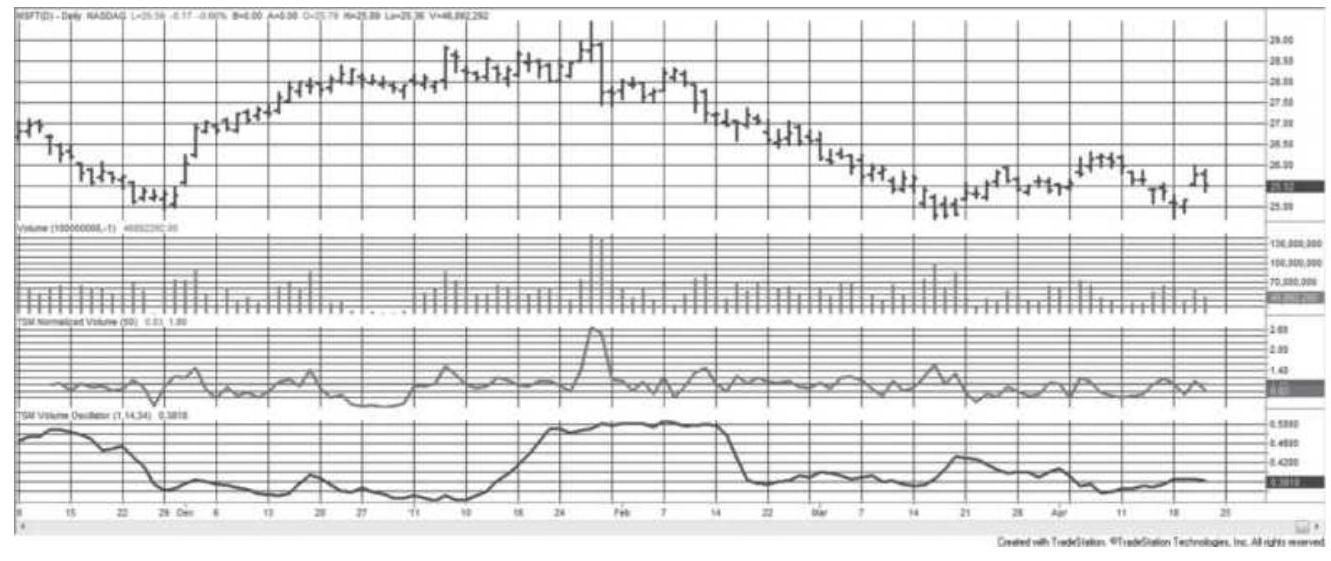

FIGURE 12.5 Microsoft prices (top), volume (second panel), normalized 50-day...

FIGURE 12.6 On-Balance Volume applied to GE from December 2010 through April...

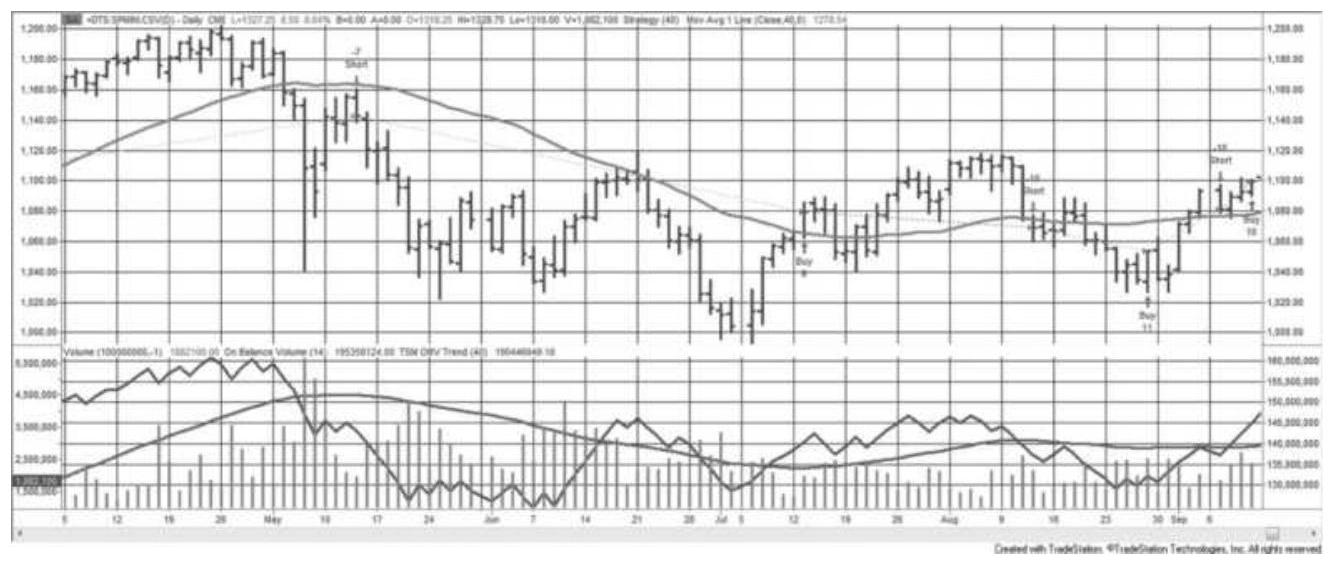

FIGURE 12.7 S\&P prices are shown with the volume, OBV, and a 40 -day trend of...

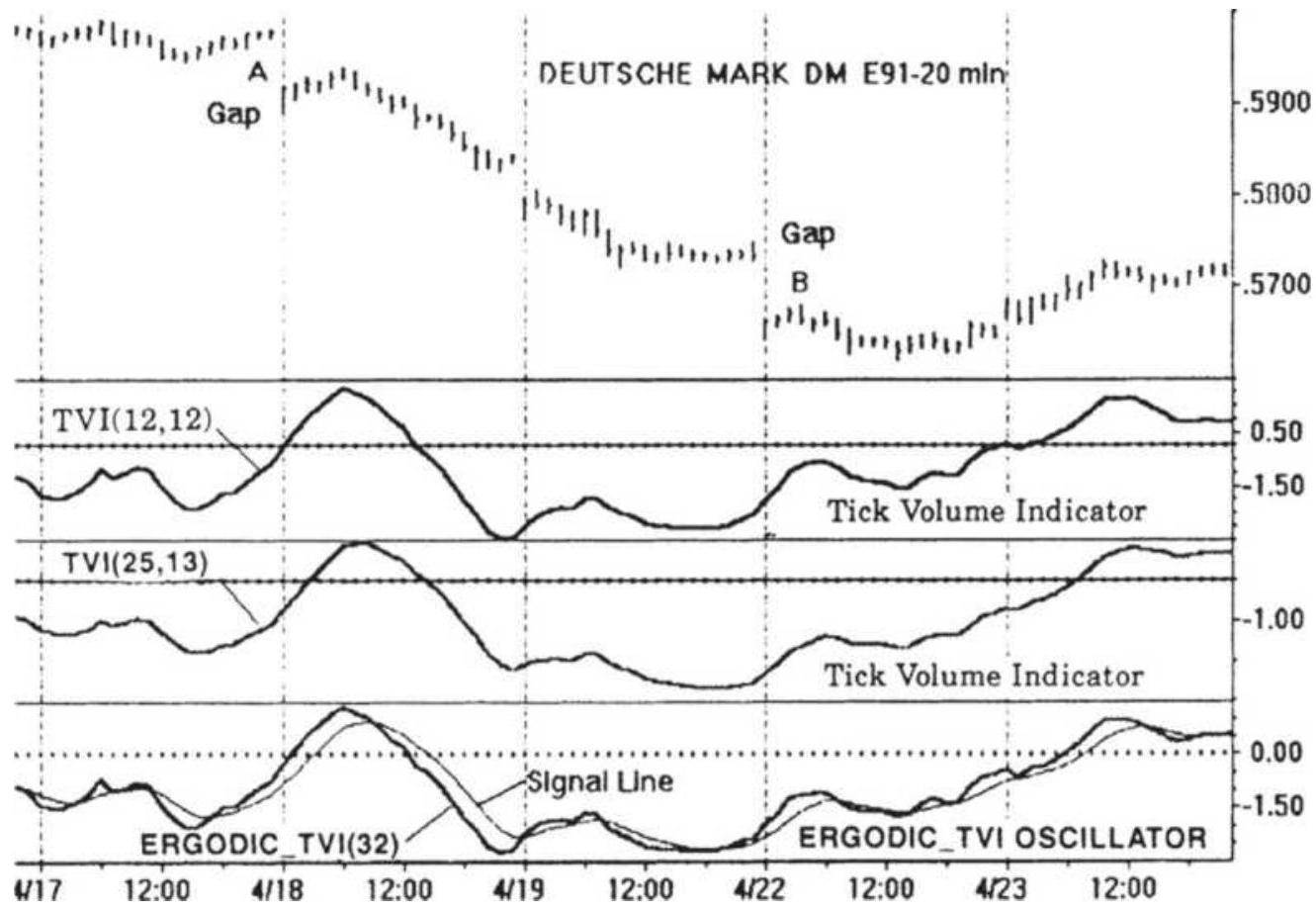

FIGURE 12.8 Blau's Tick Volume Indicator.

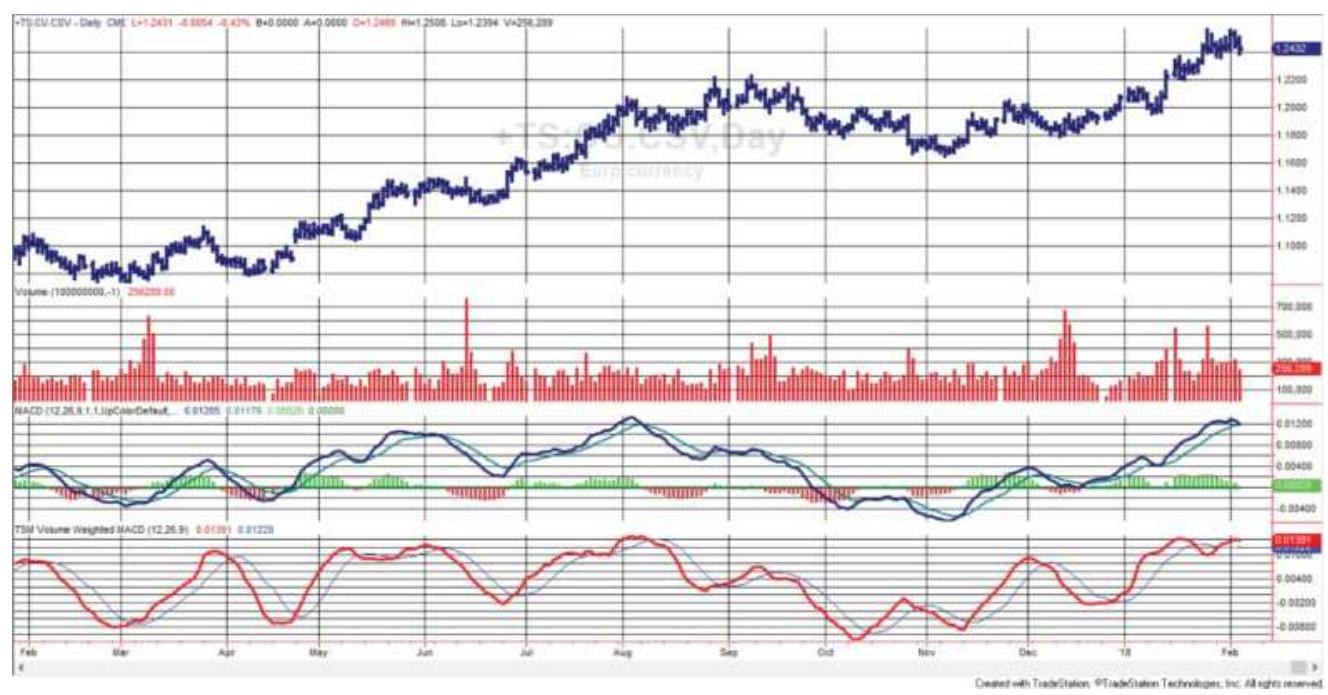

FIGURE 12.9 Volume-weighted MACD applied to euro currency futures.

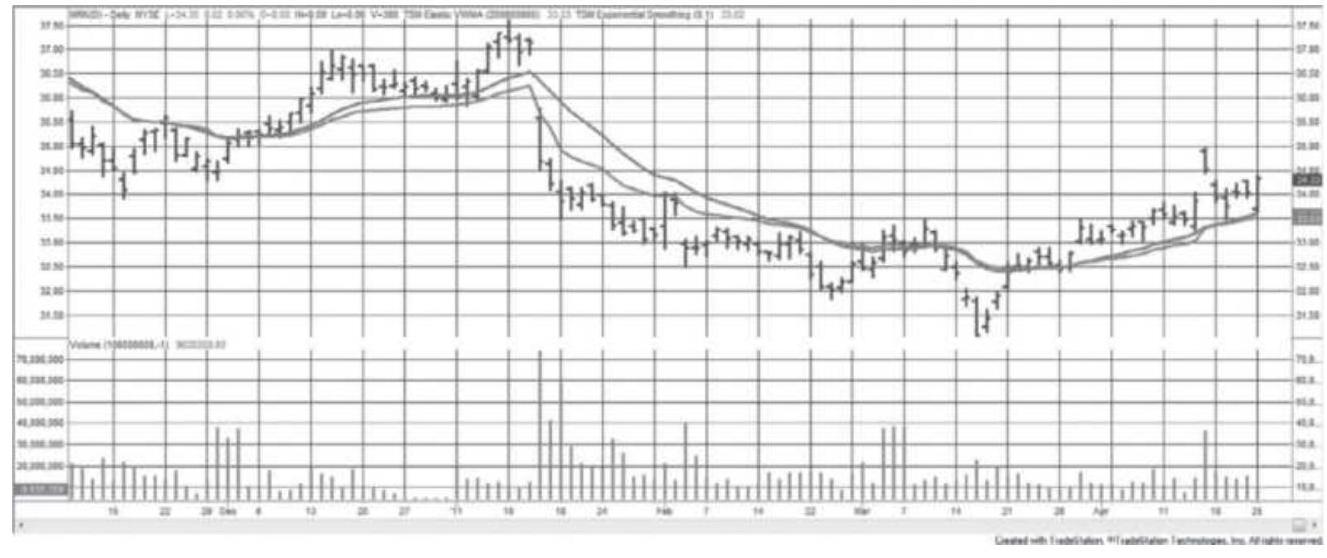

FIGURE 12.10 Elastic Volume-Weighted Moving Average (tracking closer to pric...

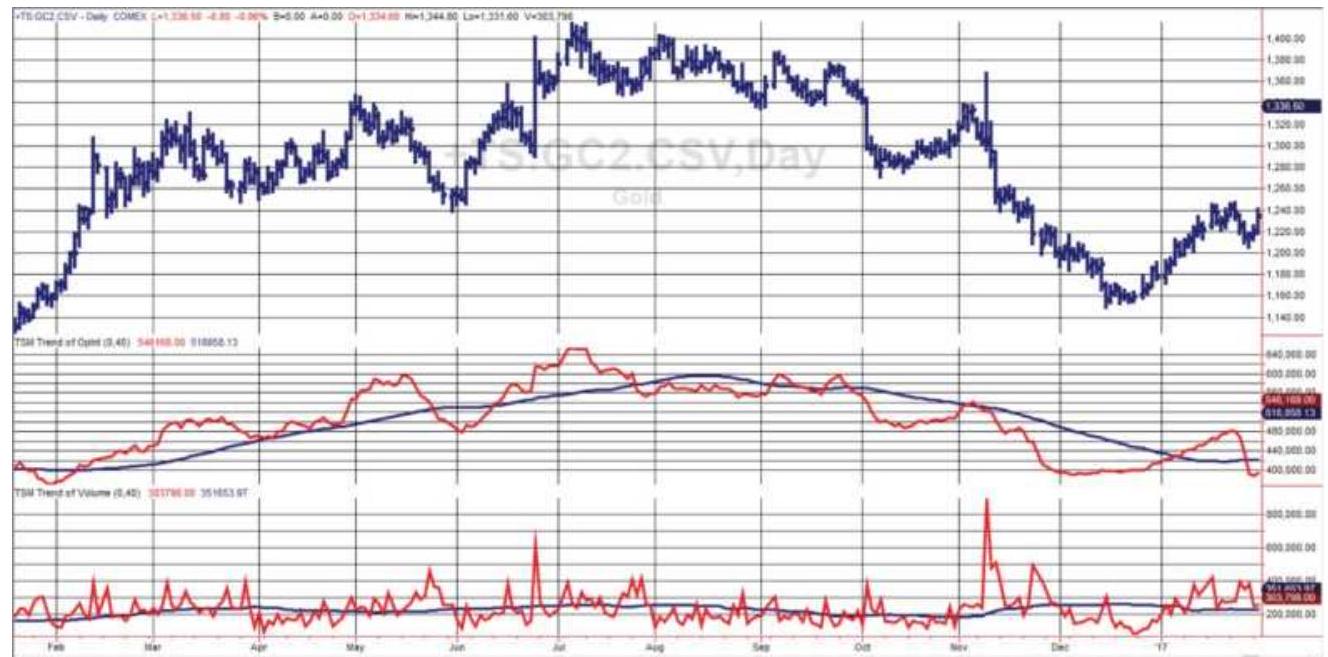

FIGURE 12.11 Gold futures with open interest (center) and volume (bottom), e...

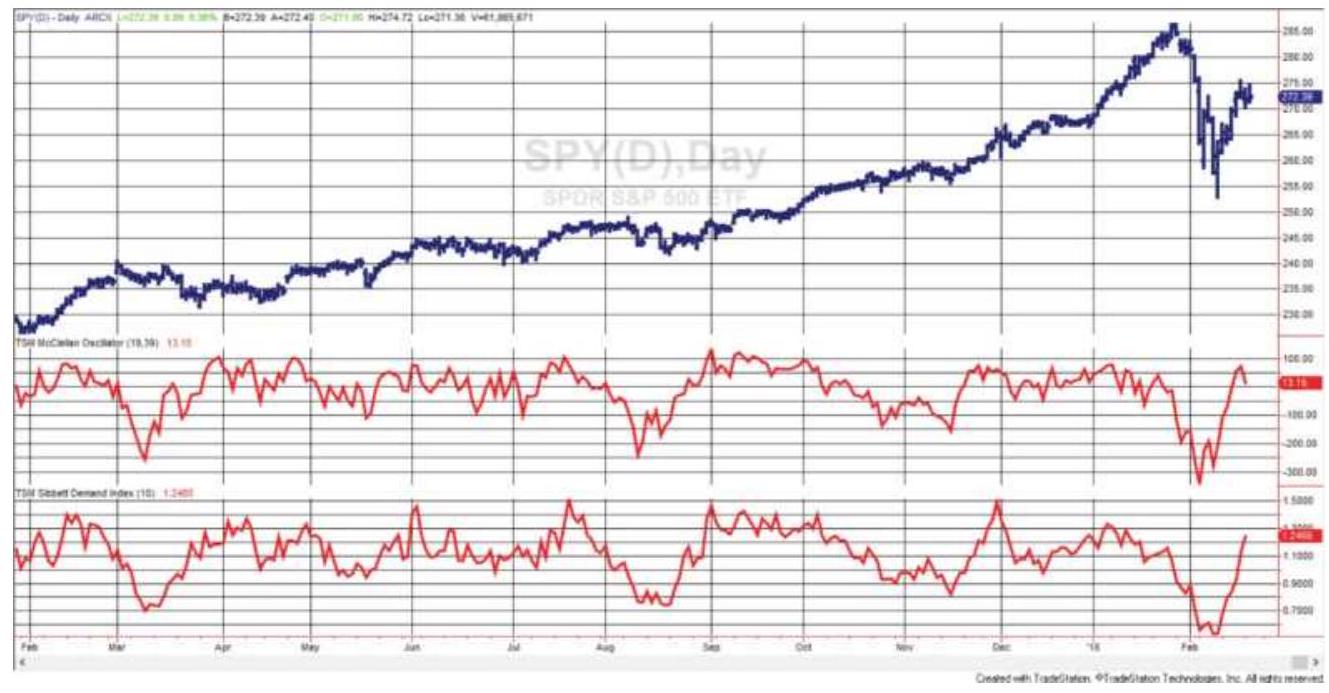

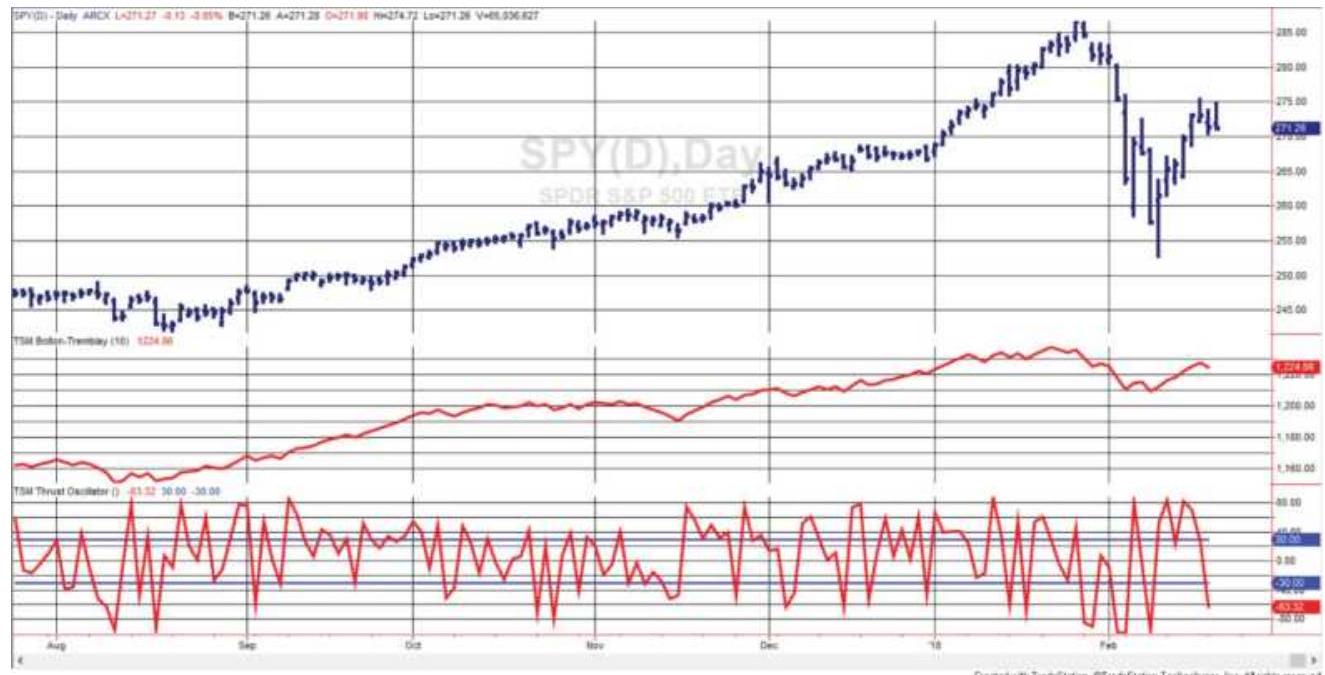

FIGURE 12.12 SPY prices ending in February 2018 with the McClellan Oscillato...

FIGURE 12.13 SPY prices with BoltonTremblay Index (center) and Thrust Oscil... FIGURE 12.14 The Arms Index (TRIN) shown with SPY during 2017.

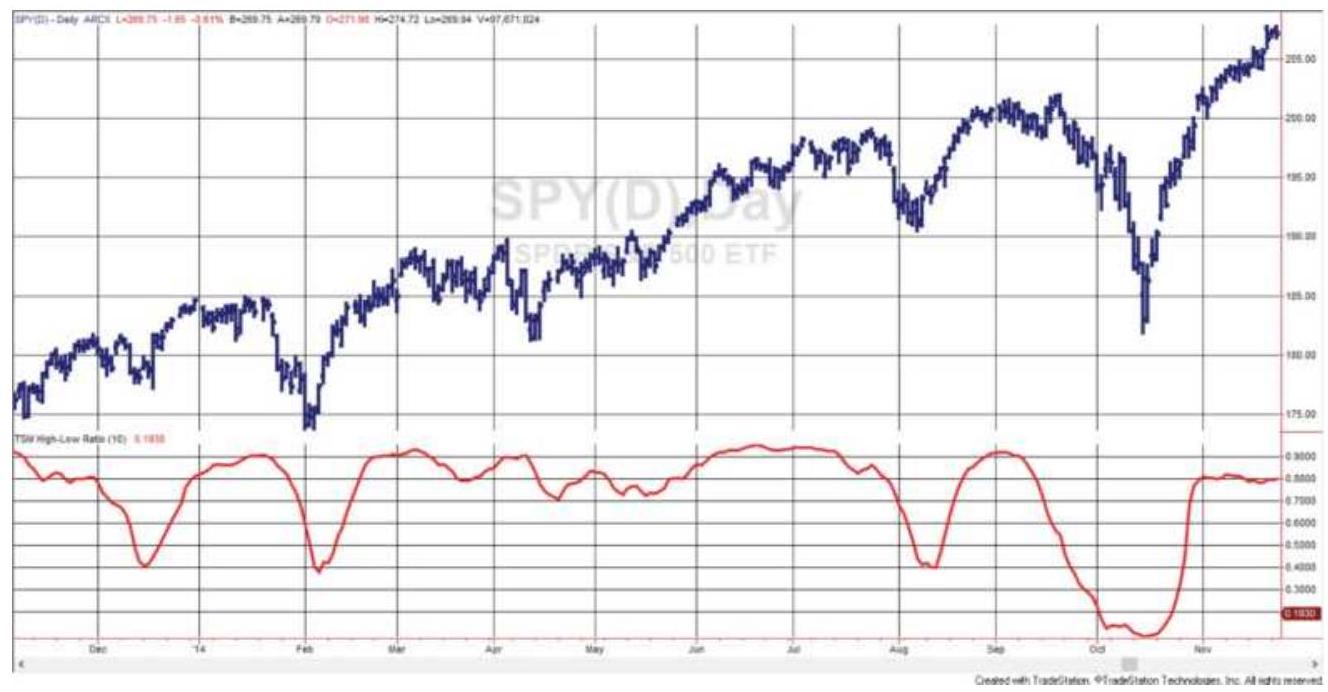

FIGURE 12.15 High-Low Ratio (bottom) shown with SPY (top).

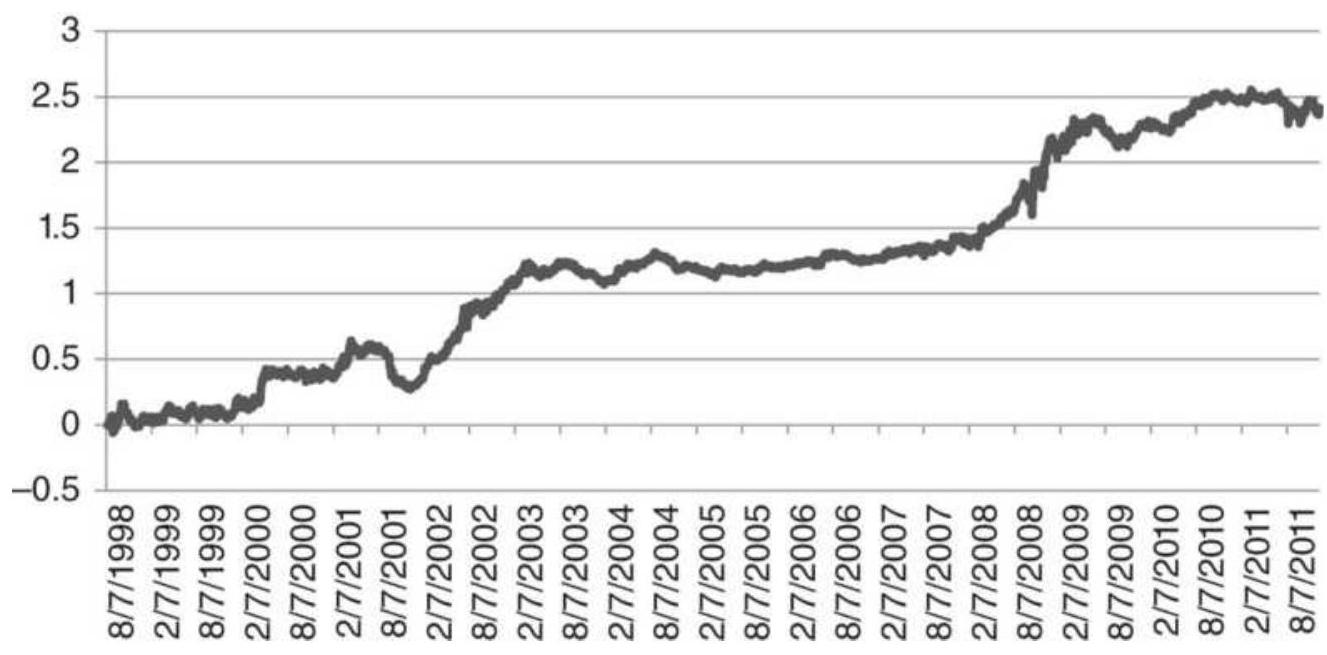

FIGURE 12.16 Total PL using the AD ratio as a countertrend signal applied to...

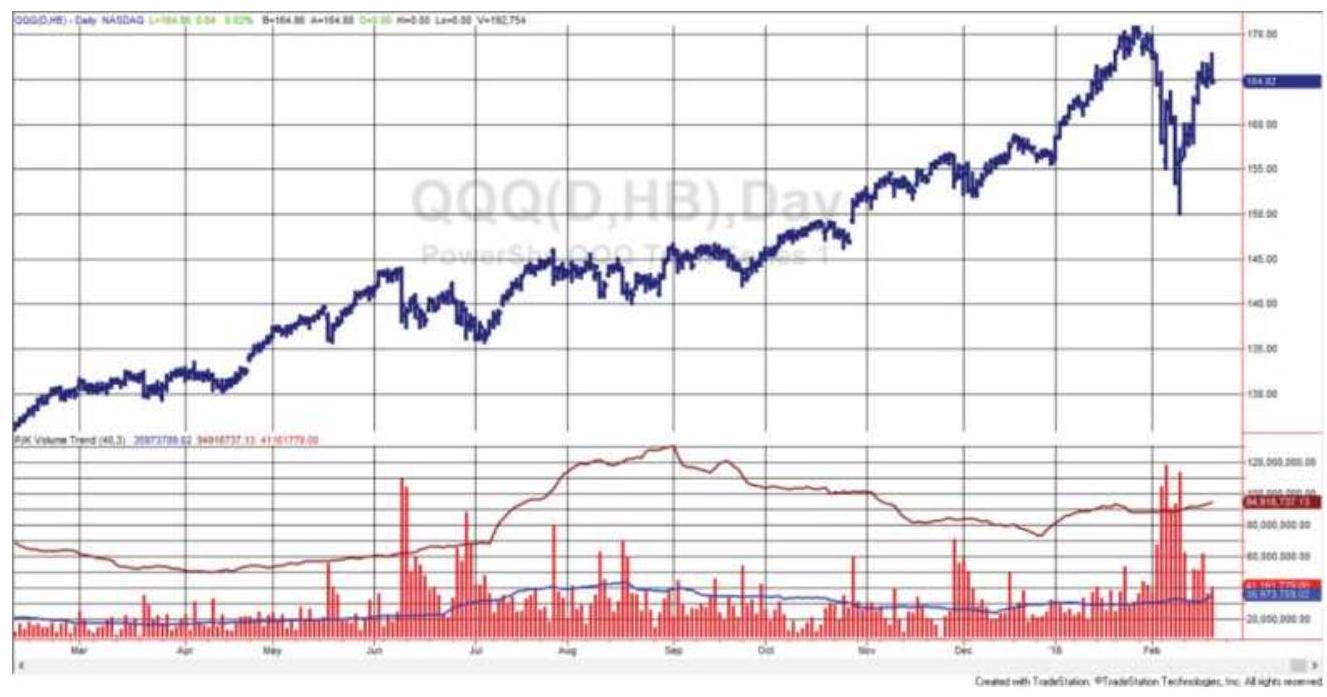

FIGURE 12.17 QQQ prices (top) with volume

(bottom), along with the lagged av...

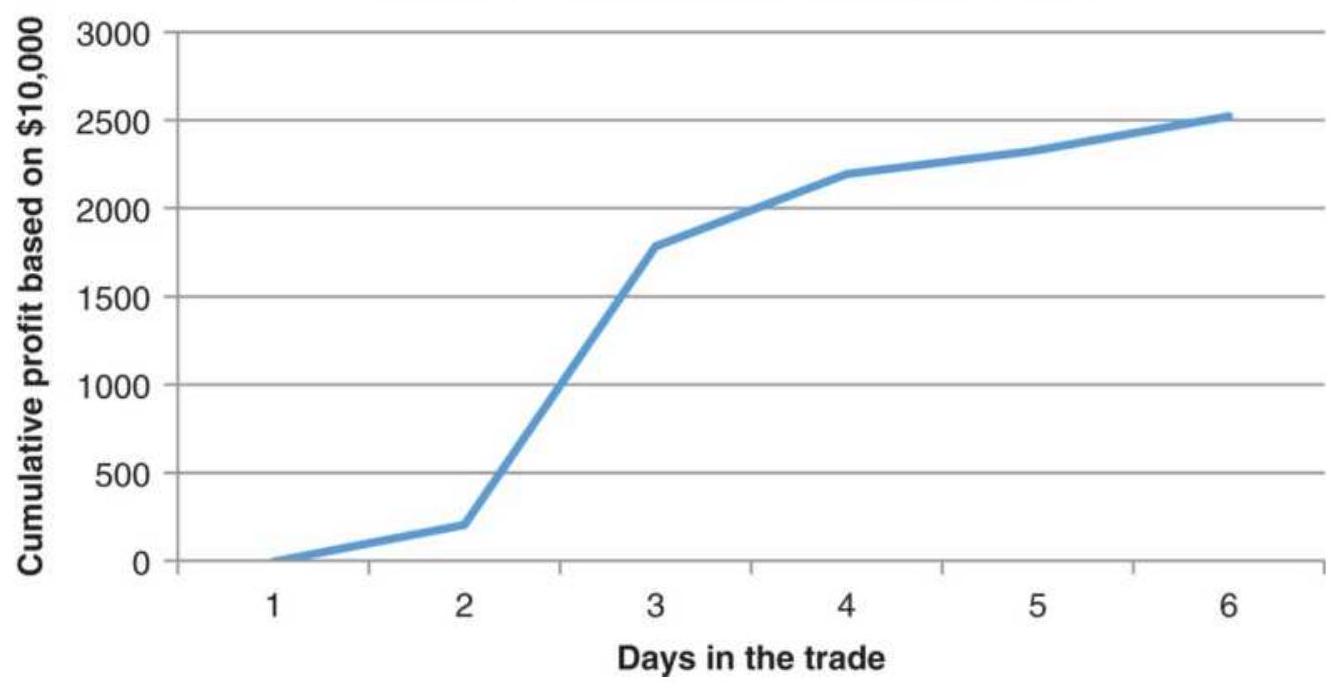

FIGURE 12.18 Average return per day for 275 stocks using the volume spike ru...

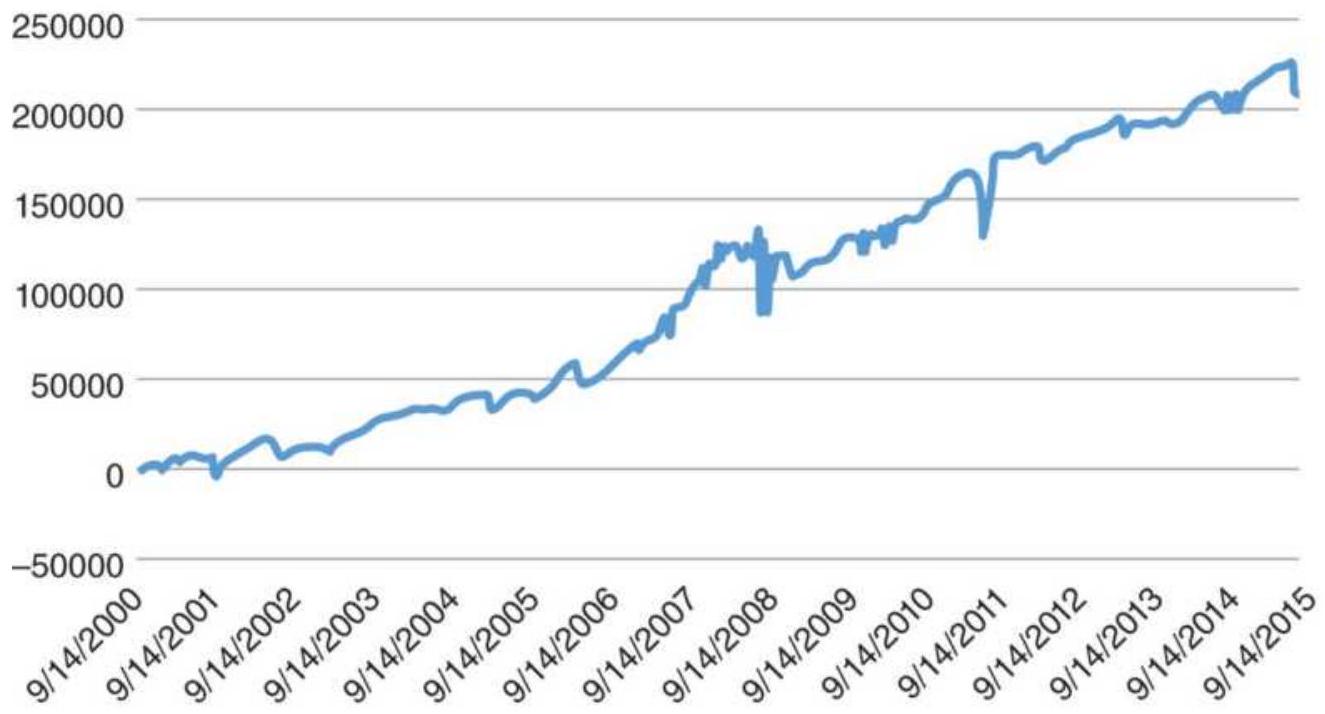

FIGURE 12.19 Total returns of all trades taken using the volume spike rules....

FIGURE 12.20 Result of trades chosen randomly using the volume spike rules....

FIGURE 12.21 Trading volatility spikes in SPY that come at the same time as ...

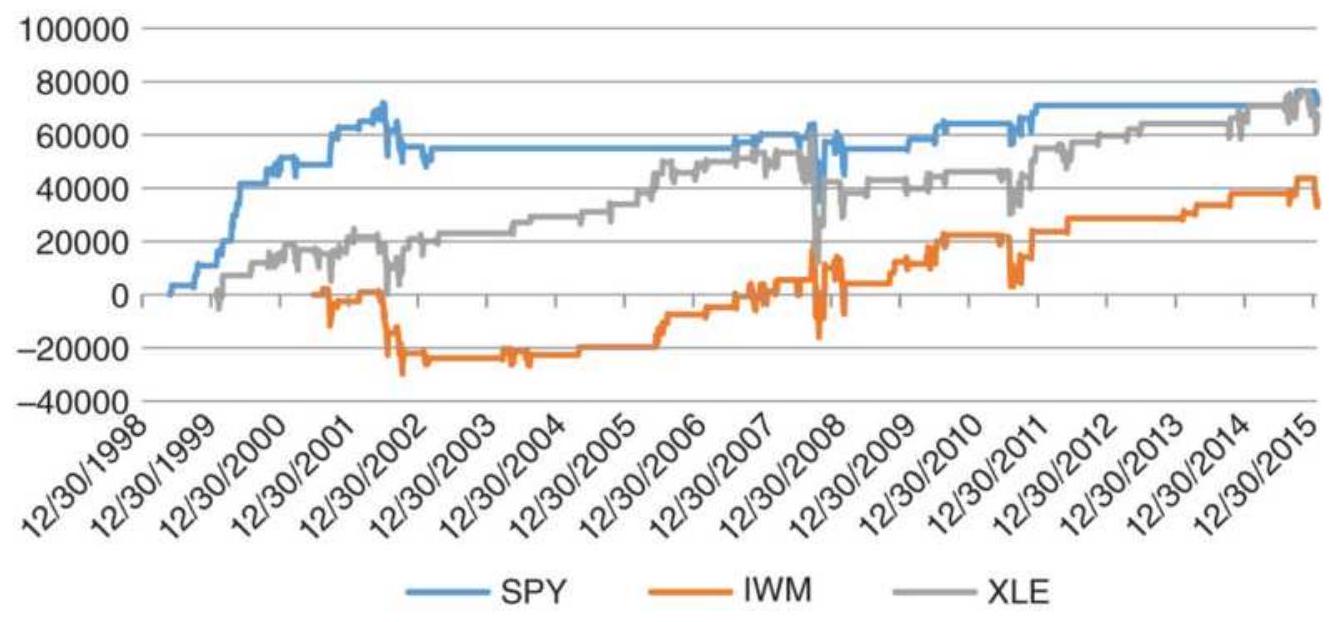

FIGURE 12.22 Results of the Pseudo-Volume Strategy for SPY, IWM, and XLE.

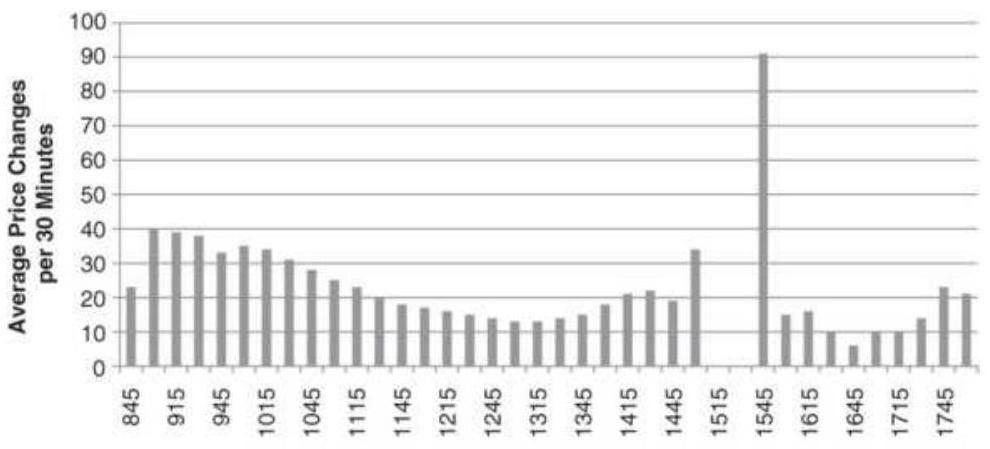

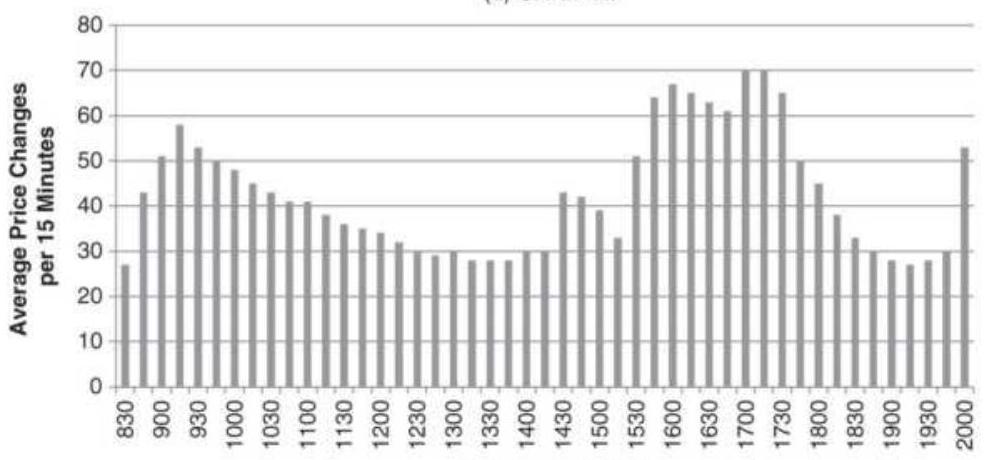

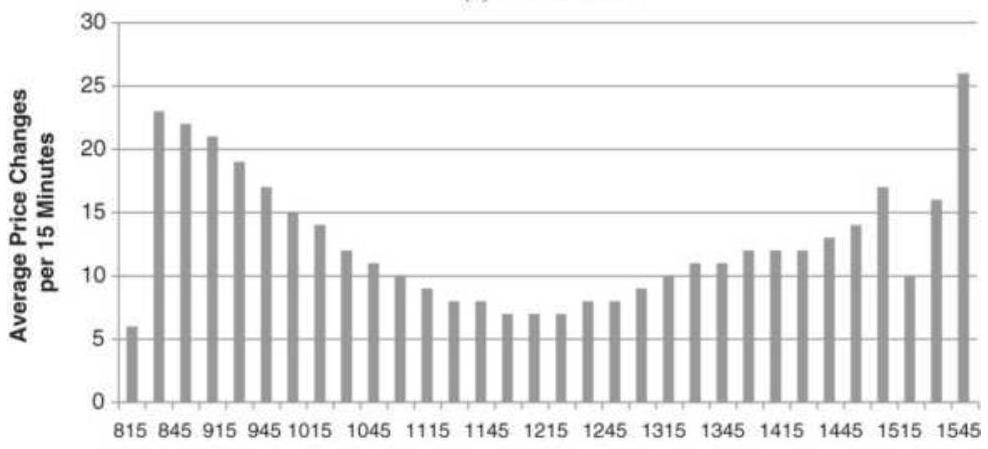

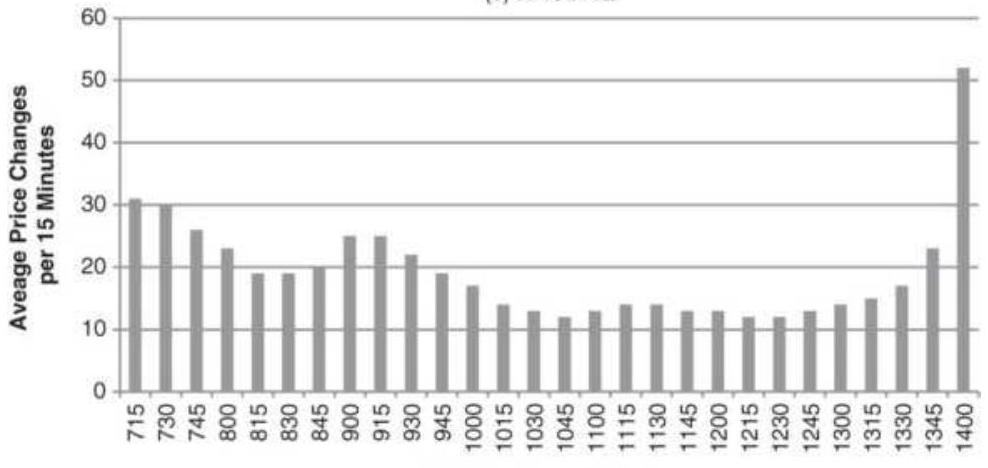

FIGURE 12.23 Intraday, 15-minute tick volume patterns, 1995-2005. (a) Crude ...

Chapter 13

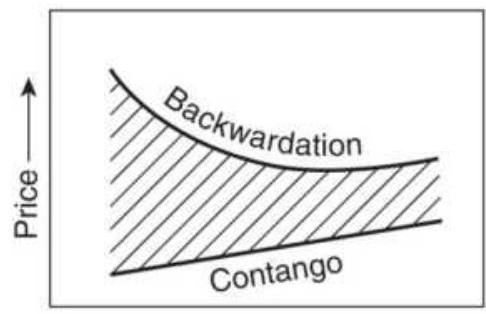

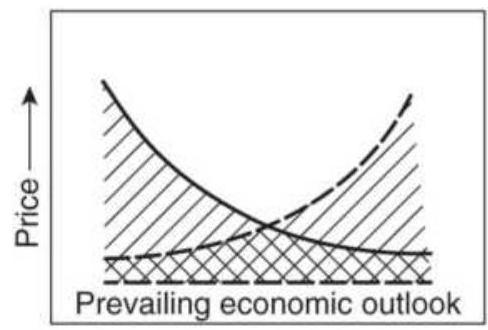

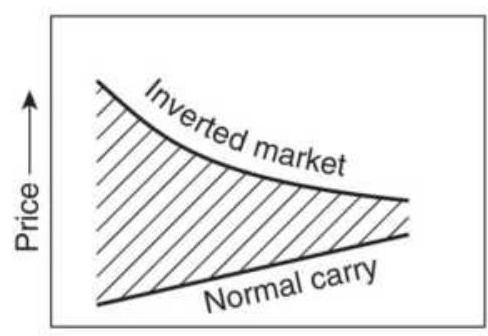

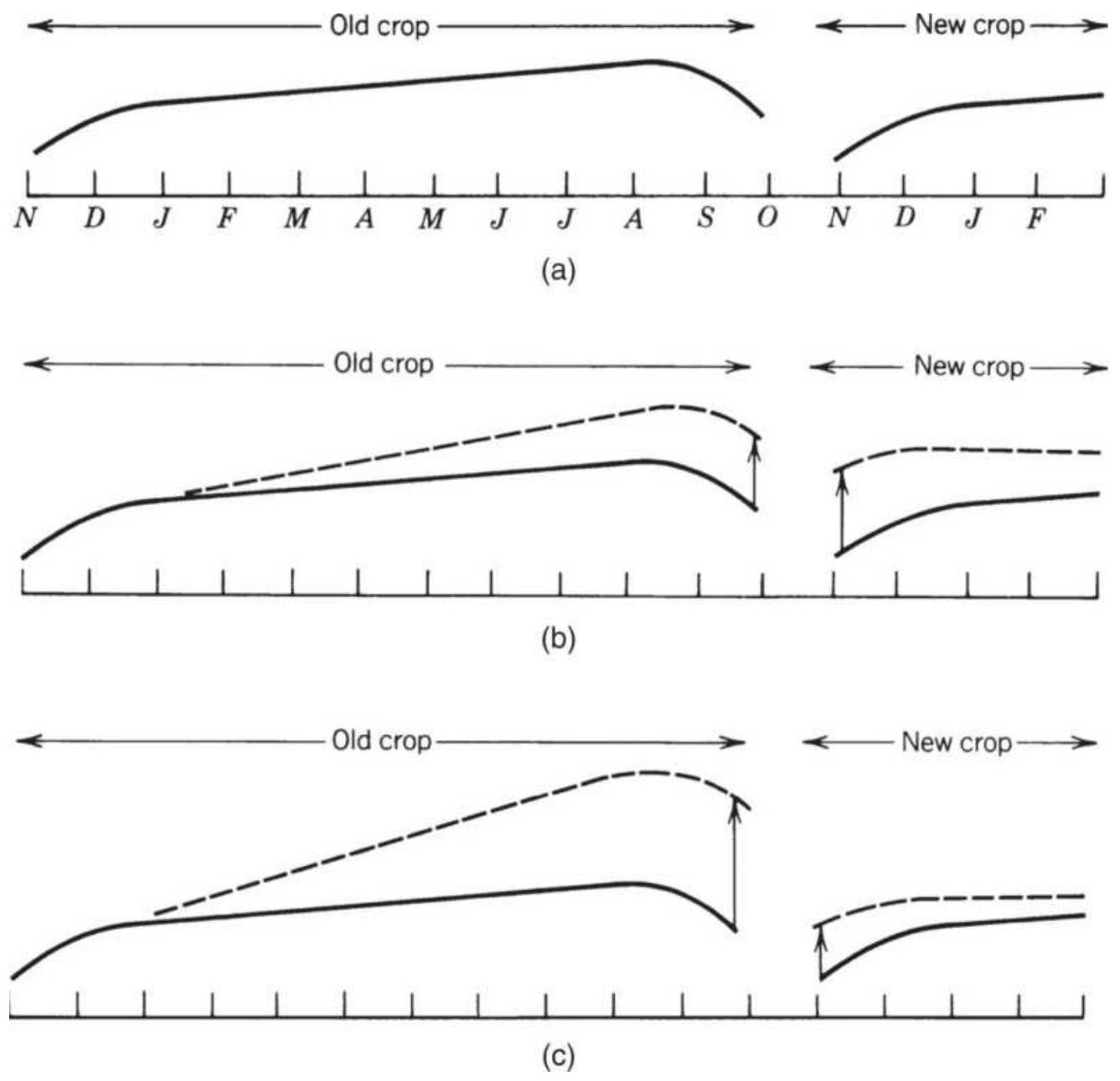

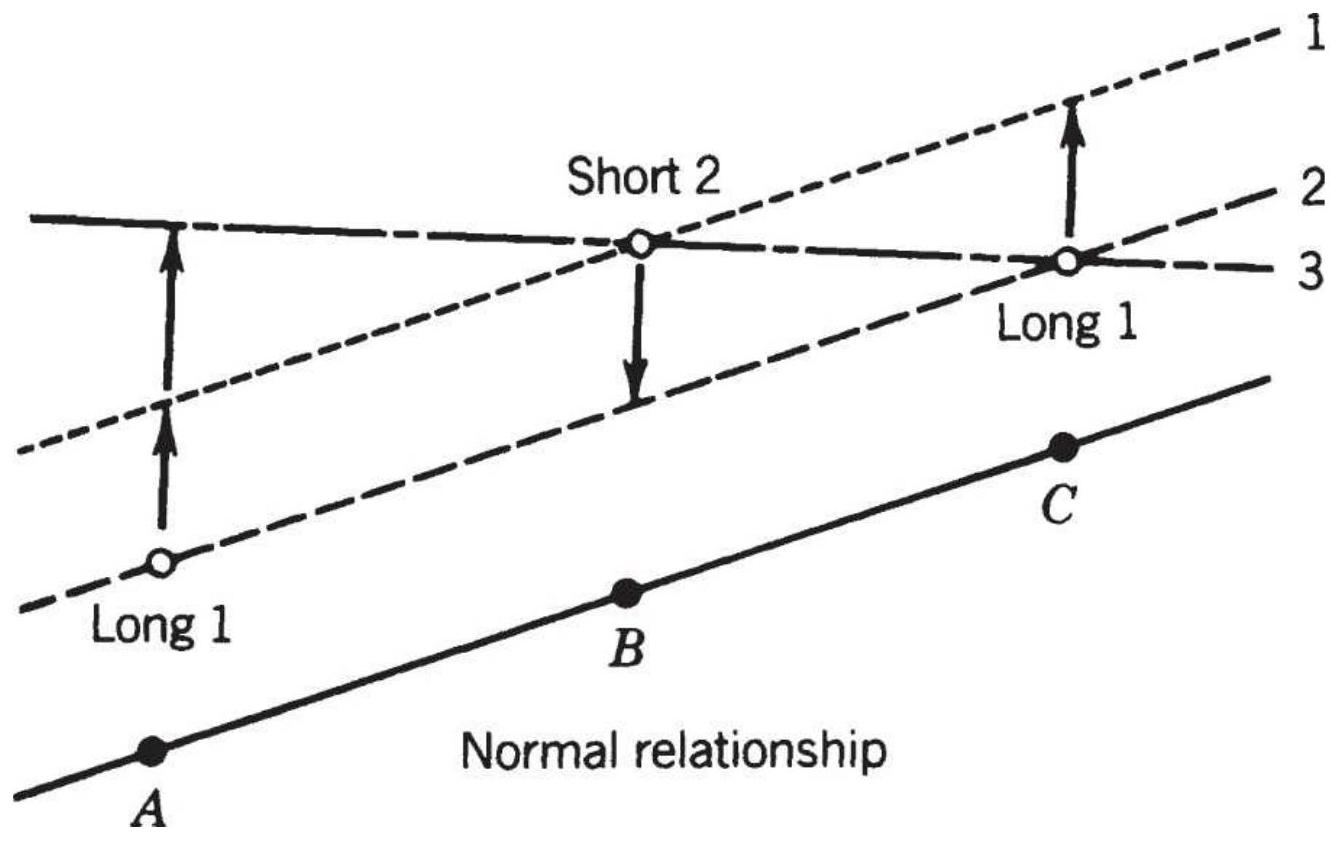

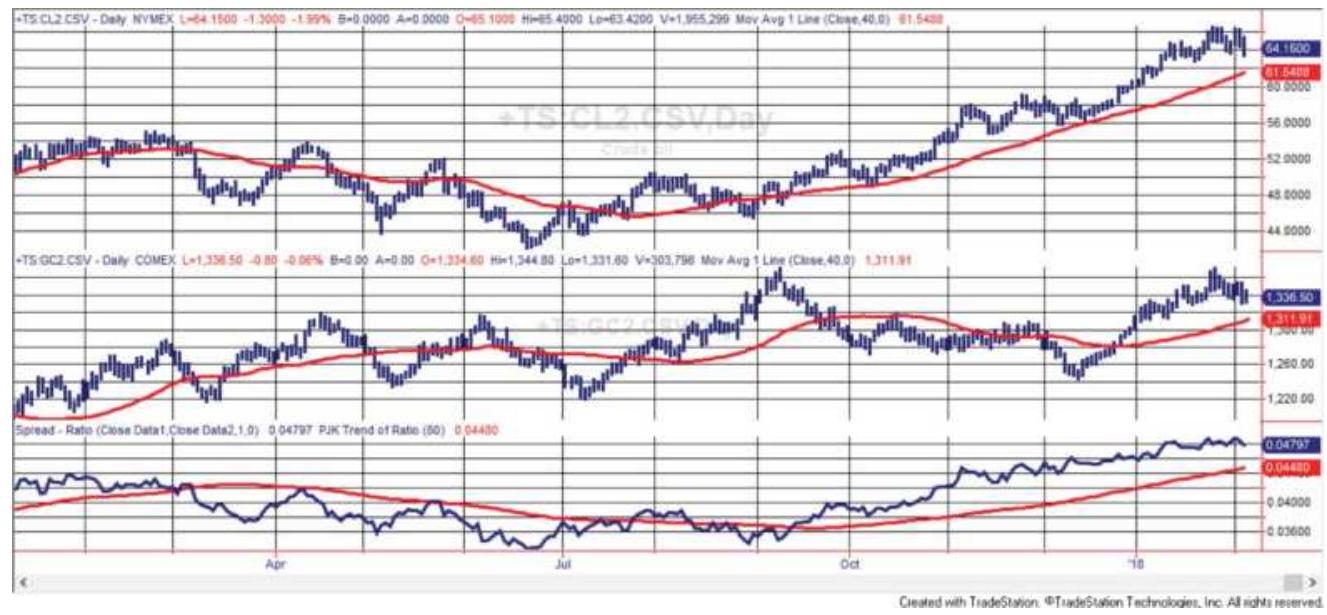

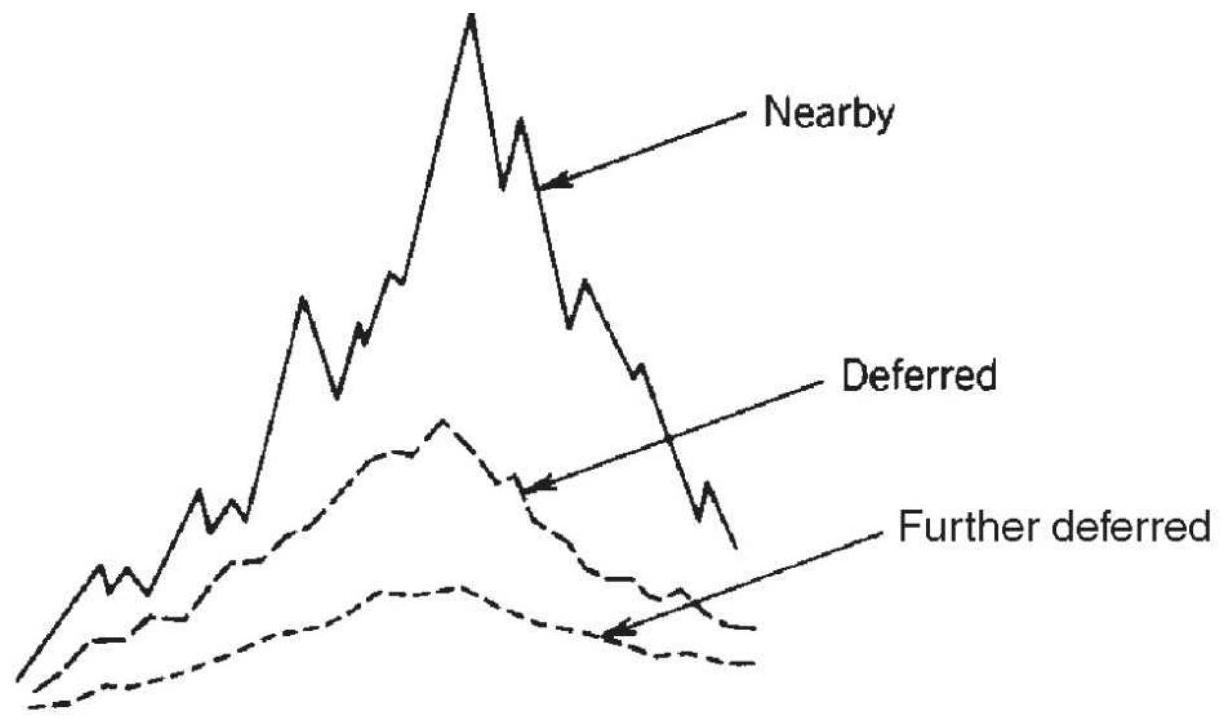

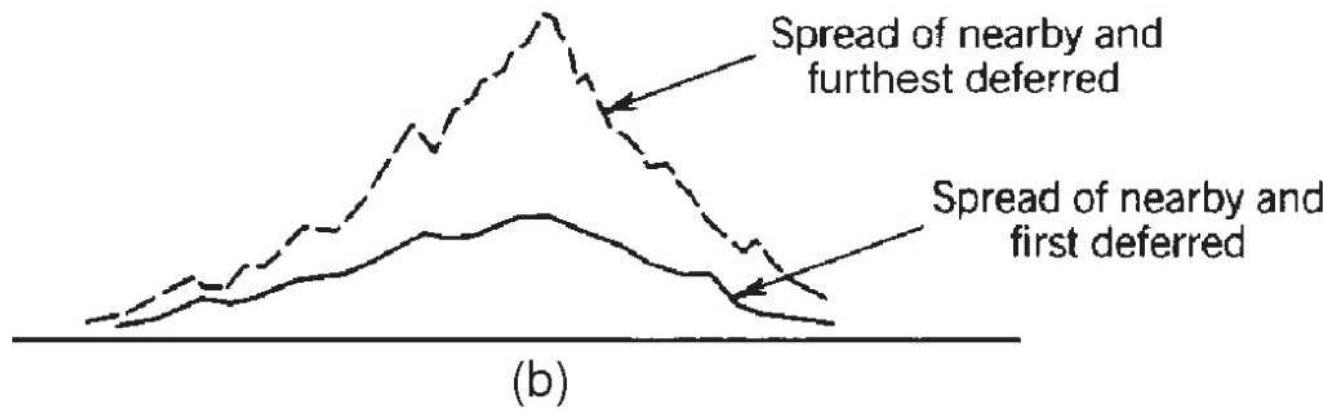

FIGURE 13.1 Interdelivery price relationship and terminology. (a) Precious m...

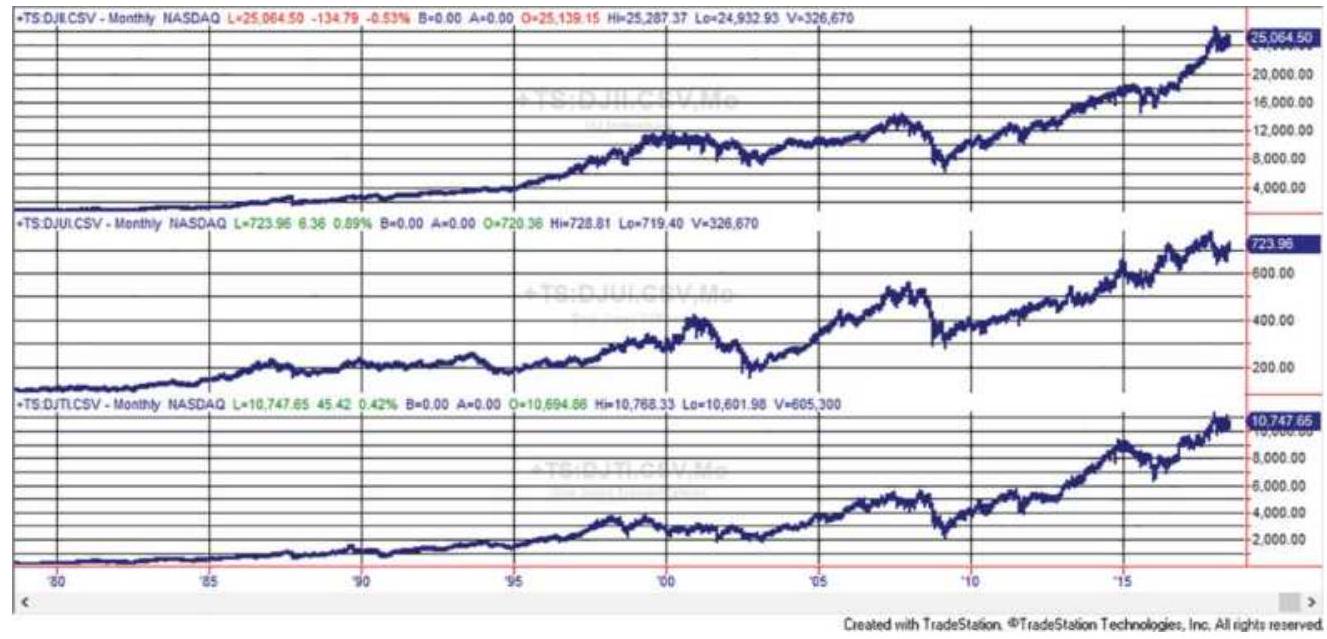

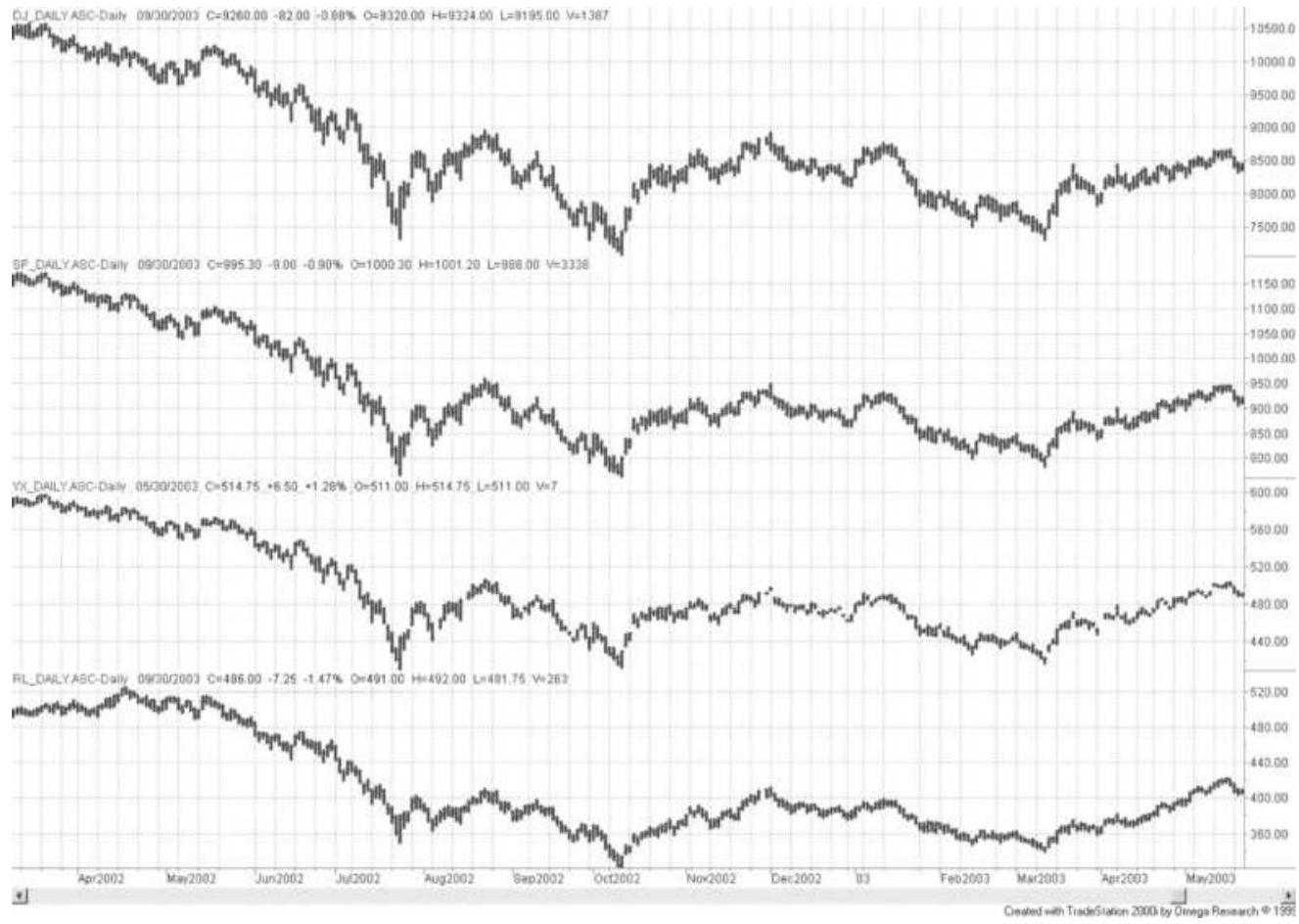

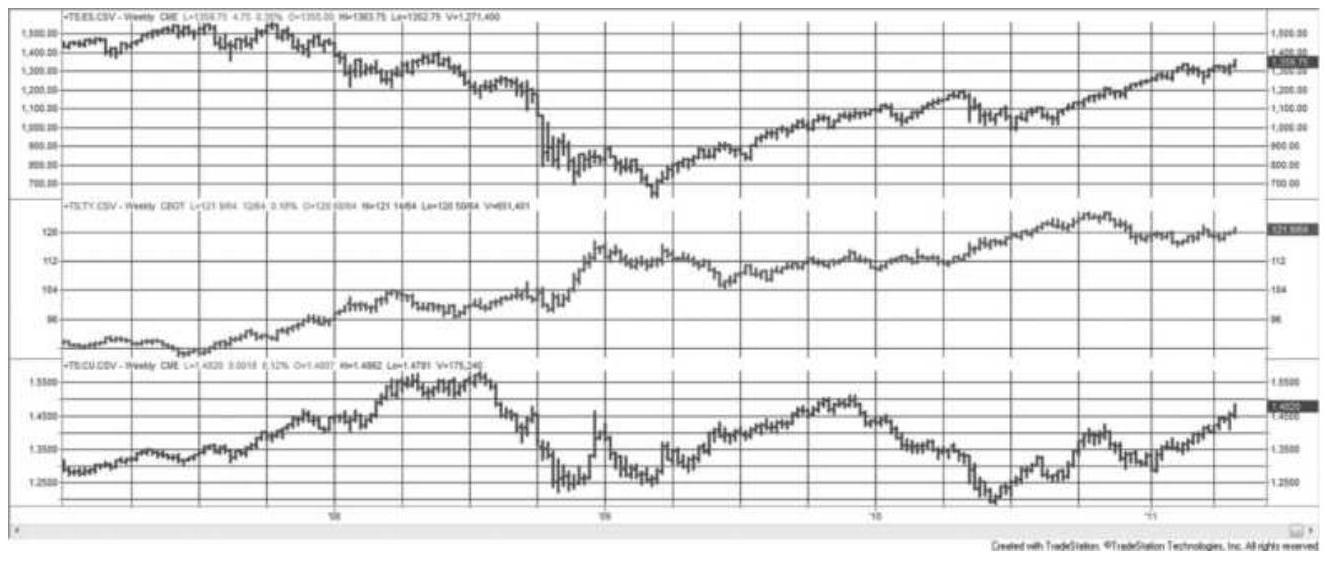

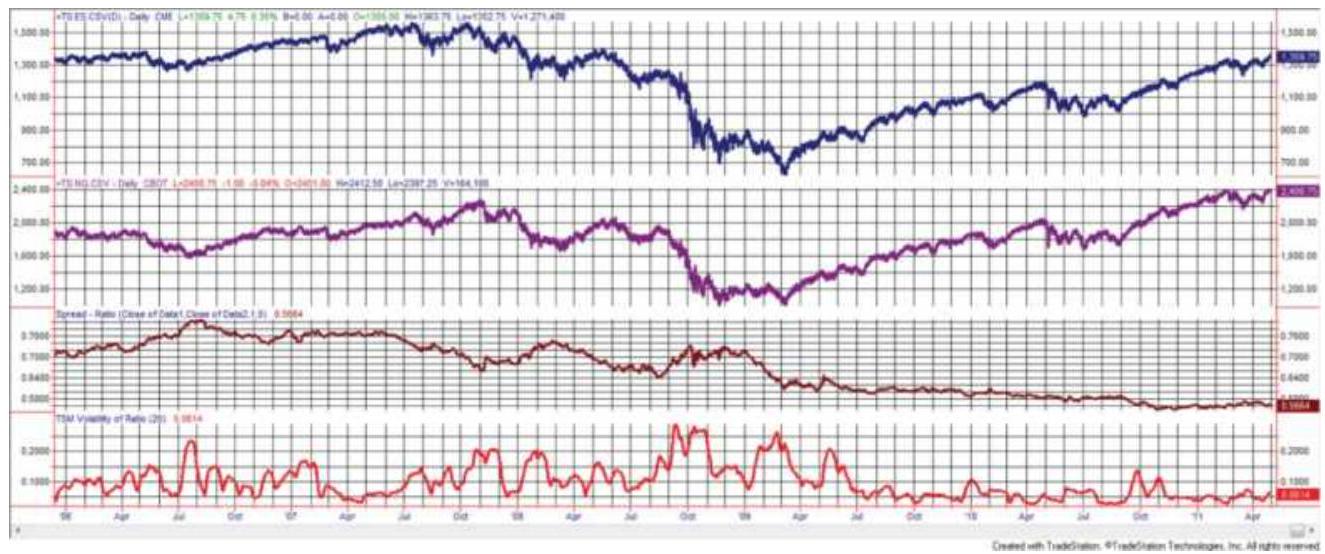

FIGURE 13.2 Major Index futures markets. From top to bottom: Dow Industrials...

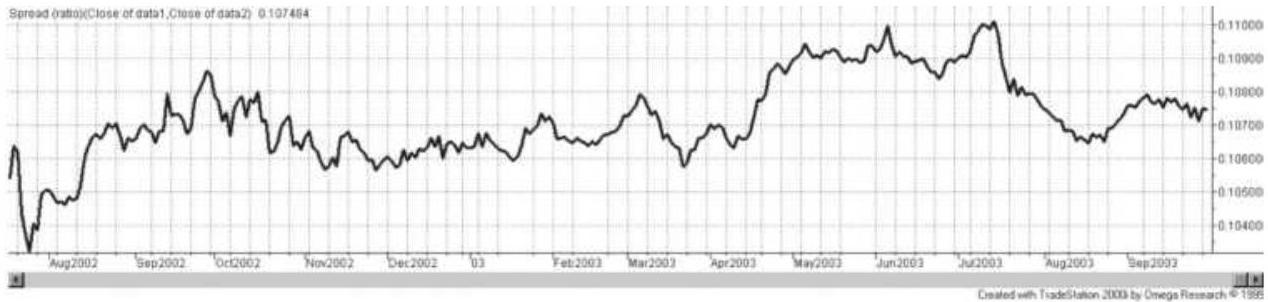

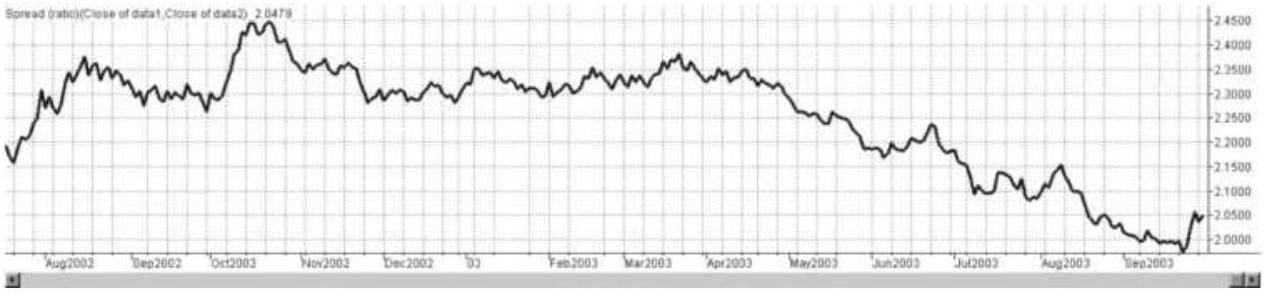

FIGURE 13.3 (a) Ratio of S\&P 500 to Dow Industrial. (b) Ratio of S...

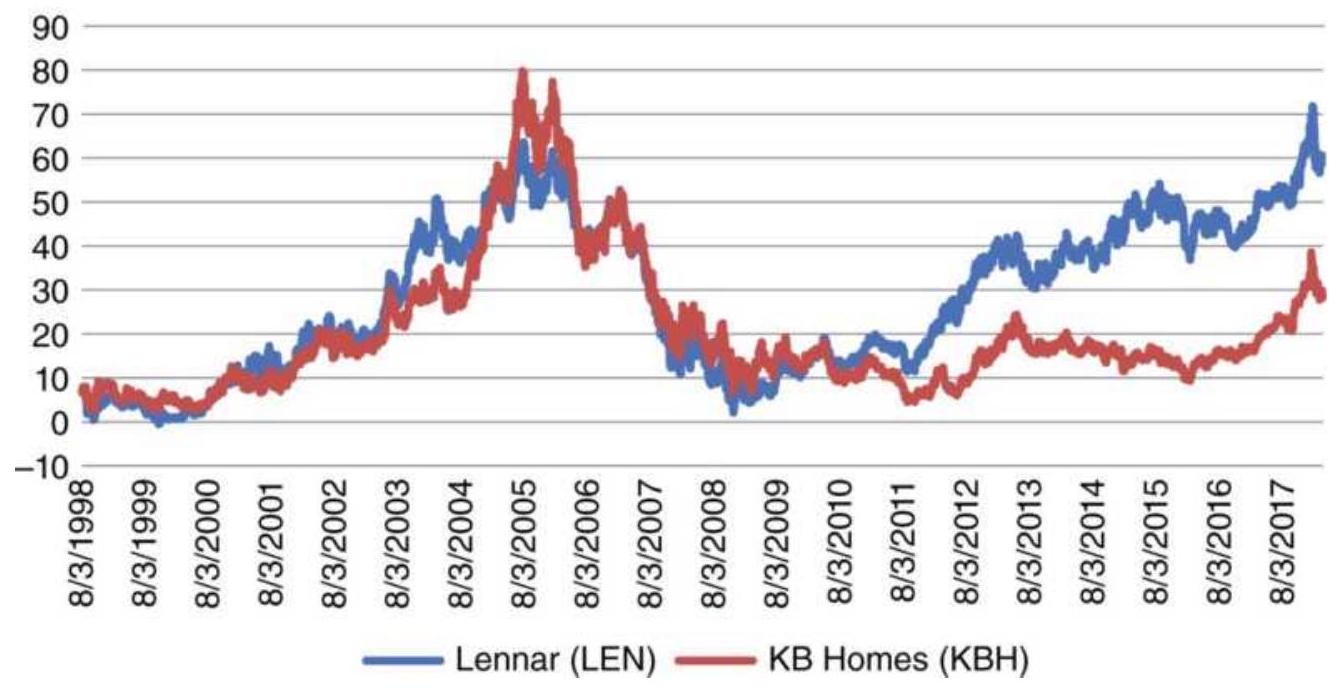

FIGURE 13.4 Lennar (LEN) and KB Home (KBH) prices.

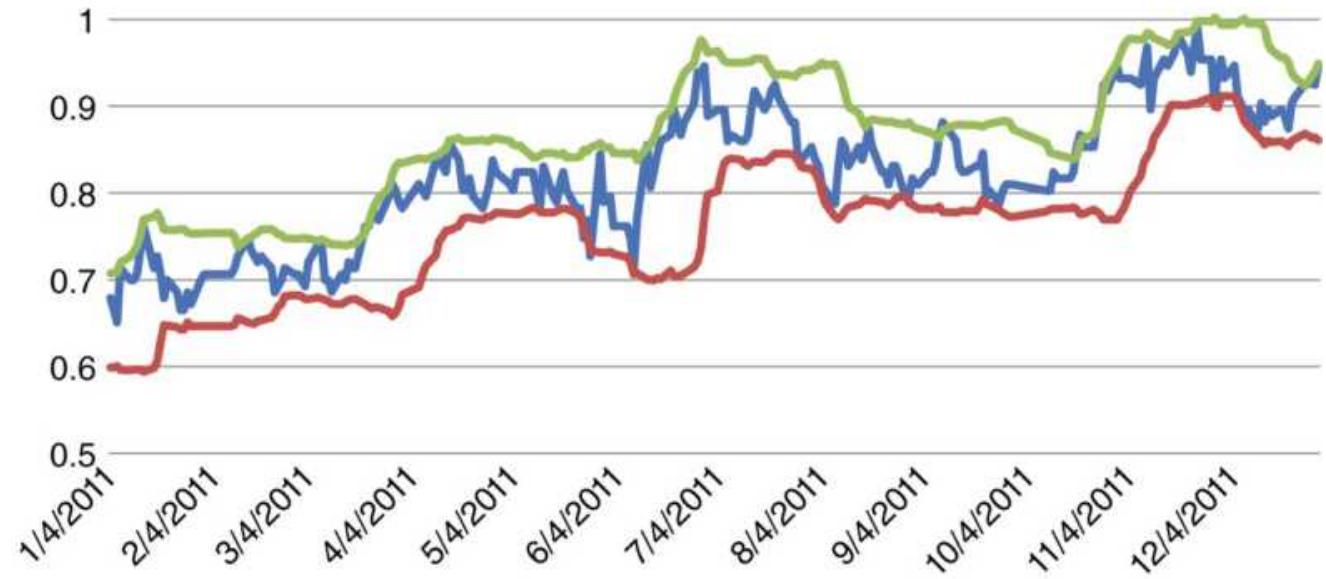

FIGURE 13.5 Rolling correlations of Lennar and \(K B\) Home returns.

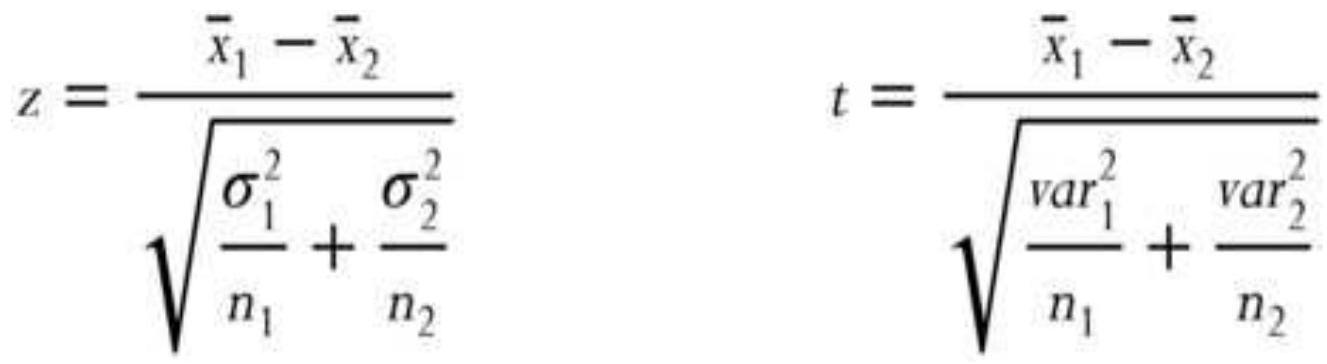

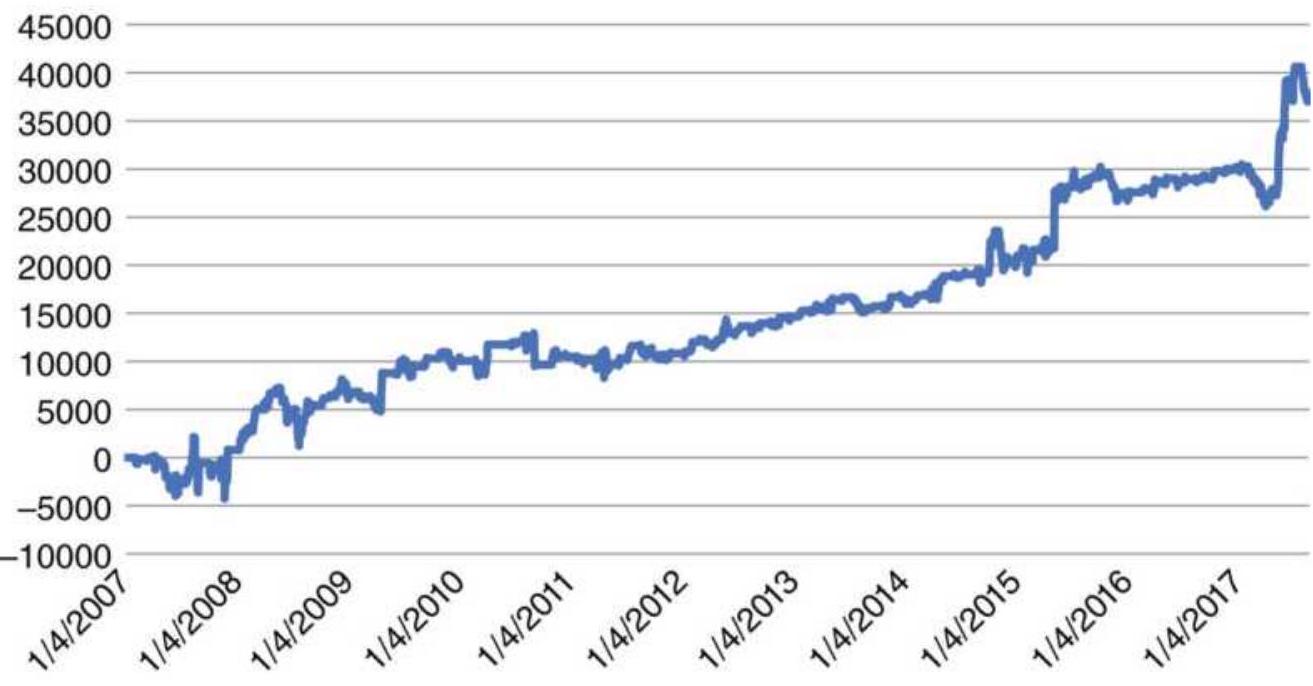

FIGURE 13.6 Price histories of ICBC and BOC from 2007, traded in Shanghai.

FIGURE 13.7 Example of standard deviation bands around the price difference ...

FIGURE 13.8 Total profits using the standard deviation of price difference \(f\)...

FIGURE 13.9 The 6-day raw stochastic of INTC, MU, and TXN prices.

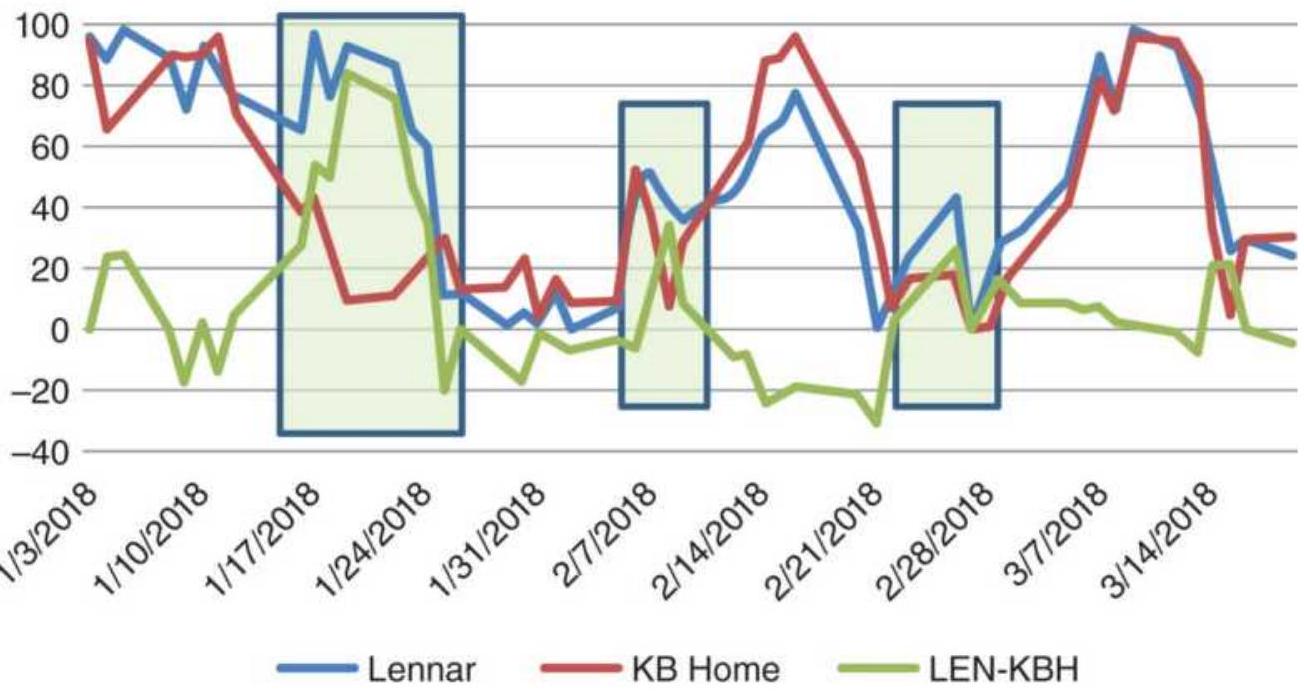

FIGURE 13.10 Total profit from the pair LENKBH using the momentum differenc...

FIGURE 13.11 Stress indicator for LEN-KBH using momentum differences as inpu...

FIGURE 13.12 Gold futures (top) and Barrick Gold (ABX) from October 2016 thr...

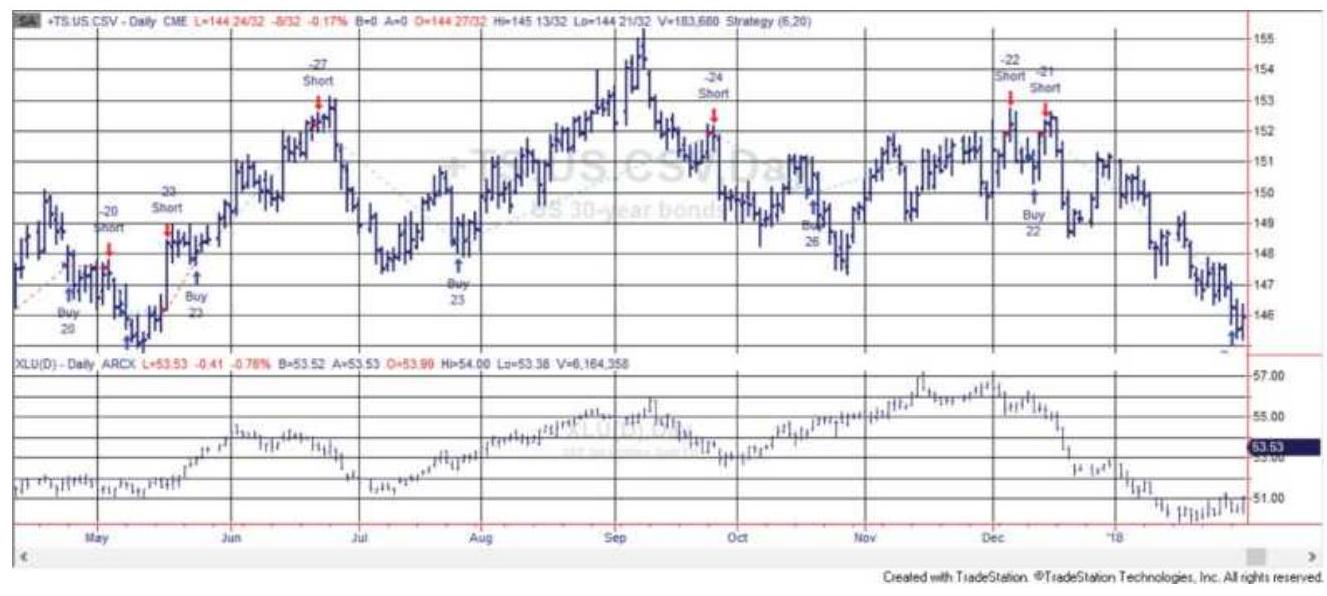

FIGURE 13.13 Trading signals for the bondutilities arbitrage strategy, May ...

FIGURE 13.14 Intercrop spreads. (a) Normal carrying charge relationship. (b)...

FIGURE 13.15 Delivery month distortions in the old crop, making a butterfly...

\section*{FIGURE 13.16 Corrections to interdelivery} patterns.

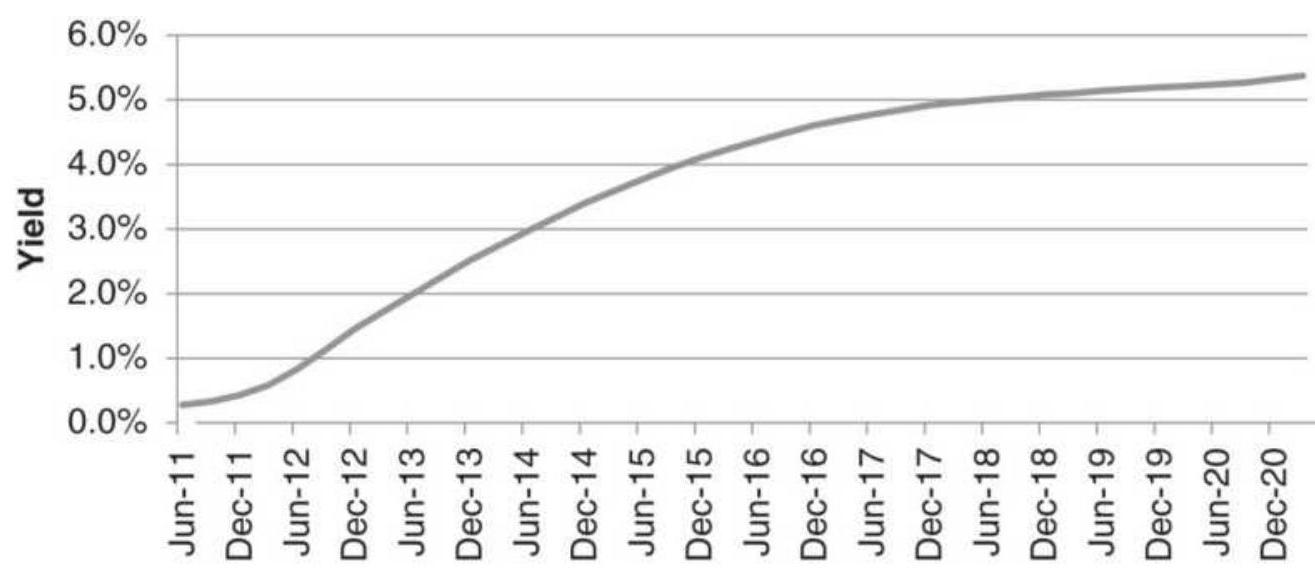

\section*{FIGURE 13.17 Eurodollar term structure of futures prices.}

FIGURE 13.18 Implied yield from gold futures, April 5, 2011.

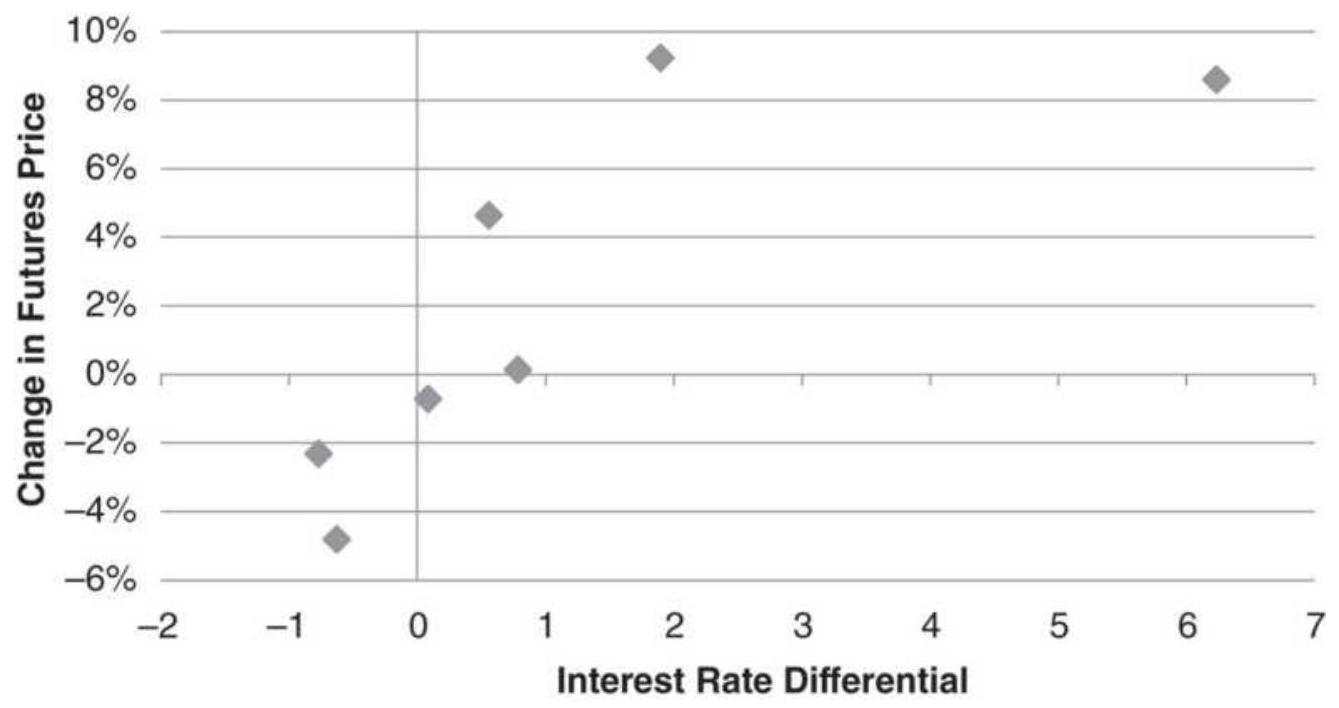

FIGURE 13.19 Interest rate differential versus change in futures prices duri...

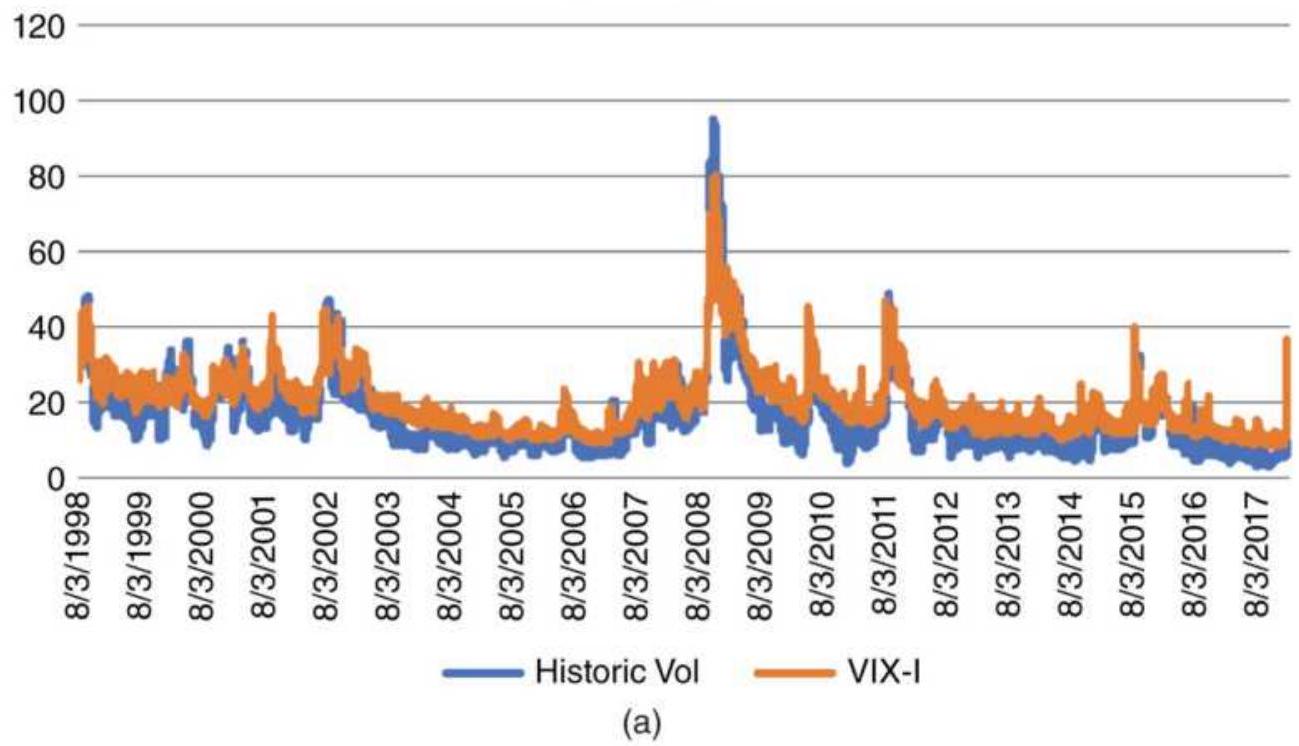

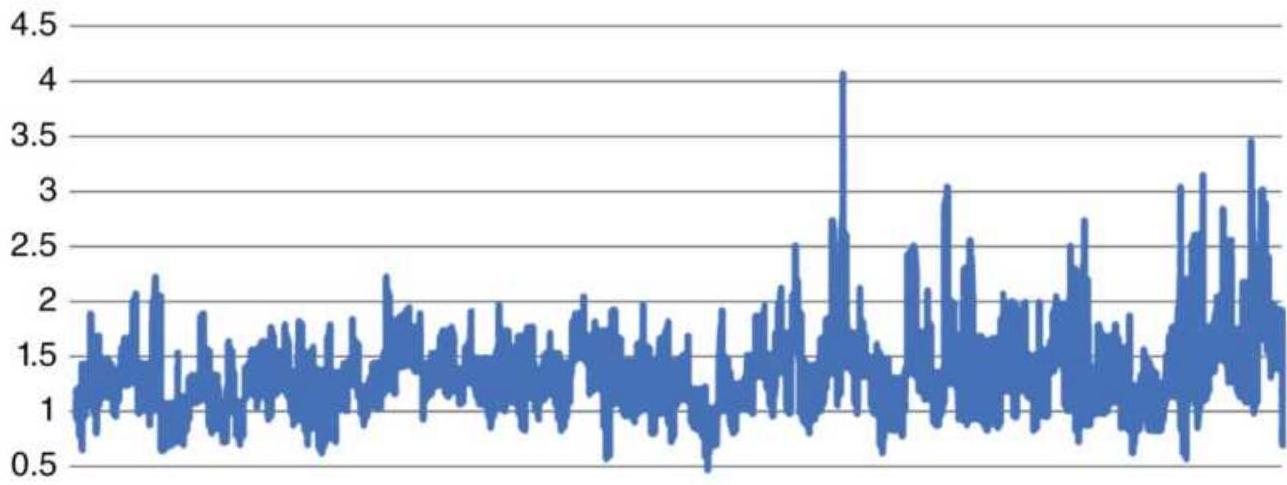

FIGURE 13.20 (a) Historic volatility and implied volatility, 1998-February 2...

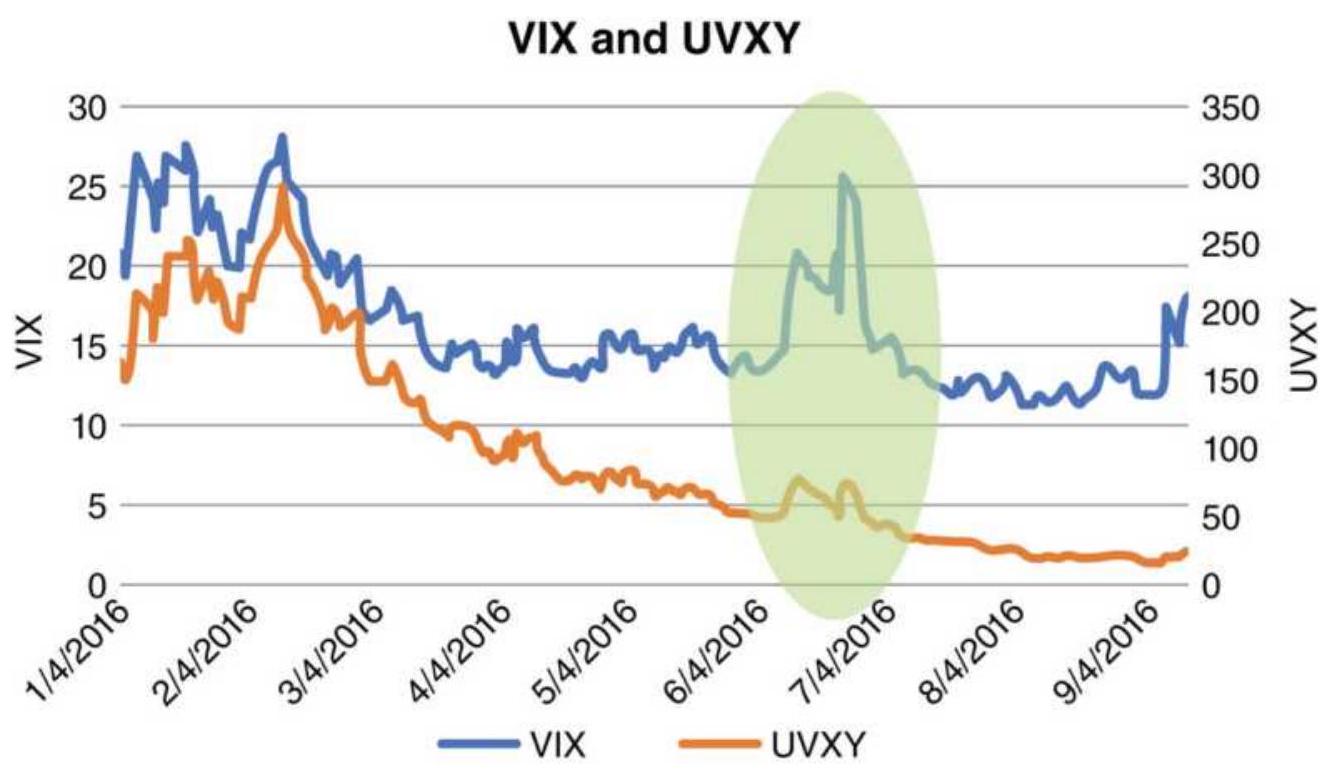

FIGURE 13.21 The VIX index (not tradeable) and UVXY (tradeable, leveraged)....

FIGURE 13.22 Implied vol (VIX index) and historic vol (calculated).

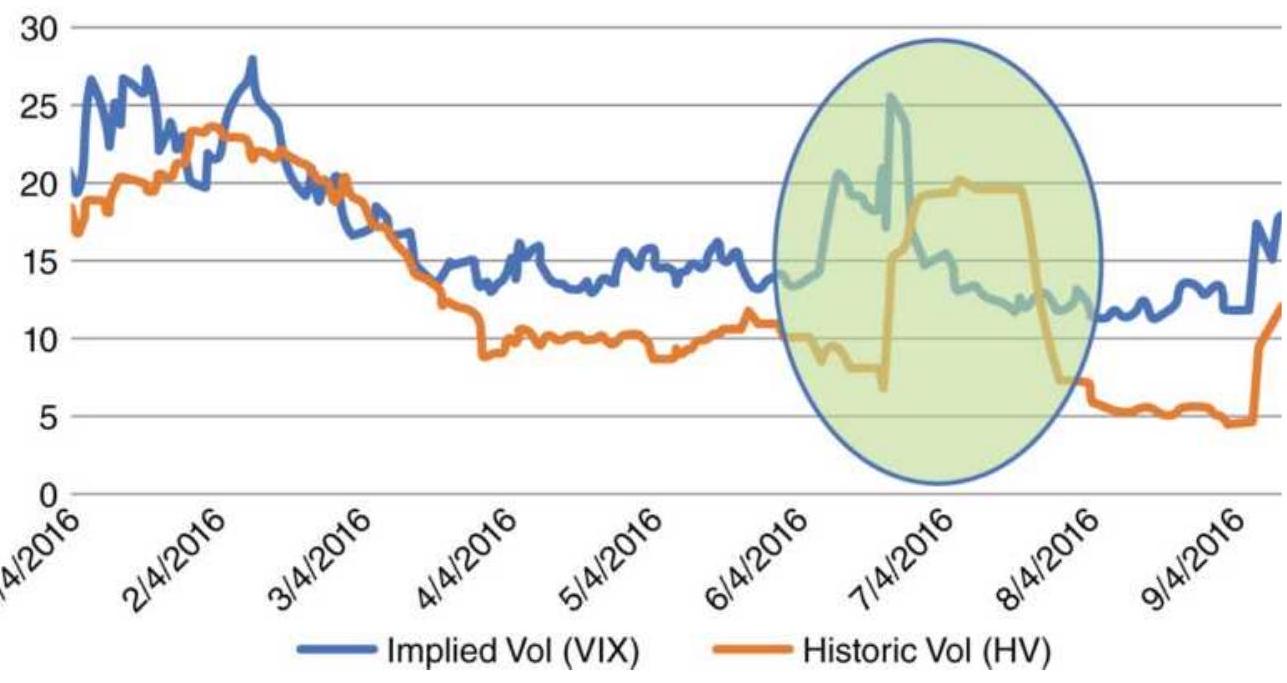

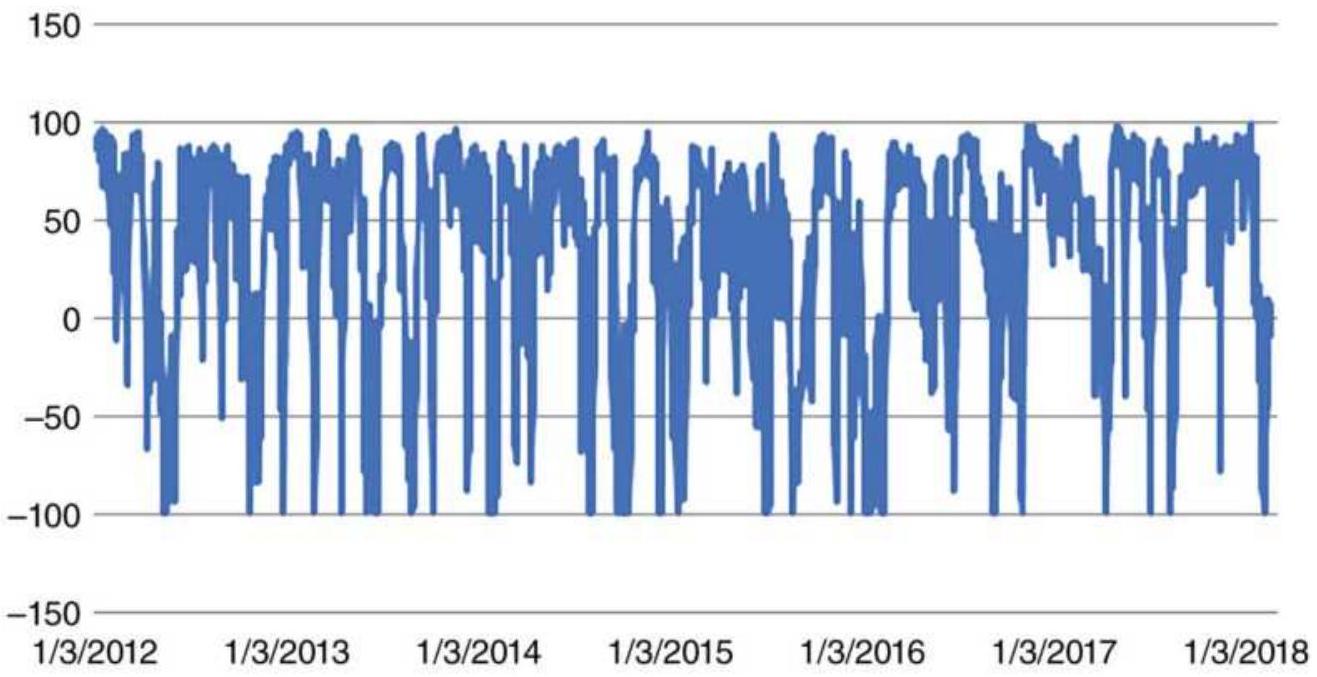

FIGURE 13.23 UVXY stochastic minus the HV stochastic.

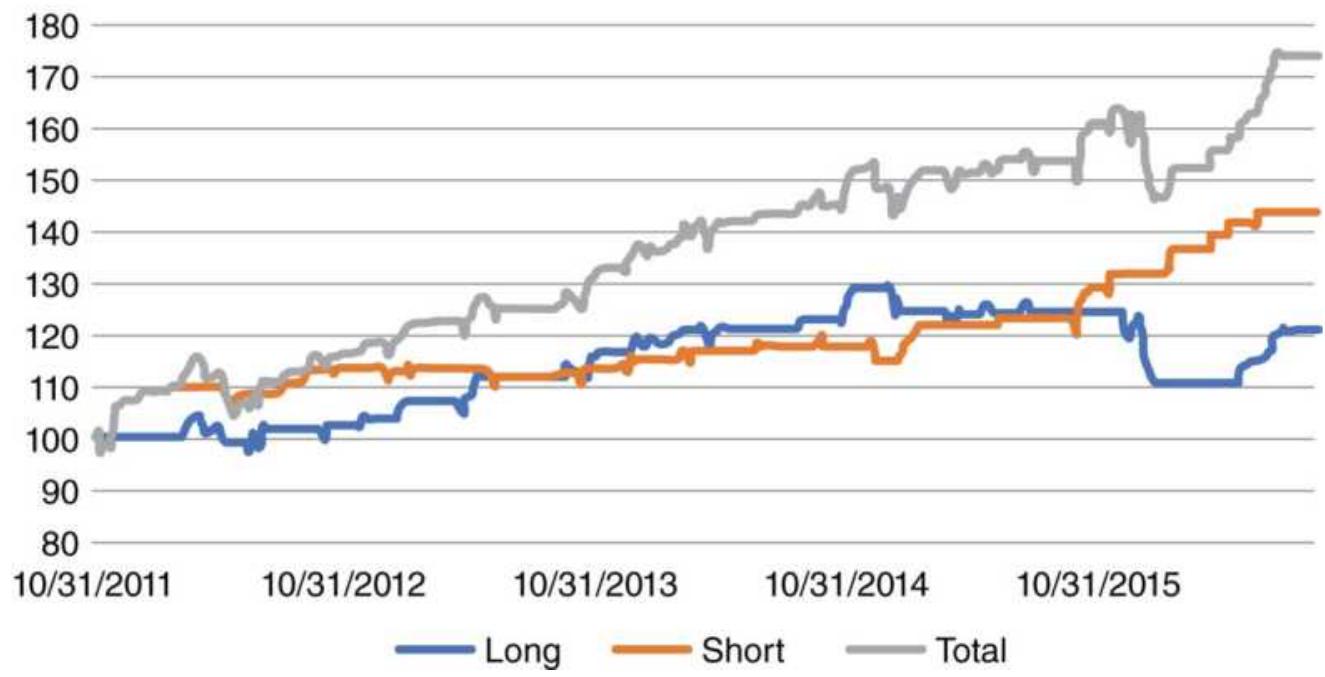

FIGURE 13.24 Net asset values of long trades, short trades, and combined lon...

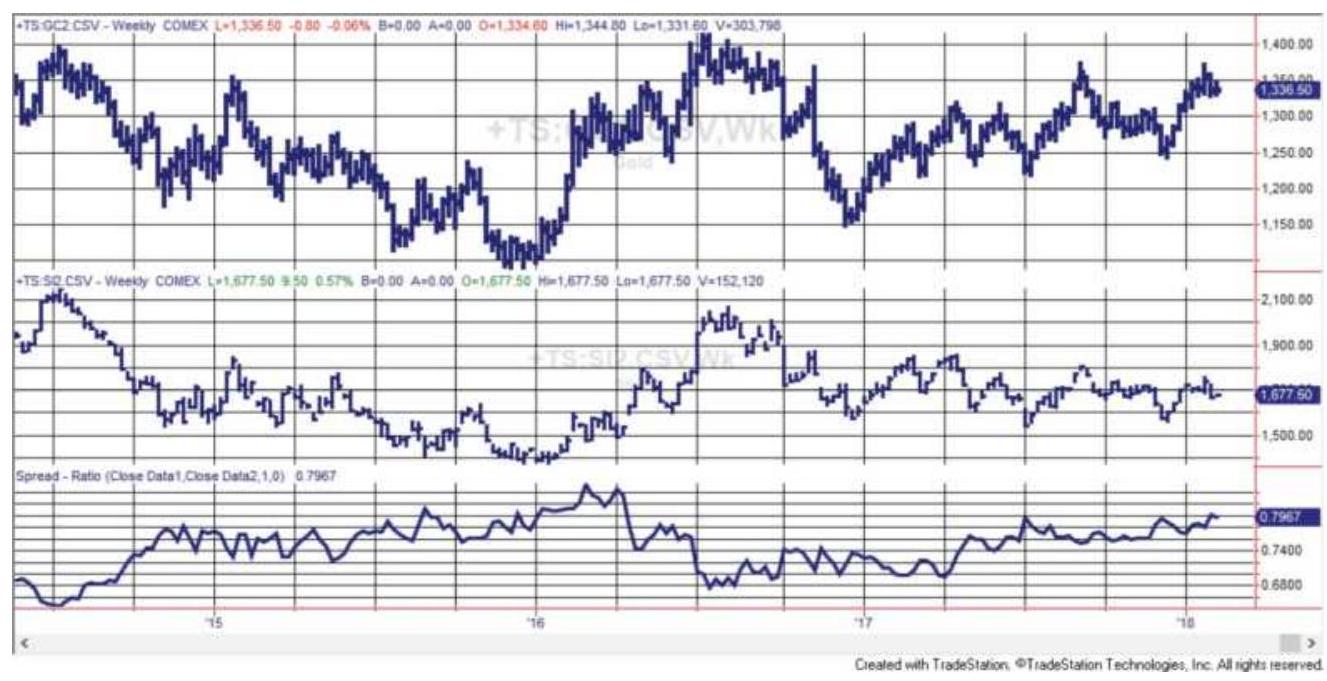

FIGURE 13.25 The weekly gold/silver ratio, from 2014 through 2017 based on n...

FIGURE 13.26 The platinum-gold ratio, shown here from 2014 through mid-2018 ...

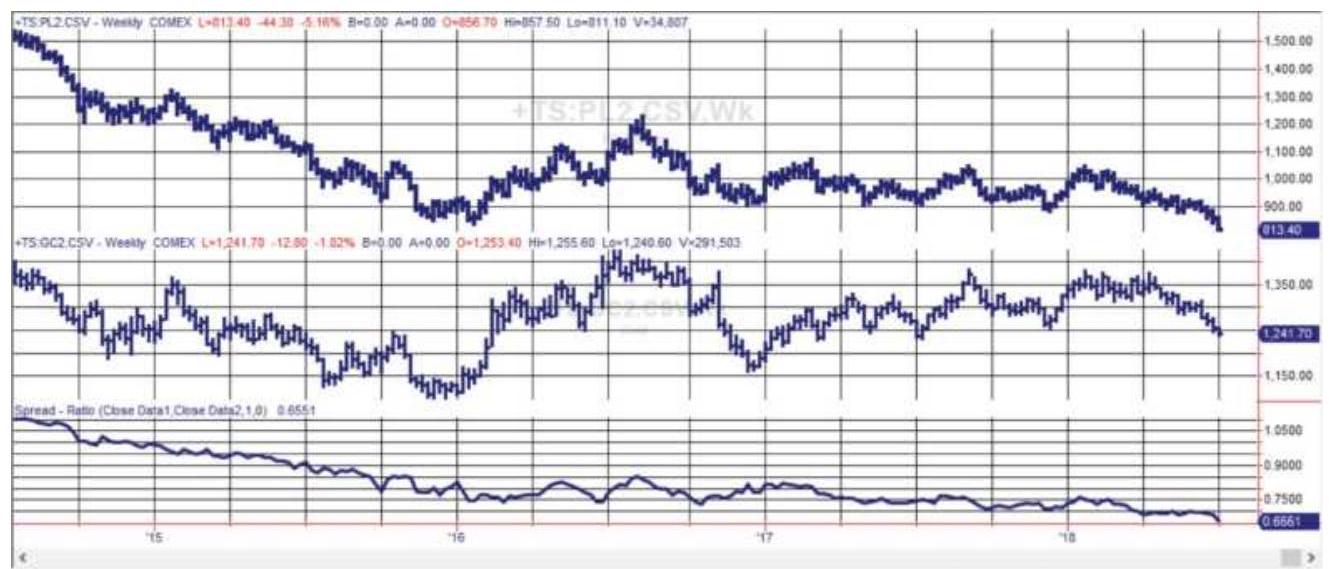

FIGURE 13.27 The decline in S\&P futures (top panel) is met by higher 10-year...

FIGURE 13.28 Rolling 20-day correlations. FIGURE 13.29 Returns based on timing SPY with HYG and JNK.

FIGURE 13.30 Crude oil (top) denominated in gold (center, daily futures) giv...

FIGURE 13.31 Total profits using a 20-day trend of the crude/gold ratio.

FIGURE 13.32 Interdelivery spread volatility. (a) Actual prices. (b) Relatio...

FIGURE 13.33 Annualized volatility of

\section*{SP/NASDAQ price ratio, using futures, ...}

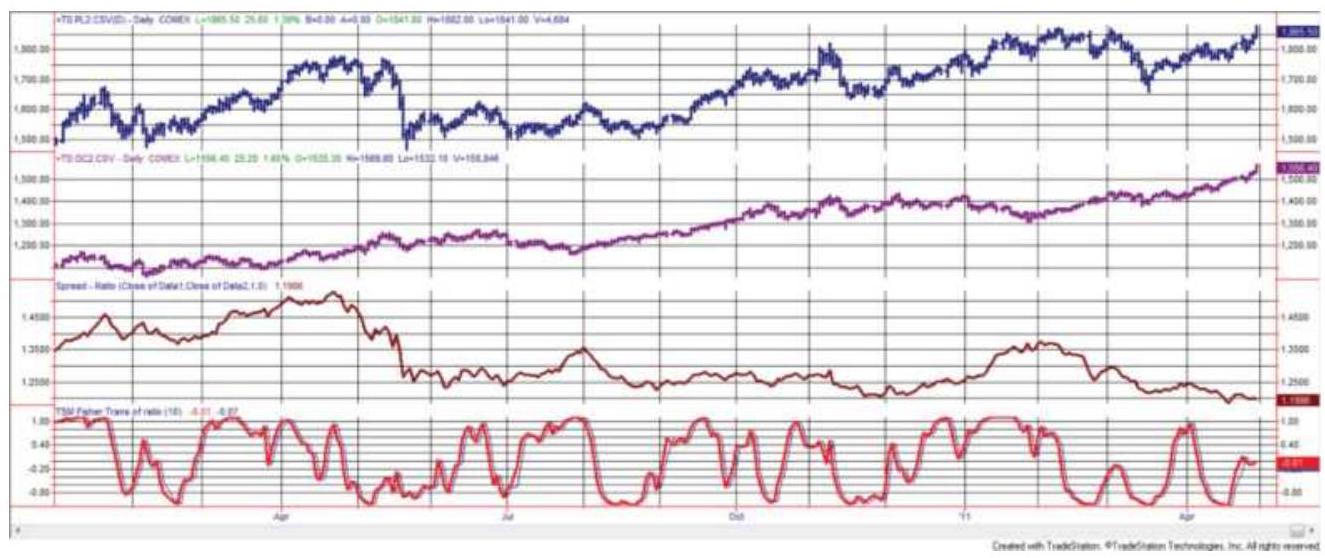

FIGURE 13.34 The platinum-gold ratio for 1 year beginning March 2010, using ...

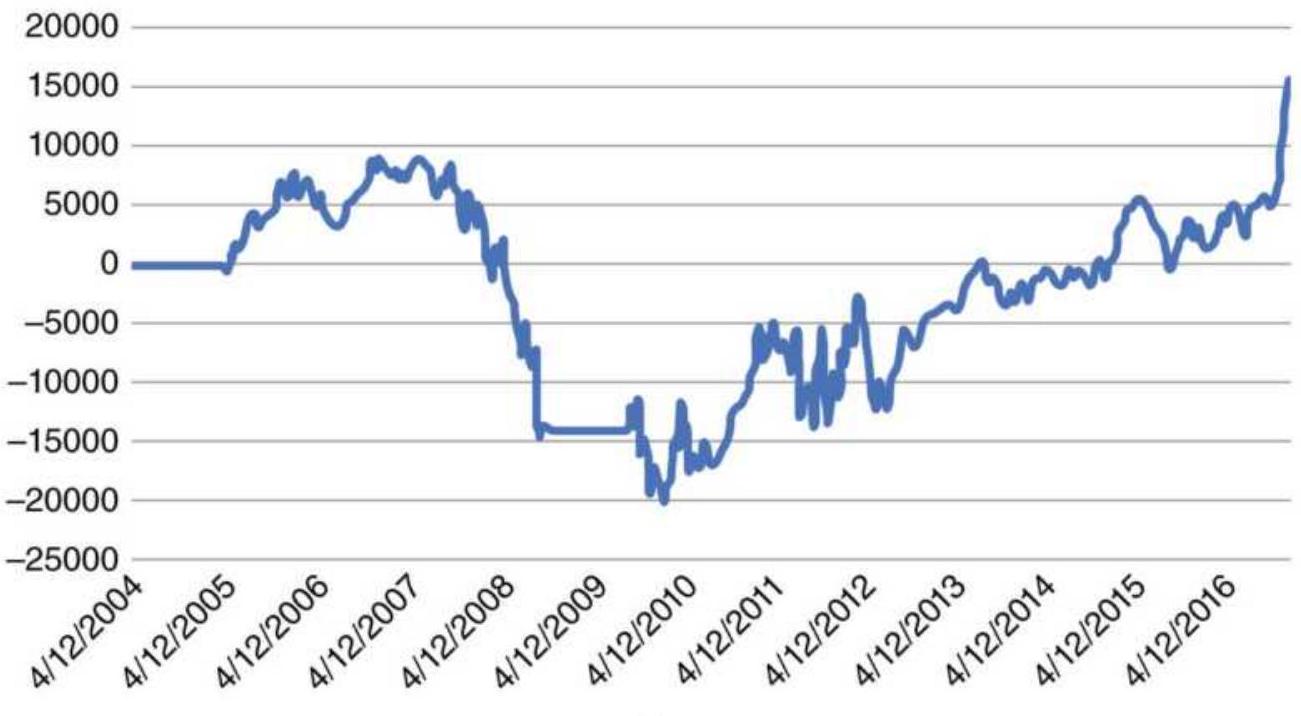

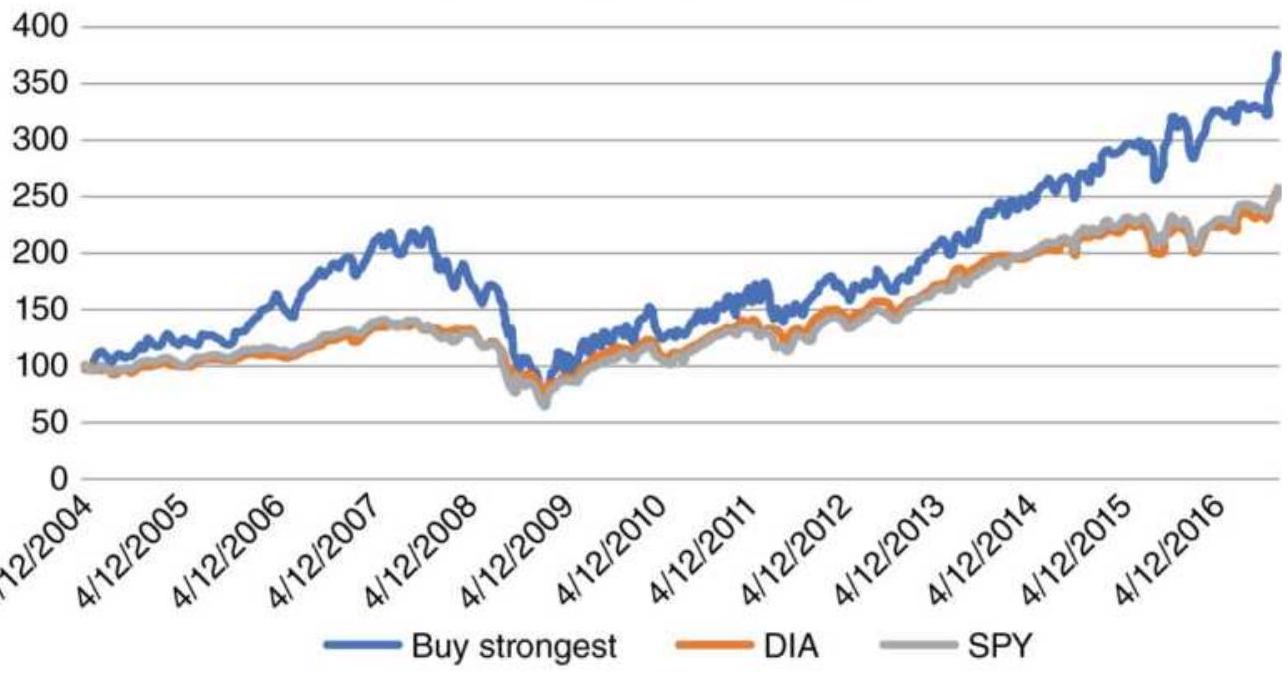

FIGURE 13.35 (a) Results of buying the 10 Dow stocks with the worst returns, ...

FIGURE 13.36 Results of Dow hedge strategy.

FIGURE 13.37 Poor spread selection. (a) Spread price. (b) Spread components....

\section*{Chapter 14}

FIGURE 14.1 Price reaction to unexpected news, including delayed response. T...

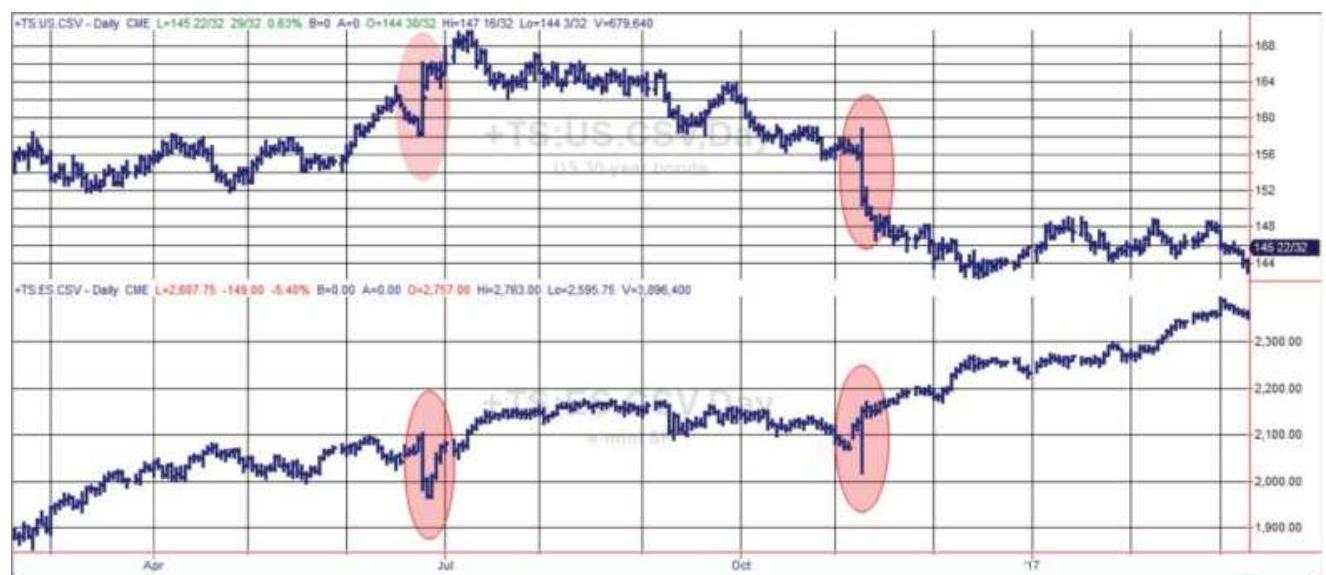

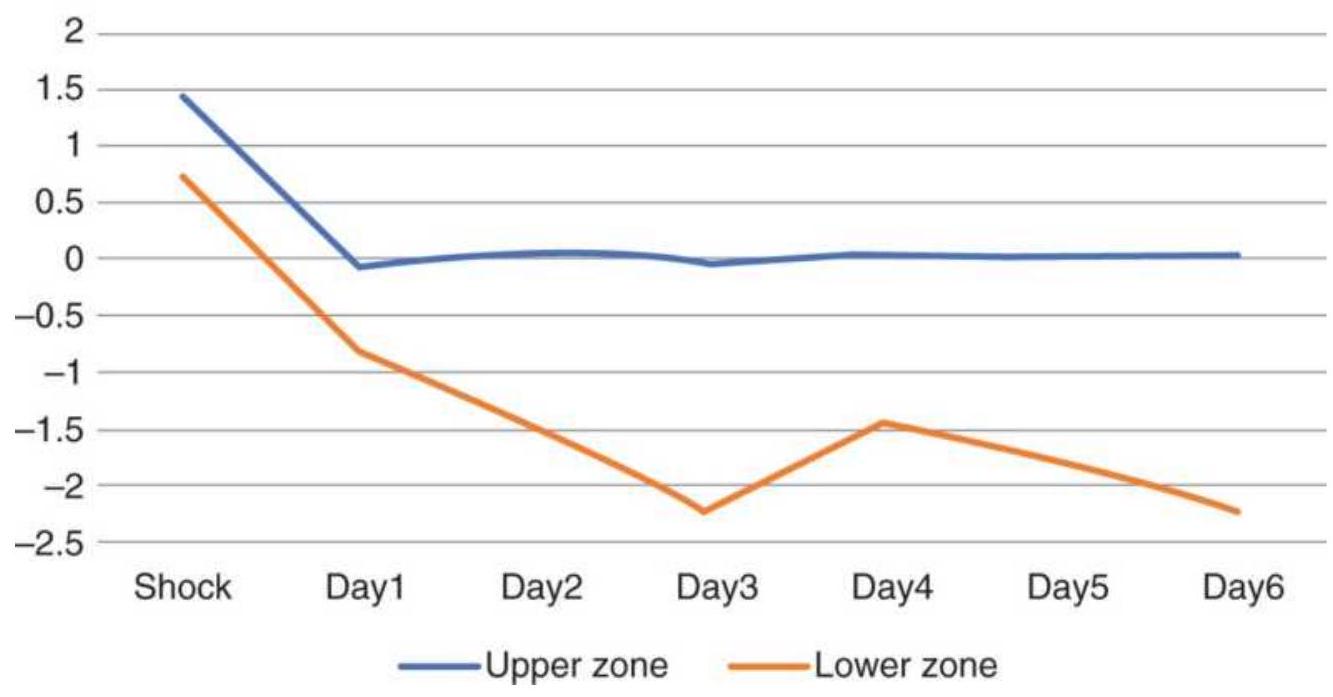

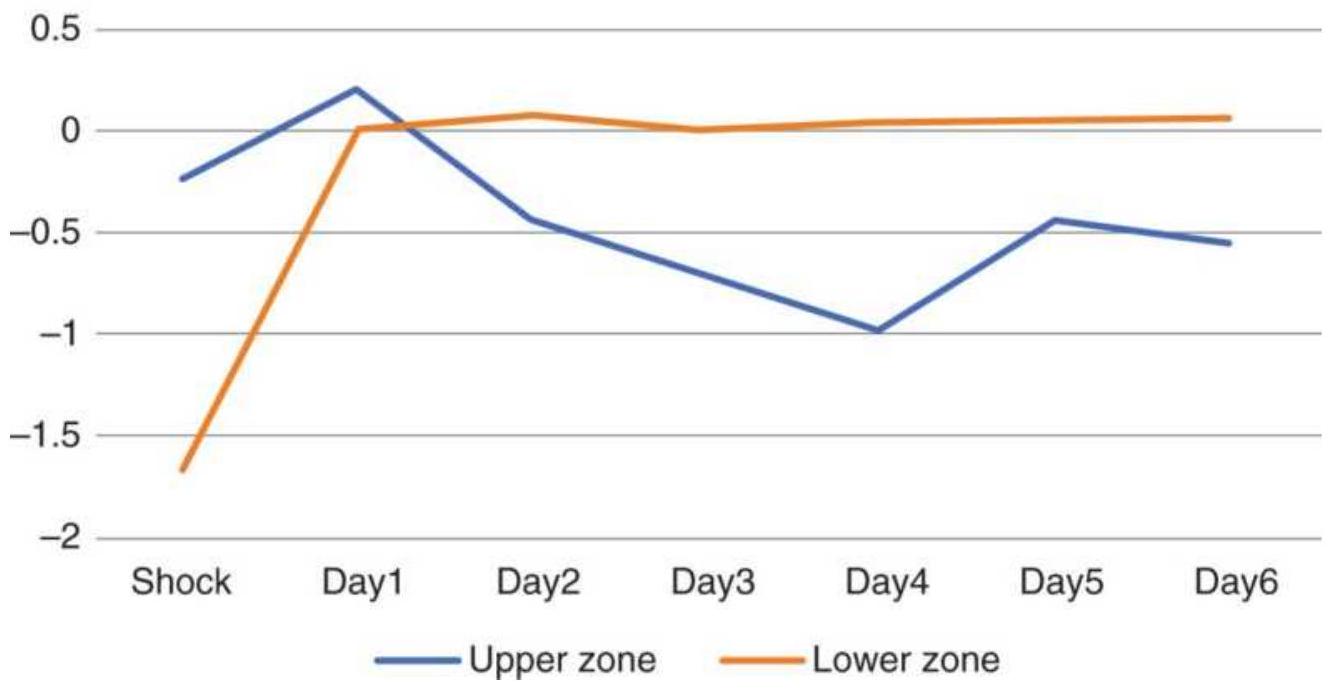

FIGURE 14.2 Price shocks in U.S. bond futures (top) and the corresponding mo...

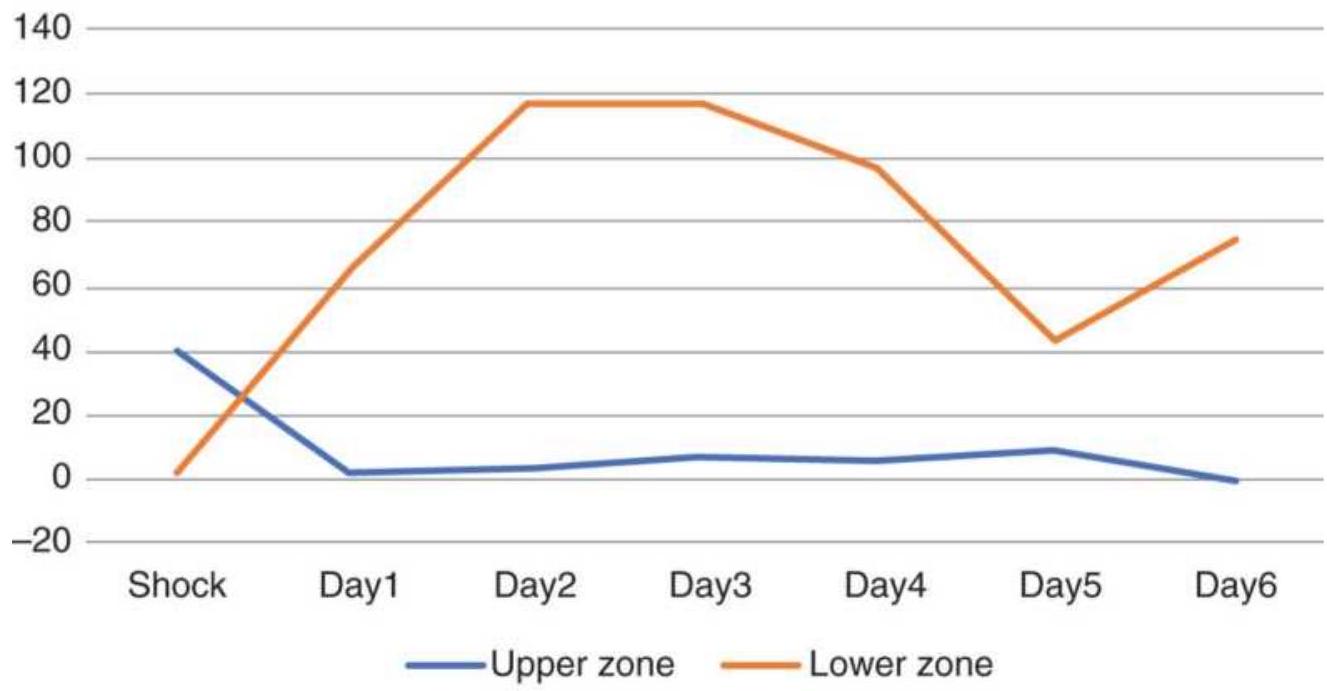

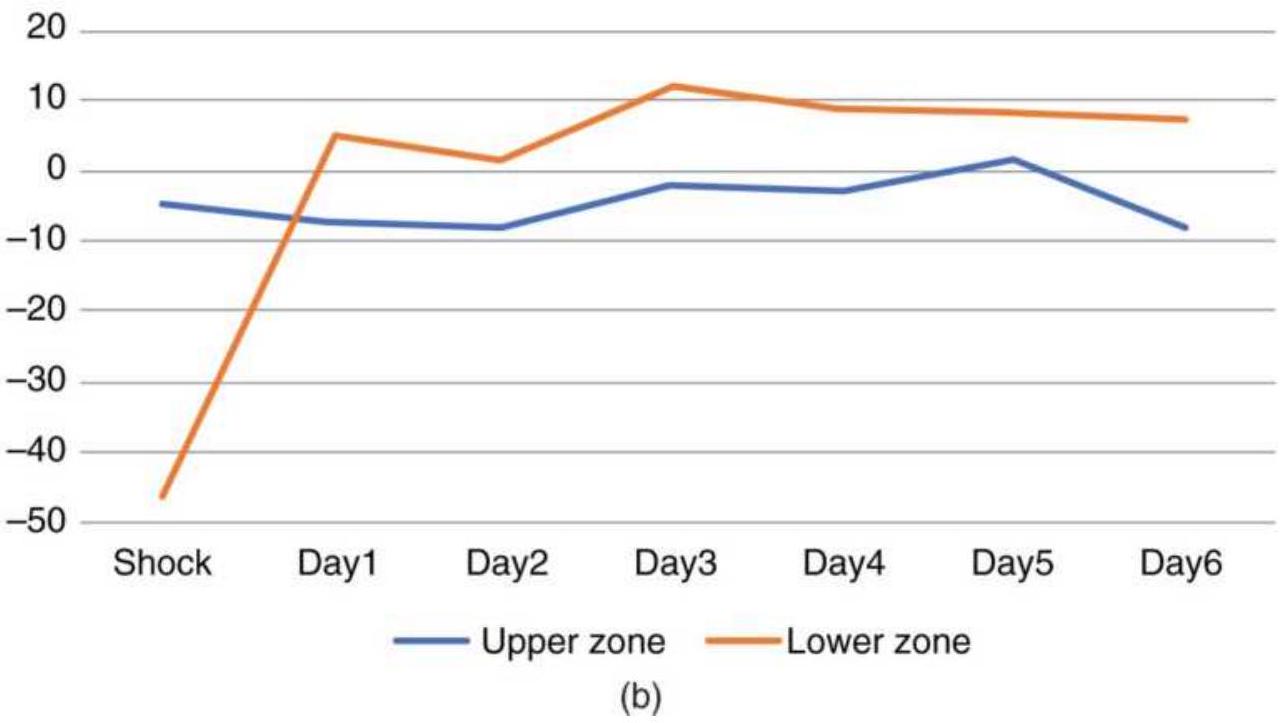

FIGURE 14.3(a) Bond futures returns from upward price shock using 2...

FIGURE 14.3(b) Figure Bond returns using a \(3 \times\) ATR threshold.

FIGURE 14.4 U.S. bond futures, returns from long positions following upward ...

FIGURE 14.5 Returns from crude oil price shocks using the threshold 2...

FIGURE 14.6 Returns from crude oil price shocks using the larger 3...

FIGURE 14.7 S\&P futures price shocks \(>2\) ATRs. (a) Upside shocks. (a) Downsi...

FIGURE 14.8 S\&P futures price shocks using 3

ATRs, 1991-2017. (a) Upward sho...

FIGURE 14.9 Commitment of Traders Report (short form) from the CFTC.gov webs...

FIGURE 14.10 Commitment of Traders Report (long form), May 10, 2011.

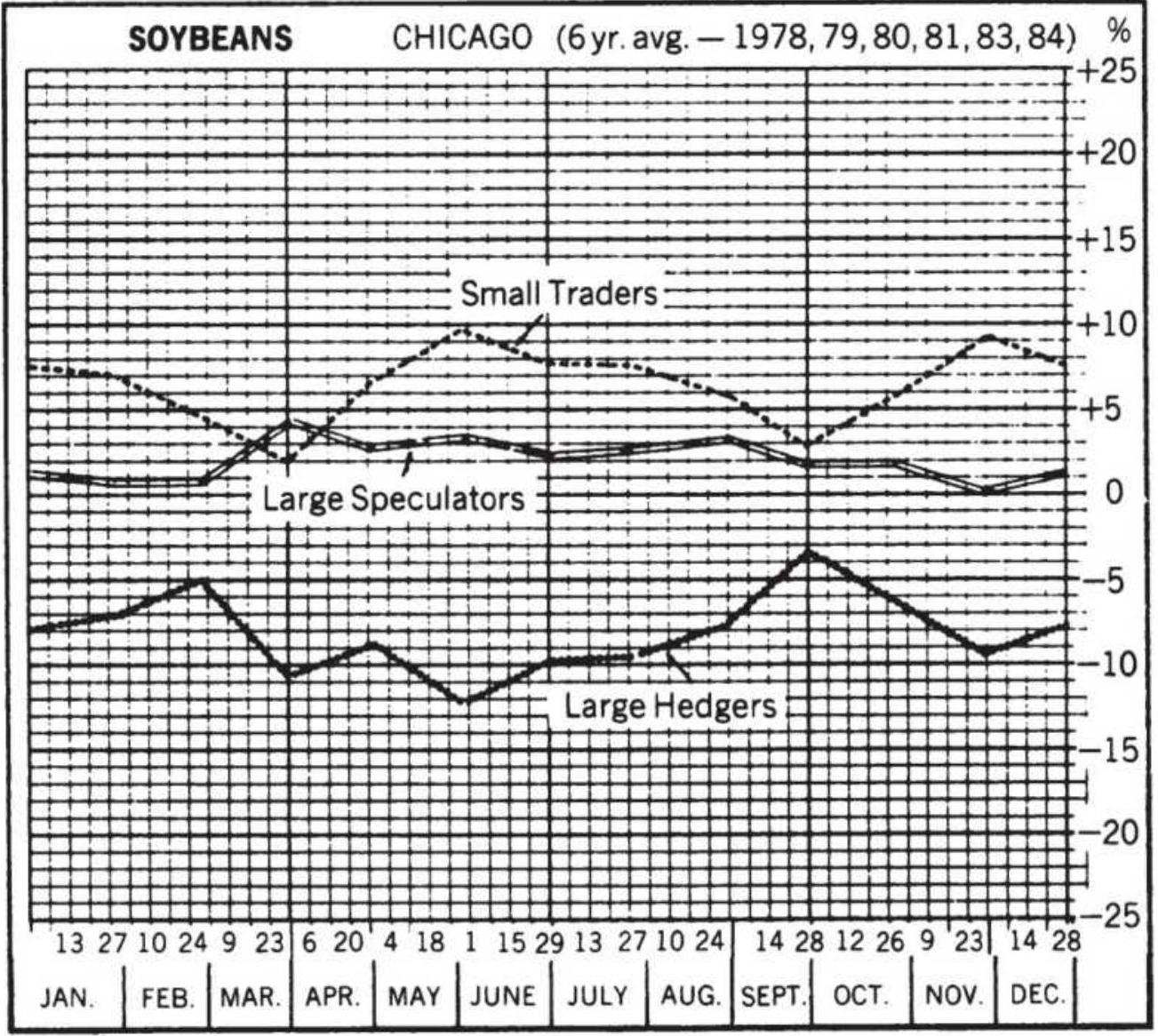

FIGURE 14.11 Jiler's normal trader positions.

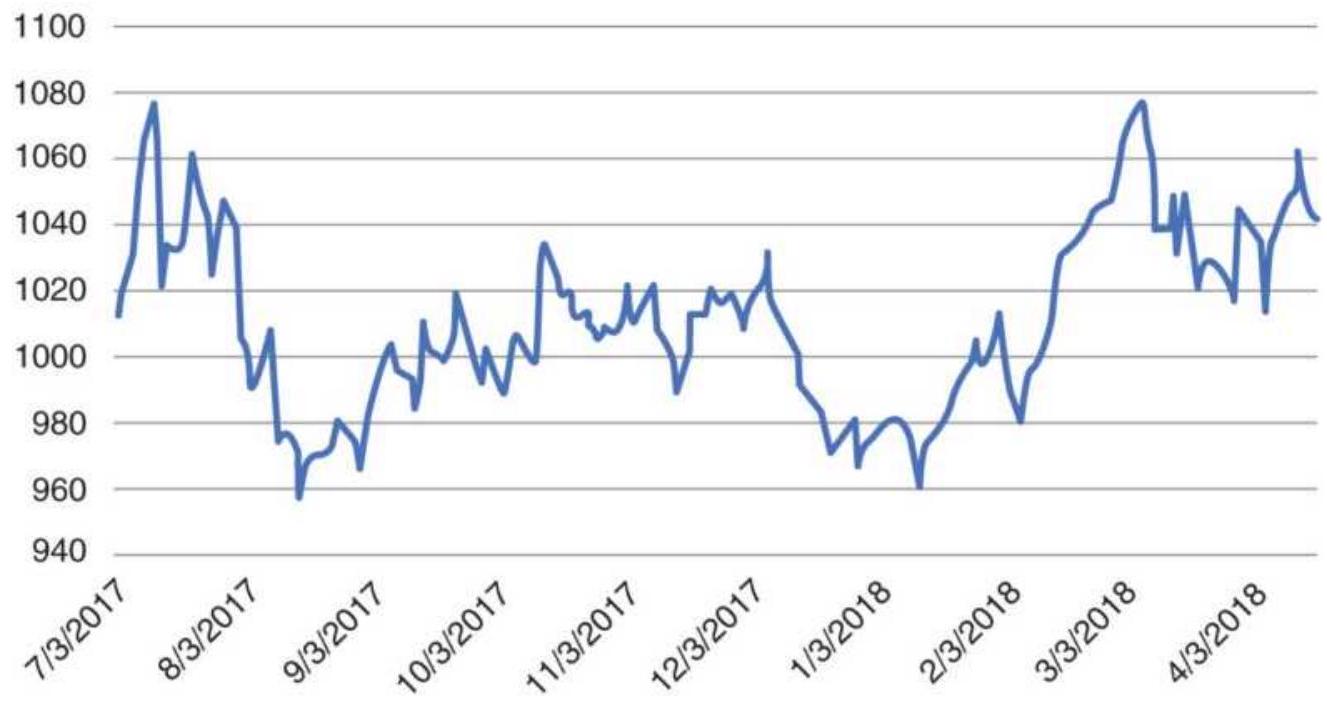

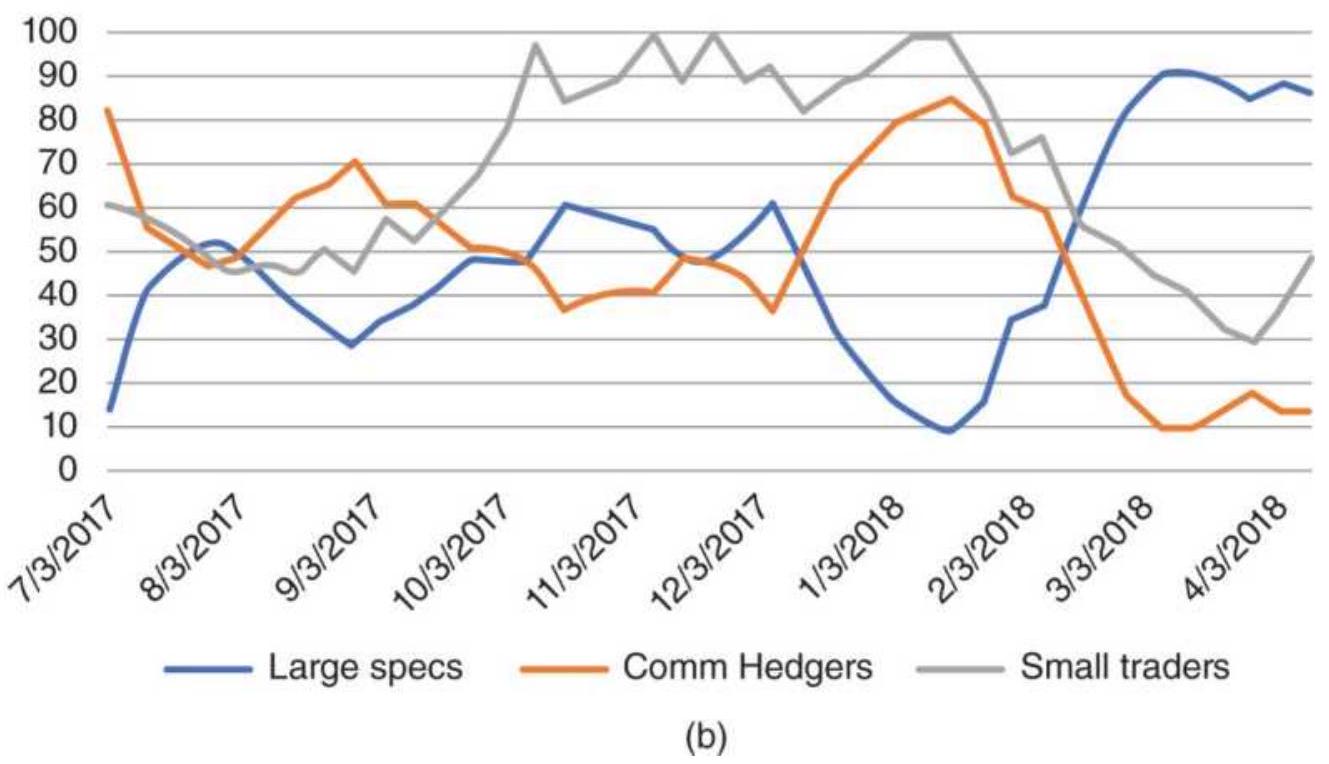

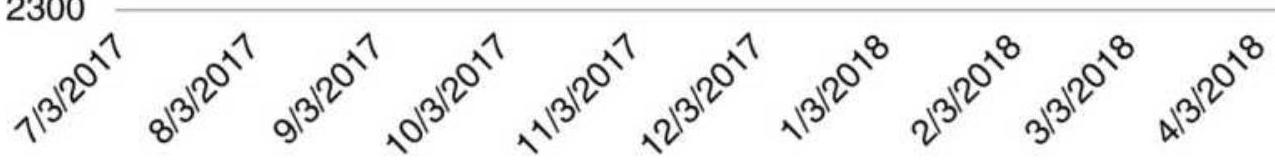

FIGURE 14.12 (a) Soybeans nearest futures, July 2017-April 2018. (b) The Bri...

\section*{FIGURE 14.13 (a) S\&P continuous futures and}

(b) the Briese COT Index for eac...

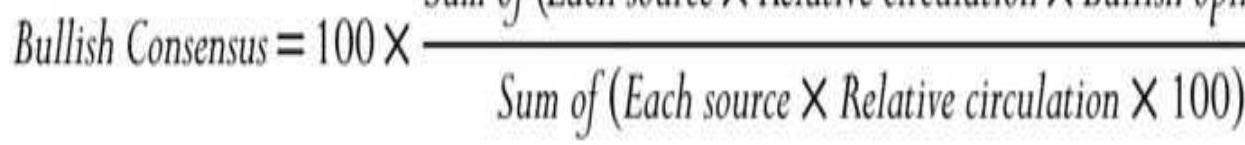

FIGURE 14.14 Interpretation of the Bullish Consensus.

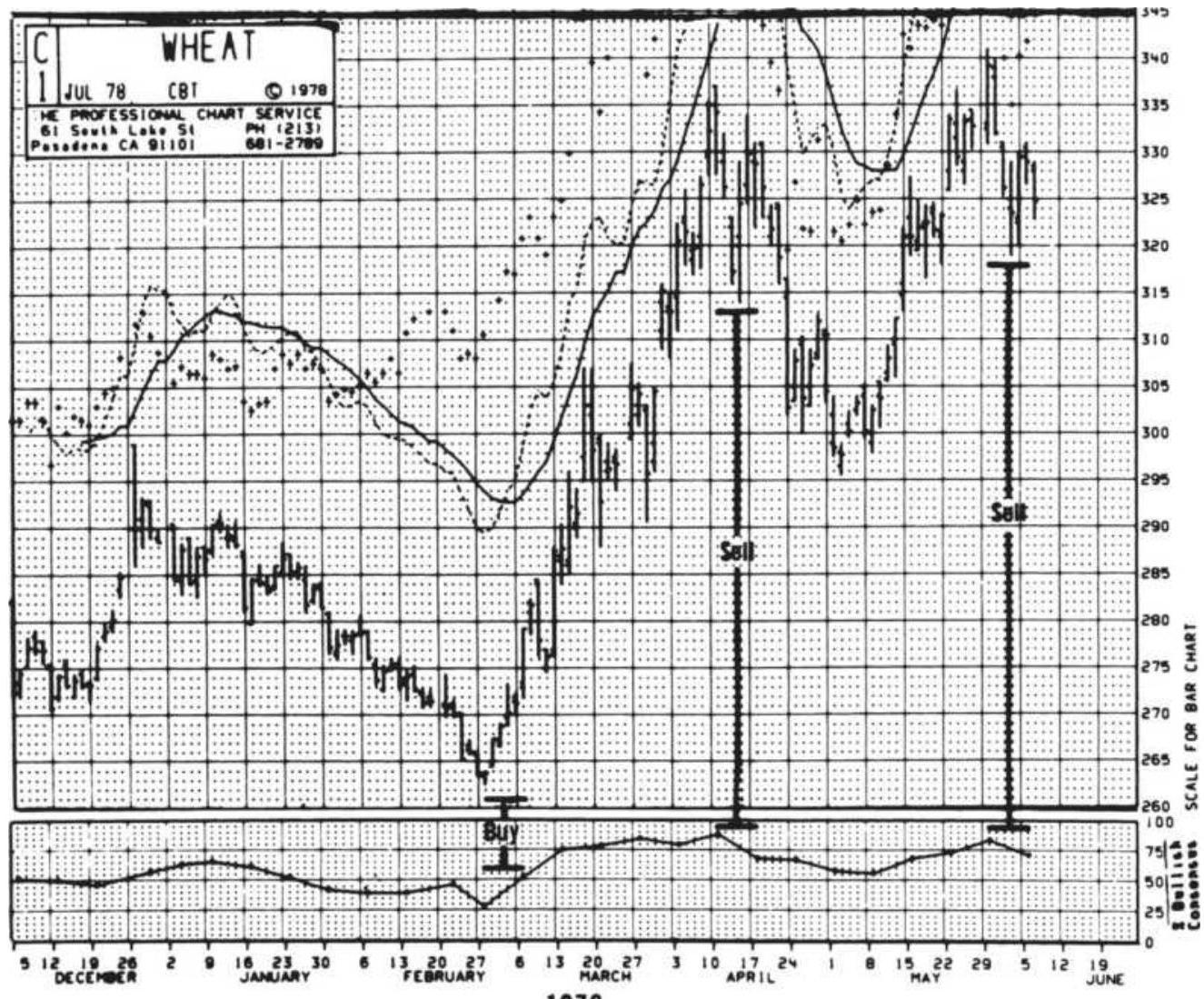

FIGURE 14.15 Typical contrarian situation: wheat, 1978.

FIGURE 14.16 The put/call ratio (\$CPCE),

October 2017-mid-April 2018.

FIGURE 14.17 The golden spiral, also the logarithmic spiral, is a perfect re...

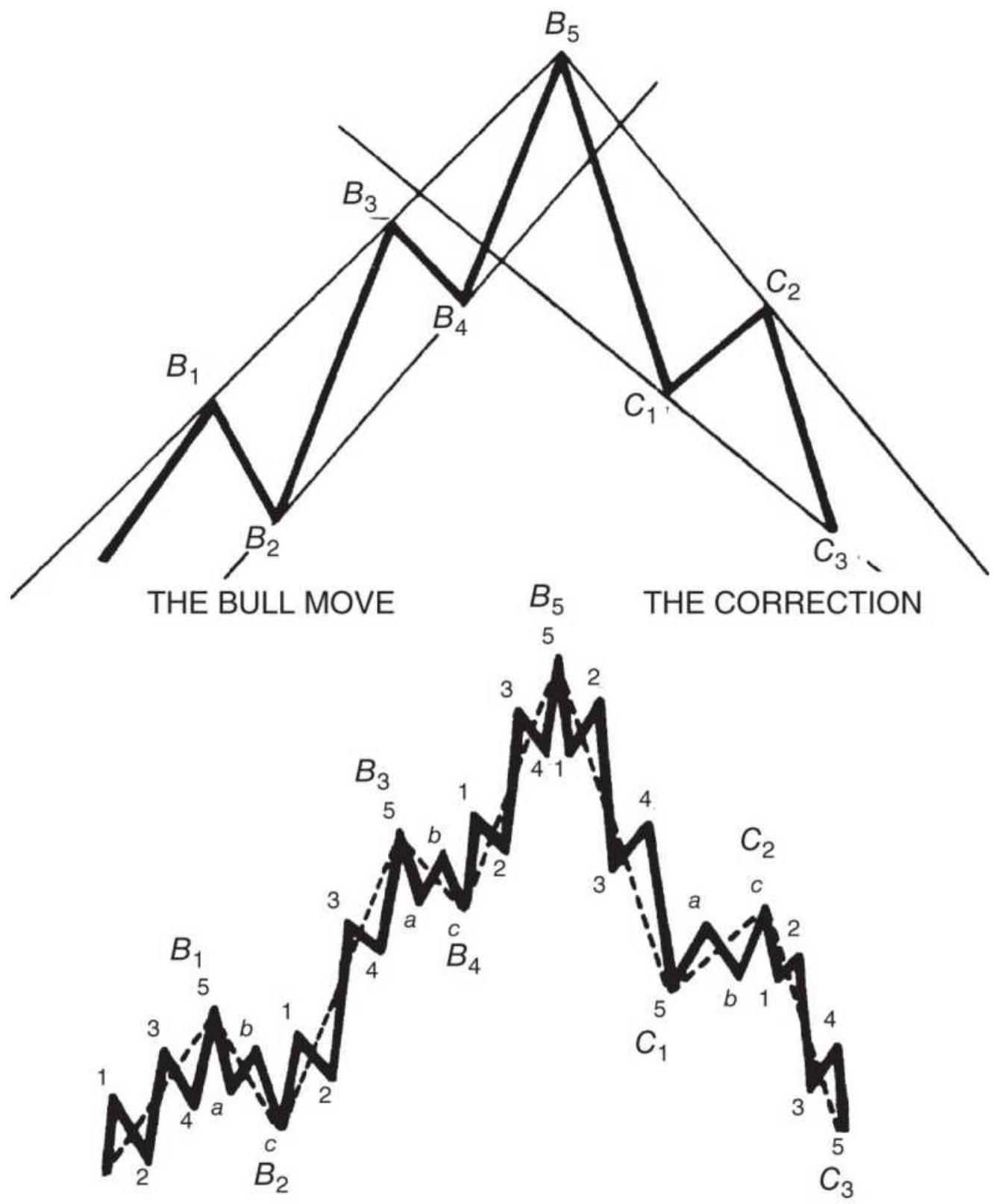

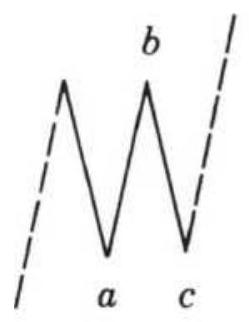

FIGURE 14.18 Basic Elliott wave.

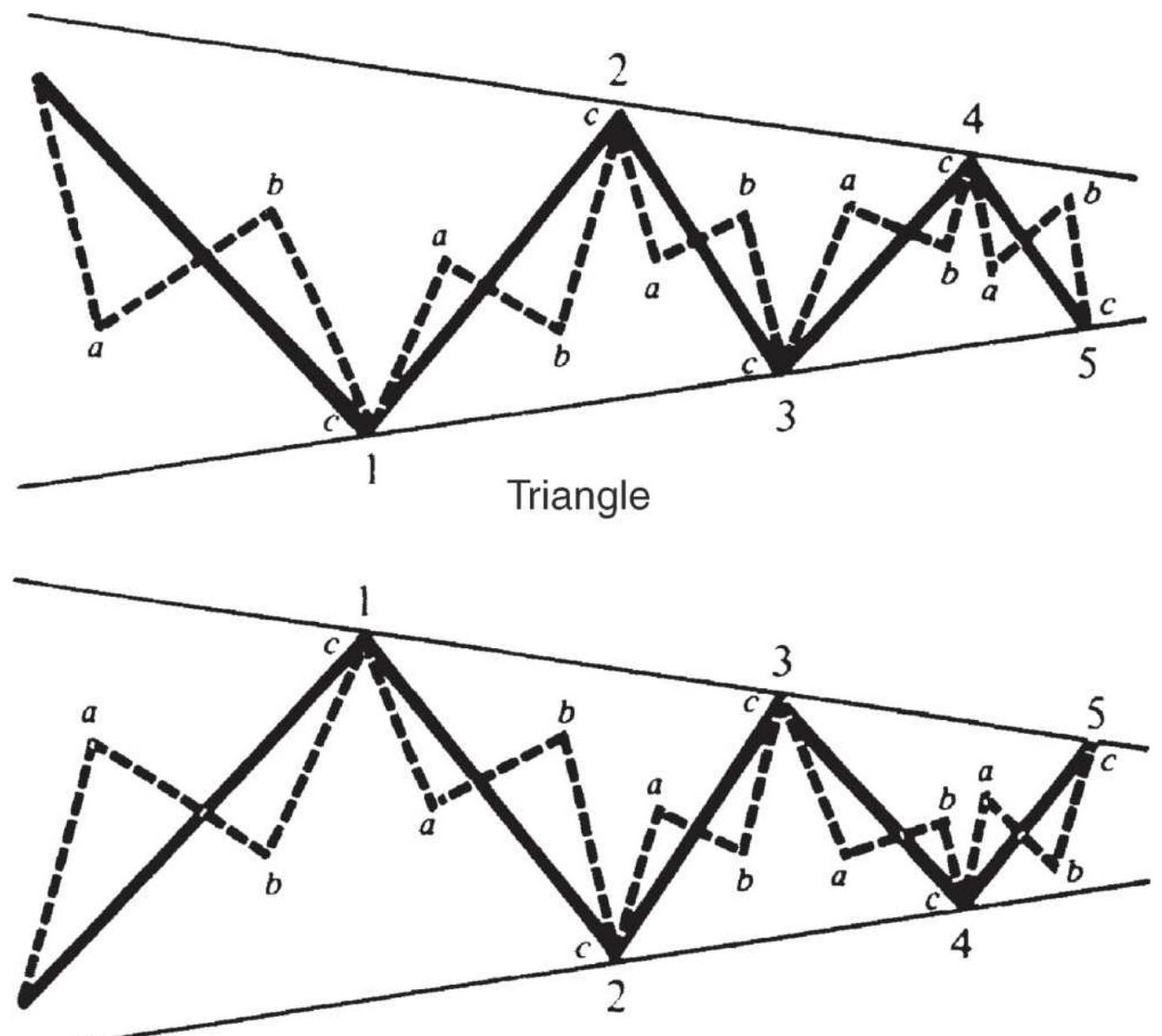

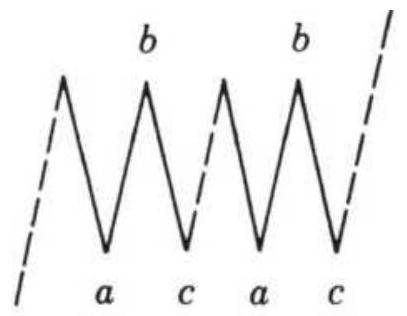

FIGURE 14.19 Triangles and ABCs.

FIGURE 14.20 Compound correction waves.

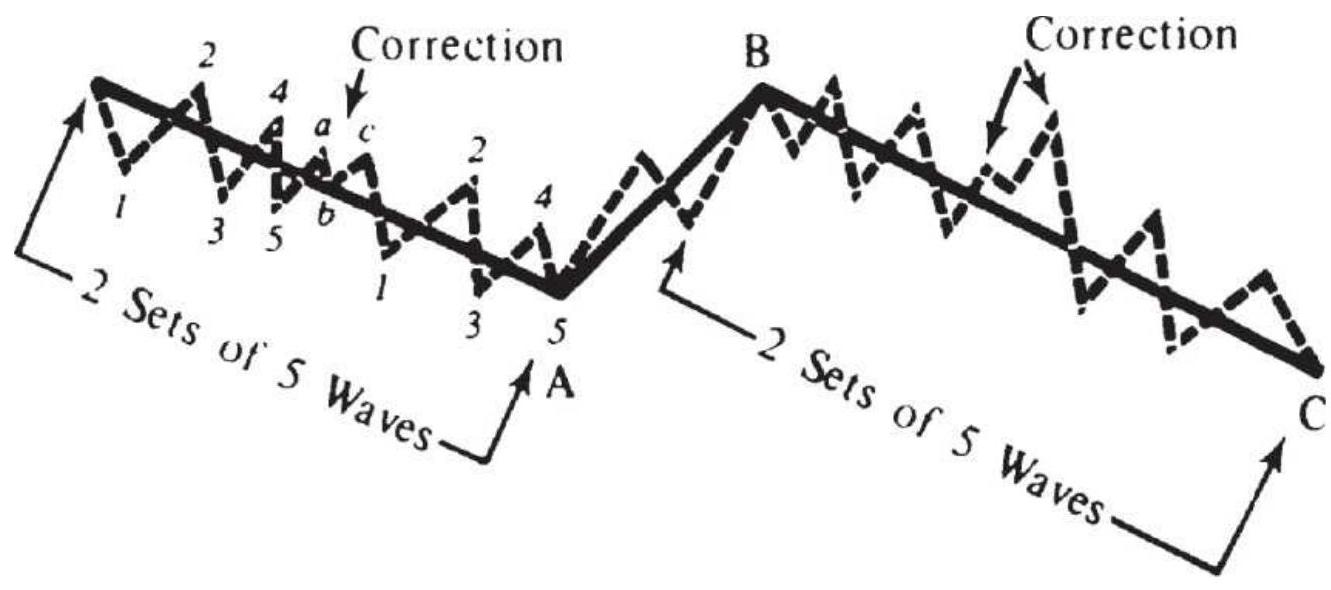

FIGURE 14.21 Elliott's threes.

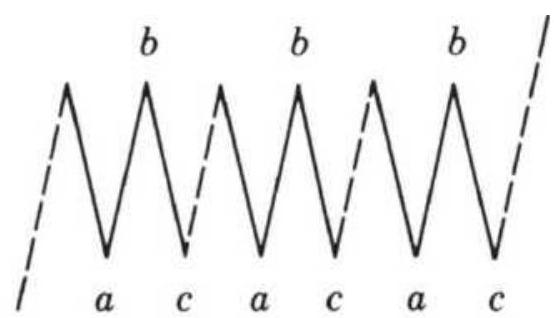

FIGURE 14.22 Price relationships in Supercycle (V).

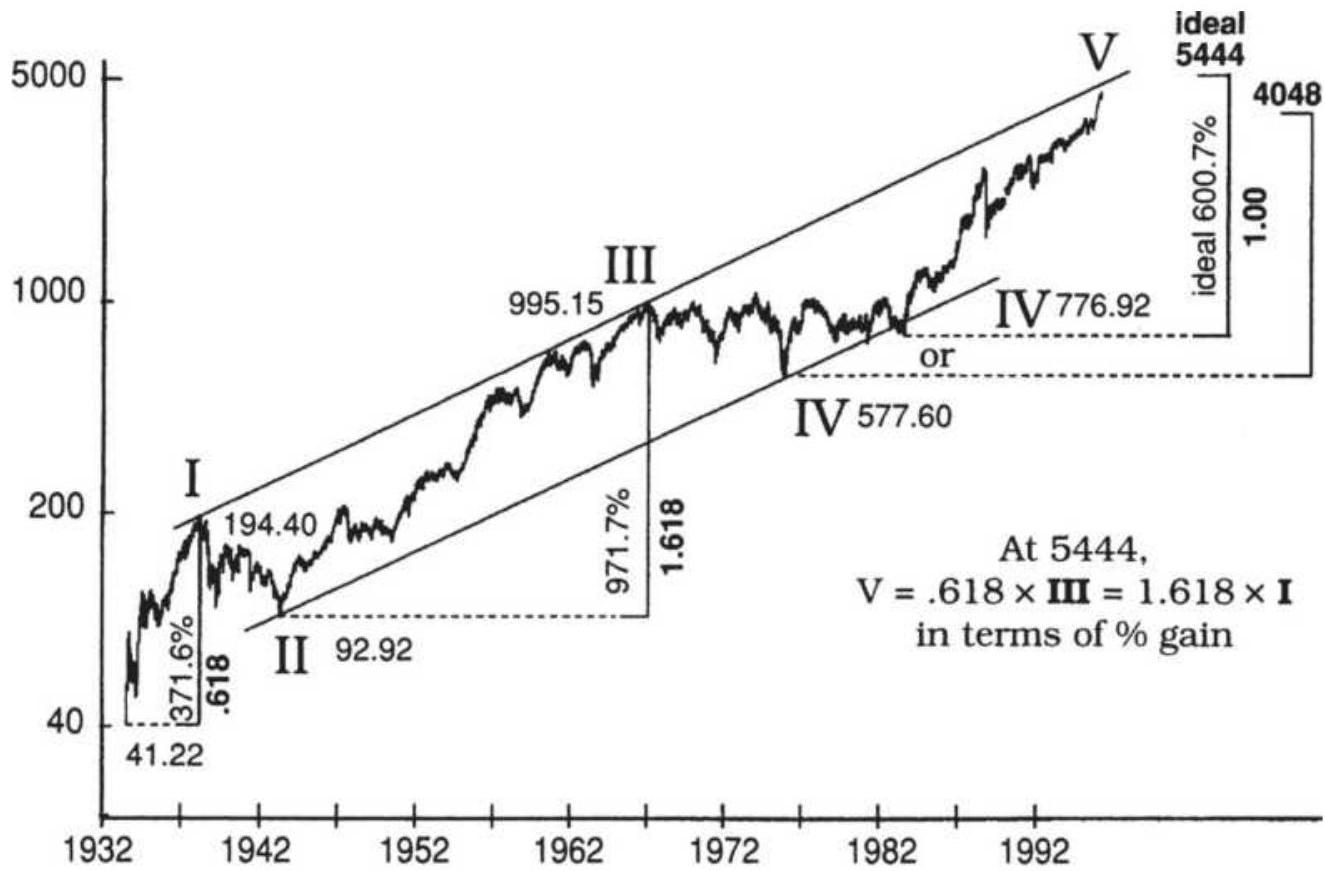

FIGURE 14.23 The Supercycle as of 2019.

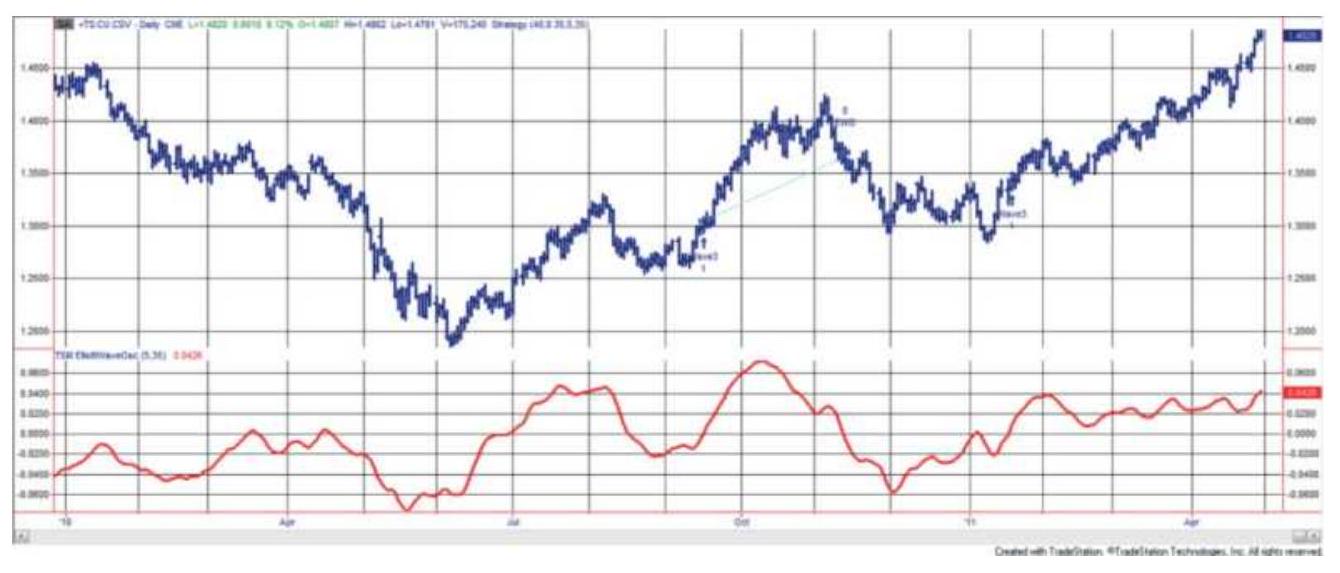

FIGURE 14.24 MTpredictor of Elliott Wave applied to yen futures.

FIGURE 14.25 Trading signals for the Elliott Wave strategy, and the Elliott ...

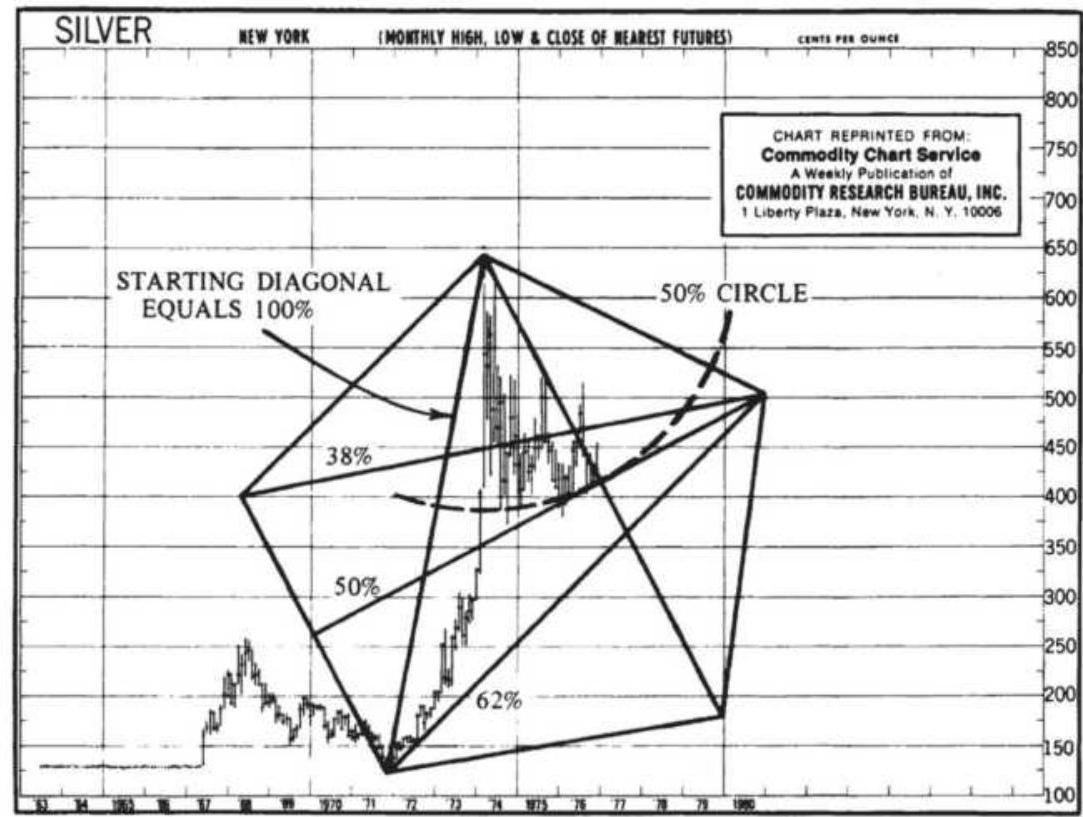

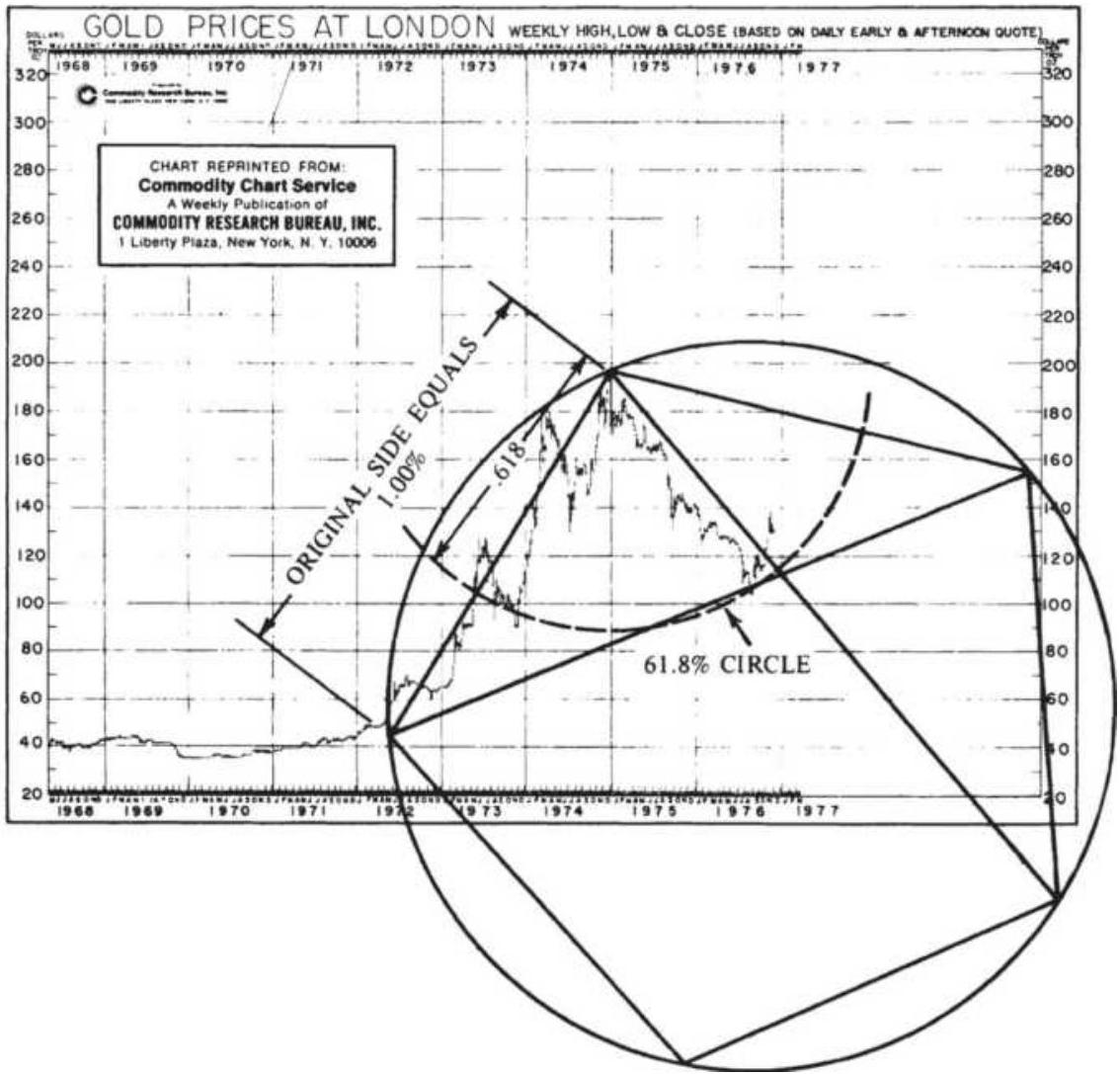

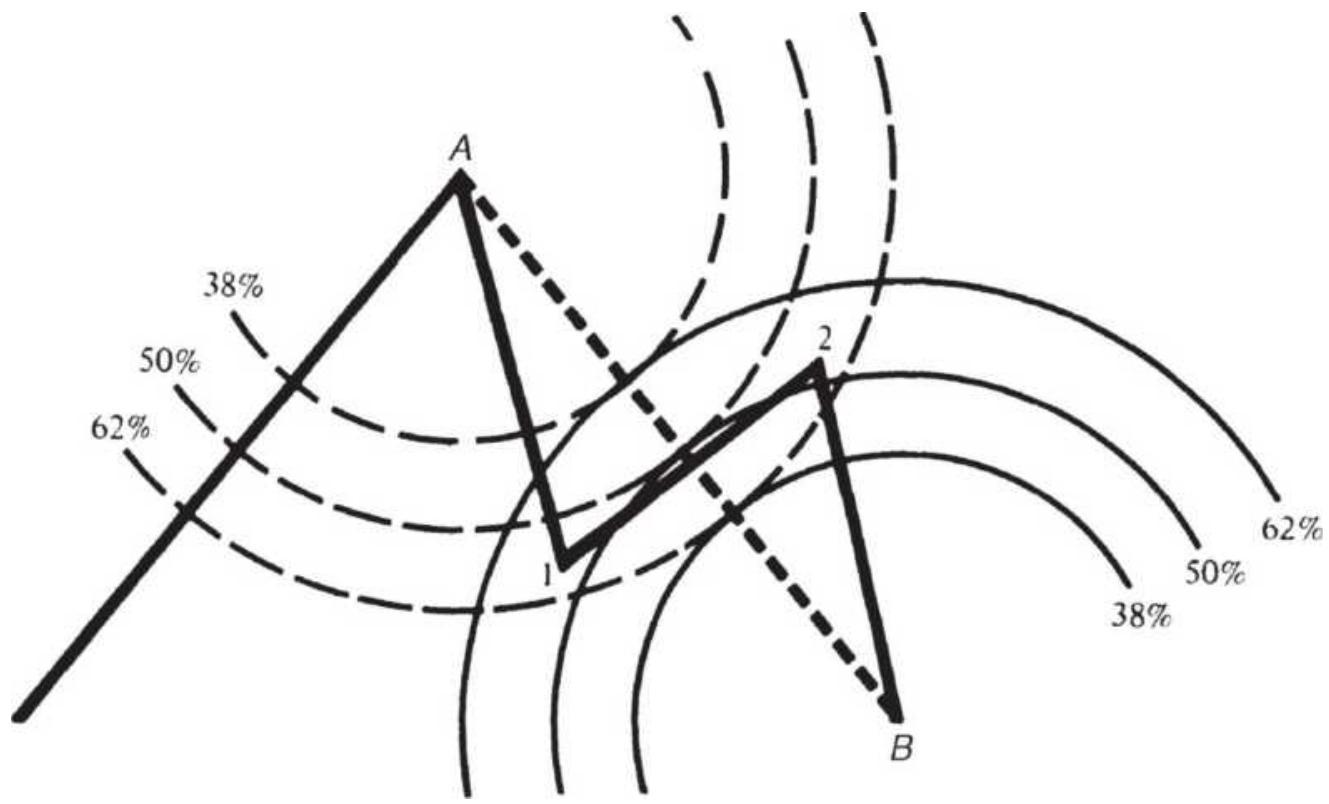

FIGURE 14.26 (a) Pentagon constructed from one diagonal. (b) Pentagon constr...

FIGURE 14.27 Using circles to find support and resistance.

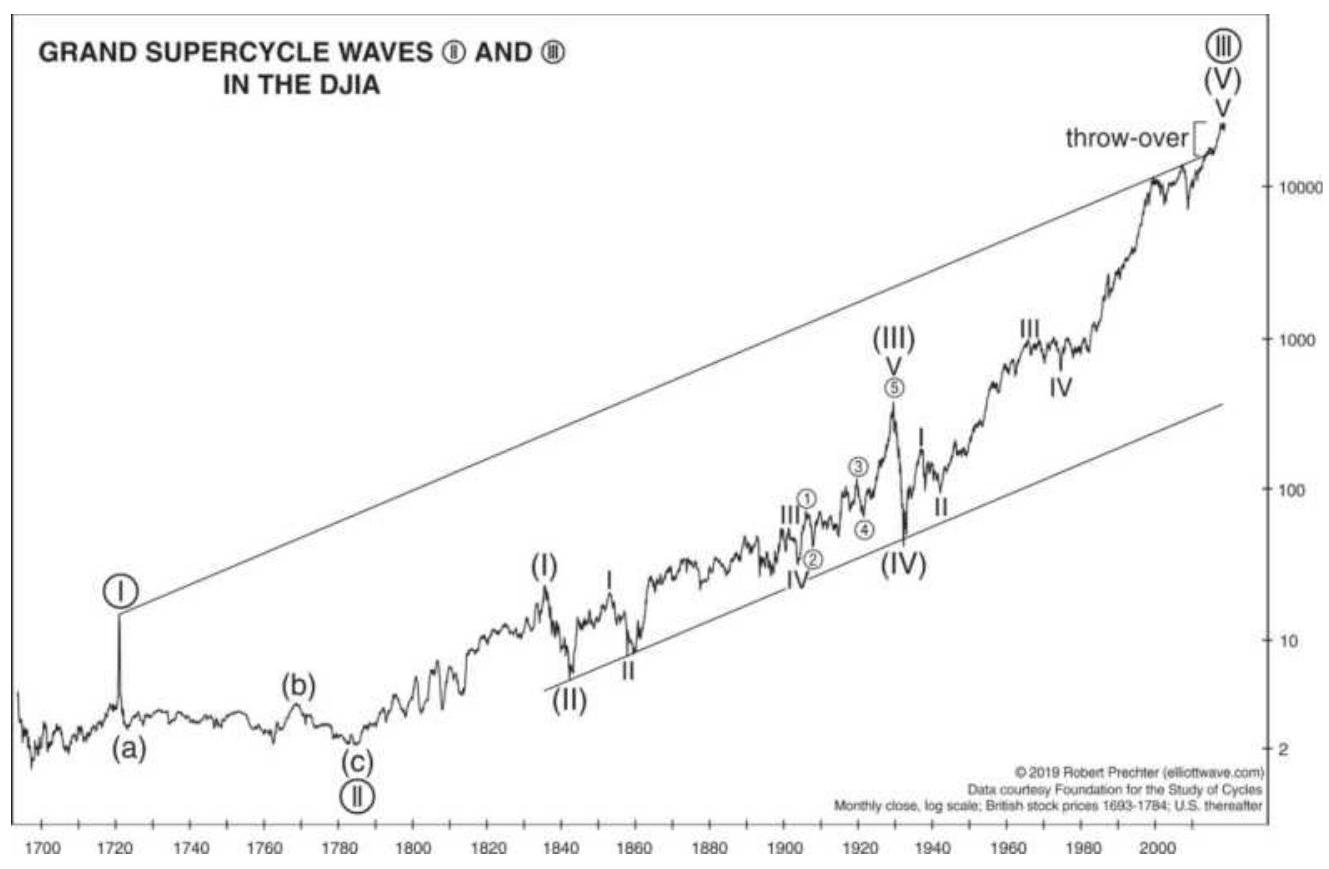

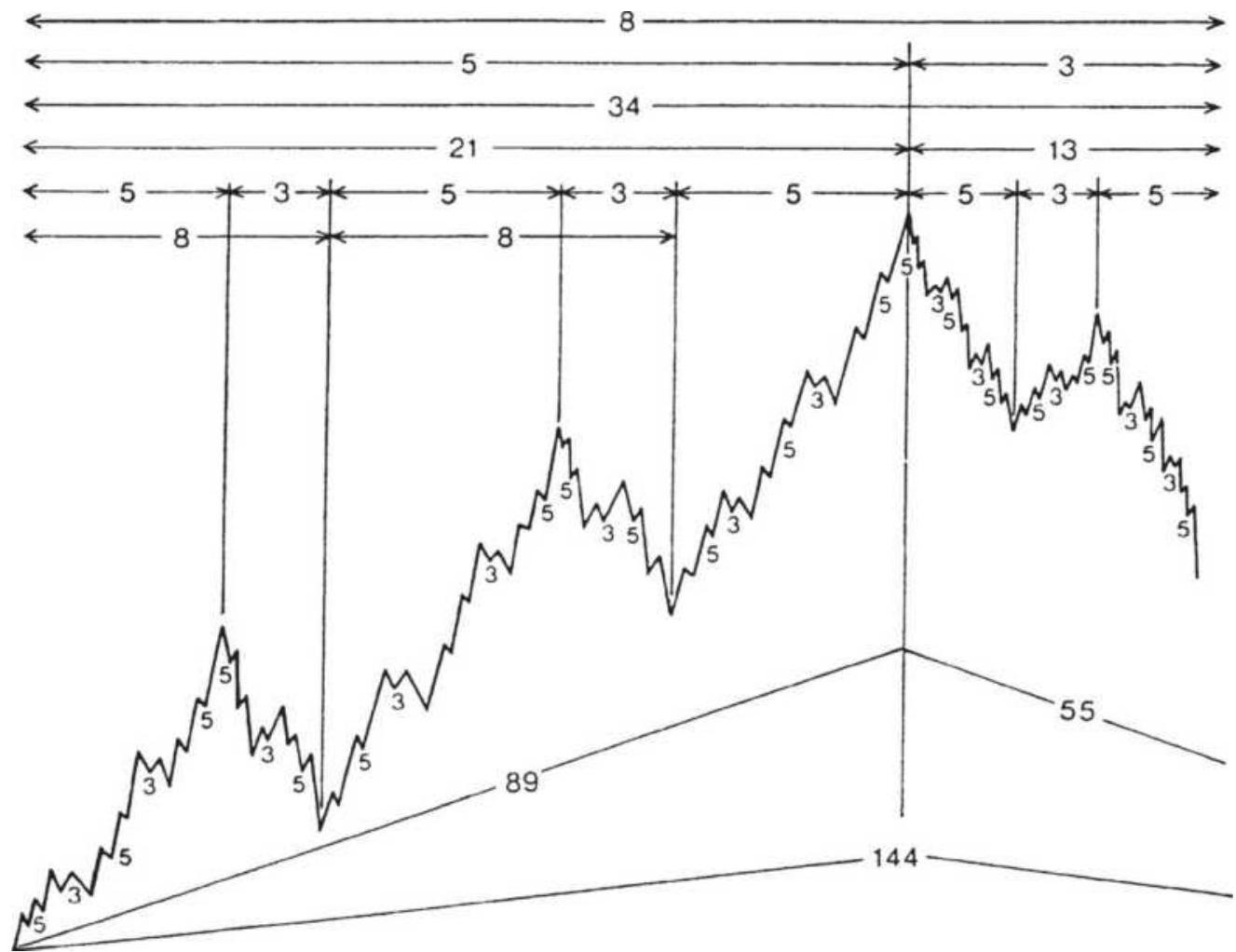

FIGURE 14.28 Elliott's complete wave cycle.

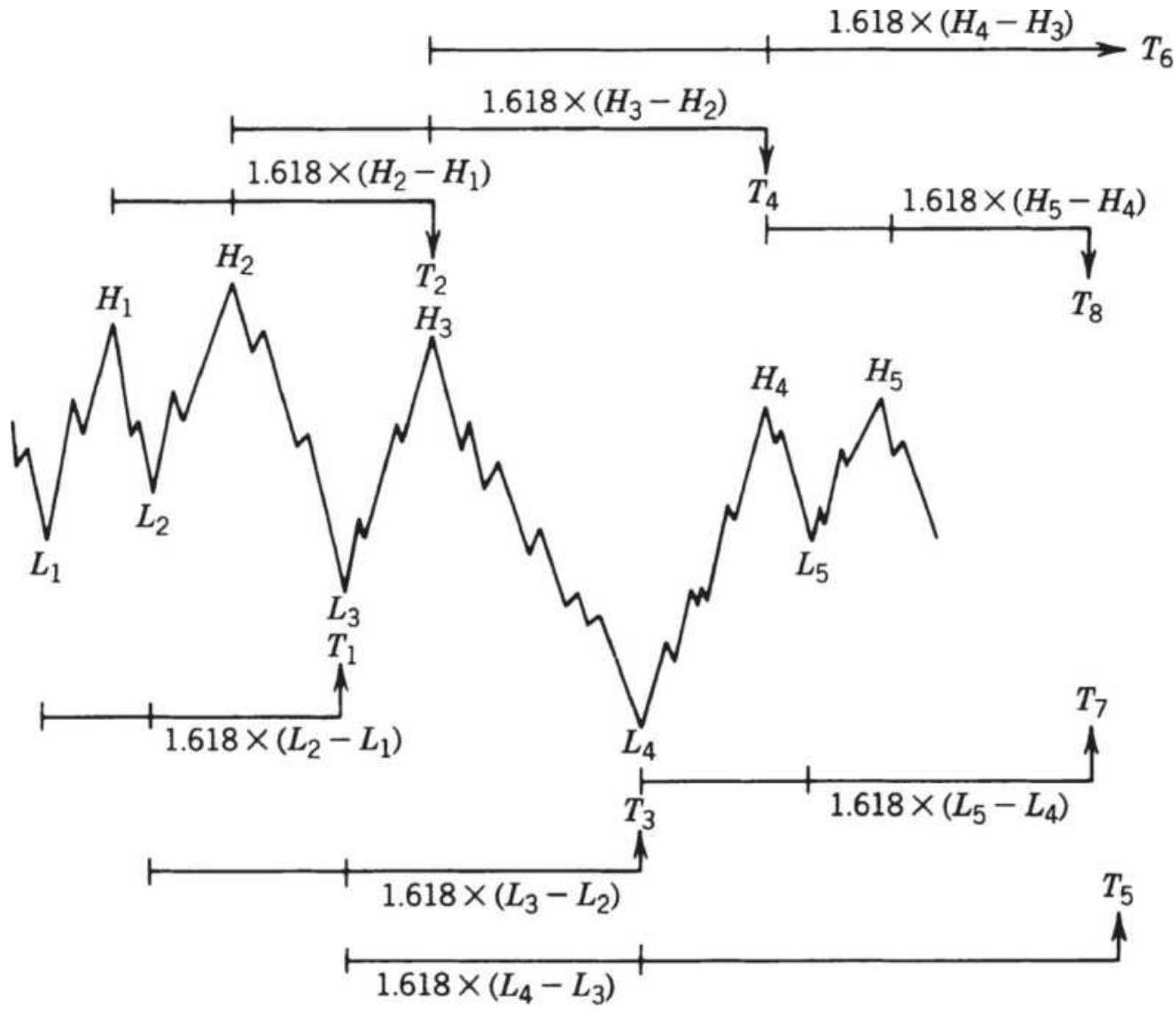

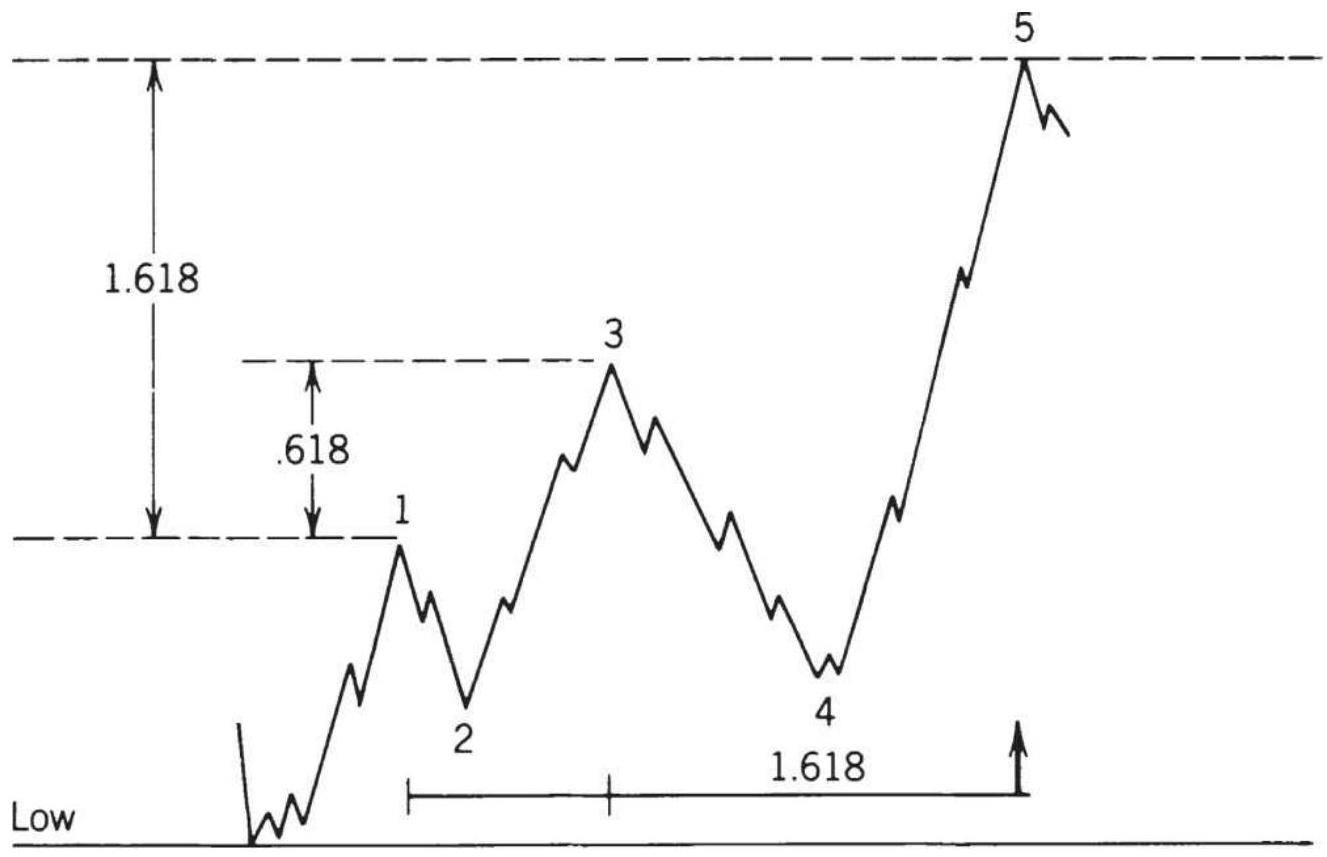

FIGURE 14.29 Calculation of time-goal days.

FIGURE 14.30 Price goals for standard 5 -wave moves.

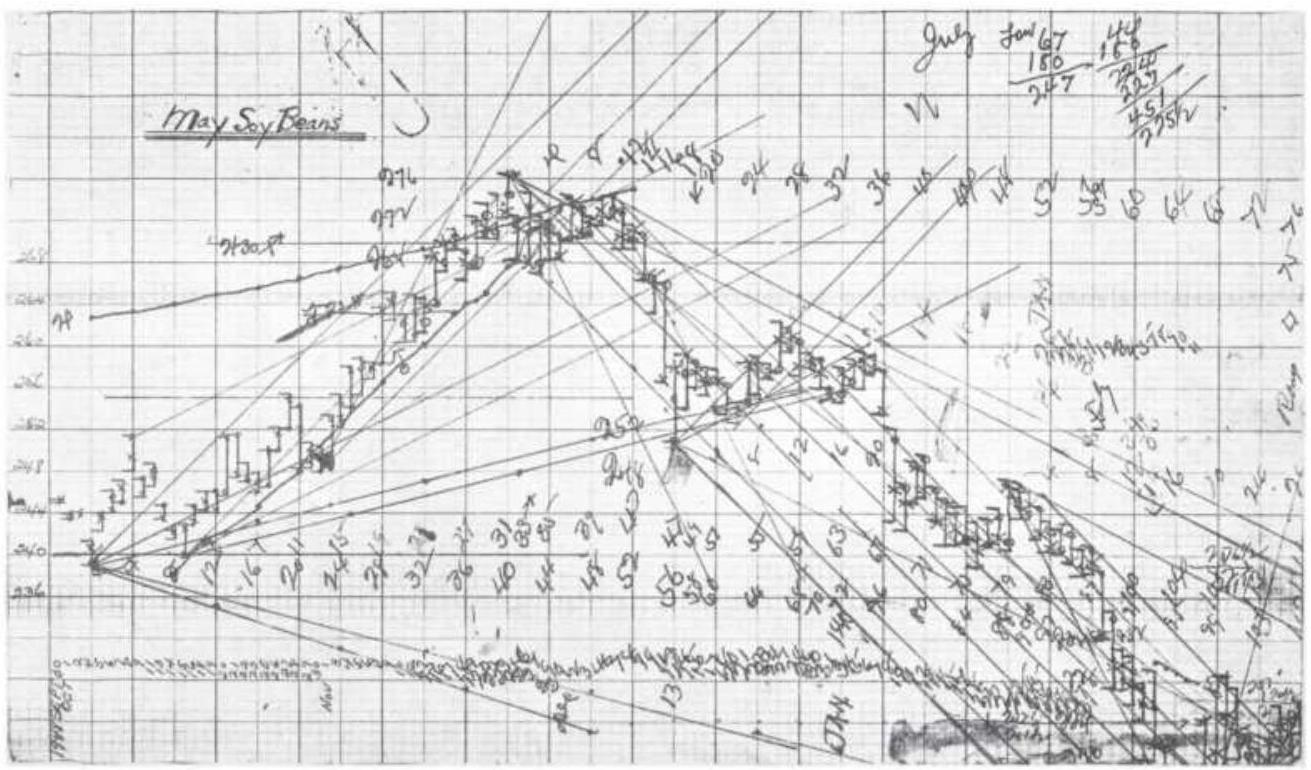

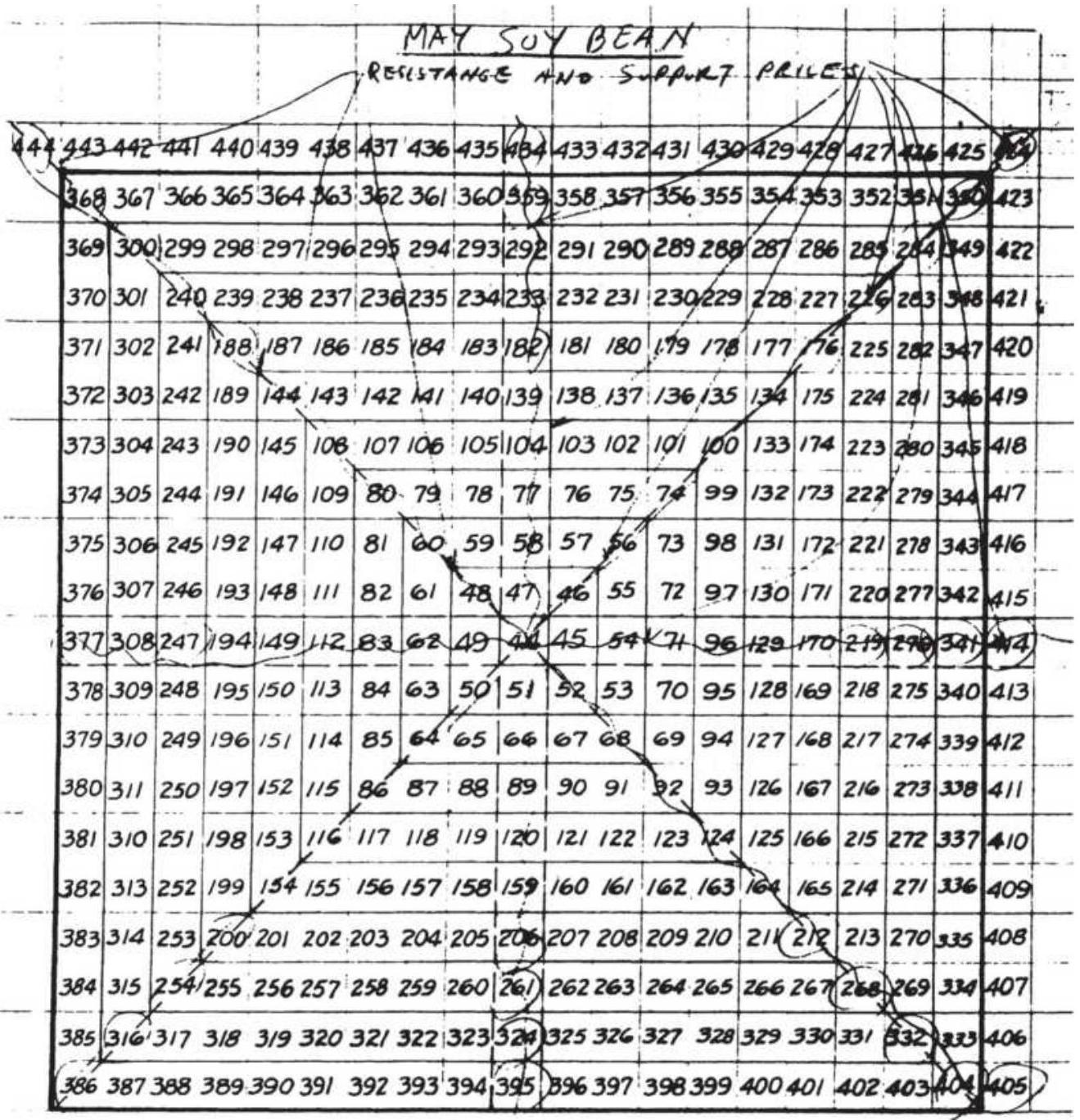

FIGURE 14.31 Gann's soybean worksheet.

FIGURE 14.32 May soybean square.

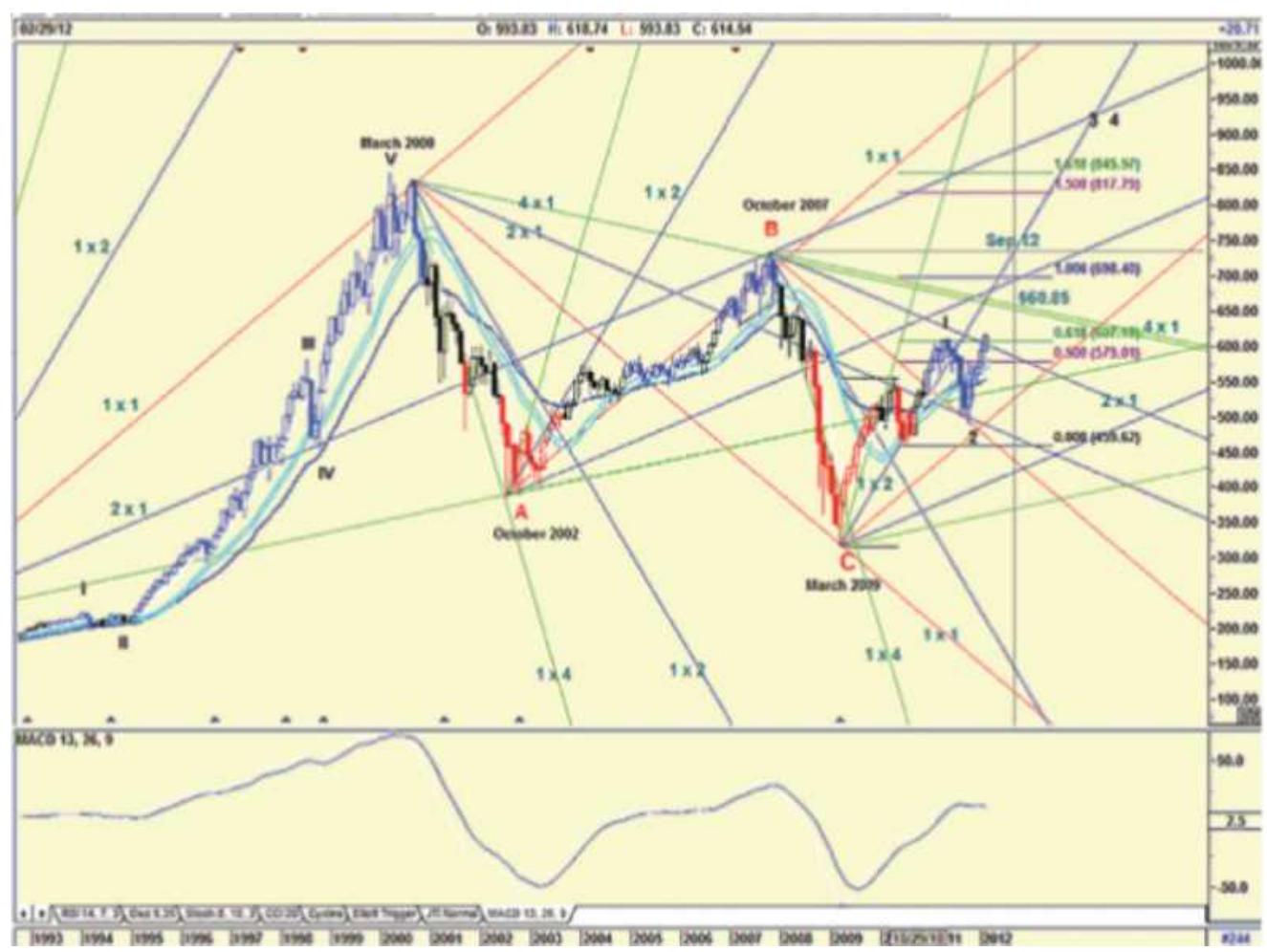

FIGURE 14.33 An example of Gann angles from ganntrader.com.

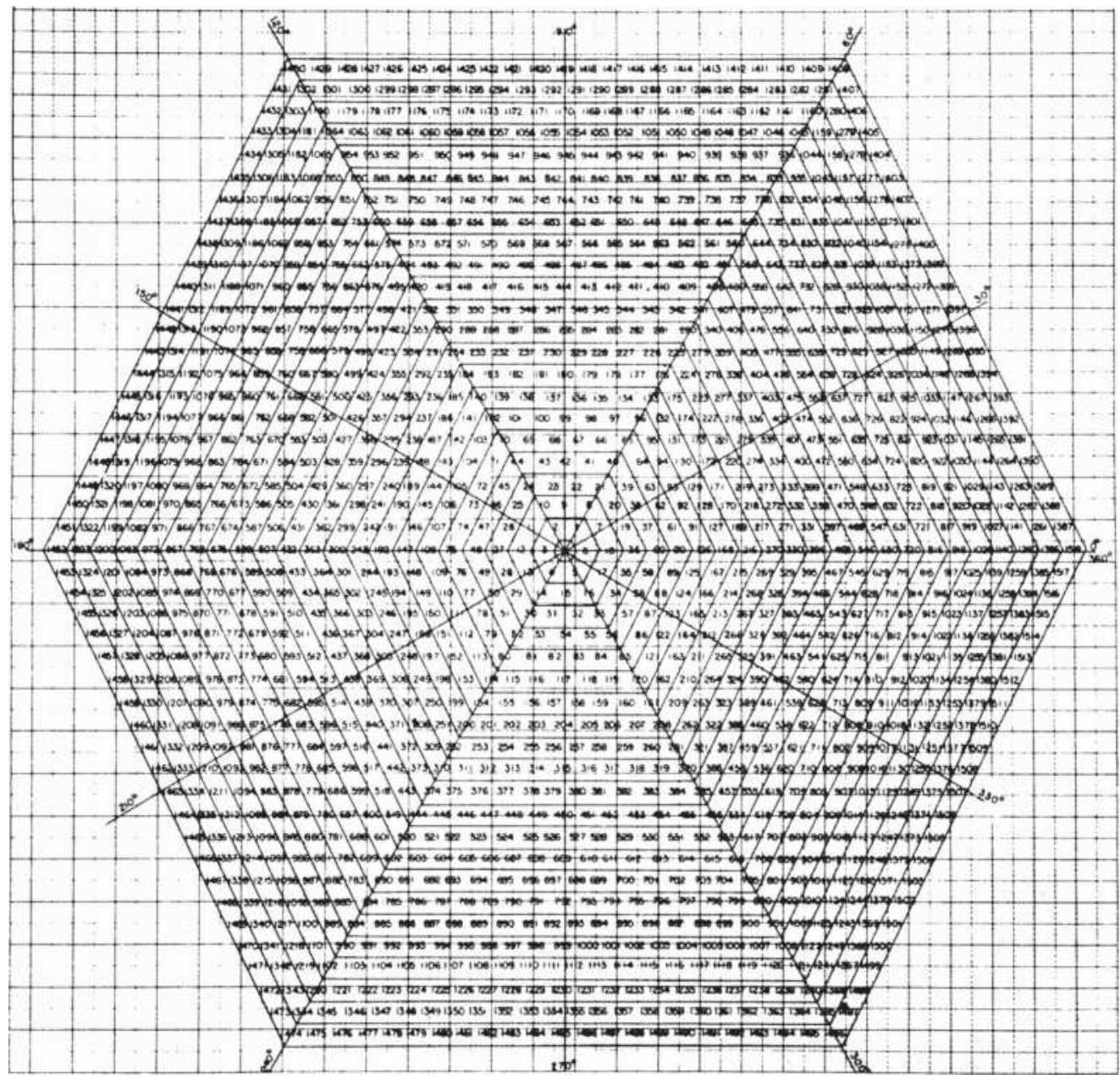

FIGURE 14.34 The Hexagon Chart.

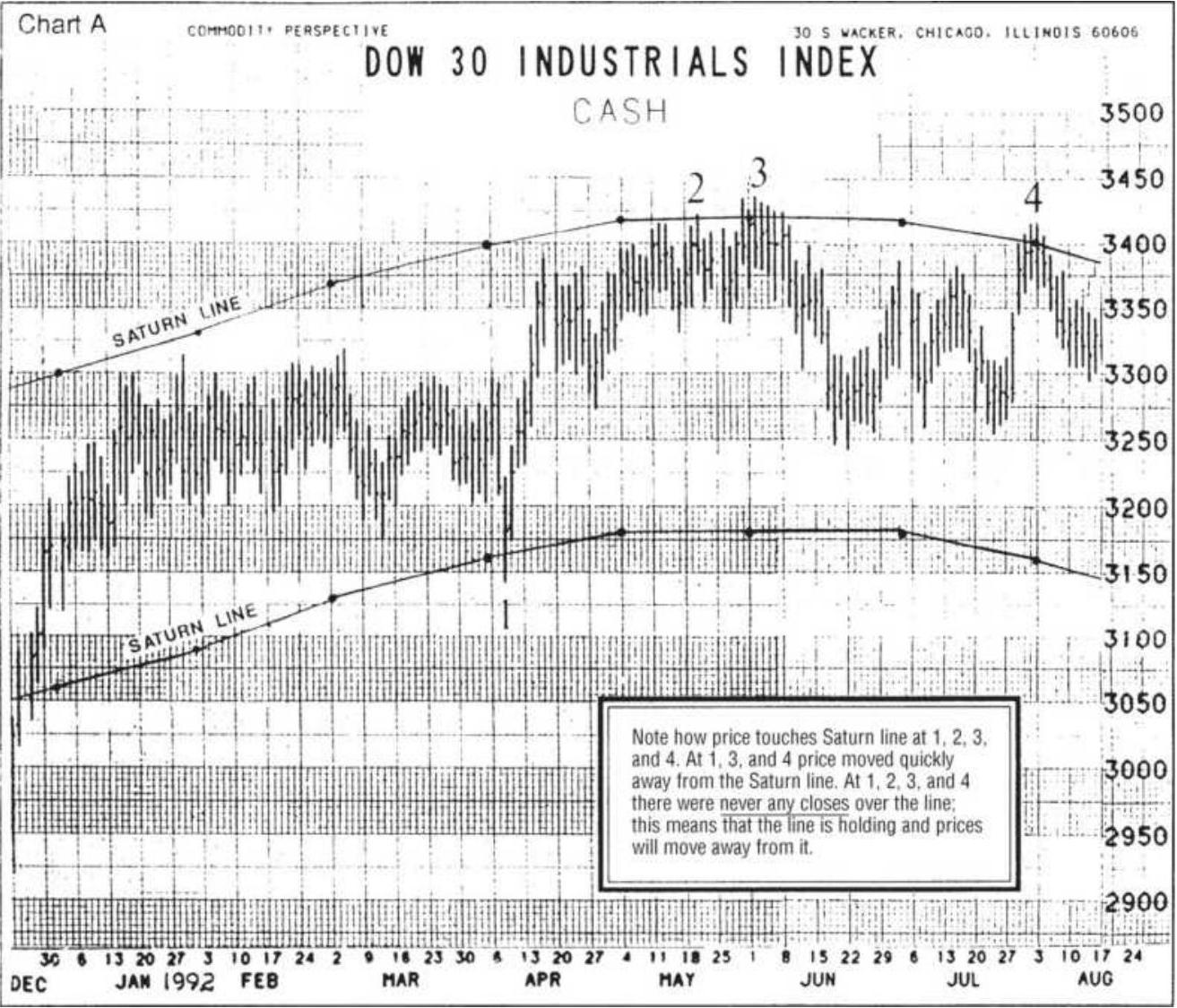

FIGURE 14.35 Saturn lines drawn on the Dow

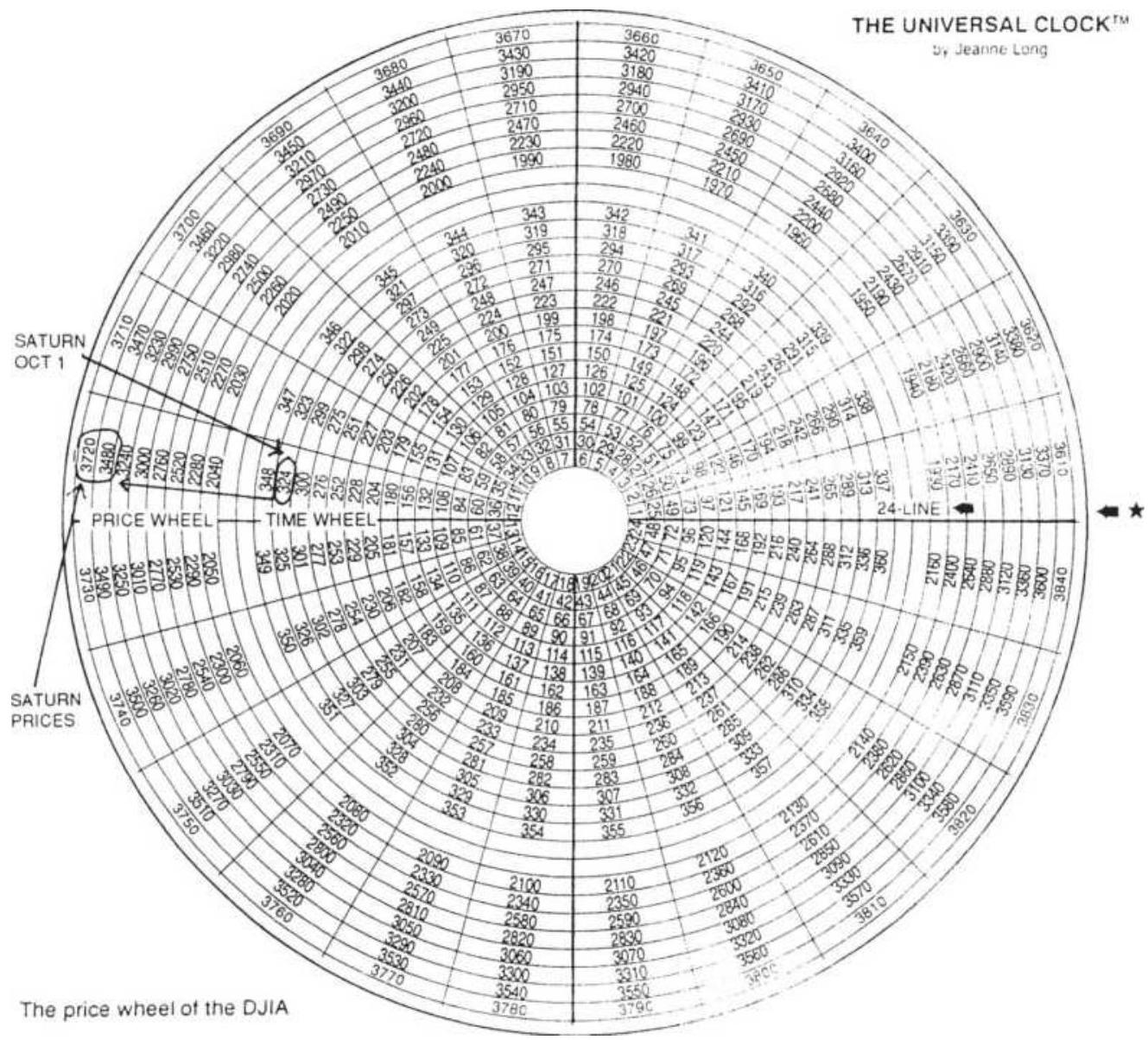

Industrial Average.

FIGURE 14.36 The DJIA Clock.

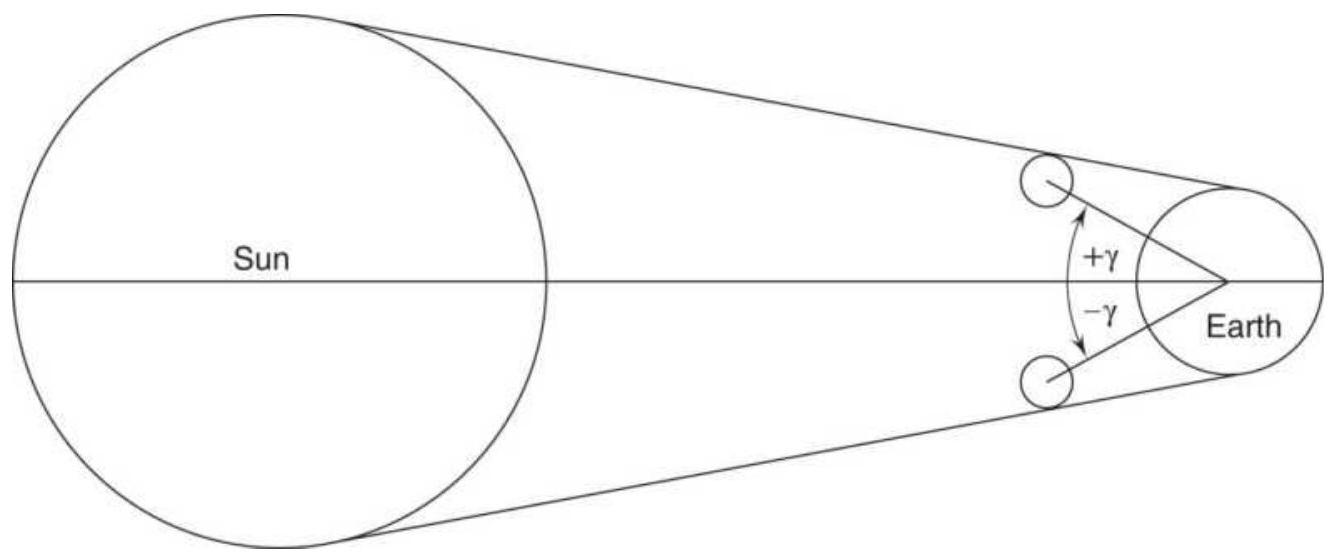

FIGURE 14.37 Geometry of a solar eclipse.

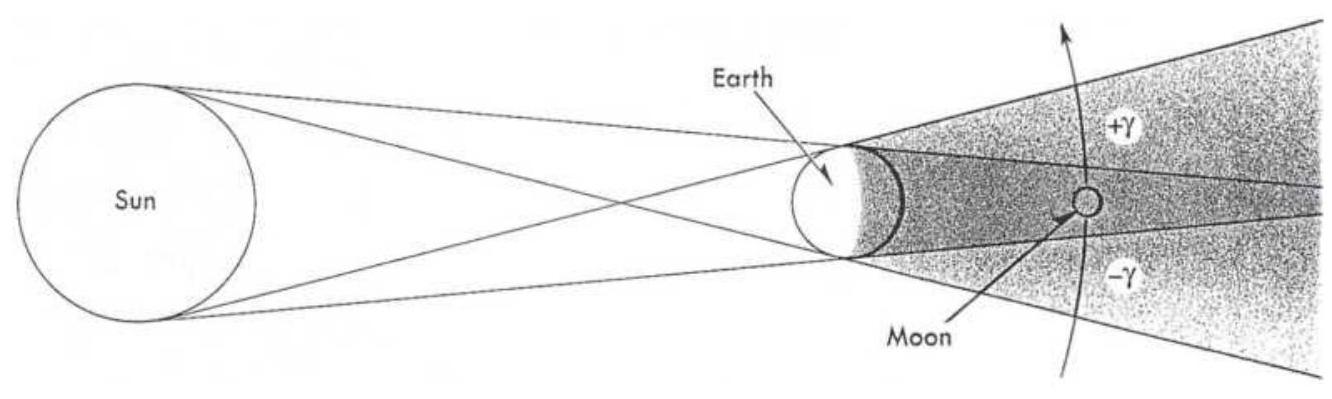

FIGURE 14.38 Geometry of a lunar eclipse.

\section*{Chapter 15}

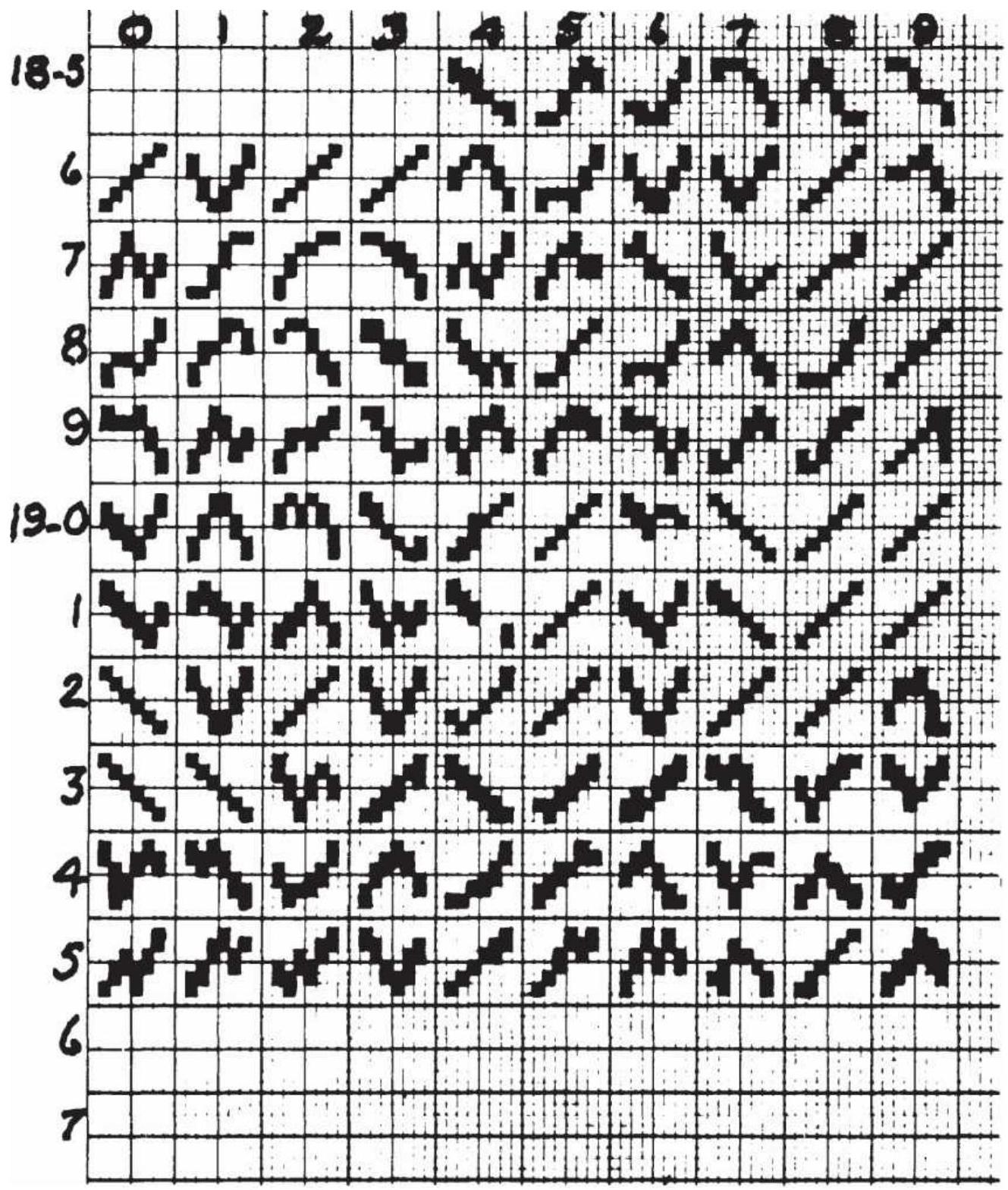

FIGURE 15.1 Graph of the New York Stock Market.

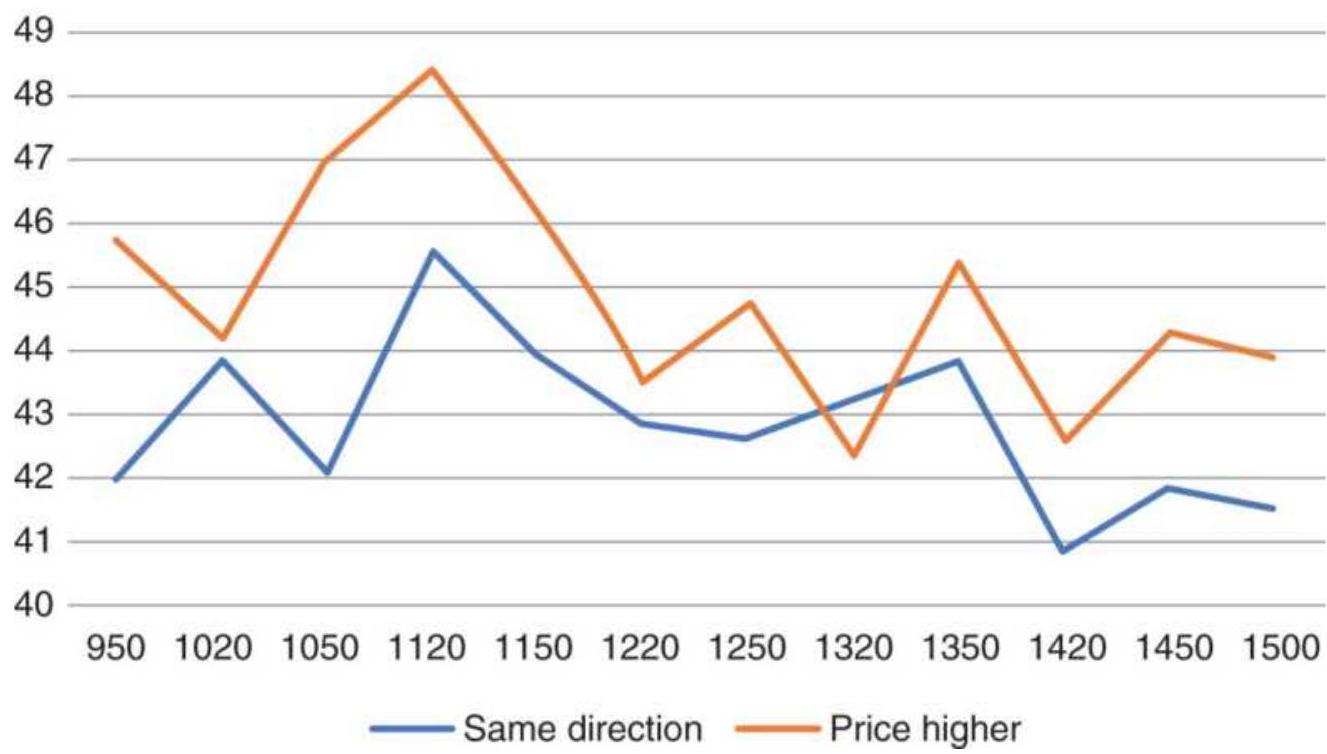

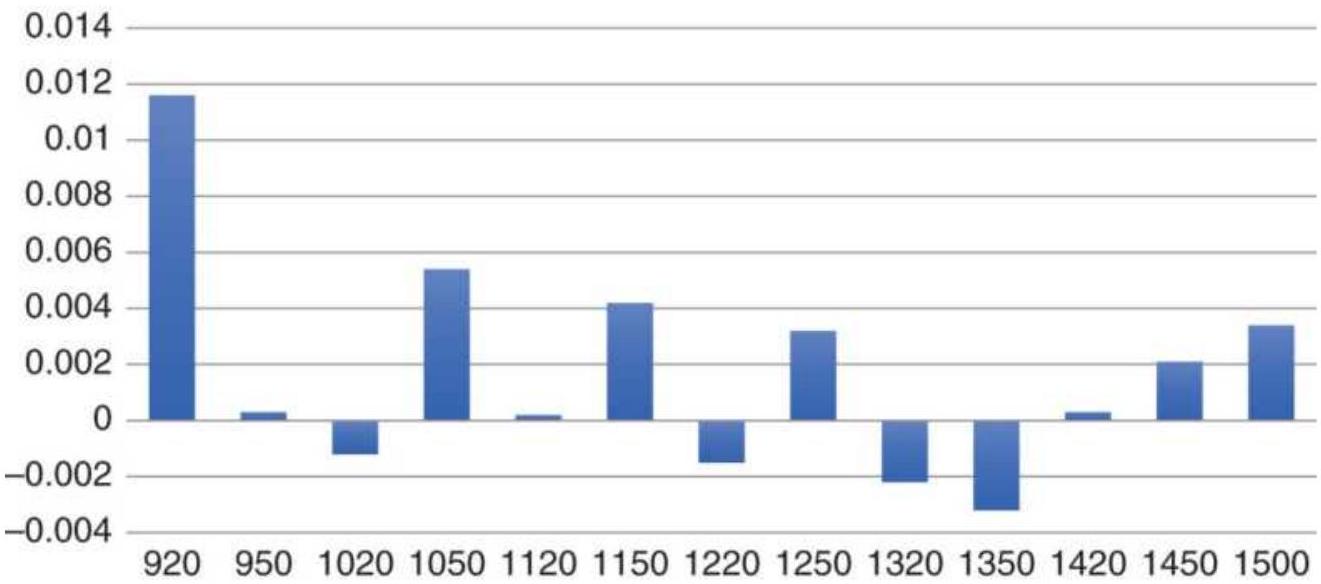

FIGURE 15.2 30-year bond futures percentage moves for bars in the same direc...

FIGURE 15.3 U.S. bond futures, average net point change by bar.

FIGURE 15.4 S\&P back-adjusted futures with intervals of study marked.

FIGURE 15.5 S\&P time patterns showing three different market periods corresp...

FIGURE 15.6 Back-adjusted futures for crude oil showing four intervals with ...

FIGURE 15.7 Crude oil time pattern for the sharp price decline from the high...

FIGURE 15.8 Crude oil time patterns for three cases of bull market, sideways...

FIGURE 15.9 U.S. 30-year bond futures, highs and lows by time.

FIGURE 15.10 Distribution of highs and lows for \(S \& P\) futures, during the bull...

FIGURE 15.11 S\&P futures, cumulative

percentage of highs and lows, 2009-2017...

FIGURE 15.12 S\&P futures, frequency of highs and lows during the financial c...

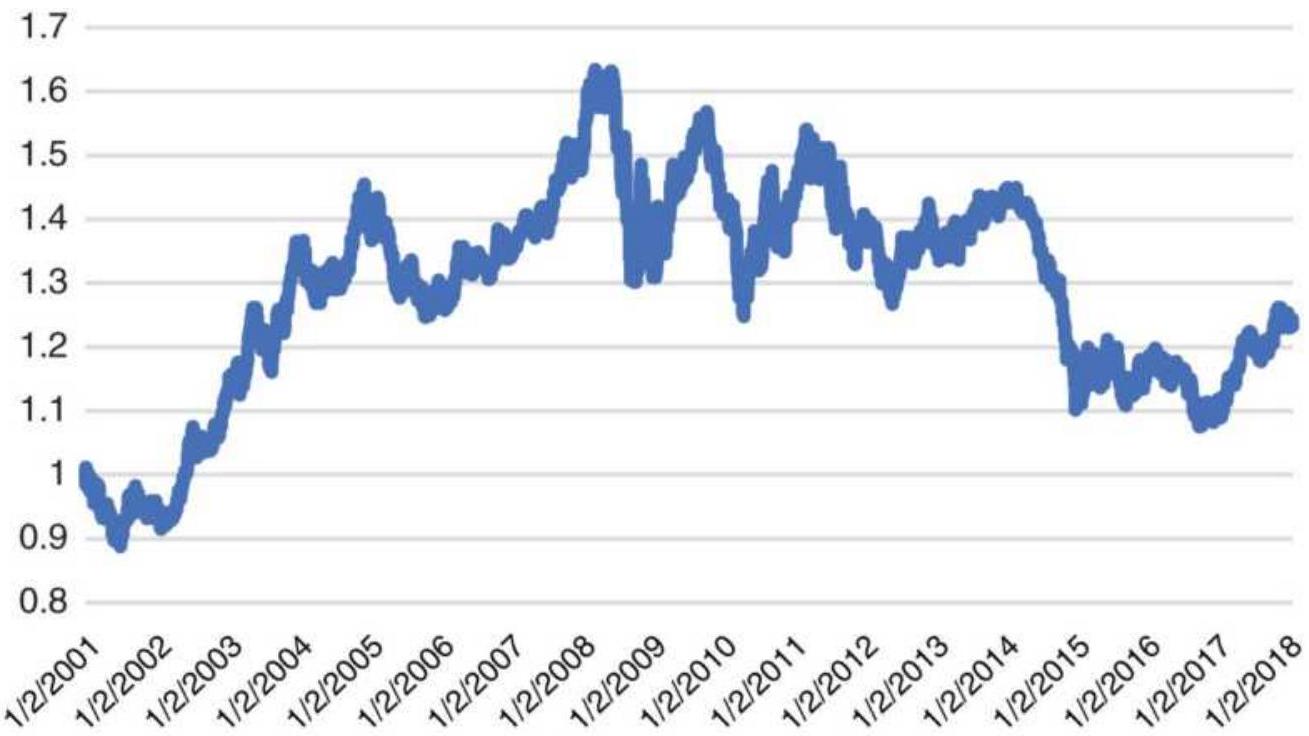

FIGURE 15.13 EURUSD futures from 2001 show wide price swings.

FIGURE 15.14 EURUSD high-lows marked with the times when the U.S. and Europe...

FIGURE 15.15 U.S. 30 -year bond weekday patterns, 2000-April 2018.

FIGURE 15.16 Weekday patterns for heating oil futures, 2000-April 2018.

FIGURE 15.17 Heating oil weekday patterns, April 2017-April 2018.

FIGURE 15.18 Weekday patterns for S\&P futures, 2000-April 2018.

FIGURE 15.19 S\&P futures weekday patterns, 2009-2017.

FIGURE 15.20 Amazon (AMZN) weekday patterns during the bull market, 2009-Apr...

FIGURE 15.21 U.S. bonds weekday patterns filtered with a 120-day moving aver...

FIGURE 15.22 S\&P futures weekday patterns filtered by three trends, \(30,60, \ldots\)

FIGURE 15.23 U.S. 30 -year bond futures, 2010-April 2018, average price chang...

FIGURE 15.24 U.S.30-year bond futures, volume ratio.

FIGURE 15.25 Euro futures price differences by day of month, 2010-2017.

FIGURE 15.26 Euro futures volume ratio by day of month, 2010-2017.

FIGURE 15.27 S\&P futures price differences,

2010-2017.

FIGURE 15.28 S\&P futures volume ratio, 20102017.

FIGURE 15.29 Amazon returns by day, 20102017.

FIGURE 15.30 Amazon volume ratio by day, 2010-2017.

FIGURE 15.31 The 3-day trade system applied to SPY, showing net P/L with and...

FIGURE 15.32 Specific buying and selling days. Chapter 16

FIGURE 16.1 Moving averages applied to a 20minute bar crude oil business da...

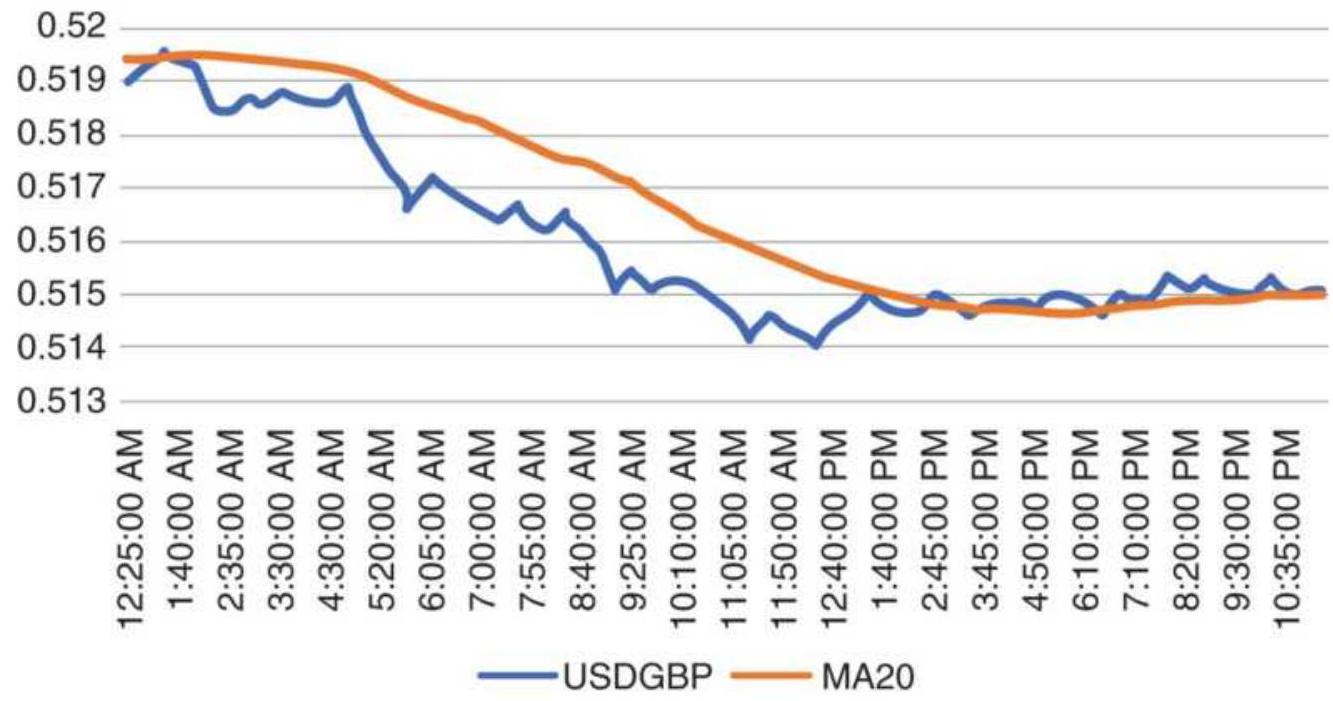

FIGURE 16.2 USDGBP 5-min, 24-hr data with a 20-bar moving average.

FIGURE 16.3 USDGBP 5-min data grouped by 100-tick bars, with a 20-bar moving...

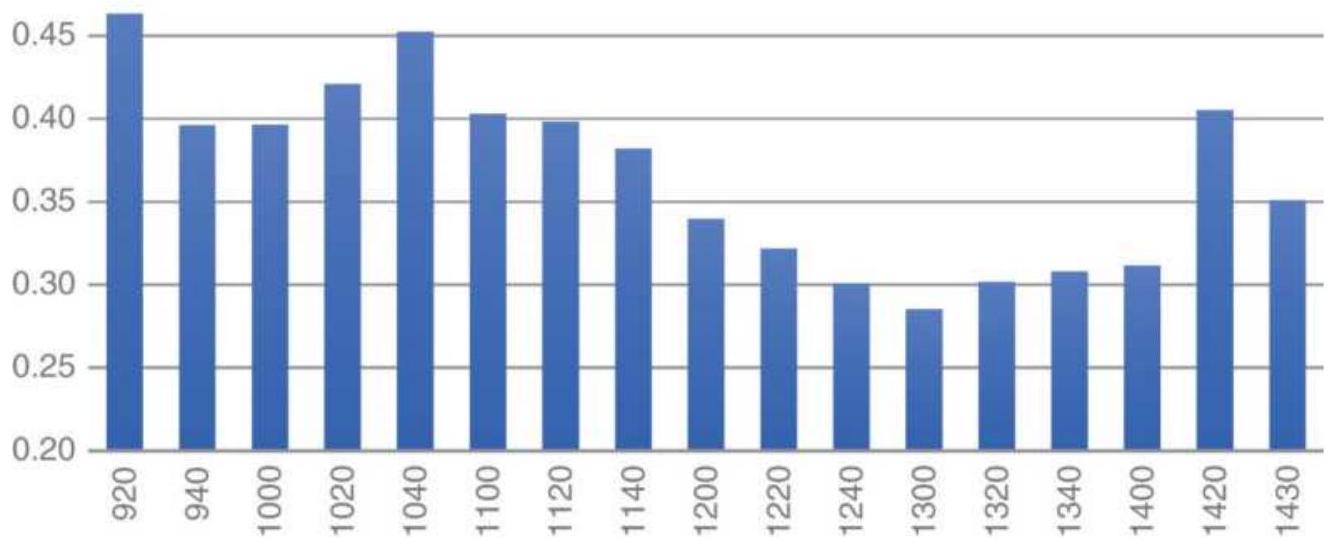

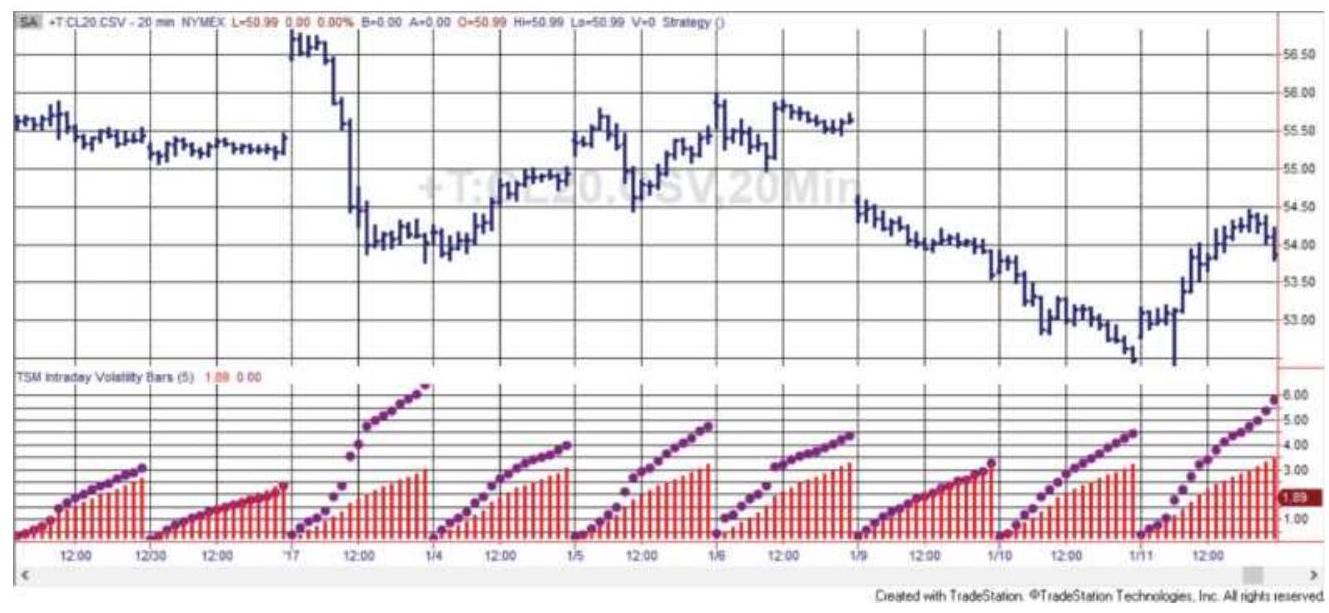

FIGURE 16.4 Intraday volatility pattern for 20min crude oil, showing a jump...

FIGURE 16.5 Intraday volatility indicator using past 5 days applied to 20-mi...

FIGURE 16.6 Intraday timing of market

\(\underline{\text { movement. }}\)

FIGURE 16.7 Midday support and resistance breakout applied to 30 -min S\&P fut...

FIGURE 16.8 S\&P 30-min bars with a 2-bar breakout.

FIGURE 16.9 Using the LBR/RSI \({ }^{\mathrm{TM}}\) indicator to trade a 1st-hour breakout.

FIGURE 16.10 Example of using \(0.5 \times\) ATR thresholds from the open.

FIGURE 16.11 Example of using \(0.5 \times\) ATR thresholds from the previous close.

FIGURE 16.12 Optimization of \(30-\mathrm{min}\) S\&P breakout using an ATR factor measure...

FIGURE 16.13 Optimization of \(30-\mathrm{min}\) S\&P breakout using an ATR factor measure...

FIGURE 16.14 S\&P trend filter optimization based on breakout from the open....

FIGURE 16.15 S\&P trend filter optimization based on a factor of the ATR meas...

FIGURE 16.16 Net profits from S\&P breakout using the open and a trend filter...

FIGURE 16.17 Net profits from S\&P breakout using the previous close and a tr...

FIGURE 16.18 Mean reversion optimization based on the open using only the cu...

FIGURE 16.19 Net profits from the S\&P mean reversion strategy based on exten...

FIGURE 16.20 Fisher's buy-and-reverse scenario.

FIGURE 16.21 HFT market-maker sequence.

FIGURE 16.22 HFT with two similar markets,

\section*{trading the one that lags.}

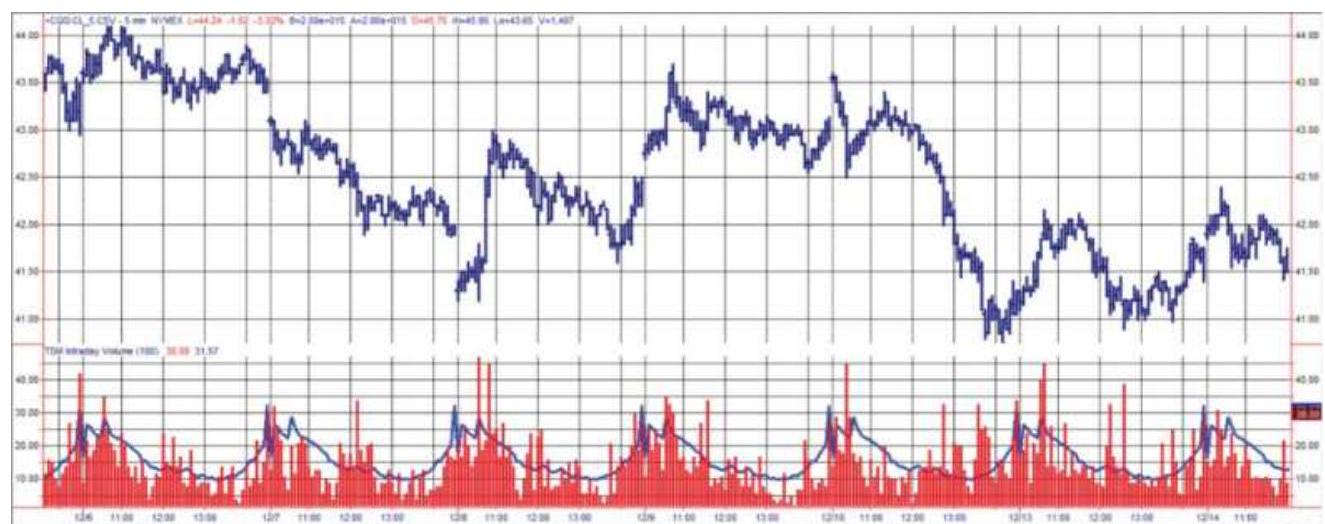

FIGURE 16.23 Intraday volume patterns for crude oil, December 2004. The bott...

FIGURE 16.24 20-minute gold bars with today's bar volatility, the average ba...

Chapter 17

FIGURE 17.1 Similar price moves with low and high noise.

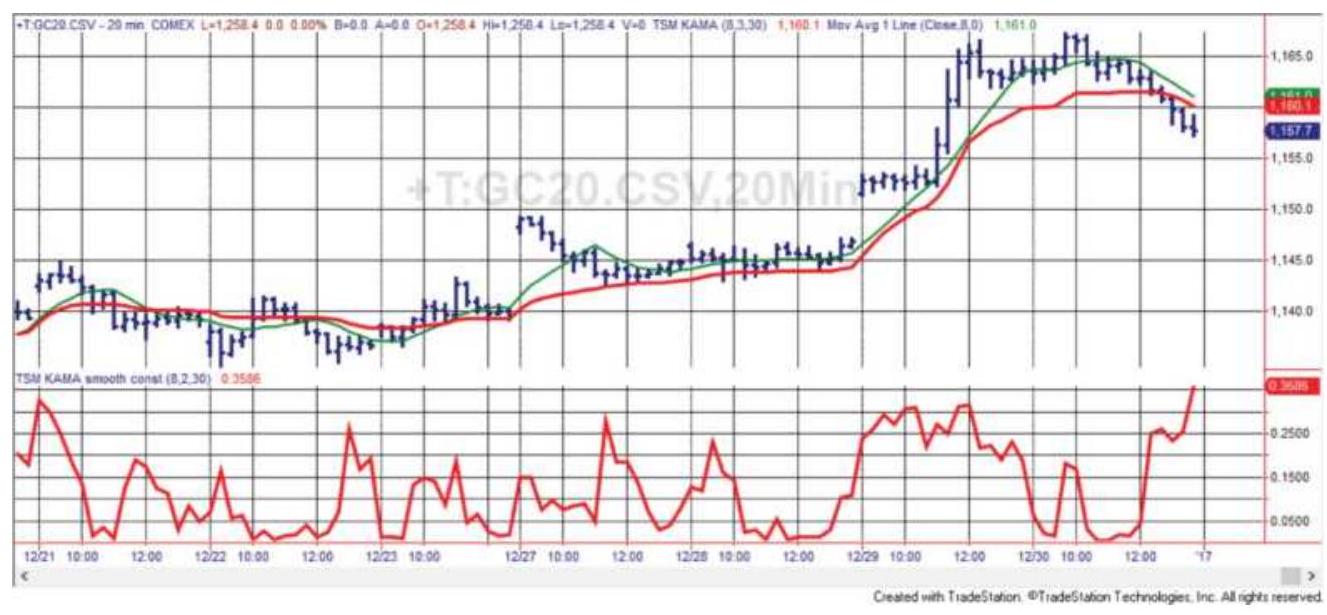

FIGURE 17.2 20-minute gold futures with an 8period Kaufman's Adaptive Movin...

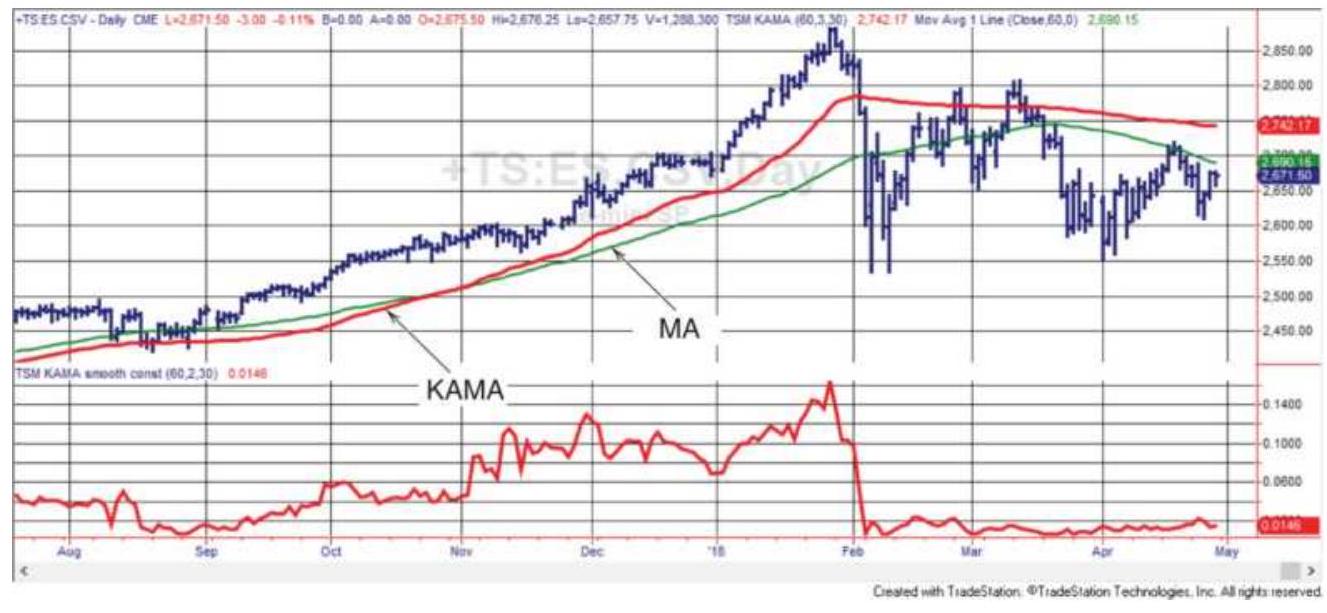

FIGURE 17.3 S\&P futures with a 60-day KAMA (the darker line) and MA in the t...

FIGURE 17.4 Total profits using an 8-period KAMA with a fixed filter applied...

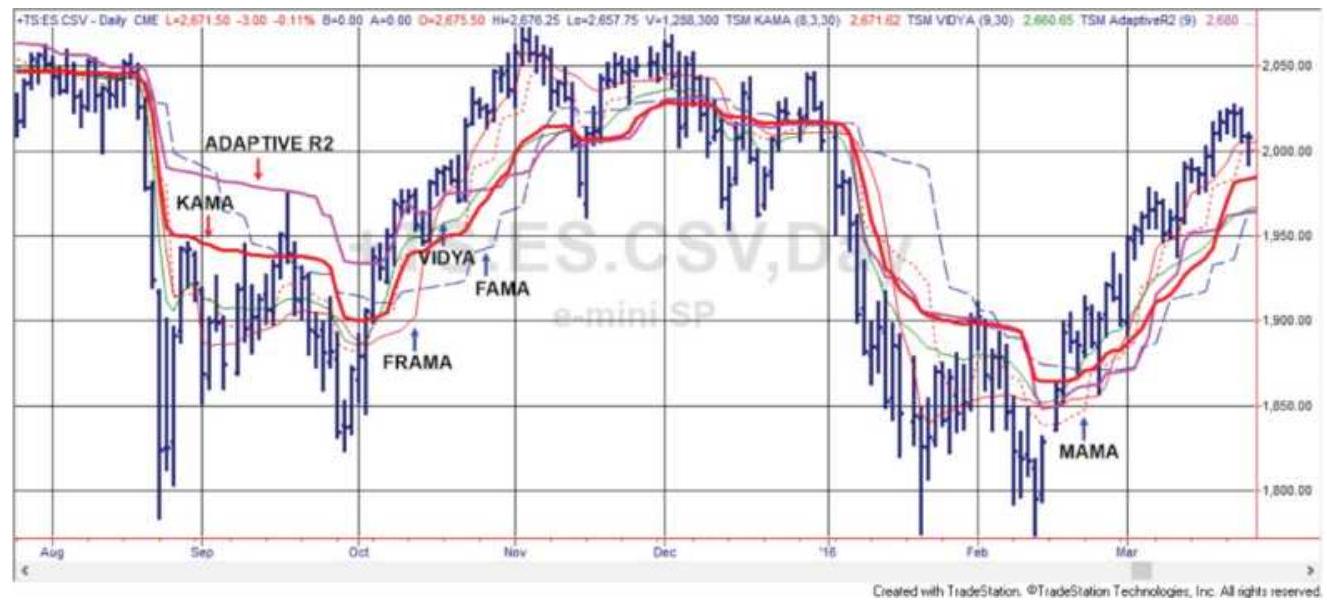

FIGURE 17.5 The adaptive indicators KAMA, VIDYA, Adaptive R2, MAMA, FAMA, an...

FIGURE 17.6 Comparison of the adaptive RSI,

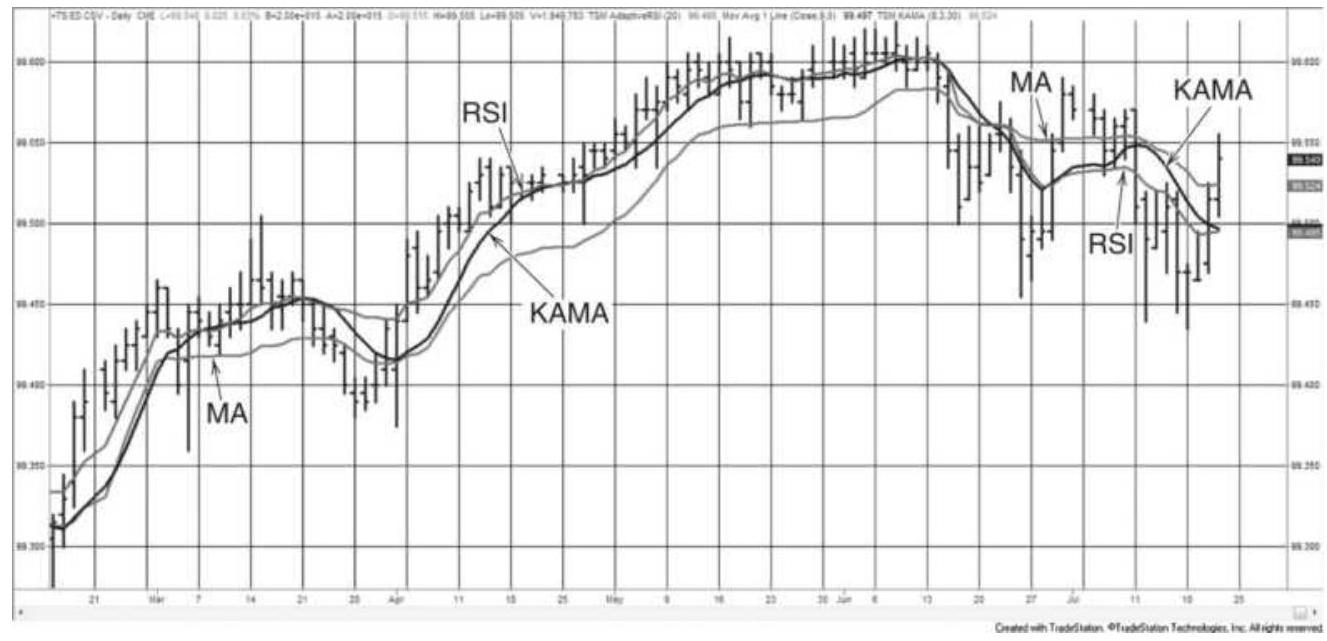

KAMA, and a 10 -day moving averag...

FIGURE 17.7 Parabolic Time/Price System

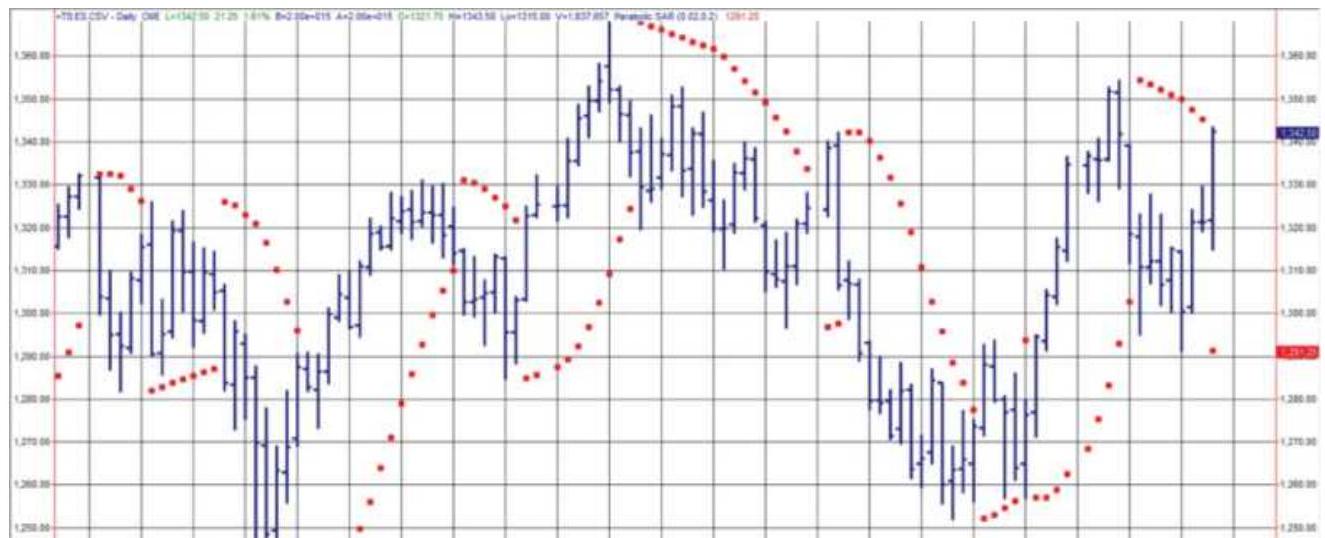

applied to emini S\&P futures, Februa...

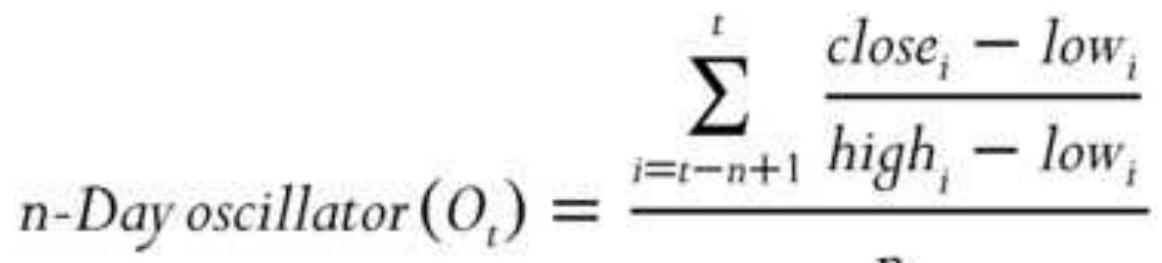

FIGURE 17.8 The Trend-Adjusted Oscillator applied to \(\mathrm{S} \& \mathrm{P}\) futures, September ...

FIGURE 17.9 Ehlers' Instantaneous Trend

applied to euro futures. The thicker...

Chapter 18

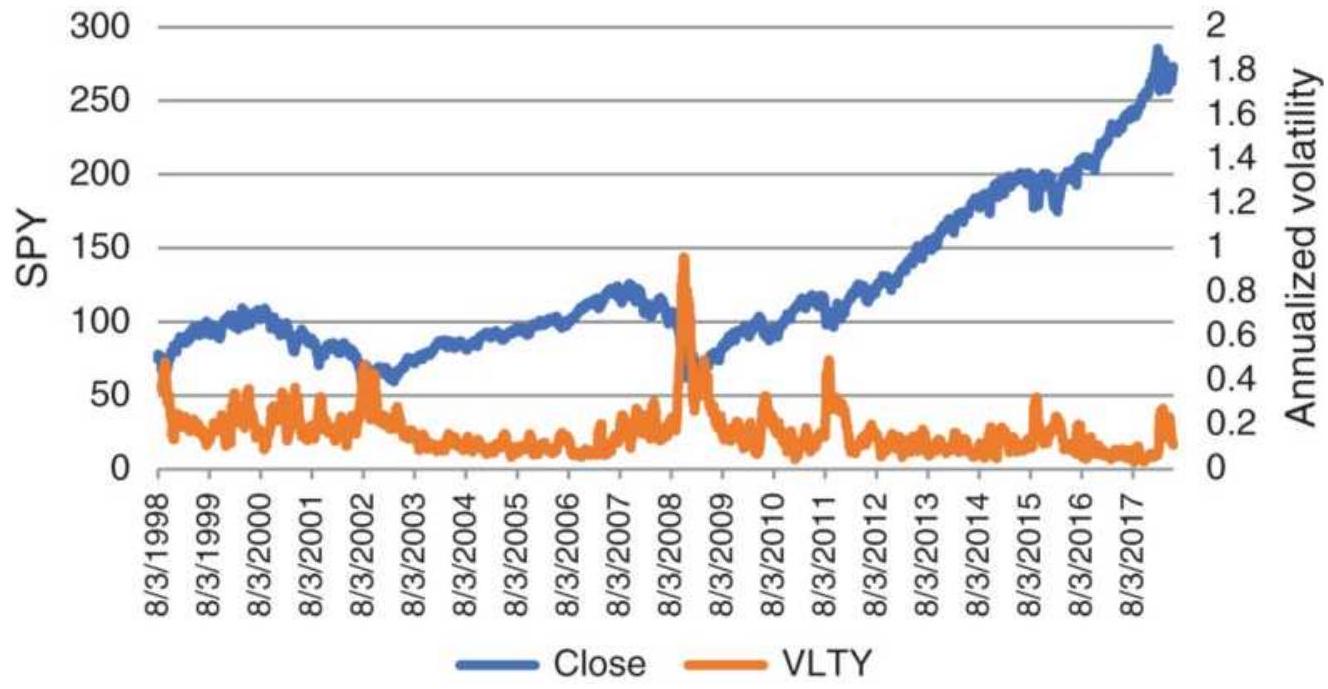

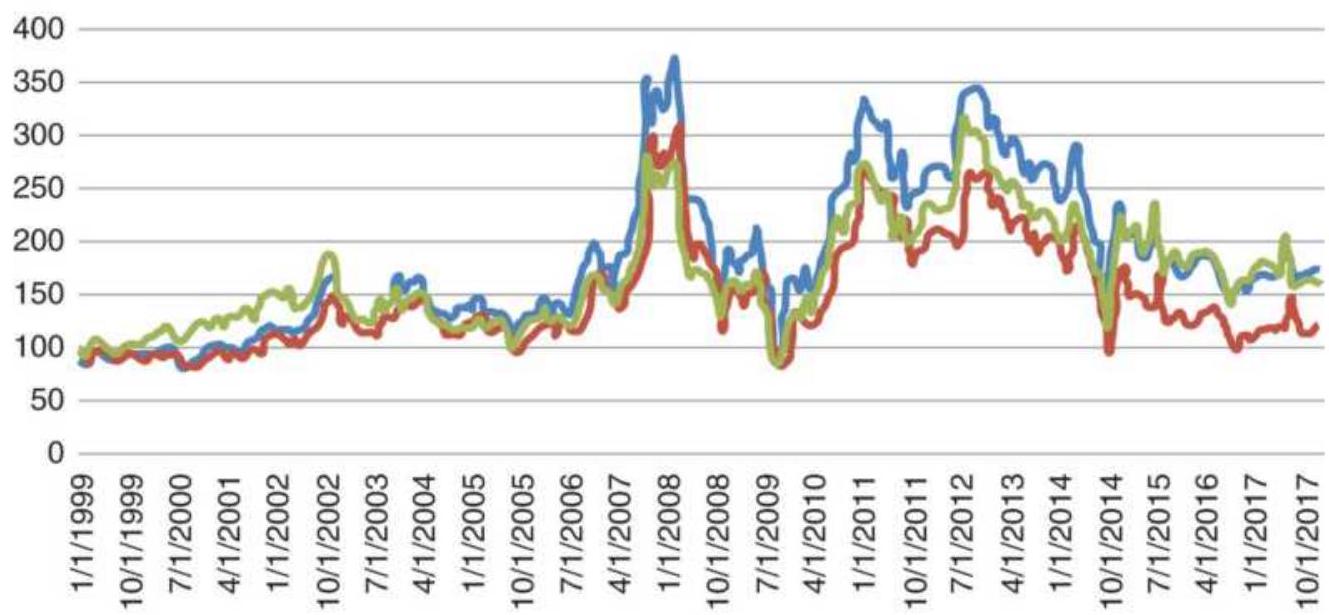

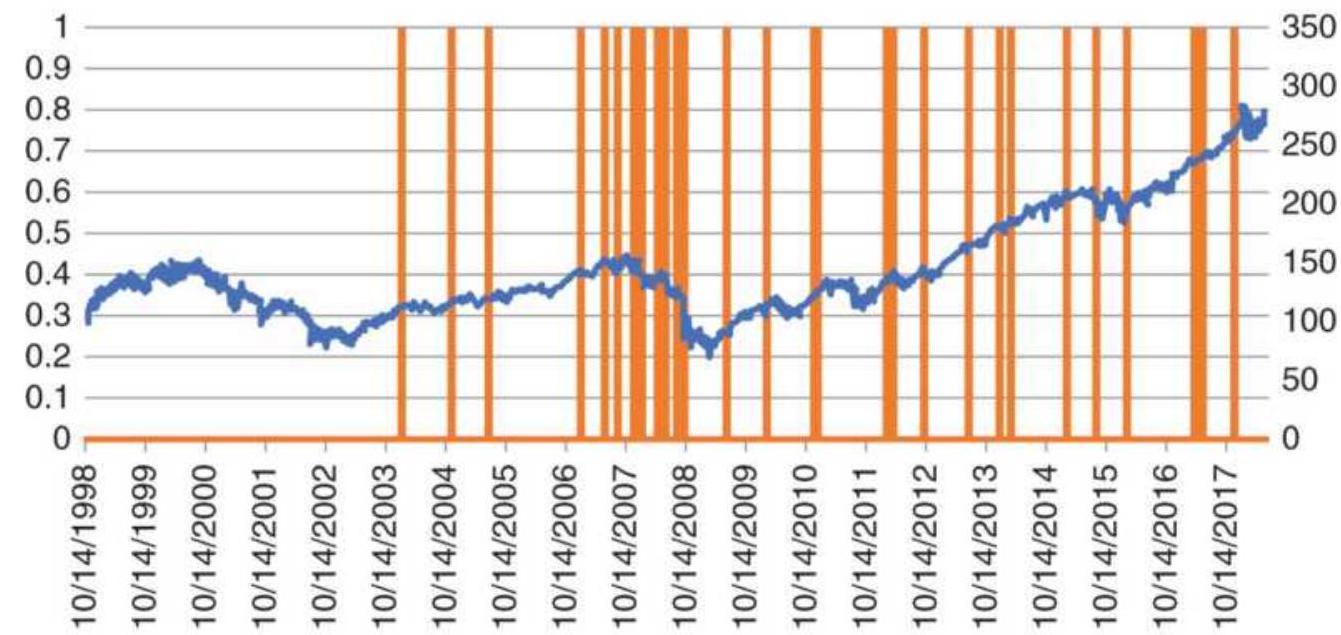

FIGURE 18.1 SPY prices and annualized

volatility from 1998 through April 201...

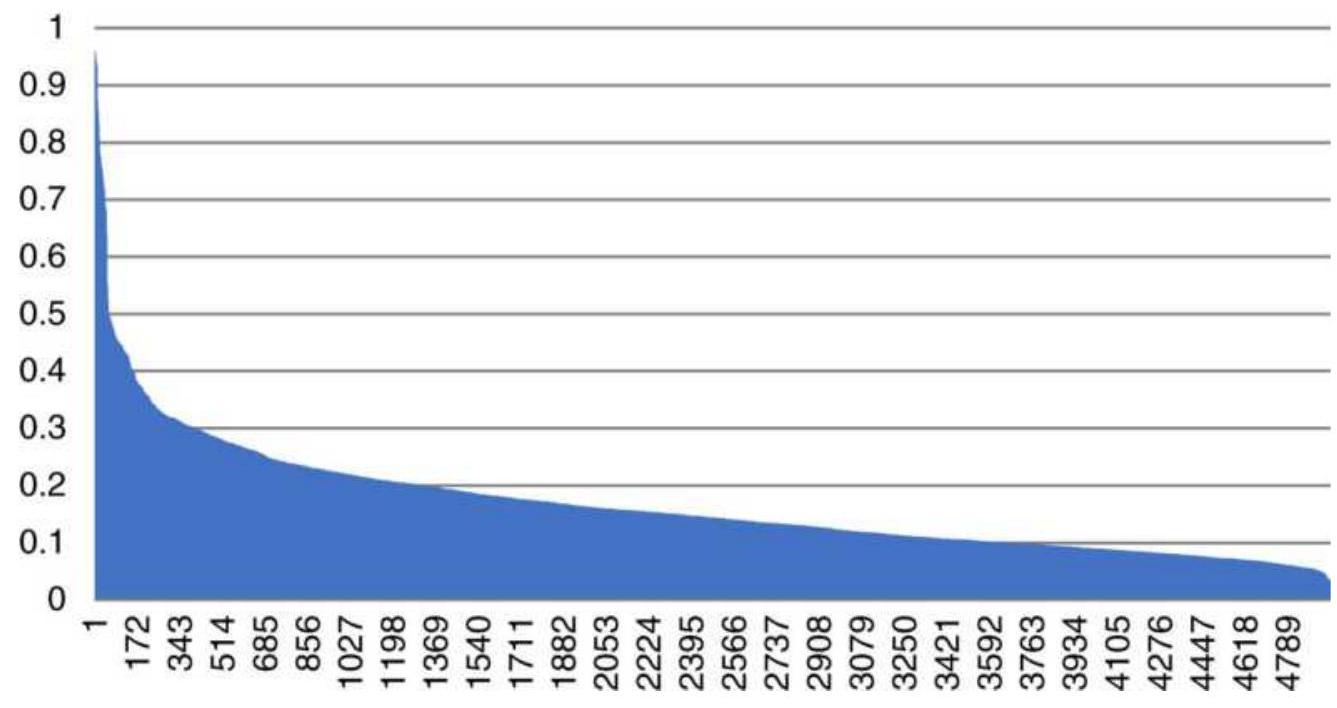

FIGURE 18.2 Daily values of 20-day annualized volatility of SPY sorted highe...

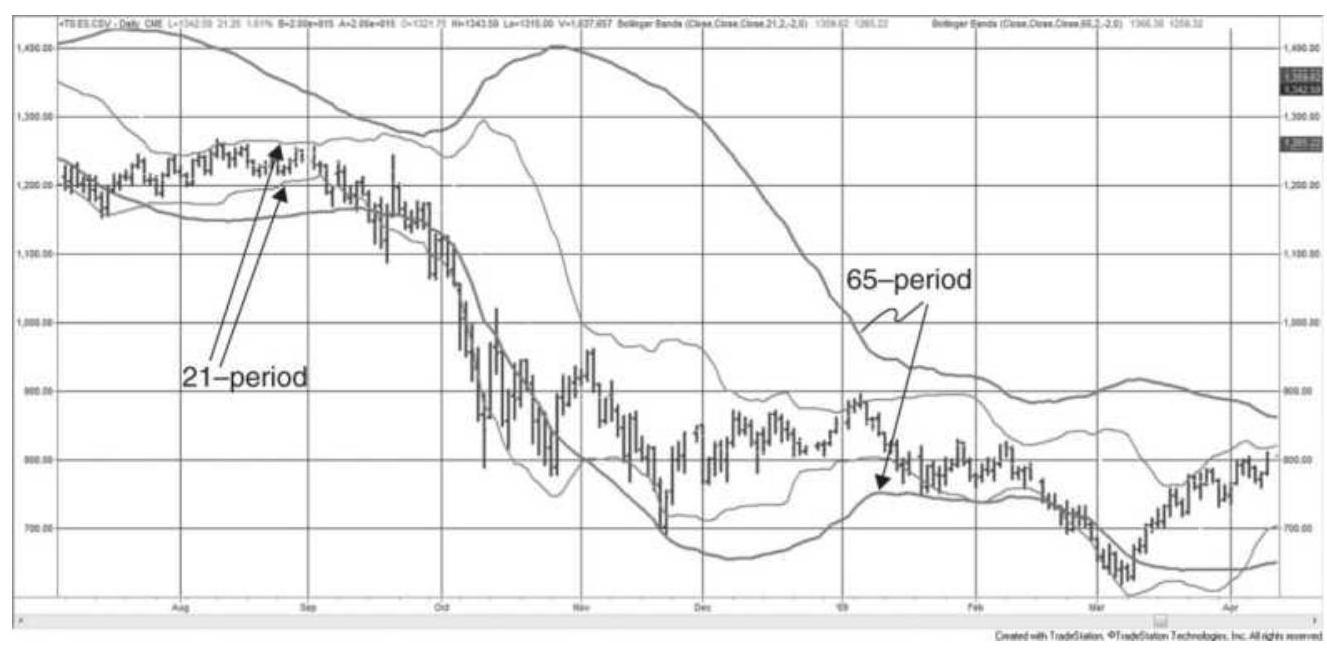

FIGURE 18.3 Comparison of 21-period and 65period Bollinger bands, applied t...

FIGURE 18.4 Kase's 3 DevStops with a "warning" line closer to the prices. Th...

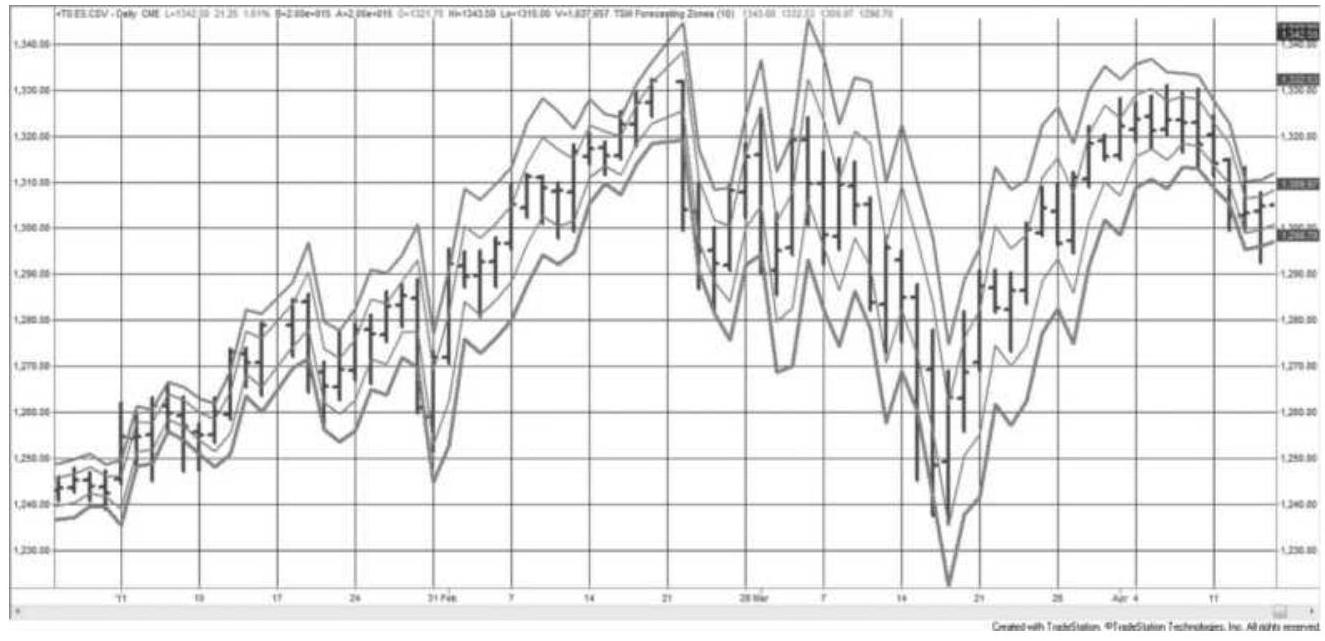

FIGURE 18.5 Forecasted trading zones using the Chande and Kroll method appli...

FIGURE 18.6 Wheat cash prices, and wheat adjusted for inflation and currency...

FIGURE 18.7 Distribution of wheat cash prices compared to the wheat price de...

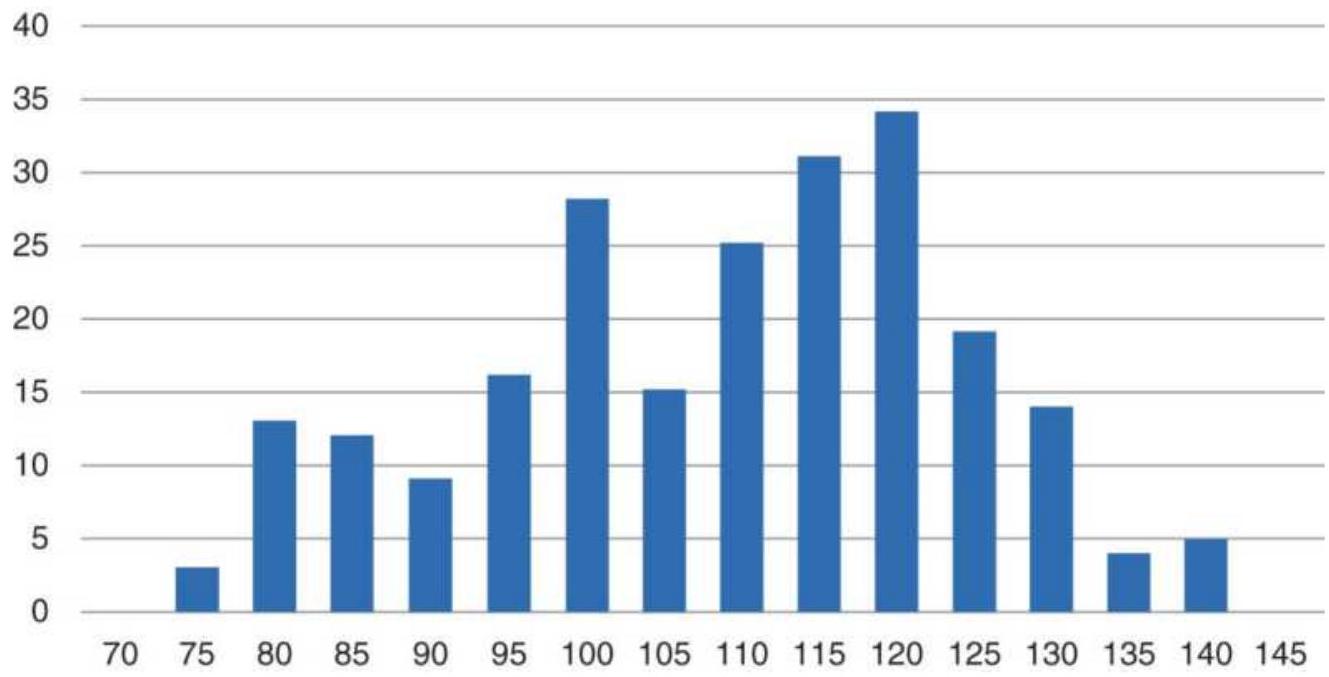

FIGURE 18.8 Price distribution of indexed cash

euro, 1999-2017.

FIGURE 18.9 Low volume (center panel) and low volatility (bottom panel), the...

FIGURE 18.10 Euro futures show that volume and volatility fall to their lowe...

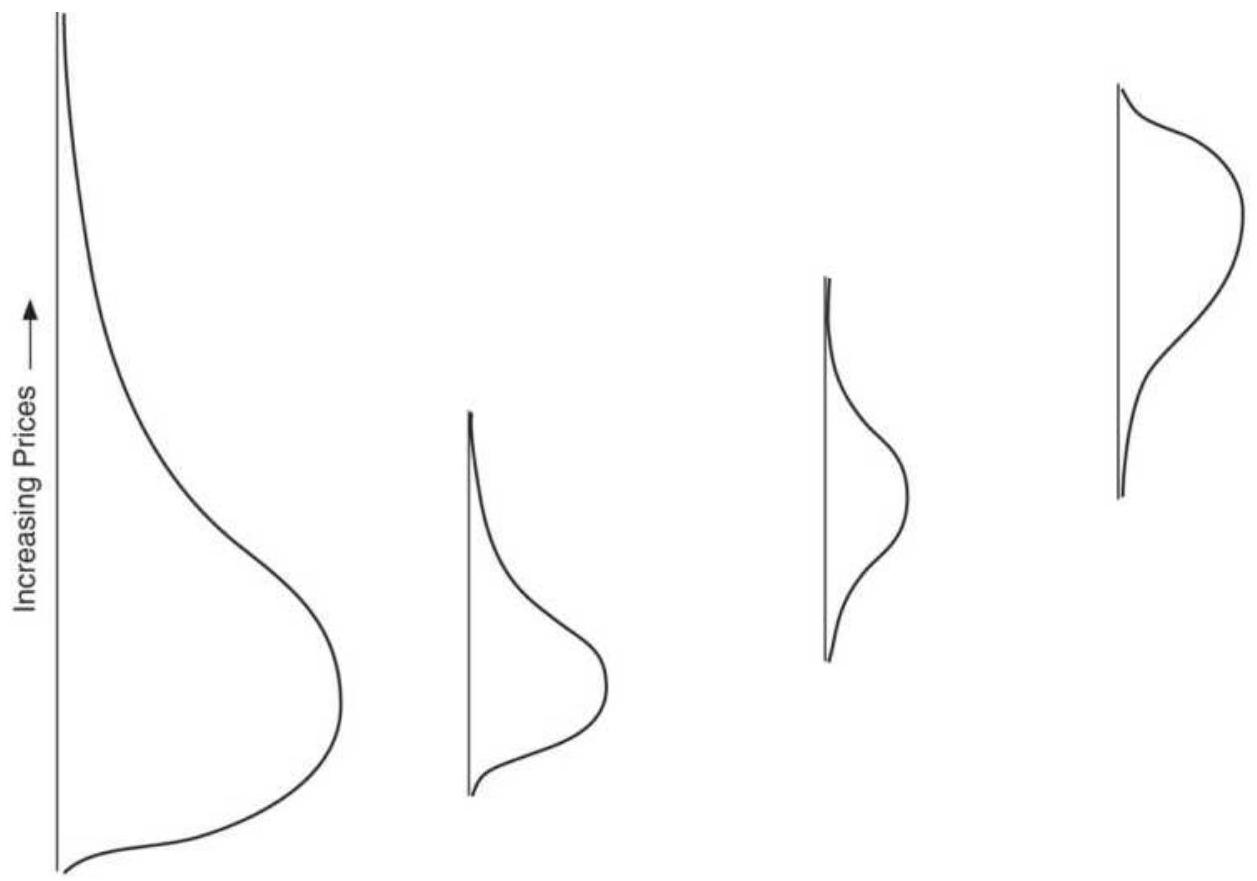

FIGURE 18.11 Three short-term distribution patterns. (a) A normal, long-term...

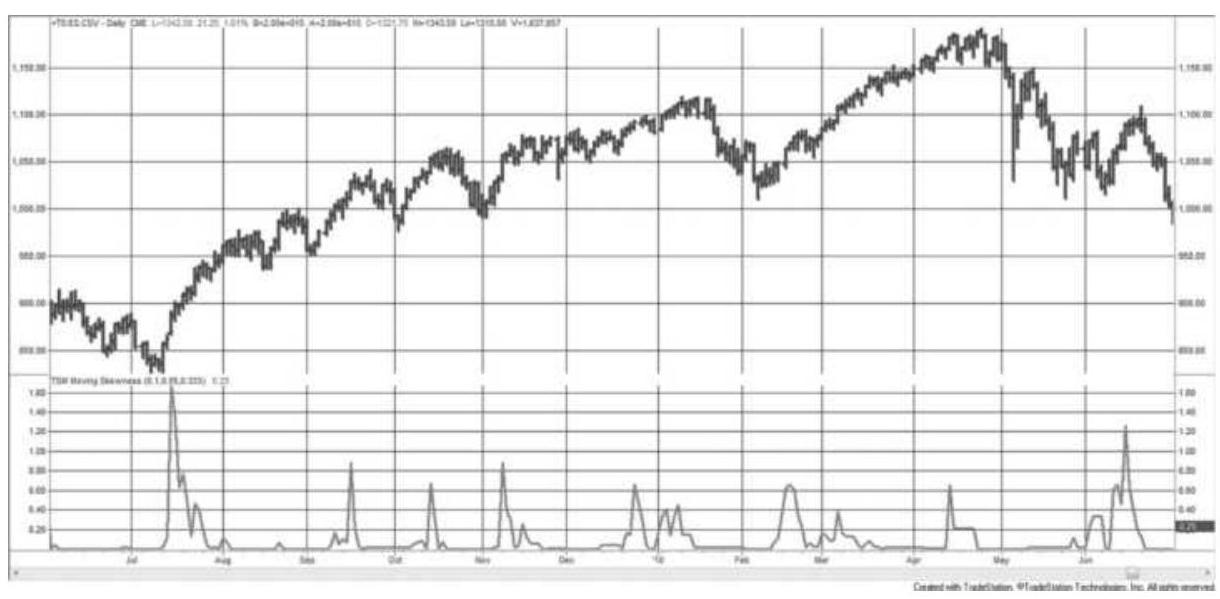

FIGURE 18.12 Moving skewness, emini S\&P futures, June 2009-June 2010.

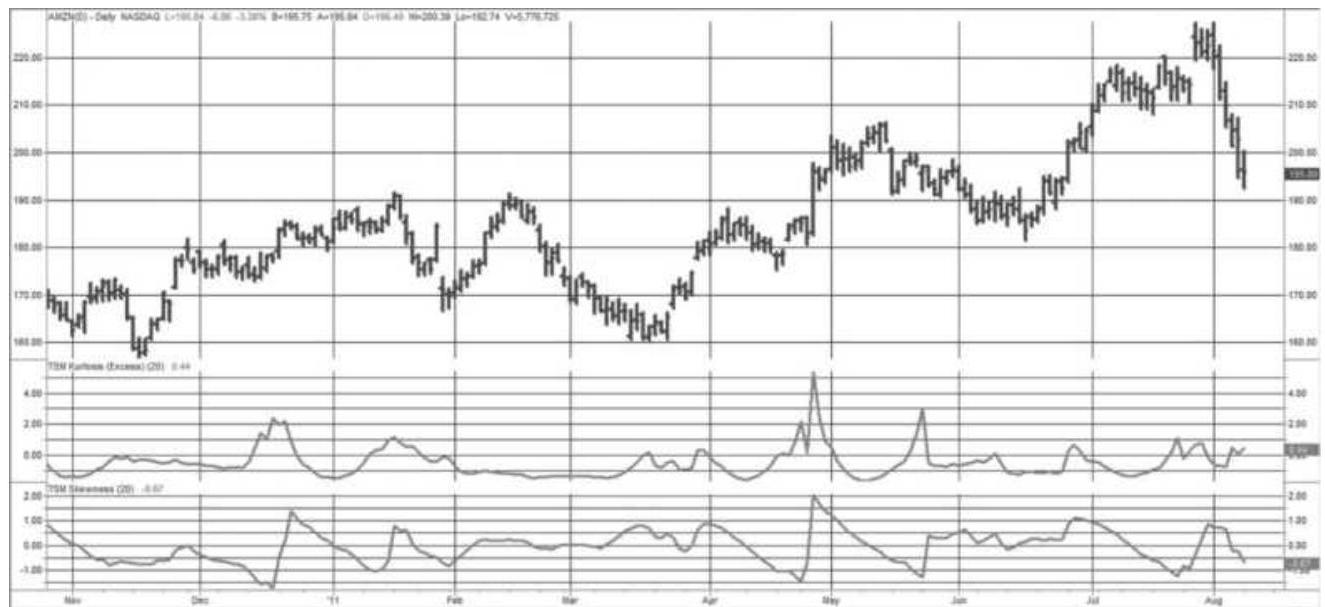

FIGURE 18.13 Excess kurtosis (center panel)

and skew (bottom panel), applied...

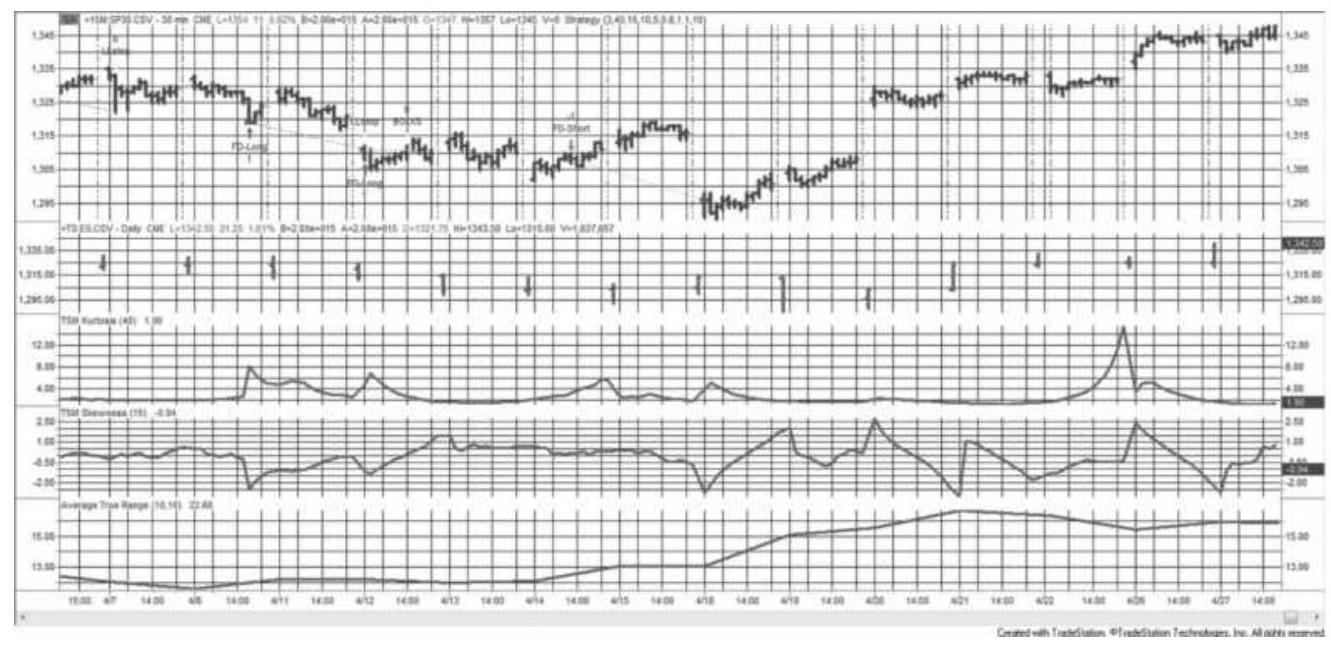

FIGURE 18.14 The Kurtosis-Skew strategy

applied to emini S\&P, 30-min data (t... FIGURE 18.15 Forecast of highest and lowest corn prices expected.

FIGURE 18.16 Stochastic indicator with and without Ehlers' roofing filter.

FIGURE 18.17 Cash and futures corn prices show futures in a long-term downtr...

FIGURE 18.18 Annualized volatility of cash corn prices.

FIGURE 18.19 Original Market Profile for 30year Treasury bonds.

\section*{FIGURE 18.20 Daily Market Profile.}

FIGURE 18.21 Buyer's and seller's curves for a sequence of days.

FIGURE 18.22 Market Profile for a trending market.

FIGURE 18.23 Monkey bars shown on the

Thinkorswim trading platform on the to...

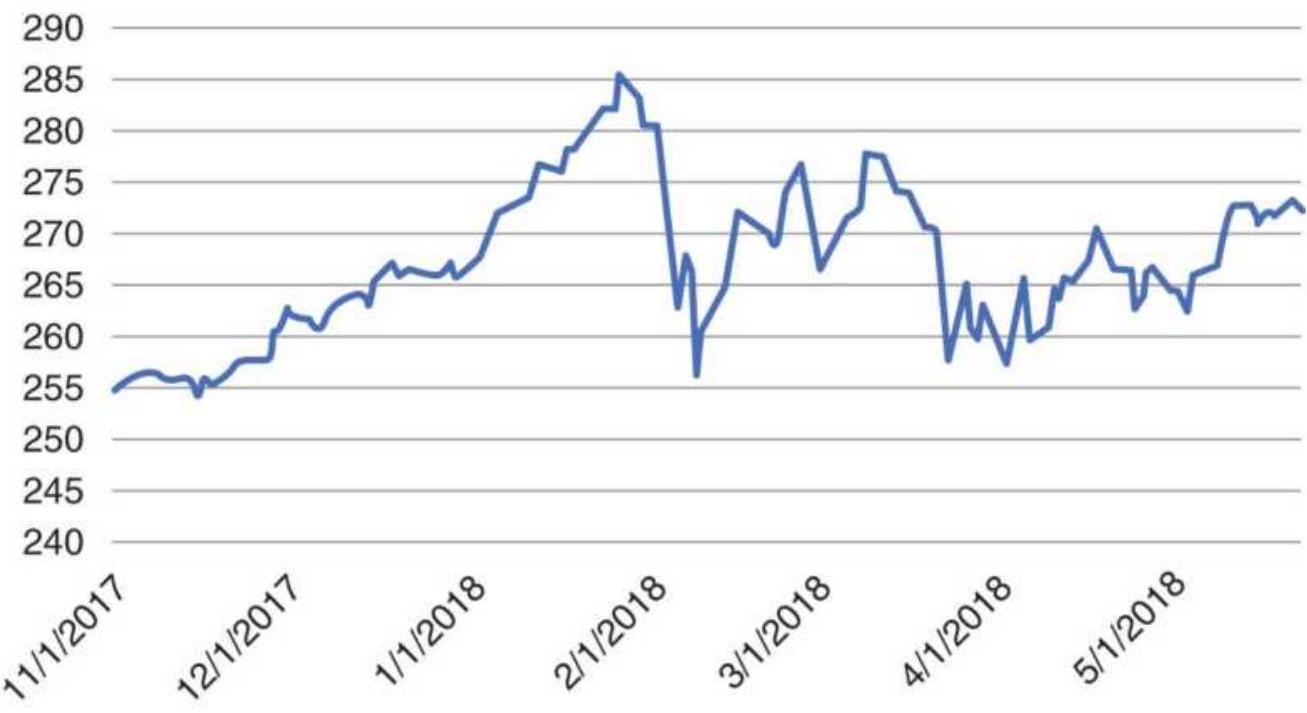

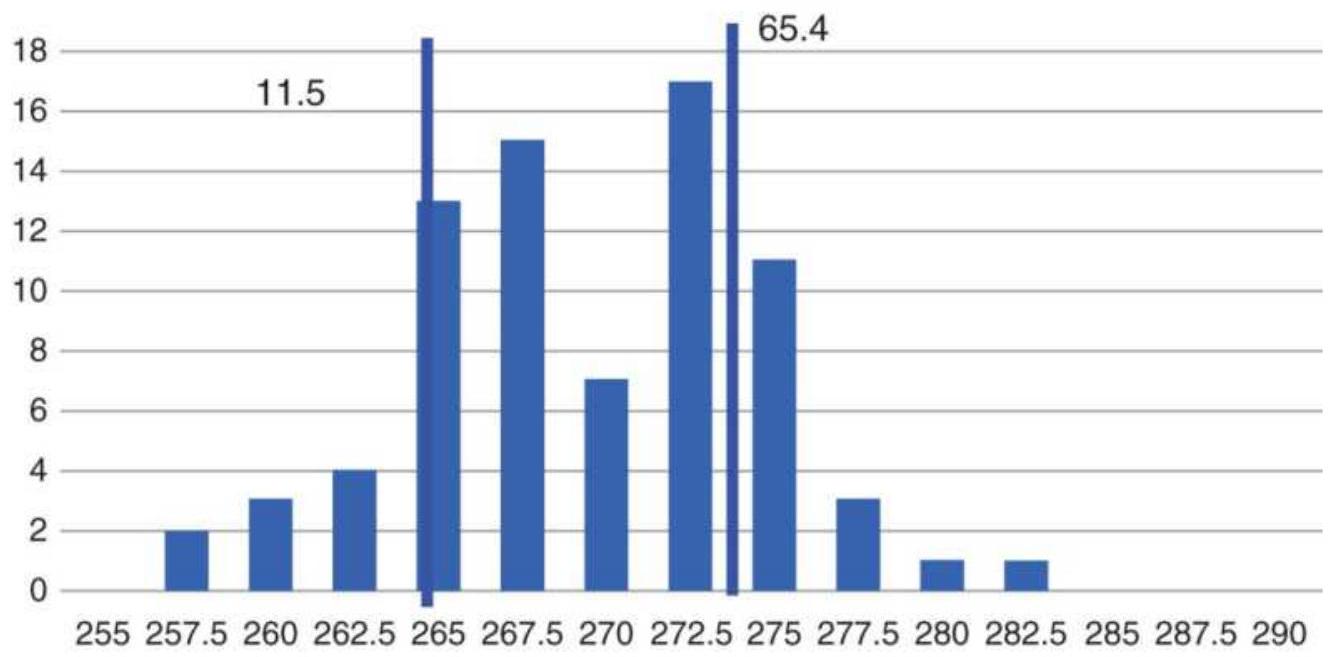

FIGURE 18.24 SPY prices, January 1-April 22, 2018.

FIGURE 18.25 Histogram of SPY prices,

\(1 / 1 / 2018-5 / 20 / 2018\)

Chapter 19

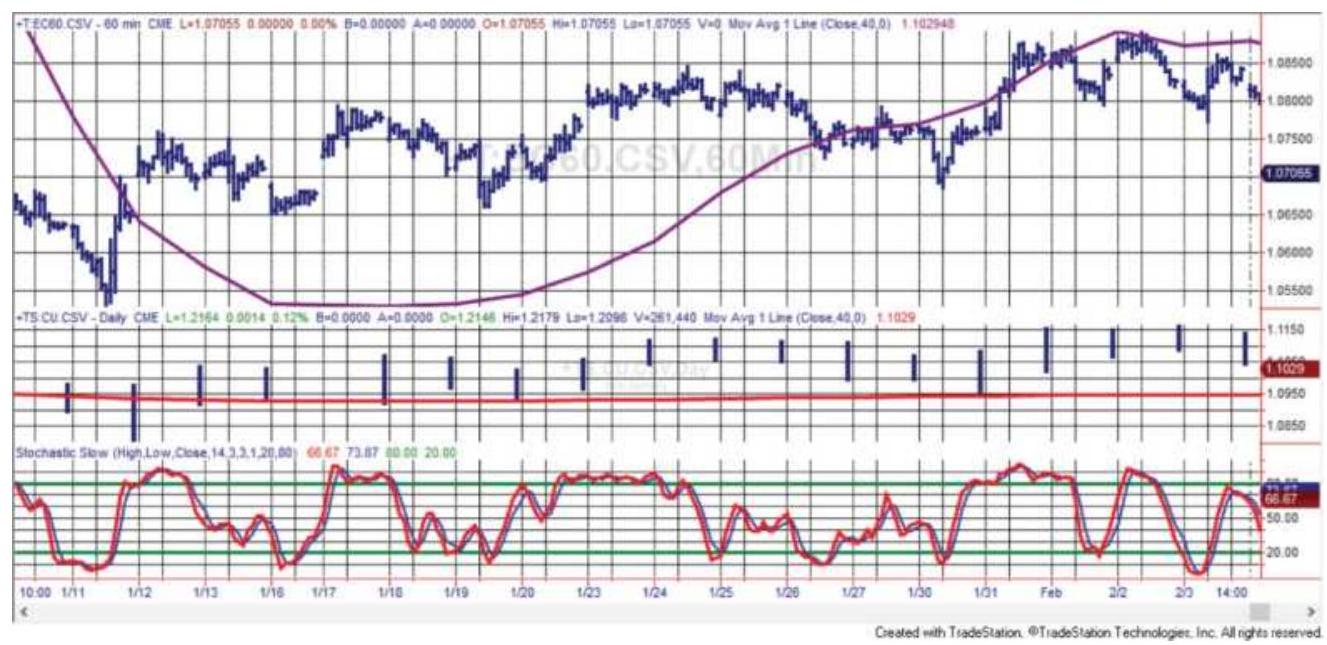

FIGURE 19.1 Euro futures 60-min data on top with 40-day trend; daily bars in...

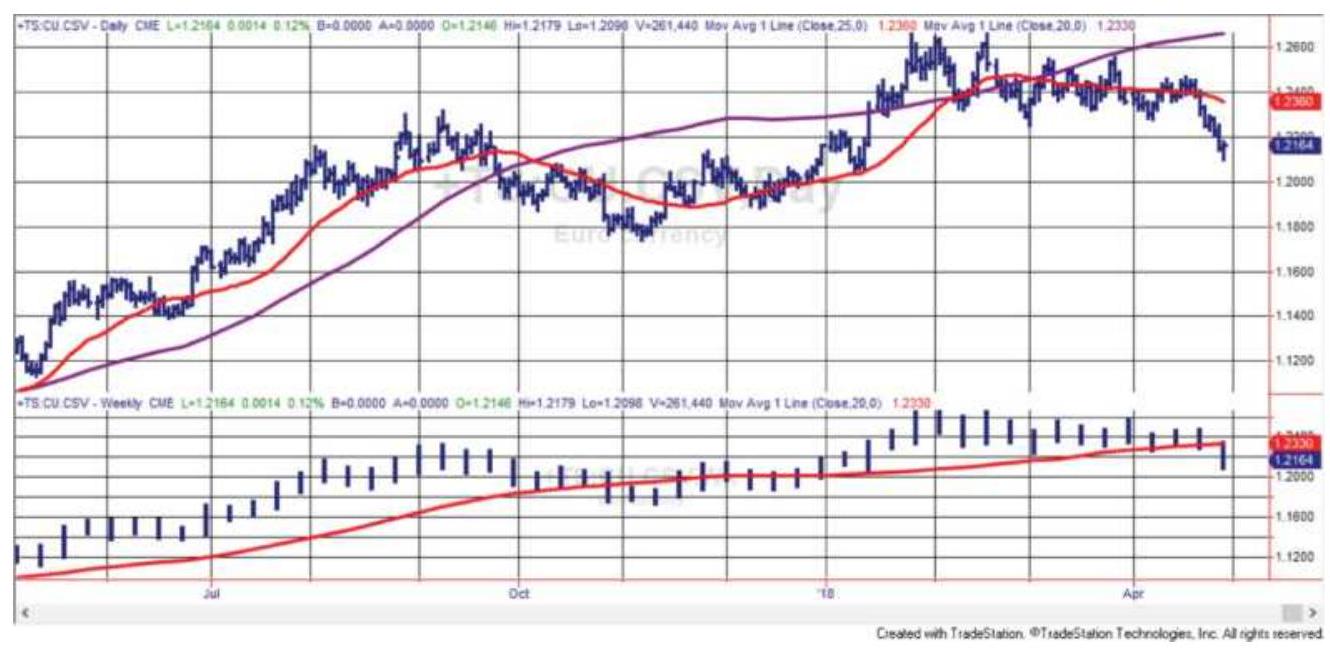

FIGURE 19.2 Daily and weekly data and trends

for euro futures are displayed ...

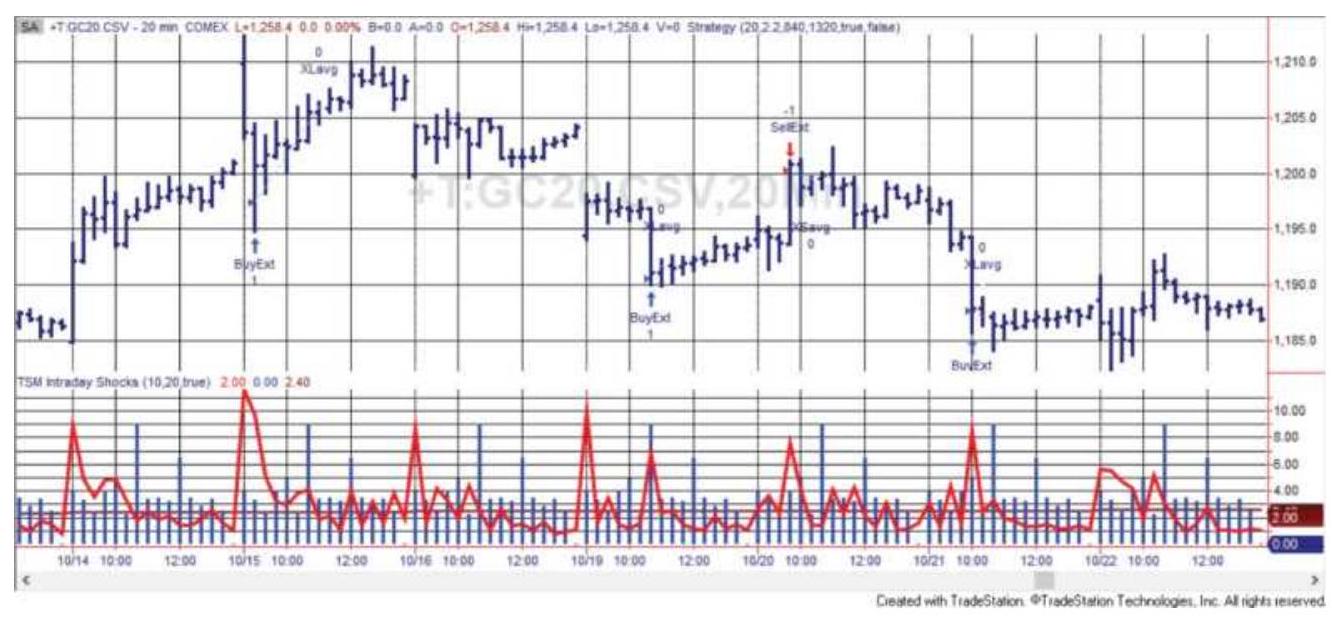

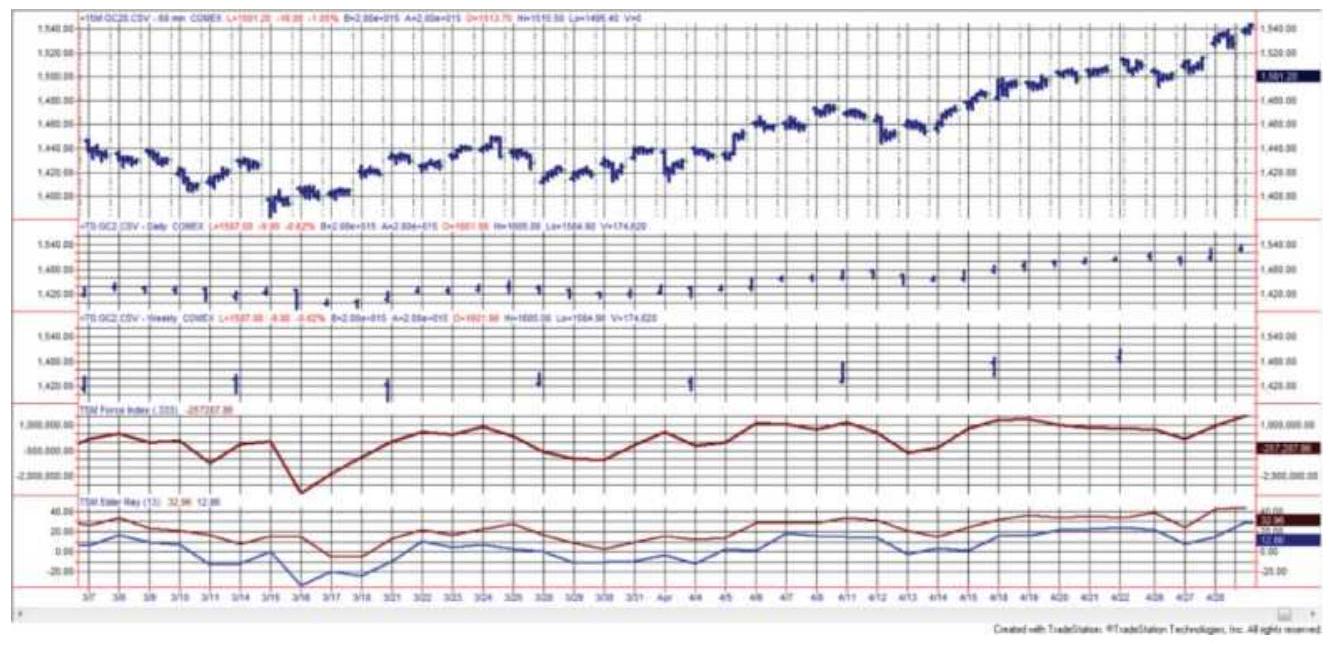

FIGURE 19.3 The Triple Screen for gold futures, March and April 2011. From t...

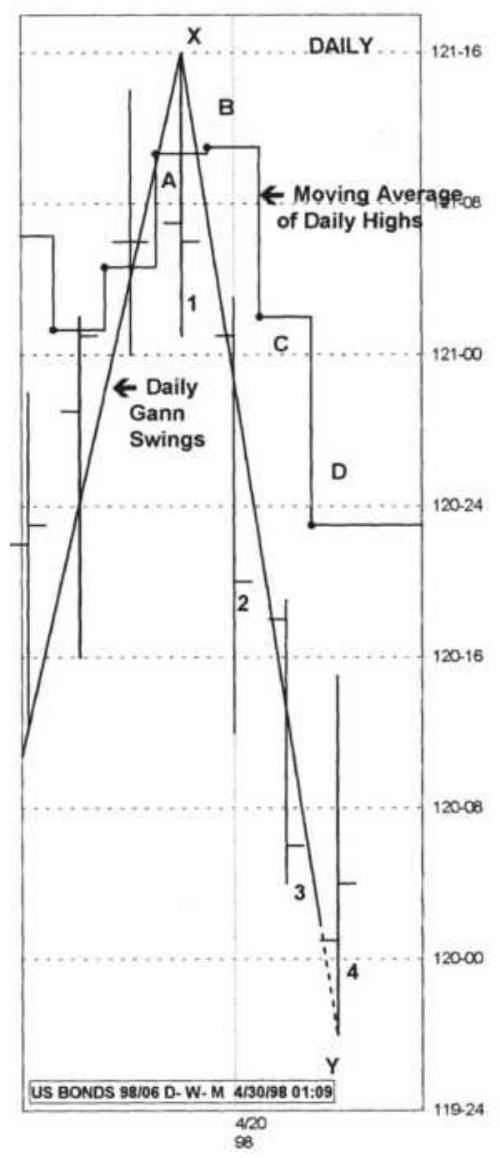

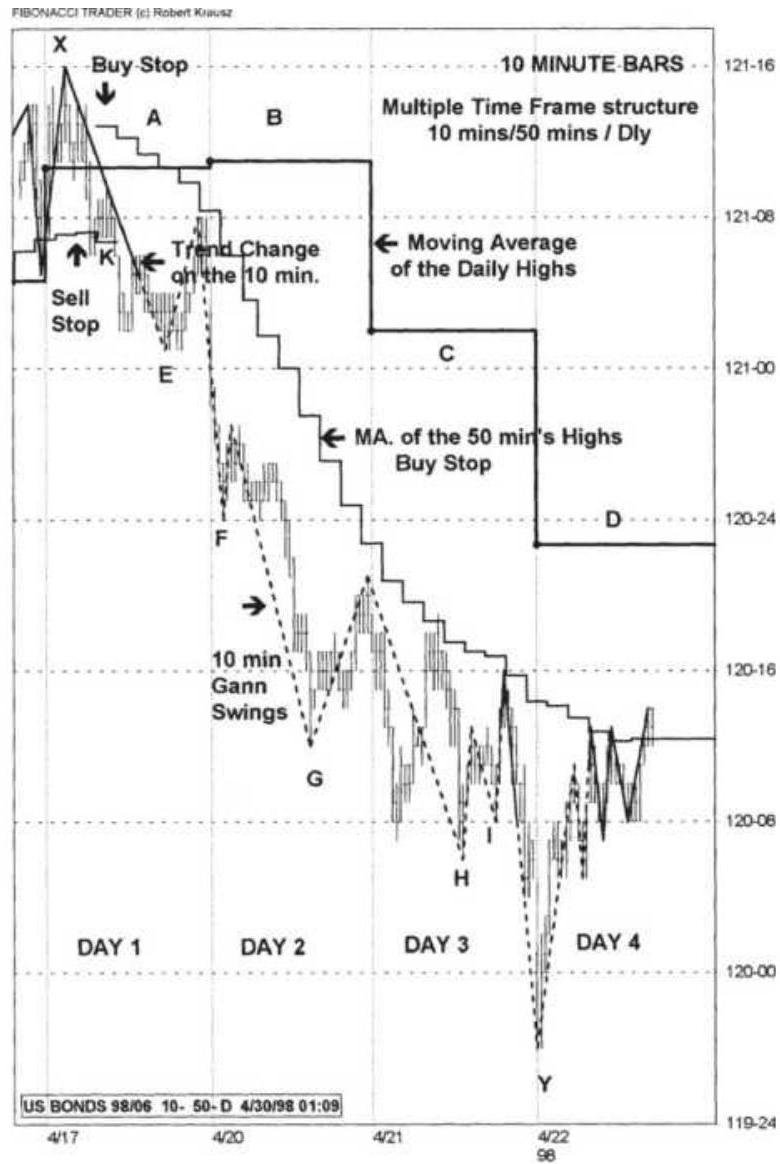

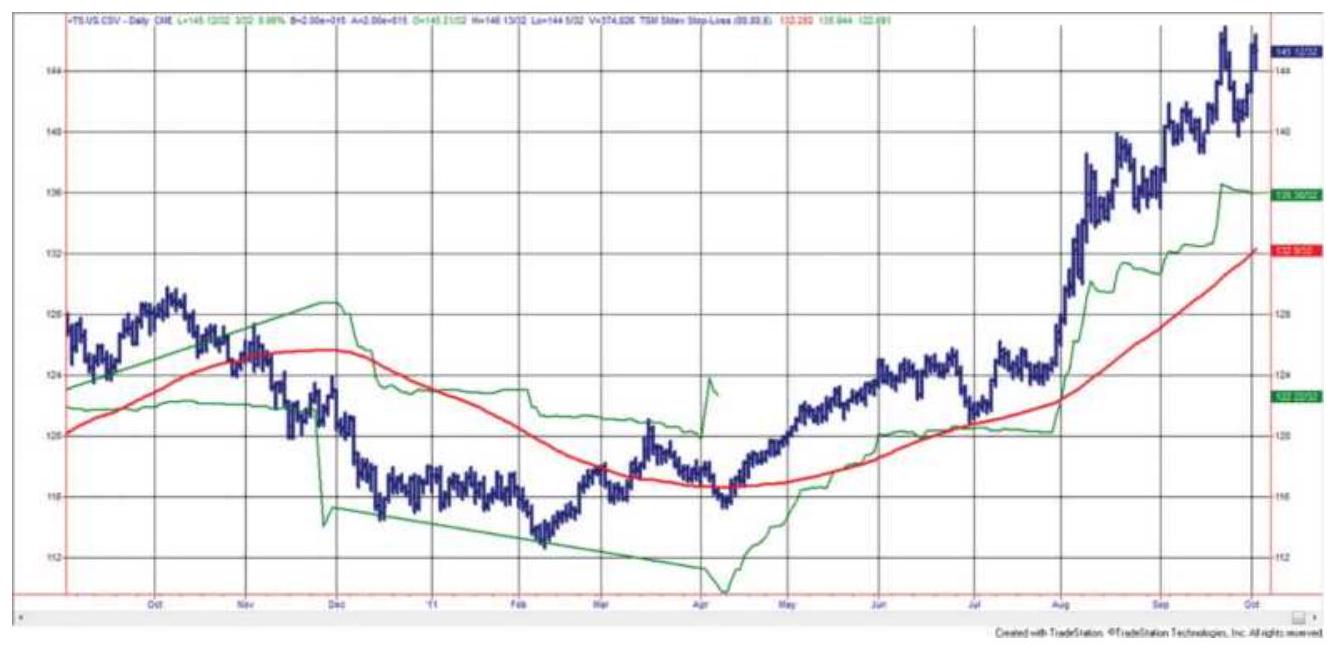

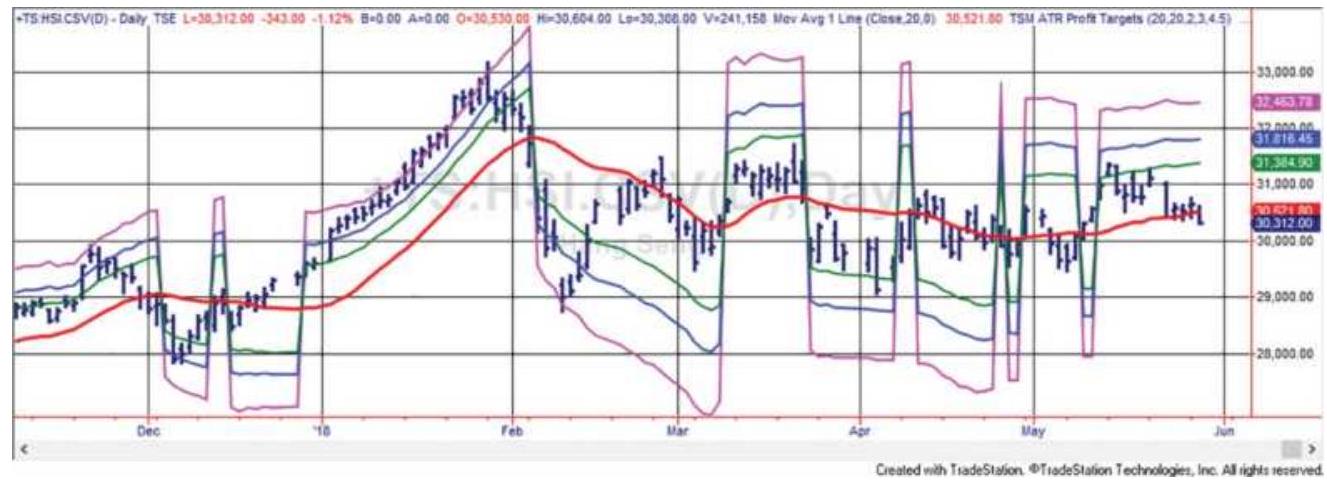

FIGURE 19.4 Krausz's Multiple Time Frames, June 1998, U.S. Bonds. (a) Daily ...

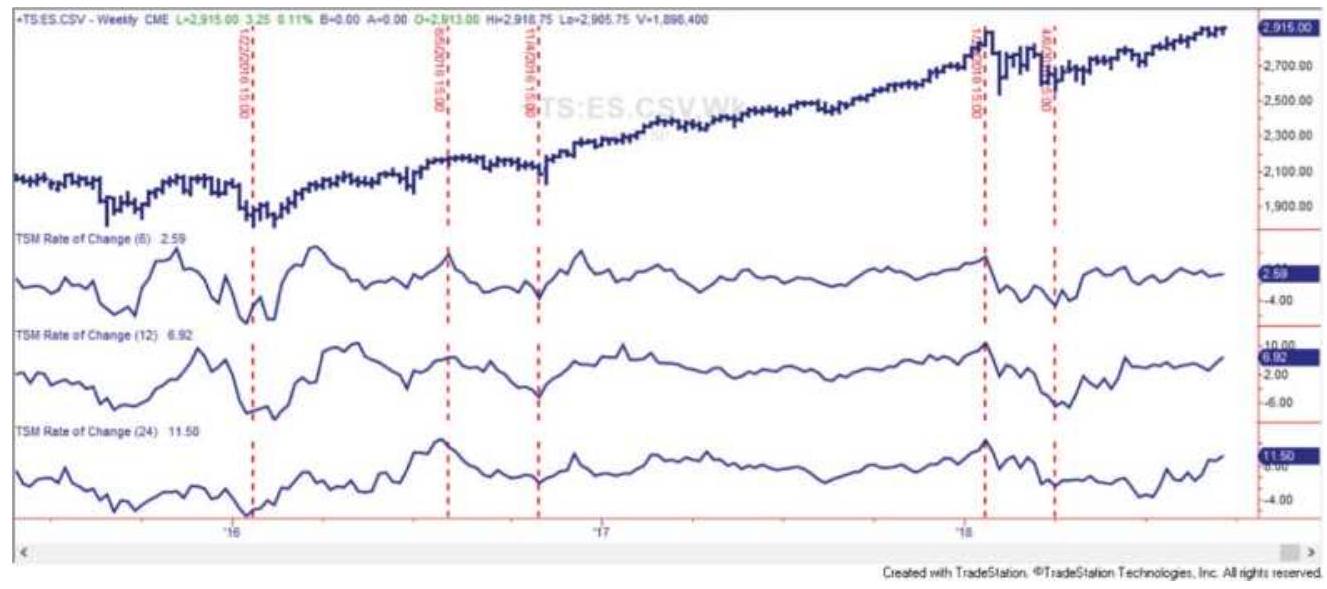

FIGURE 19.5 S\&P continuous futures with a 6-, 12 -, and 24 -week ROC (top to b...

FIGURE 19.6 KST combined with an 18-period ROC and a 12-period trendline can...

Chapter 20

FIGURE 20.1 Four volatility measures.

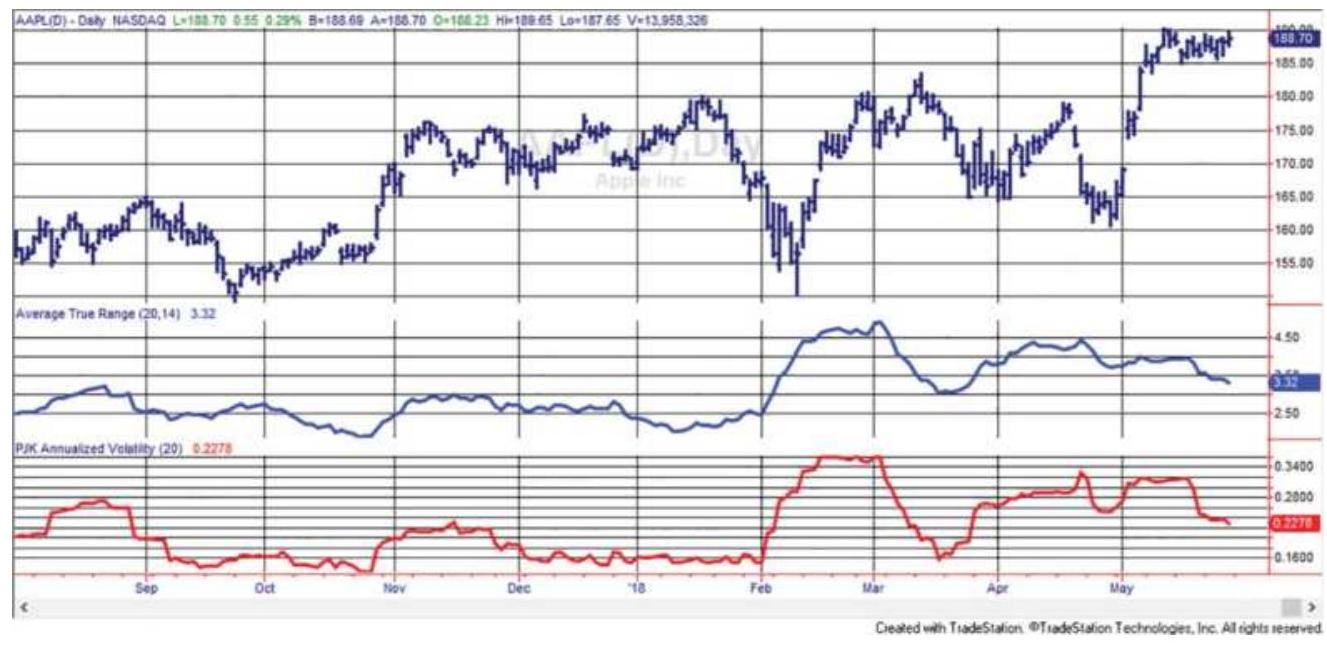

FIGURE 20.2 Apple (AAPL) with a 20-day average true range (center panel) and...

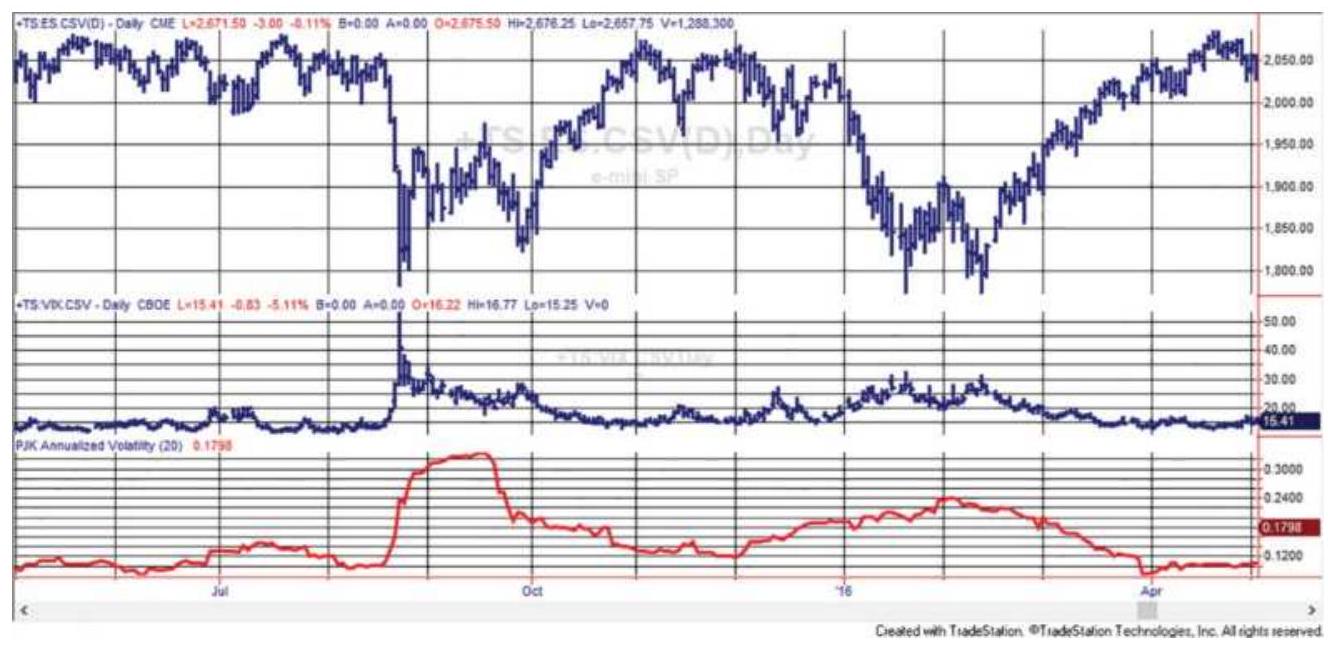

FIGURE 20.3 S\&P futures, June 2016-May 2017 (top), implied volatility (VIX) ...

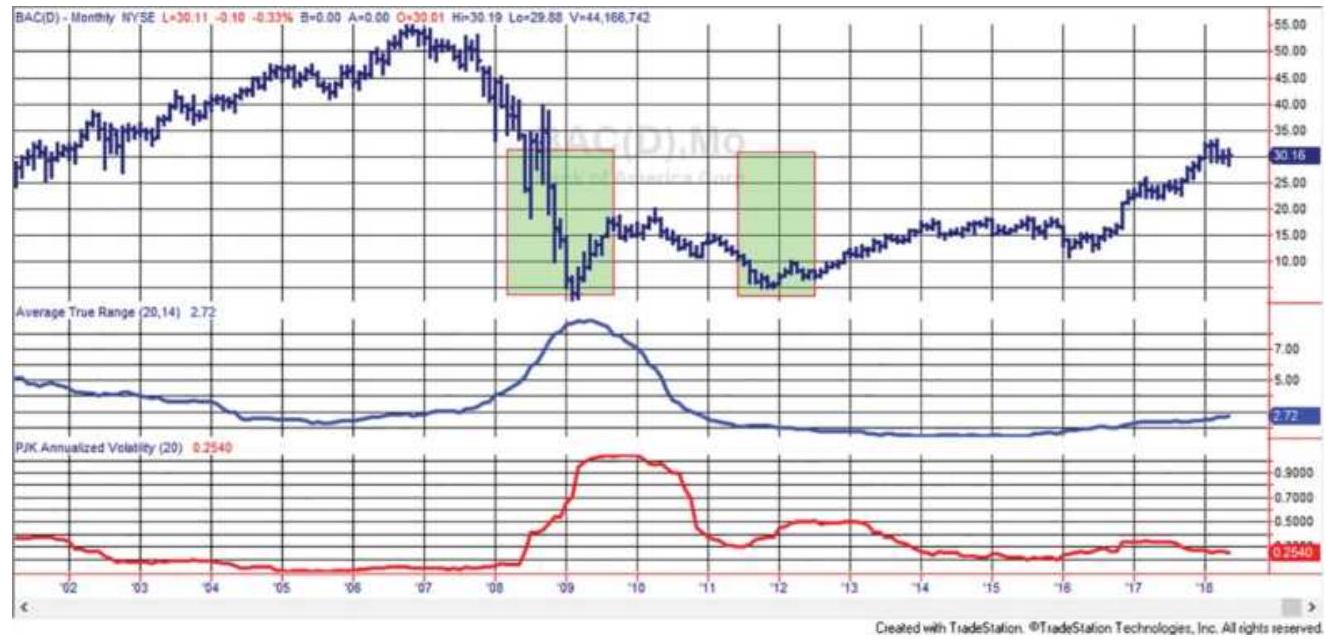

FIGURE 20.4 Bank of America with average true range (center) and annualized ...

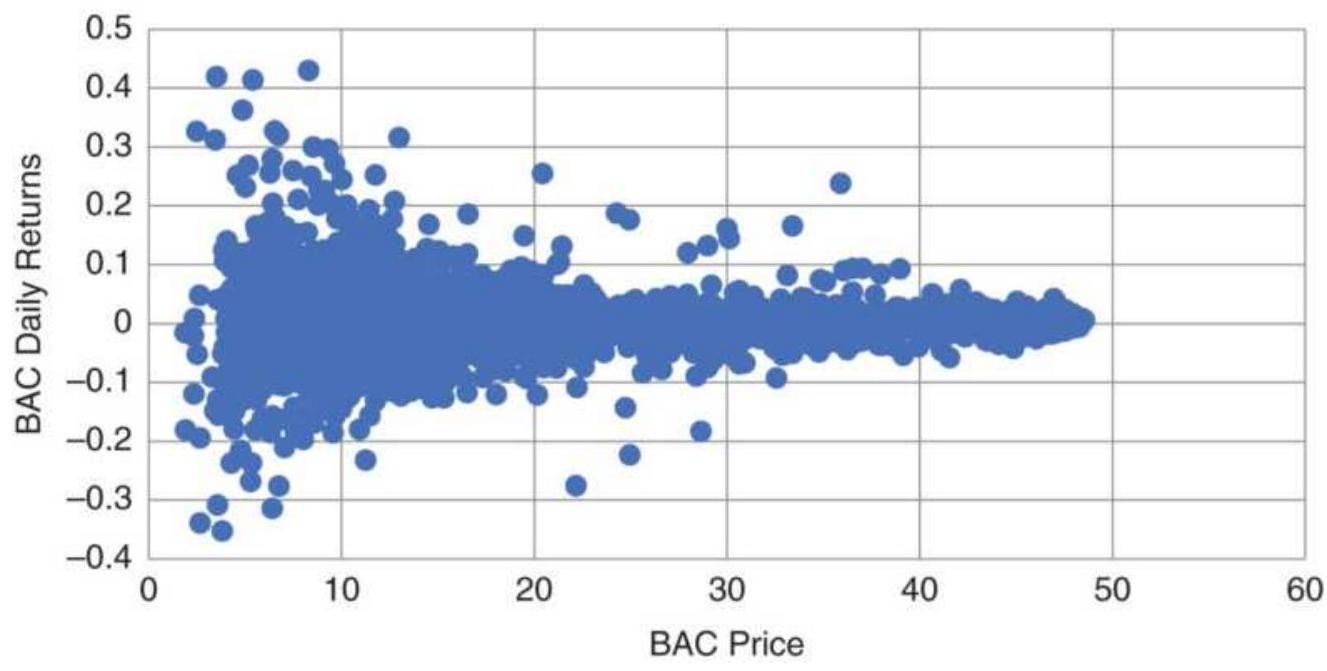

FIGURE 20.5 Bank of America price versus daily returns, 1998-May 2018.

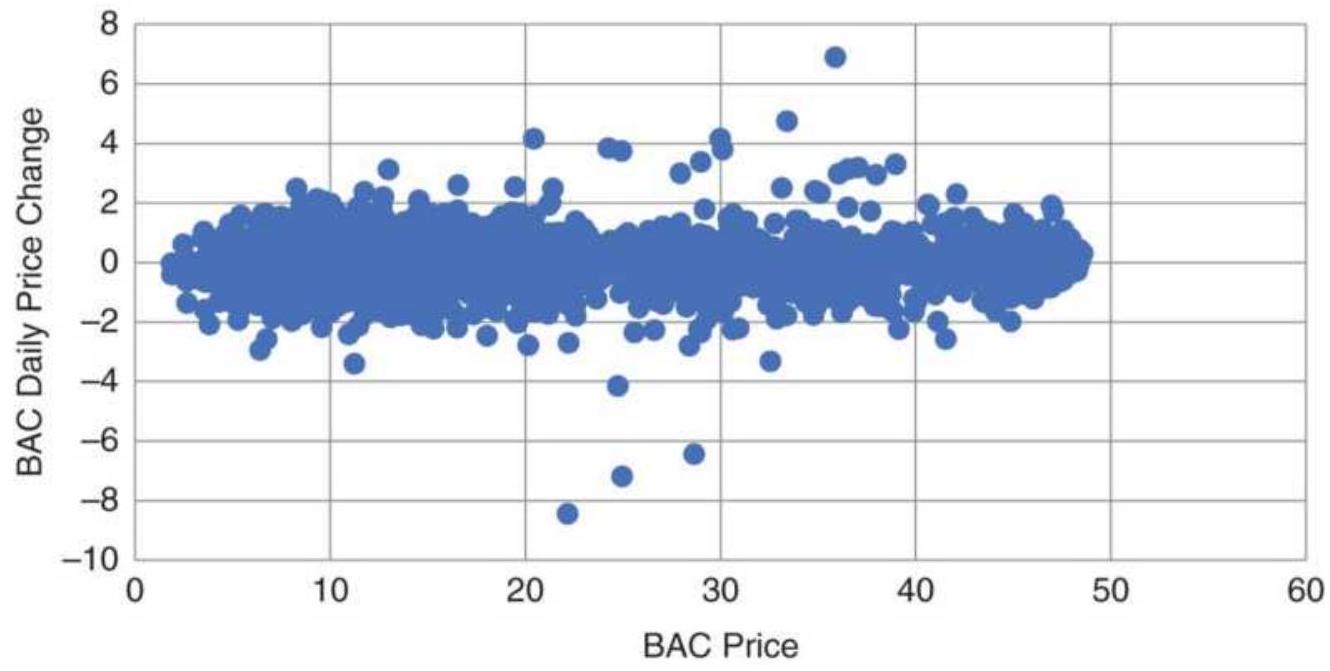

FIGURE 20.6 BAC price versus daily price differences, 1998-May 2018.

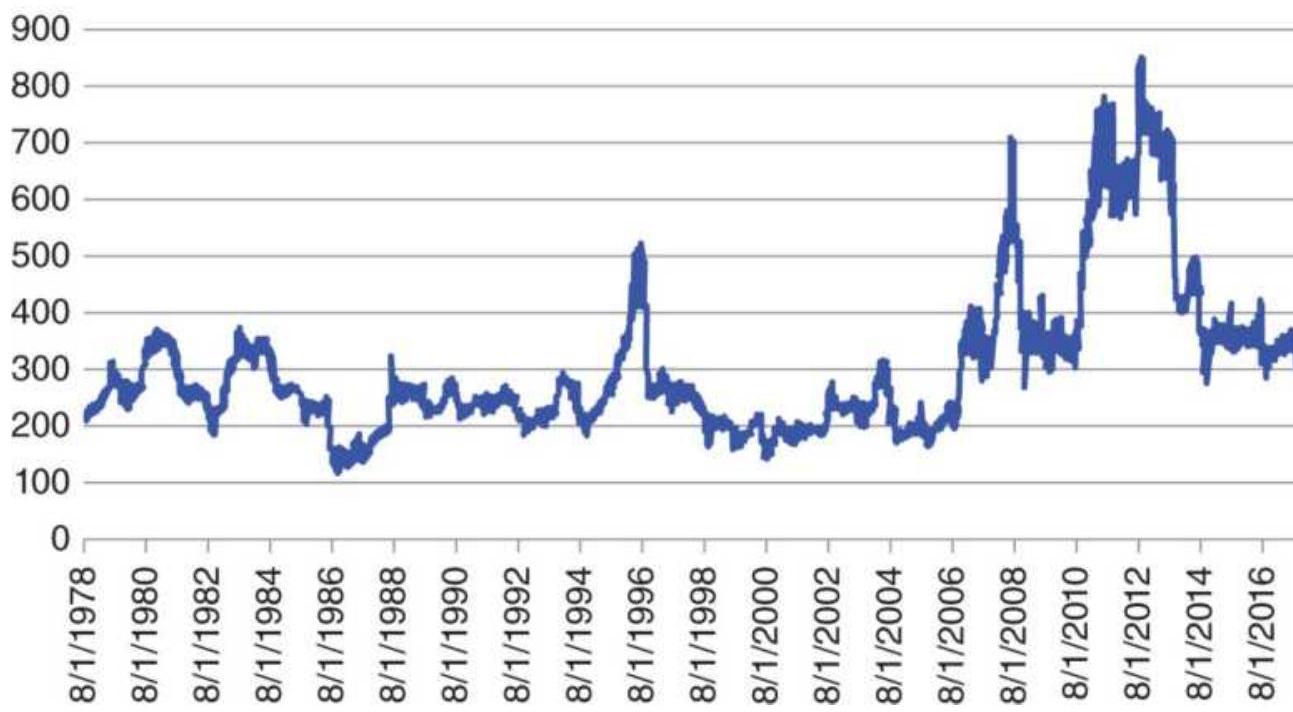

FIGURE 20.7 Cash corn prices, 1978-May 2018.

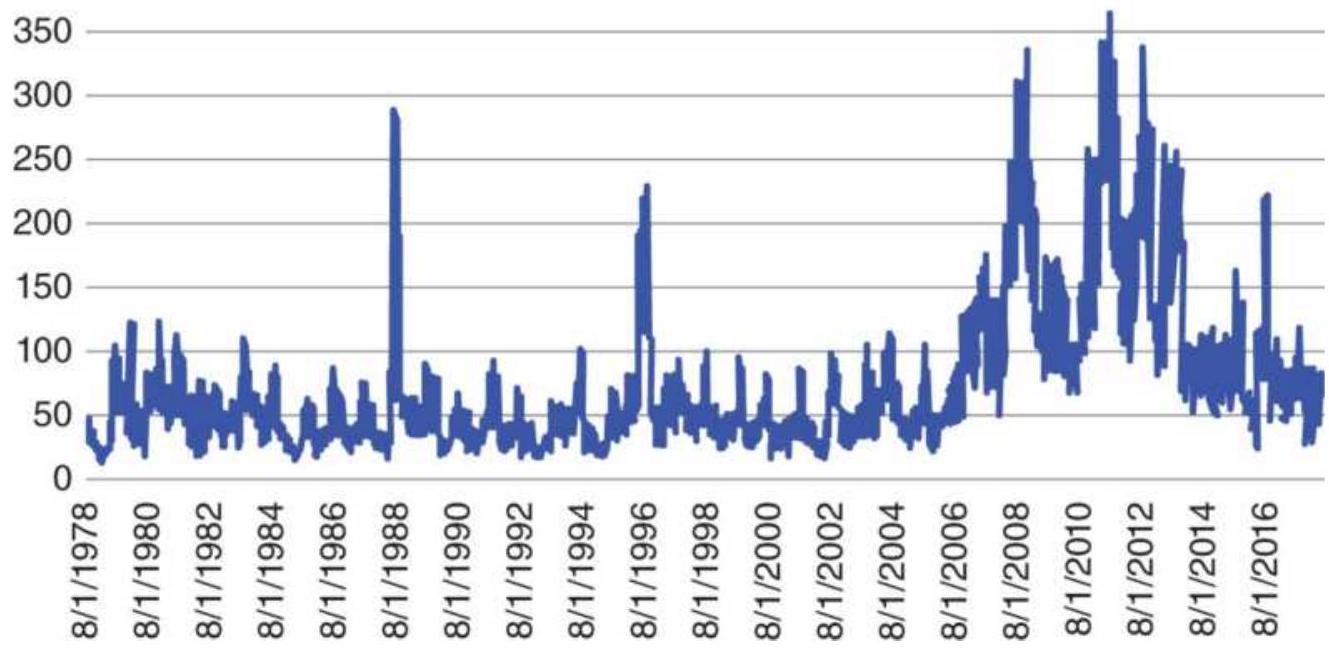

FIGURE 20.8 Annualized volatility of cash corn.

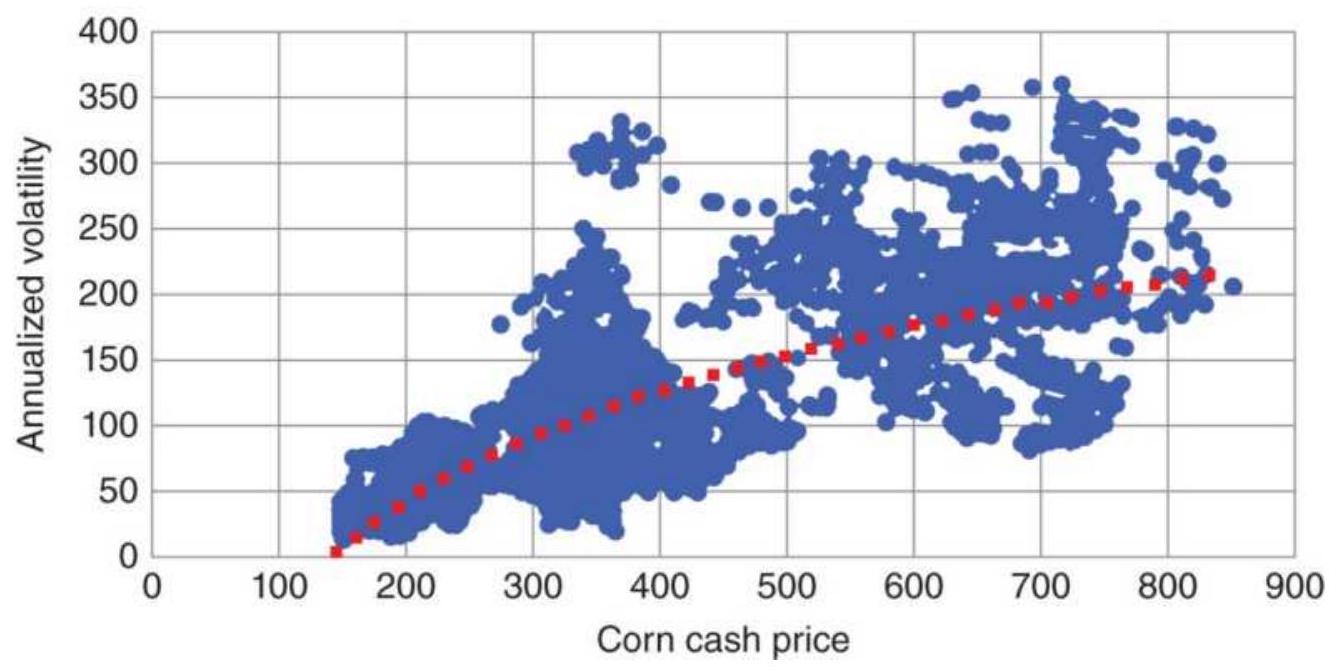

FIGURE 20.9 A scatter diagram of corn

volatility versus price, with a log cu...

FIGURE 20.10 VIX mean-reverting strategy from MarketSci Blog, triggered by t...

FIGURE 20.11 Profits from VIX Mean-

Reverting Strategy, 1998-2011.

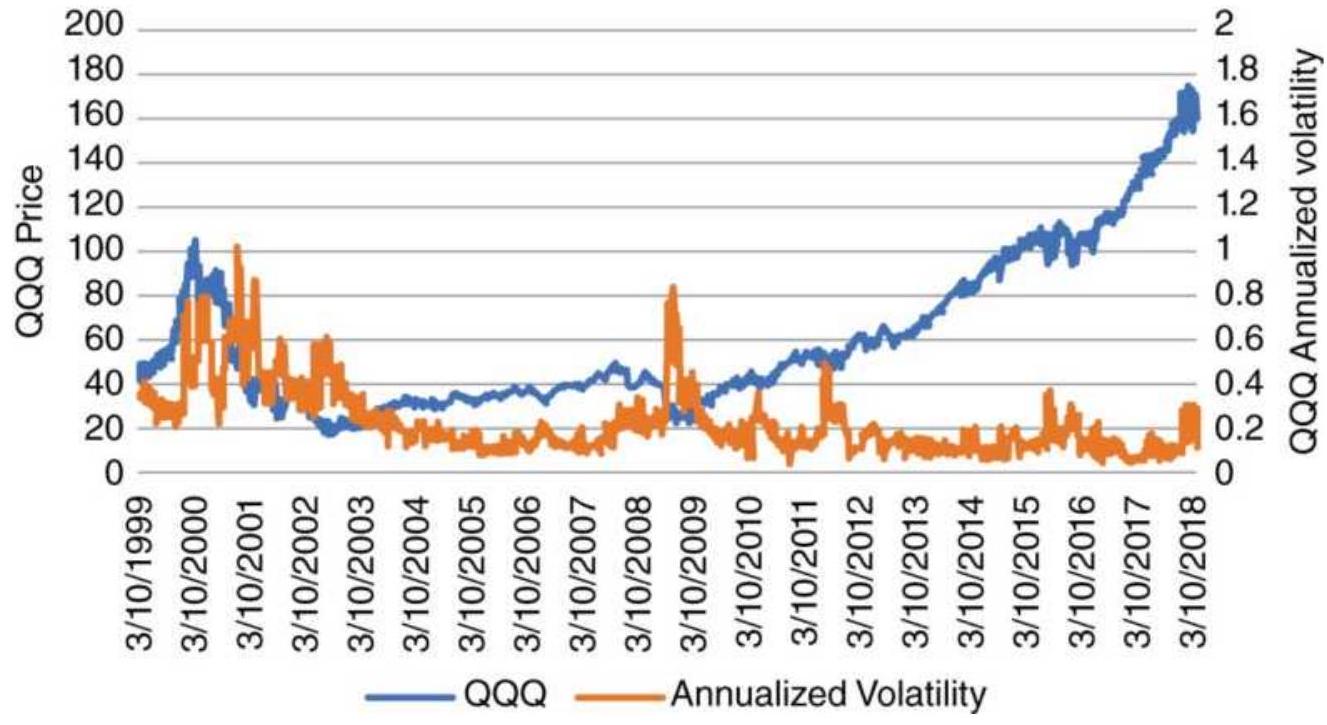

FIGURE 20.12 QQQ prices and annualized volatility, 1999-May 2018.

FIGURE 20.13 Profits from trading QQQ with a 6o-day moving average and a vol...

FIGURE 20.14 Daily price/volume distribution shows liquidity.

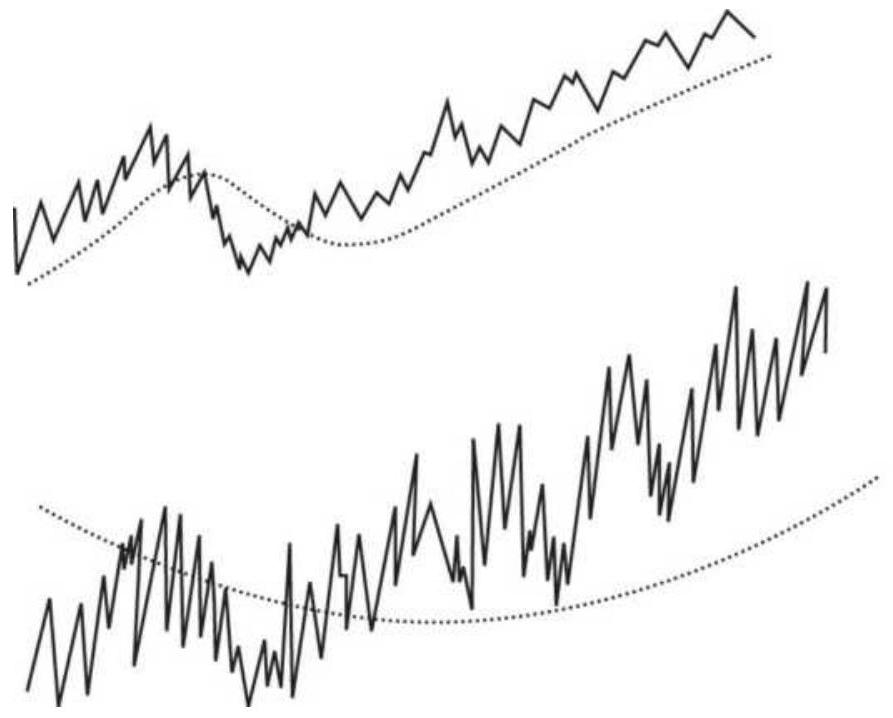

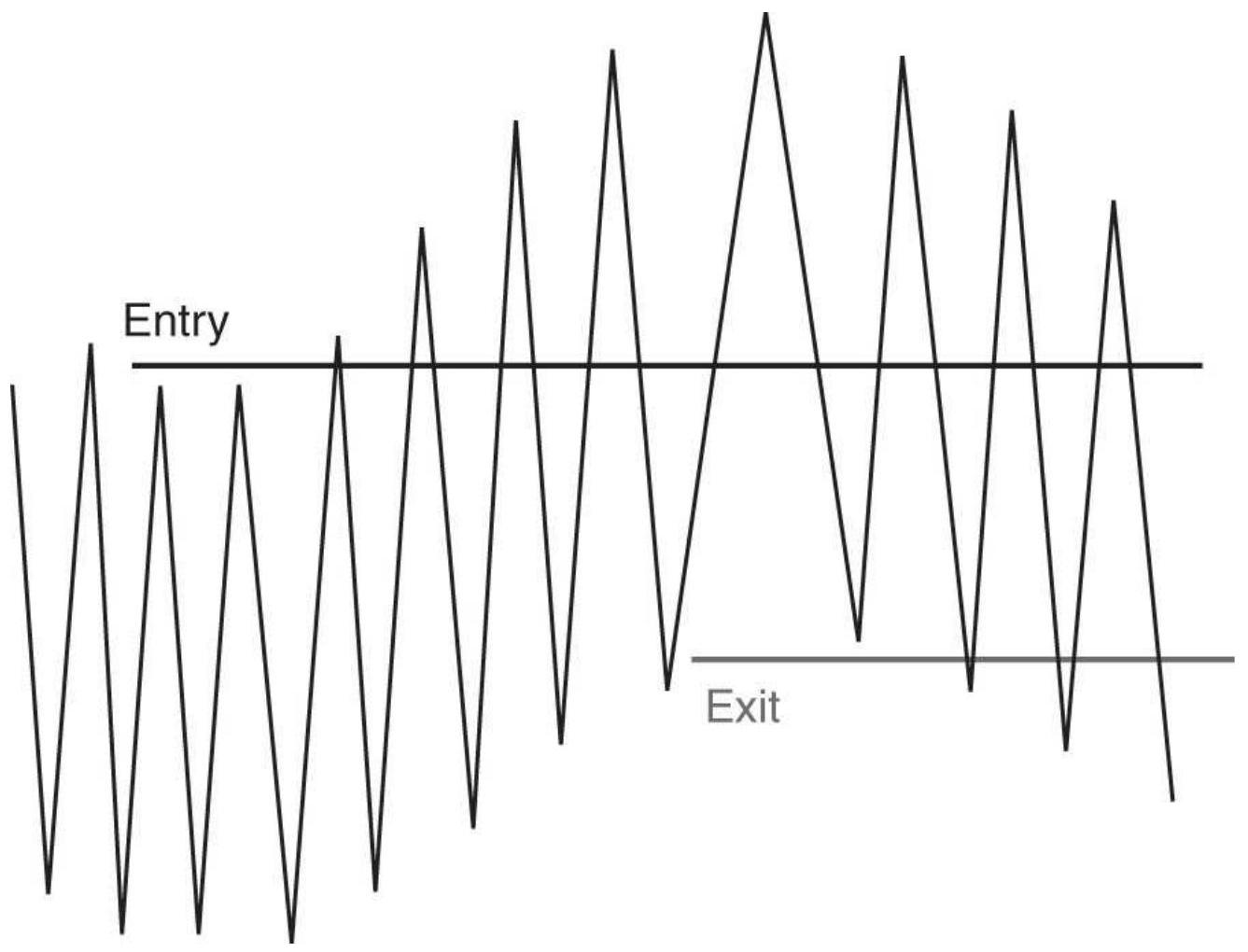

FIGURE 20.15a A low noise market allows sooner entries and exits resulting i...

FIGURE 20.15b Increased noise causes entries and exits to be delayed, result...

FIGURE 20.15c High-noise markets, typical of an equity index in a major indu...

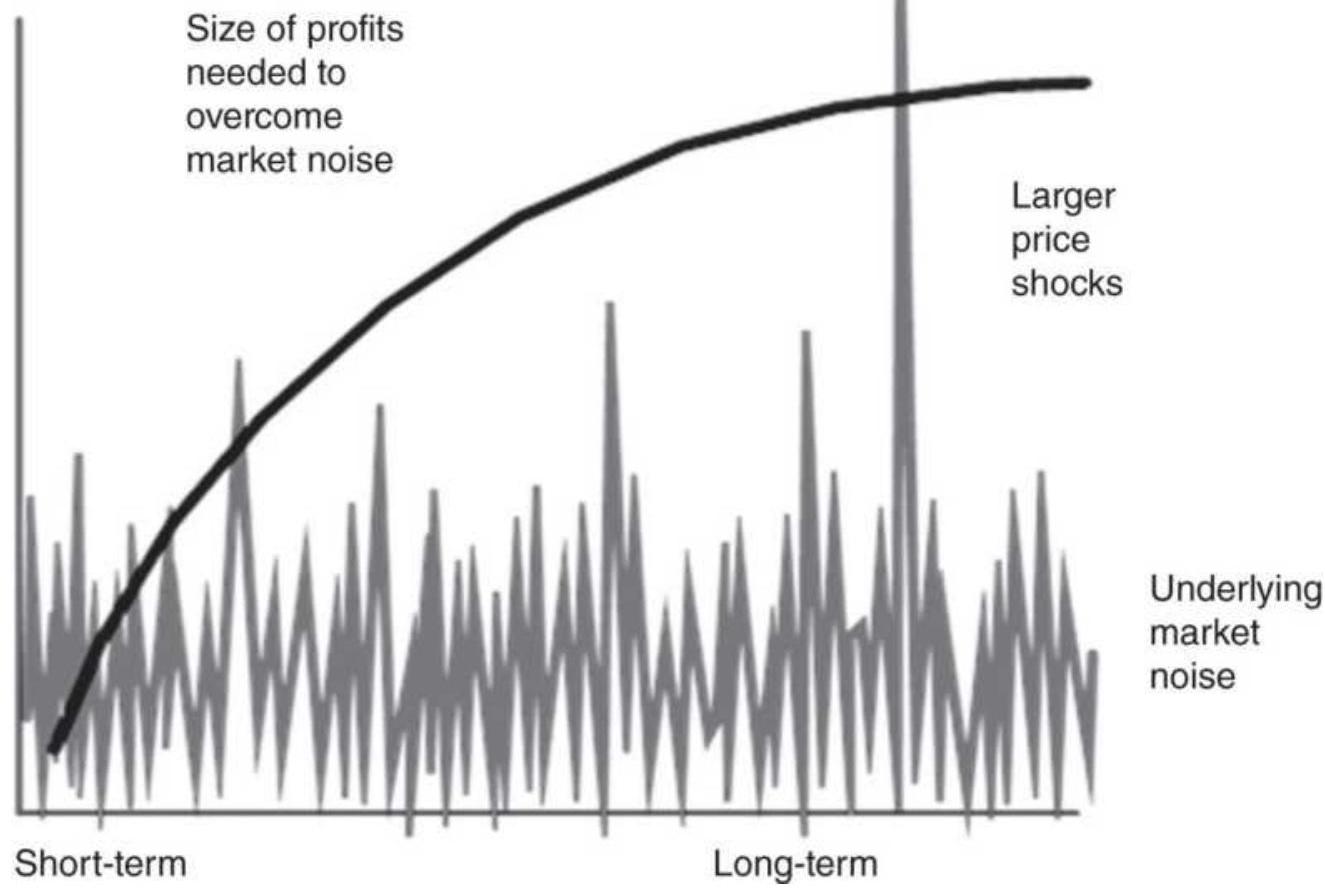

FIGURE 20.16 How the trend calculation period (the curved line) relates to m...

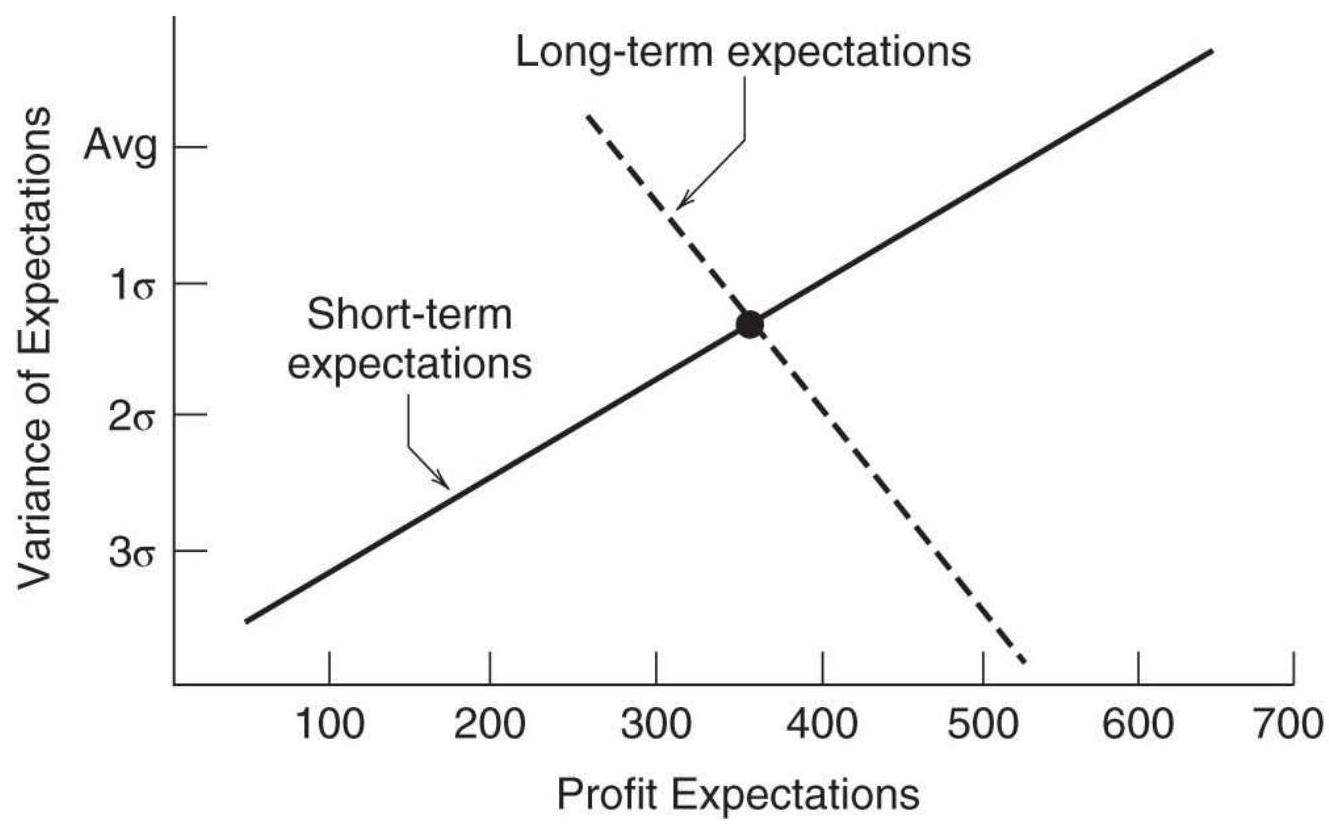

FIGURE 20.17 Expectations of a price move using two time periods.

FIGURE 20.18 Three matrices associated with trading signals for three differ...

FIGURE 20.19 Payout matrix for customer and refiner. Refiner chooses the row...

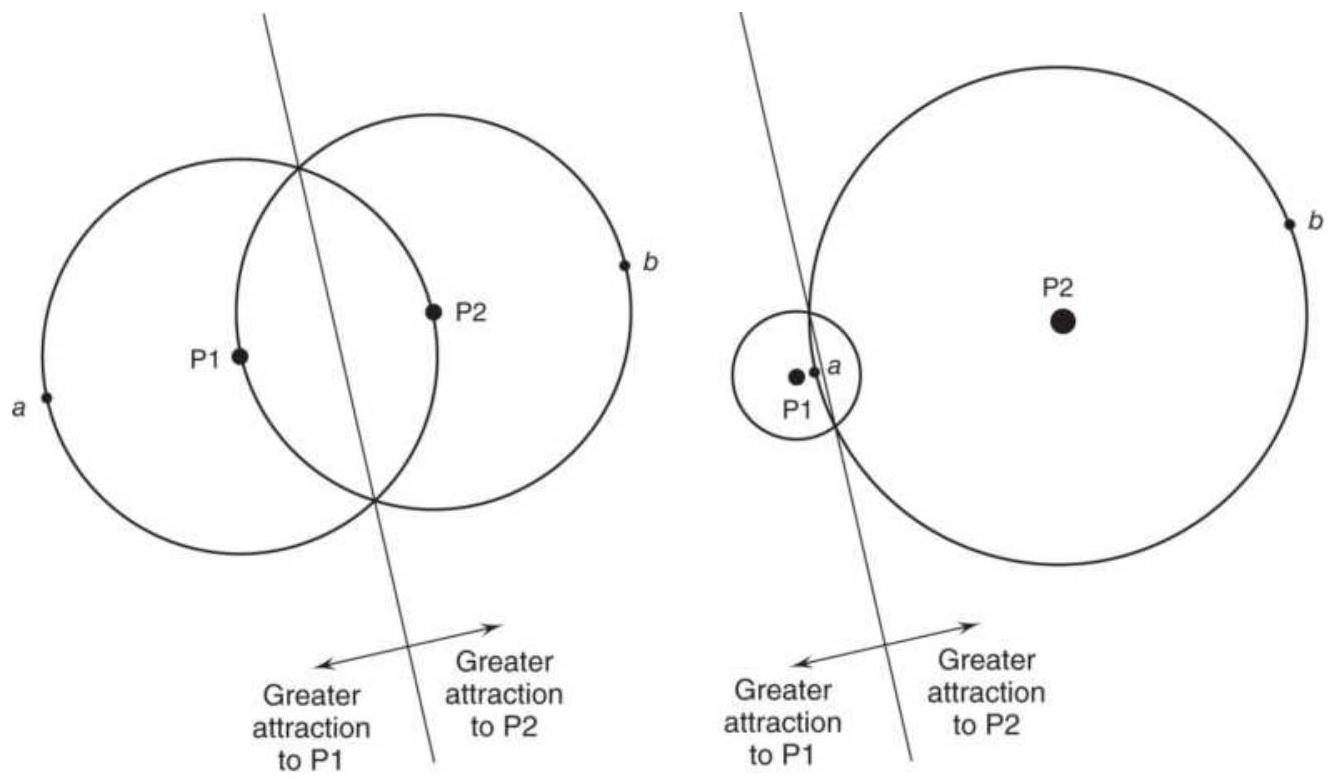

FIGURE 20.20 (a) Equal attractors cause a

symmetric pattern, often a figure ...

FIGURE 20.21 Points indicating fractal patterns as well as trading signals a...

FIGURE 20.22 D'Errico and Trombetta's genetic algorithm solution applied to ...

FIGURE 20.23 A biological neural network. Information is received through de...

FIGURE 20.24 A 3-layer artificial neural network to determine the direction ...

FIGURE 20.25 Learning by feedback.

\section*{Chapter 21}

FIGURE 21.1 Comparing heating oil backadjusted data with the nearest contra...

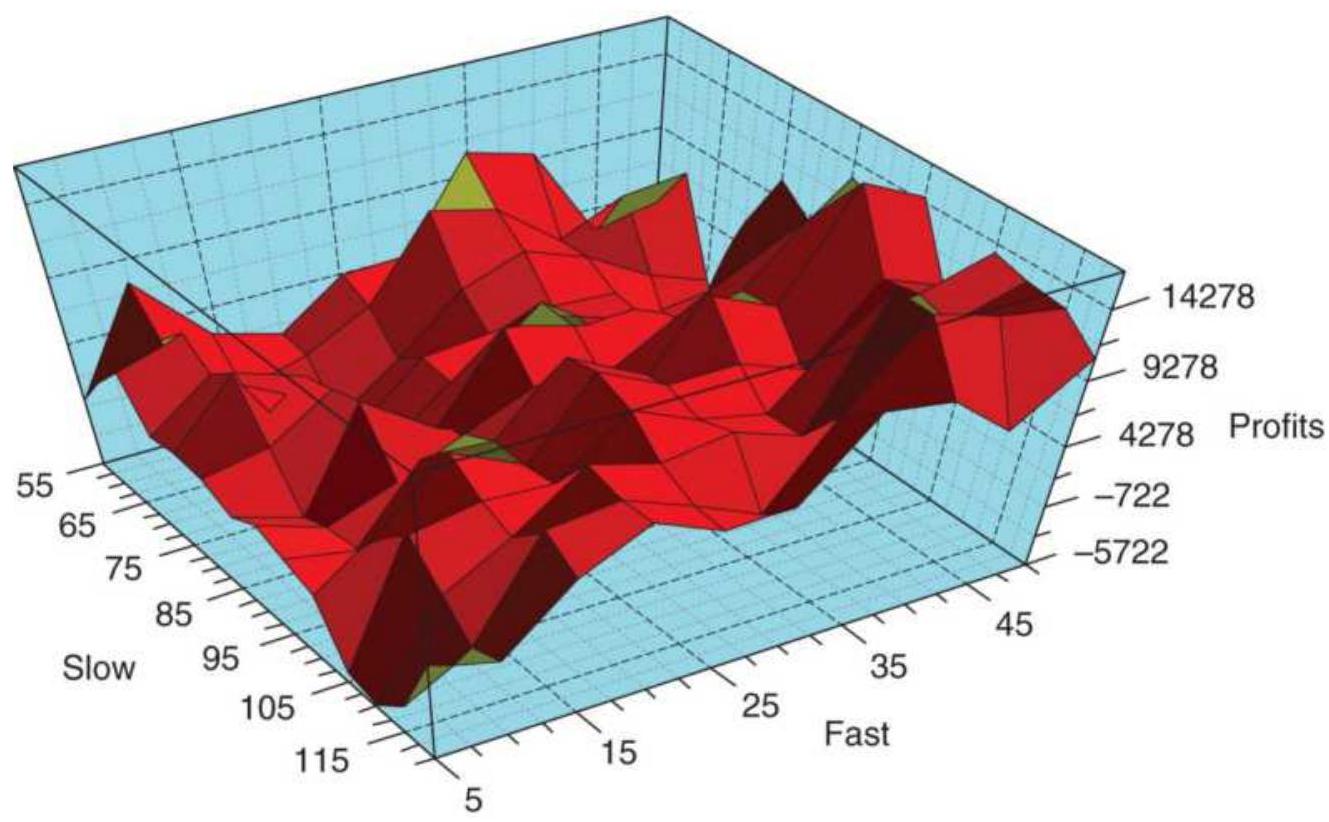

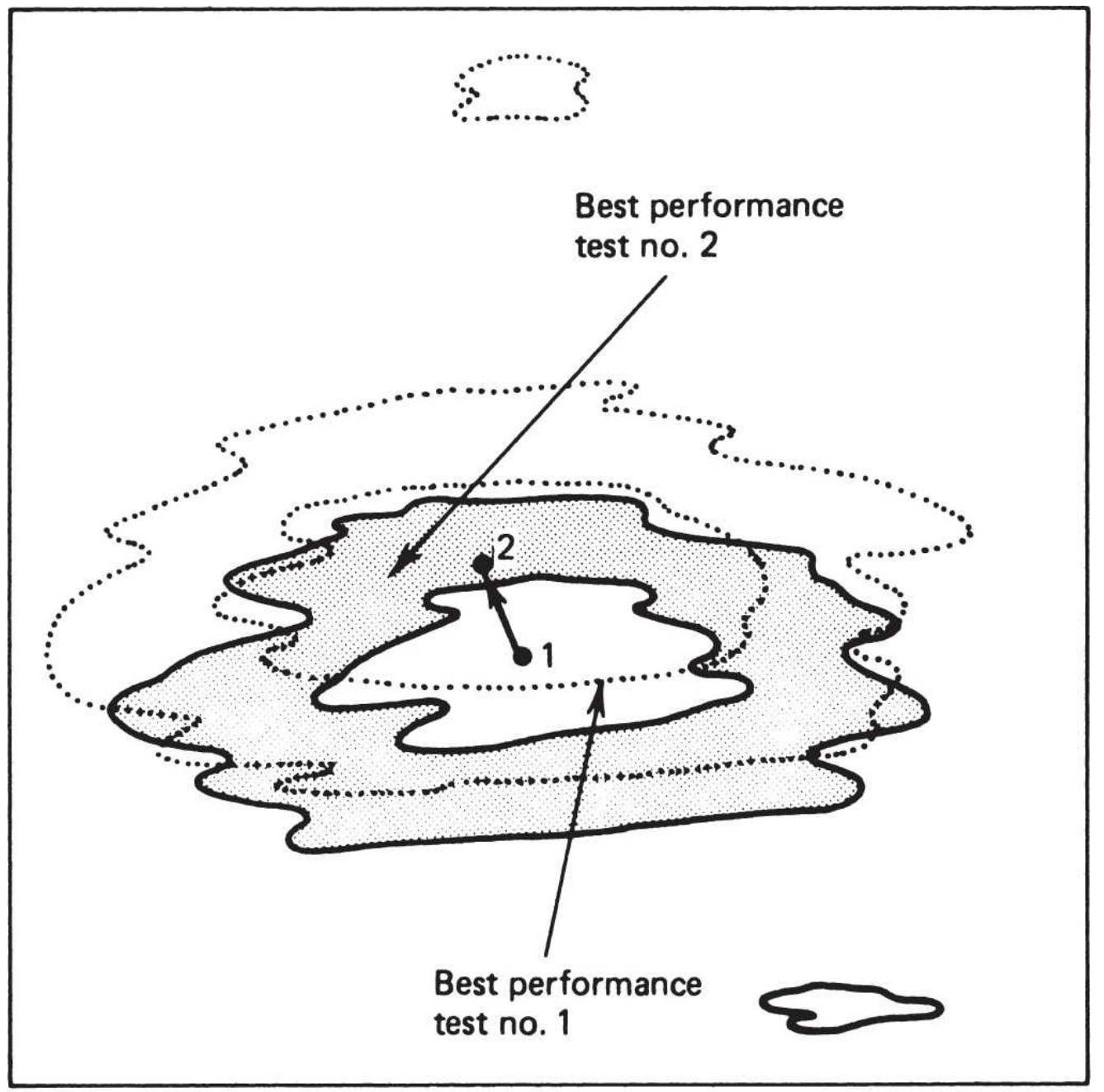

FIGURE 21.2 Visualizing the results of a 2dimensional optimization.

FIGURE 21.3 Walk-forward test results for SPY,

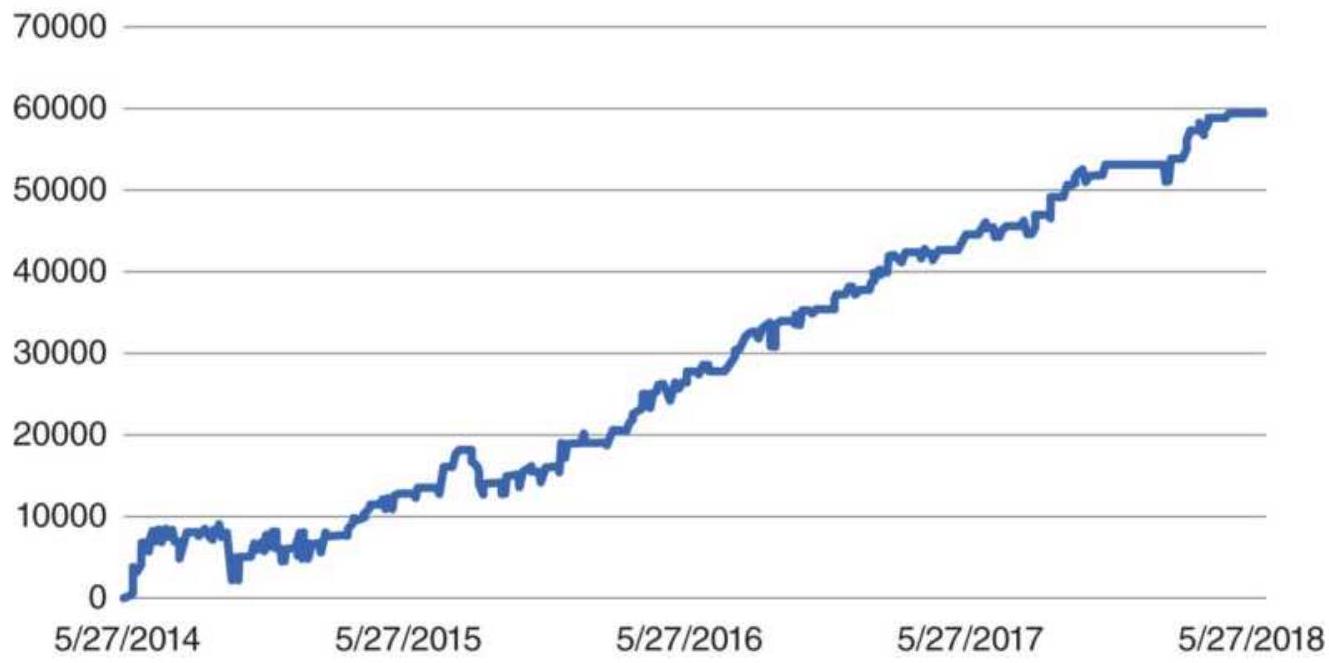

October 1998-December 2018.

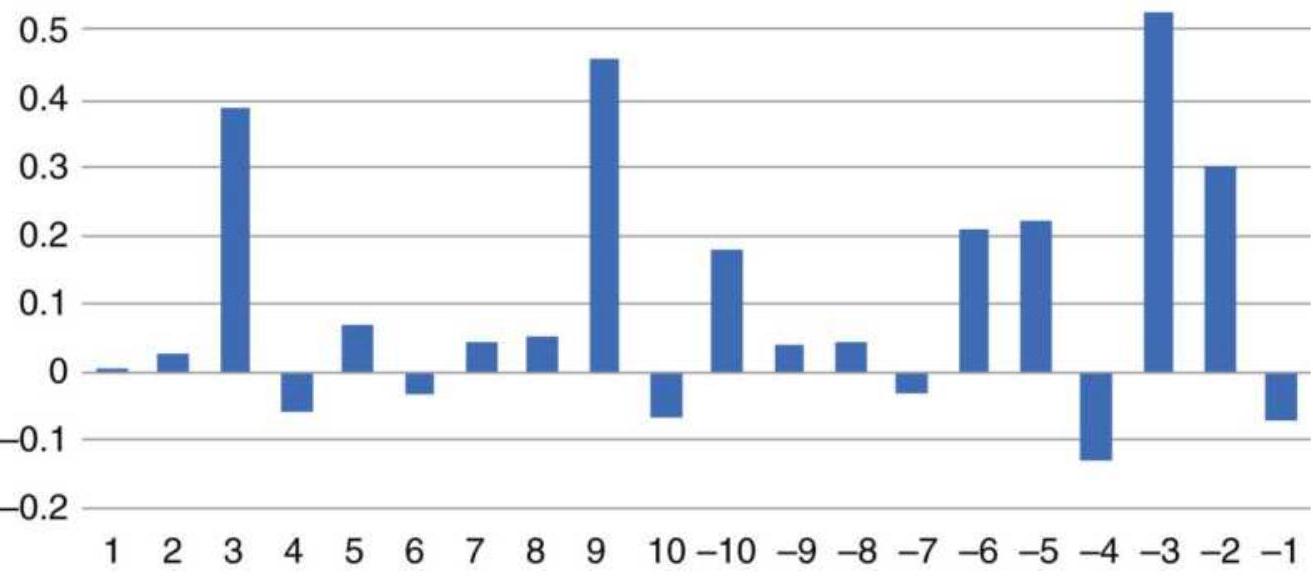

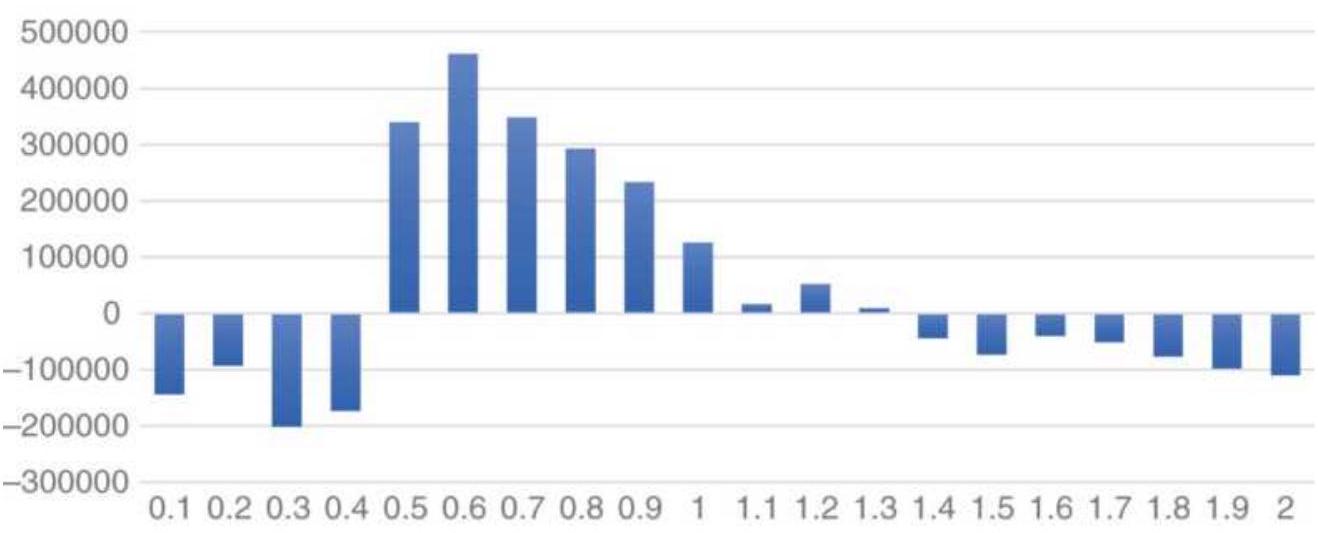

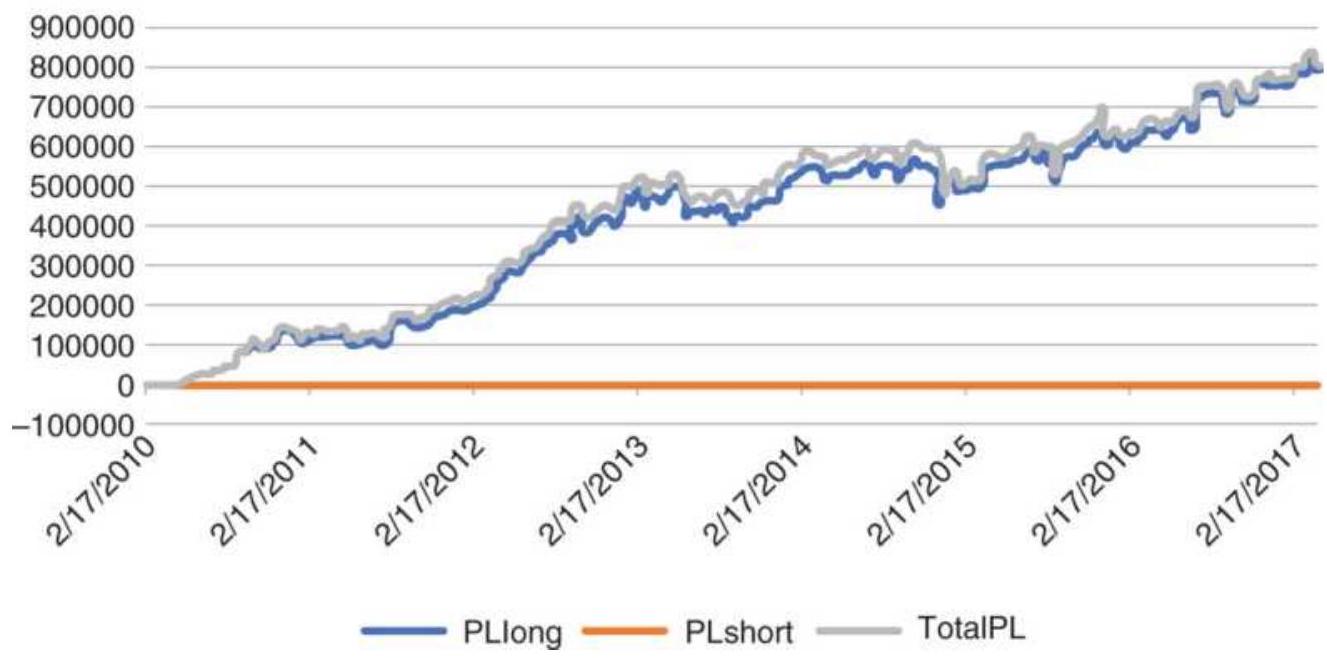

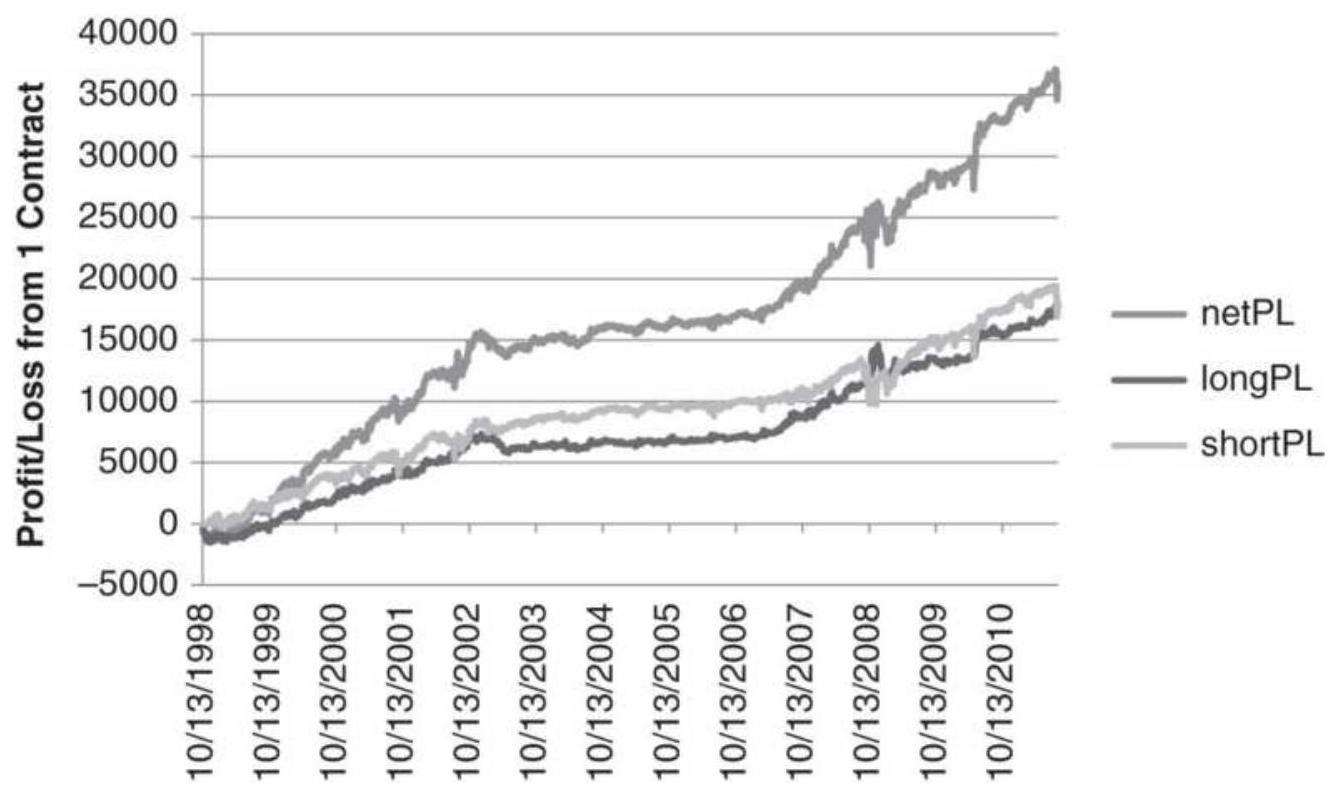

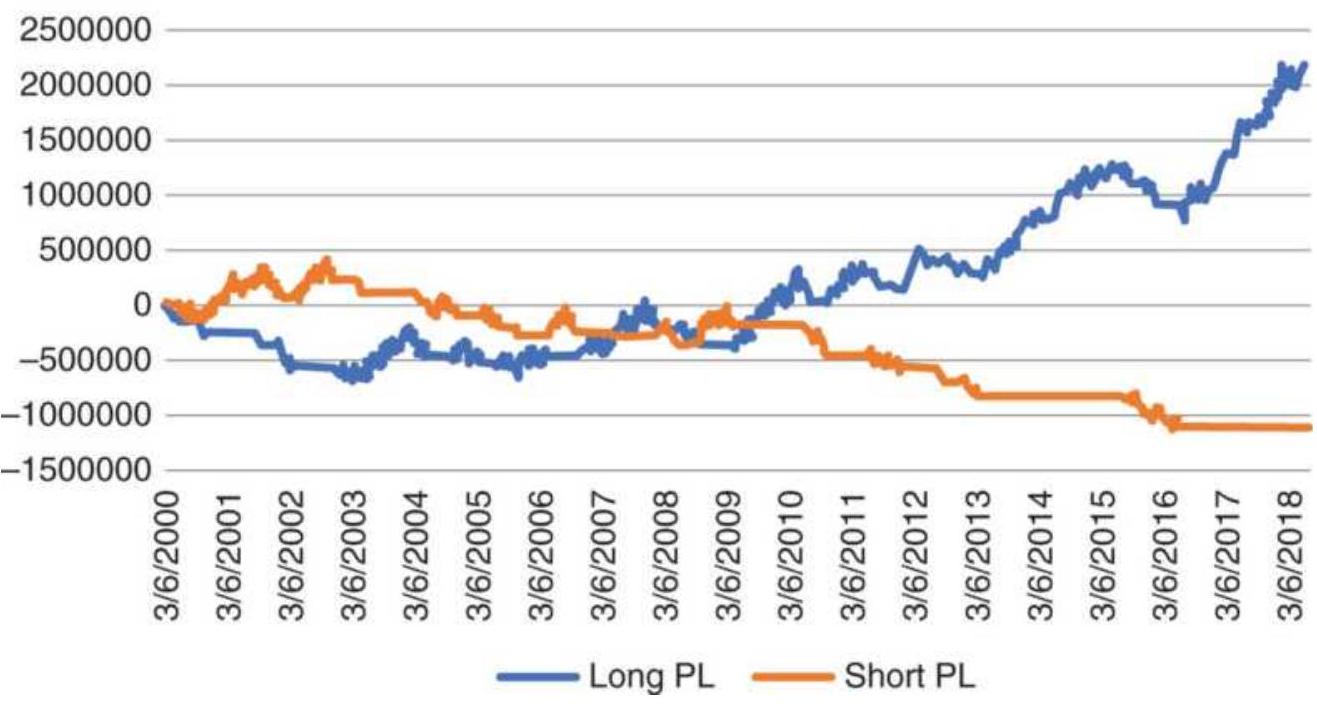

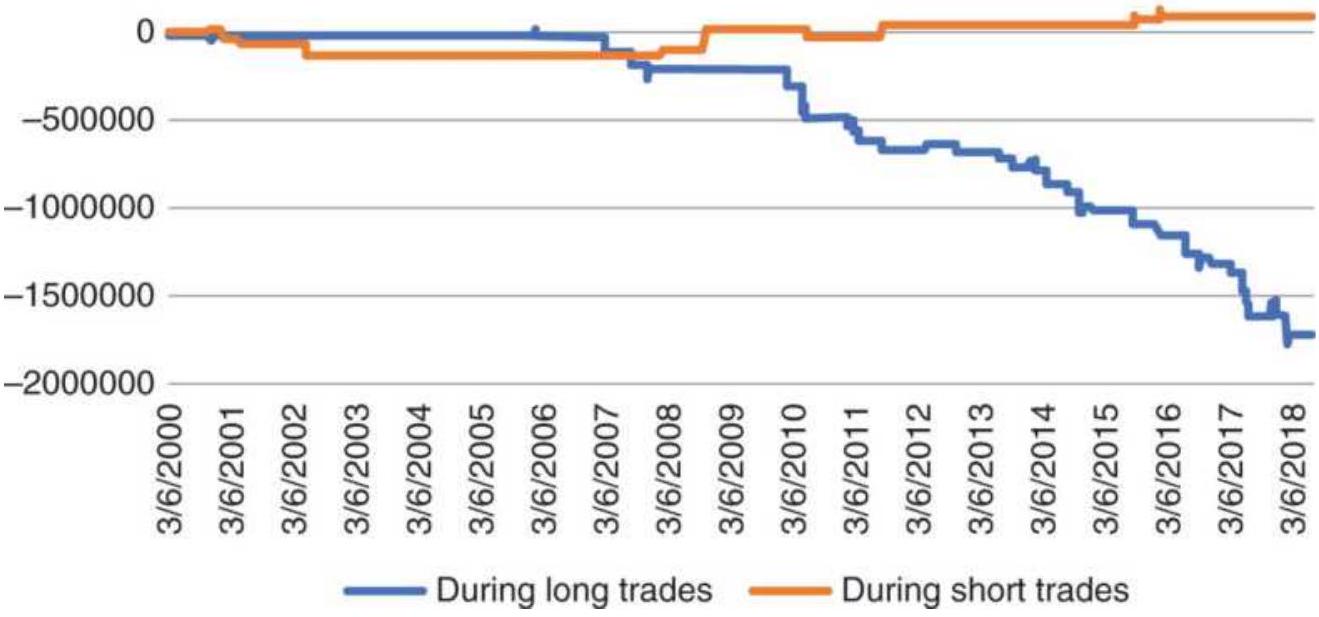

FIGURE 21.4 Net profits from longs, shorts, and combined displayed as a bar ...

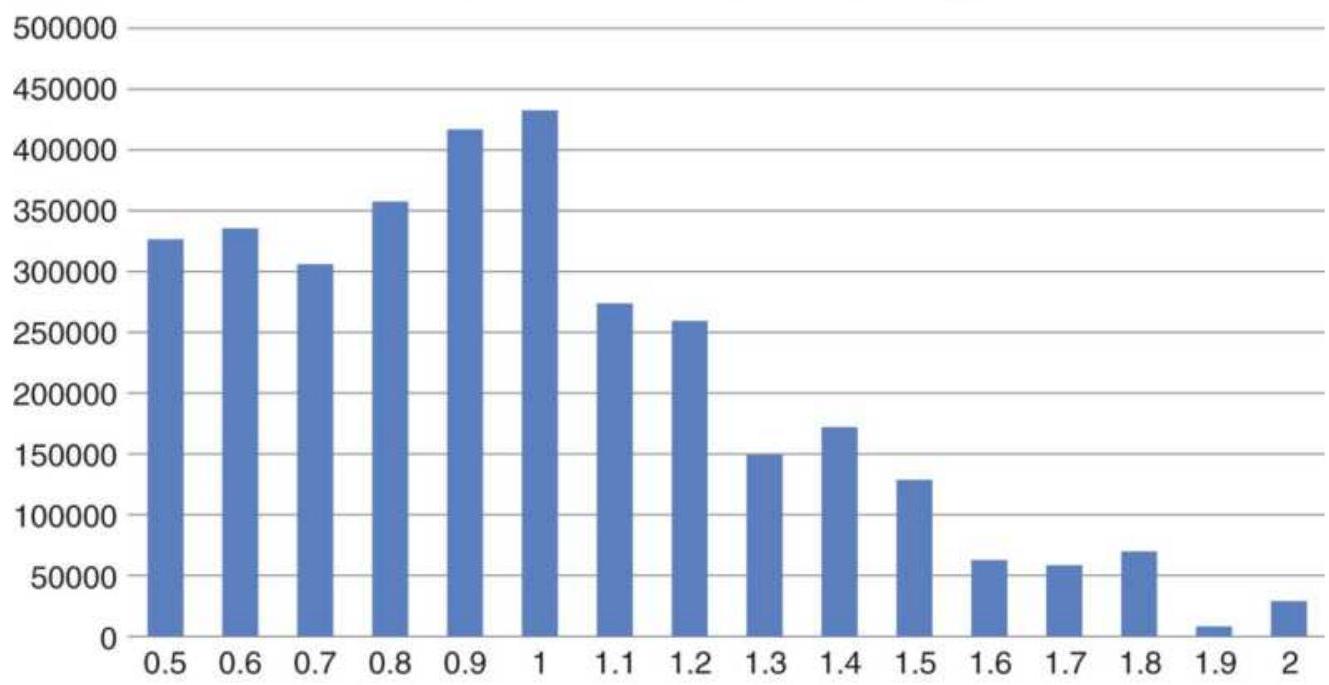

FIGURE 21.5 Optimization of QQQ shown as a line chart.

FIGURE 21.6a Euro futures optimization of a moving average system shown for ...

FIGURE 21.6b Results of euro currency futures optimization showing performan...

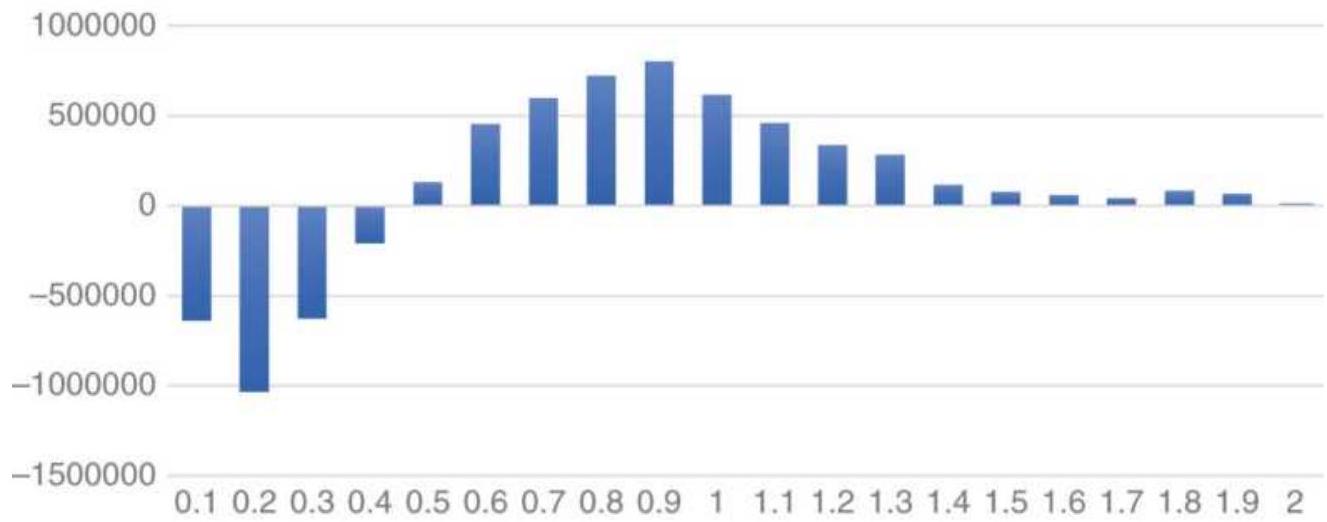

FIGURE 21.7a Eurodollar futures moving

average optimization results through ...

FIGURE 21.7b Results of an emini S\&P optimization.

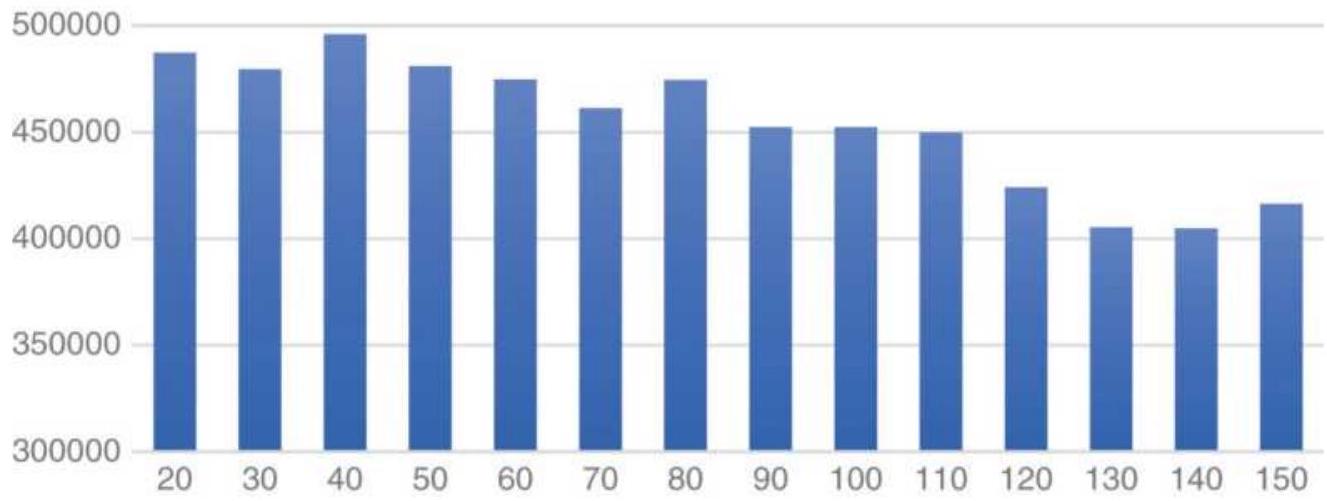

FIGURE 21.7c Wheat futures optimization shows erratic gains in the longer ca...

FIGURE 21.7d Hog futures optimization shows gains in the short-term.

FIGURE 21.7e Cotton shows a performance spike using an 80 -day moving average...

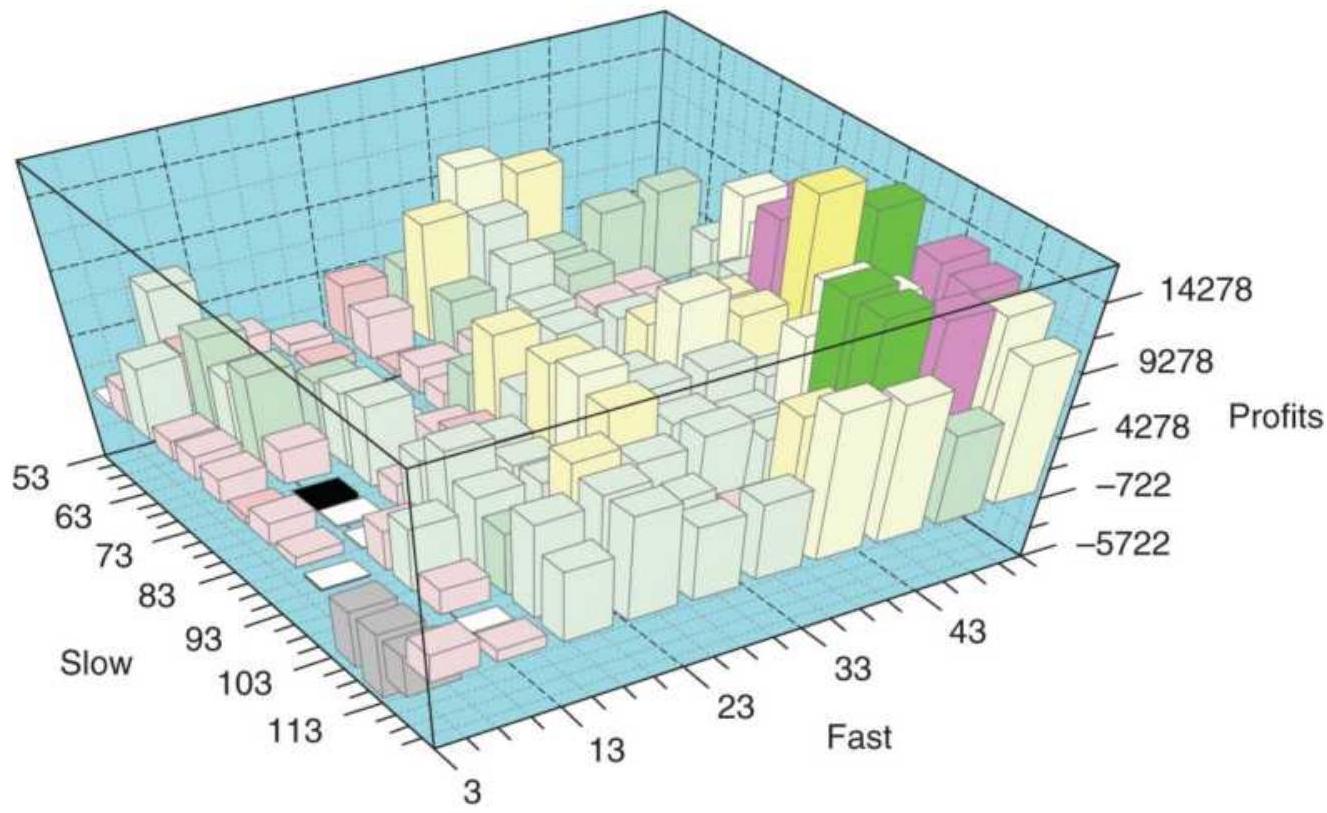

FIGURE 21.8 Heat map of the 2 moving average crossover system.

FIGURE 21.9 A 3-dimensional bar chart of QQQ returns using a moving average ...

FIGURE 21.10 QQQ optimization results shown as a surface chart. The best res...

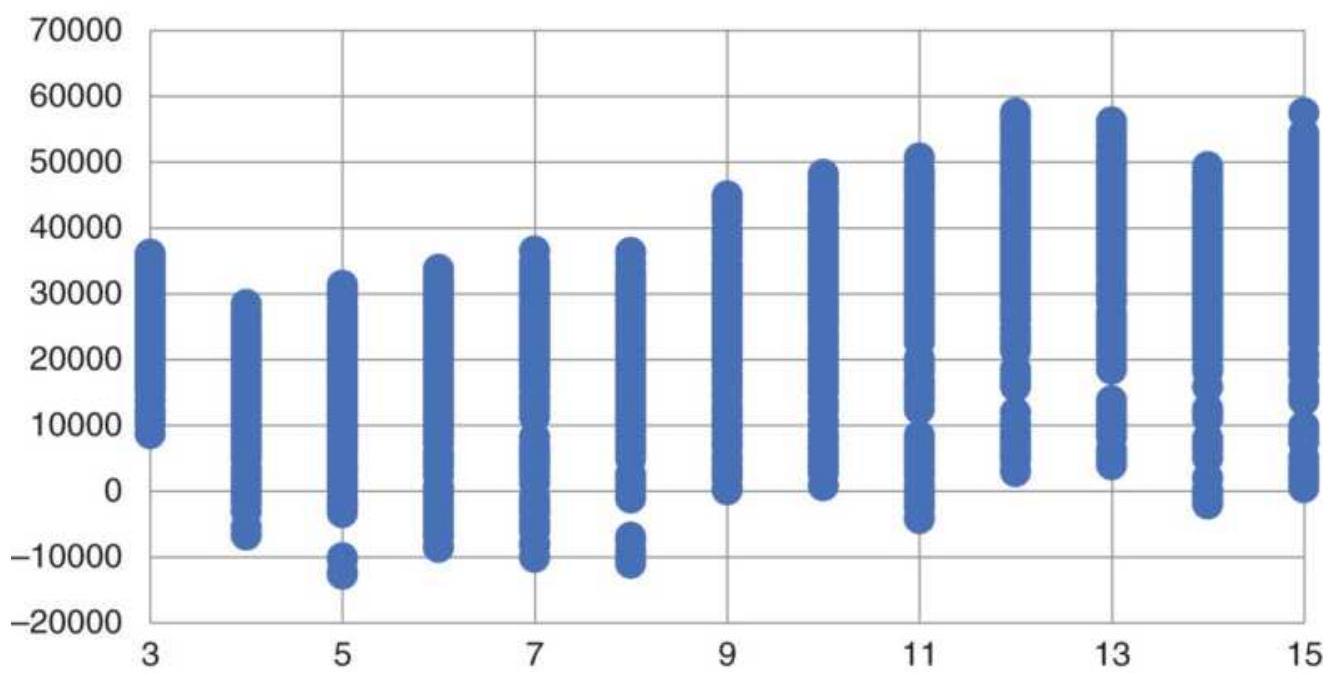

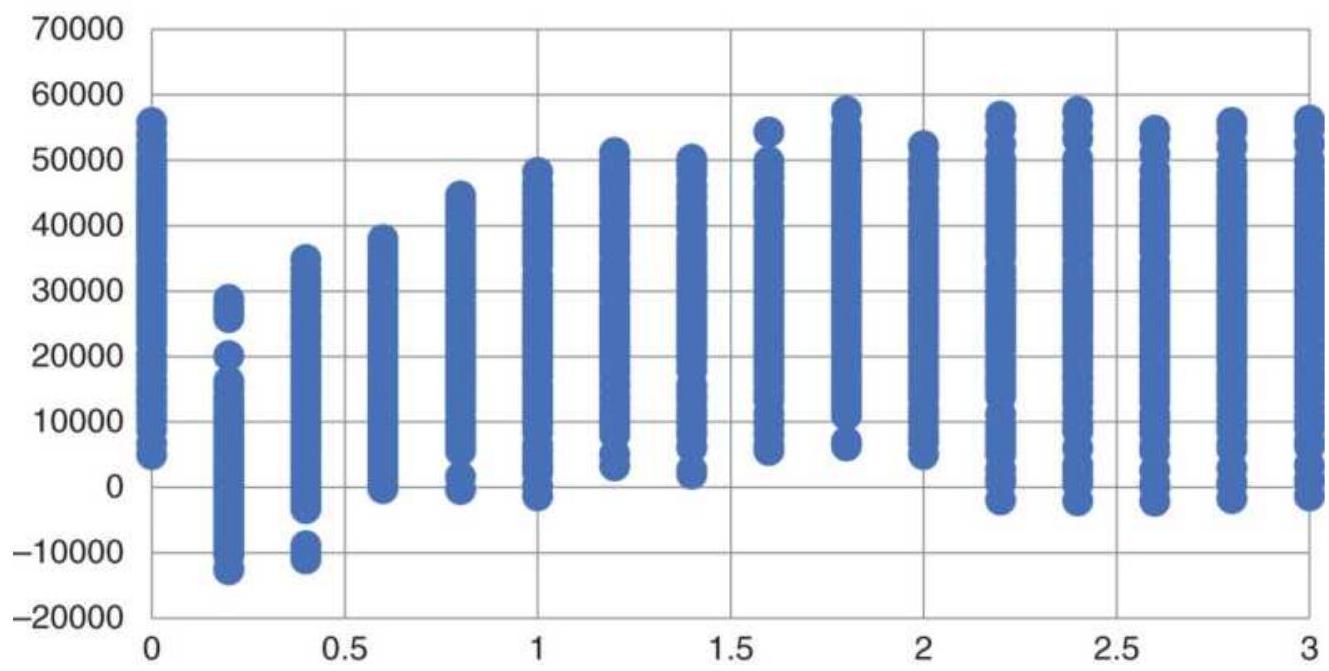

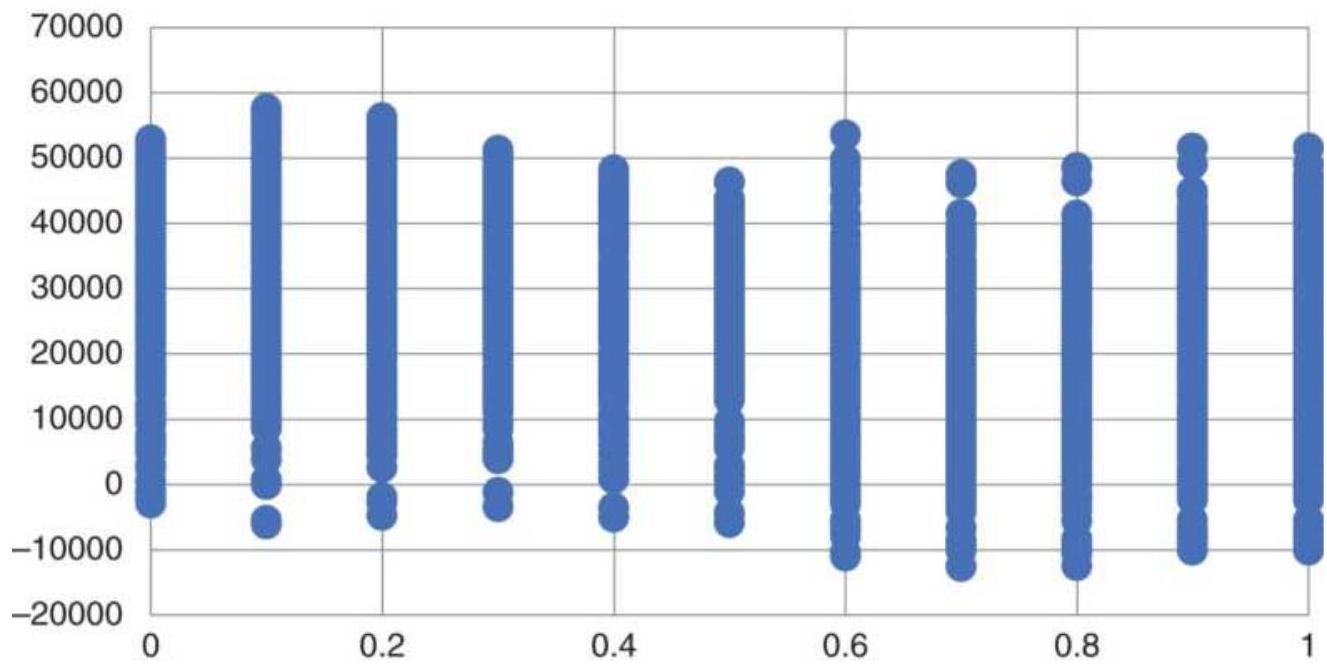

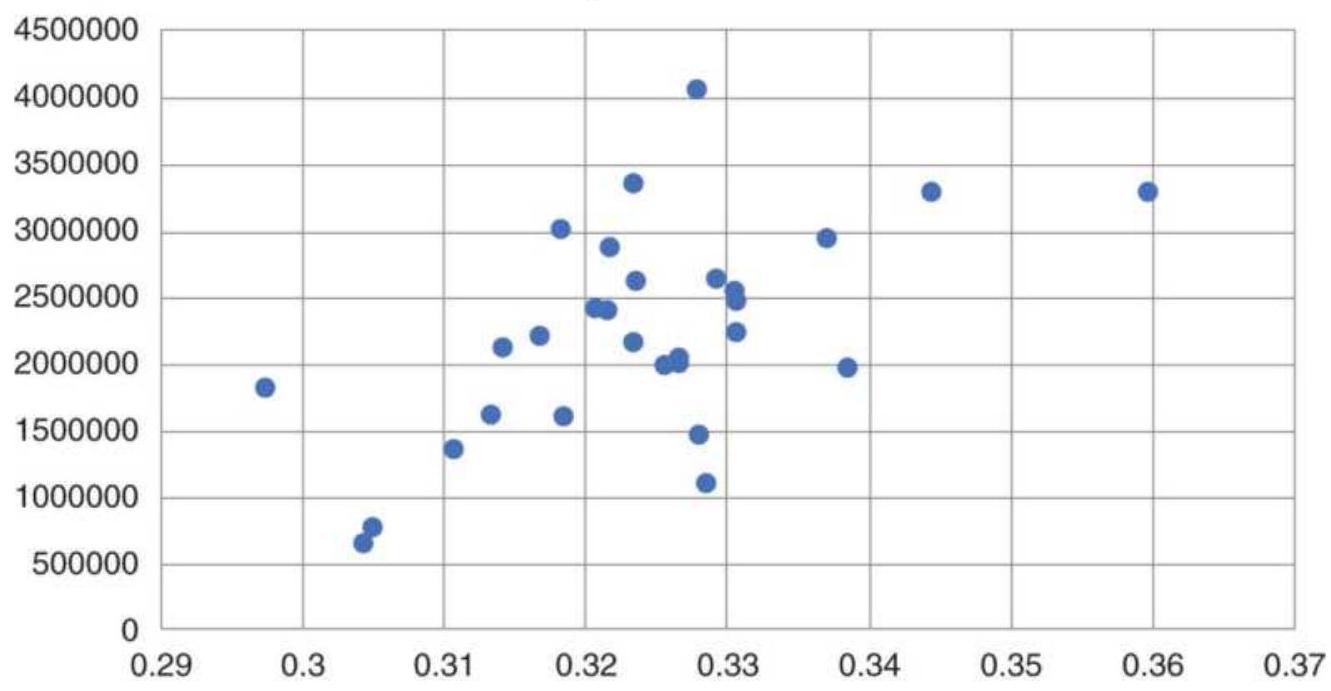

FIGURE 21.11a A scatter diagram of all test profits given the trend calculat...

FIGURE 21.11b All net profits given the profit factor along the bottom.

FIGURE 21.11 c All net profits given the stoploss percentage along the botto...

FIGURE 21.12 VIX mean-reversion strategy using optimal parameters.

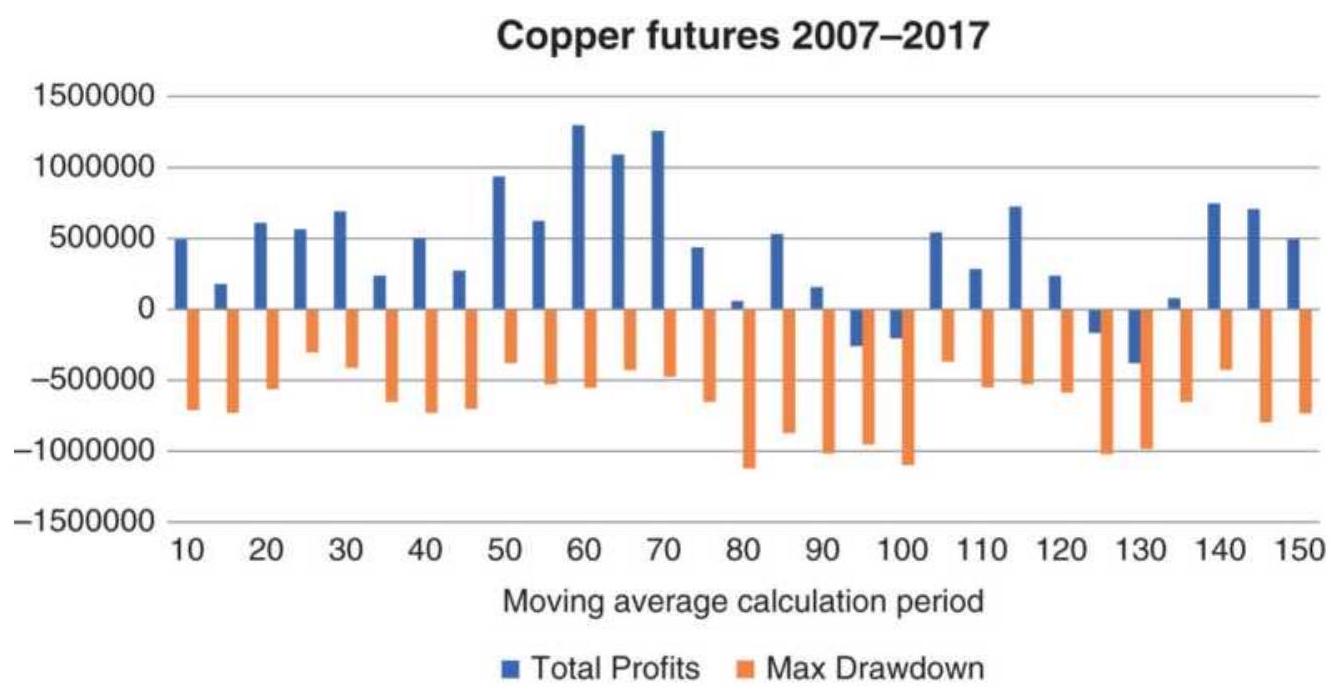

FIGURE 21.13 Moving average optimization of copper futures, 2007-2017, showi...

FIGURE 21.14 5-Bar averages of profits and

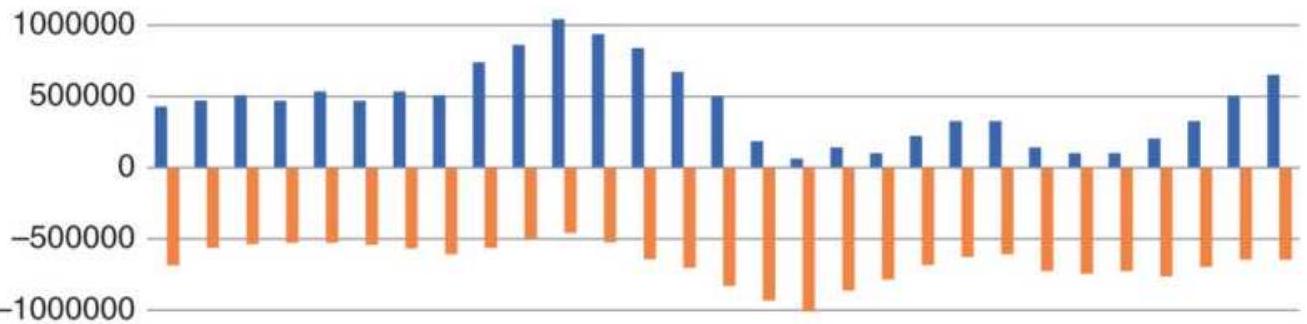

maximum drawdowns for a copper op...

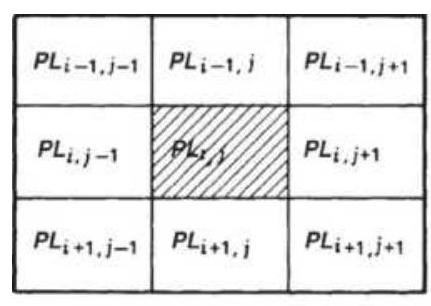

FIGURE 21.15 Averaging of map output results. (a) Center average (9 box). (b...

FIGURE 21.16 Impact of costs on the moving average system.

FIGURE 21.17 Patterns resulting from changing rules. (a) A rule change that ...

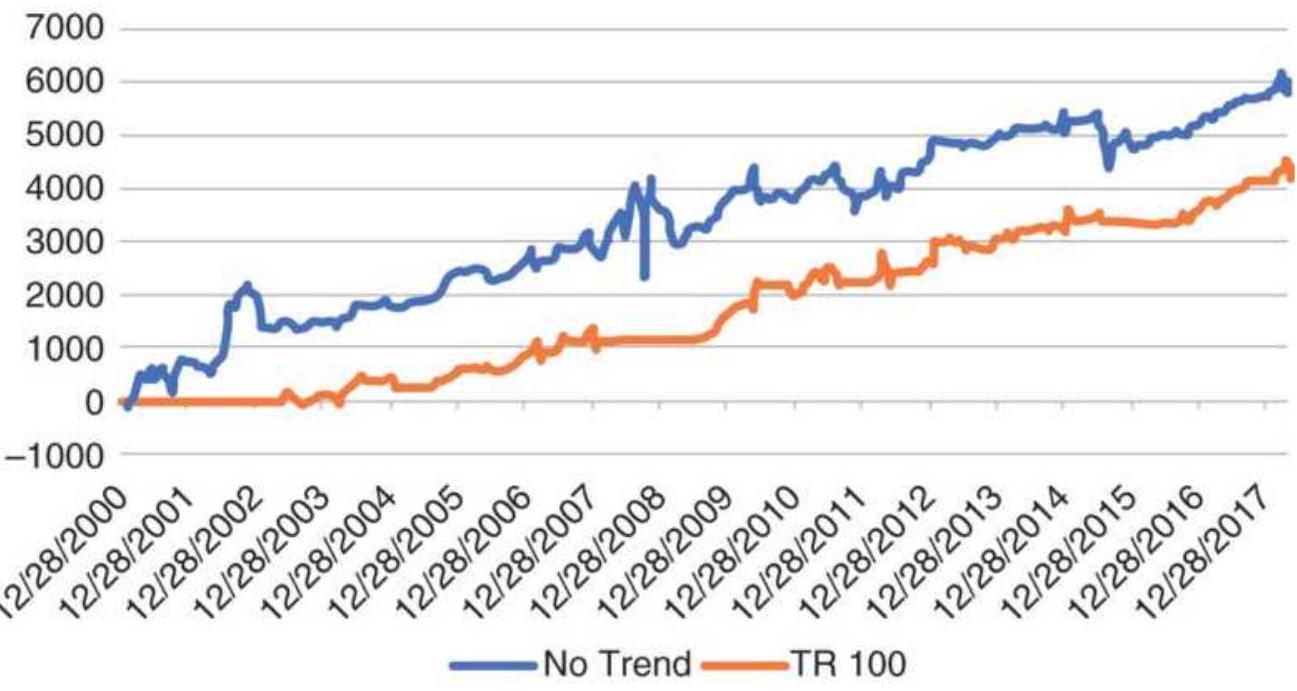

FIGURE 21.18 Net profits from a 110-period moving average and a 140-period l...

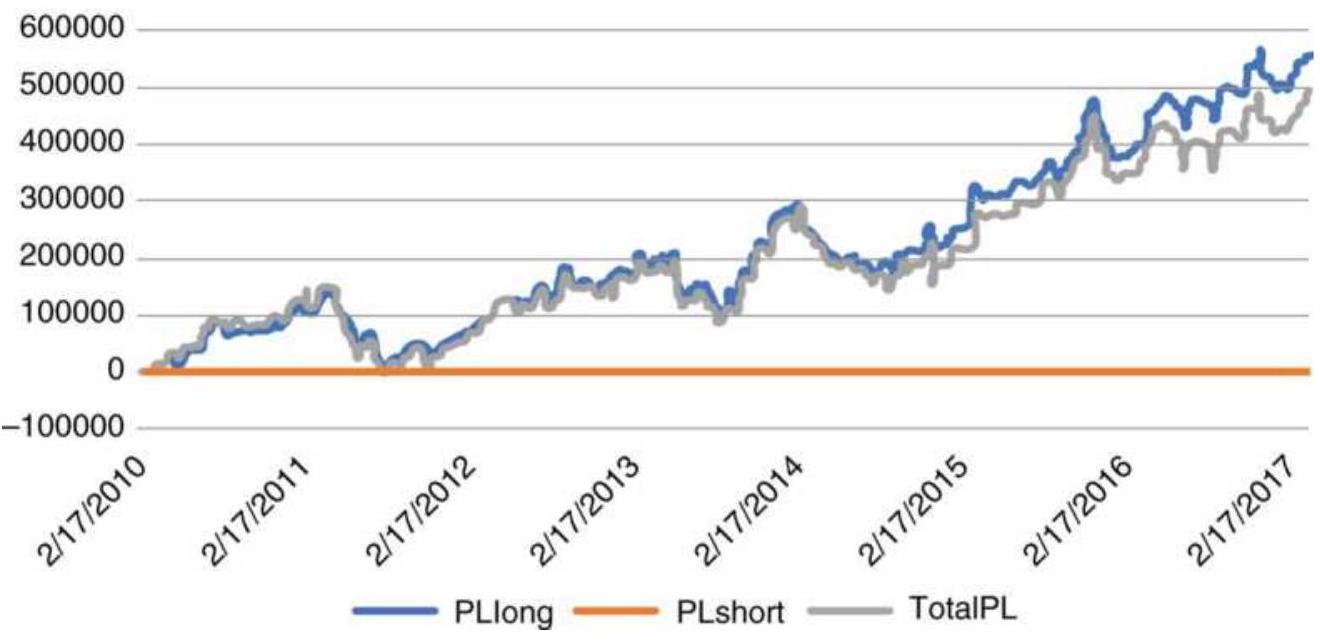

FIGURE 21.19 Net profits from the two strategies, taking only long positions...

FIGURE 21.20 Consecutive tests.

FIGURE 21.21 Heat map of ES optimization of the standard crossover system.

FIGURE 21.22 Heat map of ES optimization, "fading" the short-term trend in t...

FIGURE 21.23 Best calculation period, sorted smallest to largest.

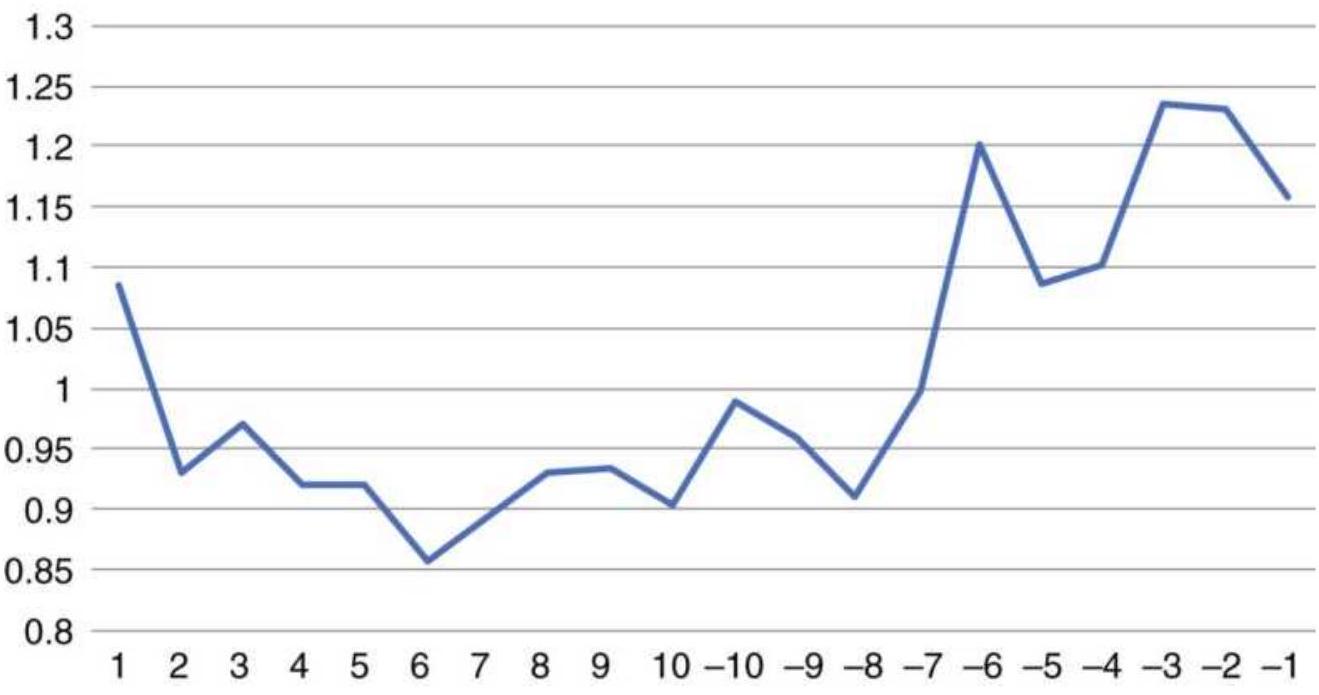

FIGURE 21.24a Total profits from long and short positions using a 100-day mo...

FIGURE 21.24b Net gain and loss from price shocks for the QQQ trend system....

Chapter 22

FIGURE 22.1 The Gorbachev abduction caused a double price shock, with most im...

FIGURE 22.2 Labour's victory in the British

election of 1992 represents a mor...

FIGURE 22.3 Crude oil prices from Iraq's invasion of Kuwait through the begin...

FIGURE 22.4 The price shock of 9/11/2001.

FIGURE 22.5 The surprising results of the

British vote on staying in the Euro...

FIGURE 22.6 The U.S. election of 2016 was a surprise to both the stock market...

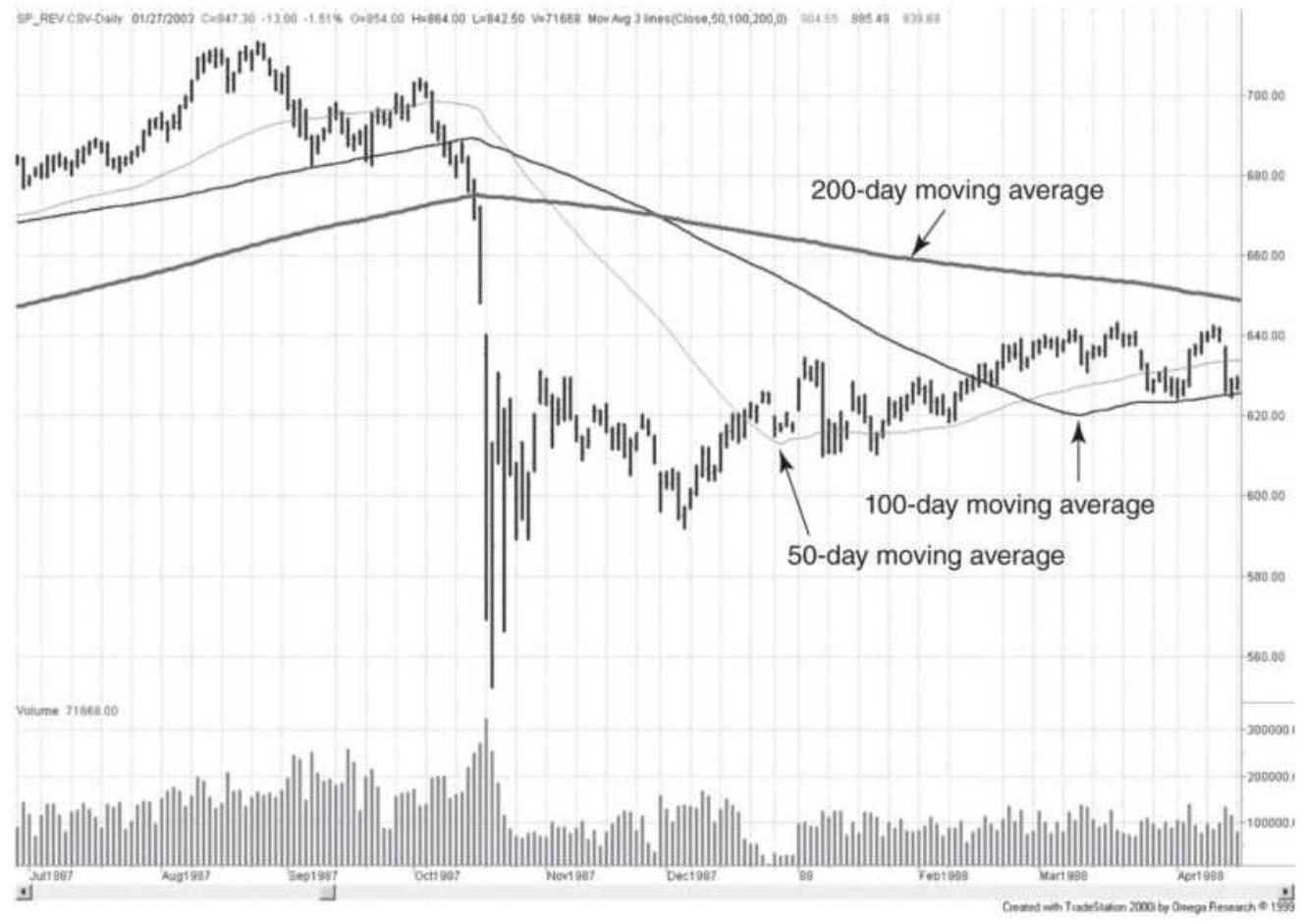

FIGURE 22.7 S\&P 500 futures. Moving average trendlines become out of phase a...

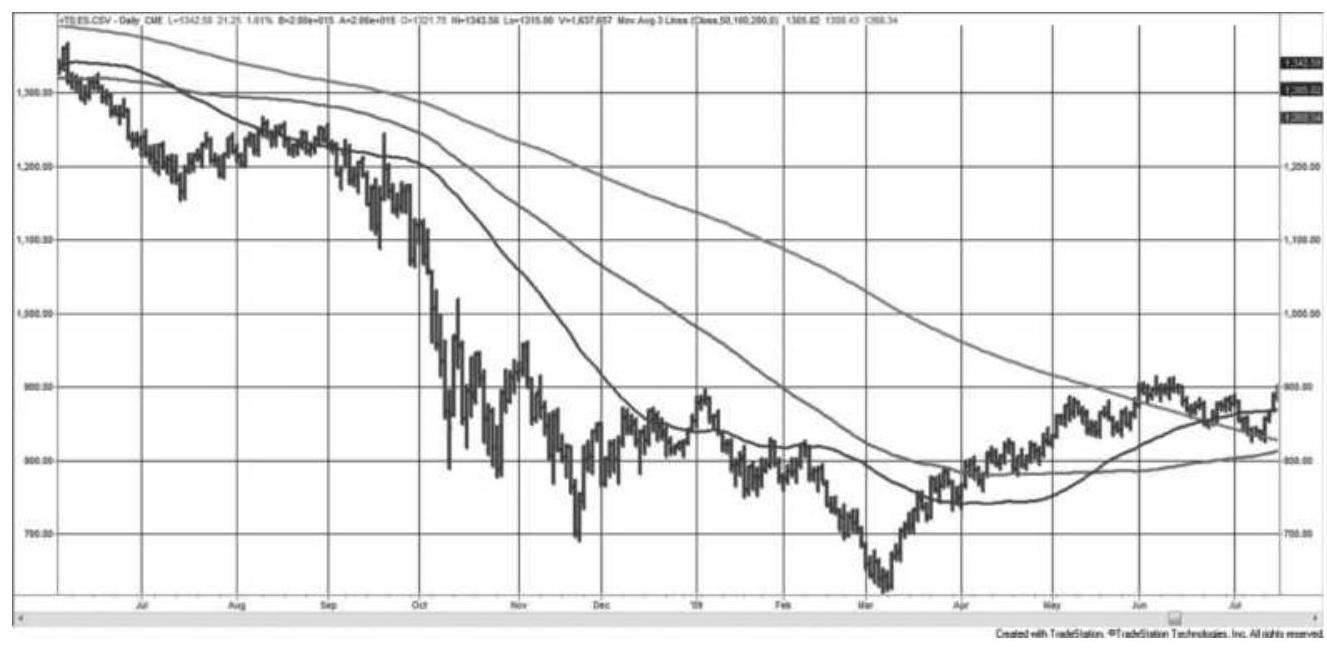

FIGURE 22.8 The S\&P decline due to the subprime crisis generated large profi...

FIGURE 22.9 Sequence of random numbers

representing occurrences of red and b...

FIGURE 22.10 Betting pattern.

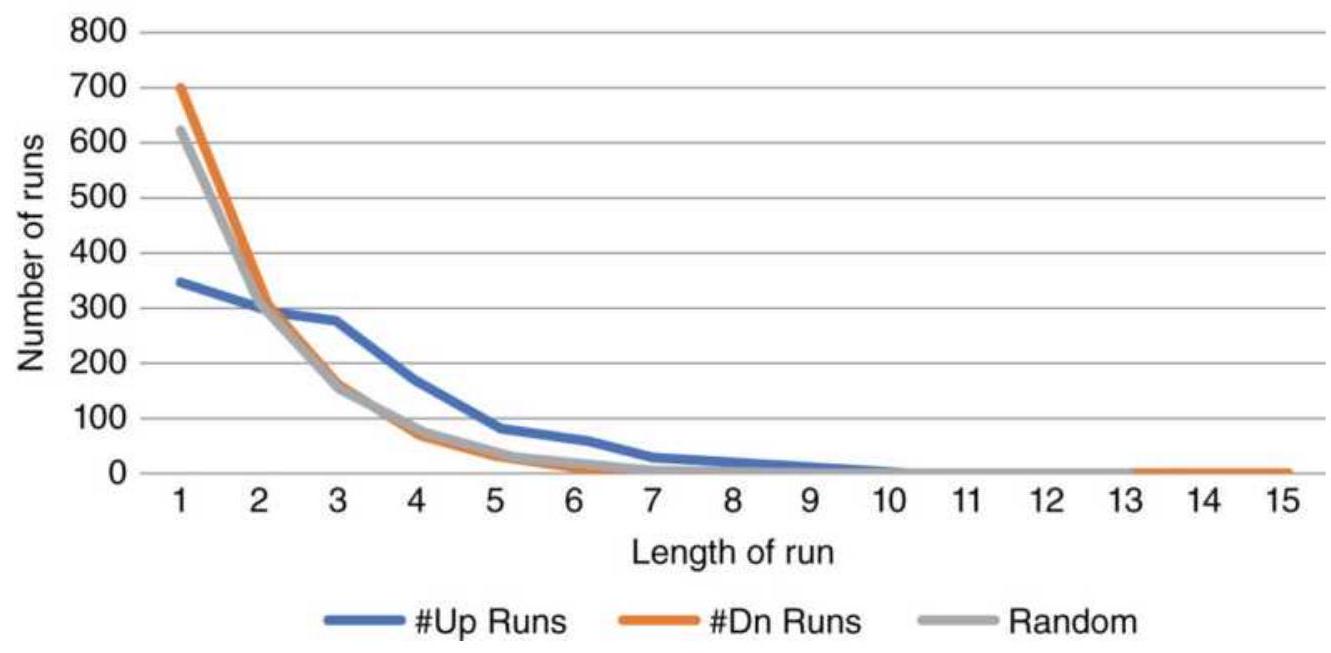

FIGURE 22.11 Runs of SPY compared to

random runs.

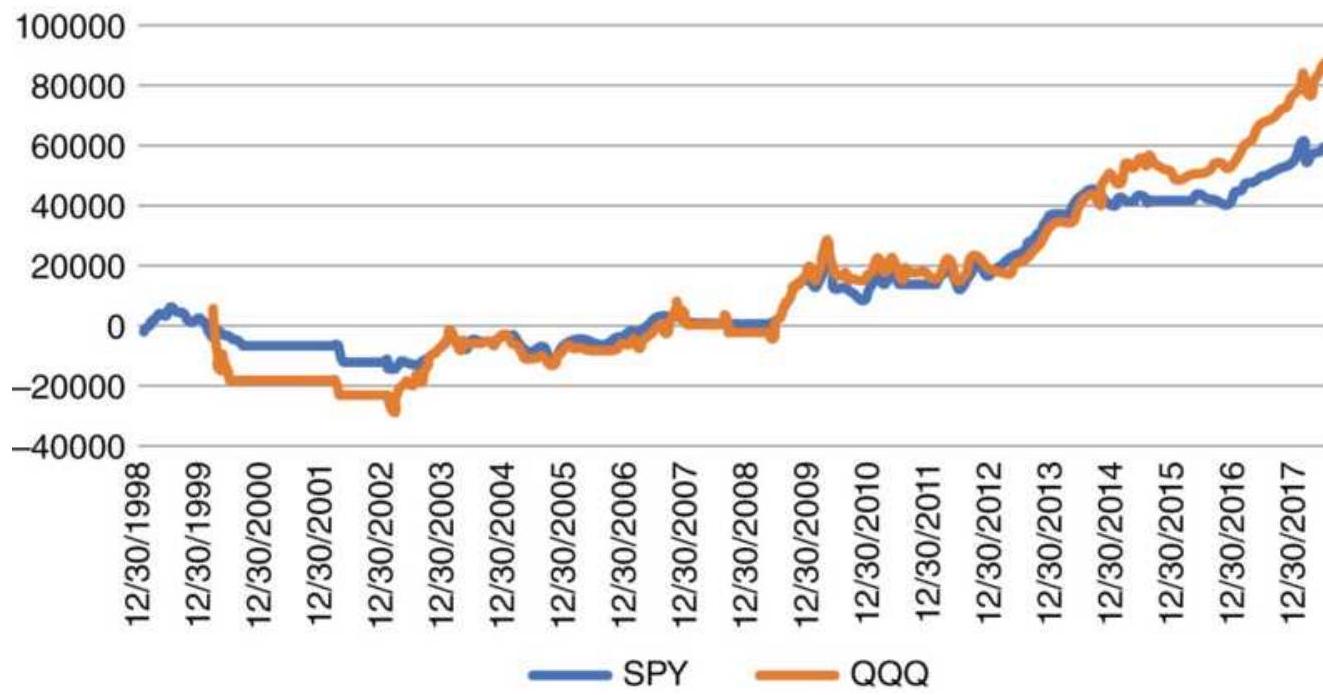

FIGURE 22.12 Comparison of total profits for SPY and QQQ using a 120-day mov...

FIGURE 22.13 Anti-Martingales applied to

QQQ, doubling the position when the...

FIGURE 22.14 Relative risk of a moving average system.

FIGURE 22.15 Entry point alternatives for a mean-reverting strategy.

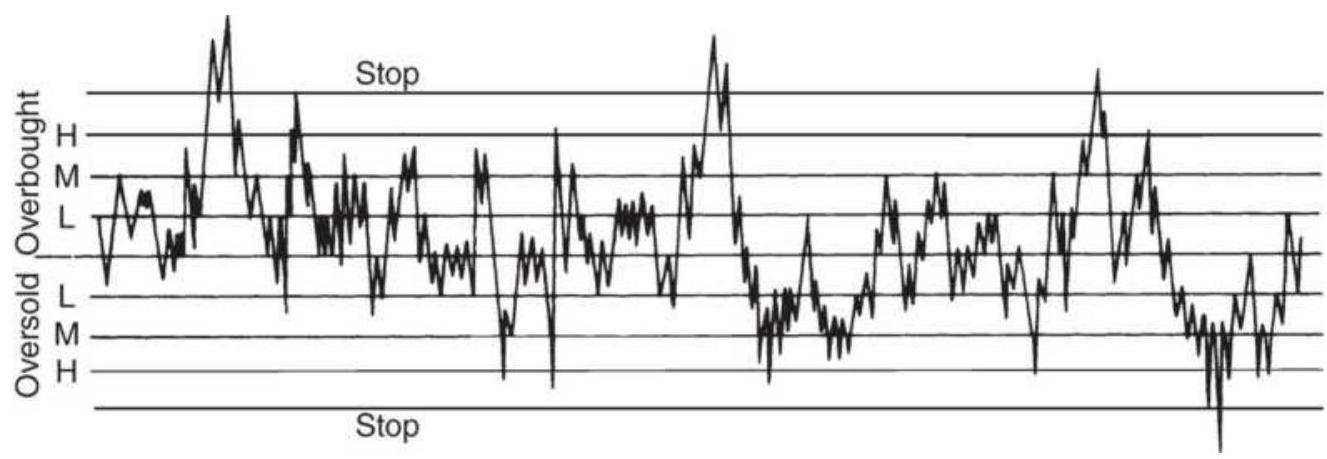

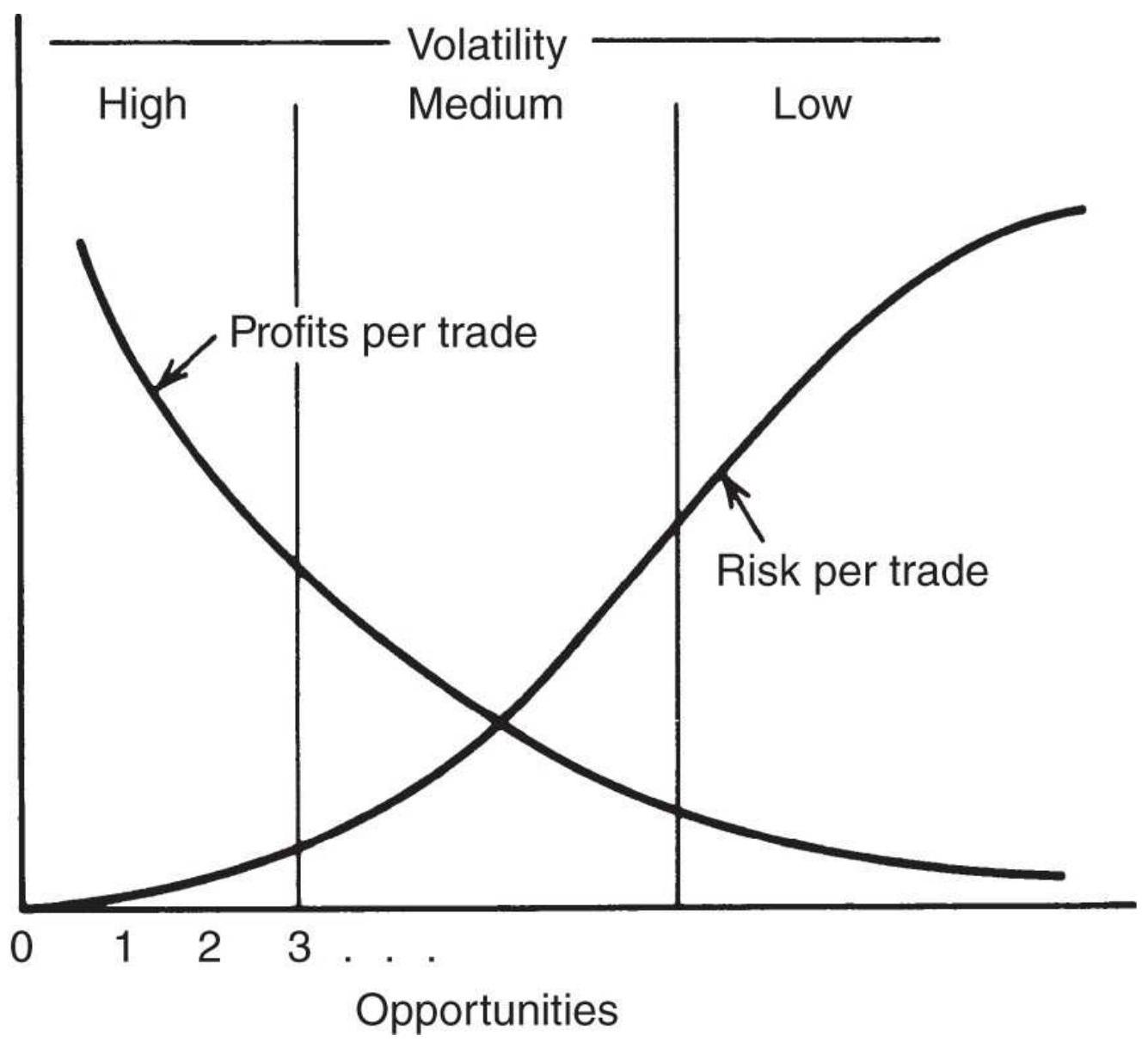

FIGURE 22.16 Relationship of profits to risk

per trade based on opportunitie...

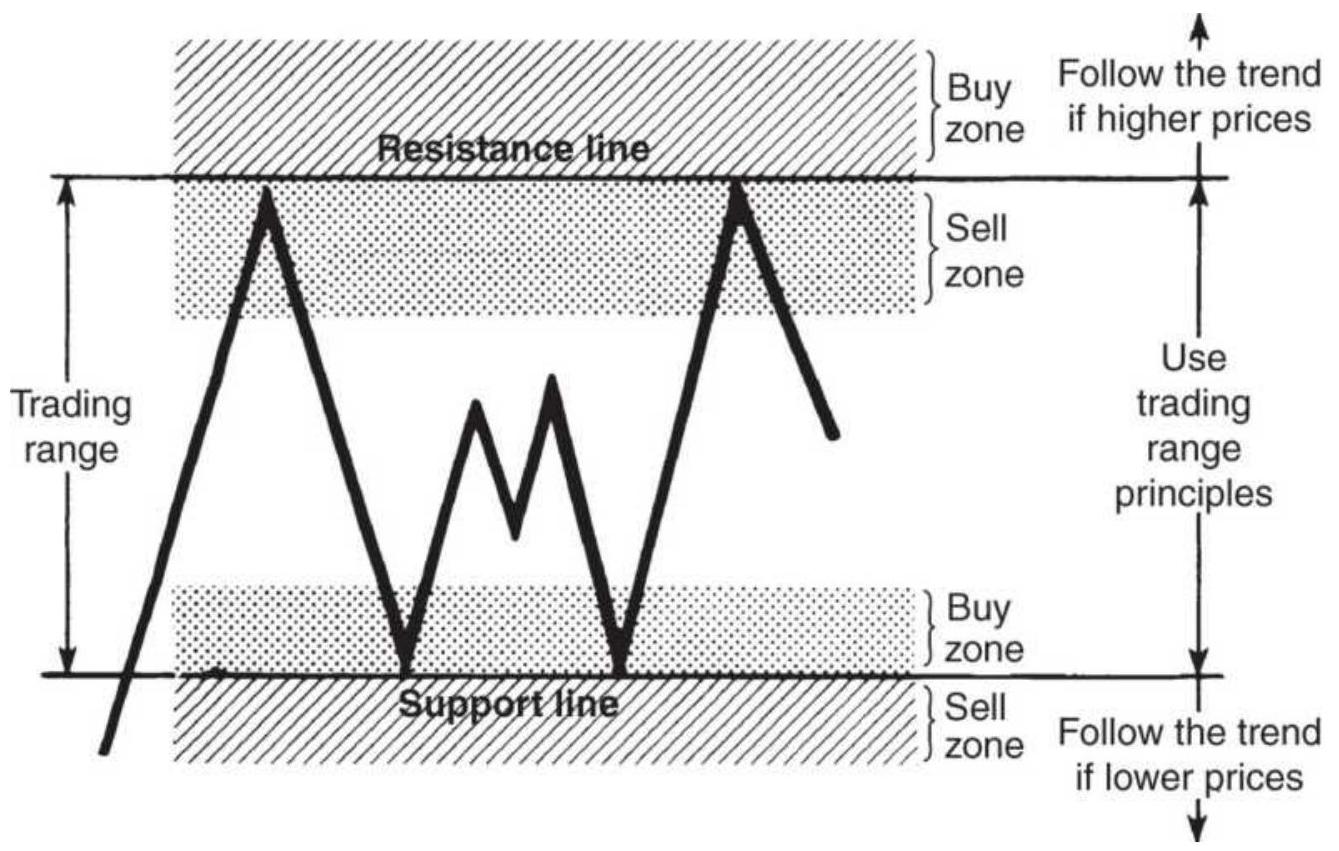

\section*{FIGURE 22.17 Combining trends and trading} ranges.

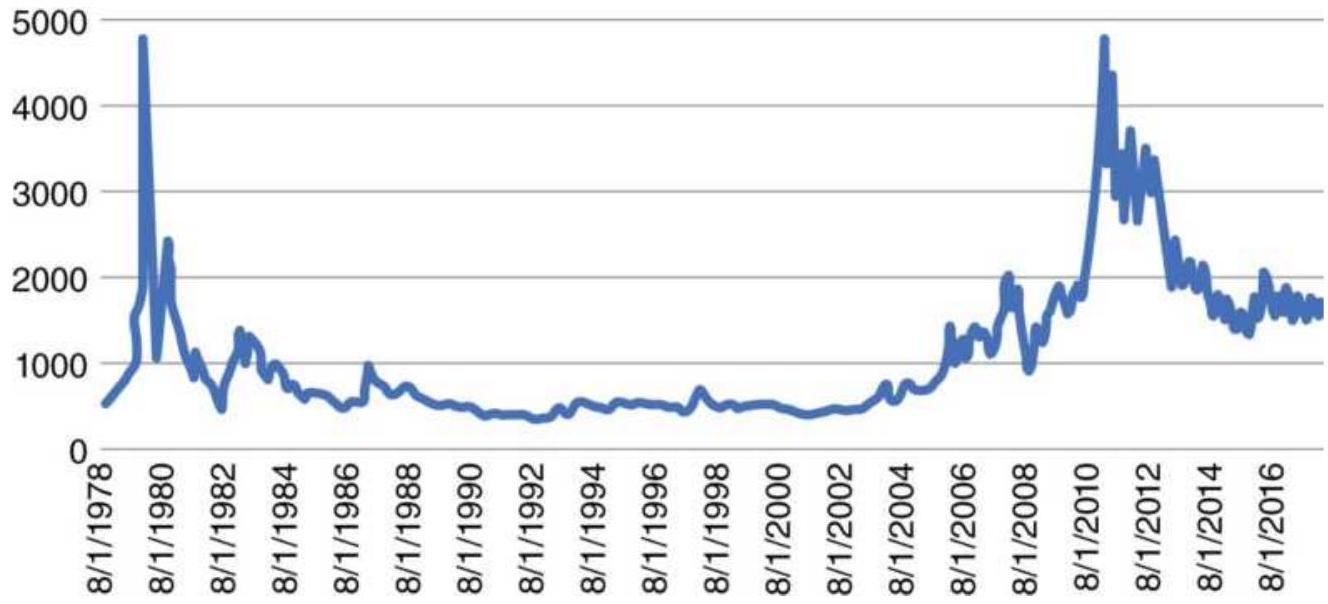

FIGURE 22.18 Cash silver prices, 1978-June 2018.

FIGURE 22.19 Moving average results for SPY using different calculation peri...

Chapter 23

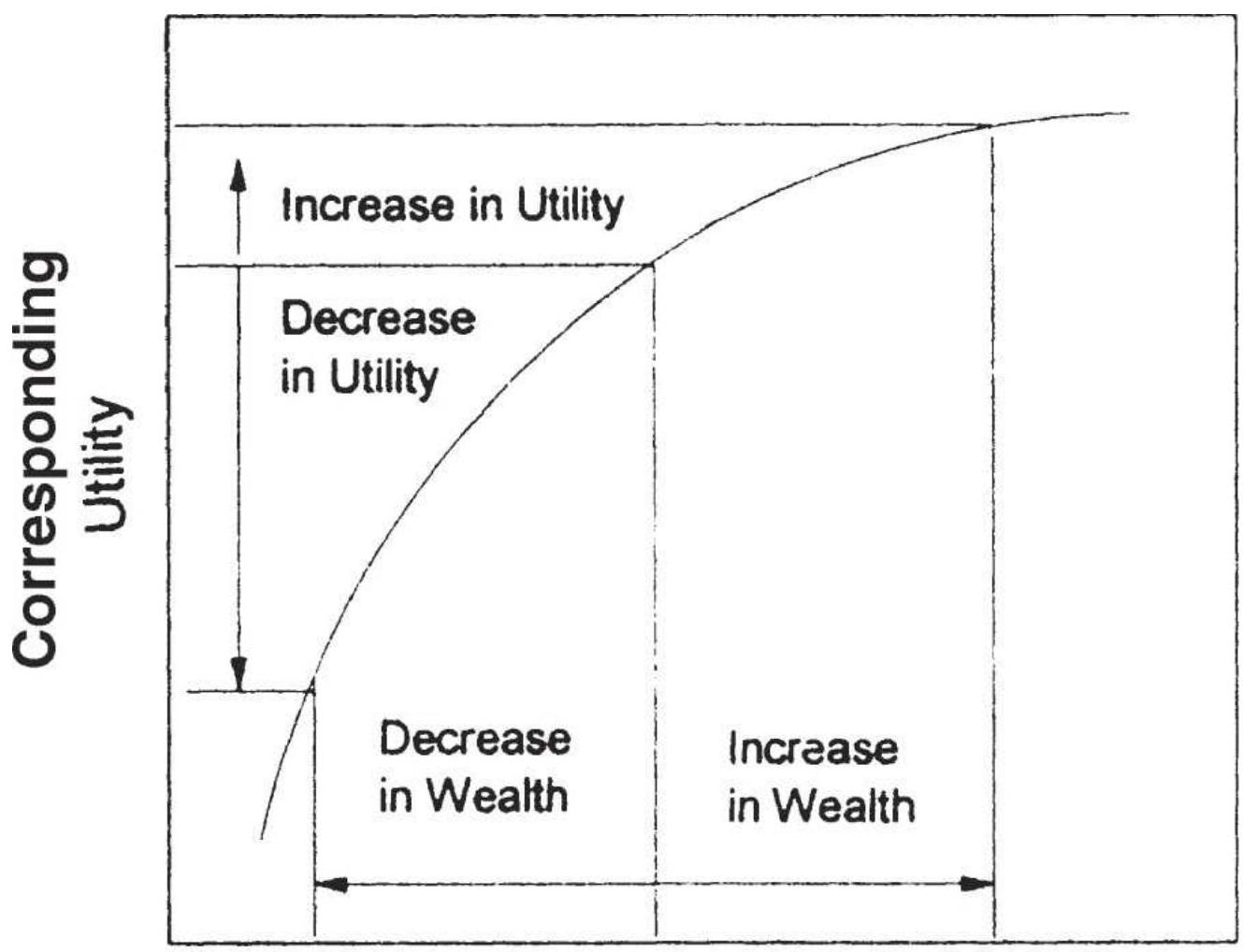

FIGURE 23.1 Changes in utility versus changes in wealth.

\section*{FIGURE 23.2 Investor utility curves.}

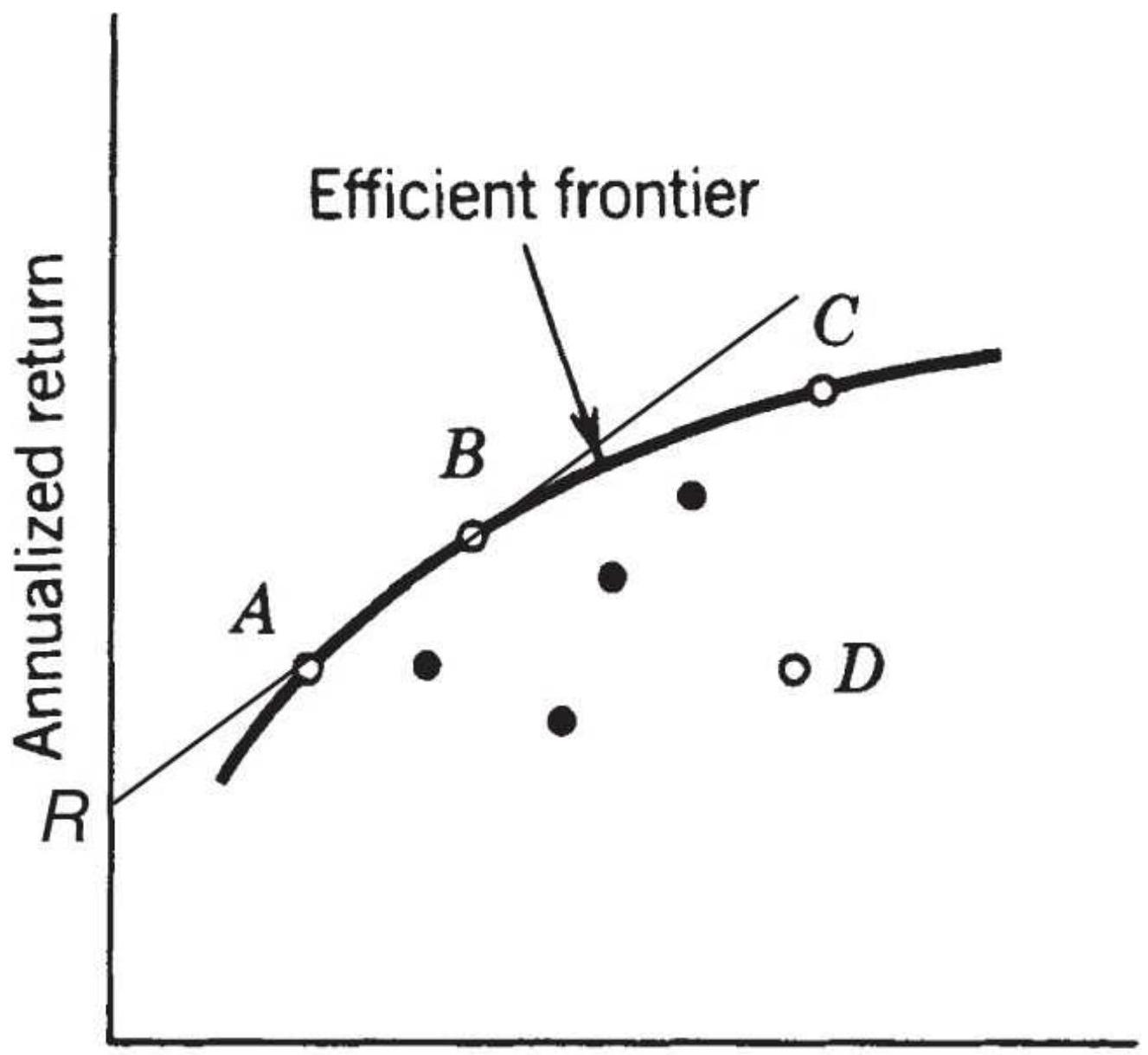

FIGURE 23.3 The efficient frontier. The line drawn from \(\underline{R}\), the risk-free ret...

FIGURE 23.4 Two cases in which the Sharpe ratio falls short. (a) The order i...

FIGURE 23.5 The Hindenburg Omen shown as vertical bars on a chart of SPY pri...

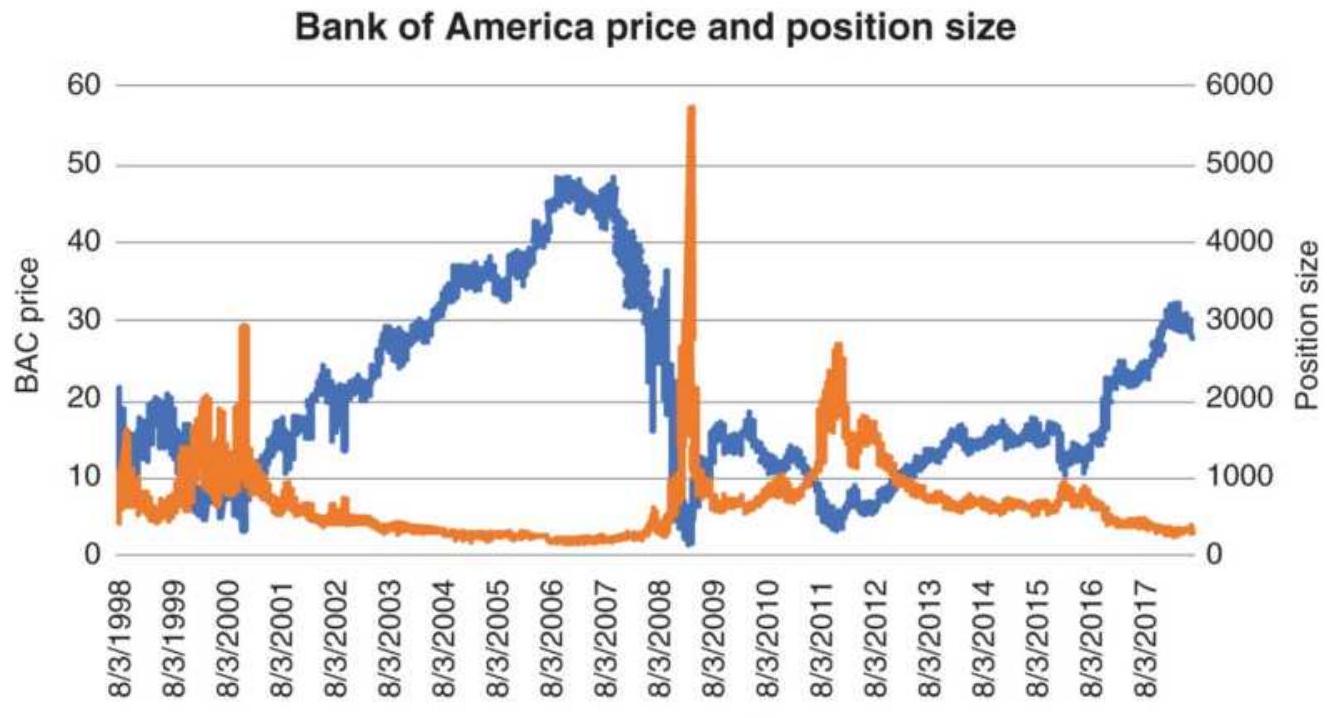

FIGURE 23.6 Bank of America prices with position sizes based on a \(\$ 10,000\) in...

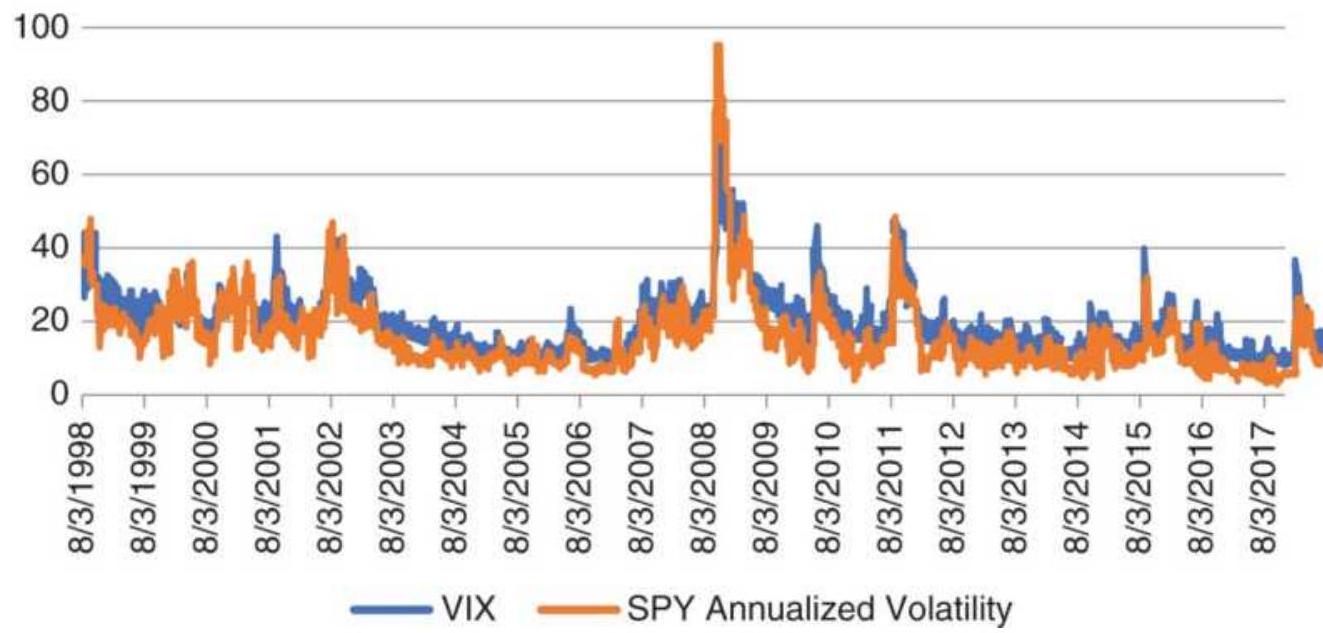

\section*{FIGURE 23.7 VIX and annualized volatility of SPY.}

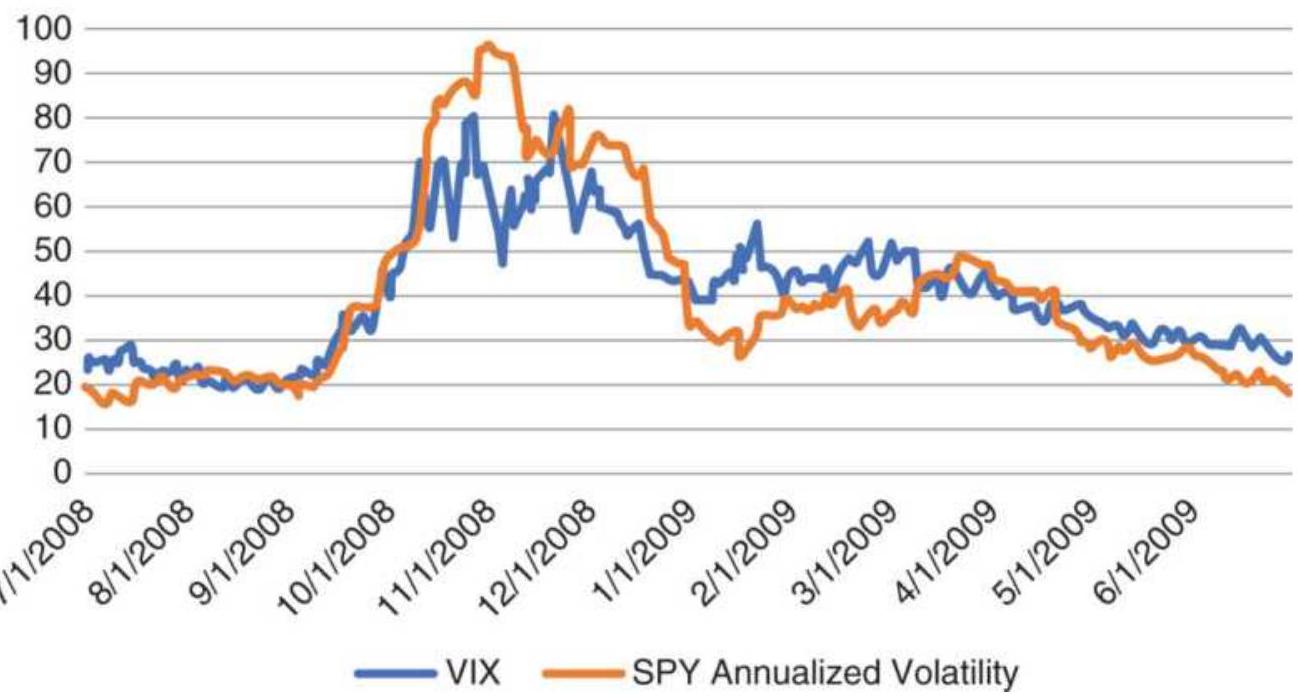

FIGURE 23.8 A closer look at VIX and SPY annualized volatility during the fi...

FIGURE 23.9 Trailing stop based on volatility, applied to Hang Seng futures....

FIGURE 23.10 30-Year bonds with an 80-day

moving average (the smooth line) a...

FIGURE 23.11 Three profit targets based on volatility and measured from the ...

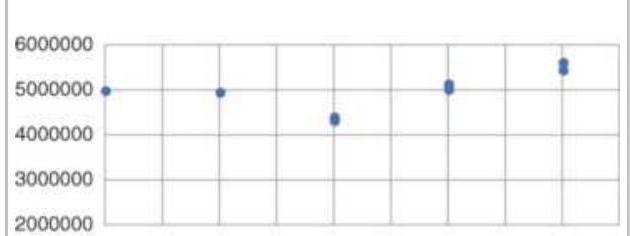

FIGURE 23.12 Wait for a pullback. Most markets show that waiting can improve...

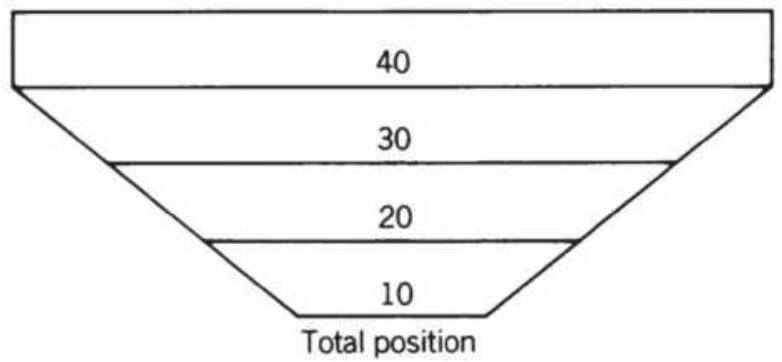

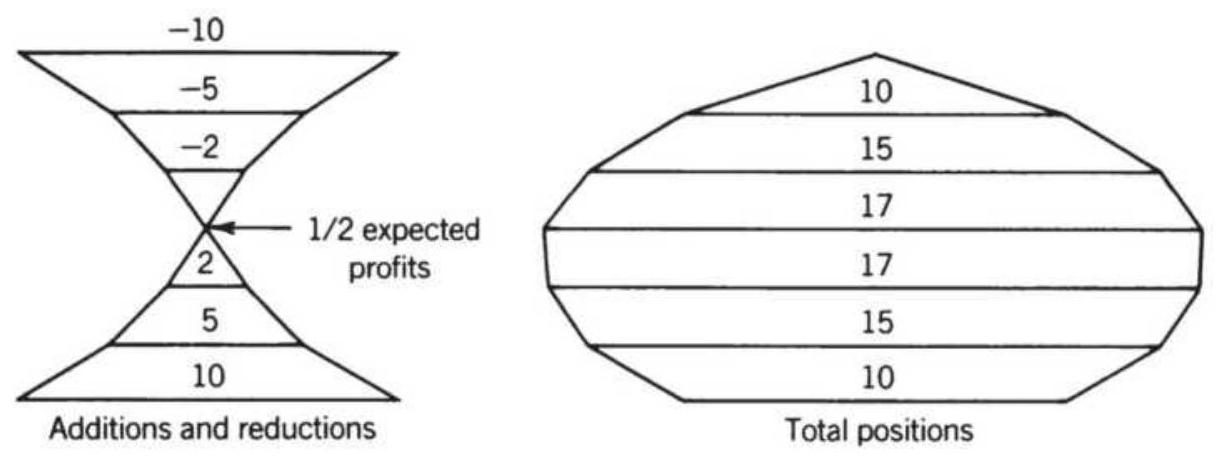

FIGURE 23.13 Compounding structures. (a) Scaled-down size (upright pyramid) ...

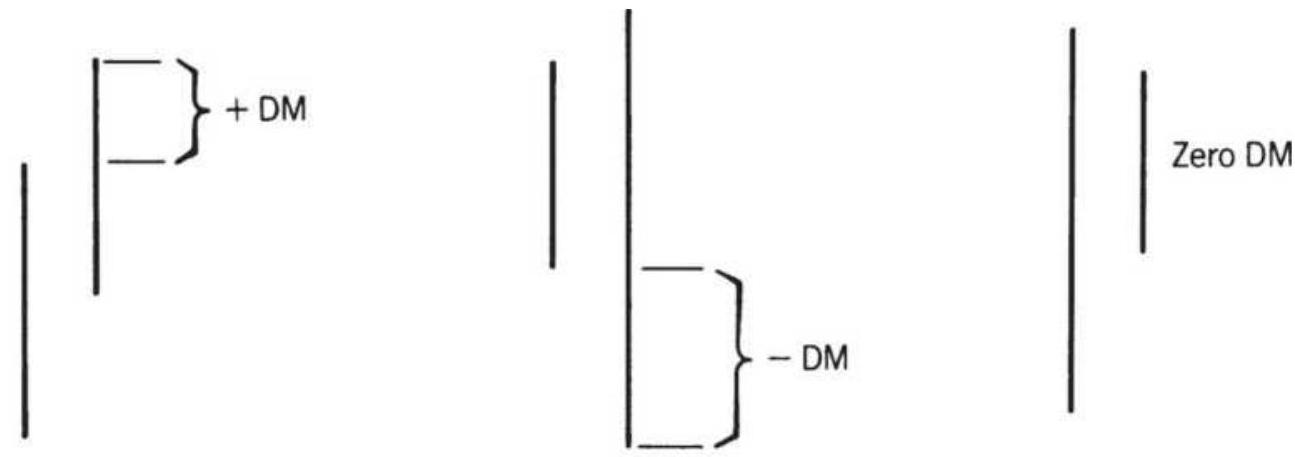

FIGURE 23.14 Defining the DM.

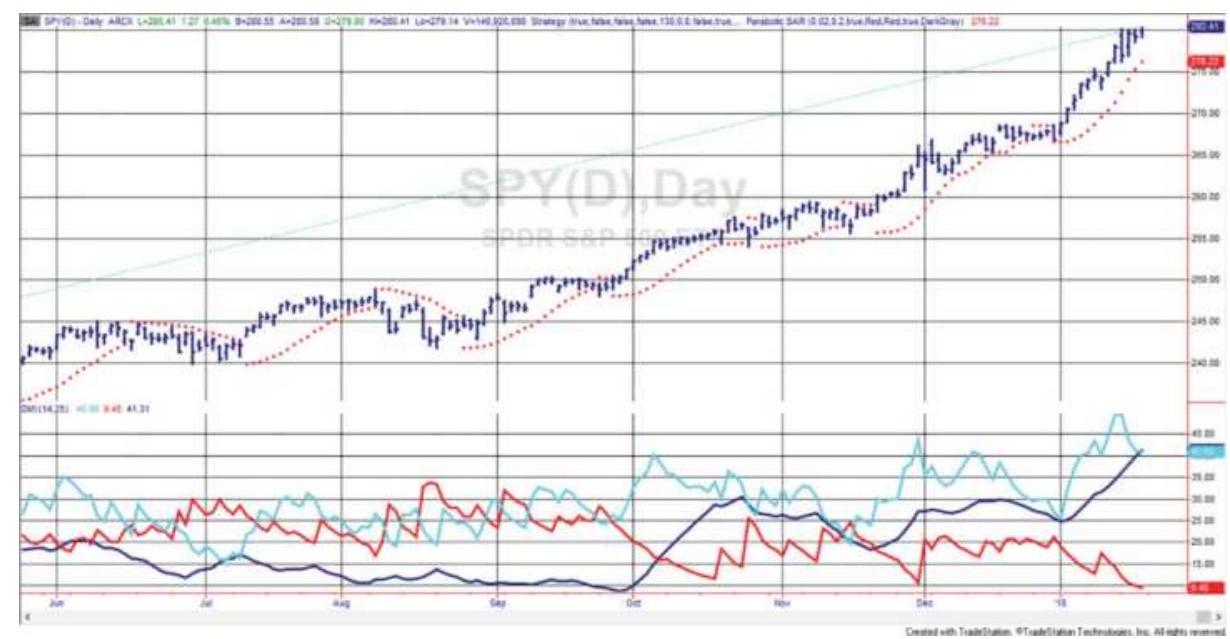

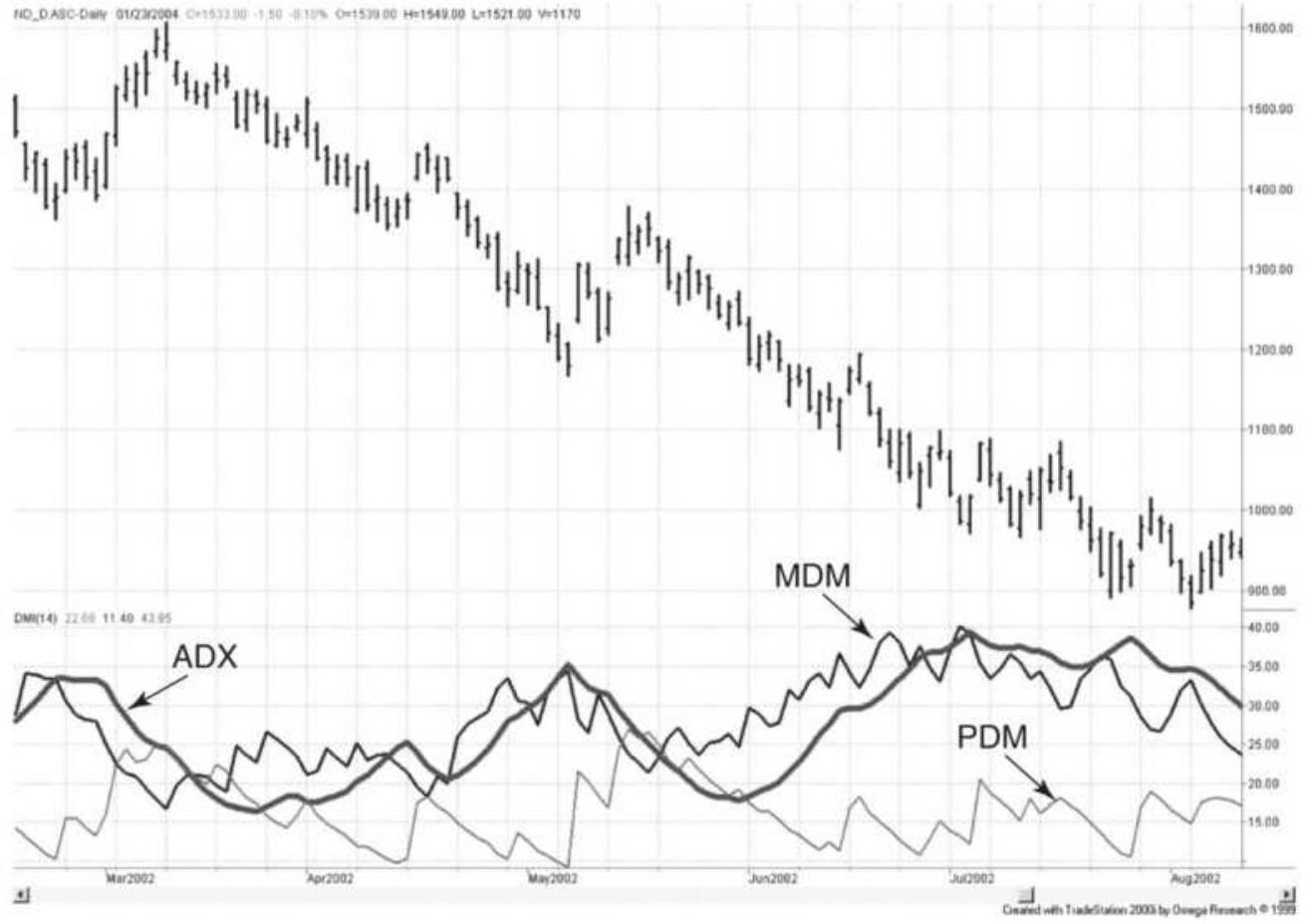

FIGURE 23.15 The 14-day ADX, PDM, and MDM, applied to NASDAQ 100 continuous ...

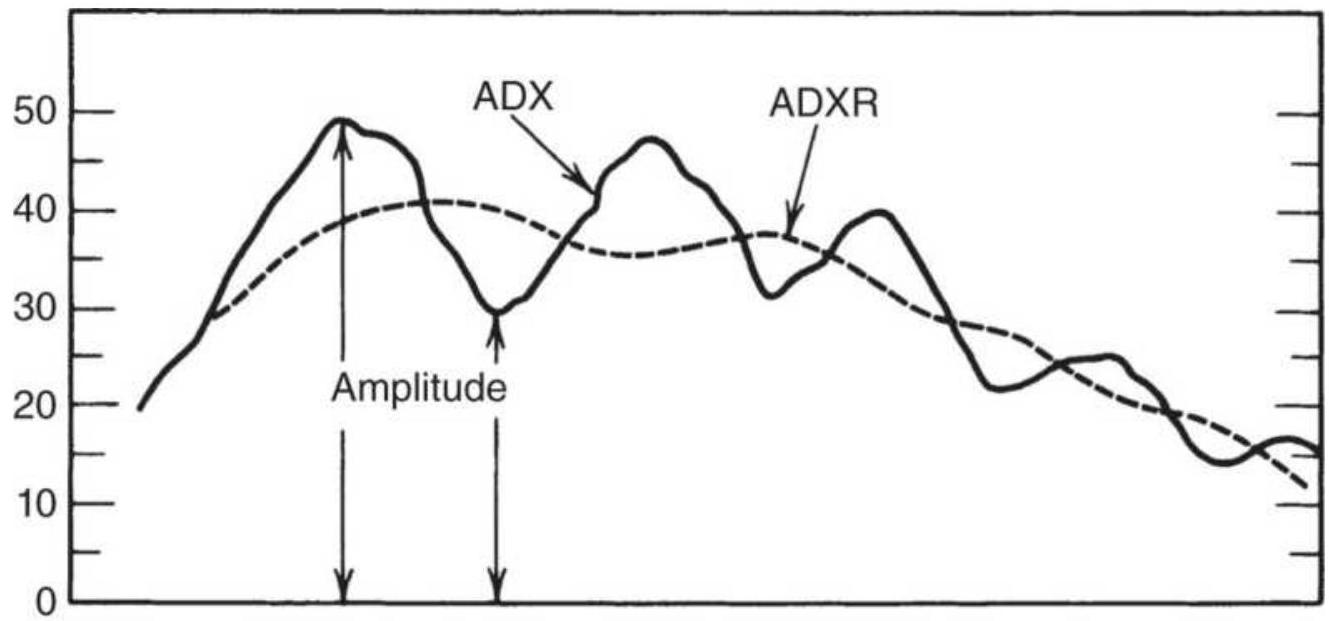

FIGURE 23.16 The ADX and ADXR.

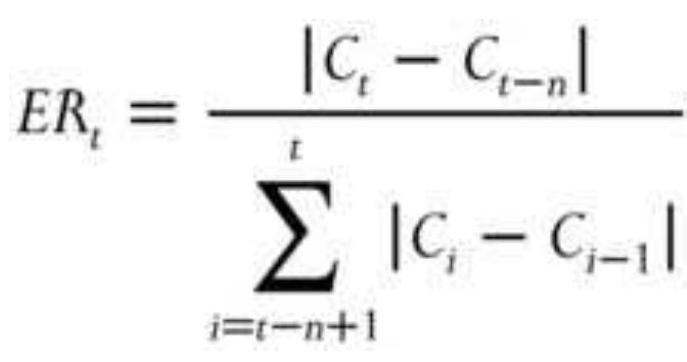

FIGURE 23.17 Scatter diagram of the profit factor versus the efficiency rati...

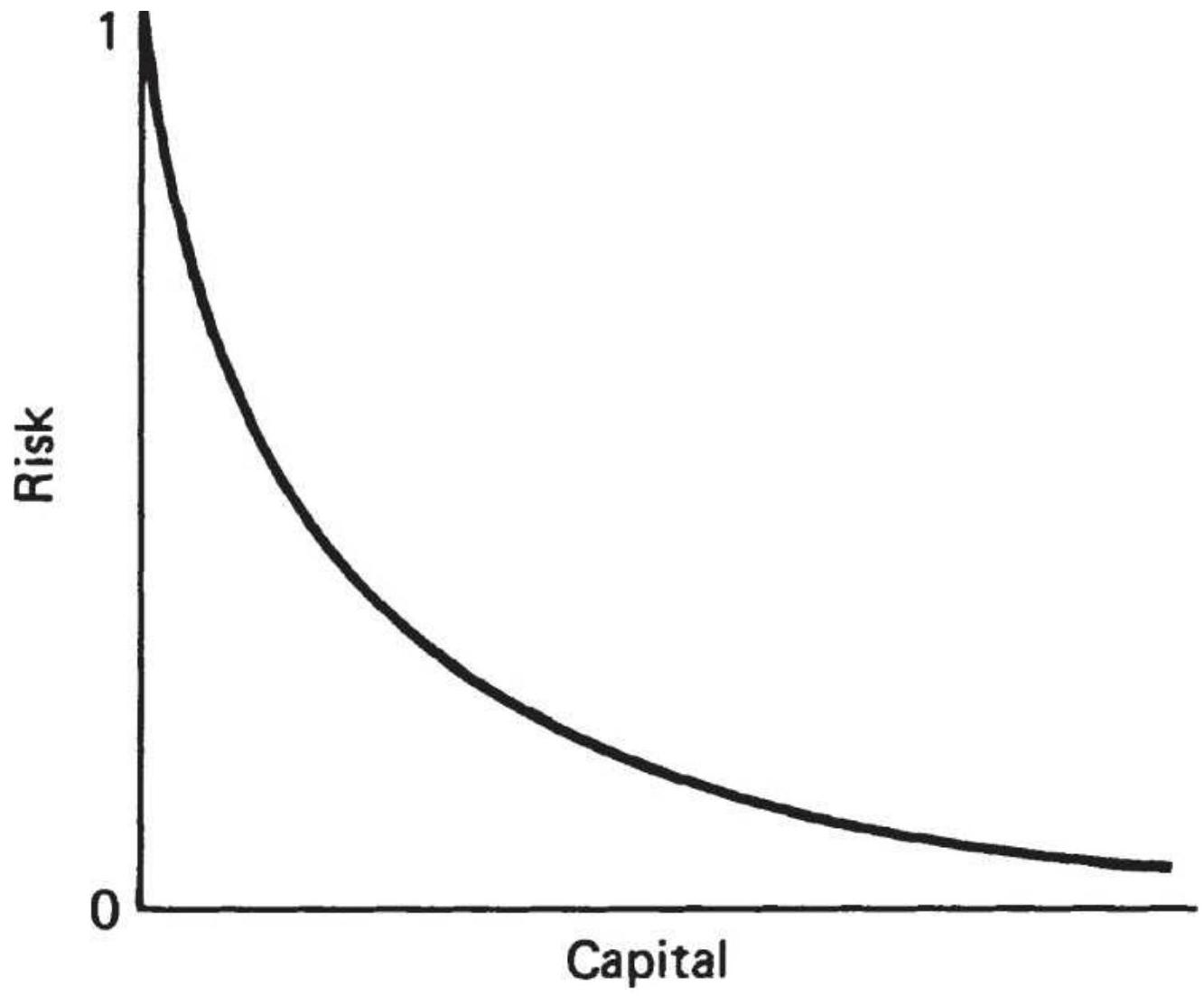

FIGURE 23.18 Risk of ruin based on invested capital.

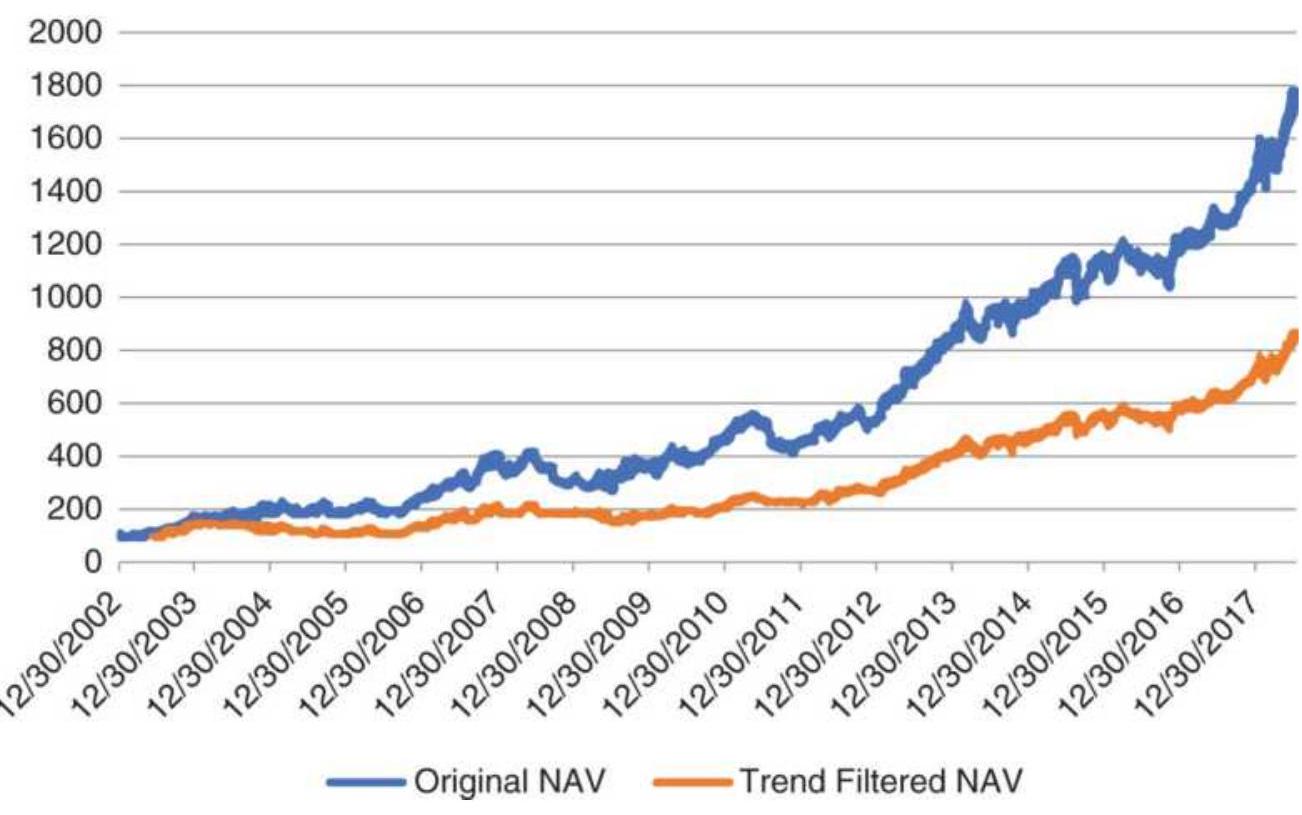

FIGURE 23.19 Original NAVs and filtered NAVs for a macrotrend system using s...

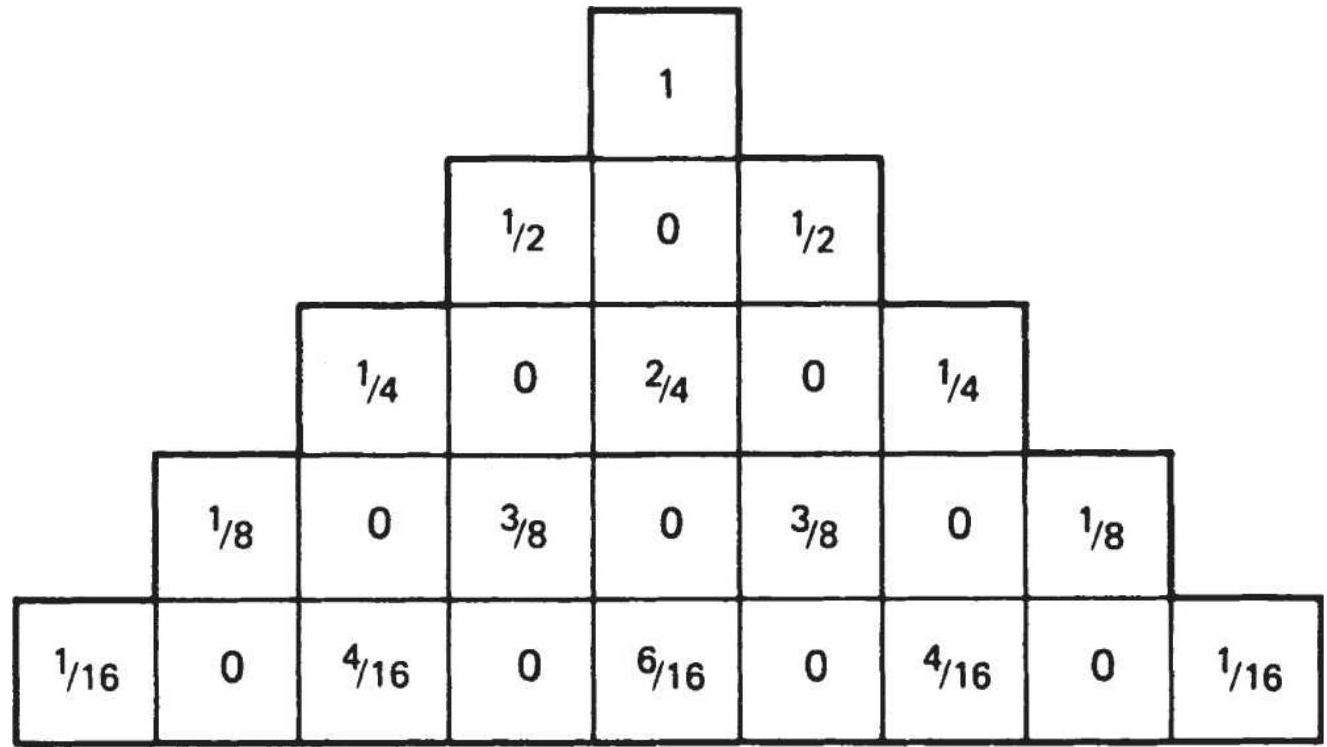

FIGURE 23.20 Pascal's triangle.

Chapter 24

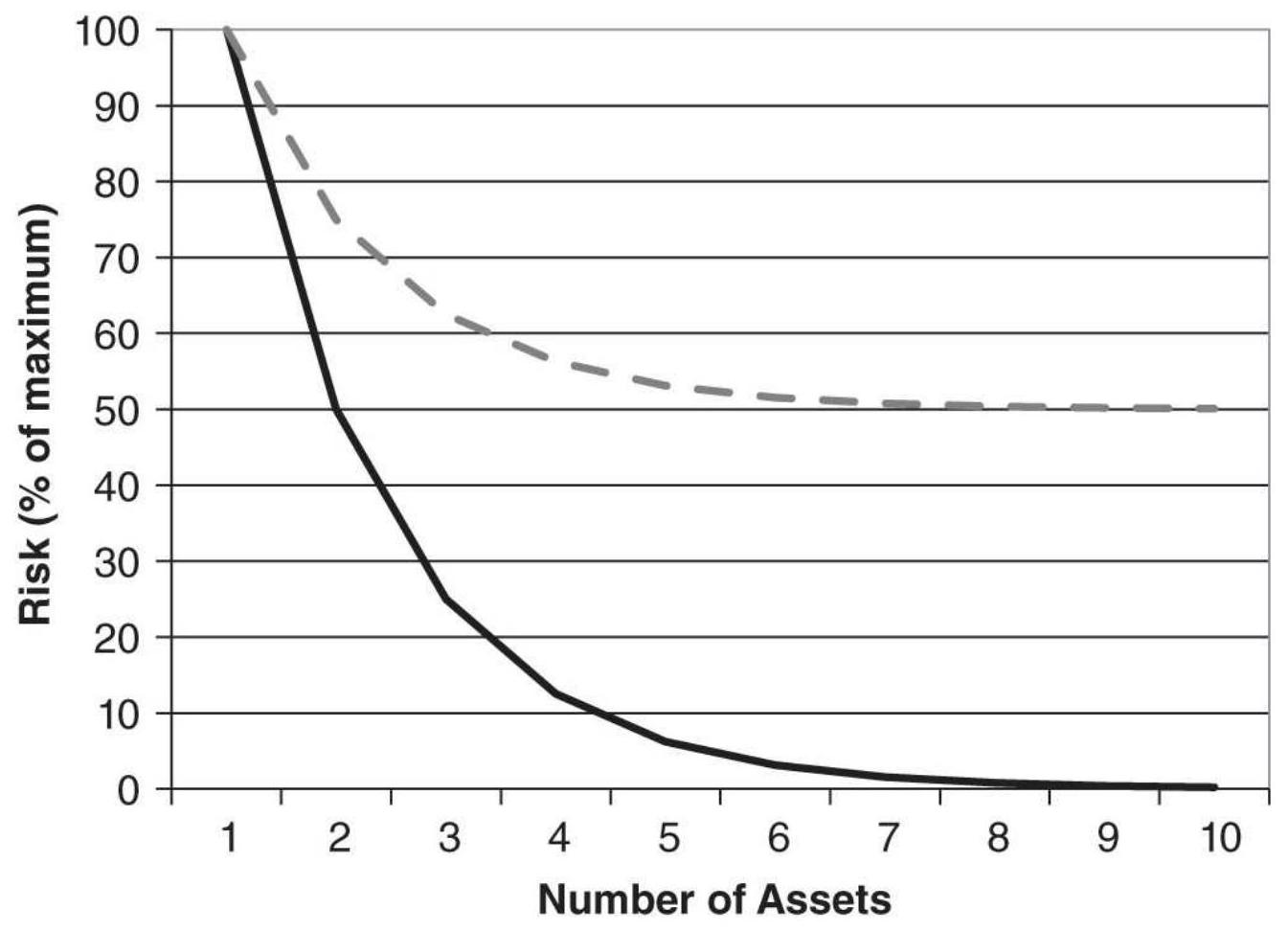

FIGURE 24.1 Effect of diversification on risk.

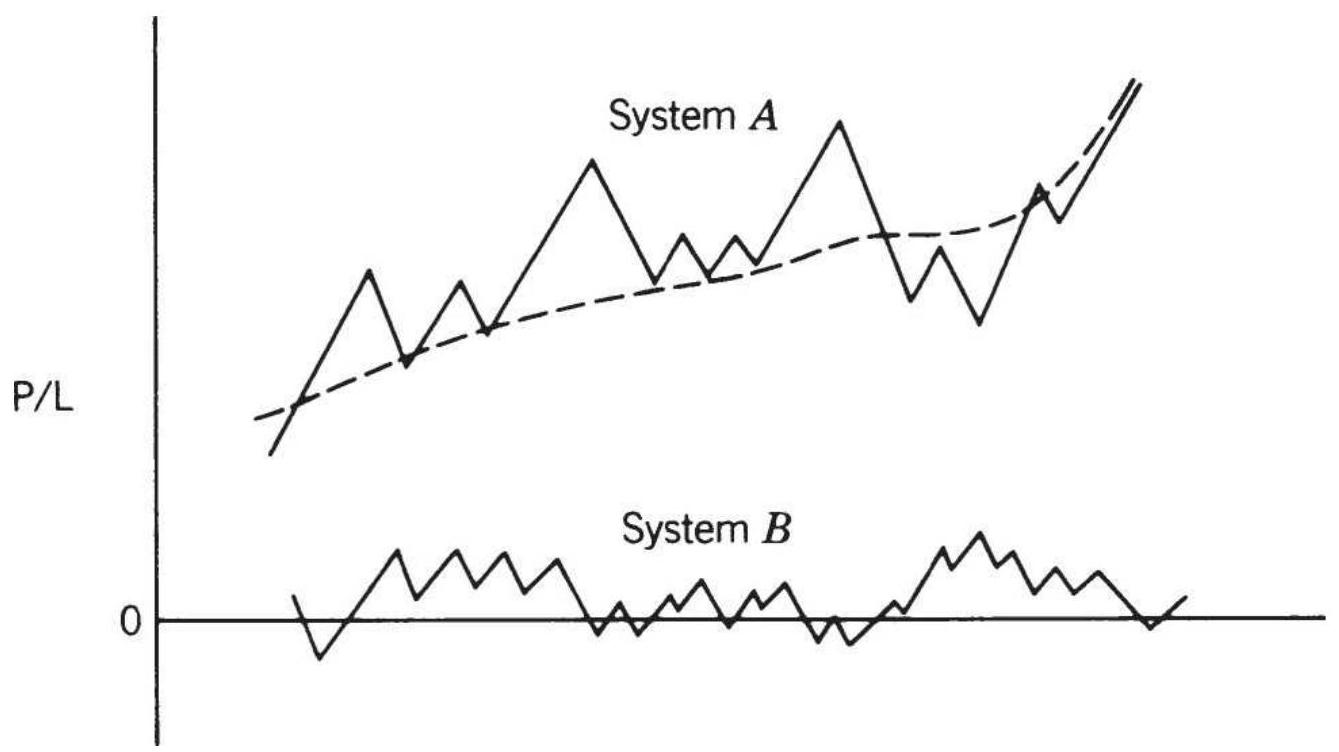

FIGURE 24.2 Improving the return ratio using negatively correlated systems....

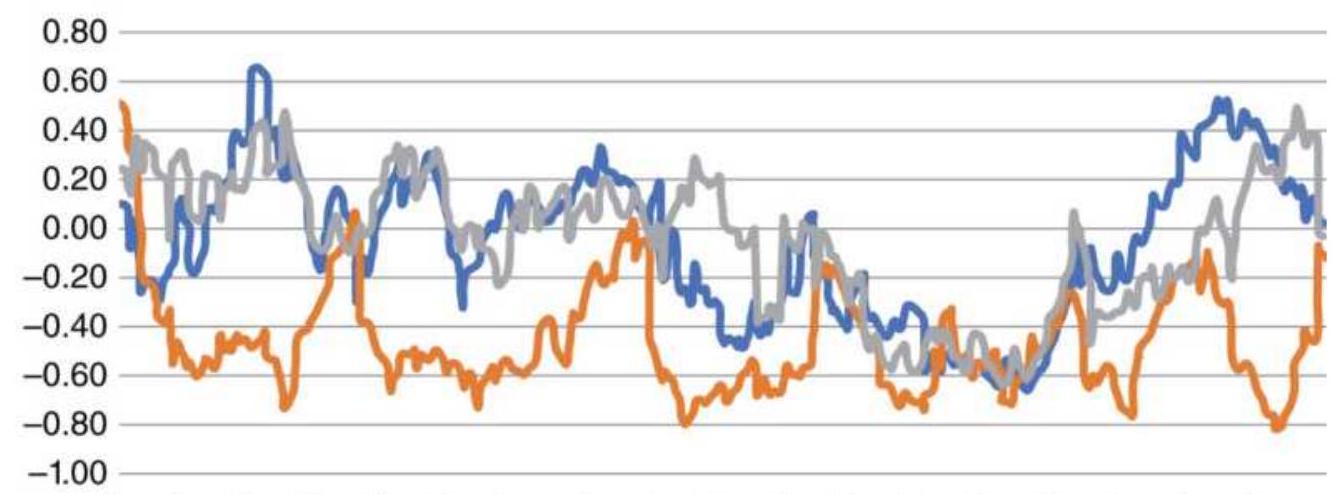

FIGURE 24.3 Rolling correlation of S\&P futures against 30-year bonds, the eu...

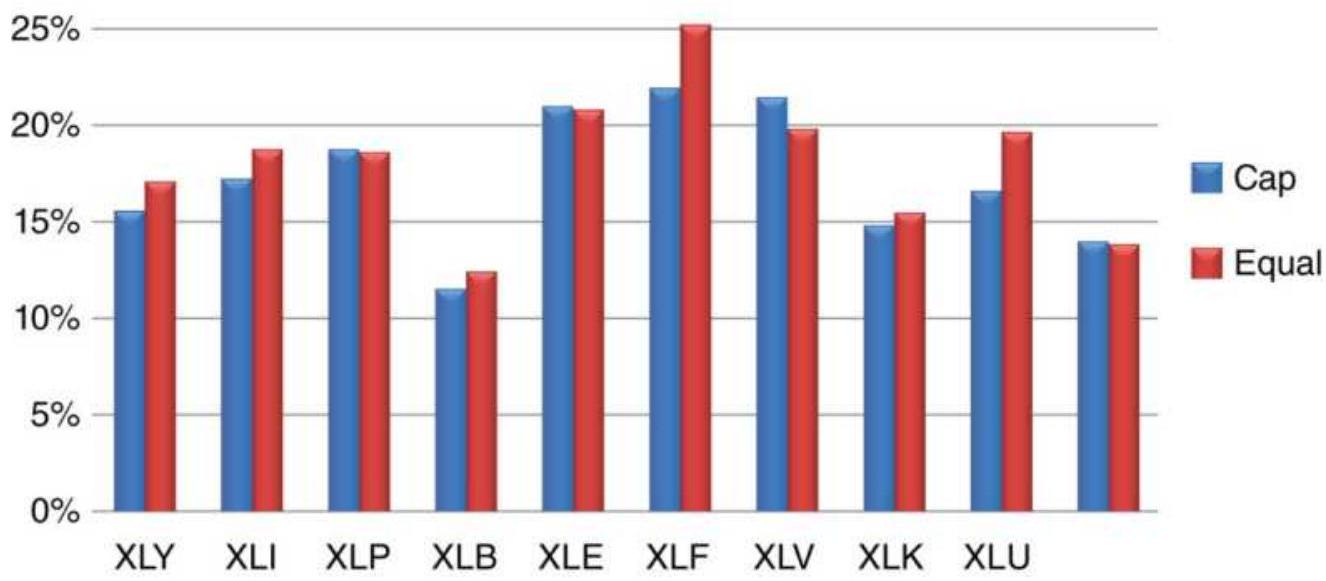

FIGURE 24.4 Returns of cap-weighted and

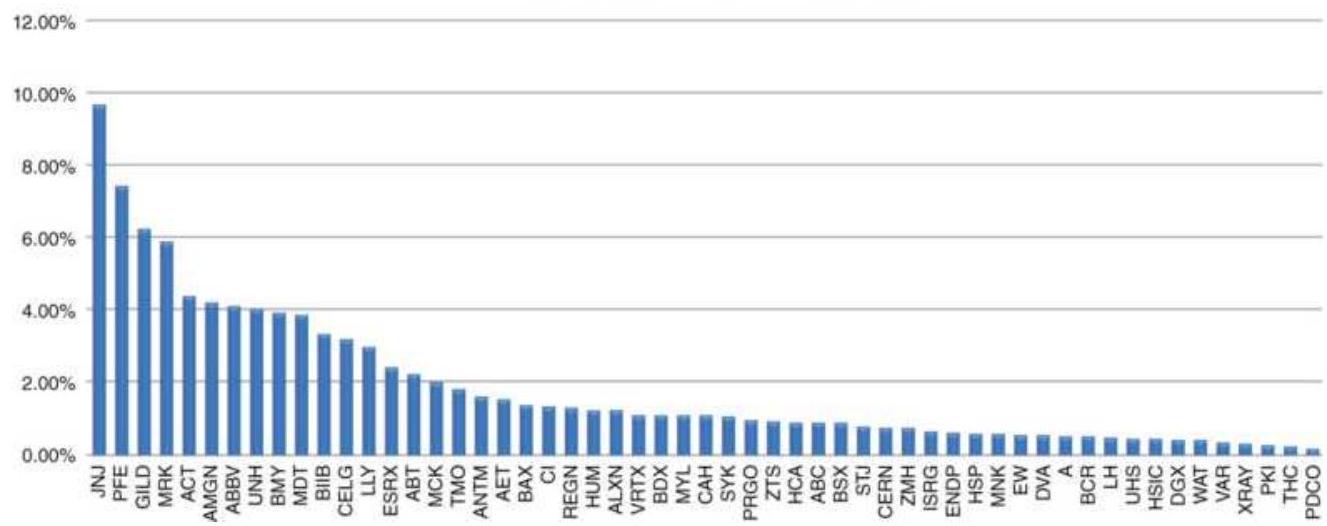

\section*{equally weighted sector ETFs.}

FIGURE 24.5 Weights of XLV components. FIGURE 24.6 Returns of XLV, capitalizationweighted 9 stocks, and equally-we...

FIGURE 24.7 SPY and BND NAVs compared to a portfolio of \(60 \%\) SPY + 40\% BND.

FIGURE 24.8 Prices of the 4 assets used in the Solver solution, converted to...

FIGURE 24.9 Solver results.

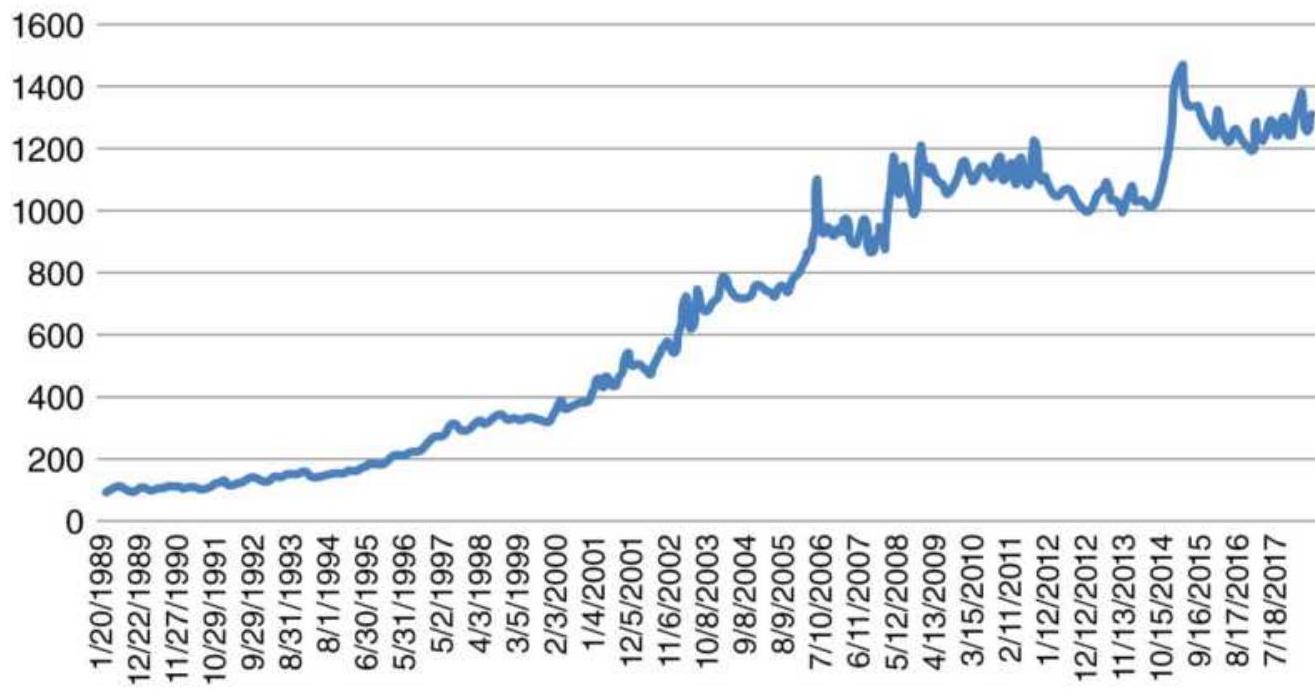

FIGURE 24.10 GASP solution for 16 NASDAQ 100 futures strategies.

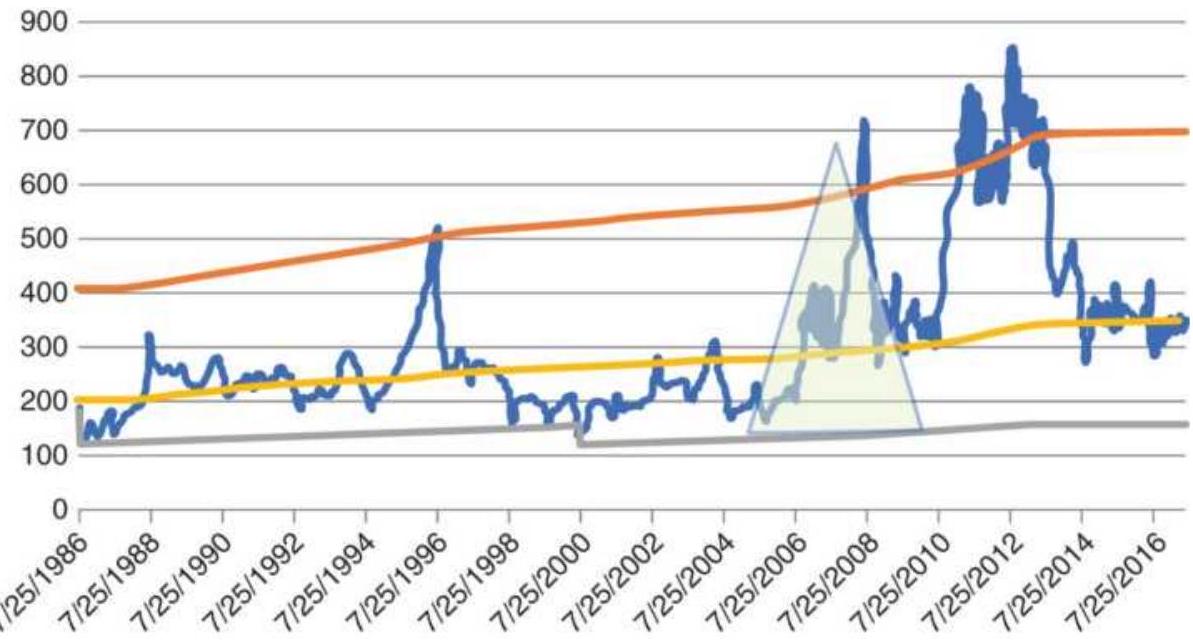

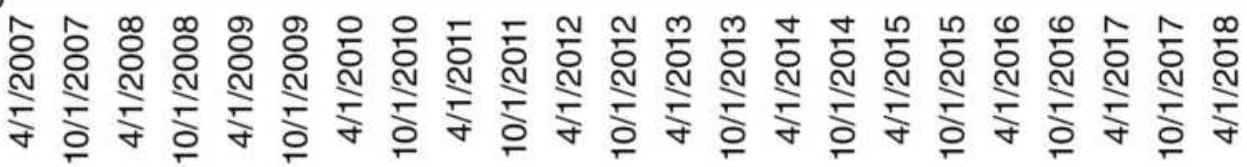

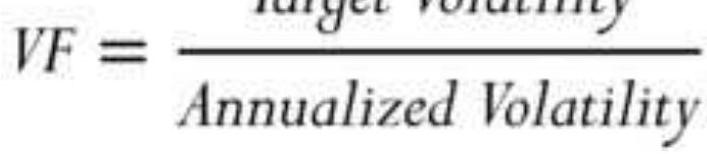

FIGURE 24.11 NAV of macrotrend system with no volatility stabilization.

FIGURE 24.12 NAV of the same macrotrend system with volatility stabilization...

\title{

TRADING SYSTEMS AND METHODS

}

Sixth Edition

\author{

Perry J. Kaufman

}

WILEY

Copyright (C) 2013, 2020 by Perry J. Kaufman. All rights reserved.

Published by John Wiley \& Sons, Inc., Hoboken, New Jersey.

Published simultaneously in Canada.

No part of this publication may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, electronic, mechanical, photocopying, recording, scanning, or otherwise, except as permitted under Section 107 or 108 of the 1976 United States Copyright Act, without either the prior written permission of the Publisher, or authorization through payment of the appropriate per-copy fee to the Copyright Clearance Center, Inc., 222 Rosewood Drive, Danvers, MA 01923, (978) 750-8400, fax (978) 646-8600, or on the Web at www.copyright.com. Requests to the Publisher for permission should be addressed to the Permissions Department, John Wiley \& Sons, Inc., 111 River Street, Hoboken, NJ 07030, (201) \(748-6011\), fax (201) \(748-6008\), or online at www.wiley.com/go/permissions.

Limit of Liability/Disclaimer of Warranty: While the publisher and author have used their best efforts in preparing this book, they make no representations or warranties with respect to the accuracy or completeness of the contents of this book and specifically disclaim any implied warranties of merchantability or fitness for a particular purpose. No warranty may be created or extended by sales representatives or written sales materials. The advice and strategies contained herein may not be suitable for your situation. You should consult with a professional where appropriate. Neither the publisher nor author shall be liable for any loss of profit or any other commercial damages, including but not limited to special, incidental, consequential, or other damages.

For general information on our other products and services or for technical support, please contact our Customer Care Department within the United States at (800) 762-2974,

outside the United States at (317) 572-3993, or fax (317) 5724002.

Wiley publishes in a variety of print and electronic formats and by print-on-demand. Some material included with standard print versions of this book may not be included in e-books or in print-on-demand. If this book refers to media such as a CD or DVD that is not included in the version you purchased, you may download this material at http://booksupport.wiley.com. For more information about Wiley products, visit www.wiley.com.

\section*{Library of Congress Cataloging-in-Publication Data is Available:}

ISBN 9781119605355 (Hardcover)

ISBN 9781119605386 (ePDF)

ISBN 9781119605393 (ePub)

Cover Design: Wiley

Cover Images: (C) Nikada/Getty Images,

(C) KTSDESIGN/SCIENCE PHOTO LIBRARY/Getty Images

Author Photo Credit: AlexZ Photography

To my mother, in her \(100^{\text {th }}\) year

\section*{PREFACE}

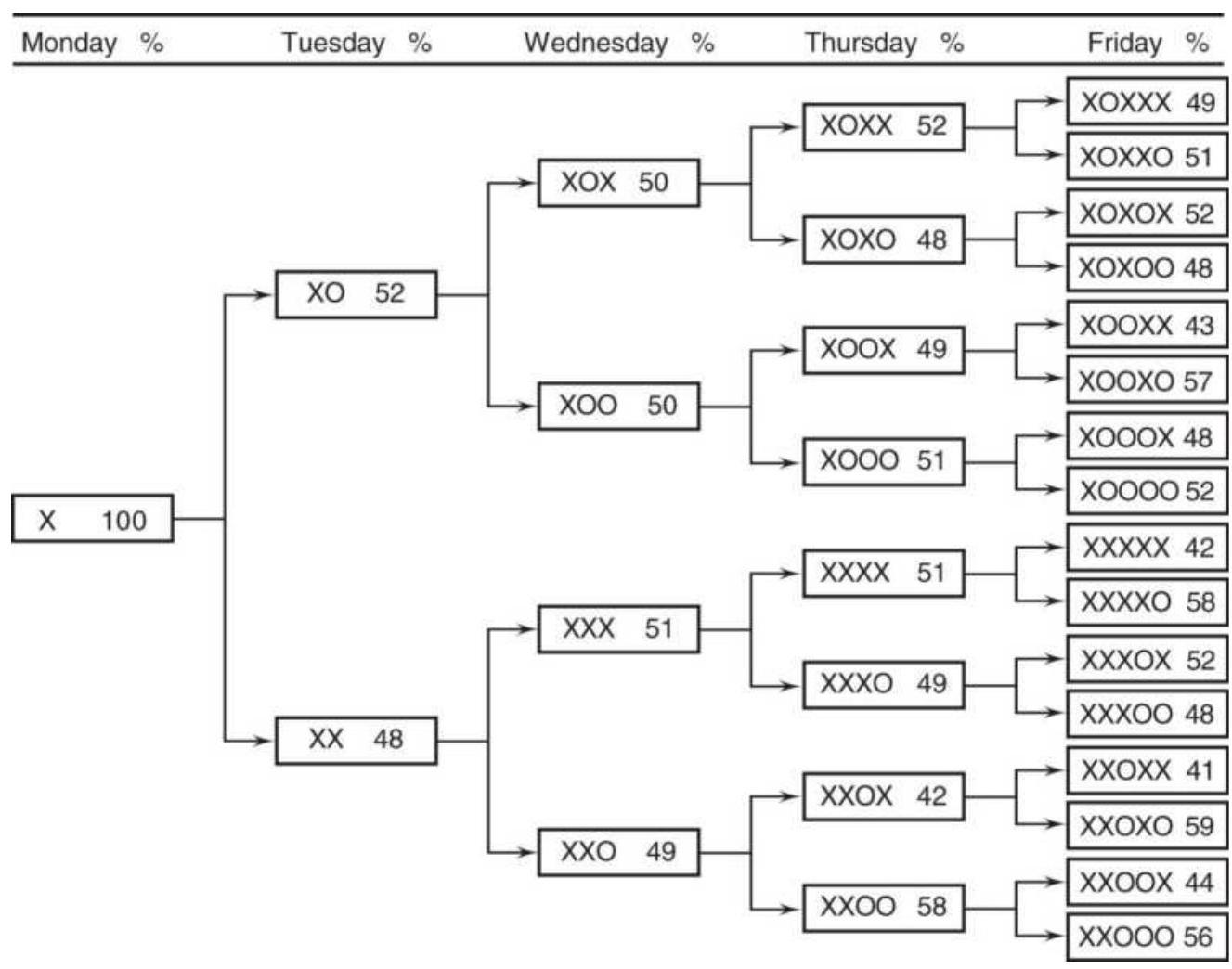

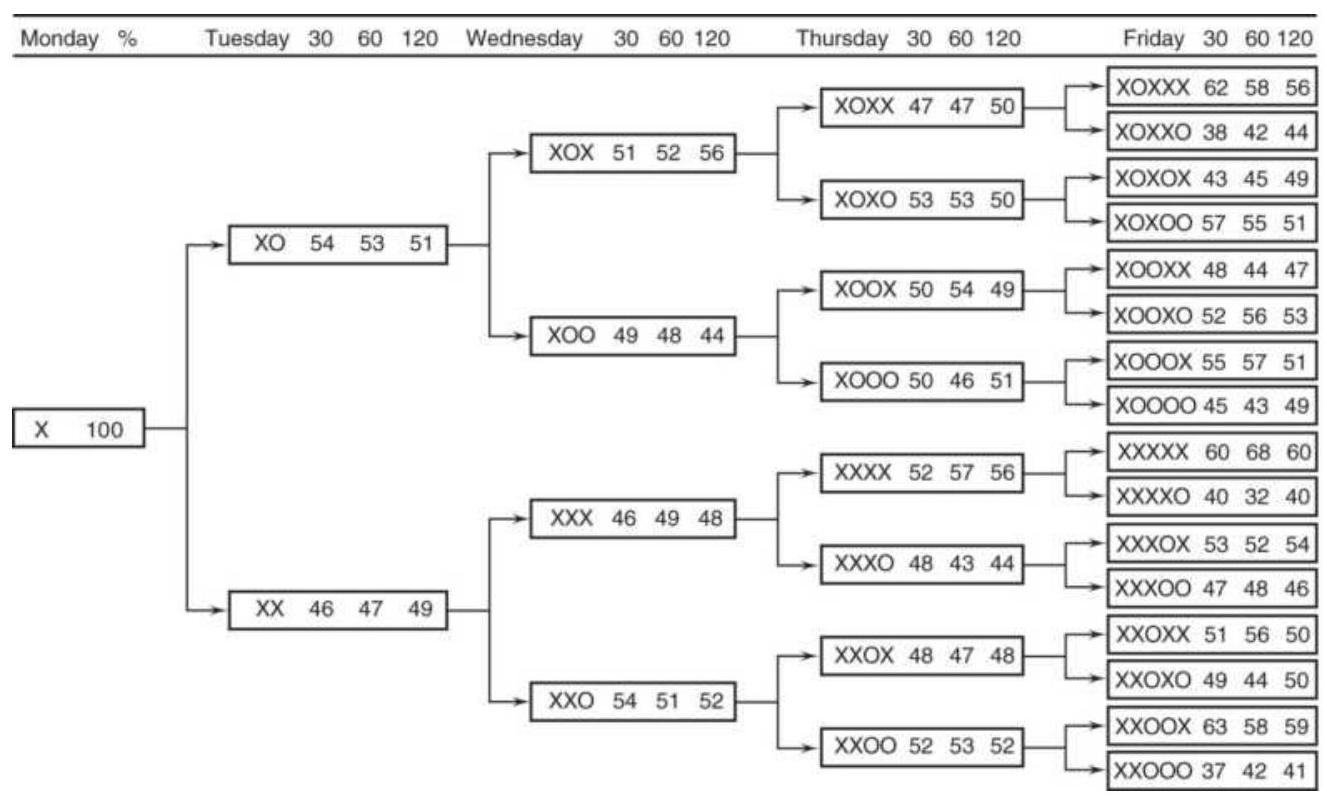

What I've learned by trading and studying the markets for many years is that markets do not repeat themselves. Yes, there are similar moves for different reasons, and seemingly the same reasons cause different moves. Where is the common ground? I believe it is in turning specific patterns into generalized ones. For example, is a weekly pattern where there are four days up and one day down on Tuesday different from four days up and a down day on Friday? It's not different if you see it only as four days up and one day down. Successful strategies move from the specific to the general.

Success in trading is in the ability to see the bigger picture, the shape of the price moves rather than the highly specific pattern. That's why long-term moving averages work. You can mix the prices around and still get the same average. Fine tuning was never a good solution. We always return to the idea "loose pants fit everyone." Because we don't know exactly how a price move will develop, we need to build in the flexibility to stay with your strategy through as many challanging scenarios as possible.

\section*{THE MOVE TOWARD MORE ALGORITHMIC APPROACH}

The algorithmic trader, myself included, is more comfortable having an idea of the risk and reward of a

system, knowing full well that future losses can be greater, but so can future profits. What makes traders nervous is the unknown and unexpected risk. Having any type of loss-limiting method, whether a stop loss or just the change in trend direction, means you have some control over risk. It may not be perfect, but it's much better than watching your equity disappear and having to make a decision under stress. "Better to be out and wish you were in, than in and wish you were out."

Institutions such as Blackrock see algorithmic solutions in a different light. It is said that a year ago they eliminated portfolio stock selection by managers in favor of computerized selection. There are methods, discussed in Chapter 24, that have proved highly successful, and don't require more than a few seconds of compute time (although a substantial database is needed). A computer may not be better than the best trader, but it can compete at a high level.

I know a trading company that is gearing up to provide artificial intelligence support for clients, including portfolio selection and individual trade

recommendations. Their approach also includes training for beginning and intermediate traders. Is this the way knowledge is going to be disseminated in the future? It may be clearer to get an answer from a computer than to ask an "expert." And, if you don't yet understand, you can keep asking and the computer won't become impatient.

\section*{COMPETITION}

Trading has become more competitive. High-frequency trading surged 10 years ago as technology made access faster and easier. Just like program trading, institutions jumped into the space, quickly reducing the chances for making big returns. Many players dropped out, not willing to allocate capital to small returns. The market seems to sort all of it out on its own.

It is the same with the deluge of ETFs. There are multiple ETFs for nearly every aspect of the markets, the S\&P 500, S\&P high dividend stocks, growth stocks, leveraged, and every sector in the S\&P, with inverse ETFs for each one, midcaps, small caps, no caps. Again, the market sorts them out. Simply look at the volume to know which will survive.

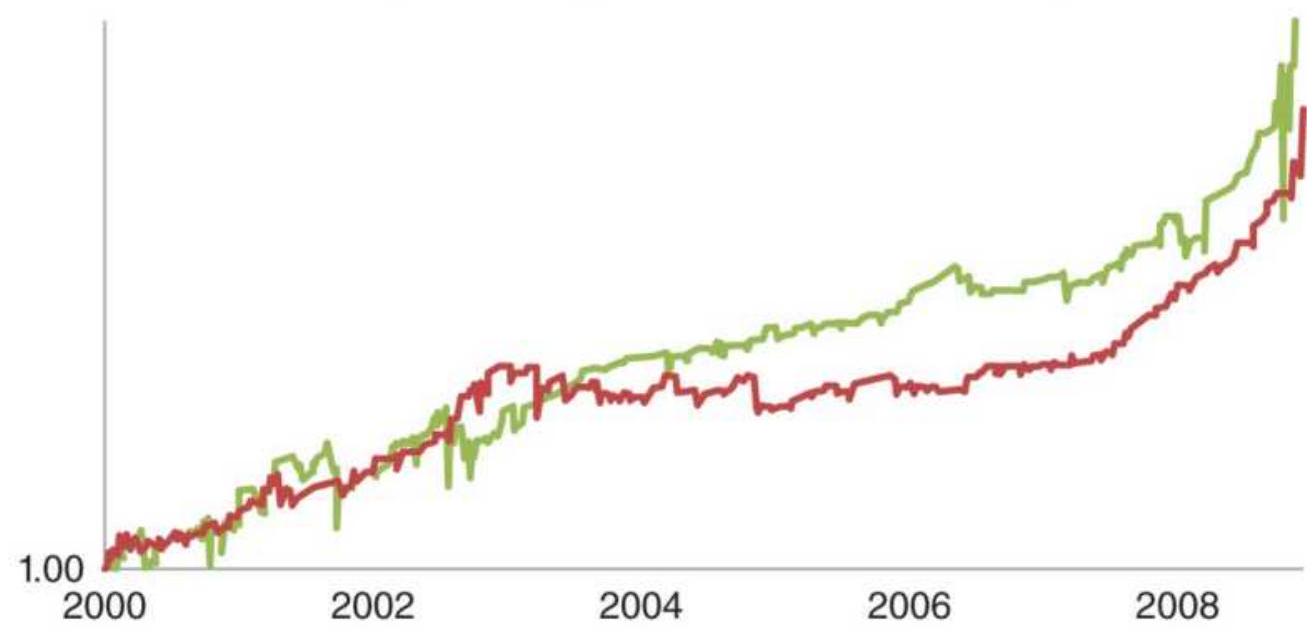

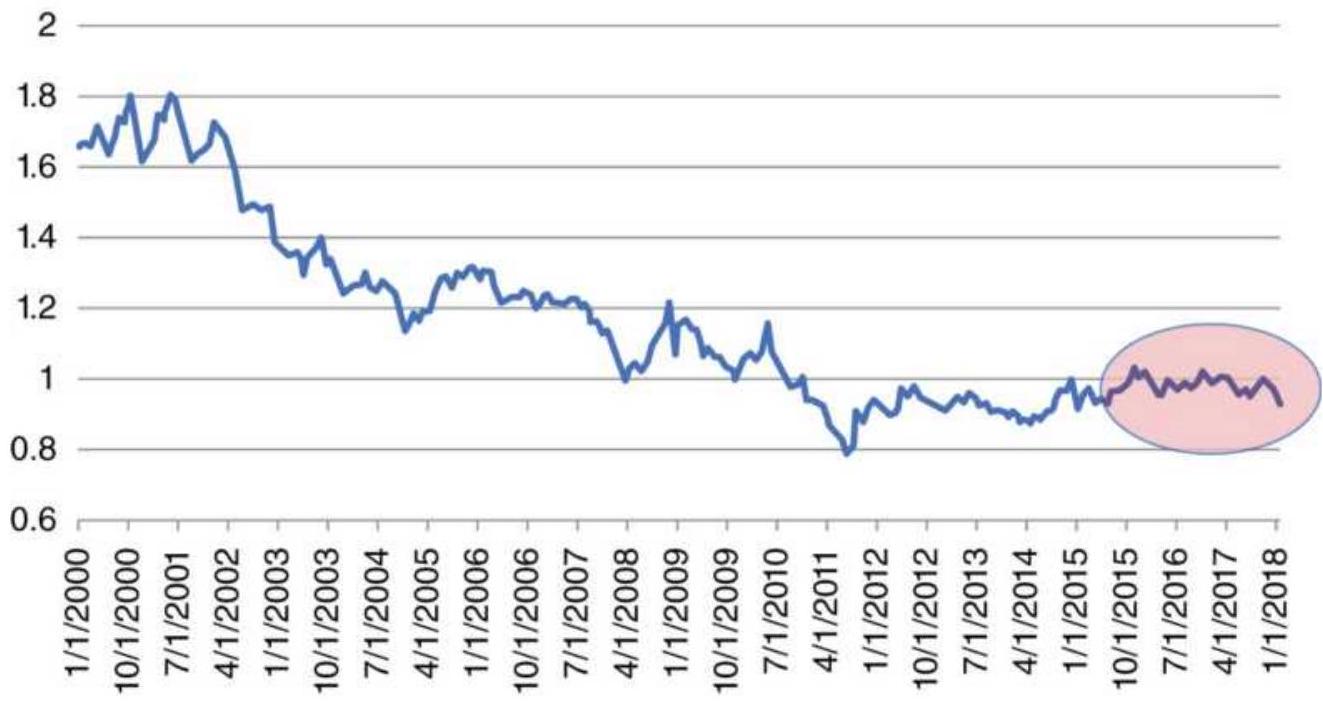

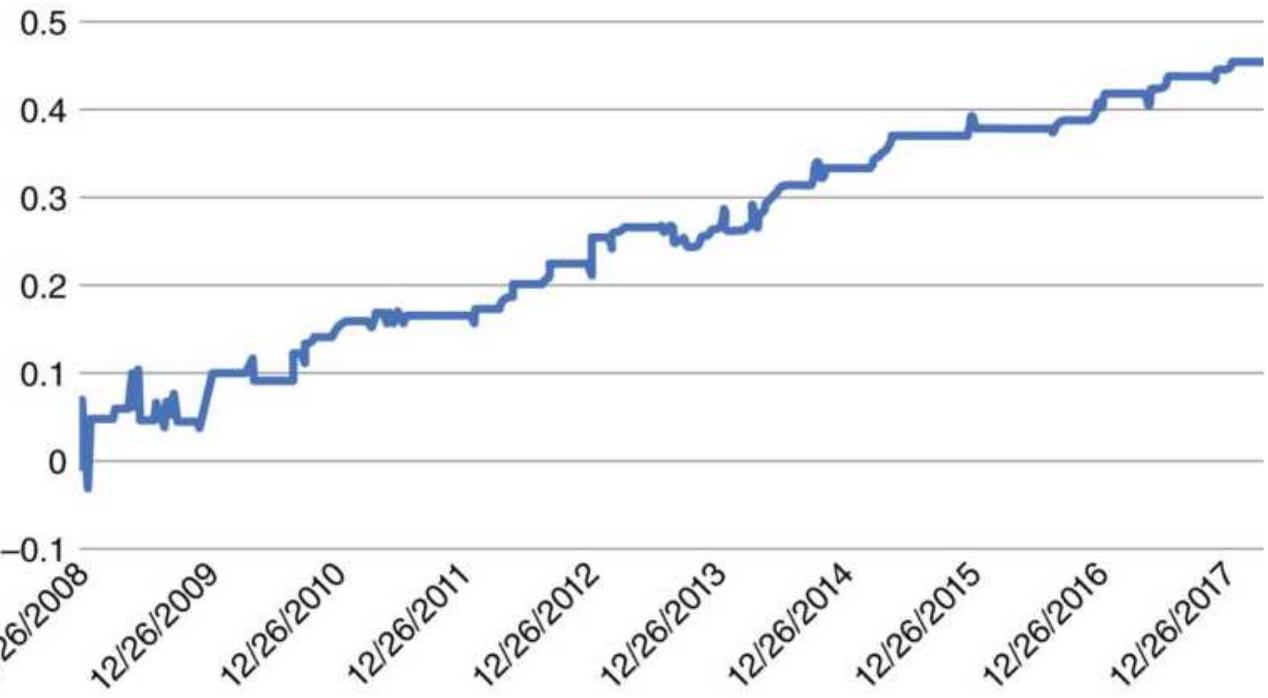

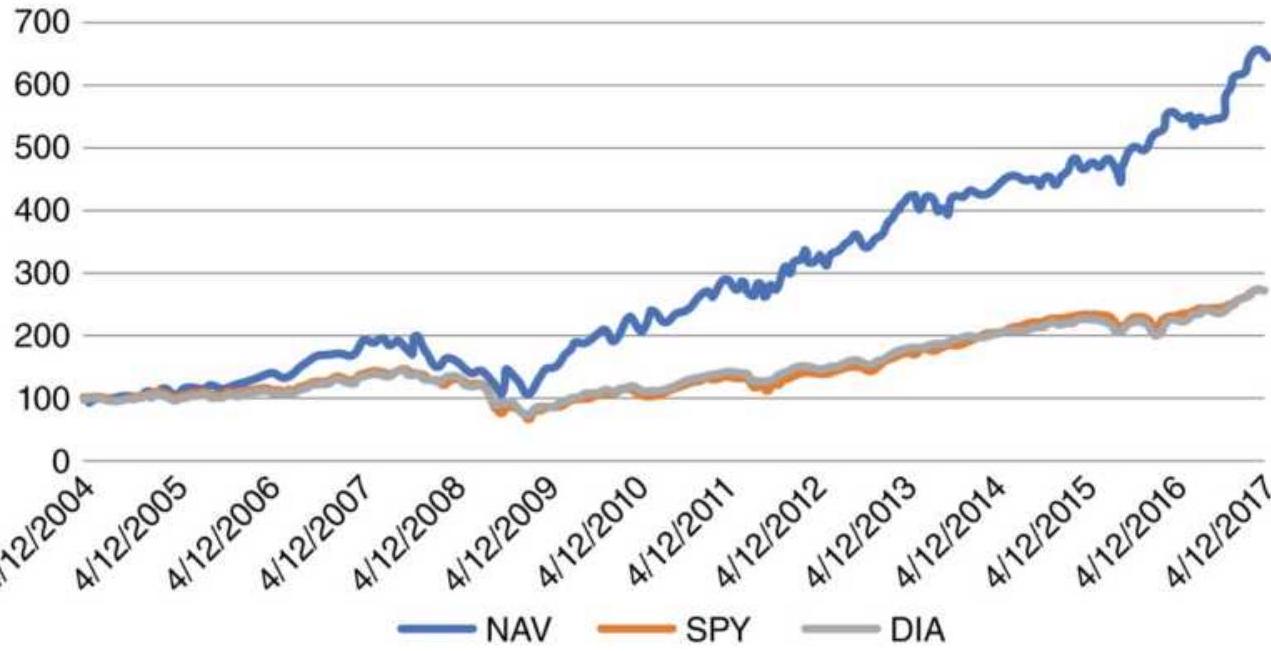

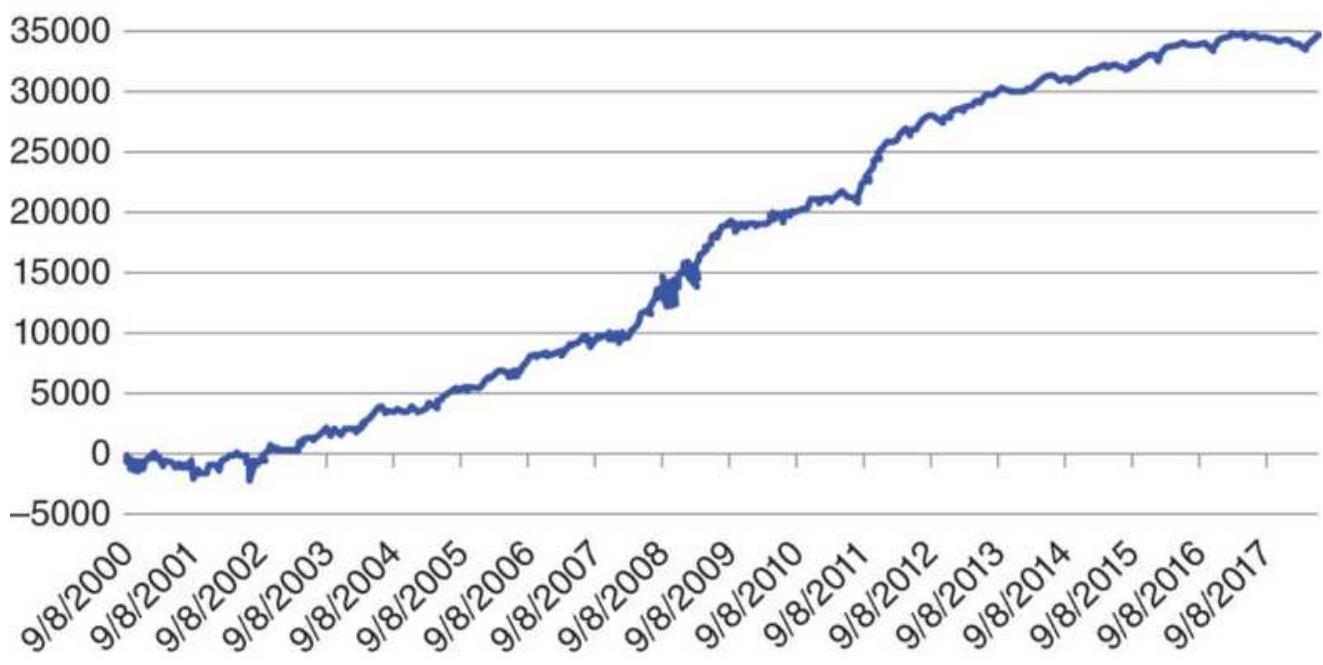

What about the trend follower? Can he or she survive? Because major trends are based on fundamentals, usually interest rate policy, growth, or trade, they continue to drive prices with persistence, sometimes lasting for six months, sometimes for six years, and in the case of U.S. interest rates, for most of 35 years. We can't capture all of that move, and there are some volatile periods along the way, but a macrotrend trader will capture enough to be rewarded.

\section*{ACCEPTING RISK}

One of the most important lessons that I've learned is to accept risk. No matter how we engineer a trading system, adding stops and profit-taking, leveraging up and down, and hedging when necessary, it's not possible to remove the risk. If you think you've eliminated it in one place, it

will pop up somewhere else. If you limit each trade to only a small loss, a series of losses will still add to a large loss.

The way to survive is first to understand the risk profile of your method. Then capitalize it so that you won't panic and do something irrational, such as sell out at the lows. As you accumulate profits, you can increase your investment without risking your initial capital. Think of it as a long-term partnership with the market.

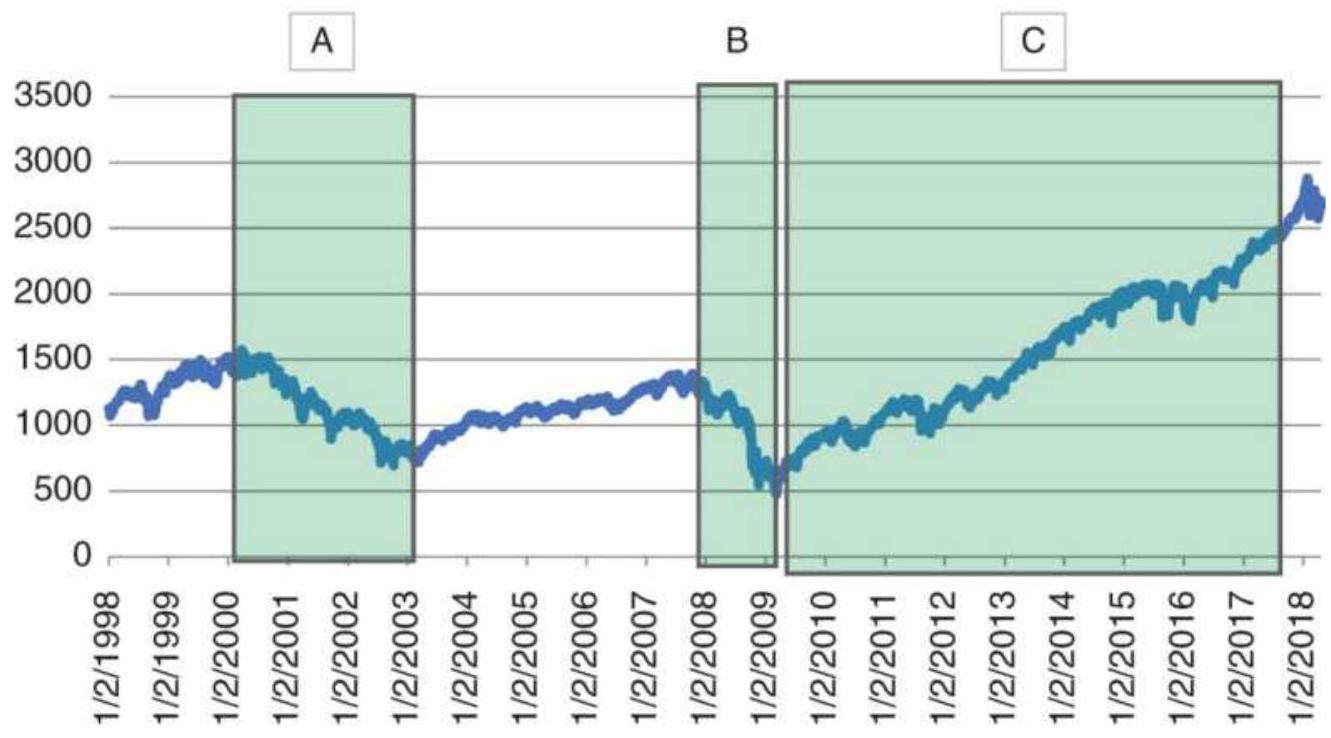

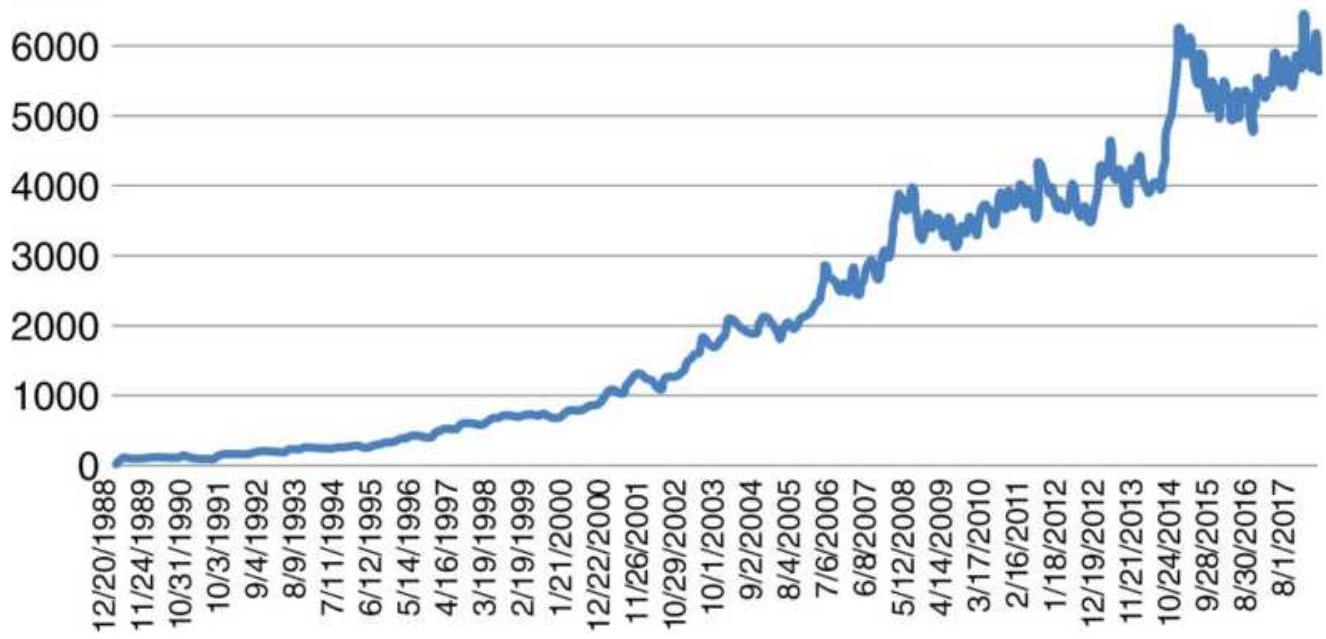

\section*{THE LONG BULL MARKET}

Following the 2008 financial crisis, the U.S. experienced one of the longest bull markets in its history. During these unusual periods, traders try to adjust to low volatility and small drawdowns. Buying any pullback is profitable. But all bull markets end, just as the Internet bubble ended in 2000. They don't all "burst," but they become far more volatile as they revert to their longterm pattern.

Taking advantage of an unusual pattern can be profitable but should only be done with a small part of your investment. The next pattern is not likely to last as long as the 8 -year bull market. Watching the way prices move can lead to changes in the way you enter orders. For example, during past few years, stocks that gap much higher on earnings reports tend to close even higher. Stocks that gap much lower tend to close near or above their open. Observations can be turned into profits. There is no substitute for watching price movement.

\section*{WHAT'S NEW IN THE SIXTH EDITION}

Besides updating many of the charts and examples, some of the chapters have been largely rewritten to make them clearer and better organized. Unnecessary detail has been removed to make room for more new material, such as artificial intelligence and game theory. More professional techniques have been added, including volatility stabilization and risk management. There are new systems and techniques, most of which have been programmed and can be found on the Companion Website. Large tables have been removed in favor of putting them online. Many of the tables now appear in Excel format, which I find easier to read. Some of the math has been removed and replaced by Excel functions and other software apps.

I recognize that a large part of the readership in now outside the United States. Some of the new examples use Asian markets. Many of the more technical words familiar to U.S. readers have been replaced by more general explanations. I'm sure that readers in all countries will find this an improvement.

\section*{COMPANION WEBSITE}

The Companion Website is an important part of this book. You will find hundreds of TradeStation programs and Excel spreadsheets, and some MetaStock programs, that allow you to test many of the strategies

with your own parameters. Look for the "e" in the margin to indicate a Website program. There is no substitute for trying it yourself, then modifying the code to reflect your own ideas.

In addition, the Appendices in the previous edition, and the Bibliography, have been moved to the Companion Website to make room for new material.

\section*{WITH APPRECIATION}

This book draws on the hard work and creativity of hundreds of traders, financial specialists, engineers, and many others who are passionate about the markets. They continue to redefine the state of the art and provide all of us with profitable techniques and valuable tools.

The team at John Wiley have provided a high professional level of support for my work over the past 40 years. It is not possible to name all of those who have helped, from Stephen Kippur to Pamela van Giessen, and now Bill Falloon and Michael Henton. I truly appreciate their efforts.

As a final note, I would like to thank all the previous readers who have asked questions that have led to clearer explanations. They are the ones who find typographical errors and omissions. They have all been corrected, making this edition that much better.

Wishing you success,

Freeport, Grand Bahama

December 2019

\section*{CHAPTER 1}

\section*{Introduction}

It is not the strongest of the species that survive, nor the most intelligent, but the ones most responsive to change.

-Charles Darwin

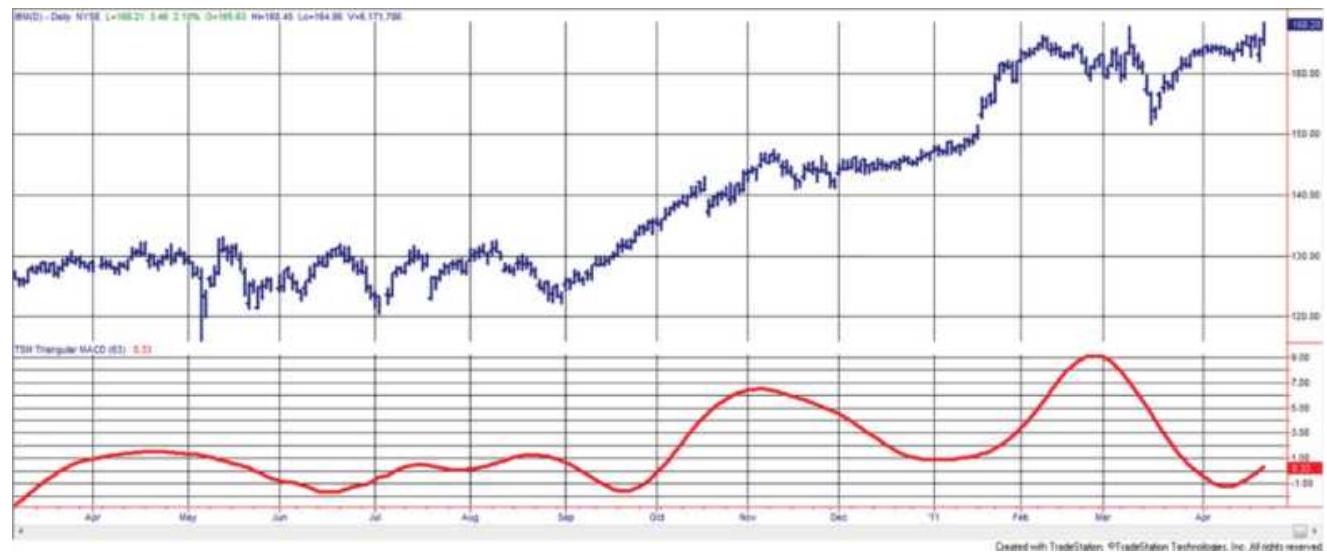

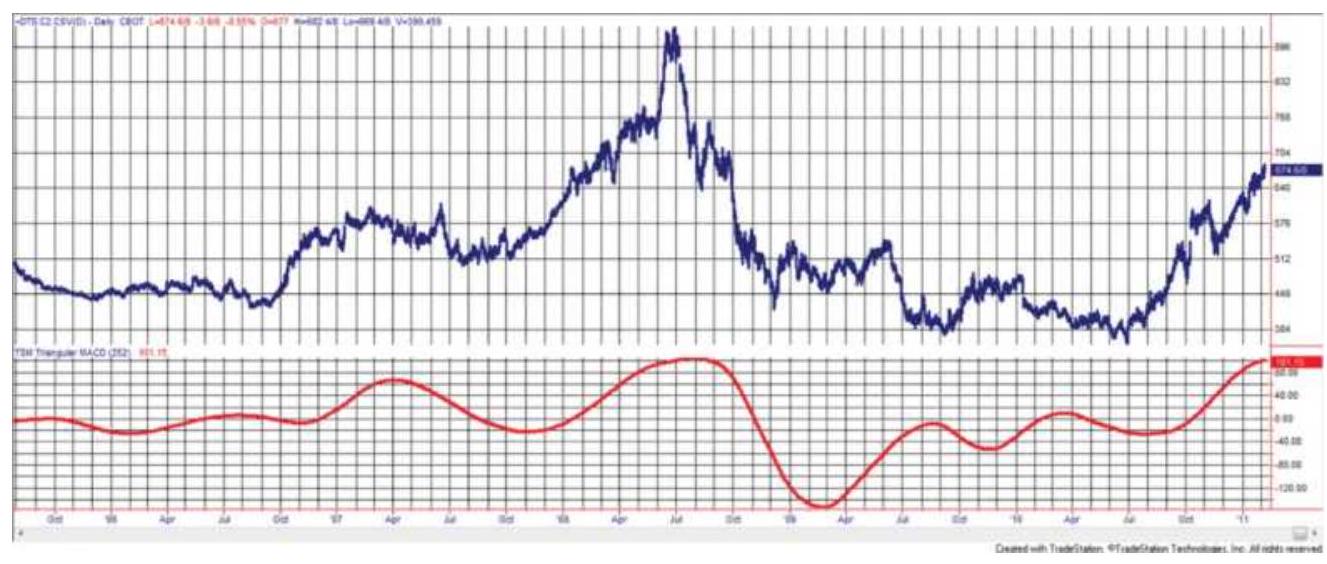

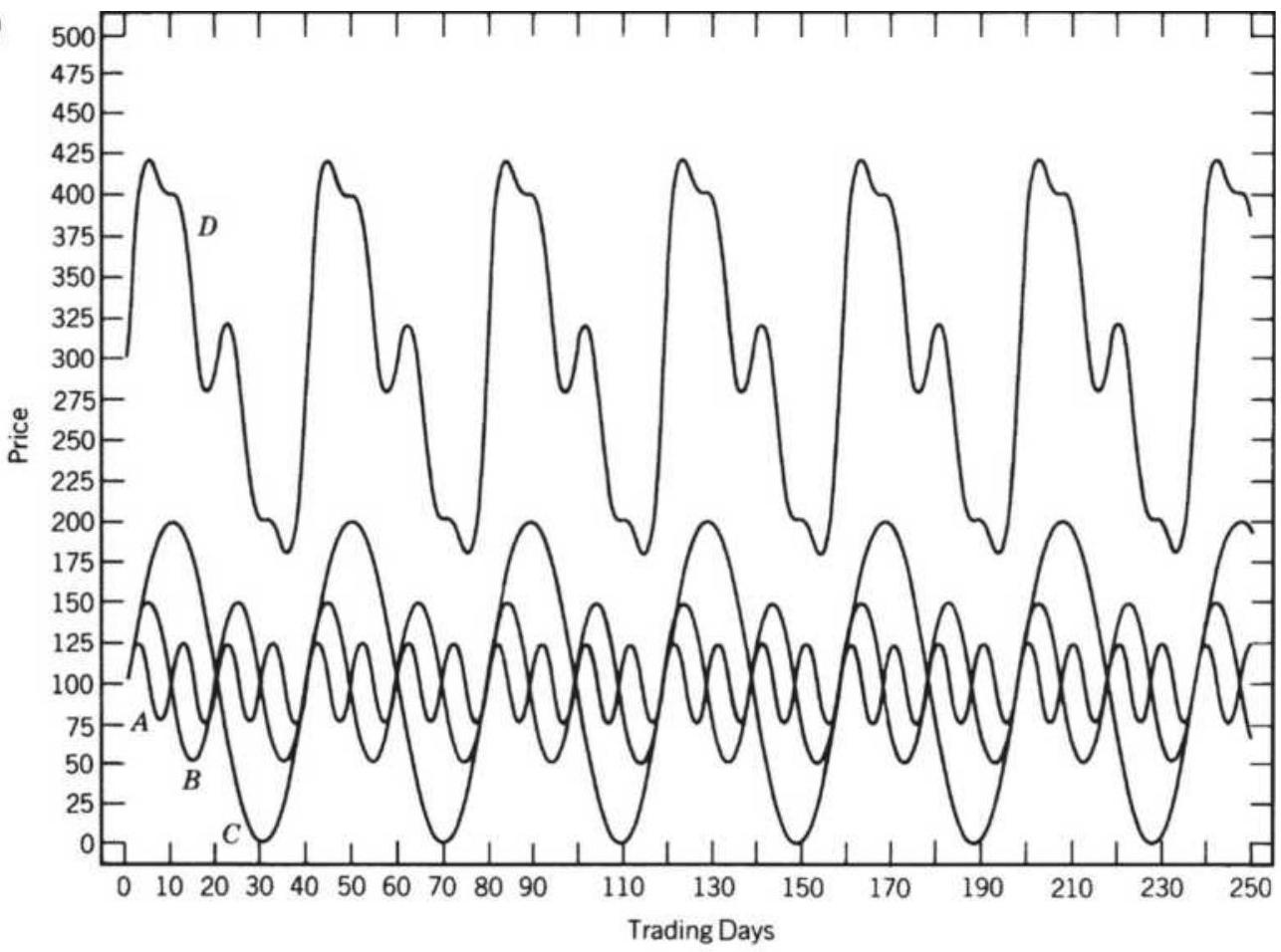

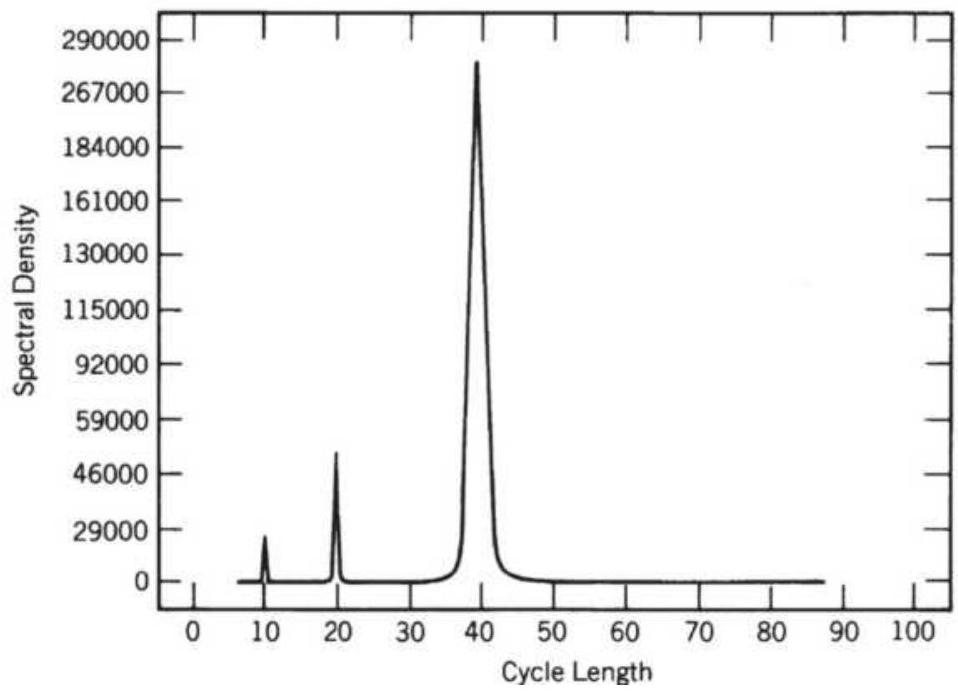

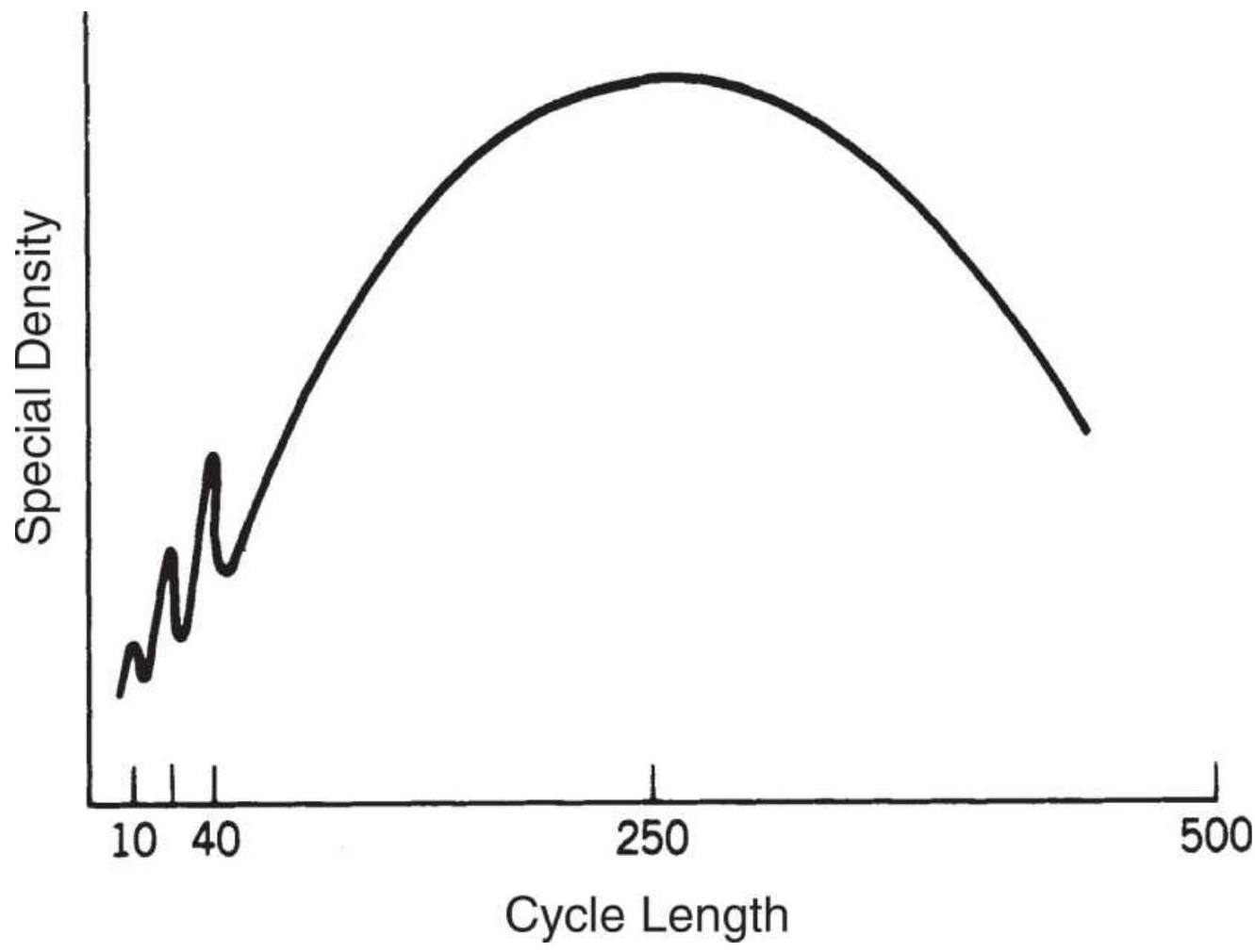

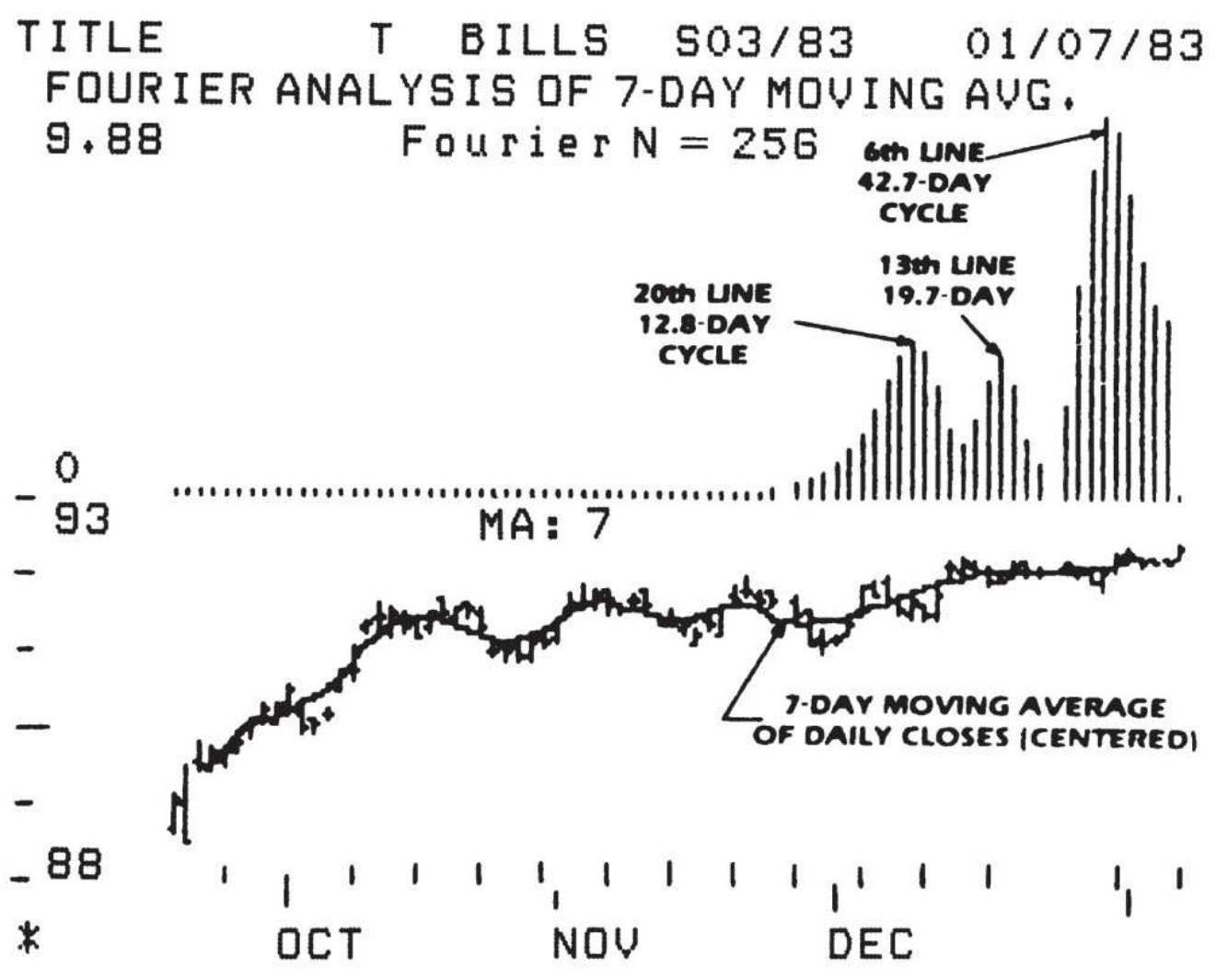

Let's start by defining the term technical analysis. Technical analysis is the systematic evaluation of price, volume, breadth, and open interest, for the purpose of price forecasting. A systematic approach may simply use a bar chart and a ruler, or it may use all the computing power available. Technical analysis may include any quantitative method as well as all forms of pattern recognition. Its objective is to decide, in advance, based on a set of clear and complete rules, where prices will go over some future period, whether 1 hour, 1 day, or 5 years.