Introduction to ResNets ResNet 简介

“我们需要更深入”Meme,当网络深度超过一定阈值时,经典 CNN 的表现就不佳。 ResNet 允许训练更深的网络。

This Article is Based on Deep Residual Learning for Image Recognition from He et al. [2] (Microsoft Research): https://arxiv.org/pdf/1512.03385.pdf

本文基于 He 等人的图像识别深度残差学习。 [2](微软研究院): https://arxiv.org/pdf/1512.03385.pdf

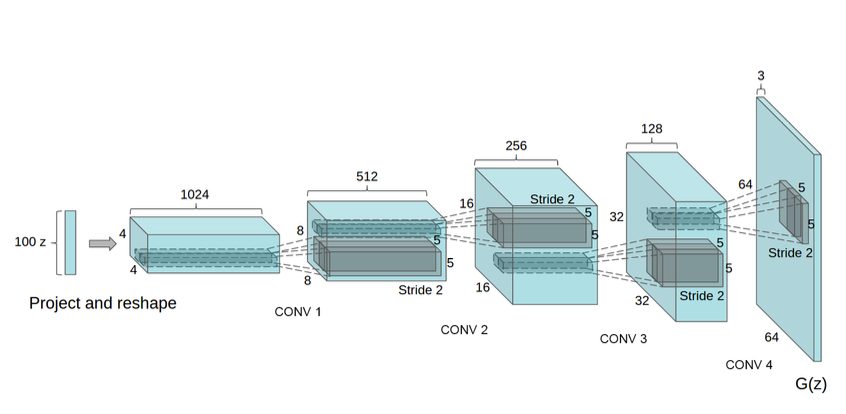

In 2012, Krizhevsky et al. [1] rolled out the red carpet for the Deep Convolutional Neural Network. This was the first time this architecture was more successful that traditional, hand-crafted feature learning on the ImageNet. Their DCNN, named AlexNet, contained 8 neural network layers, 5 convolutional and 3 fully-connected. This laid the foundational for the traditional CNN, a convolutional layer followed by an activation function followed by a max pooling operation, (sometimes the pooling operation is omitted to preserve the spatial resolution of the image).

2012 年,克里热夫斯基等人。 [1] 为深度卷积神经网络铺上了红地毯。这是该架构第一次比 ImageNet 上传统的手工特征学习更成功。他们的 DCNN 名为 AlexNet,包含 8 个神经网络层、5 个卷积层和 3 个全连接层。这为传统 CNN 奠定了基础,即卷积层、激活函数、最大池化操作(有时会省略池化操作以保留图像的空间分辨率)。

Much of the success of Deep Neural Networks has been accredited to these additional layers. The intuition behind their function is that these layers progressively learn more complex features. The first layer learns edges, the second layer learns shapes, the third layer learns objects, the fourth layer learns eyes, and so on. Despite the popular meme shared in AI communities from the Inception movie stating that “We need to go Deeper”, He et al. [2] empirically show that there is a maximum threshold for depth with the traditional CNN model.

深度神经网络的成功很大程度上归功于这些附加层。它们功能背后的直觉是这些层逐渐学习更复杂的特征。第一层学习边缘,第二层学习形状,第三层学习物体,第四层学习眼睛,依此类推。尽管《盗梦空间》电影中人工智能社区中流行的梗是“我们需要更深入”,但 He 等人。 [2] 经验表明,传统的 CNN 模型存在深度的最大阈值。

He et al. [2] plot the training and test error of a 20-layer CNN versus a 56-layer CNN. This plot defies our belief that adding more layers would create a more complex function, thus the failure would be attributed to overfitting. If this was the case, additional regularization parameters and algorithms such as dropout or L2-norms would be a successful approach for fixing these networks. However, the plot shows that the training error of the 56-layer network is higher than the 20-layer network highlighting a different phenomenon explaining it’s failure.

他等人。 [2] 绘制了 20 层 CNN 与 56 层 CNN 的训练和测试误差。该图违背了我们的信念,即添加更多层会创建更复杂的函数,因此失败将归因于过度拟合。如果是这种情况,额外的正则化参数和算法(例如 dropout 或 L2-norms)将是修复这些网络的成功方法。然而,该图显示 56 层网络的训练误差高于 20 层网络,突出显示了解释其失败的不同现象。

Evidence shows that the best ImageNet models using convolutional and fully-connected layers typically contain between 16 and 30 layers.

有证据表明,使用卷积层和全连接层的最佳 ImageNet 模型通常包含 16 到 30 层。

The failure of the 56-layer CNN could be blamed on the optimization function, initialization of the network, or the famous vanishing/exploding gradient problem. Vanishing gradients are especially easy to blame for this, however, the authors argue that the use of Batch Normalization ensures that the gradients have healthy norms. Amongst the many theories explaining why Deeper Networks fail to perform better than their Shallow counterparts, it is sometimes better to look for empirical results for explanation and work backwards from there. The problem of training very deep networks has been alleviated with the introduction of a new neural network layer — The Residual Block.

56 层 CNN 的失败可以归咎于优化函数、网络初始化或著名的梯度消失/爆炸问题。梯度消失特别容易造成这种情况,然而,作者认为使用批量归一化可以确保梯度具有健康的规范。在解释为什么深层网络无法比浅层网络表现更好的许多理论中,有时最好寻找实证结果进行解释并从那里向后推论。通过引入新的神经网络层——残差块,训练非常深的网络的问题得到了缓解。

The picture above is the most important thing to learn from this article. For developers looking to quickly implement this and test it out, the most important modification to understand is the ‘Skip Connection’, identity mapping. This identity mapping does not have any parameters and is just there to add the output from the previous layer to the layer ahead. However, sometimes x and F(x) will not have the same dimension. Recall that a convolution operation typically shrinks the spatial resolution of an image, e.g. a 3x3 convolution on a 32 x 32 image results in a 30 x 30 image. The identity mapping is multiplied by a linear projection W to expand the channels of shortcut to match the residual. This allows for the input x and F(x) to be combined as input to the next layer.

上图是这篇文章最值得学习的地方。对于希望快速实现并测试它的开发人员来说,需要理解的最重要的修改是“跳过连接”,即身份映射。该恒等映射没有任何参数,只是将前一层的输出添加到前面的层。然而,有时 x 和 F(x) 不会具有相同的维度。回想一下,卷积运算通常会缩小图像的空间分辨率,例如,32 x 32 图像上的 3x3 卷积会产生 30 x 30 图像。恒等映射乘以线性投影W以扩展捷径的通道以匹配残差。这允许将输入 x 和 F(x) 组合起来作为下一层的输入。

Equation used when F(x) and x have a different dimensionality such as 32x32 and 30x30. This Ws term can be implemented with 1x1 convolutions, this introduces additional parameters to the model.

当 F(x) 和 x 具有不同维度(例如 32x32 和 30x30)时使用的方程。该 Ws 项可以通过 1x1 卷积来实现,这为模型引入了额外的参数。

An implementation of the shortcut block with keras from https://github.com/raghakot/keras-resnet/blob/master/resnet.py. This shortcut connection is based on a more advanced description from the subsequent paper, “Identity Mappings in Deep Residual Networks” [3].

来自https://github.com/raghakot/keras-resnet/blob/master/resnet.py的 keras 快捷方式块的实现。这种快捷方式连接基于后续论文“深度残差网络中的身份映射”[3] 中更高级的描述。

Another great implementation of Residual Nets in keras can be found here →

可以在此处找到 keras 中残差网络的另一个出色实现→

The Skip Connections between layers add the outputs from previous layers to the outputs of stacked layers. This results in the ability to train much deeper networks than what was previously possible. The authors of the ResNet architecture test their network with 100 and 1,000 layers on the CIFAR-10 dataset. They test on the ImageNet dataset with 152 layers, which still has less parameters than the VGG network [4], another very popular Deep CNN architecture. An ensemble of deep residual networks achieved a 3.57% error rate on ImageNet which achieved 1st place in the ILSVRC 2015 classification competition.

层之间的跳过连接将前一层的输出添加到堆叠层的输出中。这使得能够训练比以前更深的网络。 ResNet 架构的作者在 CIFAR-10 数据集上使用 100 层和 1,000 层测试他们的网络。他们在 152 层的 ImageNet 数据集上进行了测试,该数据集的参数仍然比另一种非常流行的深度 CNN 架构 VGG 网络 [4] 少。深度残差网络集合在 ImageNet 上实现了 3.57% 的错误率,在 ILSVRC 2015 分类竞赛中获得第一名。

A similar approach to ResNets is known as “highway networks”. These networks also implement a skip connection, however, similar to an LSTM these skip connections are passed through parametric gates. These gates determine how much information passes through the skip connection. The authors note that when the gates approach being closed, the layers represent non-residual functions whereas the ResNet’s identity functions are never closed. Empirically, the authors note that the authors of the highway networks have not shown accuracy gains with networks as deep as they have shown with ResNets.

与 ResNet 类似的方法被称为“高速公路网络”。这些网络还实现了跳跃连接,但是,与 LSTM 类似,这些跳跃连接通过参数门传递。这些门决定有多少信息通过跳跃连接。作者指出,当门接近关闭时,各层代表非残差函数,而 ResNet 的恒等函数永远不会关闭。根据经验,作者指出,高速公路网络的作者并没有显示出像 ResNet 那样深度的网络的准确性增益。

The architecture they used to test the Skip Connections followed 2 heuristics inspired from the VGG network [4].

他们用于测试 Skip Connections 的架构遵循受 VGG 网络启发的 2 个启发式 [4]。

- If the output feature maps have the same resolution e.g. 32 x 32 → 32 x 32, then the filter map depth remains the same

如果输出特征图具有相同的分辨率,例如 32 x 32 → 32 x 32,则滤波器图深度保持不变 - If the output feature map size is halved e.g. 32 x 32 → 16 x 16, then the filter map depth is doubled.

如果输出特征图大小减半,例如 32 x 32 → 16 x 16,则滤波器图深度加倍。

Overall, the design of a 34-layer residual network is illustrated in the image below:

总的来说,34层残差网络的设计如下图所示:

在上图中,虚线跳跃连接表示将恒等映射乘以前面讨论的 Ws 线性投影项以对齐输入的维度。

上图所示架构的训练结果:直线表示训练误差,静态线表示测试误差。与左图的普通网络不同,34 层 ResNet 的错误率低于 30%。 34 层 ResNet 比 18 层 ResNet 性能高 2.8%。

表显示不同深度的测试误差和剩余连接的使用

In Conclusion, the Skip Connection is a very interesting extension to Deep Convolutional Networks that have empirically shown to increase performance in ImageNet classification. These layers can be used in other tasks requiring Deep networks as well such as Localization, Semantic Segmentation, Generative Adversarial Networks, Super-Resolution, and others. Residual Networks are different from LSTMs which gate previous information such that not all information passes through. Additionally, the Skip Connections shown in this article are essentially arranged in 2-layer blocks, they do not use the input from same layer 3 to layer 8. Residual Networks are more similar to Attention Mechanisms in that they model the internal state of the network opposed to the inputs. Hopefully this article was a useful introduction to ResNets, thanks for reading!

总之,Skip Connection 是深度卷积网络的一个非常有趣的扩展,经验表明它可以提高 ImageNet 分类的性能。这些层可用于其他需要深度网络的任务,例如本地化、语义分割、生成对抗网络、超分辨率等。残差网络与 LSTM 不同,后者对先前的信息进行门控,使得并非所有信息都通过。此外,本文中显示的 Skip Connections 本质上是排列在 2 层块中,它们不使用同一层 3 到层 8 的输入。残差网络与注意力机制更相似,因为它们对网络的内部状态进行建模反对输入。希望本文对 ResNets 有所帮助,感谢您的阅读!

References 参考

[1] Alex Krizhevsky, Ilya Sutskever, Geoffrey E. Hinton. ImageNet Classification with Deep Convolutional Neural Networks. 2012.

[1] 亚历克斯·克里热夫斯基、伊利亚·苏茨克韦尔、杰弗里·E·辛顿。使用深度卷积神经网络进行 ImageNet 分类。 2012年。

[2] Kaiming He, Xiangyu Zhang, Shaoqing Ren, Jian Sun. Deep Residual Learning for Image Recognition. 2015.

[3] Kaiming He, Xiangyu Zhang, Shaoqing Ren, Jian Sun. Identity Mappings in Deep Residual Networks. 2016.

[4] Karen Simonyan, Andrew Zisserman. Very Deep Convolutional Networks for Large-Scale Image Recognition. 2014.

[4] 凯伦·西蒙尼安,安德鲁·齐瑟曼。用于大规模图像识别的超深卷积网络。 2014年。