OpenAI lands Yao Class star Yao Shunyu: Tree of Thoughts author, Princeton PhD, and rapper

Author: ThePaper.cn

Annotated by howie.serious

Original | QbitAI, following frontier technology

Jin Lei and Xifeng, reporting from Aofeisi

QbitAI | WeChat official account: QbitAI

Yao Shunyu, a standout graduate of Tsinghua's Yao Class, has officially announced that he is joining OpenAI.

This brief announcement drew a crowd of onlookers and congratulations from across the field. Here is a taste of the reaction:

Among them were Mark Chen, OpenAI's head of frontier research and coach of the U.S. IOI team, along with professors and investors from the AI field.

So why has Yao Shunyu attracted so much attention?

His résumé boils down to a few keywords:

Tsinghua Yao Class

Chair of the Yao Class Joint Council

Co-founder of the Tsinghua University student rap club

Computer science PhD, Princeton

△ Yao Shunyu. Image source: personal homepage

Beyond the eye-catching résumé, what really brought Yao Shunyu into public view is his body of research:

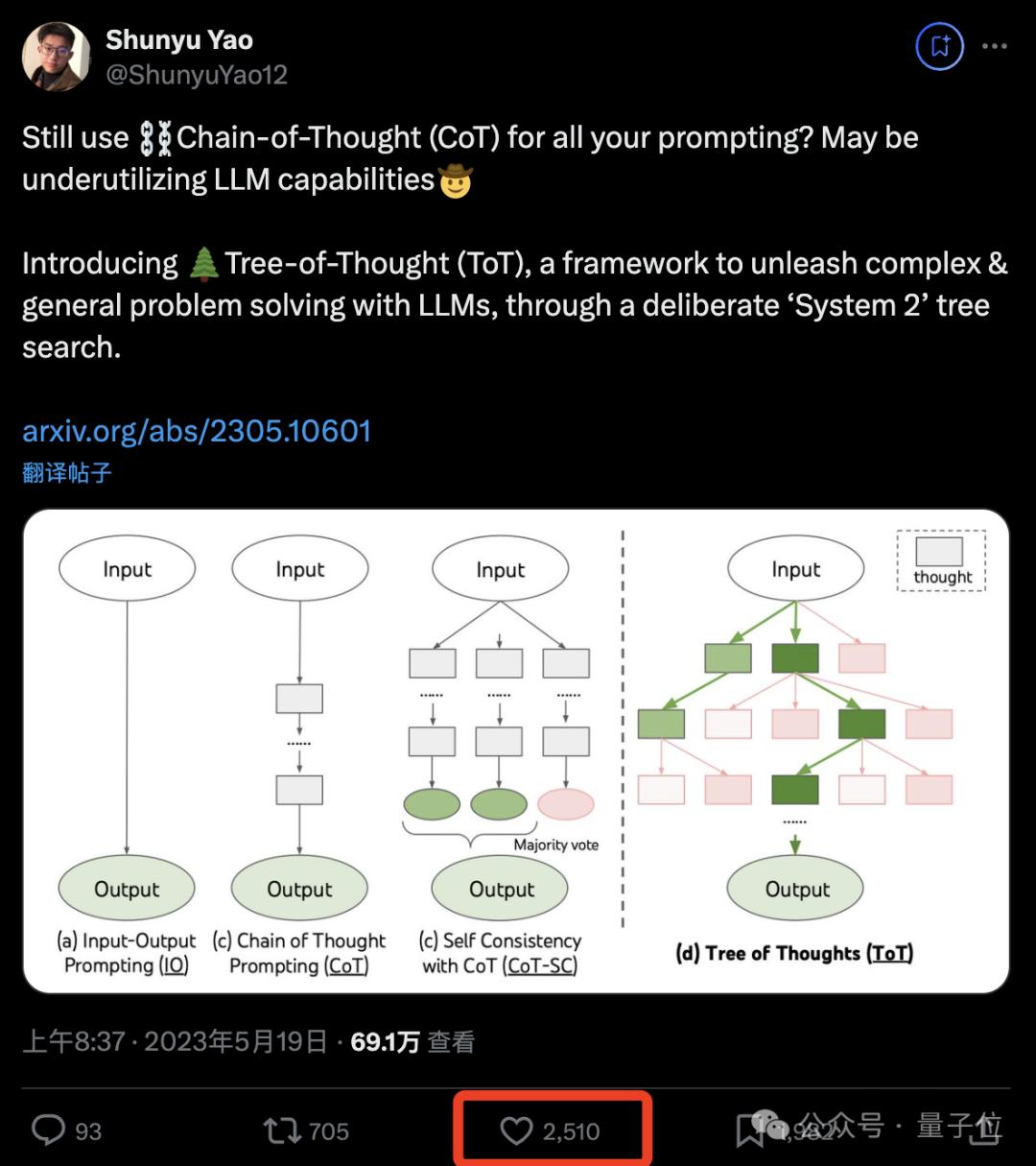

Tree of Thoughts: lets an LLM think a problem over repeatedly, substantially improving its reasoning ability.

SWE-bench: a benchmark for evaluating large models on real-world software engineering tasks.

SWE-agent: an open-source AI programmer.

It is no exaggeration to say that nearly every one of these projects made waves in the community, and all of them are clearly built around large models.

This may echo a line from Yao Shunyu's announcement:

It is time to turn research vision into reality.

So what is this "research vision"? Let's take a closer look.

Research keyword: Language Agents

Browse Yao Shunyu's homepage, especially the publications section, and one phrase keeps coming up: Language Agents.

Even the bio on his X profile opens with Language Agents:

It is also the title of his doctoral dissertation: Language Agents: From Next-Token Prediction to Digital Automation.

Language agents are a new category of agents proposed by Yao Shunyu.

Unlike traditional agents, they use language models for both reasoning and acting, with the goal of achieving digital automation.

The approach rests on three key techniques, each with its own paper:

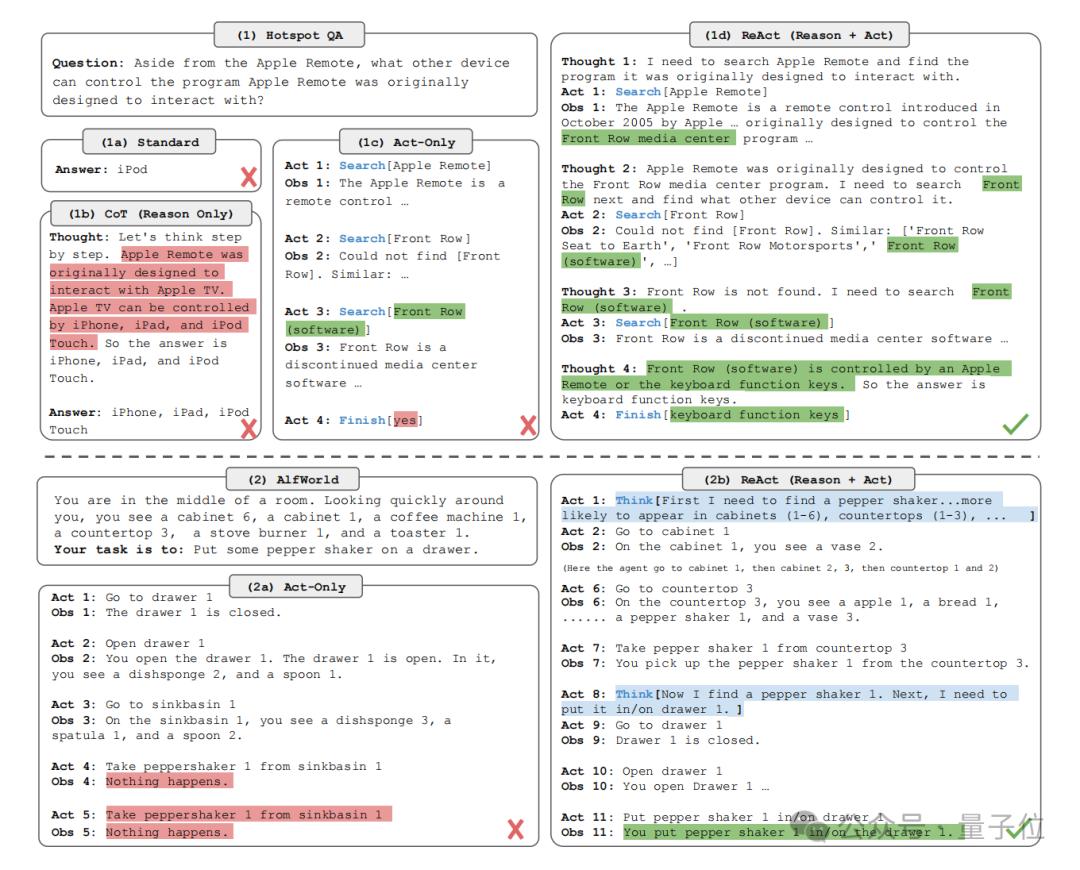

ReAct: a method that combines reasoning and acting, using a language model to generate both reasoning traces and actions in order to solve a variety of language reasoning and decision-making tasks.

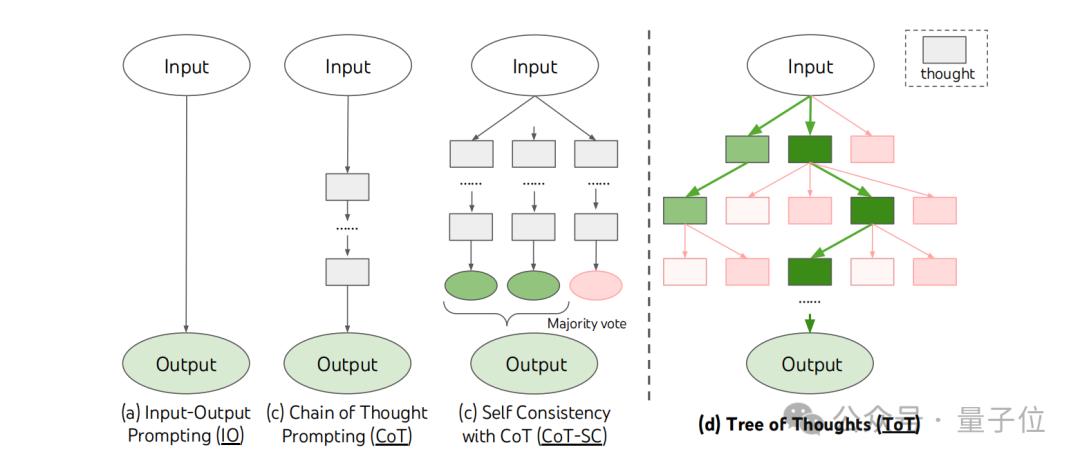

Tree of Thoughts: a tree-search-based method that tackles complex problems by generating and evaluating multiple reasoning paths, improving the reasoning ability of language models.

CoALA (Cognitive Architectures for Language Agents): a conceptual framework for organizing and designing language agents, covering memory, action space, and decision-making.

Take ReAct as an example. The work expands the language model's action space to the union of the set of environment actions and the space of language.

Actions in the language space (that is, thoughts or reasoning traces) do not affect the external environment. Instead, they update the context by reasoning over the current context, which supports future reasoning or acting.
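In the notation of the ReAct paper, as we understand it (the symbols come from that paper, not from this article), A is the set of environment actions, L the space of language, o_i an observation, and c_t the context the model conditions on at step t. A thought produces no observation; it is simply appended to the context:

```latex
\begin{aligned}
\hat{\mathcal{A}} &= \mathcal{A} \cup \mathcal{L} \\
c_t &= (o_1, a_1, \dots, o_{t-1}, a_{t-1}, o_t) \\
\hat{a}_t \in \mathcal{L} &\;\Rightarrow\; c_{t+1} = (c_t, \hat{a}_t)
\end{aligned}
```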

In the dialogue shown in the figure below, for example, ReAct guides the agent to loop through "generate a thought → take an action → observe the result."

By interleaving reasoning traces with actions, the model can reason dynamically, which improves both the agent's decisions and its final results.
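To make the loop concrete, here is a minimal Python sketch. It is not the ReAct authors' code: the language model is replaced by a hypothetical hard-coded `scripted_policy`, and `lookup` with its one-entry `KB` is a made-up tool standing in for something like a search API.

```python
from typing import Callable, Optional

# A step is ("think", text), ("act", tool_name, argument) or ("finish", answer).
Step = tuple


def react_loop(policy: Callable[[list[str]], Step],
               tools: dict[str, Callable[[str], str]],
               max_steps: int = 10) -> tuple[Optional[str], list[str]]:
    """Run a ReAct-style thought -> action -> observation loop.

    `policy` stands in for the language model: it reads the context so far
    and returns the next step. Returns (answer, trace); answer is None if the
    step budget runs out.
    """
    context: list[str] = []
    for _ in range(max_steps):
        step = policy(context)
        kind = step[0]
        if kind == "think":
            # A thought only extends the context; the environment is untouched.
            context.append(f"Thought: {step[1]}")
        elif kind == "act":
            tool, arg = step[1], step[2]
            context.append(f"Action: {tool}[{arg}]")
            # An external action queries the environment; its observation
            # is fed back into the context.
            context.append(f"Observation: {tools[tool](arg)}")
        elif kind == "finish":
            context.append(f"Finish: {step[1]}")
            return step[1], context
        else:
            raise ValueError(f"unknown step kind: {kind!r}")
    return None, context


# Hypothetical toy environment: a one-entry knowledge base behind a lookup tool.
KB = {"Tsinghua University": "Tsinghua University is located in Beijing."}


def lookup(term: str) -> str:
    return KB.get(term, "No result.")


def scripted_policy(context: list[str]) -> Step:
    """A hard-coded stand-in for an LLM, just to drive the loop."""
    if not context:
        return ("think", "I need to find out where Tsinghua University is.")
    if context[-1].startswith("Thought:"):
        return ("act", "lookup", "Tsinghua University")
    return ("finish", "Beijing" if "Beijing" in context[-1] else "unknown")


answer, trace = react_loop(scripted_policy, {"lookup": lookup})
```

Here `trace` holds the interleaved Thought, Action, Observation, and Finish lines, and `answer` is `"Beijing"`. Replacing `scripted_policy` with a real model call is the part this sketch deliberately leaves out.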

If ReAct can be summed up as letting the agent "reason to act," the next method, Tree of Thoughts, focuses on letting the agent "reason to plan."

Tree of Thoughts frames problem solving as a search over a tree: each node is a state representing a partial solution, and each branch corresponds to an operation that modifies that state.

It involves four main components:

Thought decomposition: break a complex problem into a series of intermediate steps, each of which can be viewed as a node in the tree.

Thought generation: use a language model to generate candidate thoughts at each node; these thoughts are intermediate steps or strategies toward a solution.

State evaluation: use a language model to evaluate the state at each node, judging its progress and promise toward solving the problem.

Search algorithm: explore the tree with a search algorithm such as breadth-first search (BFS) or depth-first search (DFS) to find the best solution.
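The four components map naturally onto a generic search routine. The Python sketch below is our own illustration, not the paper's code: it follows the BFS variant that keeps only the most promising states at each level, `generate` and `evaluate` are ordinary functions standing in for language-model calls, and the toy problem (reach 10 from 1 using "+3" and "*2" steps) is hypothetical.

```python
import heapq
from typing import Callable, Iterable, Optional, TypeVar

S = TypeVar("S")


def tot_bfs(root: S,
            generate: Callable[[S], Iterable[S]],
            evaluate: Callable[[S], float],
            is_goal: Callable[[S], bool],
            beam_width: int,
            max_depth: int) -> Optional[list[S]]:
    """Breadth-first tree search keeping the `beam_width` best states per level.

    generate -> thought generation (proposes child states)
    evaluate -> state evaluation (higher means more promising)
    Returns the path from root to the first goal state found, or None.
    """
    if is_goal(root):
        return [root]
    frontier: list[list[S]] = [[root]]  # each entry is a root-to-node path
    for _ in range(max_depth):
        candidates: list[list[S]] = []
        for path in frontier:
            for child in generate(path[-1]):
                new_path = path + [child]
                if is_goal(child):
                    return new_path
                candidates.append(new_path)
        if not candidates:
            return None
        # Prune: keep only the most promising partial solutions.
        frontier = heapq.nlargest(beam_width, candidates,
                                  key=lambda p: evaluate(p[-1]))
    return None


# Hypothetical toy problem: reach 10 from 1 using "+3" or "*2" steps.
path = tot_bfs(root=1,
               generate=lambda x: [x + 3, x * 2],
               evaluate=lambda x: -abs(10 - x),  # closer to 10 scores higher
               is_goal=lambda x: x == 10,
               beam_width=2,
               max_depth=4)
print(path)  # [1, 4, 7, 10]
```

With `beam_width=1` the same call becomes greedy: it follows 1, 4, 8, 11 and returns `None` within four levels, which shows why keeping several candidate thoughts (or backtracking, in the DFS variant) matters.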

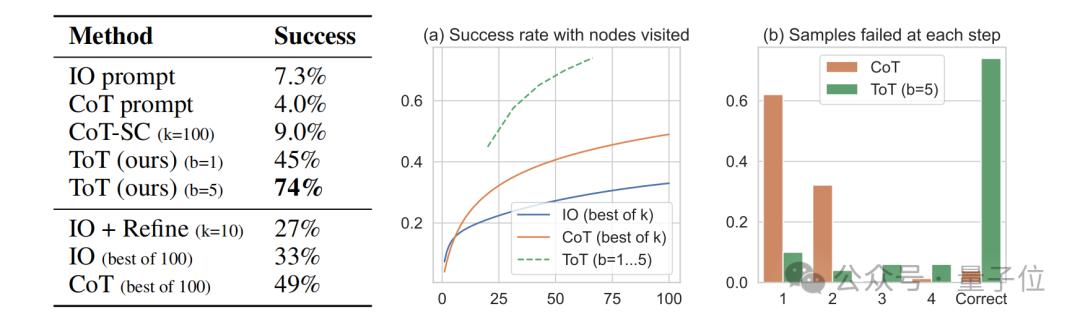

Applied to the Game of 24, Tree of Thoughts markedly improves accuracy over earlier chain-of-thought (CoT) prompting: in the paper's GPT-4 experiments, the success rate rose from 4% with CoT to 74% with Tree of Thoughts.
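A small Python sketch shows the shape of the search on this task. It is a stand-in under our own assumptions, not the paper's implementation: where Tree of Thoughts asks GPT-4 to propose the next step and to judge whether the remaining numbers can still reach 24, this version simply enumerates every step with a depth-first search and checks the leaves exactly using `Fraction` arithmetic. The function name `solve24` is hypothetical.

```python
from fractions import Fraction
from itertools import combinations
from typing import Optional


def solve24(numbers: list[int], target: int = 24) -> Optional[str]:
    """Depth-first search over 'thoughts' for the Game of 24.

    Each thought picks two remaining numbers and combines them with + - * /,
    leaving one fewer number; a leaf is a solution when the single remaining
    number equals the target. Returns an expression string or None.
    """
    # Each item pairs an exact value with the expression that produced it.
    start = [(Fraction(n), str(n)) for n in numbers]

    def dfs(items):
        if len(items) == 1:
            return items[0][1] if items[0][0] == target else None
        for i, j in combinations(range(len(items)), 2):
            (a, ea), (b, eb) = items[i], items[j]
            rest = [items[k] for k in range(len(items)) if k not in (i, j)]
            # Candidate thoughts for this pair of numbers.
            candidates = [(a + b, f"({ea}+{eb})"), (a * b, f"({ea}*{eb})"),
                          (a - b, f"({ea}-{eb})"), (b - a, f"({eb}-{ea})")]
            if b != 0:
                candidates.append((a / b, f"({ea}/{eb})"))
            if a != 0:
                candidates.append((b / a, f"({eb}/{ea})"))
            for value, expr in candidates:
                found = dfs(rest + [(value, expr)])
                if found is not None:  # success: stop searching
                    return found
        return None  # dead end: backtrack

    return dfs(start)
```

`solve24([4, 9, 10, 13])` returns an expression string that evaluates to 24, while `solve24([1, 1, 1, 1])` returns `None`. Exhaustive enumeration is affordable here because four numbers give a small tree; the point of Tree of Thoughts is to let a language model prune and evaluate when the tree is too large to enumerate.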

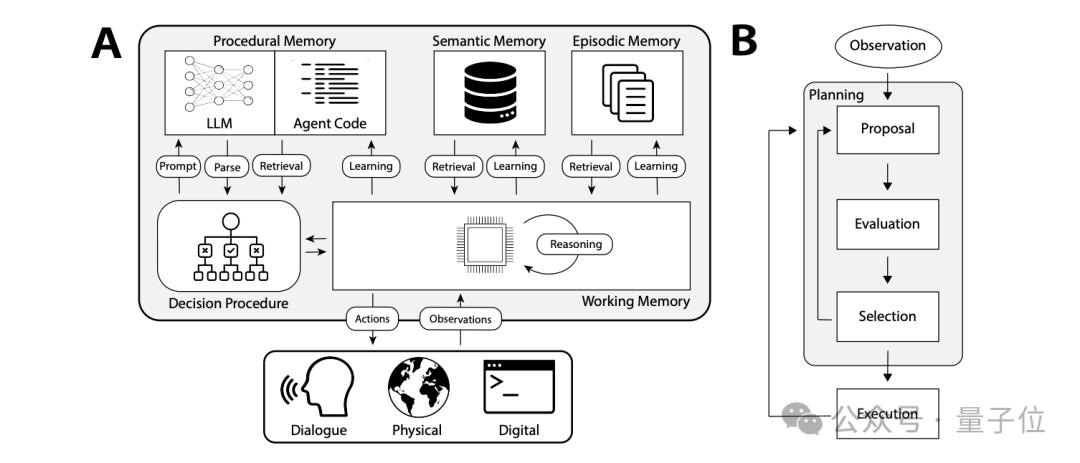

The last key technique behind language agents, CoALA, is a conceptual framework for organizing and designing language agents.

As the architecture diagram below shows, it breaks down into three main modules: information storage, action space, and decision-making.

For information storage, a language agent keeps information in several memory modules, including short-term working memory and long-term memory (semantic, episodic, and procedural).

These modules hold different kinds of information, such as perceptual inputs, knowledge, and experience, and feed into the agent's decision-making.

For the action space, CoALA divides the agent's actions into external and internal actions. External actions interact with the outside environment, such as controlling a robot, communicating with humans, or operating in a digital environment.

Internal actions interact with the agent's own state and memory, and include reasoning, retrieval, and learning.

Finally, through decision-making, the agent selects which action to execute, weighing various factors and feedback to find the best option.
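CoALA is a conceptual framework rather than a library, so the following Python sketch is only one hypothetical reading of its three modules. The class and method names (`Memory`, `Agent`, `retrieve`, `reason`, `learn`, `step`) are our own, the scoring lambdas stand in for what would usually be language-model calls, and the weather scenario is invented for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Memory:
    """Memory modules in the spirit of CoALA (field names are our own)."""
    working: dict = field(default_factory=dict)     # short-term, current cycle only
    episodic: list = field(default_factory=list)    # record of past experiences
    semantic: dict = field(default_factory=dict)    # knowledge about the world
    procedural: dict = field(default_factory=dict)  # skills: name -> scoring function


class Agent:
    def __init__(self, memory: Memory, environment: Callable[[str], str]):
        self.memory = memory
        self.environment = environment  # every external action goes through here

    # Internal actions: they read or write only the agent's own memory.
    def retrieve(self, key: str) -> None:
        self.memory.working[key] = self.memory.semantic.get(key)

    def reason(self) -> list[tuple[str, float]]:
        # Propose candidate external actions with scores; a real language agent
        # would use an LLM here, in this sketch each procedural skill scores itself.
        return [(name, score(self.memory.working))
                for name, score in self.memory.procedural.items()]

    def learn(self, event: str) -> None:
        self.memory.episodic.append(event)

    # Decision-making: observe -> retrieve -> propose -> select -> act -> learn.
    def step(self, observation: str) -> str:
        self.memory.working.clear()
        self.memory.working["observation"] = observation
        self.retrieve(observation)
        action = max(self.reason(), key=lambda p: p[1])[0]
        result = self.environment(action)  # external action
        self.learn(f"{observation} -> {action} -> {result}")
        return action


# Hypothetical demo: a weather request needs an external tool, small talk does not.
memory = Memory(
    semantic={"user asks for weather": "needs external lookup"},
    procedural={
        "call_weather_api": lambda wm: 1.0 if "needs external lookup" in wm.values() else 0.0,
        "reply_directly": lambda wm: 0.5,
    },
)
agent = Agent(memory, environment=lambda action: f"executed {action}")
first = agent.step("user asks for weather")  # "call_weather_api"
second = agent.step("hello")                 # "reply_directly"
```

Keeping internal actions (`retrieve`, `reason`, `learn`) separate from the single `environment` entry point mirrors CoALA's split between actions that touch only memory and actions that change the outside world.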

Other work, such as the open-source AI programmer SWE-agent, has also circulated widely in the community.

Across Yao Shunyu's many research topics, one more keyword stands out besides Language Agents: computational thinking.

He had already hinted at this as an undergraduate.

Shortly before leaving for Princeton to pursue a PhD in computer science, Yao, an upperclassman from the class of 2015, spoke at the opening ceremony of Tsinghua's 2019 independent admissions interviews, sharing his own experience of learning and growing at Tsinghua with the candidates.

His remarks were recorded in an article he wrote himself, titled "What did you learn in Tsinghua's Yao Class? Yao Shunyu: Enough to change the world."

In that talk he discussed computational thinking from both theoretical and practical angles, saying it was the single biggest thing he gained in four years:

From theory, we can now see that many things are impossible. When people say theory guides practice, I think it is more about understanding, from a high vantage point, a system's capability limits and how hard things are, and then choosing things that are feasible and meaningful to do.

Living up to his "sunny, cheerful guy" image, Yao also described a trip to Argentina through Tsinghua's Southern Immersion Program:

I met a group of Argentine kids... English isn't spoken everywhere; Argentinians speak Spanish. I once tried to learn Spanish, but I gave up. Because I study computer science, I pulled out Google Translate. I told them about the Forbidden City and the Great Wall in Beijing...

△ Source: Tsinghua Admissions WeChat official account

In his view, computing can be combined with any discipline in this era; the world is big, and at Tsinghua you can do what you want to do.

Having covered Yao Shunyu, who else from the Yao Class is working on large models?

Who else from the Yao Class is working on the red-hot large models?

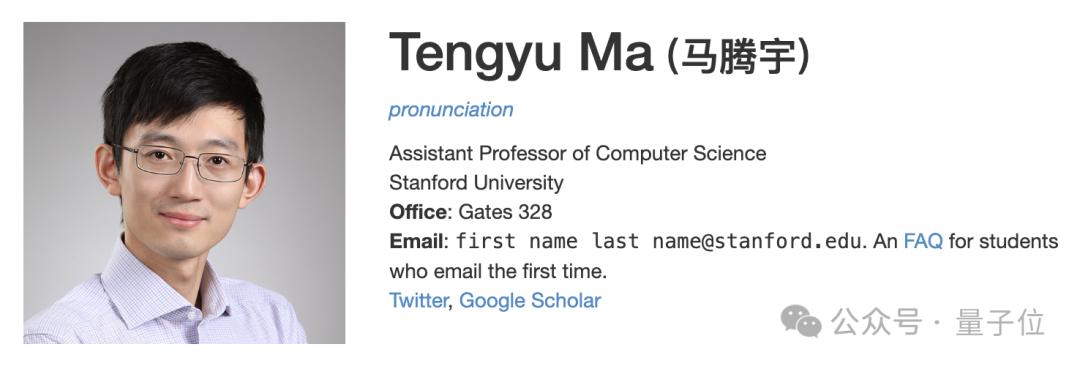

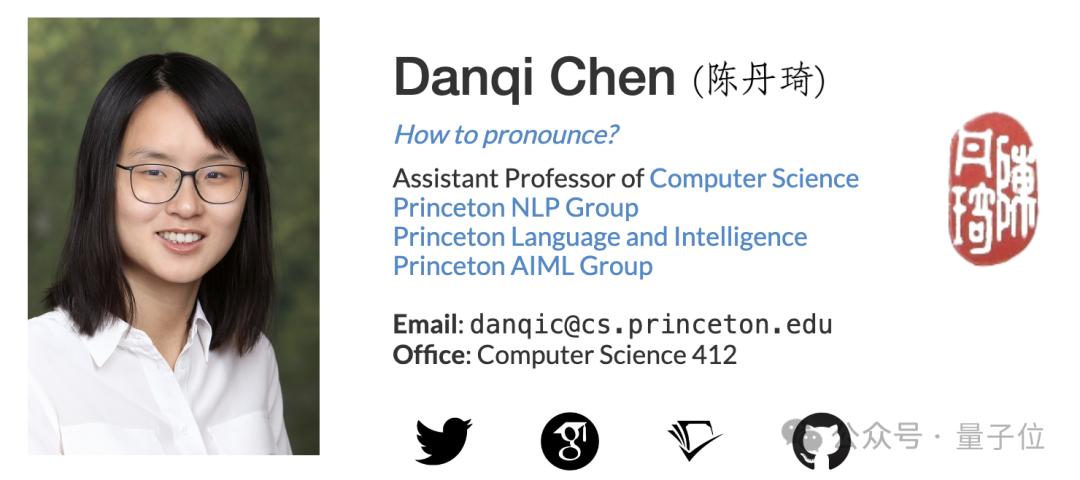

Ma Tengyu and Chen Danqi have to come first.

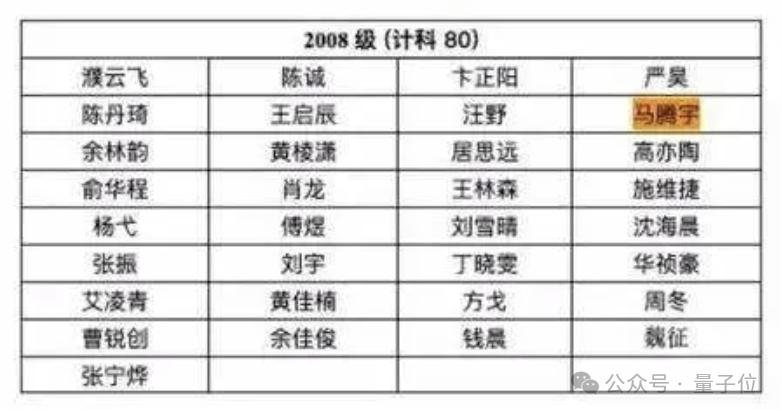

The two were classmates in Tsinghua's Yao Class of 2008, and both later received Sloan Research Fellowships, often billed as a "Nobel Prize bellwether."

Ma Tengyu earned his PhD at Princeton under Sanjeev Arora, a theoretical computer scientist and two-time Gödel Prize winner.

After his PhD, MIT, Harvard, Stanford, and other top universities offered him assistant professorships; he ultimately chose Stanford.

At the end of last year, Ma also officially announced a large-model startup, Voyage AI. He said the team would build the best embedding models available and also offer customized models for specific domains or enterprises.

Three professors, including Christopher Manning, director of the Stanford Artificial Intelligence Laboratory, and the prominent AI scholar Fei-Fei Li, serve as Voyage AI's academic advisors.

Chen Danqi, after finishing her undergraduate studies in the Yao Class, earned her PhD at Stanford in 2018, focusing on NLP. She is now an assistant professor of computer science at Princeton and associate director of Princeton Language and Intelligence (PLI), and she co-leads the Princeton NLP group.

Her homepage says she is "largely drawn to developing large models these days," with current research topics including:

How retrieval can play an important role in next-generation models, improving factuality, adaptability, interpretability, and trustworthiness.

Low-cost training and deployment of large models, through better training methods, data curation, model compression, and adaptation to downstream tasks.

She is also interested in work that genuinely improves our understanding of the capabilities and limitations of current large models, both empirically and theoretically.

QbitAI has been following her team's large-model work closely.

One example is LESS, a data selection algorithm for cutting the cost of training large models: it picks only the 5% of data most relevant to the target task for instruction tuning, and the result outperforms tuning on the full dataset.

Instruction tuning is the key step that turns a base model into a ChatGPT-style assistant.
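Once each example has been turned into a feature vector, the selection step itself is simple. The Python sketch below assumes those vectors are already computed (in LESS they are low-dimensional random projections of LoRA gradients, which this sketch does not attempt), and its scoring rule, the best per-task average cosine similarity, is our simplified reading of the paper rather than its exact procedure. The function names and data are hypothetical.

```python
import math


def cosine(u: list[float], v: list[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def select_top_fraction(train_feats: list[list[float]],
                        target_tasks: list[list[list[float]]],
                        fraction: float = 0.05) -> list[int]:
    """Return indices of the training examples most relevant to the target tasks.

    train_feats:  one feature vector per candidate training example
                  (assumed precomputed; computing them is out of scope here).
    target_tasks: each task is a list of feature vectors from its few examples.
    Score = max over tasks of the mean cosine similarity to that task's vectors.
    """
    scored = []
    for i, x in enumerate(train_feats):
        per_task = [sum(cosine(x, t) for t in task) / len(task) for task in target_tasks]
        scored.append((max(per_task), i))
    k = max(1, round(len(train_feats) * fraction))
    top = sorted(scored, reverse=True)[:k]
    return sorted(i for _, i in top)


# Hypothetical data: 2 of 40 examples point roughly the same way as the target task.
train = [[1.0, 0.0], [0.9, 0.1]] + [[0.0, 1.0]] * 38
target = [[[1.0, 0.2]]]
print(select_top_fraction(train, target))  # 5% of 40 = 2 examples -> [0, 1]
```

The instruction-tuning run would then use only the selected examples; the expensive part in practice is producing good feature vectors, not the ranking shown here.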

Another is the viral "llama shearing" technique, the LLM-Shearing pruning method (Sheared-LLaMA), which reaches SOTA with only 3% of the compute and 5% of the cost and came to dominate open-source models in the 1B to 3B range.
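"Structured" pruning removes whole components (layers, attention heads, hidden dimensions) so the model actually becomes smaller, and LLM-Shearing prunes toward a pre-specified target architecture. The Python sketch below shows only the structured part on a toy two-layer MLP, and it deliberately swaps in a simple magnitude heuristic: Sheared-LLaMA instead learns its pruning masks during training and then continues pre-training, which this sketch does not attempt. The function name, importance score, and weights are our own.

```python
import math


def prune_mlp_neurons(w_in: list[list[float]],
                      w_out: list[list[float]],
                      target_width: int):
    """Structurally prune a two-layer MLP down to `target_width` hidden neurons.

    w_in:  hidden x d_in  (row h computes hidden neuron h)
    w_out: d_out x hidden (column h reads hidden neuron h)
    Importance of neuron h = ||row h of w_in|| * ||column h of w_out||, a simple
    magnitude heuristic standing in for the learned masks of Sheared-LLaMA.
    Returns the smaller matrices plus the indices of the neurons kept.
    """
    def importance(h: int) -> float:
        row_norm = math.sqrt(sum(x * x for x in w_in[h]))
        col_norm = math.sqrt(sum(row[h] ** 2 for row in w_out))
        return row_norm * col_norm

    ranked = sorted(range(len(w_in)), key=importance, reverse=True)
    keep = sorted(ranked[:target_width])                 # preserve original order
    new_in = [list(w_in[h]) for h in keep]               # drop whole rows
    new_out = [[row[h] for h in keep] for row in w_out]  # drop matching columns
    return new_in, new_out, keep


# Hypothetical toy weights: 4 hidden neurons, two of them nearly unused.
w_in = [[1.0, 0.0], [0.1, 0.0], [0.0, 2.0], [0.0, 0.01]]
w_out = [[1.0, 1.0, 1.0, 1.0]]
new_in, new_out, keep = prune_mlp_neurons(w_in, w_out, target_width=2)
print(keep)  # [0, 2]
```

Because whole neurons are removed, the matrices genuinely shrink (four hidden units become two) instead of merely becoming sparse, which is why structured pruning translates into smaller, cheaper models.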

Beyond these two, many more Yao Class alumni in industry and academia are working on large models.

The viral LLM-native game "Oh No! I'm Surrounded by Large Models" and its sequel "I Broke the Large Model" were developed by a team led by a Yao Class star.

The game's creator, Fan Haoqiang, was Megvii's sixth employee. He was hailed as a prodigy for winning an IOI gold medal, being admitted to the Yao Class without taking the college entrance exam, and interning while still a high school sophomore. Today he is General Manager of Research at Megvii Technology and a heavyweight in the field with a Google Scholar h-index of 32.

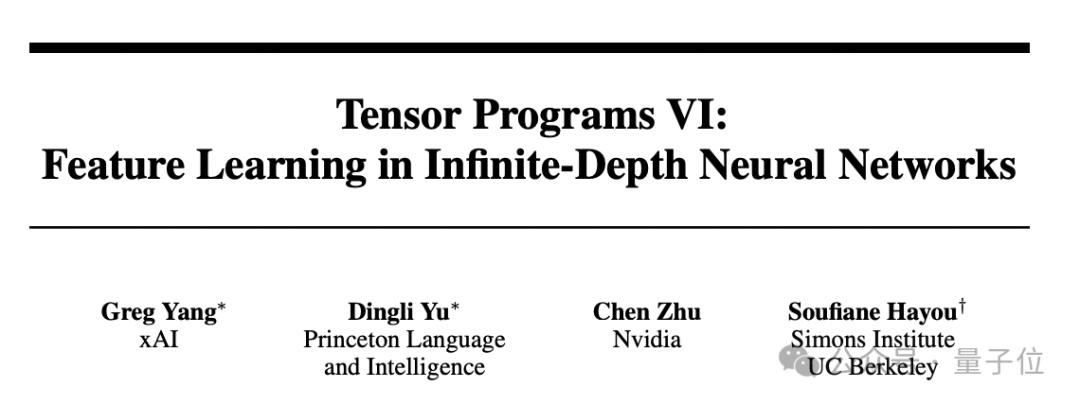

Tensor Programs VI, the first research result from Musk's xAI, also lists a Yao Class alumnus among its co-first authors.

Tensor Programs VI continues the Tensor Programs series by Greg Yang, an xAI founding member and protégé of Shing-Tung Yau. The paper focuses on how to train infinitely deep networks.

Tensor Programs results have reportedly already been applied in GPT-4. Greg Yang even held a livestream on X at the time to walk through the paper.

Co-first author Dingli Yu graduated from Tsinghua's Yao Class and is about to complete his PhD in computer science at Princeton.

And many, many more...

Back to Yao Shunyu's move to OpenAI: OpenAI is still hiring.

OpenAI engineer Karina Nguyen posted a new job listing:

The OpenAI Model Behavior team is hiring! This is a dream role that blends design engineering and post-training research, and one of the rarest jobs in the world ❤️

We use alignment methods such as RLHF/RLAIF to define the core behavior of our models, reflecting fundamental values and enhancing AGI's creative intelligence. Building on this work, we partner with product and model design and engineering teams to pioneer new AI interfaces and interaction patterns that will affect millions of users...

Interestingly, Karina Nguyen was previously a researcher at Anthropic, on the Claude team. Last May she even held a prompt duel on X (formerly Twitter) with OpenAI's Jason Wei, first author of the seminal chain-of-thought paper.

Few expected her to jump to OpenAI so soon...

By the way, news broke just yesterday that Google DeepMind researcher Thibault Sottiaux has also been poached by OpenAI.

Notably, Thibault Sottiaux was a core contributor to the papers for the original Gemini and Gemini 1.5.

All of this shows how hot the large-model race is right now: companies are scrambling both to stake out territory and to hire talent.

One More Thing

Yao Shunyu was not the only "Yao Shunyu" to graduate from Tsinghua that year: there were two more!

When the three Yao Shunyus graduated in 2019, Tsinghua University's official Weibo account posted about them, along with a group photo of the three.

Besides the Yao Shunyu (姚顺雨) now at OpenAI, a second Yao Shunyu with exactly the same name was a female student majoring in Japanese in the School of Humanities.

The third was Yao Shunyu (姚顺宇, written with a different final character) from the Department of Physics. He won the 2018 Undergraduate Special Award and, while still an undergraduate, published two first-author papers in the top physics journal PRL (Physical Review Letters) and one in PRB (Physical Review B).

References:

[1] https://x.com/ShunyuYao12/status/1818807946756997624

[2] https://ysymyth.github.io

[3] https://x.com/karinanguyen_/status/1819082842238079371

[4] https://weibo.com/1676317545/HCR7yuXAl?refer_flag=1001030103_

(The End)

Disclaimer

This article was uploaded and published on The Paper (澎湃新闻) by the author or organization behind a Pengpai account. It represents only the views of that author or organization, not the views or positions of The Paper, which serves solely as an information publishing platform. To apply for a Pengpai account, please visit http://renzheng.thepaper.cn on a computer.